Getting Started with Storm (4): WordCount Example

1. Code listing

The project is built with Maven. The code is as follows.

pom.xml is the same as in Getting Started with Storm (3): HelloWorld Example.

RandomSentenceSpout.java

/**
 * Licensed to the Apache Software Foundation (ASF) under one
 * or more contributor license agreements. See the NOTICE file
 * distributed with this work for additional information
 * regarding copyright ownership. The ASF licenses this file
 * to you under the Apache License, Version 2.0 (the
 * "License"); you may not use this file except in compliance
 * with the License. You may obtain a copy of the License at
 *
 * http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */

package cn.ljh.storm.wordcount;

import org.apache.storm.spout.SpoutOutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.base.BaseRichSpout;
import org.apache.storm.tuple.Fields;
import org.apache.storm.tuple.Values;
import org.apache.storm.utils.Utils;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Map;
import java.util.Random;

public class RandomSentenceSpout extends BaseRichSpout {

    private static final Logger LOG = LoggerFactory.getLogger(RandomSentenceSpout.class);

    SpoutOutputCollector _collector;
    Random _rand;

    // Called once when the spout task starts; keep the collector for later emits.
    public void open(Map conf, TopologyContext context, SpoutOutputCollector collector) {
        _collector = collector;
        _rand = new Random();
    }

    // Called repeatedly by Storm; emits one randomly chosen sentence roughly every 100 ms.
    public void nextTuple() {
        Utils.sleep(100);
        String[] sentences = new String[]{
            sentence("the cow jumped over the moon"),
            sentence("an apple a day keeps the doctor away"),
            sentence("four score and seven years ago"),
            sentence("snow white and the seven dwarfs"),
            sentence("i am at two with nature")};
        final String sentence = sentences[_rand.nextInt(sentences.length)];

        LOG.debug("Emitting tuple: {}", sentence);

        _collector.emit(new Values(sentence));
    }

    // Hook that subclasses can override to decorate each sentence.
    protected String sentence(String input) {
        return input;
    }

    // No-op: tuples are emitted without a message ID, so Storm does not track them.
    @Override
    public void ack(Object id) {
    }

    @Override
    public void fail(Object id) {
    }

    // The single output field holds a whole sentence, even though it is named "word".
    public void declareOutputFields(OutputFieldsDeclarer declarer) {
        declarer.declare(new Fields("word"));
    }

    // Add unique identifier to each tuple, which is helpful for debugging
    public static class TimeStamped extends RandomSentenceSpout {

        private final String prefix;

        public TimeStamped() {
            this("");
        }

        public TimeStamped(String prefix) {
            this.prefix = prefix;
        }

        protected String sentence(String input) {
            return prefix + currentDate() + " " + input;
        }

        private String currentDate() {
            return new SimpleDateFormat("yyyy.MM.dd_HH:mm:ss.SSSSSSSSS").format(new Date());
        }
    }
}
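A side note on the `TimeStamped` variant: in `SimpleDateFormat`, the letter `S` stands for milliseconds, so the nine `S` characters in the pattern zero-pad the millisecond value to nine digits rather than adding nanosecond precision. The standalone sketch below illustrates this without any Storm dependency. The class `TimestampPrefixDemo` and its helpers `format` and `stamp` are hypothetical names introduced only for this illustration, and the UTC time zone and `Locale.ROOT` are assumptions made so the result is reproducible.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;
import java.util.TimeZone;

public class TimestampPrefixDemo {

    // Same pattern as TimeStamped.currentDate() in the listing.
    static final String PATTERN = "yyyy.MM.dd_HH:mm:ss.SSSSSSSSS";

    // Formats the given instant in UTC so the result does not depend on the local time zone.
    // (Assumption for this sketch; the spout itself uses the JVM default time zone.)
    static String format(Date date) {
        SimpleDateFormat fmt = new SimpleDateFormat(PATTERN, Locale.ROOT);
        fmt.setTimeZone(TimeZone.getTimeZone("UTC"));
        return fmt.format(date);
    }

    // Mirrors TimeStamped.sentence(): prefix + timestamp + space + input.
    static String stamp(String prefix, Date date, String input) {
        return prefix + format(date) + " " + input;
    }

    public static void main(String[] args) {
        // 123 ms after the epoch: the fraction is the millisecond value padded to nine digits.
        System.out.println(stamp("", new Date(123L), "the cow jumped over the moon"));
    }
}
```

Since every sentence carries its emit time, two emits of the same sentence can be told apart in the debug log as long as they fall in different milliseconds.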

WordCountTopology.java

/**
 * Licensed to the Apache Software Foundation (ASF) under one
 * or more contributor license agreements. See the NOTICE file
 * distributed with this work for additional information
 * regarding copyright ownership. The ASF licenses this file
 * to you under the Apache License, Version 2.0 (the
 * "License"); you may not use this file except in compliance
 * with the License. You may obtain a copy of the License at
 *
 * http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */

package cn.ljh.storm.wordcount;

import org.apache.storm.Config;
import org.apache.storm.LocalCluster;
import org.apache.storm.StormSubmitter;
import org.apache.storm.task.OutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.BasicOutputCollector;
import org.apache.storm.topology.IRichBolt;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.TopologyBuilder;
import org.apache.storm.topology.base.BaseBasicBolt;
import org.apache.storm.tuple.Fields;
import org.apache.storm.tuple.Tuple;
import org.apache.storm.tuple.Values;

import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class WordCountTopology {

    // Splits each incoming sentence into words and emits one tuple per word.
    public static class SplitSentence implements IRichBolt {

        private OutputCollector _collector;

        public void declareOutputFields(OutputFieldsDeclarer declarer) {
            declarer.declare(new Fields("word"));
        }

        public Map<String, Object> getComponentConfiguration() {
            return null;
        }

        public void prepare(Map stormConf, TopologyContext context,
                OutputCollector collector) {
            _collector = collector;
        }

        public void execute(Tuple input) {
            // The spout declares its sentence field under the name "word".
            String sentence = input.getStringByField("word");
            String[] words = sentence.split(" ");
            for (String word : words) {
                this._collector.emit(new Values(word));
            }
        }

        public void cleanup() {
            // TODO Auto-generated method stub
        }
    }

    // Keeps a running count per word and emits (word, count) after every update.
    public static class WordCount extends BaseBasicBolt {

        Map<String, Integer> counts = new HashMap<String, Integer>();

        public void execute(Tuple tuple, BasicOutputCollector collector) {
            String word = tuple.getString(0);
            Integer count = counts.get(word);
            if (count == null)
                count = 0;
            count++;
            counts.put(word, count);
            collector.emit(new Values(word, count));
        }

        public void declareOutputFields(OutputFieldsDeclarer declarer) {
            declarer.declare(new Fields("word", "count"));
        }
    }

    // Remembers the latest count for each word and prints all of them on shutdown.
    public static class WordReport extends BaseBasicBolt {

        Map<String, Integer> counts = new HashMap<String, Integer>();

        public void execute(Tuple tuple, BasicOutputCollector collector) {
            String word = tuple.getStringByField("word");
            Integer count = tuple.getIntegerByField("count");
            this.counts.put(word, count);
        }

        // Terminal bolt: it emits nothing, so it declares no output fields.
        public void declareOutputFields(OutputFieldsDeclarer declarer) {
        }

        @Override
        public void cleanup() {
            System.out.println("-----------------FINAL COUNTS START-----------------------");
            List<String> keys = new ArrayList<String>();
            keys.addAll(this.counts.keySet());
            Collections.sort(keys);

            for (String key : keys) {
                System.out.println(key + " : " + this.counts.get(key));
            }

            System.out.println("-----------------FINAL COUNTS END-----------------------");
        }
    }

    public static void main(String[] args) throws Exception {

        TopologyBuilder builder = new TopologyBuilder();

        builder.setSpout("spout", new RandomSentenceSpout(), 5);

        // ShuffleGrouping: each tuple is sent to a randomly chosen task.
        builder.setBolt("split", new SplitSentence(), 8).shuffleGrouping("spout");
        // FieldsGrouping: tuples are hashed on the given fields, and tuples with the same hash value are sent to the same task.
        builder.setBolt("count", new WordCount(), 12).fieldsGrouping("split", new Fields("word"));
        // GlobalGrouping: all tuples are sent to the task with the lowest ID in the target bolt.
        builder.setBolt("report", new WordReport(), 6).globalGrouping("count");

        Config conf = new Config();
        conf.setDebug(true);

        if (args != null && args.length > 0) {
            // A topology name was given: submit to a real cluster.
            conf.setNumWorkers(3);

            StormSubmitter.submitTopologyWithProgressBar(args[0], conf, builder.createTopology());
        }
        else {
            // No arguments: run in an in-process local cluster for 20 seconds, then shut down.
            conf.setMaxTaskParallelism(3);

            LocalCluster cluster = new LocalCluster();
            cluster.submitTopology("word-count", conf, builder.createTopology());

            Thread.sleep(20000);

            cluster.shutdown();
        }
    }
}
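Fields grouping is what makes the per-task `counts` map in `WordCount` correct: every occurrence of a given word reaches the same task, so no word's count is split across tasks. The Storm-free sketch below illustrates the idea. The class `FieldsGroupingSketch` and its methods are hypothetical, and `Math.floorMod(word.hashCode(), numTasks)` is only a stand-in assumption for the routing decision, not Storm's actual implementation.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class FieldsGroupingSketch {

    // Hypothetical stand-in for Storm's routing: derive a task index from the word's hash.
    static int taskFor(String word, int numTasks) {
        return Math.floorMod(word.hashCode(), numTasks);
    }

    // Route every word to a task; each task keeps its own private count map,
    // just as each WordCount task holds its own HashMap in the topology.
    static List<Map<String, Integer>> countPerTask(String[] words, int numTasks) {
        List<Map<String, Integer>> tasks = new ArrayList<>();
        for (int i = 0; i < numTasks; i++) {
            tasks.add(new HashMap<>());
        }
        for (String word : words) {
            tasks.get(taskFor(word, numTasks)).merge(word, 1, Integer::sum);
        }
        return tasks;
    }

    public static void main(String[] args) {
        String[] words = "the cow jumped over the moon".split(" ");
        List<Map<String, Integer>> tasks = countPerTask(words, 12);
        // Print only the tasks that received at least one word.
        for (int i = 0; i < tasks.size(); i++) {
            if (!tasks.get(i).isEmpty()) {
                System.out.println("task " + i + " -> " + tasks.get(i));
            }
        }
    }
}
```

With shuffle grouping instead, the two occurrences of "the" could land on different tasks, and each task would report a partial count of 1.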

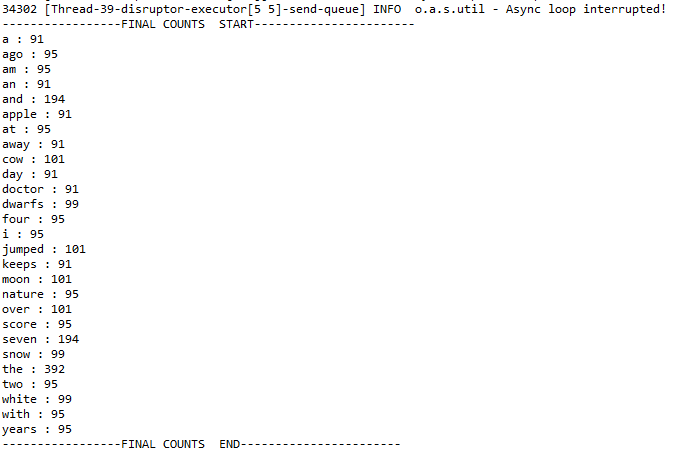

2. Execution result
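The exact counts differ from run to run, because the spout picks sentences at random and the local cluster stops after 20 seconds. As a rough sketch of what the three bolts compute together, the standalone program below applies the same split, count, and report logic to a fixed list of sentences and prints the result in the format used by `WordReport.cleanup()`. It does not use Storm, and the class `WordCountPipelineSketch` and its methods are hypothetical names for this illustration only.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class WordCountPipelineSketch {

    // SplitSentence step: break a sentence into words on single spaces.
    static String[] split(String sentence) {
        return sentence.split(" ");
    }

    // WordCount and WordReport steps: keep a running count per word and
    // remember the most recent count seen for each word.
    static Map<String, Integer> finalCounts(List<String> sentences) {
        Map<String, Integer> running = new HashMap<>(); // state held by WordCount
        Map<String, Integer> latest = new TreeMap<>();  // state held by WordReport, sorted by word
        for (String sentence : sentences) {
            for (String word : split(sentence)) {
                int count = running.merge(word, 1, Integer::sum);
                latest.put(word, count); // a newer count overwrites the older one
            }
        }
        return latest;
    }

    public static void main(String[] args) {
        List<String> sentences = Arrays.asList(
                "the cow jumped over the moon",
                "snow white and the seven dwarfs");
        System.out.println("-----------------FINAL COUNTS START-----------------------");
        for (Map.Entry<String, Integer> entry : finalCounts(sentences).entrySet()) {
            System.out.println(entry.getKey() + " : " + entry.getValue());
        }
        System.out.println("-----------------FINAL COUNTS END-----------------------");
    }
}
```

The `TreeMap` plays the role of the `Collections.sort(keys)` call in the listing: both produce the words in alphabetical order.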
