Hadoop Ecosystem: Oozie Deployment in Practice


Author: 尹正杰 (Yin Zhengjie)

Copyright notice: this is an original work. Reproduction is not permitted, and violations will be pursued under the law.

I. Introduction to Oozie

1>. What is Oozie

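The name Oozie means "elephant keeper". Oozie is an open-source workflow-engine framework, originally developed at Yahoo! and later contributed to Apache, that schedules and coordinates Hadoop jobs such as MapReduce and Pig jobs. Oozie runs inside a Java Servlet container (such as Tomcat). It is mainly used for time-based scheduling, and multiple jobs can be scheduled in the logical order in which they must run.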

2>. Oozie's functional modules

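>.Workflow

Executes flow nodes in order and supports fork (splitting into multiple parallel branches) and join (merging multiple branches back into one).

>.Coordinator

Triggers workflows on a schedule; it works like a timer.

>.Bundle Job

Groups multiple Coordinators together; it is a container for managing several coordinator jobs as one unit.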
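To make the "timer" analogy concrete, here is a minimal Python sketch of how a coordinator with a fixed frequency expands into a series of workflow runs, each with its own scheduled (nominal) time. It is a simplified model that assumes a fixed frequency in minutes and a half-open [start, end) window; real coordinators also deal with time zones, input data availability, concurrency and throttling, none of which is modelled. The function name `nominal_times` is hypothetical.

```python
from datetime import datetime, timedelta
from typing import List


def nominal_times(start: datetime, end: datetime, frequency_minutes: int) -> List[datetime]:
    """Return the scheduled time of every workflow run in [start, end).

    Simplified coordinator model: one run every `frequency_minutes`,
    beginning at `start`, for every time strictly before `end`.
    """
    if frequency_minutes <= 0:
        raise ValueError("frequency_minutes must be positive")
    step = timedelta(minutes=frequency_minutes)
    times = []
    current = start
    while current < end:
        times.append(current)
        current += step
    return times


if __name__ == "__main__":
    # One hour window, one run every 15 minutes.
    for t in nominal_times(datetime(2024, 1, 1, 0, 0), datetime(2024, 1, 1, 1, 0), 15):
        print(t.isoformat())
```

Running it prints four runs, at 00:00, 00:15, 00:30 and 00:45; the run at 01:00 is excluded because the window is half-open.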

3>. Common Oozie nodes

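>.Control Flow Nodes

Control flow nodes are generally placed at the start or end of a workflow, for example start, end and kill. They also determine the workflow's execution path, for example decision, fork and join.

>.Action Nodes

Action nodes perform the actual work, for example copying files or running a shell script.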
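The sketch below uses Python's standard `xml.etree.ElementTree` to build a minimal workflow definition that combines both kinds of nodes: `start`, `fork`, `join`, `kill` and `end` control flow nodes, plus `fs` action nodes that each create one HDFS directory in a parallel branch. The workflow name, node names, paths and the schema version `uri:oozie:workflow:0.4` are assumptions chosen for illustration; check which schema versions your Oozie release accepts before relying on it.

```python
import xml.etree.ElementTree as ET
from typing import List

WF_NS = "uri:oozie:workflow:0.4"  # assumed workflow schema version


def build_workflow(name: str, dirs: List[str]) -> str:
    """Build a workflow that creates each directory in `dirs` in its own forked branch."""
    if len(dirs) < 2:
        raise ValueError("a fork needs at least two branches")
    app = ET.Element("workflow-app", {"xmlns": WF_NS, "name": name})
    ET.SubElement(app, "start", {"to": "fork-mkdirs"})

    # Control flow node: fork starts one execution path per directory.
    fork = ET.SubElement(app, "fork", {"name": "fork-mkdirs"})
    for i in range(len(dirs)):
        ET.SubElement(fork, "path", {"start": f"mkdir-{i}"})

    # Action nodes: each runs an fs mkdir, then goes to the join (or to kill on error).
    for i, directory in enumerate(dirs):
        action = ET.SubElement(app, "action", {"name": f"mkdir-{i}"})
        fs = ET.SubElement(action, "fs")
        ET.SubElement(fs, "mkdir", {"path": directory})
        ET.SubElement(action, "ok", {"to": "join-mkdirs"})
        ET.SubElement(action, "error", {"to": "fail"})

    # Control flow node: join waits until every forked path has finished.
    ET.SubElement(app, "join", {"name": "join-mkdirs", "to": "end"})

    # Control flow nodes: kill ends the workflow with an error, end ends it normally.
    kill = ET.SubElement(app, "kill", {"name": "fail"})
    ET.SubElement(kill, "message").text = "mkdir failed: ${wf:errorMessage(wf:lastErrorNode())}"
    ET.SubElement(app, "end", {"name": "end"})
    return ET.tostring(app, encoding="unicode")


if __name__ == "__main__":
    print(build_workflow("demo-wf", ["hdfs://s101:8020/tmp/demo/a",
                                     "hdfs://s101:8020/tmp/demo/b"]))
```

The printed document would be saved as `workflow.xml` in the workflow's application directory on HDFS; the `${...}` expression in the kill message is left for Oozie to evaluate at run time.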

II. Deploying a Hadoop test environment

1>. Download the Hadoop release

I have uploaded the packages used for testing to Baidu Netdisk. Link: https://pan.baidu.com/s/1w5G5ReKdJgDJe6931bA8Lw Password: nal3

2>. Extract the CDH build of Hadoop

[yinzhengjie@s101 cdh]$ pwd

/home/yinzhengjie/download/cdh

[yinzhengjie@s101 cdh]$

[yinzhengjie@s101 cdh]$ ll

total

-rw-r--r-- yinzhengjie yinzhengjie Sep cdh5.3.6-snappy-lib-natirve.tar.gz

-rw-r--r-- yinzhengjie yinzhengjie Sep hadoop-2.5.0-cdh5.3.6.tar.gz

-rw-r--r-- yinzhengjie yinzhengjie Sep oozie-4.0.0-cdh5.3.6.tar.gz

[yinzhengjie@s101 cdh]$

[yinzhengjie@s101 cdh]$ ll

total

-rw-r--r-- yinzhengjie yinzhengjie Sep cdh5.3.6-snappy-lib-natirve.tar.gz

drwxr-xr-x yinzhengjie yinzhengjie Jul hadoop-2.5.0-cdh5.3.6

-rw-r--r-- yinzhengjie yinzhengjie Sep hadoop-2.5.0-cdh5.3.6.tar.gz

-rw-r--r-- yinzhengjie yinzhengjie Sep oozie-4.0.0-cdh5.3.6.tar.gz

[yinzhengjie@s101 cdh]$

[yinzhengjie@s101 cdh]$ cd hadoop-2.5.0-cdh5.3.6/lib/native/

[yinzhengjie@s101 native]$

[yinzhengjie@s101 native]$ ll

total 0

[yinzhengjie@s101 native]$

3>. Extract the Snappy native library package

[yinzhengjie@s101 native]$ tar -zxf /home/yinzhengjie/download/cdh/cdh5.3.6-snappy-lib-natirve.tar.gz -C ./

[yinzhengjie@s101 native]$

[yinzhengjie@s101 native]$ ll

total

drwxrwxr-x yinzhengjie yinzhengjie Sep lib

[yinzhengjie@s101 native]$ mv lib/native/* ./

[yinzhengjie@s101 native]$

[yinzhengjie@s101 native]$ ll

total 15472

drwxrwxr-x 3 yinzhengjie yinzhengjie 19 Sep 13 2015 lib

-rw-rw-r-- 1 yinzhengjie yinzhengjie 1279980 Sep 13 2015 libhadoop.a

-rw-rw-r-- 1 yinzhengjie yinzhengjie 1487052 Sep 13 2015 libhadooppipes.a

lrwxrwxrwx 1 yinzhengjie yinzhengjie 18 Sep 13 2015 libhadoop.so -> libhadoop.so.1.0.0

-rwxrwxr-x 1 yinzhengjie yinzhengjie 747310 Sep 13 2015 libhadoop.so.1.0.0

-rw-rw-r-- 1 yinzhengjie yinzhengjie 582056 Sep 13 2015 libhadooputils.a

-rw-rw-r-- 1 yinzhengjie yinzhengjie 359770 Sep 13 2015 libhdfs.a

lrwxrwxrwx 1 yinzhengjie yinzhengjie 16 Sep 13 2015 libhdfs.so -> libhdfs.so.0.0.0

-rwxrwxr-x 1 yinzhengjie yinzhengjie 228715 Sep 13 2015 libhdfs.so.0.0.0

-rw-rw-r-- 1 yinzhengjie yinzhengjie 7684148 Sep 13 2015 libnativetask.a

lrwxrwxrwx 1 yinzhengjie yinzhengjie 22 Sep 13 2015 libnativetask.so -> libnativetask.so.1.0.0

-rwxrwxr-x 1 yinzhengjie yinzhengjie 3060775 Sep 13 2015 libnativetask.so.1.0.0

-rw-r--r-- 1 yinzhengjie yinzhengjie 233506 Sep 13 2015 libsnappy.a

-rwxr-xr-x 1 yinzhengjie yinzhengjie 961 Sep 13 2015 libsnappy.la

lrwxrwxrwx 1 yinzhengjie yinzhengjie 18 Sep 13 2015 libsnappy.so -> libsnappy.so.1.2.0

lrwxrwxrwx 1 yinzhengjie yinzhengjie 18 Sep 13 2015 libsnappy.so.1 -> libsnappy.so.1.2.0

-rwxr-xr-x 1 yinzhengjie yinzhengjie 147718 Sep 13 2015 libsnappy.so.1.2.0

[yinzhengjie@s101 native]$ rm -rf lib

[yinzhengjie@s101 native]$
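After moving the files, it is worth confirming that the shared objects are in place and that their symlinks resolve, because a missing or dangling `libsnappy.so` or `libhadoop.so` link means Hadoop cannot load that native library. Hadoop 2.x builds that provide it also offer `hadoop checknative -a` for this; the standard-library sketch below is an offline alternative. The library list is taken from the listing above, and the function name `check_native_dir` is hypothetical.

```python
import os
from typing import Dict, Iterable, Optional

# Library names taken from the listing above; adjust for your build.
EXPECTED_LIBS = ("libhadoop.so", "libhdfs.so", "libsnappy.so")


def check_native_dir(native_dir: str,
                     expected: Iterable[str] = EXPECTED_LIBS) -> Dict[str, Optional[str]]:
    """Map each expected library to the file its symlink chain ends at, or None.

    os.path.exists() follows symlinks, so a dangling link is reported as None.
    """
    result: Dict[str, Optional[str]] = {}
    for name in expected:
        path = os.path.join(native_dir, name)
        if os.path.exists(path):
            result[name] = os.path.basename(os.path.realpath(path))
        else:
            result[name] = None
    return result


if __name__ == "__main__":
    import sys
    report = check_native_dir(sys.argv[1] if len(sys.argv) > 1 else ".")
    for lib, target in report.items():
        print(f"{lib:15} -> {target or 'MISSING'}")
```

Run from inside `lib/native`, every entry should point at a versioned file such as `libsnappy.so.1.2.0`; any `MISSING` line means the corresponding file or link target was not copied.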

4>. Edit the "mapred-site.xml" configuration file

[yinzhengjie@s101 hadoop-2.5.0-cdh5.3.6]$ pwd

/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6

[yinzhengjie@s101 hadoop-2.5.0-cdh5.3.6]$ ll

total

drwxr-xr-x yinzhengjie yinzhengjie Jul bin

drwxr-xr-x yinzhengjie yinzhengjie Jul bin-mapreduce1

drwxr-xr-x yinzhengjie yinzhengjie Jul cloudera

drwxr-xr-x yinzhengjie yinzhengjie Jul etc

drwxr-xr-x yinzhengjie yinzhengjie Jul examples

drwxr-xr-x yinzhengjie yinzhengjie Jul examples-mapreduce1

drwxr-xr-x yinzhengjie yinzhengjie Jul include

drwxr-xr-x yinzhengjie yinzhengjie Jul lib

drwxr-xr-x yinzhengjie yinzhengjie Jul libexec

drwxr-xr-x yinzhengjie yinzhengjie Jul sbin

drwxr-xr-x yinzhengjie yinzhengjie Jul share

drwxr-xr-x yinzhengjie yinzhengjie Jul src

[yinzhengjie@s101 hadoop-2.5.0-cdh5.3.6]$ cd etc/hadoop

[yinzhengjie@s101 hadoop]$

[yinzhengjie@s101 hadoop]$ rm -rf *.cmd

[yinzhengjie@s101 hadoop]$

[yinzhengjie@s101 hadoop]$ mv mapred-site.xml.template mapred-site.xml

[yinzhengjie@s101 hadoop]$

[yinzhengjie@s101 hadoop]$ vi mapred-site.xml

[yinzhengjie@s101 hadoop]$

[yinzhengjie@s101 hadoop]$ more mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- MapReduce JobHistory Server address; the default port is 10020 -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>s101:10020</value>

</property>

<!-- MapReduce JobHistory Server web UI address; the default port is 19888 -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>s101:19888</value>

</property>

</configuration>

[yinzhengjie@s101 hadoop]$
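Hadoop's `*-site.xml` files are flat lists of name/value pairs, so a quick programmatic check can catch mistakes such as an address whose port is missing (`s101:` instead of `s101:19888`). The minimal sketch below reads such a file with the Python standard library and flags address-style properties that are not `host:port`. The function names and the "property name ends in `address`" rule are illustrative assumptions, not a Hadoop API.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List


def parse_site_xml(text: str) -> Dict[str, str]:
    """Map each <property><name> to its <value> (empty string if missing)."""
    root = ET.fromstring(text)
    props: Dict[str, str] = {}
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        value = (prop.findtext("value") or "").strip()
        if name:
            props[name] = value
    return props


def bad_addresses(props: Dict[str, str]) -> List[str]:
    """Return names of *address properties whose value is not a valid host:port."""
    bad = []
    for name, value in props.items():
        if not name.endswith("address"):
            continue
        host, sep, port = value.rpartition(":")
        if not (sep and host and port.isdigit() and 0 < int(port) < 65536):
            bad.append(name)
    return bad


if __name__ == "__main__":
    import sys
    with open(sys.argv[1] if len(sys.argv) > 1 else "mapred-site.xml", encoding="utf-8") as f:
        settings = parse_site_xml(f.read())
    for key in bad_addresses(settings):
        print(f"check {key}: {settings[key]!r}")
```

The same check also applies to `hdfs-site.xml`, where keys such as `dfs.namenode.http-address` end in `address` as well.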


5>. Edit the "core-site.xml" configuration file

[yinzhengjie@s101 hadoop]$ vi core-site.xml

[yinzhengjie@s101 hadoop]$

[yinzhengjie@s101 hadoop]$ more core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://s101:8020</value>

</property> <property>

<name>hadoop.tmp.dir</name>

<value>/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/data</value>

</property>

</configuration>

[yinzhengjie@s101 hadoop]$
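`hadoop.tmp.dir` matters beyond temporary files because other defaults are defined relative to it. In Hadoop 2.x, `dfs.namenode.name.dir` defaults to `file://${hadoop.tmp.dir}/dfs/name` and `dfs.datanode.data.dir` to `file://${hadoop.tmp.dir}/dfs/data`, which is why the NameNode metadata ends up under the `data` directory configured here. The sketch below imitates Hadoop's `${name}` substitution in a simplified way; it is not the real `Configuration` class (no system-property lookup, just a fixed iteration limit), and the function name `expand` is hypothetical.

```python
import re
from typing import Dict

VAR = re.compile(r"\$\{([^}$\s]+)\}")


def expand(value: str, props: Dict[str, str], max_depth: int = 20) -> str:
    """Repeatedly replace ${name} references with values from props.

    Unknown names are left untouched, loosely like Hadoop leaves an
    unresolvable variable in place.
    """
    for _ in range(max_depth):
        new_value = VAR.sub(lambda m: props.get(m.group(1), m.group(0)), value)
        if new_value == value:
            return new_value
        value = new_value
    return value


if __name__ == "__main__":
    site = {"hadoop.tmp.dir": "/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/data"}
    print(expand("file://${hadoop.tmp.dir}/dfs/name", site))
```

With the value above, the NameNode directory expands to `file:///home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/data/dfs/name`.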


6>. Edit the "yarn-site.xml" configuration file

[yinzhengjie@s101 hadoop]$ more yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration> <!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property> <property>

<name>yarn.resourcemanager.hostname</name>

<value>s101</value>

</property> <property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property> <property>

<name>yarn.log-aggregation.retain-seconds</name>

<value></value>

</property>

<!-- Job history service -->

<property>

<name>yarn.log.server.url</name>

<value>http://s101:19888/jobhistory/logs/</value>

</property> <property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value></value>

</property> <property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>2.1</value>

</property> <property>

<name>mapred.child.java.opts</name>

<value>-Xmx1024m</value>

</property> </configuration>

[yinzhengjie@s101 hadoop]$
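`yarn.nodemanager.vmem-pmem-ratio` (2.1 here, which is also the Hadoop default) caps a container's virtual memory at that multiple of its physical memory allocation, and the NodeManager kills containers that go over either limit while memory checking is enabled (`yarn.nodemanager.vmem-check-enabled` and `yarn.nodemanager.pmem-check-enabled`, both true by default). The arithmetic is sketched below with hypothetical helper names; how the NodeManager actually measures a container's process tree is not modelled.

```python
from typing import Tuple


def container_limits_mb(container_mb: int, vmem_pmem_ratio: float = 2.1) -> Tuple[float, float]:
    """Return (physical_limit_mb, virtual_limit_mb) for one container."""
    return float(container_mb), container_mb * vmem_pmem_ratio


def exceeds_limits(used_pmem_mb: float, used_vmem_mb: float,
                   container_mb: int, vmem_pmem_ratio: float = 2.1) -> bool:
    """True if measured physical or virtual usage is above the container's limit."""
    pmem_limit, vmem_limit = container_limits_mb(container_mb, vmem_pmem_ratio)
    return used_pmem_mb > pmem_limit or used_vmem_mb > vmem_limit


if __name__ == "__main__":
    pmem, vmem = container_limits_mb(1024)
    print(f"1024 MB container: physical limit {pmem:.0f} MB, virtual limit {vmem:.1f} MB")
```

For a 1024 MB container the virtual limit is about 2150.4 MB. Note that a JVM started with `-Xmx1024m` generally needs a container somewhat larger than 1024 MB, because the heap is only part of the process's memory.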


7>. Edit the "hdfs-site.xml" configuration file

[yinzhengjie@s101 hadoop]$ vi hdfs-site.xml

[yinzhengjie@s101 hadoop]$

[yinzhengjie@s101 hadoop]$

[yinzhengjie@s101 hadoop]$ more hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- Number of replicas kept for each block -->

<property>

<name>dfs.replication</name>

<value></value>

</property>

<!-- Disable permission checking -->

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property> <property>

<name>dfs.namenode.secondary.http-address</name>

<value>s101:</value>

</property> <property>

<name>dfs.namenode.http-address</name>

<value>s101:</value>

</property> <property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property> </configuration>

[yinzhengjie@s101 hadoop]$
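Hadoop does not reject unknown property names: a misspelled key such as `dfs.permissions.enable` (the HDFS key is `dfs.permissions.enabled`) is simply never read, and the default stays in effect. One way to catch such near-misses is to compare each key against known names with Python's `difflib`. The key list below is a small hand-picked subset assumed for illustration (`hdfs-default.xml` is the authoritative list), and `suggest`/`check_keys` are hypothetical helpers.

```python
import difflib
from typing import Dict, Iterable, Optional

# Hand-picked subset of real HDFS keys; not complete.
KNOWN_HDFS_KEYS = [
    "dfs.replication",
    "dfs.permissions.enabled",
    "dfs.namenode.http-address",
    "dfs.namenode.secondary.http-address",
    "dfs.webhdfs.enabled",
    "dfs.namenode.name.dir",
    "dfs.datanode.data.dir",
]


def suggest(name: str, known: Iterable[str] = KNOWN_HDFS_KEYS) -> Optional[str]:
    """Return the closest known key if `name` is not itself known, else None."""
    known = list(known)
    if name in known:
        return None
    matches = difflib.get_close_matches(name, known, n=1, cutoff=0.8)
    return matches[0] if matches else None


def check_keys(props: Dict[str, str]) -> Dict[str, str]:
    """Map each suspicious key to its likely intended spelling."""
    suspicious = {}
    for key in props:
        hint = suggest(key)
        if hint is not None:
            suspicious[key] = hint
    return suspicious


if __name__ == "__main__":
    print(check_keys({"dfs.replication": "3", "dfs.permissions.enable": "false"}))
```

Keys that are unknown but not close to any listed name are not reported, so the check only flags likely typos. After starting HDFS, the `isPermissionEnabled` line in the NameNode log shows which setting actually took effect.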


8>. Edit the "slaves" configuration file

[yinzhengjie@s101 hadoop]$ pwd

/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/etc/hadoop

[yinzhengjie@s101 hadoop]$ more slaves

s102

s103

s104

[yinzhengjie@s101 hadoop]$
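The slaves file lists the hosts on which `start-dfs.sh` and `start-yarn.sh` start DataNodes and NodeManagers over SSH. Hadoop 2.x's `slaves.sh` roughly strips `#` comments and empty lines before using it, and the sketch below does something similar, then warns if `dfs.replication` is larger than the number of DataNodes (blocks would then stay under-replicated). The helper names are hypothetical.

```python
from typing import List


def parse_slaves(text: str) -> List[str]:
    """Return host names from a slaves file, skipping comments and blank lines."""
    hosts = []
    for line in text.splitlines():
        host = line.split("#", 1)[0].strip()
        if host:
            hosts.append(host)
    return hosts


def replication_warning(replication: int, hosts: List[str]) -> str:
    """Return a warning if dfs.replication exceeds the number of DataNodes, else ''."""
    if replication > len(hosts):
        return (f"dfs.replication={replication} but only {len(hosts)} DataNode(s) listed; "
                "blocks would stay under-replicated")
    return ""


if __name__ == "__main__":
    nodes = parse_slaves("s102\ns103\ns104\n")
    print(nodes, replication_warning(3, nodes) or "replication OK")
```

With the three hosts above, any replication factor up to 3 passes the check.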


9>. Distribute the files to the DataNode hosts

[yinzhengjie@s101 download]$ xcall.sh mkdir -pv /home/yinzhengjie/download/

============= s101 mkdir -pv /home/yinzhengjie/download/ ============

Command executed successfully

============= s102 mkdir -pv /home/yinzhengjie/download/ ============

mkdir: created directory ‘/home/yinzhengjie/download/’

Command executed successfully

============= s103 mkdir -pv /home/yinzhengjie/download/ ============

mkdir: created directory ‘/home/yinzhengjie/download/’

Command executed successfully

============= s104 mkdir -pv /home/yinzhengjie/download/ ============

mkdir: created directory ‘/home/yinzhengjie/download/’

Command executed successfully

[yinzhengjie@s101 download]$

[yinzhengjie@s101 download]$

[yinzhengjie@s101 download]$ xrsync.sh /home/yinzhengjie/download/cdh

=========== s102 %file ===========

Command executed successfully

=========== s103 %file ===========

Command executed successfully

=========== s104 %file ===========

Command executed successfully

[yinzhengjie@s101 download]$

[yinzhengjie@s101 download]$ xcall.sh cat /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/etc/hadoop/slaves

============= s101 cat /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/etc/hadoop/slaves ============

s102

s103

s104

Command executed successfully

============= s102 cat /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/etc/hadoop/slaves ============

s102

s103

s104

Command executed successfully

============= s103 cat /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/etc/hadoop/slaves ============

s102

s103

s104

Command executed successfully

============= s104 cat /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/etc/hadoop/slaves ============

s102

s103

s104

Command executed successfully

[yinzhengjie@s101 hadoop-2.5.0-cdh5.3.6]$
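`xcall.sh` and `xrsync.sh` are the author's own helper scripts, and their source is not shown here. Judging from their output, `xcall.sh` runs one command on s101 through s104 and `xrsync.sh` pushes a directory from s101 to s102 through s104. The Python sketch below is a hypothetical stand-in built on `ssh` and `rsync`; it assumes passwordless SSH between the nodes and rsync installed on all of them, and it only prints the commands unless `dry_run=False` is passed.

```python
import os
import shlex
import subprocess
from typing import List

ALL_HOSTS = ["s101", "s102", "s103", "s104"]  # hosts seen in the xcall.sh output
DATA_NODES = ["s102", "s103", "s104"]         # hosts listed in the slaves file


def xcall_commands(cmd: str, hosts: List[str] = ALL_HOSTS) -> List[List[str]]:
    """Build one `ssh HOST CMD` argument list per host."""
    return [["ssh", host, cmd] for host in hosts]


def xrsync_commands(path: str, hosts: List[str] = DATA_NODES) -> List[List[str]]:
    """Build one rsync argument list per host, copying `path` into the same parent directory."""
    path = os.path.abspath(path.rstrip("/"))
    parent = os.path.dirname(path)
    # No trailing slash on the source, so rsync recreates the directory itself remotely.
    return [["rsync", "-az", path, f"{host}:{parent}/"] for host in hosts]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command and, unless dry_run is set, execute it and report the exit code."""
    for argv in commands:
        print("=====", shlex.join(argv), "=====")
        if not dry_run:
            result = subprocess.run(argv)
            print("OK" if result.returncode == 0 else f"failed with exit code {result.returncode}")


if __name__ == "__main__":
    run(xcall_commands("mkdir -pv /home/yinzhengjie/download/"))
    run(xrsync_commands("/home/yinzhengjie/download/cdh"))
```

Keeping the default as a dry run makes it easy to review exactly which hosts and paths are targeted before anything is copied.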


10>. Format the NameNode

[yinzhengjie@s101 ~]$ cd /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6

[yinzhengjie@s101 hadoop-2.5.0-cdh5.3.6]$

[yinzhengjie@s101 hadoop-2.5.0-cdh5.3.6]$ bin/hdfs namenode -format

// :: INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = s101/172.30.1.101

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.5.0-cdh5.3.6

STARTUP_MSG: classpath = /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/etc/hadoop:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-cli-1.2.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/guava-11.0.2.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jsch-0.1.42.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-digester-1.8.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jets3t-0.9.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-logging-1.1.3.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-configuration-1.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-compress-1.4.1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/xz-1.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jettison-1.1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jetty-util-6.1.26.cloudera.4.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/httpcore-4.2.5.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/netty-3.6.2.Final.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-math3-3.1.1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/home/yinzh
engjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jsp-api-2.1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-collections-3.2.1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/xmlenc-0.52.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jersey-core-1.9.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-httpclient-3.1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jackson-core-asl-1.8.8.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/asm-3.2.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-el-1.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-codec-1.4.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jersey-json-1.9.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-lang-2.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/stax-api-1.0-2.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/curator-framework-2.6.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/hadoop-auth-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jackson-xc-1.8.8.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/home/yinzhengjie/do
wnload/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jackson-mapper-asl-1.8.8.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/curator-recipes-2.6.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jsr305-1.3.9.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/hamcrest-core-1.3.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/gson-2.2.4.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/paranamer-2.3.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/avro-1.7.6-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-net-3.1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/junit-4.11.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/mockito-all-1.8.5.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/activation-1.1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/servlet-api-2.5.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/hadoop-annotations-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jersey-server-1.9.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/httpclient-4.2.5.jar:/home/yinzhengjie/do
wnload/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/log4j-1.2.17.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/zookeeper-3.4.5-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jackson-jaxrs-1.8.8.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/curator-client-2.6.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/jetty-6.1.26.cloudera.4.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/lib/commons-io-2.4.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/hadoop-common-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/hadoop-nfs-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/common/hadoop-common-2.5.0-cdh5.3.6-tests.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/guava-11.0.2.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jetty-util-6.1.26.cloudera.4.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jackson-core-asl-1.8.8.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/
share/hadoop/hdfs/lib/asm-3.2.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/commons-el-1.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jackson-mapper-asl-1.8.8.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/jetty-6.1.26.cloudera.4.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/lib/commons-io-2.4.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/hadoop-hdfs-nfs-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/hadoop-hdfs-2.5.0-cdh5.3.6-tests.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/hdfs/hadoop-hdfs-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jline-0.9.94.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-cli-1.2.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/guava-11.0.2.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/h
ome/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/xz-1.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jettison-1.1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jetty-util-6.1.26.cloudera.4.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jersey-client-1.9.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-collections-3.2.1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jersey-core-1.9.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-httpclient-3.1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jackson-core-asl-1.8.8.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/asm-3.2.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-codec-1.4.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jersey-json-1.9.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-lang-2.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/guice-3.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/javax.inject-1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jackson-xc-1.8.8.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jackson-mapper-asl-1.8.8.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/
lib/jaxb-api-2.2.2.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jsr305-1.3.9.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/activation-1.1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/servlet-api-2.5.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jersey-server-1.9.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/log4j-1.2.17.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/zookeeper-3.4.5-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/aopalliance-1.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jackson-jaxrs-1.8.8.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/jetty-6.1.26.cloudera.4.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/lib/commons-io-2.4.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-client-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-common-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-appli
cations-unmanaged-am-launcher-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-api-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-common-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/yarn/hadoop-yarn-server-tests-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/xz-1.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/jackson-core-asl-1.8.8.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/asm-3.2.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/guice-3.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/javax.inject-1.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.8.8.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/avr
o-1.7.6-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/junit-4.11.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/hadoop-annotations-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-nativetask-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.5.0-cdh5.3.6-tests.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.5.0-cdh5.3.6.jar:/home/yinzhengjie/down
load/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.5.0-cdh5.3.6.jar:/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = http://github.com/cloudera/hadoop -r 6743ef286bfdd317b600adbdb154f982cf2fac7a; compiled by 'jenkins' on 2015-07-28T22:14Z

STARTUP_MSG: java = 1.8.0_131

************************************************************/

// :: INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

// :: INFO namenode.NameNode: createNameNode [-format]

Formatting using clusterid: CID-01be06db-eedb-4bcd-ac47-25440eb47bf9

// :: INFO namenode.FSNamesystem: No KeyProvider found.

// :: INFO namenode.FSNamesystem: fsLock is fair:true

// :: INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=

// :: INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

// :: INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to :::00.000

// :: INFO blockmanagement.BlockManager: The block deletion will start around Sep ::

// :: INFO util.GSet: Computing capacity for map BlocksMap

// :: INFO util.GSet: VM type = -bit

// :: INFO util.GSet: 2.0% max memory MB = 17.8 MB

// :: INFO util.GSet: capacity = ^ = entries

// :: INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

// :: INFO blockmanagement.BlockManager: defaultReplication =

// :: INFO blockmanagement.BlockManager: maxReplication =

// :: INFO blockmanagement.BlockManager: minReplication =

// :: INFO blockmanagement.BlockManager: maxReplicationStreams =

// :: INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false

// :: INFO blockmanagement.BlockManager: replicationRecheckInterval =

// :: INFO blockmanagement.BlockManager: encryptDataTransfer = false

// :: INFO blockmanagement.BlockManager: maxNumBlocksToLog =

// :: INFO namenode.FSNamesystem: fsOwner = yinzhengjie (auth:SIMPLE)

// :: INFO namenode.FSNamesystem: supergroup = supergroup

// :: INFO namenode.FSNamesystem: isPermissionEnabled = true

// :: INFO namenode.FSNamesystem: HA Enabled: false

// :: INFO namenode.FSNamesystem: Append Enabled: true

// :: INFO util.GSet: Computing capacity for map INodeMap

// :: INFO util.GSet: VM type = -bit

// :: INFO util.GSet: 1.0% max memory MB = 8.9 MB

// :: INFO util.GSet: capacity = ^ = entries

// :: INFO namenode.NameNode: Caching file names occuring more than times

// :: INFO util.GSet: Computing capacity for map cachedBlocks

// :: INFO util.GSet: VM type = -bit

// :: INFO util.GSet: 0.25% max memory MB = 2.2 MB

// :: INFO util.GSet: capacity = ^ = entries

// :: INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

// :: INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes =

// :: INFO namenode.FSNamesystem: dfs.namenode.safemode.extension =

// :: INFO namenode.FSNamesystem: Retry cache on namenode is enabled

// :: INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is millis

// :: INFO util.GSet: Computing capacity for map NameNodeRetryCache

// :: INFO util.GSet: VM type = -bit

// :: INFO util.GSet: 0.029999999329447746% max memory MB = 273.1 KB

// :: INFO util.GSet: capacity = ^ = entries

// :: INFO namenode.NNConf: ACLs enabled? false

// :: INFO namenode.NNConf: XAttrs enabled? true

// :: INFO namenode.NNConf: Maximum size of an xattr:

// :: INFO namenode.FSImage: Allocated new BlockPoolId: BP--172.30.1.101-

// :: INFO common.Storage: Storage directory /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/data/dfs/name has been successfully formatted.

// :: INFO namenode.NNStorageRetentionManager: Going to retain images with txid >=

// :: INFO util.ExitUtil: Exiting with status

// :: INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at s101/172.30.1.101

************************************************************/

[yinzhengjie@s101 hadoop-2.5.0-cdh5.3.6]$


11>.Start the Hadoop cluster

[root@s101 hadoop-2.5.0-cdh5.3.6]# pwd

/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6

[root@s101 hadoop-2.5.0-cdh5.3.6]#

[root@s101 hadoop-2.5.0-cdh5.3.6]# sbin/start-dfs.sh

Starting namenodes on [s101]

s101: starting namenode, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/hadoop-root-namenode-s101.out

s103: starting datanode, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/hadoop-root-datanode-s103.out

s104: starting datanode, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/hadoop-root-datanode-s104.out

s102: starting datanode, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/hadoop-root-datanode-s102.out

Starting secondary namenodes [s101]

s101: starting secondarynamenode, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/hadoop-root-secondarynamenode-s101.out

[root@s101 hadoop-2.5.0-cdh5.3.6]#

[root@s101 hadoop-2.5.0-cdh5.3.6]#

[root@s101 hadoop-2.5.0-cdh5.3.6]#

[root@s101 hadoop-2.5.0-cdh5.3.6]# xcall.sh jps

============= s101 jps ============

Jps

NameNode

SecondaryNameNode

Command executed successfully

============= s102 jps ============

DataNode

Jps

Command executed successfully

============= s103 jps ============

DataNode

Jps

Command executed successfully

============= s104 jps ============

DataNode

Jps

Command executed successfully

[root@s101 hadoop-2.5.0-cdh5.3.6]#

启动hdfs([root@s101 hadoop-2.5.0-cdh5.3.6]# sbin/start-dfs.sh )

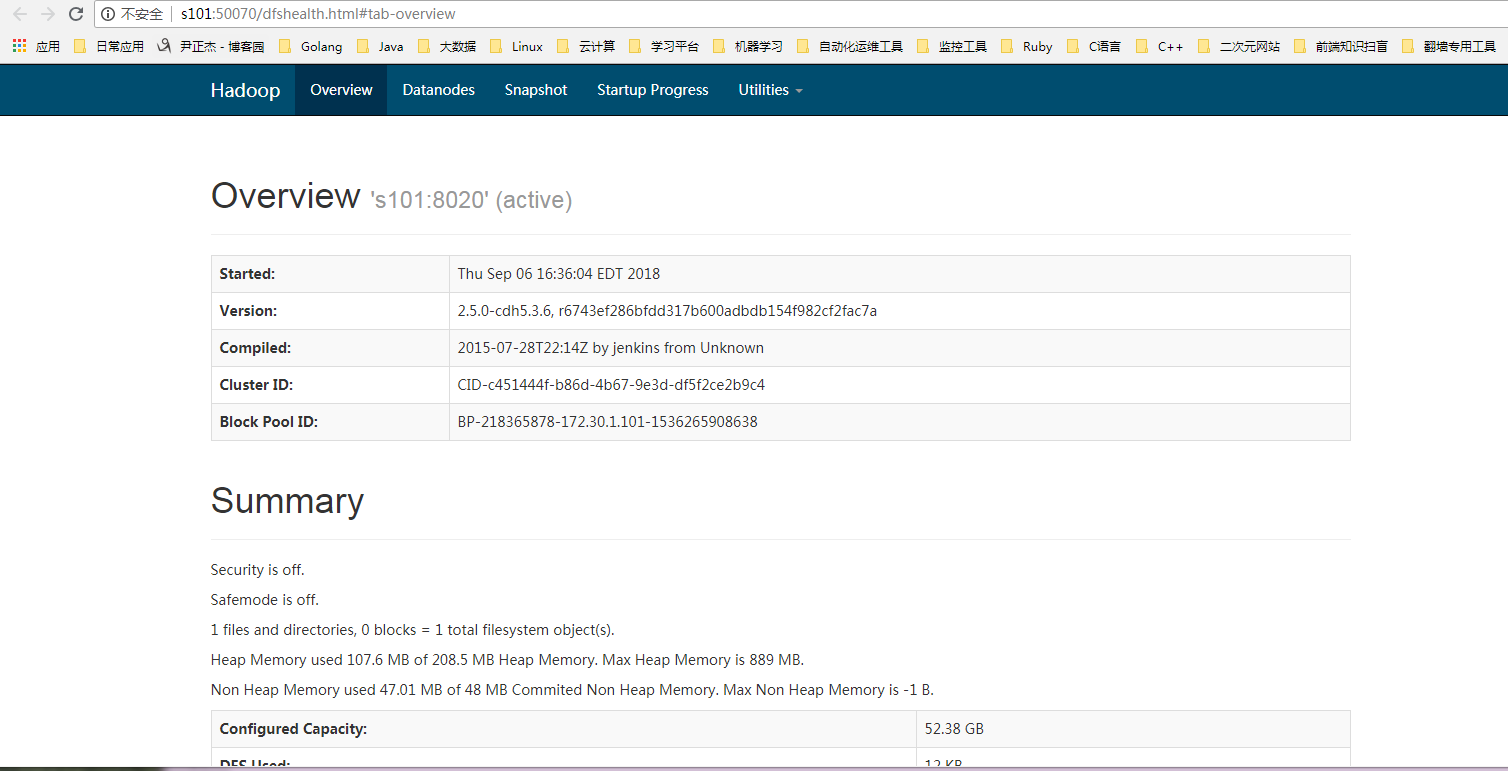

检查hdfs是否启动成功,查看webUI界面:

[root@s101 hadoop-2.5.0-cdh5.3.6]# sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/yarn-yinzhengjie-resourcemanager-s101.out

s104: starting nodemanager, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/yarn-root-nodemanager-s104.out

s102: starting nodemanager, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/yarn-root-nodemanager-s102.out

s103: starting nodemanager, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/yarn-root-nodemanager-s103.out

[root@s101 hadoop-2.5.0-cdh5.3.6]#

[root@s101 hadoop-2.5.0-cdh5.3.6]#

[root@s101 hadoop-2.5.0-cdh5.3.6]# xcall.sh jps

============= s101 jps ============

ResourceManager

NameNode

Jps

SecondaryNameNode

Command executed successfully

============= s102 jps ============

NodeManager

DataNode

Jps

Command executed successfully

============= s103 jps ============

NodeManager

DataNode

Jps

Command executed successfully

============= s104 jps ============

NodeManager

Jps

DataNode

Command executed successfully

[root@s101 hadoop-2.5.0-cdh5.3.6]#

Start YARN ([root@s101 hadoop-2.5.0-cdh5.3.6]# sbin/start-yarn.sh )

Once YARN is up, its web UI looks like this:

12>.Use the WordCount example to test whether the cluster works properly

[root@s101 hadoop-2.5.0-cdh5.3.6]# sbin/mr-jobhistory-daemon.sh start historyserver

starting historyserver, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/mapred-yinzhengjie-historyserver-s101.out

[root@s101 hadoop-2.5.0-cdh5.3.6]#

Start the JobHistory (log) server ([root@s101 hadoop-2.5.0-cdh5.3.6]# sbin/mr-jobhistory-daemon.sh start historyserver)

[root@s101 hadoop-2.5.0-cdh5.3.6]# vi /home/yinzhengjie/data/word.txt

[root@s101 hadoop-2.5.0-cdh5.3.6]#

[root@s101 hadoop-2.5.0-cdh5.3.6]# more /home/yinzhengjie/data/word.txt

a,b,c,d

b,b,f,e

a,a,c,f

d,a,b,c

[root@s101 hadoop-2.5.0-cdh5.3.6]#

Create the test file ([root@s101 hadoop-2.5.0-cdh5.3.6]# more /home/yinzhengjie/data/word.txt)

[root@s101 hadoop-2.5.0-cdh5.3.6]# bin/hdfs dfs -put /home/yinzhengjie/data/word.txt /

[root@s101 hadoop-2.5.0-cdh5.3.6]#

[root@s101 hadoop-2.5.0-cdh5.3.6]# bin/hdfs dfs -ls -R /

-rw-r--r-- root supergroup -- : /word.txt

[root@s101 hadoop-2.5.0-cdh5.3.6]#

Upload the file to HDFS ([root@s101 hadoop-2.5.0-cdh5.3.6]# bin/hdfs dfs -put /home/yinzhengjie/data/word.txt /)

[root@s101 hadoop-2.5.0-cdh5.3.6]# bin/yarn jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.5.0-cdh5.3.6.jar wordcount /word.txt /output

// :: INFO client.RMProxy: Connecting to ResourceManager at s101/172.30.1.101:

// :: INFO input.FileInputFormat: Total input paths to process :

// :: INFO mapreduce.JobSubmitter: number of splits:

// :: INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1536266578757_0001

// :: INFO impl.YarnClientImpl: Submitted application application_1536266578757_0001

// :: INFO mapreduce.Job: The url to track the job: http://s101:8088/proxy/application_1536266578757_0001/

// :: INFO mapreduce.Job: Running job: job_1536266578757_0001

// :: INFO mapreduce.Job: Job job_1536266578757_0001 running in uber mode : false

// :: INFO mapreduce.Job: map % reduce %

// :: INFO mapreduce.Job: map % reduce %

// :: INFO mapreduce.Job: map % reduce %

// :: INFO mapreduce.Job: Job job_1536266578757_0001 completed successfully

// :: INFO mapreduce.Job: Counters:

File System Counters

FILE: Number of bytes read=

FILE: Number of bytes written=

FILE: Number of read operations=

FILE: Number of large read operations=

FILE: Number of write operations=

HDFS: Number of bytes read=

HDFS: Number of bytes written=

HDFS: Number of read operations=

HDFS: Number of large read operations=

HDFS: Number of write operations=

Job Counters

Launched map tasks=

Launched reduce tasks=

Rack-local map tasks=

Total time spent by all maps in occupied slots (ms)=

Total time spent by all reduces in occupied slots (ms)=

Total time spent by all map tasks (ms)=

Total time spent by all reduce tasks (ms)=

Total vcore-seconds taken by all map tasks=

Total vcore-seconds taken by all reduce tasks=

Total megabyte-seconds taken by all map tasks=

Total megabyte-seconds taken by all reduce tasks=

Map-Reduce Framework

Map input records=

Map output records=

Map output bytes=

Map output materialized bytes=

Input split bytes=

Combine input records=

Combine output records=

Reduce input groups=

Reduce shuffle bytes=

Reduce input records=

Reduce output records=

Spilled Records=

Shuffled Maps =

Failed Shuffles=

Merged Map outputs=

GC time elapsed (ms)=

CPU time spent (ms)=

Physical memory (bytes) snapshot=

Virtual memory (bytes) snapshot=

Total committed heap usage (bytes)=

Shuffle Errors

BAD_ID=

CONNECTION=

IO_ERROR=

WRONG_LENGTH=

WRONG_MAP=

WRONG_REDUCE=

File Input Format Counters

Bytes Read=

File Output Format Counters

Bytes Written=

[root@s101 hadoop-2.5.0-cdh5.3.6]#

Run the MapReduce job ([root@s101 hadoop-2.5.0-cdh5.3.6]# bin/yarn jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.5.0-cdh5.3.6.jar wordcount /word.txt /output)

Check whether new files have appeared in HDFS:

Check whether the MapReduce job produced log output:

Check whether the following page is shown:
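You can predict what /output should contain by reproducing the job locally. Below is a minimal sketch under one assumption: the bundled WordCount example splits each line on whitespace (the java.util.StringTokenizer defaults), not on commas. If that holds, a line such as "a,b,c,d" counts as a single word. The function name wordcount_local is hypothetical. Byte-order sorting (LC_ALL=C) is used to approximate the key order in the single reducer's output file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: approximate the bundled WordCount example on a local file.
# Assumption: tokens are split on whitespace only (space, tab, CR, form feed, newline),
# mirroring java.util.StringTokenizer's default delimiters.
wordcount_local() {
  tr -s ' \t\r\f' '\n' < "${1:?usage: wordcount_local <file>}" \
    | sed '/^$/d' \
    | LC_ALL=C sort \
    | uniq -c \
    | awk '{ print $2 "\t" $1 }'   # emit "word<TAB>count" like the job output
}
```

For the four-line word.txt above, this prints four lines, each with a count of 1. To compare against the cluster's result, you could run something like `diff <(wordcount_local /home/yinzhengjie/data/word.txt) <(bin/hdfs dfs -cat /output/part-r-00000)`.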

III.Deploying Oozie

1>.Stop the Hadoop cluster

[root@s101 hadoop-2.5.0-cdh5.3.6]# xcall.sh jps

============= s101 jps ============

ResourceManager

JobHistoryServer

Jps

NameNode

SecondaryNameNode

Command executed successfully

============= s102 jps ============

NodeManager

Jps

DataNode

Command executed successfully

============= s103 jps ============

NodeManager

Jps

DataNode

Command executed successfully

============= s104 jps ============

Jps

NodeManager

DataNode

Command executed successfully

[root@s101 hadoop-2.5.0-cdh5.3.6]#

[root@s101 hadoop-2.5.0-cdh5.3.6]# sbin/stop-all.sh

This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh

Stopping namenodes on [s101]

s101: stopping namenode

s104: stopping datanode

s102: stopping datanode

s103: stopping datanode

Stopping secondary namenodes [s101]

s101: stopping secondarynamenode

stopping yarn daemons

stopping resourcemanager

s104: stopping nodemanager

s103: stopping nodemanager

s102: stopping nodemanager

no proxyserver to stop

[root@s101 hadoop-2.5.0-cdh5.3.6]#

[root@s101 hadoop-2.5.0-cdh5.3.6]# xcall.sh jps

============= s101 jps ============

Jps

JobHistoryServer

Command executed successfully

============= s102 jps ============

Jps

Command executed successfully

============= s103 jps ============

Jps

Command executed successfully

============= s104 jps ============

Jps

Command executed successfully

[root@s101 hadoop-2.5.0-cdh5.3.6]#

Stop the Hadoop cluster ([root@s101 hadoop-2.5.0-cdh5.3.6]# sbin/stop-all.sh )

[root@s101 hadoop-2.5.0-cdh5.3.6]# xcall.sh jps

============= s101 jps ============

Jps

JobHistoryServer

Command executed successfully

============= s102 jps ============

Jps

Command executed successfully

============= s103 jps ============

Jps

Command executed successfully

============= s104 jps ============

Jps

Command executed successfully

[root@s101 hadoop-2.5.0-cdh5.3.6]#

[root@s101 hadoop-2.5.0-cdh5.3.6]#

[root@s101 hadoop-2.5.0-cdh5.3.6]# sbin/mr-jobhistory-daemon.sh stop historyserver

stopping historyserver

[root@s101 hadoop-2.5.0-cdh5.3.6]#

[root@s101 hadoop-2.5.0-cdh5.3.6]# xcall.sh jps

============= s101 jps ============

Jps

Command executed successfully

============= s102 jps ============

Jps

Command executed successfully

============= s103 jps ============

Jps

Command executed successfully

============= s104 jps ============

Jps

Command executed successfully

[root@s101 hadoop-2.5.0-cdh5.3.6]#

Stop the JobHistory (log) server ([root@s101 hadoop-2.5.0-cdh5.3.6]# sbin/mr-jobhistory-daemon.sh stop historyserver)

2>.Extract the Oozie installation package

[root@s101 ~]# cd /home/yinzhengjie/download/cdh/

[root@s101 cdh]# ll

total

-rw-r--r-- yinzhengjie yinzhengjie Sep cdh5.3.6-snappy-lib-natirve.tar.gz

drwxr-xr-x yinzhengjie yinzhengjie Sep : hadoop-2.5.0-cdh5.3.6

-rw-r--r-- yinzhengjie yinzhengjie Sep hadoop-2.5.0-cdh5.3.6.tar.gz

-rw-r--r-- yinzhengjie yinzhengjie Sep oozie-4.0.0-cdh5.3.6.tar.gz

[root@s101 cdh]# tar -zxf oozie-4.0.0-cdh5.3.6.tar.gz -C ./

[root@s101 cdh]#

[root@s101 cdh]# ll

total

-rw-r--r-- yinzhengjie yinzhengjie Sep cdh5.3.6-snappy-lib-natirve.tar.gz

drwxr-xr-x yinzhengjie yinzhengjie Sep : hadoop-2.5.0-cdh5.3.6

-rw-r--r-- yinzhengjie yinzhengjie Sep hadoop-2.5.0-cdh5.3.6.tar.gz

drwxr-xr-x Jul oozie-4.0.0-cdh5.3.6

-rw-r--r-- yinzhengjie yinzhengjie Sep oozie-4.0.0-cdh5.3.6.tar.gz

[root@s101 cdh]# cd oozie-4.0.0-cdh5.3.6

[root@s101 oozie-4.0.0-cdh5.3.6]# ll

total

drwxr-xr-x Jul bin

drwxr-xr-x Jul conf

drwxr-xr-x Jul docs

drwxr-xr-x Jul lib

drwxr-xr-x Jul libtools

-rw-r--r-- Jul LICENSE.txt

-rw-r--r-- Jul NOTICE.txt

drwxr-xr-x Jul oozie-core

-rw-r--r-- Jul oozie-examples.tar.gz

-rw-r--r-- Jul oozie-hadooplibs-4.0.0-cdh5.3.6.tar.gz

drwxr-xr-x Jul oozie-server

-r--r--r-- Jul oozie-sharelib-4.0.0-cdh5.3.6.tar.gz

-r--r--r-- Jul oozie-sharelib-4.0.0-cdh5.3.6-yarn.tar.gz

-rw-r--r-- Jul oozie.war

-rw-r--r-- Jul release-log.txt

drwxr-xr-x Jul src

[root@s101 oozie-4.0.0-cdh5.3.6]#


3>.Modify the Hadoop configuration files and distribute them to every DataNode

[root@s101 hadoop]# more core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://s101:8020</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/data</value>

</property>

<!-- Host(s) of the Oozie server (* = any host). Note: "root" in the name is the user that runs the Oozie server; if Oozie runs as yinzhengjie instead, the name should be "hadoop.proxyuser.yinzhengjie.hosts" -->

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<!-- User groups that Oozie is allowed to proxy (* = any group). Note: likewise, if Oozie runs as yinzhengjie, the name should be "hadoop.proxyuser.yinzhengjie.groups" -->

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

</configuration>

[root@s101 hadoop]#

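The user embedded in the two proxy-user property names must be the Linux user that runs the Oozie server (root in this walkthrough). The minimal sketch below prints both `<property>` blocks for any user, so they can be pasted into core-site.xml. The helper name proxyuser_props is hypothetical and not part of Hadoop or Oozie. The hosts and groups both default to "*", as in the config above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print hadoop.proxyuser.<user>.hosts/groups properties for core-site.xml.
# $1 = user running the Oozie server, $2 = allowed hosts (default *), $3 = allowed groups (default *).
proxyuser_props() {
  local user="${1:?usage: proxyuser_props <oozie-user> [hosts] [groups]}"
  local hosts="${2:-*}"     # quoted so "*" is never glob-expanded
  local groups="${3:-*}"
  cat <<EOF
<property>
  <name>hadoop.proxyuser.${user}.hosts</name>
  <value>${hosts}</value>
</property>
<property>
  <name>hadoop.proxyuser.${user}.groups</name>
  <value>${groups}</value>
</property>
EOF
}
```

Running `proxyuser_props root` reproduces the two properties used above. Running `proxyuser_props yinzhengjie` gives the variant for a non-root Oozie user.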

[root@s101 hadoop]# more mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- MapReduce JobHistory Server address; default port 10020 -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>s101:10020</value>

</property>

<!-- MapReduce JobHistory Server web UI address; default port 19888 -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>s101:19888</value>

</property>

</configuration>

[root@s101 hadoop]#


[root@s101 hadoop]# more yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>s101</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value></value>

</property>

<!-- Job history (log server) URL -->

<property>

<name>yarn.log.server.url</name>

<value>http://s101:19888/jobhistory/logs/</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value></value>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>2.1</value>

</property>

<property>

<name>mapred.child.java.opts</name>

<value>-Xmx1024m</value>

</property>

</configuration>

[root@s101 hadoop]#


[root@s101 hadoop]# pwd

/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/etc/hadoop

[root@s101 hadoop]#

[root@s101 hadoop]#

[root@s101 hadoop]# xrsync.sh /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/etc/hadoop

=========== s102 %file ===========

Command executed successfully

=========== s103 %file ===========

Command executed successfully

=========== s104 %file ===========

Command executed successfully

[root@s101 hadoop]#

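xrsync.sh is the author's own distribution script, and its source is not shown in this post. The sketch below is a hypothetical stand-in that does the same job: it copies a file or directory to the same absolute path on s102 to s104. It makes three assumptions: passwordless ssh from s101, rsync installed on every node, and an identical directory layout on all nodes. The HOSTS and RSYNC variables are illustrative; RSYNC=echo gives a dry run.

```shell
#!/usr/bin/env bash
# Hypothetical equivalent of xrsync.sh (not the author's script).
HOSTS=${HOSTS:-"s102 s103 s104"}

xrsync() {
  local target="${1:?usage: xrsync <file-or-dir>}"
  local dir abs host
  # Resolve to an absolute, symlink-free path so the copy lands at the same place remotely.
  dir=$(cd "$(dirname "$target")" && pwd -P) || return 1
  abs="$dir/$(basename "$target")"
  for host in $HOSTS; do
    echo "=========== $host $abs ==========="
    # No trailing slash on the source: rsync recreates the last path component under the parent.
    ${RSYNC:-rsync} -a "$abs" "$host:$dir/"
  done
}
```

With these assumptions, `xrsync /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/etc/hadoop` would push the edited configs to every DataNode.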

4>.Start the Hadoop cluster

[root@s101 ~]# cd /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6

[root@s101 hadoop-2.5.0-cdh5.3.6]# sbin/start-dfs.sh

Starting namenodes on [s101]

s101: starting namenode, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/hadoop-root-namenode-s101.out

s104: starting datanode, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/hadoop-root-datanode-s104.out

s102: starting datanode, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/hadoop-root-datanode-s102.out

s103: starting datanode, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/hadoop-root-datanode-s103.out

Starting secondary namenodes [s101]

s101: starting secondarynamenode, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/hadoop-root-secondarynamenode-s101.out

[root@s101 hadoop-2.5.0-cdh5.3.6]#

Start HDFS ([root@s101 hadoop-2.5.0-cdh5.3.6]# sbin/start-dfs.sh )

[root@s101 hadoop-2.5.0-cdh5.3.6]# sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/yarn-yinzhengjie-resourcemanager-s101.out

s104: starting nodemanager, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/yarn-root-nodemanager-s104.out

s102: starting nodemanager, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/yarn-root-nodemanager-s102.out

s103: starting nodemanager, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/yarn-root-nodemanager-s103.out

[root@s101 hadoop-2.5.0-cdh5.3.6]#

Start YARN ([root@s101 hadoop-2.5.0-cdh5.3.6]# sbin/start-yarn.sh )

[root@s101 hadoop-2.5.0-cdh5.3.6]# sbin/mr-jobhistory-daemon.sh start historyserver

starting historyserver, logging to /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/logs/mapred-yinzhengjie-historyserver-s101.out

[root@s101 hadoop-2.5.0-cdh5.3.6]#

[root@s101 hadoop-2.5.0-cdh5.3.6]#

Start the JobHistory server ([root@s101 hadoop-2.5.0-cdh5.3.6]# sbin/mr-jobhistory-daemon.sh start historyserver)

[root@s101 hadoop-2.5.0-cdh5.3.6]# xcall.sh jps

============= s101 jps ============

JobHistoryServer

ResourceManager

SecondaryNameNode

NameNode

Jps

Command executed successfully

============= s102 jps ============

NodeManager

DataNode

Jps

Command executed successfully

============= s103 jps ============

DataNode

Jps

NodeManager

Command executed successfully

============= s104 jps ============

Jps

DataNode

NodeManager

Command executed successfully

[root@s101 hadoop-2.5.0-cdh5.3.6]#

Check the status of the Hadoop cluster ([root@s101 hadoop-2.5.0-cdh5.3.6]# xcall.sh jps)
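xcall.sh, used throughout this post to run jps on every node, is also the author's own script, and its source is not shown. Below is a hypothetical minimal equivalent. It assumes passwordless ssh to s101 through s104 and that the command (for example jps) is on each node's non-interactive PATH. The HOSTS and SSH variables are illustrative and exist so the loop can be tested or redirected.

```shell
#!/usr/bin/env bash
# Hypothetical equivalent of xcall.sh (not the author's script):
# run the same command on every node and print a banner per host.
HOSTS=${HOSTS:-"s101 s102 s103 s104"}

xcall() {
  local host
  for host in $HOSTS; do
    echo "============= $host $* ============"
    # SSH defaults to plain ssh; override it to run the loop against something else.
    if ${SSH:-ssh} "$host" "$@"; then
      echo "Command executed successfully"
    else
      echo "Command failed on $host" >&2
    fi
  done
}
```

With these assumptions, `xcall jps` prints one banner and process list per node, similar to the transcripts above.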

5>.Extract hadooplibs into the Oozie root directory

[root@s101 ~]# cd /home/yinzhengjie/download/cdh/oozie-4.0.0-cdh5.3.6

[root@s101 oozie-4.0.0-cdh5.3.6]# ll

total

drwxr-xr-x Jul bin

drwxr-xr-x Jul conf

drwxr-xr-x Jul docs

drwxr-xr-x Jul lib

drwxr-xr-x Jul libtools

-rw-r--r-- Jul LICENSE.txt

-rw-r--r-- Jul NOTICE.txt

drwxr-xr-x Jul oozie-core

-rw-r--r-- Jul oozie-examples.tar.gz

-rw-r--r-- Jul oozie-hadooplibs-4.0.0-cdh5.3.6.tar.gz

drwxr-xr-x Jul oozie-server

-r--r--r-- Jul oozie-sharelib-4.0.0-cdh5.3.6.tar.gz

-r--r--r-- Jul oozie-sharelib-4.0.0-cdh5.3.6-yarn.tar.gz

-rw-r--r-- Jul oozie.war

-rw-r--r-- Jul release-log.txt

drwxr-xr-x Jul src

[root@s101 oozie-4.0.0-cdh5.3.6]# tar -zxf oozie-hadooplibs-4.0.0-cdh5.3.6.tar.gz -C ../

[root@s101 oozie-4.0.0-cdh5.3.6]#

[root@s101 oozie-4.0.0-cdh5.3.6]# ll

total

drwxr-xr-x Jul bin

drwxr-xr-x Jul conf

drwxr-xr-x Jul docs

drwxr-xr-x Jul hadooplibs

drwxr-xr-x Jul lib

drwxr-xr-x Jul libtools

-rw-r--r-- Jul LICENSE.txt

-rw-r--r-- Jul NOTICE.txt

drwxr-xr-x Jul oozie-core

-rw-r--r-- Jul oozie-examples.tar.gz

-rw-r--r-- Jul oozie-hadooplibs-4.0.0-cdh5.3.6.tar.gz

drwxr-xr-x Jul oozie-server

-r--r--r-- Jul oozie-sharelib-4.0.0-cdh5.3.6.tar.gz

-r--r--r-- Jul oozie-sharelib-4.0.0-cdh5.3.6-yarn.tar.gz

-rw-r--r-- Jul oozie.war

-rw-r--r-- Jul release-log.txt

drwxr-xr-x Jul src

[root@s101 oozie-4.0.0-cdh5.3.6]# ll hadooplibs/

total

drwxr-xr-x Jul hadooplib-2.5.0-cdh5.3.6.oozie-4.0.0-cdh5.3.6

drwxr-xr-x Jul hadooplib-2.5.0-mr1-cdh5.3.6.oozie-4.0.0-cdh5.3.6

[root@s101 oozie-4.0.0-cdh5.3.6]#

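Extracting with `-C ../` from inside the Oozie directory only works if the hadooplibs tarball wraps its contents in a top-level oozie-4.0.0-cdh5.3.6/ directory. The transcript implies exactly that, because hadooplibs/ appears inside the Oozie directory afterwards. The hypothetical helper below lets you confirm a tarball's top-level entries before extracting it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the distinct top-level entries of a .tar.gz without extracting it.
tar_top_level() {
  tar -tzf "${1:?usage: tar_top_level <archive.tar.gz>}" | cut -d/ -f1 | sort -u
}
```

If `tar_top_level oozie-hadooplibs-4.0.0-cdh5.3.6.tar.gz` prints only oozie-4.0.0-cdh5.3.6, then `-C ../` is the right target.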

6>.Create a libext directory under the Oozie root directory

[root@s101 oozie-4.0.0-cdh5.3.6]# ll

total

drwxr-xr-x Jul bin

drwxr-xr-x Jul conf

drwxr-xr-x Jul docs

drwxr-xr-x Jul hadooplibs

drwxr-xr-x Jul lib

drwxr-xr-x Jul libtools

-rw-r--r-- Jul LICENSE.txt

-rw-r--r-- Jul NOTICE.txt

drwxr-xr-x Jul oozie-core

-rw-r--r-- Jul oozie-examples.tar.gz

-rw-r--r-- Jul oozie-hadooplibs-4.0.0-cdh5.3.6.tar.gz

drwxr-xr-x Jul oozie-server

-r--r--r-- Jul oozie-sharelib-4.0.0-cdh5.3.6.tar.gz

-r--r--r-- Jul oozie-sharelib-4.0.0-cdh5.3.6-yarn.tar.gz

-rw-r--r-- Jul oozie.war

-rw-r--r-- Jul release-log.txt

drwxr-xr-x Jul src

[root@s101 oozie-4.0.0-cdh5.3.6]#

[root@s101 oozie-4.0.0-cdh5.3.6]# pwd

/home/yinzhengjie/download/cdh/oozie-4.0.0-cdh5.3.6

[root@s101 oozie-4.0.0-cdh5.3.6]#

[root@s101 oozie-4.0.0-cdh5.3.6]# mkdir libext

[root@s101 oozie-4.0.0-cdh5.3.6]#

[root@s101 oozie-4.0.0-cdh5.3.6]# ll

total

drwxr-xr-x Jul bin

drwxr-xr-x Jul conf

drwxr-xr-x Jul docs

drwxr-xr-x Jul hadooplibs

drwxr-xr-x Jul lib

drwxr-xr-x root root Sep : libext

drwxr-xr-x Jul libtools

-rw-r--r-- Jul LICENSE.txt

-rw-r--r-- Jul NOTICE.txt

drwxr-xr-x Jul oozie-core

-rw-r--r-- Jul oozie-examples.tar.gz

-rw-r--r-- Jul oozie-hadooplibs-4.0.0-cdh5.3.6.tar.gz

drwxr-xr-x Jul oozie-server

-r--r--r-- Jul oozie-sharelib-4.0.0-cdh5.3.6.tar.gz

-r--r--r-- Jul oozie-sharelib-4.0.0-cdh5.3.6-yarn.tar.gz

-rw-r--r-- Jul oozie.war

-rw-r--r-- Jul release-log.txt

drwxr-xr-x Jul src

[root@s101 oozie-4.0.0-cdh5.3.6]#


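7>.Copy the jars from hadooplibs into the libext directory (the command uses hadooplib-2.5.0-cdh5.3.6, i.e. the YARN/MRv2 build, not the -mr1- one):

[root@s101 oozie-4.0.0-cdh5.3.6]# cp -ra hadooplibs/hadooplib-2.5.0-cdh5.3.6.oozie-4.0.0-cdh5.3.6/* libext/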

[root@s101 oozie-4.0.0-cdh5.3.6]# ll libext/

total 60000

-rw-r--r-- 1 1106 4001 62983 Jul 28 2015 activation-1.1.jar

-rw-r--r-- 1 1106 4001 44925 Jul 28 2015 apacheds-i18n-2.0.0-M15.jar

-rw-r--r-- 1 1106 4001 691479 Jul 28 2015 apacheds-kerberos-codec-2.0.0-M15.jar

-rw-r--r-- 1 1106 4001 16560 Jul 28 2015 api-asn1-api-1.0.0-M20.jar

-rw-r--r-- 1 1106 4001 79912 Jul 28 2015 api-util-1.0.0-M20.jar

-rw-r--r-- 1 1106 4001 437647 Jul 28 2015 avro-1.7.6-cdh5.3.6.jar

-rw-r--r-- 1 1106 4001 11948376 Jul 28 2015 aws-java-sdk-1.7.4.jar

-rw-r--r-- 1 1106 4001 188671 Jul 28 2015 commons-beanutils-1.7.0.jar

-rw-r--r-- 1 1106 4001 206035 Jul 28 2015 commons-beanutils-core-1.8.0.jar

-rw-r--r-- 1 1106 4001 41123 Jul 28 2015 commons-cli-1.2.jar

-rw-r--r-- 1 1106 4001 58160 Jul 28 2015 commons-codec-1.4.jar

-rw-r--r-- 1 1106 4001 575389 Jul 28 2015 commons-collections-3.2.1.jar

-rw-r--r-- 1 1106 4001 241367 Jul 28 2015 commons-compress-1.4.1.jar

-rw-r--r-- 1 1106 4001 298829 Jul 28 2015 commons-configuration-1.6.jar

-rw-r--r-- 1 1106 4001 143602 Jul 28 2015 commons-digester-1.8.jar

-rw-r--r-- 1 1106 4001 305001 Jul 28 2015 commons-httpclient-3.1.jar

-rw-r--r-- 1 1106 4001 185140 Jul 28 2015 commons-io-2.4.jar

-rw-r--r-- 1 1106 4001 261809 Jul 28 2015 commons-lang-2.4.jar

-rw-r--r-- 1 1106 4001 52915 Jul 28 2015 commons-logging-1.1.jar

-rw-r--r-- 1 1106 4001 1599627 Jul 28 2015 commons-math3-3.1.1.jar

-rw-r--r-- 1 1106 4001 273370 Jul 28 2015 commons-net-3.1.jar

-rw-r--r-- 1 1106 4001 68866 Jul 28 2015 curator-client-2.6.0.jar

-rw-r--r-- 1 1106 4001 185245 Jul 28 2015 curator-framework-2.6.0.jar

-rw-r--r-- 1 1106 4001 244782 Jul 28 2015 curator-recipes-2.5.0.jar

-rw-r--r-- 1 1106 4001 190432 Jul 28 2015 gson-2.2.4.jar

-rw-r--r-- 1 1106 4001 1648200 Jul 28 2015 guava-11.0.2.jar

-rw-r--r-- 1 1106 4001 21576 Jul 28 2015 hadoop-annotations-2.5.0-cdh5.3.6.jar

-rw-r--r-- 1 1106 4001 73104 Jul 28 2015 hadoop-auth-2.5.0-cdh5.3.6.jar

-rw-r--r-- 1 1106 4001 14377281 Jul 28 2015 hadoop-aws-2.5.0-cdh5.3.6.jar

-rw-r--r-- 1 1106 4001 9634 Jul 28 2015 hadoop-client-2.5.0-cdh5.3.6.jar

-rw-r--r-- 1 1106 4001 3172847 Jul 28 2015 hadoop-common-2.5.0-cdh5.3.6.jar

-rw-r--r-- 1 1106 4001 7754145 Jul 28 2015 hadoop-hdfs-2.5.0-cdh5.3.6.jar

-rw-r--r-- 1 1106 4001 505686 Jul 28 2015 hadoop-mapreduce-client-app-2.5.0-cdh5.3.6.jar

-rw-r--r-- 1 1106 4001 750361 Jul 28 2015 hadoop-mapreduce-client-common-2.5.0-cdh5.3.6.jar

-rw-r--r-- 1 1106 4001 1522127 Jul 28 2015 hadoop-mapreduce-client-core-2.5.0-cdh5.3.6.jar

-rw-r--r-- 1 1106 4001 42973 Jul 28 2015 hadoop-mapreduce-client-jobclient-2.5.0-cdh5.3.6.jar

-rw-r--r-- 1 1106 4001 50786 Jul 28 2015 hadoop-mapreduce-client-shuffle-2.5.0-cdh5.3.6.jar

-rw-r--r-- 1 1106 4001 1607504 Jul 28 2015 hadoop-yarn-api-2.5.0-cdh5.3.6.jar

-rw-r--r-- 1 1106 4001 126058 Jul 28 2015 hadoop-yarn-client-2.5.0-cdh5.3.6.jar

-rw-r--r-- 1 1106 4001 1432412 Jul 28 2015 hadoop-yarn-common-2.5.0-cdh5.3.6.jar

-rw-r--r-- 1 1106 4001 279691 Jul 28 2015 hadoop-yarn-server-common-2.5.0-cdh5.3.6.jar

-rw-r--r-- 1 1106 4001 433368 Jul 28 2015 httpclient-4.2.5.jar

-rw-r--r-- 1 1106 4001 227708 Jul 28 2015 httpcore-4.2.5.jar

-rw-r--r-- 1 1106 4001 33483 Jul 28 2015 jackson-annotations-2.2.3.jar

-rw-r--r-- 1 1106 4001 192699 Jul 28 2015 jackson-core-2.2.3.jar

-rw-r--r-- 1 1106 4001 227500 Jul 28 2015 jackson-core-asl-1.8.8.jar

-rw-r--r-- 1 1106 4001 865838 Jul 28 2015 jackson-databind-2.2.3.jar

-rw-r--r-- 1 1106 4001 17884 Jul 28 2015 jackson-jaxrs-1.8.8.jar

-rw-r--r-- 1 1106 4001 668564 Jul 28 2015 jackson-mapper-asl-1.8.8.jar

-rw-r--r-- 1 1106 4001 32353 Jul 28 2015 jackson-xc-1.8.8.jar

-rw-r--r-- 1 1106 4001 105134 Jul 28 2015 jaxb-api-2.2.2.jar

-rw-r--r-- 1 1106 4001 130458 Jul 28 2015 jersey-client-1.9.jar

-rw-r--r-- 1 1106 4001 458739 Jul 28 2015 jersey-core-1.9.jar

-rw-r--r-- 1 1106 4001 177417 Jul 28 2015 jetty-util-6.1.26.cloudera.2.jar

-rw-r--r-- 1 1106 4001 33015 Jul 28 2015 jsr305-1.3.9.jar

-rw-r--r-- 1 1106 4001 1045744 Jul 28 2015 leveldbjni-all-1.8.jar

-rw-r--r-- 1 1106 4001 481535 Jul 28 2015 log4j-1.2.16.jar

-rw-r--r-- 1 1106 4001 1199572 Jul 28 2015 netty-3.6.2.Final.jar

-rw-r--r-- 1 1106 4001 29555 Jul 28 2015 paranamer-2.3.jar

-rw-r--r-- 1 1106 4001 533455 Jul 28 2015 protobuf-java-2.5.0.jar

-rw-r--r-- 1 1106 4001 105112 Jul 28 2015 servlet-api-2.5.jar

-rw-r--r-- 1 1106 4001 26084 Jul 28 2015 slf4j-api-1.7.5.jar

-rw-r--r-- 1 1106 4001 8869 Jul 28 2015 slf4j-log4j12-1.7.5.jar

-rw-r--r-- 1 1106 4001 995968 Jul 28 2015 snappy-java-1.0.4.1.jar

-rw-r--r-- 1 1106 4001 23346 Jul 28 2015 stax-api-1.0-2.jar

-rw-r--r-- 1 1106 4001 15010 Jul 28 2015 xmlenc-0.52.jar

-rw-r--r-- 1 1106 4001 94672 Jul 28 2015 xz-1.0.jar

-rw-r--r-- 1 1106 4001 1351929 Jul 28 2015 zookeeper-3.4.5-cdh5.3.6.jar

[root@s101 oozie-4.0.0-cdh5.3.6]#


8>.Copy the MySQL driver jar into the libext directory:

[root@s101 oozie-4.0.0-cdh5.3.6]# cp -a /home/yinzhengjie/download/mysql-connector-java-5.1.41.jar libext/

[root@s101 oozie-4.0.0-cdh5.3.6]#


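9>.Copy ext-2.2.zip into the libext/ directory (ext is the JavaScript framework Oozie uses to render its web console). Note that the captured command below copies mysql-connector-java-5.1.27-bin.jar rather than ext-2.2.zip; for this step the file to copy is ext-2.2.zip, from wherever you downloaded it.

[root@s101 oozie-4.0.0-cdh5.3.6]# cp -a /home/yinzhengjie/download/cdh/mysql-connector-java-5.1.27-bin.jar libext/

[root@s101 oozie-4.0.0-cdh5.3.6]#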

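Steps 7 to 9 all feed libext/, and it is easy to miss a file. As captured, the steps also leave two MySQL drivers (5.1.41 and 5.1.27) side by side. The hypothetical pre-flight check below looks for a hadoop-common jar, ext-2.2.zip, and a MySQL JDBC driver. It only warns when more than one driver is present, since duplicate driver jars on one classpath are at best redundant. The function name and the file patterns it checks are assumptions based on the file names in this post.

```shell
#!/usr/bin/env bash
# Hypothetical sanity check for Oozie's libext/ directory (not an Oozie tool).
# Returns non-zero if a required file is missing; prints a warning for duplicate MySQL drivers.
check_libext() {
  local dir="${1:-libext}" rc=0 n
  ls "$dir"/hadoop-common-*.jar >/dev/null 2>&1 || { echo "missing: hadoop-common jar"; rc=1; }
  [ -f "$dir/ext-2.2.zip" ] || { echo "missing: ext-2.2.zip"; rc=1; }
  n=$(ls "$dir" | grep -c '^mysql-connector-java-.*\.jar$')
  if [ "$n" -eq 0 ]; then
    echo "missing: MySQL JDBC driver"; rc=1
  elif [ "$n" -gt 1 ]; then
    echo "warning: $n MySQL JDBC drivers found, keep only one"
  fi
  return $rc
}
```

Run `check_libext libext` from the Oozie root directory before moving on to the configuration step.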

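10>.Modify the Oozie configuration file

Set the following properties in conf/oozie-site.xml:

Property: oozie.service.JPAService.jdbc.driver

Value: com.mysql.jdbc.Driver

Description: the JDBC driver class

Property: oozie.service.JPAService.jdbc.url

Value: jdbc:mysql://s101:3306/oozie

Description: address of the database Oozie uses

Property: oozie.service.JPAService.jdbc.username

Value: root

Description: database user name

Property: oozie.service.JPAService.jdbc.password

Value: yinzhengjie

Description: database password

Property: oozie.service.HadoopAccessorService.hadoop.configurations

Value: *=/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/etc/hadoop

Description: makes Oozie use Hadoop's configuration files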

[root@s101 oozie-4.0.0-cdh5.3.6]# vi conf/oozie-site.xml
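To avoid typos while editing conf/oozie-site.xml in vi, you can generate the five properties listed above as XML and paste them inside the existing `<configuration>` element. The sketch below does that with hypothetical helper functions. The values are the ones from this walkthrough, including the Hadoop config directory /home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/etc/hadoop. Values are not XML-escaped, which is fine for these particular strings.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: print <property> elements for conf/oozie-site.xml.
oozie_prop() {
  printf '<property>\n  <name>%s</name>\n  <value>%s</value>\n</property>\n' "$1" "$2"
}

# $1 (optional) = Hadoop configuration directory Oozie should read.
oozie_site_fragment() {
  local hadoop_conf="${1:-/home/yinzhengjie/download/cdh/hadoop-2.5.0-cdh5.3.6/etc/hadoop}"
  oozie_prop oozie.service.JPAService.jdbc.driver   com.mysql.jdbc.Driver
  oozie_prop oozie.service.JPAService.jdbc.url      jdbc:mysql://s101:3306/oozie
  oozie_prop oozie.service.JPAService.jdbc.username root
  oozie_prop oozie.service.JPAService.jdbc.password yinzhengjie
  # "*=" means: use this Hadoop configuration for any JobTracker/ResourceManager address.
  oozie_prop oozie.service.HadoopAccessorService.hadoop.configurations "*=${hadoop_conf}"
}
```

`oozie_site_fragment > /tmp/oozie-props.xml` leaves a file you can read into vi with `:r /tmp/oozie-props.xml`. If conf/oozie-site.xml already defines any of these names, edit those entries instead of duplicating them.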

11>.Create the Oozie database in MySQL

[root@s101 ~]# mysql -uroot -pyinzhengjie

Warning: Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is

Server version: 5.6. MySQL Community Server (GPL)

Copyright (c) , , Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| cm |

| mysql |

| performance_schema |

+--------------------+

4 rows in set (0.01 sec)

mysql> create database oozie;

Query OK, 1 row affected (0.00 sec)

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| cm |

| mysql |

| oozie |

| performance_schema |

+--------------------+

5 rows in set (0.00 sec)

mysql>


12>.Initialize Oozie

[root@s101 oozie-4.0.0-cdh5.3.6]# bin/oozie-setup.sh sharelib create -fs hdfs://s101:8020 -locallib oozie-sharelib-4.0.0-cdh5.3.6-yarn.tar.gz

setting CATALINA_OPTS="$CATALINA_OPTS -Xmx1024m"

log4j:WARN No appenders could be found for logger (org.apache.hadoop.util.Shell).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/yinzhengjie/download/cdh/oozie-4.0.0-cdh5.3.6/libtools/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/yinzhengjie/download/cdh/oozie-4.0.0-cdh5.3.6/libtools/slf4j-simple-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/yinzhengjie/download/cdh/oozie-4.0.0-cdh5.3.6/libext/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

the destination path for sharelib is: /user/root/share/lib/lib_20180906212707

[root@s101 oozie-4.0.0-cdh5.3.6]#

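Upload the YARN sharelib tarball from the Oozie directory to HDFS. Tip: the tarball is unpacked automatically, under /user/root/share/lib/lib_<timestamp> as shown in the output ([root@s101 oozie-4.0.0-cdh5.3.6]# bin/oozie-setup.sh sharelib create -fs hdfs://s101:8020 -locallib oozie-sharelib-4.0.0-cdh5.3.6-yarn.tar.gz )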

[root@s101 oozie-4.0.0-cdh5.3.6]# bin/oozie-setup.sh db create -run -sqlfile oozie.sql

setting CATALINA_OPTS="$CATALINA_OPTS -Xmx1024m"

Validate DB Connection

DONE

Check DB schema does not exist

DONE

Check OOZIE_SYS table does not exist

DONE

Create SQL schema

DONE

Create OOZIE_SYS table

DONE

Oozie DB has been created for Oozie version '4.0.0-cdh5.3.6'

The SQL commands have been written to: oozie.sql

[root@s101 oozie-4.0.0-cdh5.3.6]#
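`db create -run -sqlfile oozie.sql` both executes the schema and saves it to oozie.sql. A quick way to see which tables it should have created is to pull the names out of that file. The one-liner below is a hypothetical helper. It assumes each statement starts with `CREATE TABLE NAME (`, as in the file shown next.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list table names created by a schema script such as oozie.sql.
sql_tables() {
  grep -o 'CREATE TABLE [A-Za-z0-9_]*' "${1:?usage: sql_tables <file.sql>}" | awk '{ print $3 }'
}
```

`sql_tables oozie.sql` can then be compared with the output of `mysql -uroot -p oozie -e 'SHOW TABLES;'`.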

[root@s101 oozie-4.0.-cdh5.3.6]# more oozie.sql

CREATE TABLE BUNDLE_ACTIONS (bundle_action_id VARCHAR() NOT NULL, bundle_id VARCHAR(), coord_id VARCHAR(), coord_name VARCHAR(), critical INTEGER, last_modified_time DATETIME, pending INTEGER, status VARCHAR(), PRIMARY KEY (bundle_action_id)) ENGINE = innodb;

CREATE TABLE BUNDLE_JOBS (id VARCHAR() NOT NULL, app_name VARCHAR(), app_path VARCHAR(), conf MEDIUMBLOB, created_time DATETIME, end_time DATETIME, external_id VARCHAR(), group_name VARCHAR(), job_xml MEDIUMBLOB, kickoff_time DATETIME, last_modified_time DATETIME, orig_job_xml MEDIUMBLOB, pause_time DATETIME, pending INTEGER, start_time DATETIME, status VARCHAR(), suspended_time DATETIME, time_out INTEGER, time_unit VARCHAR(), user_name VARCHAR(), PRIMARY KEY (id)) ENGINE = innodb;

CREATE TABLE COORD_ACTIONS (id VARCHAR() NOT NULL, action_number INTEGER, action_xml MEDIUMBLOB, console_url VARCHAR(), created_conf MEDIUMBLOB, created_time DATETIME, error_code VARCHAR(), error_message VARCHAR(), external_id VARCHAR(), external_status VARCHAR(), job_id VARCHAR(), last_modified_time DATETIME, missing_dependencies MEDIUMBLOB, nominal_time DATETIME, pending INTEGER, push_missing_dependencies MEDIUMBLOB, rerun_time DATETIME, run_conf MEDIUMBLOB, sla_xml MEDIUMBLOB, status VARCHAR(), time_out INTEGER, tracker_uri VARCHAR(), job_type VARCHAR(), PRIMARY KEY (id)) ENGINE = innodb;

CREATE TABLE COORD_JOBS (id VARCHAR() NOT NULL, app_name VARCHAR(), app_namespace VARCHAR(), app_path VARCHAR(), bundle_id VARCHAR(), concurrency INTEGER, conf MEDIUMBLOB, created_time DATETIME, done_materialization INTEGER, end_time DATETIME, execution VARCHAR(), external_id VARCHAR(), frequency VARCHAR(), group_name VARCHAR(), job_xml MEDIUMBLOB, last_action_number INTEGER, last_action DATETIME, last_modified_time DATETIME, mat_throttling INTEGER, next_matd_time DATETIME, orig_job_xml MEDIUMBLOB, pause_time DATETIME, pending INTEGER, sla_xml MEDIUMBLOB, start_time DATETIME, status VARCHAR(), suspended_time DATETIME, time_out INTEGER, time_unit VARCHAR(), time_zone VARCHAR(), user_name VARCHAR(), PRIMARY KEY (id)) ENGINE = innodb;

CREATE TABLE OPENJPA_SEQUENCE_TABLE (ID TINYINT NOT NULL, SEQUENCE_VALUE BIGINT, PRIMARY KEY (ID)) ENGINE = innodb;

CREATE TABLE SLA_EVENTS (event_id BIGINT NOT NULL AUTO_INCREMENT, alert_contact VARCHAR(), alert_frequency VARCHAR(), alert_percentage VARCHAR(), app_name VARCHAR(), dev_contact VARCHAR(), group_name VARCHAR(), job_data TEXT, notification_msg TEXT, parent_client_id VARCHAR(), parent_sla_id VARCHAR(), qa_contact VARCHAR(), se_contact VARCHAR(), sla_id VARCHAR(), upstream_apps TEXT, user_name VARCHAR(), bean_type VARCHAR(), app_type VARCHAR(), event_type VARCHAR(), expected_end DATETIME, expected_start DATETIME, job_status VARCHAR(), status_timestamp DATETIME, PRIMARY KEY (event_id)) ENGINE = innodb;

CREATE TABLE SLA_REGISTRATION (job_id VARCHAR() NOT NULL, app_name VARCHAR(), app_type VARCHAR(), created_time DATETIME, expected_duration BIGINT, expected_end DATETIME, expected_start DATETIME, job_data VARCHAR(), nominal_time DATETIME, notification_msg VARCHAR(), parent_id VARCHAR(), sla_config VARCHAR(), upstream_apps VARCHAR(), user_name VARCHAR(), PRIMARY KEY (job_id)) ENGINE = innodb;

CREATE TABLE SLA_SUMMARY (job_id VARCHAR() NOT NULL, actual_duration BIGINT, actual_end DATETIME, actual_start DATETIME, app_name VARCHAR(), app_type VARCHAR(), created_time DATETIME, event_processed TINYINT, event_status VARCHAR(), expected_duration BIGINT, expected_end DATETIME, expected_start DATETIME, job_status VARCHAR(), last_modified DATETIME, nominal_time DATETIME, parent_id VARCHAR(), sla_status VARCHAR(), user_name VARCHAR(), PRIMARY KEY (job_id)) ENGINE = innodb;

CREATE TABLE VALIDATE_CONN (id BIGINT NOT NULL, dummy INTEGER, PRIMARY KEY (id)) ENGINE = innodb;

CREATE TABLE WF_ACTIONS (id VARCHAR() NOT NULL, conf MEDIUMBLOB, console_url VARCHAR(), created_time DATETIME, cred VARCHAR(), data MEDIUMBLOB, end_time DATETIME, error_code VARCHAR(), error_message VARCHAR(), execution_path VARCHAR(), external_child_ids MEDIUMBLOB, external_id VARCHAR(), external_status VARCHAR(), last_check_time DATETIME, log_token VARCHAR(), name VARCHAR(), pending INTEGER, pending_age DATETIME, retries INTEGER, signal_value VARCHAR(), sla_xml MEDIUMBLOB, start_time DATETIME, stats MEDIUMBLOB, status VARCHAR(), tracker_uri VARCHAR(), transition VARCHAR(), type VARCHAR(), user_retry_count INTEGER, user_retry_interval INTEGER, user_retry_max INTEGER, wf_id VARCHAR(), PRIMARY KEY (id)) ENGINE = innodb;

CREATE TABLE WF_JOBS (id VARCHAR() NOT NULL, app_name VARCHAR(), app_path VARCHAR(), conf MEDIUMBLOB, created_time DATETIME, end_time DATETIME, external_id VARCHAR(), group_name VARCHAR(), last_modified_time DATETIME, log_token VARCHAR(), parent_id VARCHAR(), proto_action_conf MEDIUMBLOB, run INTEGER, sla_xml MEDIUMBLOB, start_time DATETIME, status VARCHAR(), user_name VARCHAR(), wf_instance MEDIUMBLOB, PRIMARY KEY (id)) ENGINE = innodb;

CREATE INDEX I_BNDLTNS_BUNDLE_ID ON BUNDLE_ACTIONS (bundle_id);

CREATE INDEX I_BNDLJBS_CREATED_TIME ON BUNDLE_JOBS (created_time);

CREATE INDEX I_BNDLJBS_LAST_MODIFIED_TIME ON BUNDLE_JOBS (last_modified_time);

CREATE INDEX I_BNDLJBS_STATUS ON BUNDLE_JOBS (status);

CREATE INDEX I_BNDLJBS_SUSPENDED_TIME ON BUNDLE_JOBS (suspended_time);

CREATE INDEX I_CRD_TNS_CREATED_TIME ON COORD_ACTIONS (created_time);

CREATE INDEX I_CRD_TNS_EXTERNAL_ID ON COORD_ACTIONS (external_id);

CREATE INDEX I_CRD_TNS_JOB_ID ON COORD_ACTIONS (job_id);

CREATE INDEX I_CRD_TNS_LAST_MODIFIED_TIME ON COORD_ACTIONS (last_modified_time);

CREATE INDEX I_CRD_TNS_NOMINAL_TIME ON COORD_ACTIONS (nominal_time);

CREATE INDEX I_CRD_TNS_RERUN_TIME ON COORD_ACTIONS (rerun_time);

CREATE INDEX I_CRD_TNS_STATUS ON COORD_ACTIONS (status);

CREATE INDEX I_CRD_JBS_BUNDLE_ID ON COORD_JOBS (bundle_id);

CREATE INDEX I_CRD_JBS_CREATED_TIME ON COORD_JOBS (created_time);

CREATE INDEX I_CRD_JBS_LAST_MODIFIED_TIME ON COORD_JOBS (last_modified_time);

CREATE INDEX I_CRD_JBS_NEXT_MATD_TIME ON COORD_JOBS (next_matd_time);

CREATE INDEX I_CRD_JBS_STATUS ON COORD_JOBS (status);

CREATE INDEX I_CRD_JBS_SUSPENDED_TIME ON COORD_JOBS (suspended_time);

CREATE INDEX I_SL_VNTS_DTYPE ON SLA_EVENTS (bean_type);

CREATE INDEX I_SL_RRTN_NOMINAL_TIME ON SLA_REGISTRATION (nominal_time);

CREATE INDEX I_SL_SMRY_APP_NAME ON SLA_SUMMARY (app_name);

CREATE INDEX I_SL_SMRY_EVENT_PROCESSED ON SLA_SUMMARY (event_processed);

CREATE INDEX I_SL_SMRY_LAST_MODIFIED ON SLA_SUMMARY (last_modified);

CREATE INDEX I_SL_SMRY_NOMINAL_TIME ON SLA_SUMMARY (nominal_time);

CREATE INDEX I_SL_SMRY_PARENT_ID ON SLA_SUMMARY (parent_id);

CREATE INDEX I_WF_CTNS_PENDING_AGE ON WF_ACTIONS (pending_age);

CREATE INDEX I_WF_CTNS_STATUS ON WF_ACTIONS (status);

CREATE INDEX I_WF_CTNS_WF_ID ON WF_ACTIONS (wf_id);

CREATE INDEX I_WF_JOBS_END_TIME ON WF_JOBS (end_time);

CREATE INDEX I_WF_JOBS_EXTERNAL_ID ON WF_JOBS (external_id);

CREATE INDEX I_WF_JOBS_LAST_MODIFIED_TIME ON WF_JOBS (last_modified_time);

CREATE INDEX I_WF_JOBS_PARENT_ID ON WF_JOBS (parent_id);

CREATE INDEX I_WF_JOBS_STATUS ON WF_JOBS (status);

create table OOZIE_SYS (name varchar(), data varchar())

insert into OOZIE_SYS (name, data) values ('db.version', '')

insert into OOZIE_SYS (name, data) values ('oozie.version', '4.0.0-cdh5.3.6')

[root@s101 oozie-4.0.0-cdh5.3.6]#

Create the Oozie database tables in MySQL and save the SQL statements that were run to oozie.sql ([root@s101 oozie-4.0.0-cdh5.3.6]# bin/oozie-setup.sh db create -run -sqlfile oozie.sql)
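One way to double-check this step is to compare the tables declared in oozie.sql with the tables MySQL actually holds. The bash sketch below extracts table names from the generated file. It makes these assumptions:

- The function name is hypothetical.
- The keyword and the table name of each `CREATE TABLE` statement are not split across lines in the file. The breaks in the `more` output above appear to come only from terminal wrapping.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the table names created by an SQL script read
# on stdin, one per line, sorted and de-duplicated. Matching is
# case-insensitive because the generated oozie.sql uses both
# "CREATE TABLE" and "create table".
tables_in_sql() {
  grep -oiE 'create table [A-Za-z0-9_]+' | awk '{ print $3 }' | sort -u
}

# Example (bash, requires MySQL access; prints tables found on only one side):
#   comm -3 <(tables_in_sql < oozie.sql) <(mysql -uroot -p -N -B oozie -e 'SHOW TABLES' | sort -u)
```

Both sides go through `sort -u` under the same locale, which is what `comm` needs to compare them correctly. If nothing is printed, every table in the script exists in the `oozie` database and vice versa.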

[root@s101 oozie-4.0.0-cdh5.3.6]# yum -y install unzip

Loaded plugins: fastestmirror

base | 3.6 kB ::

elasticsearch-.x | 2.9 kB ::

extras | 3.4 kB ::

mysql-connectors-community | 2.5 kB ::

mysql-tools-community | 2.5 kB ::

mysql56-community | 2.5 kB ::

updates | 3.4 kB ::

mysql-connectors-community/x86_64/primary_db | kB ::

Loading mirror speeds from cached hostfile

* base: mirrors.huaweicloud.com

* extras: mirrors.huaweicloud.com

* updates: mirrors.huaweicloud.com

Resolving Dependencies

--> Running transaction check

---> Package unzip.x86_64 :6.0-.el7 will be installed

--> Finished Dependency Resolution Dependencies Resolved =====================================================================================================================================================================================================================

Package Arch Version Repository Size

=====================================================================================================================================================================================================================

Installing:

unzip x86_64 6.0-.el7 base k

Transaction Summary

=====================================================================================================================================================================================================================

Install Package

Total download size: k

Installed size: k

Downloading packages:

unzip-6.0-.el7.x86_64.rpm | kB ::

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : unzip-6.0-.el7.x86_64 /

Verifying : unzip-6.0-.el7.x86_64 /

Installed:

unzip.x86_64 :6.0-.el7

Complete!

[root@s101 oozie-4.0.0-cdh5.3.6]#

[root@s101 oozie-4.0.0-cdh5.3.6]#

Install the unzip package ([root@s101 oozie-4.0.0-cdh5.3.6]# yum -y install unzip)
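The post installs unzip, and zip right after, without saying what needs them. Presumably a later step repacks the Oozie WAR file with these tools, though that is an assumption here. If you script the deployment, a small bash check can install only what is missing. It makes these assumptions:

- The helper name `missing_tools` is hypothetical.
- The host runs CentOS/RHEL, where the yum package names match the command names, as they do for zip and unzip.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each command name given as an argument that is
# not found on the PATH, one per line. Prints nothing when all are present.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Example (run as root; installs only the tools that are absent).
# $pkgs is intentionally unquoted so each name becomes a separate argument.
#   pkgs=$(missing_tools zip unzip)
#   [ -n "$pkgs" ] && yum -y install $pkgs
```

The `[ -n "$pkgs" ]` guard matters: `yum -y install` with no package names fails instead of doing nothing.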

[root@s101 oozie-4.0.0-cdh5.3.6]# yum -y install zip

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.huaweicloud.com

* extras: mirrors.huaweicloud.com

* updates: mirrors.huaweicloud.com

Resolving Dependencies

--> Running transaction check

---> Package zip.x86_64 :3.0-.el7 will be installed

--> Finished Dependency Resolution Dependencies Resolved =====================================================================================================================================================================================================================

Package Arch Version Repository Size