Netty实践与NIO原理

一、阻塞IO与非阻塞IO

Linux网络IO模型(5种)

(1)阻塞IO模型

所有文件操作都是阻塞的,以套接字接口为例,在进程空间中调用recvfrom,系统调用直到数据包到达且被复制到应用进程缓冲区或发生错误时才返回,期间会一直等待(阻塞)。模型如图:

(2)非阻塞IO模型

recvfrom从应用层到内核时,如果该缓冲区没数据,直接返回一个EWOULDBLOCK错误,反复轮询检查这个状态,看是否有数据到来。如图:

(3)IO复用模型

Linux提高select/poll,进程通过将一个或多个fd(file descriptor)传递给select或poll系统调用,阻塞在select操作上,侦测多个fd是否处于就绪状态。select/poll顺序扫描fd是否就绪,而且支持的fd数量有限。Linux还提供了一个epoll系统调用,使用基于事件驱动的方式代替顺序扫描,性能更高。当有fd就绪时,立即回调函数rollback。如图:

(4)信号驱动IO模型

首先开启套接口信号驱动IO功能,通过系统调用sigaction执行一个信号处理函数,该函数立即返回,进程继续工作,它是非阻塞的。当数据准备就绪时,就为该进程生成一个SIGIO信号,通过信号回调通知应用程序调用recfrom来读取数据,通知主循环函数处理数据。如图:

(5)异步IO模型

告知内核启动某个操作,让内核在整个操作完成后(包括将数据从内核复制到用户自己的缓冲区)通知我们。它与信号驱动的主要区别是:信号驱动IO由内核告知我们何时开始一个IO操作,异步IO模型由内核通知我们IO操作何时已经完成。如图所示:

IO多路复用的应用:

通过把多个IO的阻塞复用到一个select的阻塞上,使系统在单线程下可处理多个客户端请求。与传统多线程模型相比,最大优势是系统开销小,不需要创建额外进程或线程。主要应用场景如下:

(1)服务器需要同时处理多个处于监听状态或连接状态的套接字

(2)服务器需要同时处理多种网络协议的套接字

Linux最终选择epoll支持IO多路复用的系统调用,优点如下:

(1)支持一个进程打开的socket描述符(FD)不受限制(select单线程默认1024太少,epoll仅受限操作系统最大文件句柄数,1GB内存机器大约10万句柄)

(2)IO效率不会随FD数目增加而线性下降(只对“活跃”的socke进行t操作,活跃socket才会去主动调用callback函数)

(3)使用mmap加速内核与用户空间消息传递(同一块内存,避免不必要复制)

(4)API简单:创建epoll描述符,添加监听事件,阻塞等待监听事件发生,关闭epoll描述符等

二、阻塞IO的例子(结合线程池)

//1.服务端

package com.xbai.io;

import java.io.IOException;

import java.net.ServerSocket;

import java.net.Socket;

import com.xbai.executor.TimeServerHandlerExecutePool;

import com.xbai.handler.TimeServerHandler;

public class TimeServerExecutor {

public static void main(String[] args)throws IOException {

int port =8080;

if(args !=null && args.length >0){

try {

port = Integer.valueOf(args[0]);

}catch (Exception e) {

// TODO: handle exception

}

}

ServerSocket server =null;

try {

server =new ServerSocket(port);

System.out.println("The time server is started in port : " + port);

TimeServerHandlerExecutePool singleExecutor =new TimeServerHandlerExecutePool(50,10000);

while(true){

Socket socket = server.accept();

singleExecutor.execute(new TimeServerHandler(socket));

}

}finally {

if(server !=null){

System.out.println("The time server closed");

server.close();

server =null;

}

}

}

}

//2.服务端线程池

package com.xbai.executor;

import java.util.concurrent.ArrayBlockingQueue;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.ThreadPoolExecutor;

import java.util.concurrent.TimeUnit;

public class TimeServerHandlerExecutePool {

private ExecutorService executor;

public TimeServerHandlerExecutePool(int maxPoolSize,int queueSize){

executor =new ThreadPoolExecutor(Runtime.getRuntime().availableProcessors(),maxPoolSize,120L,TimeUnit.SECONDS,

new ArrayBlockingQueue(queueSize));//线程池要执行的任务阻塞成一个队列,其内部的机制是等待唤醒生产者和消费者线程,有一个生产就可唤醒一个消费,去看源码的线程池原理

}

public void execute(Runnable task){

executor.execute(task);

}

}

//3.服务端处理器

package com.xbai.handler;

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.io.OutputStreamWriter;

import java.io.PrintWriter;

import java.net.Socket;

import java.sql.Date;

public class TimeServerHandler implements Runnable{

private Socketsocket;

public TimeServerHandler(Socket socket) {

this.socket = socket;

}

@Override

public void run() {

// TODO Auto-generated method stub

BufferedReader br =null;

PrintWriter pw =null;

try {

br =new BufferedReader(new InputStreamReader(socket.getInputStream()));

pw =new PrintWriter(socket.getOutputStream(),true);

String curTime =null;

String msg =null;

while(true){

msg = br.readLine();

if(msg ==null){

break;

}

System.out.println("The time server received order:" + msg);

curTime ="query time order".equalsIgnoreCase(msg) ?new Date(

System.currentTimeMillis()).toString() :"bad order";

pw.println(curTime);//这里不写println,就无法插入换行符,那边就不能readLine,一直阻塞,无法获取数据

}

}catch (IOException e) {

if(br !=null){

try {

br.close();

}catch (IOException e1) {

// TODO Auto-generated catch block

e1.printStackTrace();

}

}

if(pw !=null){

pw.close();

pw =null;

}

if(socket !=null){

try {

socket.close();

}catch (IOException e1) {

// TODO Auto-generated catch block

e1.printStackTrace();

}

socket =null;

}

}

}

}

//4.客户端代码

package com.xbai.io;

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.io.PrintWriter;

import java.net.Socket;

import java.net.UnknownHostException;

public class TimeClient {

public static void main(String[] args) {

int port =8080;

if(args !=null && args.length >0){

try {

port = Integer.valueOf(args[0]);

}catch (Exception e) {

// TODO: handle exception

}

}

Socket socket =null;

BufferedReader br =null;

PrintWriter pw =null;

try {

socket =new Socket("localhost",port);

br =new BufferedReader(new InputStreamReader(socket.getInputStream()));

pw =new PrintWriter(socket.getOutputStream(),true);

pw.println("query time order");

System.out.println("send order succeed");

String resp = br.readLine();

System.out.println("Now is :" + resp);

}catch (IOException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}finally{

if(pw !=null){

pw.close();

pw =null;

}

if(br !=null){

try {

br.close();

}catch (IOException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

br =null;

}

if(socket !=null){

try {

socket.close();

}catch (IOException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

socket =null;

}

}

}

}

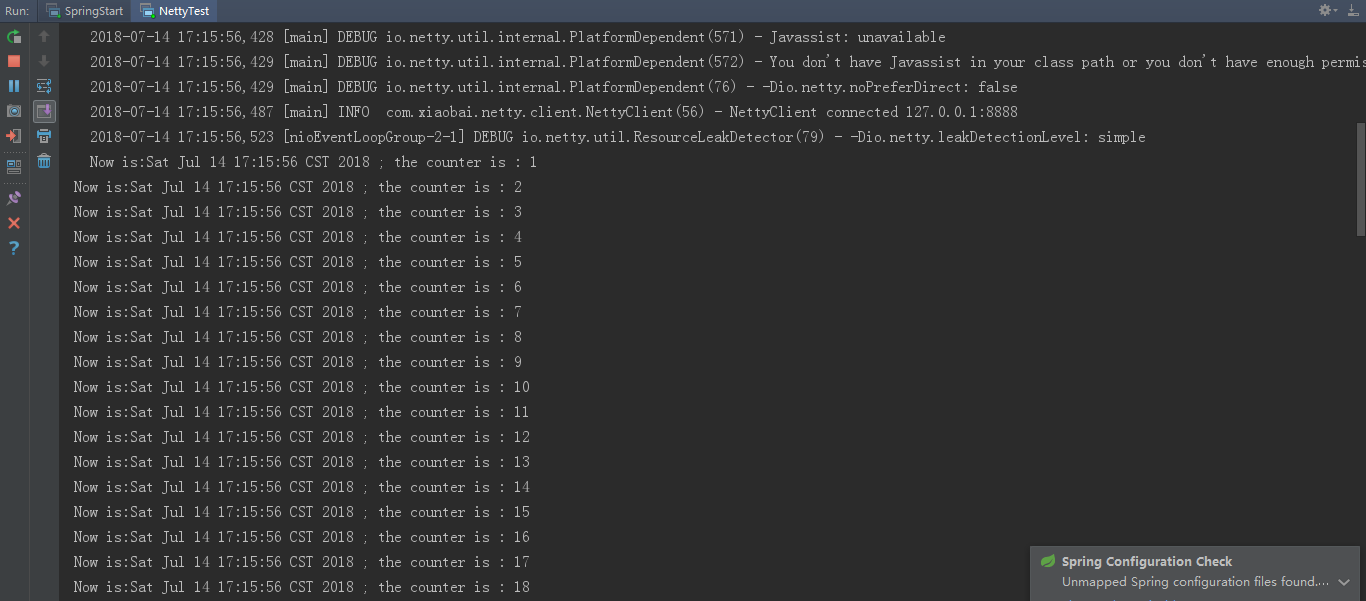

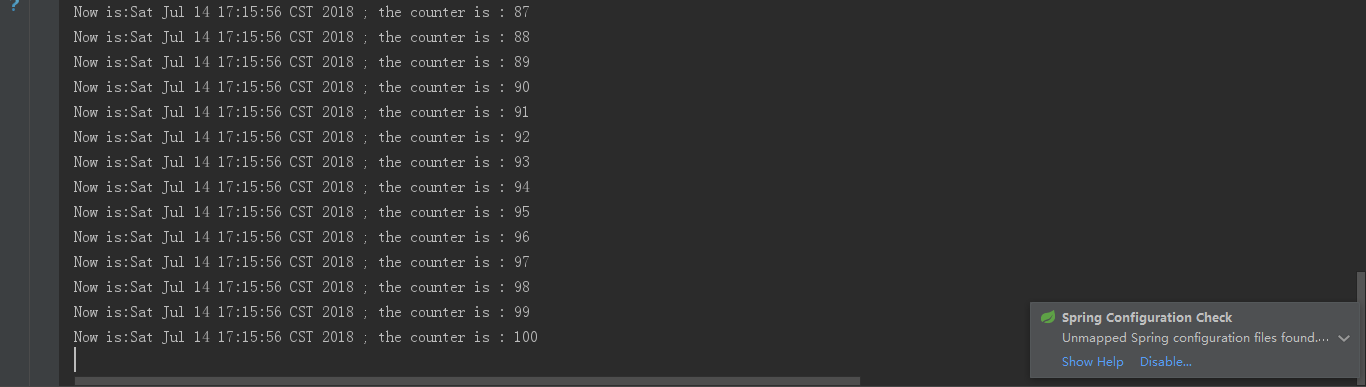

执行结果

服务端启动及收发:

客户端发送和接收:

三、非阻塞IO的例子(原生Java NIO,目前有写半包等问题,怀疑服务端没有写出去导致的客户端Selector的关闭状态异常)

//1.服务端主程序

package com.xiaobai.nio; public class NIOServer { public static void main(String[] args) {

MultiplexerTimeServer timeServer = new MultiplexerTimeServer();

new Thread(timeServer,"NIO-MultiplexerTimeServer-001").start();

}

}

//2.服务端timeServer

package com.xiaobai.nio; import java.io.IOException;

import java.net.InetSocketAddress;

import java.nio.ByteBuffer;

import java.nio.channels.SelectionKey;

import java.nio.channels.Selector;

import java.nio.channels.ServerSocketChannel;

import java.nio.channels.SocketChannel;

import java.util.Date;

import java.util.Iterator;

import java.util.Set; public class MultiplexerTimeServer implements Runnable {

private Selector selector;

private ServerSocketChannel servChannel;

private volatile boolean stop; public MultiplexerTimeServer() {

try {

selector = Selector.open();//建立Selector

servChannel = ServerSocketChannel.open();//建立Channel

servChannel.configureBlocking(false);

servChannel.socket().bind(new InetSocketAddress(2048), 1024);//ServerSocket绑定

servChannel.register(selector, SelectionKey.OP_ACCEPT);//向Selector注册ACCEPT事件

System.out.println("The time server is started in port 2048");

} catch (IOException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

}

@Override

public void run() {

while(!stop){

try {

selector.select(1000);//轮询Channel

Set<SelectionKey> selectedKeys = selector.selectedKeys();

Iterator<SelectionKey> it = selectedKeys.iterator();

SelectionKey key = null;

while(it.hasNext()){

key = it.next();

it.remove();//移除它

try {

handleInput(key);

} catch (Exception e) {

if(key != null){

key.cancel();

if(key.channel() != null){

key.channel().close();

}

}

}

}

} catch (IOException e1) {

// TODO Auto-generated catch block

e1.printStackTrace();

}

}

if(selector != null){

try {

selector.close();

} catch (IOException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

}

}

private void handleInput(SelectionKey key) throws IOException{

if(key.isValid()){

//处理新接入的请求

if(key.isAcceptable()){//此前已向Selector注册,并已open

//获取server channel

ServerSocketChannel ssc = (ServerSocketChannel) key.channel();

//获取client channel

SocketChannel sc = ssc.accept();

sc.configureBlocking(false);

//第一次捕捉到的客户端向Selector注册READ事件

sc.register(selector, SelectionKey.OP_READ);

}

//处理已注册的读事件

if(key.isReadable()){

//获取客户端Channel

SocketChannel sc = (SocketChannel) key.channel();

ByteBuffer readBuffer = ByteBuffer.allocate(1024);

int readBytes = sc.read(readBuffer);//读到缓冲

if(readBytes > 0){

readBuffer.flip();

// Buffer java.nio.Buffer.flip()

//

//

// Flips this buffer. The limit is set to the current position and then the position is set to zero. If the mark is defined then it is discarded.

//

// After a sequence of channel-read or put operations, invoke this method to prepare for a sequence of channel-write or relative get operations. For example:

//

// buf.put(magic); // Prepend header

// in.read(buf); // Read data into rest of buffer

// buf.flip(); // Flip buffer

// out.write(buf); // Write header + data to channel

byte[] bytes = new byte[readBuffer.remaining()];//缓冲中有多少个字节数据

readBuffer.get(bytes);

String body = new String(bytes,"UTF-8");

System.out.println("The time server receive order : " + body);

String currentTime = "QUERY TIME ORDER".equalsIgnoreCase(body) ? new Date(//

System.currentTimeMillis()).toString() : "BAD ORDER";

doWrite(sc,currentTime);

}else if(readBytes < 0){

//贵在坚持!

//对端链路关闭

// key.cancel();

// sc.close();

}else{

;//读到0字节,忽略

}

}

}

}

private void doWrite(SocketChannel channel, String response) throws IOException{

if(response != null && response.trim().length() > 0){

byte[] bytes = response.getBytes();

ByteBuffer writeBuffer = ByteBuffer.allocate(bytes.length);//根据字节数组容量创建ByteBuffer

writeBuffer.put(bytes);//字节数组复制到缓冲区

writeBuffer.flip();

channel.write(writeBuffer);//SocketChannel是异步非阻塞的,不保证一次发送完,出现“写半包”问题,

//这里缺少注册写操作,不断轮询Selector将没有发送完的ByteBuffer发送完毕

//TODO 这里有问题,没有写出去,导致客户端无法收到消息,显示Selector关闭状态异常 }

}

}

//3.客户端主程序

package com.xiaobai.nio; public class NIOClient { public static void main(String[] args) {

TimeClientHandle timeClientHandle = new TimeClientHandle("127.0.0.1",2048);

new Thread(timeClientHandle,"NIO-TimeClient-001").start();

}

}

//4.客户端timeClient

package com.xiaobai.nio; import java.io.IOException;

import java.net.InetSocketAddress;

import java.nio.ByteBuffer;

import java.nio.channels.SelectionKey;

import java.nio.channels.Selector;

import java.nio.channels.SocketChannel;

import java.util.Date;

import java.util.Iterator;

import java.util.Set; public class TimeClientHandle implements Runnable { private String host;

private int port;

private Selector selector;

private SocketChannel socketChannel;

private volatile boolean stop; public TimeClientHandle(String host,int port) {

this.host = host==null?"127.0.0.1":host;

this.port = port;

try {

selector = Selector.open();

socketChannel = SocketChannel.open();

socketChannel.configureBlocking(false);

} catch (Exception e) {

// TODO: handle exception

} }

@Override

public void run() {

try {

doConnect();

} catch (Exception e) {

// TODO: handle exception

}

while(!stop){

try {

selector.select(3000);

Set<SelectionKey> selectedKeys = selector.selectedKeys();

Iterator<SelectionKey> it = selectedKeys.iterator();

SelectionKey key = null;

while(it.hasNext()){

key = it.next();

it.remove();

try {

handleInput(key);

} catch (Exception e) {

if(key != null){

key.cancel();

if(key.channel() != null){

key.channel().close();

}

}

}

}

} catch (IOException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

if(selector != null){

try {

selector.close();

} catch (Exception e) {

// TODO: handle exception

}

}

}

}

private void handleInput(SelectionKey key) throws Exception{

if(key.isValid()){

//判断是否连接成功

//连接方法中已有连接不成功注册连接事件的逻辑,反复尝试连接,这里判断,如果成功,注册该客户连接的read事件准备接收数据

SocketChannel sc = (SocketChannel) key.channel();

if(key.isConnectable()){

if(sc.finishConnect()){

sc.register(selector, SelectionKey.OP_READ);

doWrite(sc);//本客户向外写东西

}

}

//下面是从服务器接收数据

if(key.isReadable()){

ByteBuffer readBuffer = ByteBuffer.allocate(1024);

int readBytes = sc.read(readBuffer);//读到缓冲

if(readBytes > 0){

readBuffer.flip();

// Buffer java.nio.Buffer.flip()

//

//

// Flips this buffer. The limit is set to the current position and then the position is set to zero. If the mark is defined then it is discarded.

//

// After a sequence of channel-read or put operations, invoke this method to prepare for a sequence of channel-write or relative get operations. For example:

//

// buf.put(magic); // Prepend header

// in.read(buf); // Read data into rest of buffer

// buf.flip(); // Flip buffer

// out.write(buf); // Write header + data to channel

byte[] bytes = new byte[readBuffer.remaining()];//缓冲中有多少个字节数据

readBuffer.get(bytes);

String body = new String(bytes,"UTF-8");

System.out.println("Now is : " + body);

this.stop = true;

}else if(readBytes < 0){

//贵在坚持!

//对端链路关闭

key.cancel();

sc.close();

}else{

;//读到0字节,忽略

}

}

}

}

private void doConnect() throws IOException {

//如果连接成功,则直接注册到多路复用器上,发送请求消息,读应答

if(socketChannel.connect(new InetSocketAddress(host, port))){//异步连接,直至成功

socketChannel.register(selector, SelectionKey.OP_READ);

doWrite(socketChannel);

}else{//注册连接事件,轮询直至连接成功

//异步,到底是什么概念?底层是什么原理?TCP/IP层面

socketChannel.register(selector, SelectionKey.OP_CONNECT);

}

}

private void doWrite(SocketChannel sc) throws IOException {

//本客户向外写东西

byte[] req = "QUERY TIME ORDER".getBytes();

ByteBuffer writeBuffer = ByteBuffer.allocate(req.length);

writeBuffer.put(req);

writeBuffer.flip();

sc.write(writeBuffer);

if(!writeBuffer.hasRemaining()){

System.out.println("Send order 2 server succeed.");

}

} }

四、TCP与UDP

五、网络传输粘包与拆包问题

六、Netty入门案例与原理分析、Reactor模式

第一个例子:

//1.NettyServer

package com.xiaobai.server.netty; import io.netty.bootstrap.ServerBootstrap;

import io.netty.channel.ChannelFuture;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.EventLoopGroup;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioServerSocketChannel; import java.net.InetSocketAddress; public class NettyServer { private final int port; public NettyServer(int port) {

this.port = port;

} public static void main(String[] args) throws InterruptedException { if(args.length != 1) {

System.err.println("Usage:" + NettyServer.class.getSimpleName() + " <port>");

return;

}

int port = Integer.parseInt(args[0]);

new NettyServer(port).start();

} private void start() throws InterruptedException {

final NettyServerHandler serverHandler = new NettyServerHandler();

EventLoopGroup group = new NioEventLoopGroup();

try {

ServerBootstrap b = new ServerBootstrap();

b.group(group).channel(NioServerSocketChannel.class)

.localAddress(new InetSocketAddress(port))

.childHandler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel socketChannel) throws Exception {

socketChannel.pipeline().addLast(serverHandler);

}

});

ChannelFuture f = b.bind().sync();

f.channel().closeFuture().sync();

} catch (InterruptedException e) {

e.printStackTrace();

} finally {

group.shutdownGracefully().sync();

}

}

}

//2.NettyServerHandler

package com.xiaobai.server.netty; import io.netty.buffer.ByteBuf;

import io.netty.buffer.Unpooled;

import io.netty.channel.ChannelFutureListener;

import io.netty.channel.ChannelHandler;

import io.netty.channel.ChannelHandlerContext;

import io.netty.channel.ChannelInboundHandlerAdapter;

import io.netty.util.CharsetUtil; @ChannelHandler.Sharable

public class NettyServerHandler extends ChannelInboundHandlerAdapter {

@Override

public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {

cause.printStackTrace();

ctx.close();

} @Override

public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {

ByteBuf in = (ByteBuf) msg;

System.out.println("Server received:" + in.toString(CharsetUtil.UTF_8));

ctx.write(in);

} @Override

public void channelReadComplete(ChannelHandlerContext ctx) throws Exception {

ctx.writeAndFlush(Unpooled.EMPTY_BUFFER)

.addListener(ChannelFutureListener.CLOSE);//关闭该Channel

}

}

//3.NettyClient

package com.xiaobai.server.netty; import io.netty.bootstrap.Bootstrap;

import io.netty.channel.ChannelFuture;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.EventLoopGroup;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioSocketChannel; import java.net.InetSocketAddress; public class NettyClient { private final String host;

private final int port; public NettyClient(String host, int port) {

this.host = host;

this.port = port;

} public static void main(String[] args) throws InterruptedException { if(args.length != 2) {

System.err.println("Usage:" + NettyServer.class.getSimpleName() + " <host> <port>");

return;

}

String host = args[0];

int port = Integer.parseInt(args[1]);

new NettyClient(host,port).start();

} private void start() throws InterruptedException { EventLoopGroup group = new NioEventLoopGroup();

try {

Bootstrap b = new Bootstrap();

b.group(group).channel(NioSocketChannel.class)

.remoteAddress(new InetSocketAddress(host,port))

.handler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel socketChannel) throws Exception {

socketChannel.pipeline().addLast(new NettyClientHandler());

}

});

ChannelFuture f = b.connect().sync();

f.channel().closeFuture().sync();

} catch (InterruptedException e) {

e.printStackTrace();

} finally {

group.shutdownGracefully().sync();

}

} }

//4.NettyClientHandler

package com.xiaobai.server.netty; import io.netty.buffer.ByteBuf;

import io.netty.buffer.Unpooled;

import io.netty.channel.ChannelHandler;

import io.netty.channel.ChannelHandlerContext;

import io.netty.channel.ChannelHandlerInvoker;

import io.netty.channel.SimpleChannelInboundHandler;

import io.netty.util.CharsetUtil;

import io.netty.util.concurrent.EventExecutorGroup; public class NettyClientHandler extends SimpleChannelInboundHandler<ByteBuf> { @Override

protected void messageReceived(ChannelHandlerContext channelHandlerContext, ByteBuf byteBuf) throws Exception {

System.out.println("Client received: " + byteBuf.toString(CharsetUtil.UTF_8));

} @Override

public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {

cause.printStackTrace();

ctx.close();

} @Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

ctx.writeAndFlush(Unpooled.copiedBuffer("Netty rocks!",CharsetUtil.UTF_8));

}

}

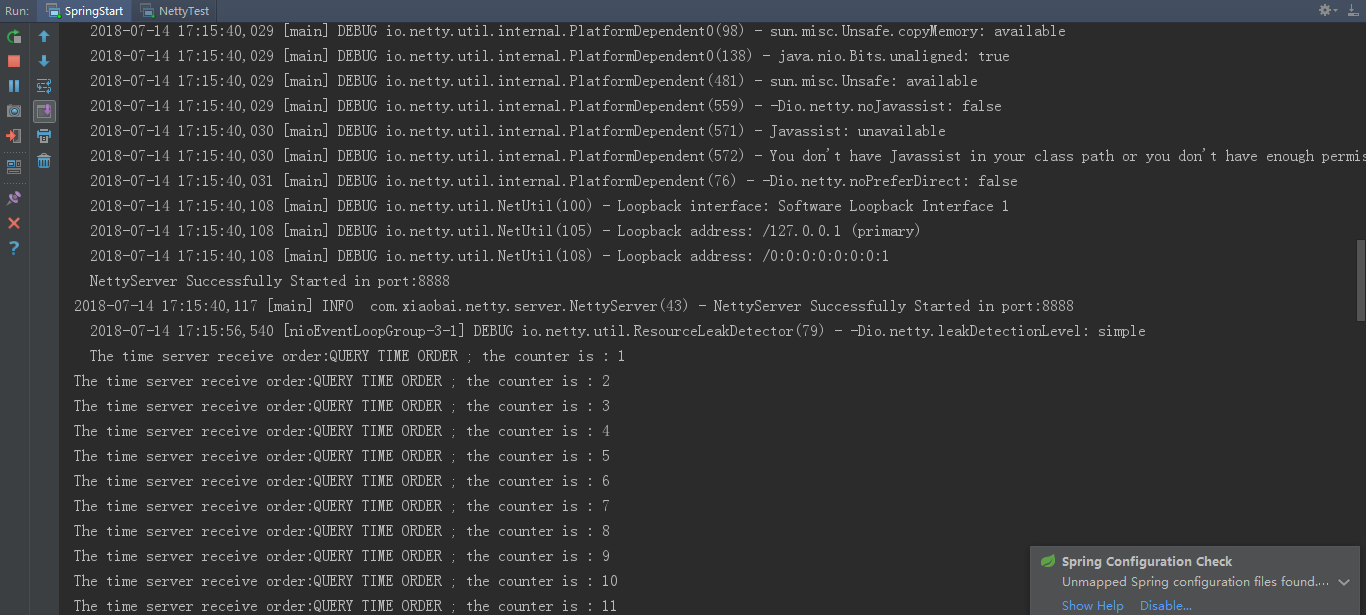

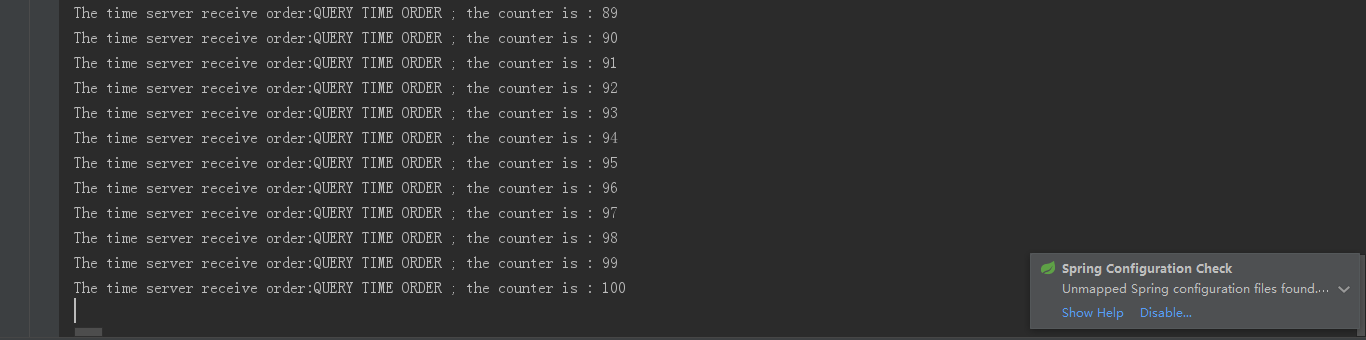

执行结果:

服务端:

客户端:

//服务端启动spring配置文件applicationContext.xml

<?xml version="1.0" encoding="UTF-8" ?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans-3.0.xsd"> <bean id="nettyServer" class="com.xiaobai.netty.server.NettyServer" init-method="start">

<constructor-arg index="0" type="int">

<value>8888</value>

</constructor-arg>

</bean>

</beans>

//服务端启动类

package com.xiaobai.netty.spring; import org.springframework.context.ApplicationContext;

import org.springframework.context.support.ClassPathXmlApplicationContext; public class SpringStart { public static void main(String[] args) { ApplicationContext application = new ClassPathXmlApplicationContext("com/xiaobai/netty/spring/applicationContext.xml");

}

}

//NettyServer

package com.xiaobai.netty.server; import com.xiaobai.netty.handlers.ChildChannelHandler;

import io.netty.bootstrap.ServerBootstrap;

import io.netty.channel.ChannelFuture;

import io.netty.channel.ChannelOption;

import io.netty.channel.EventLoopGroup;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.nio.NioServerSocketChannel;

import org.apache.log4j.Logger; public class NettyServer { private static final Logger logger = Logger.getLogger(NettyServer.class); //无参

public NettyServer() { }

//用于spring管理构造函数初始化bean

public NettyServer(int port) {

this.port = port;

} private EventLoopGroup bossGroup;

private EventLoopGroup workerGroup;

private ServerBootstrap bootstrap;

private int port; public void start() {

bossGroup = new NioEventLoopGroup();

workerGroup = new NioEventLoopGroup();

try {

bootstrap = new ServerBootstrap();

bootstrap.group(bossGroup,workerGroup)

.channel(NioServerSocketChannel.class)

.option(ChannelOption.SO_BACKLOG,1024)

.childHandler(new ChildChannelHandler());

//同步等待绑定端口成功

ChannelFuture future = bootstrap.bind(port).sync();

System.out.println("NettyServer Successfully Started in port:" + port);

logger.info("NettyServer Successfully Started in port:" + port);

//同步等待服务器端口关闭

future.channel().closeFuture().sync();//经实验,这是阻塞方法,一直阻塞

}catch (Exception e) {

//优雅退出,释放线程池资源

bossGroup.shutdownGracefully();

workerGroup.shutdownGracefully();

}

} }

//ChildChannelHandler

package com.xiaobai.netty.handlers; import io.netty.channel.ChannelInitializer;

import io.netty.channel.socket.SocketChannel;

import io.netty.handler.codec.LineBasedFrameDecoder;

import io.netty.handler.codec.string.StringDecoder; //注意参数化类型不要引错包

public class ChildChannelHandler extends ChannelInitializer<SocketChannel>{ //原理来自平时读书实践(nio,aio,同步/异步原理,底层)

//接收到客户端连接(可连接)后的初始化动作,使用责任链模式绑定一系列数据读写操作,用于可读可写时的操作

@Override

protected void initChannel(SocketChannel socketChannel) throws Exception {

socketChannel.pipeline().addLast(new LineBasedFrameDecoder(1024));

socketChannel.pipeline().addLast(new StringDecoder());

socketChannel.pipeline().addLast(new TimeServerHandler());//由简入难,不断调试、琢磨的框架

}

}

//TimeServerHandler

package com.xiaobai.netty.handlers; import io.netty.buffer.ByteBuf;

import io.netty.buffer.Unpooled;

import io.netty.channel.ChannelHandlerAdapter;

import io.netty.channel.ChannelHandlerContext; import java.util.Date; public class TimeServerHandler extends ChannelHandlerAdapter { private int counter;

@Override

public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {

// ByteBuf buf = (ByteBuf) msg;

// byte[] req = new byte[buf.readableBytes()];

// buf.readBytes(req);

// String body = new String(req,"UTF-8").substring(0,req.length - System.getProperty("line.separator").length());

String body = (String)msg;

System.out.println("The time server receive order:" + body + " ; the counter is : " + ++counter);

String currentTime = "QUERY TIME ORDER".equalsIgnoreCase(body) ? new Date(System.currentTimeMillis()).toString() :"BAD ORDER";

currentTime = currentTime + System.getProperty("line.separator");

ByteBuf resp = Unpooled.copiedBuffer(currentTime.getBytes());

ctx.write(resp);

} //这些都是主程序代码中出现这些情况后调用的接口代码

@Override

public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {

ctx.close();

} @Override

public void channelReadComplete(ChannelHandlerContext ctx) throws Exception {

ctx.flush();

}

}

//客户端启动类

package com.xiaobai.netty.client; import org.apache.log4j.Logger; public class NettyTest { private static final Logger logger = Logger.getLogger(NettyTest.class); public static void main(String[] args) {

try {

logger.info("Netty communication start!");

NettyClient client = new NettyClient("127.0.0.1",8888);

client.send();

}catch (Exception e) {

logger.error("Client connected failure!");

} }

}

//NettyClient

package com.xiaobai.netty.client; import com.xiaobai.netty.handlers.ChildChannelHandler;

import com.xiaobai.netty.handlers.TimeClientHandler;

import io.netty.bootstrap.Bootstrap;

import io.netty.channel.ChannelFuture;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.ChannelOption;

import io.netty.channel.EventLoopGroup;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioServerSocketChannel;

import io.netty.channel.socket.nio.NioSocketChannel;

import io.netty.handler.codec.LineBasedFrameDecoder;

import io.netty.handler.codec.string.StringDecoder;

import org.apache.log4j.Logger; public class NettyClient { private static final Logger logger = Logger.getLogger(NettyClient.class);

//无参

public NettyClient() { }

//用于spring管理构造函数初始化bean

public NettyClient(String host,int port) {

this.host = host;

this.port = port;

} private EventLoopGroup group;

private Bootstrap bootstrap;

private int port;

private String host; public void send() {

try {

group = new NioEventLoopGroup();

if(host != null && host.trim() != "") {

bootstrap = new Bootstrap();

bootstrap.group(group)

.channel(NioSocketChannel.class)

.option(ChannelOption.TCP_NODELAY,true)

.handler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel socketChannel) throws Exception {

socketChannel.pipeline().addLast(new LineBasedFrameDecoder(1024));

socketChannel.pipeline().addLast(new StringDecoder());

socketChannel.pipeline().addLast(new TimeClientHandler());

}

});

//发起异步连接操作

System.out.println("NettyClient connecting " + host +":" + port);

ChannelFuture future = bootstrap.connect(host,port).sync();

logger.info("NettyClient connected " + host +":" + port);

//等待客户端关闭

future.channel().closeFuture().sync();

}else{

logger.info("accessing nowhere!");

}

}catch (Exception e) {

//优雅退出,释放线程池资源

group.shutdownGracefully();

}

} }

//TimeClientHandler

package com.xiaobai.netty.handlers; import io.netty.buffer.ByteBuf;

import io.netty.buffer.Unpooled;

import io.netty.channel.ChannelHandlerAdapter;

import io.netty.channel.ChannelHandlerContext;

import org.apache.log4j.Logger; public class TimeClientHandler extends ChannelHandlerAdapter { private static final Logger logger = Logger.getLogger(TimeClientHandler.class); private int counter; private byte[] req; public TimeClientHandler() {

req = ("QUERY TIME ORDER" + System.getProperty("line.separator")).getBytes();

} @Override

public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {

logger.warn("Unexpected exception from downstream:" + cause.getMessage());

ctx.close();

} //已连接后发送消息

@Override

public void channelActive(ChannelHandlerContext ctx) throws Exception {

//不要反复用一个ByteBuf,经测试会出现无法发布的问题--上网多查,研究其中原理!!

ByteBuf message = null;

for(int i = 0;i < 100; i++) {

message = Unpooled.buffer(req.length);

message.writeBytes(req);

ctx.writeAndFlush(message);

}

} @Override

public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {

// ByteBuf buf = (ByteBuf) msg;

// byte[] req = new byte[buf.readableBytes()];

// buf.readBytes(req);

// String body = new String(req,"UTF-8");

String body = (String)msg;

System.out.println("Now is:" + body + " ; the counter is : " + ++counter);

}

}

执行结果:

服务端:

客户端:

重要服务端组件类:

NioEventLoopGroup:一个线程组,包含了一组NIO线程,专门用于网络事件处理,实际上它们就是Reactor线程组。这里创建两个,一个用于服务端接受客户端的连接,另一个用于SocketChannel的网络读写。

ServerBootstrap:Netty用于启动NIO服务端的辅助启动类,降低开发复杂度。它的group方法将两个NIO线程组当作入参传递到ServerBootstrap中。backlog:TCP参数,这里设置为1024.

NioServerSocketChannel:功能对应于JDK NIO类库中的ServerSocketChannel类。

ChildChannelHandler:绑定I/O事件的处理类,作用类似于Reactor模式中的Handler类,主要用于处理网络I/O事件,例如记录日志、对消息进行编解码等。

服务端启动辅助类配置完成后,调用它的bind方法绑定监听端口,随后调用它的同步阻塞方法sync等待绑定操作完成。完成之后Netty会返回一个ChannelFuture,它的功能类似于JDK的java.util.concurrent.Future,主要用于异步操作的通知回调。

future.channel().closeFuture.sync()是阻塞方法(一直阻塞,直到服务关闭),等待服务端链路关闭后才退出。

shutdownGracefully方法:优雅退出,释放关联资源。

ByteBuf:类似于JDK中的java.nio.ByteBuffer对象,不过它提供了更加强大和灵活的功能。通过ByteBuf的readableBytes方法可获取缓冲区可读字节数,根据可读字节数创建byte数组,通过ByteBuf的readBytes方法将缓冲区字节数组复制到新建byte数组中,通过ChannelHandlerContext的write方法异步发送应答消息给客户端。

ChannelHandlerContext的flush方法:将消息发送队列中的消息写入到SocketChannel中发送给对方。从性能角度考虑,为了防止频繁唤醒Selector进行消息发送(Writable事件),Netty的write方法并不直接将消息写入SocketChannel中,只是把待发送的消息放到发送缓冲数组中,再通过调用flush方法,将发送缓冲区中的消息全部写到SocketChannel中。

服务端创建、客户端接入源码与流程

1)创建ServerBootstrap实例。ServerBootstrap是Netty服务端启动辅助类,提供了一系列方法用于设置服务端启动相关参数,底层通过门面模式对各种能力进行抽象和封装,降低用户开发难度。ServerBootstrap只有一个无参构造函数,因为需要设置的参数太多了,且可能发生变化,故采用的是Builder模式。

2)设置并绑定Reactor线程池。ServerBootstrap的线程池是EventLoopGroup,实际就是EventLoop数组。EventLoop的职责是处理所有注册到本线程多路复用器Selector上的Channel,Selector轮询操作由绑定的EventLoop线程run方法驱动,在一个循环体内循环执行。EventLoop不仅仅处理网络I/O事件,用户自定义Task和定时任务Task也统一由EventLoop负责处理,实现了线程模型统一。从调度层面看,也不存在从EventLoop线程中再启动其他类型线程用于异步执行其他任务。避免了多线程并发操作和锁竞争,提升了I/O线程的处理和调度性能。

创建两个EventLoopGroup(不是必需两个,可只创建一个共享),前一个是父,后一个是子。父被传入父类构造函数。

3)设置并绑定服务端Channel.作为NIO服务端,需创建ServerSocketChannel.Netty对原生NIO类库进行了封装,对应实现是NioServerSocketChannel.Netty的ServerBootstrap提供了channel方法用于指定服务端Channel类型,通过工厂类,利用反射创建NioServerSocketChannel对象。

指定NioServerSocketChannel后,设置TCP的backlog参数,底层C对应接口:

int listen(int fd,int backlog);

backlog指定内核为此套接口排队的最大连接个数。对于给定监听套接口,内核要维护两个队列:未链接队列和已连接队列,根据TCP三路握手过程中的三个分节来分隔这两个队列。服务器处于listen状态时,收到客户端syn分节(connect)时在未完成队列中创建 一个新条目,然后后三路握手的第二个分节即服务器syn响应客户端,此条目在第三分节到达前(客户端对服务器syn的ack)一直保留在未完成 连接队列中。三路握手完成,条目从未完成连接队列搬到已完成连接队列尾部。当进程调用accept时,从已完成队列中的头部取出一个条目给进程。当已完成队列未空时进程将 睡眠,直到有条目才唤醒。backlog被规定为两个队列总和最大值。大多数实现默认为5,高并发下不够,未完成连接队列长度可能因客户端syn的到达及等待三路握手 第三分节的到达延时而增大。Netty默认backlog为100,用户可根据实际场景和网络状况 灵活设置。

4)链路建立时创建并初始化ChannelPipeline.ChannelPipeline不是NIO服务端必需,本质是一个负责处理网络事件的职责链,管理和执行ChannelHandler.网络事件以事件流形式流转,根据ChannelHandler执行策略调度执行。典型网络事件:

a.链路注册 b.链路激活 c.链路断开 d.接收到请求消息 e.请求消息接收并处理完毕 f.发送应答消息 g.链路发生异常 h.发生用户自定义事件

5)添加并设置ChannelHandler.ChannelHandler是Netty提供给用户定制和扩展的关键接口,例如消息编解码,心跳,安全认证,TSL/SSL认证,流量控制,流量整形等。Netty提供了大量系统ChannelHandler,比较实用的如下:

a.系统编解码框架:ByteToMessageCodec b.通用基于长度的半包解码器:LengthFieldBasedFrameDecoder c.码流日志打印:LoggingHandler d.SSL安全认证:SslHandler e.链路空闲检测:IdleStateHandler f.流量整形:ChannelTrafficShapingHandler g.Base64编解码:Base64Decoder和Base64Encoder

创建和添加ChannelHandler示例代码:

bossGroup = new NioEventLoopGroup();

workerGroup = new NioEventLoopGroup();

try {

bootstrap = new ServerBootstrap();

bootstrap.group(bossGroup,workerGroup)

.channel(NioServerSocketChannel.class)

.option(ChannelOption.SO_BACKLOG,1024)

.childHandler(new ChannelInitializer<SocketChannel>(){

@Override

protected void initChannel(SocketChannel socketChannel) throws Exception {

socketChannel.pipeline().addLast(new LineBasedFrameDecoder(1024));

socketChannel.pipeline().addLast(new StringDecoder());

socketChannel.pipeline().addLast(new TimeServerHandler());//由简入难,不断调试、琢磨的框架

}

});

//同步等待绑定端口成功

ChannelFuture future = bootstrap.bind(port).sync();

System.out.println("NettyServer Successfully Started in port:" + port);

logger.info("NettyServer Successfully Started in port:" + port);

//同步等待服务器端口关闭

future.channel().closeFuture().sync();//经实验,这是阻塞方法,一直阻塞

}catch (Exception e) {

//优雅退出,释放线程池资源

bossGroup.shutdownGracefully();

workerGroup.shutdownGracefully();

}

用户可为启动辅助类和其父类分别指定Handler.两类Handler的用途不同:子类Handler是NioServerSocketChannel对应的ChannelPipeline的Handler,父类中的Handler是客户端新接入的连接SocketChannel对应的ChannelPipeline的Handler.

本质区别就是:ServerBootstrap中的Handler是NioServerSocketChannel使用的,所有连接该监听端口的客户端都会执行它;父类AbstractBootstrap中的Handler是个工厂类,为每个新接入的客户端都创建一个新的Handler.

6)绑定并启动监听端口。将ServerSocketChannel注册到Selector上监听客户端连接。

创建新的NioServerSocketChannel,两个参数:第一个参数是从父类NIO线程池中顺序获取的一个NioEventLoop,它就是服务端用于监听和接收客户端连接的Reactor线程,第二个参数是所谓workerGroup线程池,是处理I/O读写的Reactor线程组。

NioServerSocketChannel创建成功后的初始化:

a.设置Socket参数和NioServerSocketChannel附加属性 b.将AbstractBootstrap的Handler添加到NioServerSocketChannel的ChannelPipeline c.将用于服务端注册的Handler(ServerBootstrapAcceptor)添加到ChannelPipeline

NioServerSocketChannel注册:封装成Task投递到NioEventLoop,将NioServerSocketChannel注册到NioEventLoop的Selector上(此时注册0,不监听任何网络操作)

注册成功后,触发ChannelRegistered事件,判断监听是否成功,成功则触发ChannelActive事件,根据配置决定是否自动触发Channel的读事件,最终触发ChannelPipeline读操作,调用到HeadHandler的读方法(业务处理)。不同Channel(无论客户端还是服务端)对读操作准备工作不同,因此doBeginRead是个多态方法,这里都要修改网络监听操作位为自身感兴趣的,NioServerSocketChannel感兴趣的为OP_ACCEPT(16)

用4 bit表示所有4种网络操作类型:OP_READ 0001 OP_WRITE 0010 OP_CONNECT 0100 OP_ACCEPT 1000

好处是方便通过位操作进行操作位判断和状态修改,提升操作性能。

7)Selector轮询。由Reactor线程NioEventLoop负责调度和执行Selector轮询操作,选择就绪的Channel集合。

根据就绪的操作位,执行不同操作。NioServerSocketChannel监听的是连接操作,执行的是NioUnsafe(接口)的read方法,这里使用的是NioMessageUnsafe(实现类,还有一个NioByteUnsafe)

doReadMessages方法:实际就是接收新的客户端连接并创建NioSocketChannel.

接收到新的客户端连接后,触发ChannelPipeline的ChannelRead方法,执行headChannelHandlerContext的fireChannelRead方法(触发事件),事件在ChannelPipeline中传递,执行ServerBootstrapAcceptor的channelRead方法,该方法分三个步骤:

a.将启动时传入的childHandler加入到客户端SocketChannel的ChannelPipeline中 b.设置客户端SocketChannel的TCP参数 c.注册SocketChannel到多路复用器

NioSocketChannel注册:仍注册操作位为0,触发ChannelReadComplete事件,执行ChannelPipeline的read方法,执行HeadHandler的read方法,将网络操作位改为OP_READ.

到此,新接入客户端连接处理完成,可进行网络读写I/O操作

8)轮询到准备就绪的Channel后,由Reactor线程NioEventLoop执行ChannelPipeline相应方法(fire各种事件,触发各种ChannelHandler的事件回调,观察者模式),最终调度并执行ChannelHandler.

9)根据网络事件类型,调度执行Netty系统ChannelHandler和用户定制ChannelHandler.

七、Netty对粘包拆包的解决方案

八、编码与解码

九、序列化与反序列化

十、网络传输私有协议制定与聊天室业务实现

Netty实践与NIO原理的更多相关文章

- Google Protobuf结合Netty实践

1.Win版Protobuf代码生成工具下载: https://github.com/protocolbuffers/protobuf/releases 注意下载protoc-3.6.1-win32. ...

- 京东的Netty实践,京麦TCP网关长连接容器架构

背景 早期京麦搭建 HTTP 和 TCP 长连接功能主要用于消息通知的推送,并未应用于 API 网关.随着逐步对 NIO 的深入学习和对 Netty 框架的了解,以及对系统通信稳定能力越来越高的要求, ...

- 深入理解NIO(三)—— NIO原理及部分源码的解析

深入理解NIO(三)—— NIO原理及部分源码的解析 欢迎回到淦™的源码看爆系列 在看完前面两个系列之后,相信大家对NIO也有了一定的理解,接下来我们就来深入源码去解读它,我这里的是OpenJDK-8 ...

- [JavaEE]Java NIO原理图文分析及代码实现

转http://weixiaolu.iteye.com/blog/1479656 目录: 一.java NIO 和阻塞I/O的区别 1. 阻塞I/O通信模型 2. java NIO ...

- Java NIO原理分析

Java IO 在Client/Server模型中,Server往往需要同时处理大量来自Client的访问请求,因此Server端需采用支持高并发访问的架构.一种简单而又直接的解决方案是“one-th ...

- Java NIO原理图文分析及代码实现

原文: http://weixiaolu.iteye.com/blog/1479656 目录: 一.java NIO 和阻塞I/O的区别 1. 阻塞I/O通信模型 2. java ...

- 微信技术分享:微信的海量IM聊天消息序列号生成实践(算法原理篇)

1.点评 对于IM系统来说,如何做到IM聊天消息离线差异拉取(差异拉取是为了节省流量).消息多端同步.消息顺序保证等,是典型的IM技术难点. 就像即时通讯网整理的以下IM开发干货系列一样: <I ...

- Java NIO原理 图文分析及代码实现

Java NIO原理图文分析及代码实现 前言: 最近在分析hadoop的RPC(Remote Procedure Call Protocol ,远程过程调用协议,它是一种通过网络从远程计算机程序上请 ...

- 客户端(springmvc)调用netty构建的nio服务端,获得响应后返回页面(同步响应)

后面考虑通过netty做一个真正意义的简约版RPC框架,今天先尝试通过正常调用逻辑调用netty构建的nio服务端并同步获得返回信息.为后面做铺垫 服务端实现 我们先完成服务端的逻辑,逻辑很简单,把客 ...

随机推荐

- Unity shader学习之折射

shader如下: Shader "Custom/Refraction" { Properties { _Cubemap("Cubemap", Cube) = ...

- 基于AOP注解实现业务功能的动态配置

一.导入jar包 <dependency><!-- 4.引入AOP--> <groupId>org.springframework.boot</groupId ...

- memory consistency

目前的计算机系统中,都是shared memory结构,提供统一的控制接口给软件, shared memory结构中,为了memory correctness,可以将问题分为:memory consi ...

- 每天记命令:lscpu 和 cat /proc/cpuinfo

[1]lscpu lscpu命令,查看cpu相关的统计信息. socket 就是主板上插cpu的槽的数目,也就是可以插入的物理CPU的个数(比如上例,可以插入1个CPU). core 就是我们平时说的 ...

- python 修改xml文档 ing

原xml文件 <?xml version="1.0" encoding="utf-8"?> <catalog> <maxid> ...

- 【Alpha版本】冲刺阶段——Day4

[Alpha版本]冲刺阶段--Day4 阅读目录 今日进展 问题困难 明日任务 今日贡献量 TODOlist [今日进展] 完成注册类代码 public class Register { privat ...

- 【Alpha版本】冲刺阶段——Day5

[Alpha版本]冲刺阶段--Day5 阅读目录 今日进展 问题困难 明日任务 今日贡献量 站立式会议 TODOlist [今日进展] 完成登录类代码 public void LOGIN() { co ...

- formdata 和 Payload 区别

FormData和Payload是浏览器传输给接口的两种格式,这两种方式浏览器是通过Content-Type来进行区分的(了解Content-Type),如果是 application/x-www-f ...

- golang学习笔记12 beego table name `xxx` repeat register, must be unique 错误问题

golang学习笔记12 beego table name `xxx` repeat register, must be unique 错误问题 今天测试了重新建一个项目生成新的表,然后复制到旧的项目 ...

- POSIX rename语义

POSIX对rename行为的定义如下(http://www.opengroup.org/onlinepubs/009695399/functions/rename.html): 将一个文件重命名为一 ...