对《禁忌搜索(Tabu Search)算法及python实现》的修改

这个算法是在听北大人工智能mooc的时候,老师讲的一种局部搜索算法,可是举得例子不太明白。搜索网页后,发现《禁忌搜索(Tabu Search)算法及python实现》(https://blog.csdn.net/adkjb/article/details/81712969) 已经做了好详细的介绍,仔细看了下很有收获。于是想泡泡代码,看前面还好,后边的代码有些看不懂了,而且在函数里定义函数,这种做法少见,并且把函数有当作类来用,为什么不直接用类呢。还有就是,可能对禁忌搜索不太了解,可能具体算法在代码里有问题,欢迎提出。

import random class Tabu:

def __init__(self,tabulen=100,preparelen=200):

self.tabulen=tabulen

self.preparelen=preparelen

self.city,self.cityids,self.stid=self.loadcity2() #我直接把他的数据放到代码里了 self.route=self.randomroute()

self.tabu=[]

self.prepare=[]

self.curroute=self.route.copy()

self.bestcost=self.costroad(self.route)

self.bestroute=self.route

def loadcity(self,f="d:/Documents/code/aiclass/tsp.txt",stid=1):

city = {}

cityid=[] for line in open(f):

place,lon,lat = line.strip().split(" ")

city[int(place)]=float(lon),float(lat) #导入城市的坐标

cityid.append(int(place))

return city,cityid,stid

def loadcity2(self,stid=1):

city={1: (1150.0, 1760.0), 2: (630.0, 1660.0), 3: (40.0, 2090.0), 4: (750.0, 1100.0),

5: (750.0, 2030.0), 6: (1030.0, 2070.0), 7: (1650.0, 650.0), 8: (1490.0, 1630.0),

9: (790.0, 2260.0), 10: (710.0, 1310.0), 11: (840.0, 550.0), 12: (1170.0, 2300.0),

13: (970.0, 1340.0), 14: (510.0, 700.0), 15: (750.0, 900.0), 16: (1280.0, 1200.0),

17: (230.0, 590.0), 18: (460.0, 860.0), 19: (1040.0, 950.0), 20: (590.0, 1390.0),

21: (830.0, 1770.0), 22: (490.0, 500.0), 23: (1840.0, 1240.0), 24: (1260.0, 1500.0),

25: (1280.0, 790.0), 26: (490.0, 2130.0), 27: (1460.0, 1420.0), 28: (1260.0, 1910.0),

29: (360.0, 1980.0)} #原博客里的数据

cityid=list(city.keys())

return city,cityid,stid

def costroad(self,road):

#计算当前路径的长度 与原博客里的函数功能相同

d=-1

st=0,0

cur=0,0

city=self.city

for v in road:

if d==-1:

st=city[v]

cur=st

d=0

else:

d+=((cur[0]-city[v][0])**2+(cur[1]-city[v][1])**2)**0.5 #计算所求解的距离,这里为了简单,视作二位平面上的点,使用了欧式距离

cur=city[v]

d+=((cur[0]-st[0])**2+(cur[1]-st[1])**2)**0.5

return d

def randomroute(self):

#产生一条随机的路

stid=self.stid

rt=self.cityids.copy()

random.shuffle(rt)

rt.pop(rt.index(stid))

rt.insert(0,stid)

return rt

def randomswap2(self,route):

#随机交换路径的两个节点

route=route.copy()

while True:

a=random.choice(route)

b=random.choice(route)

if a==b or a==1 or b==1:

continue

ia=route.index(a)

ib=route.index(b)

route[ia]=b

route[ib]=a

return route

def step(self):

#搜索一步路找出当前下应该搜寻的下一条路

rt=self.curroute i=0

while i<self.preparelen: #产生候选路径

prt=self.randomswap2(rt)

if int(self.costroad(prt)) not in self.tabu: #产生不在禁忌表中的路径

self.prepare.append(prt.copy())

i+=1

c=[]

for r in self.prepare:

c.append(self.costroad(r))

mc=min(c)

mrt=self.prepare[c.index(mc)] #选出候选路径里最好的一条

if mc<self.bestcost:

self.bestcost=mc

self.bestroute=mrt.copy() #如果他比最好的还要好,那么记录下来

self.tabu.append(mc)#int(mrt)) #这里本来要加 mrt的 ,可是mrt是路径,要对比起来麻烦,这里假设每条路是由长度决定的

#也就是说 每个路径和他的长度是一一对应,这样比对起来速度快点,当然这样可能出问题,更好的有待研究

self.curroute=mrt #用候选里最好的做下次搜索的起点

self.prepare=[]

if len(self.tabu)>self.tabulen:

self.tabu.pop(0)

下面跑跑看:

import timeit

t=Tabu()print('ok')

print(t.city)

print(t.route)

print(t.bestcost)

print(t.curroute)

for i in range(1000):

t.step()

if i%50==0:

print(t.bestcost)

print(t.bestroute)

print(t.curroute) print('ok')

#print(timeit.timeit(stmt="t.step()", number=1000,globals=globals()))

print('ok')

ok

{1: (1150.0, 1760.0), 2: (630.0, 1660.0), 3: (40.0, 2090.0), 4: (750.0, 1100.0), 5: (750.0, 2030.0), 6: (1030.0, 2070.0), 7: (1650.0, 650.0), 8: (1490.0, 1630.0), 9: (790.0, 2260.0), 10: (710.0, 1310.0), 11: (840.0, 550.0), 12: (1170.0, 2300.0), 13: (970.0, 1340.0), 14: (510.0, 700.0), 15: (750.0, 900.0), 16: (1280.0, 1200.0), 17: (230.0, 590.0), 18: (460.0, 860.0), 19: (1040.0, 950.0), 20: (590.0, 1390.0), 21: (830.0, 1770.0), 22: (490.0, 500.0), 23: (1840.0, 1240.0), 24: (1260.0, 1500.0), 25: (1280.0, 790.0), 26: (490.0, 2130.0), 27: (1460.0, 1420.0), 28: (1260.0, 1910.0), 29: (360.0, 1980.0)}

[1, 6, 2, 12, 9, 28, 15, 21, 8, 22, 29, 19, 26, 24, 20, 18, 17, 7, 4, 16, 27, 11, 14, 25, 10, 3, 13, 23, 5]

24651.706120672443

[1, 6, 2, 12, 9, 28, 15, 21, 8, 22, 29, 19, 26, 24, 20, 18, 17, 7, 4, 16, 27, 11, 14, 25, 10, 3, 13, 23, 5]

21567.36269967159

[1, 6, 2, 12, 9, 28, 15, 21, 8, 22, 29, 3, 26, 24, 20, 18, 17, 7, 4, 16, 27, 11, 14, 25, 10, 19, 13, 23, 5]

[1, 6, 2, 12, 9, 28, 15, 21, 8, 22, 29, 3, 26, 24, 20, 18, 17, 7, 4, 16, 27, 11, 14, 25, 10, 19, 13, 23, 5]

9701.867337779715

[1, 28, 6, 12, 9, 5, 26, 3, 29, 21, 2, 20, 10, 18, 14, 17, 22, 11, 19, 16, 27, 8, 23, 7, 25, 15, 4, 13, 24]

[1, 28, 6, 12, 9, 5, 26, 3, 29, 21, 2, 20, 10, 18, 14, 17, 22, 11, 19, 16, 8, 27, 23, 7, 25, 15, 4, 13, 24]

9701.867337779715

[1, 28, 6, 12, 9, 5, 26, 3, 29, 21, 2, 20, 10, 18, 14, 17, 22, 11, 19, 16, 27, 8, 23, 7, 25, 15, 4, 13, 24]

[1, 28, 12, 6, 5, 9, 26, 3, 29, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 16, 27, 8, 23, 7, 25, 19, 4, 13, 24]

9701.867337779715

[1, 28, 6, 12, 9, 5, 26, 3, 29, 21, 2, 20, 10, 18, 14, 17, 22, 11, 19, 16, 27, 8, 23, 7, 25, 15, 4, 13, 24]

[1, 28, 12, 6, 5, 9, 26, 29, 3, 21, 2, 20, 10, 18, 14, 17, 22, 11, 19, 16, 27, 8, 23, 7, 25, 15, 4, 13, 24]

9701.867337779715

[1, 28, 6, 12, 9, 5, 26, 3, 29, 21, 2, 20, 10, 18, 14, 17, 22, 11, 19, 16, 27, 8, 23, 7, 25, 15, 4, 13, 24]

[1, 28, 12, 6, 9, 5, 26, 3, 29, 21, 2, 20, 10, 18, 14, 17, 22, 11, 19, 16, 27, 8, 23, 7, 25, 15, 4, 13, 24]

9667.242844275002

[1, 28, 6, 12, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 19, 16, 27, 8, 23, 7, 25, 15, 4, 13, 24]

[1, 28, 6, 12, 9, 3, 29, 26, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 16, 8, 27, 23, 7, 25, 19, 4, 13, 24]

9667.242844275002

[1, 28, 6, 12, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 19, 16, 27, 8, 23, 7, 25, 15, 4, 13, 24]

[1, 28, 6, 12, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 19, 16, 27, 8, 23, 7, 25, 15, 4, 13, 24]

9667.242844275002

[1, 28, 6, 12, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 19, 16, 27, 8, 23, 7, 25, 15, 4, 13, 24]

[1, 28, 6, 12, 9, 5, 26, 29, 3, 21, 2, 20, 10, 18, 14, 17, 22, 11, 19, 16, 27, 8, 23, 7, 25, 15, 4, 13, 24]

9667.242844275002

[1, 28, 6, 12, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 19, 16, 27, 8, 23, 7, 25, 15, 4, 13, 24]

[1, 28, 6, 12, 9, 5, 26, 3, 29, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 16, 27, 8, 23, 7, 25, 19, 4, 13, 24]

9248.522952771107

[1, 28, 6, 12, 9, 5, 26, 3, 29, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

[1, 28, 12, 6, 9, 5, 29, 3, 26, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

9213.898459266395

[1, 28, 6, 12, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

[1, 28, 6, 12, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 19, 15, 4, 13, 16, 25, 7, 23, 8, 27, 24]

9213.898459266395

[1, 28, 6, 12, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

[1, 28, 12, 6, 9, 5, 26, 3, 29, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 8, 27, 24]

9213.898459266395

[1, 28, 6, 12, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

[1, 28, 6, 12, 9, 5, 26, 3, 29, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

9213.898459266395

[1, 28, 6, 12, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

[1, 28, 12, 6, 5, 9, 26, 3, 29, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

9213.898459266395

[1, 28, 6, 12, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

[1, 28, 6, 12, 9, 5, 29, 3, 26, 21, 2, 20, 10, 18, 17, 14, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

9213.898459266395

[1, 28, 6, 12, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

[1, 28, 12, 6, 9, 26, 29, 3, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 8, 27, 24]

9213.898459266395

[1, 28, 6, 12, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

[1, 28, 12, 6, 9, 5, 26, 29, 3, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

9213.898459266395

[1, 28, 6, 12, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

[1, 28, 12, 6, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

9213.898459266395

[1, 28, 6, 12, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

[1, 28, 12, 6, 5, 9, 26, 3, 29, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

9213.898459266395

[1, 28, 6, 12, 9, 26, 3, 29, 5, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

[1, 28, 6, 12, 9, 5, 26, 3, 29, 21, 2, 20, 10, 18, 14, 17, 22, 11, 15, 4, 13, 16, 19, 25, 7, 23, 27, 8, 24]

看到最小路径是9213.89 如果我们把timeit去掉,跑1000步我的电脑是不到4秒大概

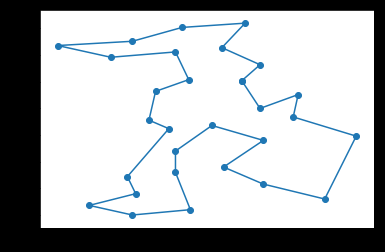

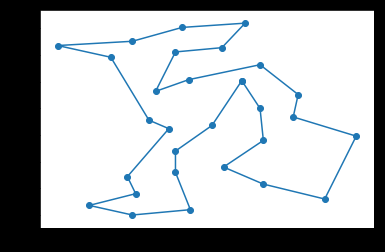

为了直观把路径画下来:

from matplotlib import pyplot x=[]

y=[]

print("最优路径长度:",t.bestcost)

for i in t.bestroute:

x0,y0=t.city[i]

x.append(x0)

y.append(y0)

x.append(x[0])

y.append(y[0])

pyplot.plot(x,y)

pyplot.scatter(x,y)

貌似找到最好的了。。。。

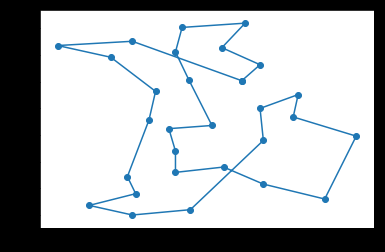

再跑一次:

9760.12

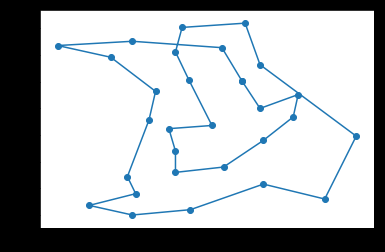

再来一次:

10212

10500

10100

10080

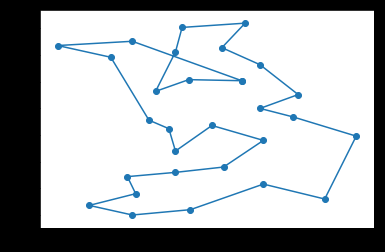

发现有些地方总有不变的地方,是不是可以把多条线路给叠加起来,做个链接的加权图,按照路径的权再来启发,是否能得到更好的结果呢?

比如右下角,一直都是一个形状,是否说明,这几个点的连接状况固定了呢?

这样可以把总是连续的点给合并成一个整体再来搜索是否也是个办法?

CSDN我的博客不知道怎么给禁用了,不能留言给博主,只能这样了。

对《禁忌搜索(Tabu Search)算法及python实现》的修改的更多相关文章

- 采用梯度下降优化器(Gradient Descent optimizer)结合禁忌搜索(Tabu Search)求解矩阵的全部特征值和特征向量

[前言] 对于矩阵(Matrix)的特征值(Eigens)求解,采用数值分析(Number Analysis)的方法有一些,我熟知的是针对实对称矩阵(Real Symmetric Matrix)的特征 ...

- 【算法】禁忌搜索算法(Tabu Search,TS)超详细通俗解析附C++代码实例

01 什么是禁忌搜索算法? 1.1 先从爬山算法说起 爬山算法从当前的节点开始,和周围的邻居节点的值进行比较. 如果当前节点是最大的,那么返回当前节点,作为最大值 (既山峰最高点):反之就用最高的邻居 ...

- MIP启发式求解:局部搜索 (local search)

*本文主要记录和分享学习到的知识,算不上原创. *参考文献见链接. 本文讲述的是求解MIP问题的启发式算法. 启发式算法的目的在于短时间内获得较优解. 个人认为局部搜索(local search)几乎 ...

- LeetCode初级算法的Python实现--排序和搜索、设计问题、数学及其他

LeetCode初级算法的Python实现--排序和搜索.设计问题.数学及其他 1.排序和搜索 class Solution(object): # 合并两个有序数组 def merge(self, n ...

- C++实现禁忌搜索解决TSP问题

C++实现禁忌搜索解决TSP问题 使用的搜索方法是Tabu Search(禁忌搜索) 程序设计 1) 文件读入坐标点计算距离矩阵/读入距离矩阵 for(int i = 0; i < CityNu ...

- 选择性搜索(Selective Search)

1 概述 本文牵涉的概念是候选区域(Region Proposal ),用于物体检测算法的输入.无论是机器学习算法还是深度学习算法,候选区域都有用武之地. 2 物体检测和物体识别 物体识别是要分辨出图 ...

- 常用查找数据结构及算法(Python实现)

目录 一.基本概念 二.无序表查找 三.有序表查找 3.1 二分查找(Binary Search) 3.2 插值查找 3.3 斐波那契查找 四.线性索引查找 4.1 稠密索引 4.2 分块索引 4.3 ...

- 集束搜索beam search和贪心搜索greedy search

贪心搜索(greedy search) 贪心搜索最为简单,直接选择每个输出的最大概率,直到出现终结符或最大句子长度. 集束搜索(beam search) 集束搜索可以认为是维特比算法的贪心形式,在维特 ...

- 第三十三节,目标检测之选择性搜索-Selective Search

在基于深度学习的目标检测算法的综述 那一节中我们提到基于区域提名的目标检测中广泛使用的选择性搜索算法.并且该算法后来被应用到了R-CNN,SPP-Net,Fast R-CNN中.因此我认为还是有研究的 ...

随机推荐

- gitblit系列七:使用Jenkins配置自动化持续集成构建

1.安装 方法一: 下载jenkin.exe安装文件 下载地址:https://jenkins.io/content/thank-you-downloading-windows-installer/ ...

- java中方法内可以调用同一个类中的方法

在同一个类中,java的普通方法的相互调用,可以使用this+点号+方法名,也可省略this+点号,java编 译器会自动补上.

- [Leetcode 3] 最长不重复子串 Longest substring without repeating 滑动窗口

[题目] Given a string, find the length of the longest substring without repeating characters. [举例] Exa ...

- node 慕课笔记

global global.testVar2 = 200; 在别的文件中可以任意调用到 因为global是全局变量相当于js的window一样的

- poj3080(kmp+枚举)

Blue Jeans Time Limit: 1000MS Memory Limit: 65536K Total Submissions: 20163 Accepted: 8948 Descr ...

- HTML5中input[type='date']自定义样式

HTML5提供了日历控件功能,缩减了开发时间,但有时它的样式确实不如人意,我们可以根据下面的代码自行修改. 建议:复制下面的代码段,单独建立一个css文件,方便我们修改. /* 修改日历控件类型 */ ...

- mysql 优化2

6. 合理使用EXISTS,NOT EXISTS子句.如下所示: 1.SELECT SUM(T1.C1) FROM T1 WHERE (SELECT COUNT(*)FROM T2 WHERE T2. ...

- ob 函数的使用

ob 函数的使用1. 页面静态化 $id = isset($_GET['id'])?$_GET['id']-0:0; $filename = "html/".date(" ...

- git clone新项目后如何拉取分支代码到本地

1.git clone git@git.n.xxx.com:xxx/xxx.git 2.git fetch origin dev 命令来把远程dev分支拉到本地 3.checkout -b de ...

- TEST mathjax

这里是第一个公式 $ F = ma^2 $ \[ \text{Reinforcement Learning} \doteq \pi_* \\ \quad \updownarrow \\ \pi_* \ ...