Spark Streaming 例子

NetworkWordCount.scala

/*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/ // scalastyle:off println

package com.gong.spark161.streaming import org.apache.spark.SparkConf

import org.apache.spark.storage.StorageLevel

import org.apache.spark.streaming.{Seconds, StreamingContext} /**

* Counts words in UTF8 encoded, '\n' delimited text received from the network every second.

*

* Usage: NetworkWordCount <hostname> <port>

* <hostname> and <port> describe the TCP server that Spark Streaming would connect to receive data.

*

* To run this on your local machine, you need to first run a Netcat server

* `$ nc -lk 9999`

* and then run the example

* `$ bin/run-example org.apache.spark.examples.streaming.NetworkWordCount localhost 9999`

*/

object NetworkWordCount {

def main(args: Array[String]) {

if (args.length < ) {

System.err.println("Usage: NetworkWordCount <hostname> <port>")

System.exit()

} StreamingExamples.setStreamingLogLevels() // Create the context with a 1 second batch size

val sparkConf = new SparkConf().setAppName("NetworkWordCount")

val ssc = new StreamingContext(sparkConf, Seconds()) // Create a socket stream on target ip:port and count the

// words in input stream of \n delimited text (eg. generated by 'nc')

// Note that no duplication in storage level only for running locally.

// Replication necessary in distributed scenario for fault tolerance.

//socket监听网络请求创建stream args(0)机器 args(1)端口号 StorageLevel存储级别

val lines = ssc.socketTextStream(args(), args().toInt, StorageLevel.MEMORY_AND_DISK_SER)

val words = lines.flatMap(_.split(" "))

val wordCounts = words.map(x => (x, )).reduceByKey(_ + _)

wordCounts.print()

ssc.start()

ssc.awaitTermination()

}

}

// scalastyle:on println

下在集群跑一下

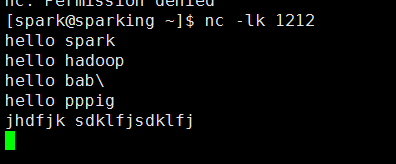

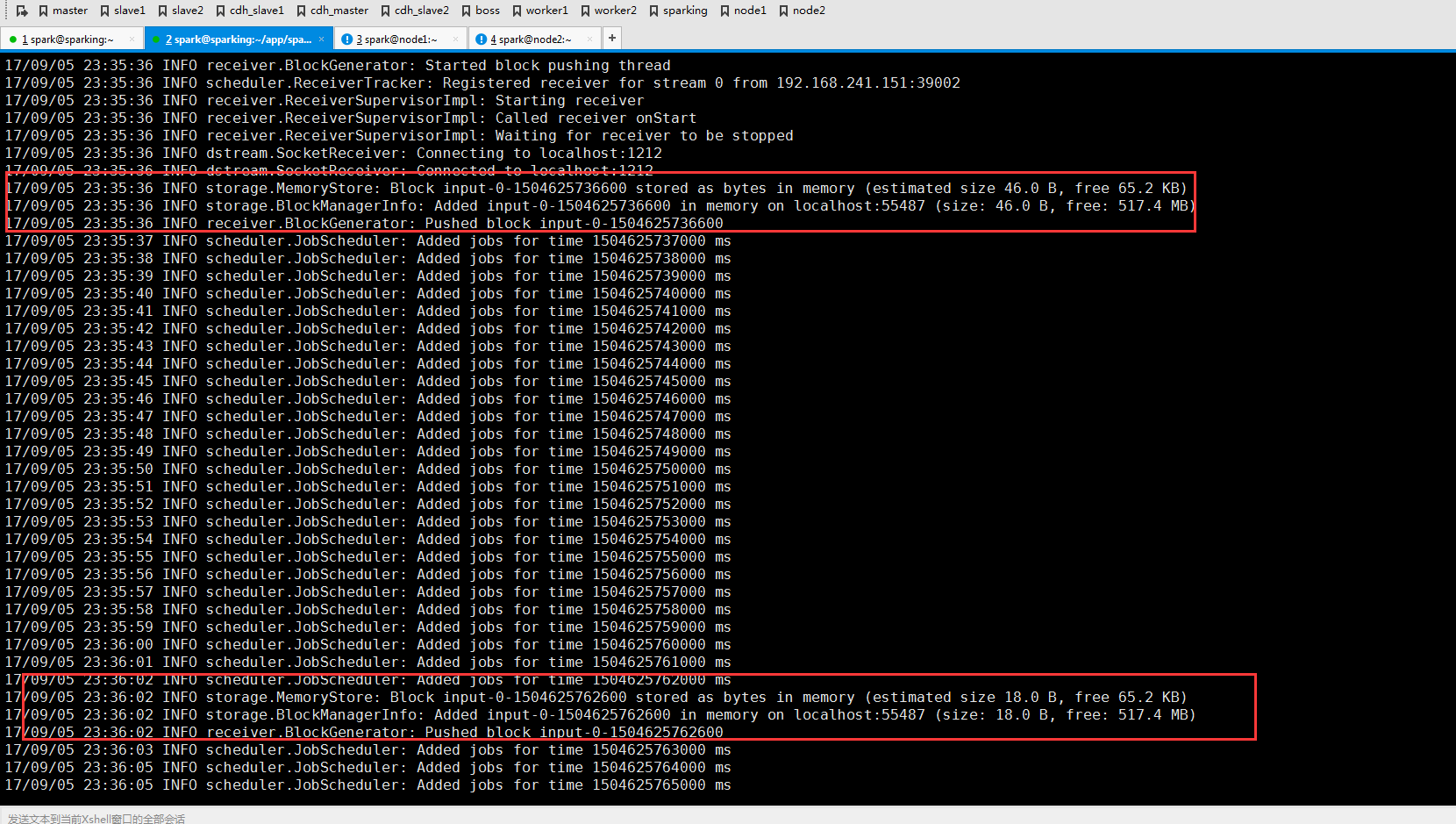

监听1212端口(端口可以自己随便取)

可以看到反馈信息

Spark Streaming 例子的更多相关文章

- Spark Streaming 入门指南

这篇博客帮你开始使用Apache Spark Streaming和HBase.Spark Streaming是核心Spark API的一个扩展,它能够处理连续数据流. Spark Streaming是 ...

- [Spark][Streaming]Spark读取网络输入的例子

Spark读取网络输入的例子: 参考如下的URL进行试验 https://stackoverflow.com/questions/46739081/how-to-get-record-in-strin ...

- spark streaming 入门例子

spark streaming 入门例子: spark shell import org.apache.spark._ import org.apache.spark.streaming._ sc.g ...

- 基于Spark Streaming预测股票走势的例子(一)

最近学习Spark Streaming,不知道是不是我搜索的姿势不对,总找不到具体的.完整的例子,一怒之下就决定自己写一个出来.下面以预测股票走势为例,总结了用Spark Streaming开发的具体 ...

- Spark Streaming 002 统计单词的例子

1.准备 事先在hdfs上创建两个目录: 保存上传数据的目录:hdfs://alamps:9000/library/SparkStreaming/data checkpoint的目录:hdfs://a ...

- spark streaming的有状态例子

import org.apache.spark._ import org.apache.spark.streaming._ /** * Created by code-pc on 16/3/14. * ...

- 一个spark streaming的黑名单过滤小例子

> nc -lk 9999 20190912,sz 20190913,lin package com.lin.spark.streaming import org.apache.spark.Sp ...

- Storm介绍及与Spark Streaming对比

Storm介绍 Storm是由Twitter开源的分布式.高容错的实时处理系统,它的出现令持续不断的流计算变得容易,弥补了Hadoop批处理所不能满足的实时要求.Storm常用于在实时分析.在线机器学 ...

- Spark入门实战系列--7.Spark Streaming(上)--实时流计算Spark Streaming原理介绍

[注]该系列文章以及使用到安装包/测试数据 可以在<倾情大奉送--Spark入门实战系列>获取 .Spark Streaming简介 1.1 概述 Spark Streaming 是Spa ...

随机推荐

- HAWQ + MADlib 玩转数据挖掘之(六)——主成分分析与主成分投影

一.主成分分析(Principal Component Analysis,PCA)简介 在数据挖掘中经常会遇到多个变量的问题,而且在多数情况下,多个变量之间常常存在一定的相关性.例如,网站的" ...

- Foundation--结构体

一,NSRange typedef struct _NSRange { NSUInteger location; NSUInteger length; }NSRange; 这个结构体用来表示事物的一个 ...

- win+linux双系统安装笔记

1.出现win与linux只能引导之一启动,此时启动linux并更改启动文件可以用linux自带的grub2引导启动 2.ubuntu64位安装时需要联网,因为其有bug,镜像文件中缺少gurb2,需 ...

- [LeetCode&Python] Problem 669. Trim a Binary Search Tree

Given a binary search tree and the lowest and highest boundaries as L and R, trim the tree so that a ...

- ConfigUtil读取配置文件

package utils; import java.util.ResourceBundle; public class ConfigUtil { private static ResourceBun ...

- 在Docker中运行crontab

在把自己的项目通过Docker进行打包时,由于项目中用到了crontab,不过使用到的基础镜像python:3.6-slim并没有安装这项服务,记录下在镜像中安装和配置crontab的过程. Dock ...

- 2017年最新cocoapods安装教程(解决淘宝镜像源无效以及其他源下载慢问题)

首先,先来说一下一般的方法吧,就是把之前的淘宝源替换成一个可用的的源: 使用终端查看当前的源 gem sources -l gem sources -r https://rubygems.org/ # ...

- System.out.println()详解 和 HttpServletRequest 和 XMLHttpRequest

System是一个类,位于java.lang这个包里面.out是这个System类的一个PrintStream类型静态属性.println()是这个静态属性out所属类PrintStream的方法. ...

- adnanh webhook 框架 hook rule

adnanh webhook 支持一系列的逻辑操作 AND 所有的条件都必须匹配 { "and": [ { "match": { "type" ...

- nginx 各参数说明

nginx 各参数说明: 参数 所在上下文 含义