23、CacheManager原理剖析与源码分析

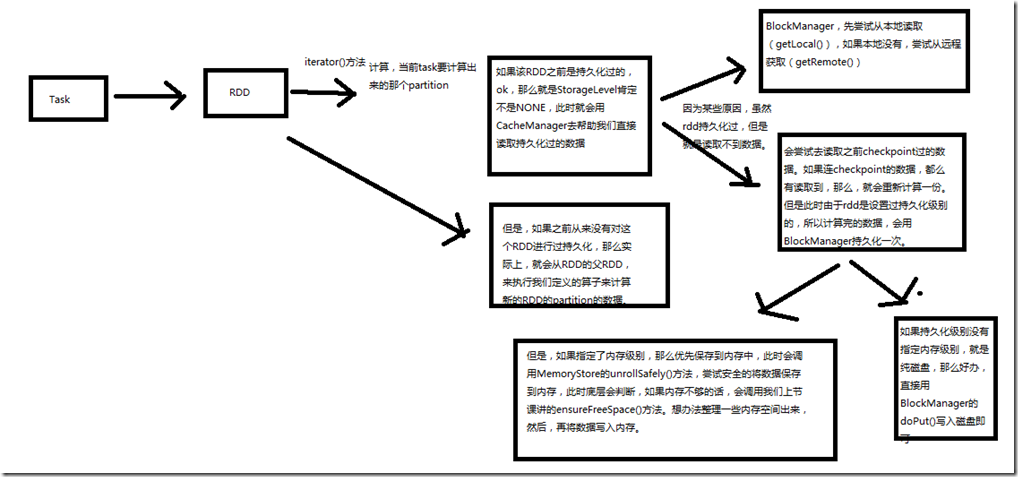

一、图解

二、源码分析

###org.apache.spark.rdd/RDD.scalal

###入口 final def iterator(split: Partition, context: TaskContext): Iterator[T] = {

if (storageLevel != StorageLevel.NONE) {

// cacheManager相关东西

// 如果storageLevel不为NONE,就是说,我们之前持久化过RDD,那么就不要直接去父RDD执行算子,计算新的RDD的partition了

// 优先尝试使用CacheManager,去获取持久化的数据

SparkEnv.get.cacheManager.getOrCompute(this, split, context, storageLevel)

} else {

// 进行rdd partition的计算

computeOrReadCheckpoint(split, context)

}

} ###org.apache.spark/CacheManager.scala def getOrCompute[T](

rdd: RDD[T],

partition: Partition,

context: TaskContext,

storageLevel: StorageLevel): Iterator[T] = { val key = RDDBlockId(rdd.id, partition.index)

logDebug(s"Looking for partition $key")

// 直接用BlockManager来获取数据,如果获取到了,直接返回就好了

blockManager.get(key) match {

case Some(blockResult) =>

// Partition is already materialized, so just return its values

val inputMetrics = blockResult.inputMetrics

val existingMetrics = context.taskMetrics

.getInputMetricsForReadMethod(inputMetrics.readMethod)

existingMetrics.incBytesRead(inputMetrics.bytesRead) val iter = blockResult.data.asInstanceOf[Iterator[T]]

new InterruptibleIterator[T](context, iter) {

override def next(): T = {

existingMetrics.incRecordsRead(1)

delegate.next()

}

}

// 如果从BlockManager获取不到数据,要进行后续的处理

// 虽然RDD持久化过,但是因为未知原因,书籍即不在本地内存或磁盘,也不在远程BlockManager的本地或磁盘

case None =>

// Acquire a lock for loading this partition

// If another thread already holds the lock, wait for it to finish return its results

// 再次调用一次BlockManager的get()方法,去获取数据,如果获取到了,那么直接返回数据,如果还是没有获取数据,那么往后走

val storedValues = acquireLockForPartition[T](key)

if (storedValues.isDefined) {

return new InterruptibleIterator[T](context, storedValues.get)

} // Otherwise, we have to load the partition ourselves

try {

logInfo(s"Partition $key not found, computing it")

// 调用computeOrReadCheckpoint()方法

// 如果rdd之前checkpoint过,那么尝试读取它的checkpoint,如果rdd没有checkpoint过,那么只能重新使用父RDD的数据,执行算子,计算一份

val computedValues = rdd.computeOrReadCheckpoint(partition, context) // If the task is running locally, do not persist the result

if (context.isRunningLocally) {

return computedValues

} // Otherwise, cache the values and keep track of any updates in block statuses

val updatedBlocks = new ArrayBuffer[(BlockId, BlockStatus)]

// 由于走CacheManager,肯定意味着RDD是设置过持久化级别的,只是因为某些原因,持久化数据没有找到,才会到这

// 所以读取了checkpoint的数据,或者是重新计算数据之后,要用putInBlockManager()方法,将数据再BlockManager中持久化一份

val cachedValues = putInBlockManager(key, computedValues, storageLevel, updatedBlocks)

val metrics = context.taskMetrics

val lastUpdatedBlocks = metrics.updatedBlocks.getOrElse(Seq[(BlockId, BlockStatus)]())

metrics.updatedBlocks = Some(lastUpdatedBlocks ++ updatedBlocks.toSeq)

new InterruptibleIterator(context, cachedValues) } finally {

loading.synchronized {

loading.remove(key)

loading.notifyAll()

}

}

}

} ###org.apache.spark.storage/BlockManager.scala // 通过BlockManager获取数据的入口方法,优先从本地获取,如果本地没有,那么从远程获取

def get(blockId: BlockId): Option[BlockResult] = {

val local = getLocal(blockId)

if (local.isDefined) {

logInfo(s"Found block $blockId locally")

return local

}

val remote = getRemote(blockId)

if (remote.isDefined) {

logInfo(s"Found block $blockId remotely")

return remote

}

None

} ###org.apache.spark.rdd/RDD.scalal private[spark] def computeOrReadCheckpoint(split: Partition, context: TaskContext): Iterator[T] =

{

// Checkpointed相关先忽略

if (isCheckpointed) firstParent[T].iterator(split, context) else compute(split, context)

} ###org.apache.spark/CacheManager.scala private def putInBlockManager[T](

key: BlockId,

values: Iterator[T],

level: StorageLevel,

updatedBlocks: ArrayBuffer[(BlockId, BlockStatus)],

effectiveStorageLevel: Option[StorageLevel] = None): Iterator[T] = { val putLevel = effectiveStorageLevel.getOrElse(level)

// 如果持久化级别,没有指定内存级别,仅仅是纯磁盘的级别

if (!putLevel.useMemory) {

/*

* This RDD is not to be cached in memory, so we can just pass the computed values as an

* iterator directly to the BlockManager rather than first fully unrolling it in memory.

*/

updatedBlocks ++=

// 直接调用blockManager的putIterator()方法,将数据写入磁盘即可

blockManager.putIterator(key, values, level, tellMaster = true, effectiveStorageLevel)

blockManager.get(key) match {

case Some(v) => v.data.asInstanceOf[Iterator[T]]

case None =>

logInfo(s"Failure to store $key")

throw new BlockException(key, s"Block manager failed to return cached value for $key!")

}

}

// 如果指定了内存级别,往下看

else {

/*

* This RDD is to be cached in memory. In this case we cannot pass the computed values

* to the BlockManager as an iterator and expect to read it back later. This is because

* we may end up dropping a partition from memory store before getting it back.

*

* In addition, we must be careful to not unroll the entire partition in memory at once.

* Otherwise, we may cause an OOM exception if the JVM does not have enough space for this

* single partition. Instead, we unroll the values cautiously, potentially aborting and

* dropping the partition to disk if applicable.

*/

// 这里会调用blockManager的unrollSafely()方法,尝试将数据写入内存

// 如果unrollSafely()方法判断数据可以写入内存,那么就将数据写入内存

// 如果unrollSafely()方法判断某些数据无法写入内存,那么只能写入磁盘文件

blockManager.memoryStore.unrollSafely(key, values, updatedBlocks) match {

case Left(arr) =>

// We have successfully unrolled the entire partition, so cache it in memory

updatedBlocks ++=

blockManager.putArray(key, arr, level, tellMaster = true, effectiveStorageLevel)

arr.iterator.asInstanceOf[Iterator[T]]

case Right(it) =>

// There is not enough space to cache this partition in memory

val returnValues = it.asInstanceOf[Iterator[T]]

// 如果有些数据实在无法写入内存,那么就判断,数据是否有磁盘级别

// 如果有的话,那么就使用磁盘级别,将数据写入磁盘文件

if (putLevel.useDisk) {

logWarning(s"Persisting partition $key to disk instead.")

val diskOnlyLevel = StorageLevel(useDisk = true, useMemory = false,

useOffHeap = false, deserialized = false, putLevel.replication)

putInBlockManager[T](key, returnValues, level, updatedBlocks, Some(diskOnlyLevel))

} else {

returnValues

}

}

}

} ###org.apache.spark.storage/MemoryStore.scala def unrollSafely(

blockId: BlockId,

values: Iterator[Any],

droppedBlocks: ArrayBuffer[(BlockId, BlockStatus)])

: Either[Array[Any], Iterator[Any]] = { // Number of elements unrolled so far

var elementsUnrolled = 0

// Whether there is still enough memory for us to continue unrolling this block

var keepUnrolling = true

// Initial per-thread memory to request for unrolling blocks (bytes). Exposed for testing.

val initialMemoryThreshold = unrollMemoryThreshold

// How often to check whether we need to request more memory

val memoryCheckPeriod = 16

// Memory currently reserved by this thread for this particular unrolling operation

var memoryThreshold = initialMemoryThreshold

// Memory to request as a multiple of current vector size

val memoryGrowthFactor = 1.5

// Previous unroll memory held by this thread, for releasing later (only at the very end)

val previousMemoryReserved = currentUnrollMemoryForThisThread

// Underlying vector for unrolling the block

var vector = new SizeTrackingVector[Any] // Request enough memory to begin unrolling

keepUnrolling = reserveUnrollMemoryForThisThread(initialMemoryThreshold) if (!keepUnrolling) {

logWarning(s"Failed to reserve initial memory threshold of " +

s"${Utils.bytesToString(initialMemoryThreshold)} for computing block $blockId in memory.")

} // Unroll this block safely, checking whether we have exceeded our threshold periodically

try {

while (values.hasNext && keepUnrolling) {

vector += values.next()

if (elementsUnrolled % memoryCheckPeriod == 0) {

// If our vector's size has exceeded the threshold, request more memory

val currentSize = vector.estimateSize()

if (currentSize >= memoryThreshold) {

val amountToRequest = (currentSize * memoryGrowthFactor - memoryThreshold).toLong

// Hold the accounting lock, in case another thread concurrently puts a block that

// takes up the unrolling space we just ensured here

accountingLock.synchronized {

if (!reserveUnrollMemoryForThisThread(amountToRequest)) {

// If the first request is not granted, try again after ensuring free space

// If there is still not enough space, give up and drop the partition

val spaceToEnsure = maxUnrollMemory - currentUnrollMemory

// 反复循环,判断,只要还有数据没有写入内存,而且可以继续尝试往内存中写

// 那么就判断,如果内存大小够不够存放数据,调用ensureFreeSpace()方法,尝试清空一些内存空间

if (spaceToEnsure > 0) {

val result = ensureFreeSpace(blockId, spaceToEnsure)

droppedBlocks ++= result.droppedBlocks

}

keepUnrolling = reserveUnrollMemoryForThisThread(amountToRequest)

}

}

// New threshold is currentSize * memoryGrowthFactor

memoryThreshold += amountToRequest

}

}

elementsUnrolled += 1

} if (keepUnrolling) {

// We successfully unrolled the entirety of this block

Left(vector.toArray)

} else {

// We ran out of space while unrolling the values for this block

logUnrollFailureMessage(blockId, vector.estimateSize())

Right(vector.iterator ++ values)

} } finally {

// If we return an array, the values returned do not depend on the underlying vector and

// we can immediately free up space for other threads. Otherwise, if we return an iterator,

// we release the memory claimed by this thread later on when the task finishes.

if (keepUnrolling) {

val amountToRelease = currentUnrollMemoryForThisThread - previousMemoryReserved

releaseUnrollMemoryForThisThread(amountToRelease)

}

}

}

23、CacheManager原理剖析与源码分析的更多相关文章

- 65、Spark Streaming:数据接收原理剖析与源码分析

一.数据接收原理 二.源码分析 入口包org.apache.spark.streaming.receiver下ReceiverSupervisorImpl类的onStart()方法 ### overr ...

- 66、Spark Streaming:数据处理原理剖析与源码分析(block与batch关系透彻解析)

一.数据处理原理剖析 每隔我们设置的batch interval 的time,就去找ReceiverTracker,将其中的,从上次划分batch的时间,到目前为止的这个batch interval ...

- 20、Task原理剖析与源码分析

一.Task原理 1.图解 二.源码分析 1. ###org.apache.spark.executor/Executor.scala /** * 从TaskRunner开始,来看Task的运行的工作 ...

- 18、TaskScheduler原理剖析与源码分析

一.源码分析 ###入口 ###org.apache.spark.scheduler/DAGScheduler.scala // 最后,针对stage的task,创建TaskSet对象,调用taskS ...

- 64、Spark Streaming:StreamingContext初始化与Receiver启动原理剖析与源码分析

一.StreamingContext源码分析 ###入口 org.apache.spark.streaming/StreamingContext.scala /** * 在创建和完成StreamCon ...

- 22、BlockManager原理剖析与源码分析

一.原理 1.图解 Driver上,有BlockManagerMaster,它的功能,就是负责对各个节点上的BlockManager内部管理的数据的元数据进行维护, 比如Block的增删改等操作,都会 ...

- 21、Shuffle原理剖析与源码分析

一.普通shuffle原理 1.图解 假设有一个节点上面运行了4个 ShuffleMapTask,然后这个节点上只有2个 cpu core.假如有另外一台节点,上面也运行了4个ResultTask,现 ...

- 19、Executor原理剖析与源码分析

一.原理图解 二.源码分析 1.Executor注册机制 worker中为Application启动的executor,实际上是启动了这个CoarseGrainedExecutorBackend进程: ...

- 16、job触发流程原理剖析与源码分析

一.以Wordcount为例来分析 1.Wordcount val lines = sc.textFile() val words = lines.flatMap(line => line.sp ...

随机推荐

- Bootstrap-table 使用总结,和其参数说明

转载于:https://www.cnblogs.com/laowangc/p/8875526.html 一.什么是Bootstrap-table? 在业务系统开发中,对表格记录的查询.分页.排序等处理 ...

- Spring AOP创建BeforeAdvice和AfterAdvice实例

BeforeAdvice 1.会在目标对象的方法执行之前被调用. 2.通过实现MethodBeforeAdvice接口来实现. 3.该接口中定义了一个方法即before方法,before方法会在目标对 ...

- Oracle 11g安装过程工作Oracle数据库安装图解

一.Oracle 下载 注意Oracle分成两个文件,下载完后,将两个文件解压到同一目录下即可. 路径名称中,最好不要出现中文,也不要出现空格等不规则字符. 官方下地址: oracle.com/tec ...

- ② Python3.0 运算符

Python3.0 语言支持的运算符有: 算术运算符.比较(关系)运算符.赋值运算符.逻辑运算符.位运算符.成员运算符.身份运算符.运算符优先级 一.算术运算符 常见的算术运算符有+,-,*,/,%, ...

- ubuntu ufw 配置

ubuntu ufw 配置 Ubuntu 18.04 LTS 系统中已经默认附带了 UFW 工具,如果您的系统中没有安装,可以在「终端」中执行如下命令进行安装: 1 sudo apt install ...

- Matlab函数装饰器

info.m function result_func= info(msg) function res_func =wrap(func) function varargout = inner_wrap ...

- thinkphp5 使用PHPExcel 导入导出

首先下载PHPExcel类.网上很多,自行下载. 然后把文件放到vendor文件里面. 一般引用vendor里面的类或者插件用vendor(); 里面加载的就是vendor文件,然后想要加载哪个文件, ...

- oracle rpad()和lpad()函数

函数参数:rpad( string1, padded_length, [ pad_string ] ) rpad函数从右边对字符串使用指定的字符进行填充 string 表示:被填充的字符串 padde ...

- P1801 黑匣子[堆]

题目描述 Black Box是一种原始的数据库.它可以储存一个整数数组,还有一个特别的变量i.最开始的时候Black Box是空的.而i等于0.这个Black Box要处理一串命令. 命令只有两种: ...

- 题解 洛谷P3745 【[六省联考2017]期末考试】

这题有点绕,我写了\(2h\)终于搞明白了. 主要思路:枚举最晚公布成绩的时间\(maxt\),然后将所有公布时间大于\(maxt\)的课程都严格降为\(maxt\)即可. 在此之前,还要搞清楚一个概 ...