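[Spark] Spark applications: distributed estimation of Pi and loan risk prediction with Spark MLlib

Note: this post does not cover how Spark or Scala work internally; see my other posts for that.

I. Distributed estimation of Pi

How the calculation works:

Suppose a square has side x, so its area is S = x². The circle inscribed in it has area C = Pi × (x/2)², so the ratio of the circle's area to the square's area is C/S = Pi/4, which gives Pi = 4 × C/S.

A computer can generate a large number of random points inside the square and use the point counts to approximate the areas. If Ps points fall in the square and Pc of them fall inside the circle, then 4 × Pc/Ps approaches Pi as the number of random points tends to infinity.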
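Before bringing Spark in, the idea can be tried on a single machine. The following is a minimal sketch in plain Scala, not the Spark program from this post; the object name `MonteCarloPi`, the `estimate` helper and the fixed seed are assumptions made only for illustration.

```scala
import scala.util.Random

object MonteCarloPi {
  /** Estimate Pi by sampling n points uniformly in the square [-1, 1] x [-1, 1]. */
  def estimate(n: Int, seed: Long): Double = {
    val rng = new Random(seed)
    // Count the points that fall inside the unit circle centred on the origin
    val inside = (1 to n).count { _ =>
      val x = rng.nextDouble() * 2 - 1
      val y = rng.nextDouble() * 2 - 1
      x * x + y * y < 1
    }
    4.0 * inside / n // Pi is about 4 * Pc / Ps
  }

  def main(args: Array[String]): Unit =
    println(s"Pi is roughly ${estimate(200000, seed = 42L)}")
}
```

The Spark version does the same counting, but splits the n points across partitions and adds the partial counts with reduce.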

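Implementation (written in IntelliJ IDEA):

package com.hadoop

import scala.math.random

import org.apache.spark._ // SparkConf and SparkContext

/**
 * Draw a circle inside a square with side length 2. The square has area S1 = 4;
 * the circle has radius r = 1 and area S2 = πr² = π, so computing S2 gives π.
 * Take the centre of the circle as the origin, pick n random points in the square,
 * and count how many fall inside the circle. Then S2 ≈ S1 * count / n.
 * This is the Monte Carlo method.
 */
object sparkPi {
  def main(args: Array[String]): Unit = {
    // Initialise the SparkContext
    val conf = new SparkConf().setAppName("sparkPi") // create the SparkConf
    val spark = new SparkContext(conf) // create a SparkContext from the SparkConf
    val slices = if (args.length > 0) args(0).toInt else 2 // number of partitions
    val n = 10000 * slices // total number of points
    // Create an RDD by passing the range 1 to n (20000 by default) to parallelize;
    // map applies the function to every element of the RDD and yields a new RDD.
    val count = spark.parallelize(1 to n, slices).map { i =>
      // Pick a random point in the square centred on the origin
      val x = random * 2 - 1
      val y = random * 2 - 1
      if (x * x + y * y < 1) 1 else 0 // 1 if the point is inside the circle, otherwise 0
    }.reduce(_ + _) // add up the per-point values
    println("Pi is roughly " + 4.0 * count / n) // Pi = S2 ≈ S1 * count / n
    spark.stop()
  }
}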

Distributed run test:

A distributed run here means submitting a JAR to the Spark cluster from the client command line, so the program above must first be packaged as a JAR.

Building the JAR:

File -- Project Structure -- Artifacts -- + -- jar -- From modules with dependencies -- set Main Class to com.hadoop.sparkPi -- OK -- under Output Layout keep only the compile output -- OK -- Build -- Build Artifacts -- Build

Copy it to the Spark installation directory:

[hadoop@hadoop01 ~]$ cp /home/hadoop/IdeaProjects/sparkapp/out/artifacts/sparkapp_jar/sparkapp.jar /home/hadoop/spark-2.4.4-bin-without-hadoop

Change to the Spark installation directory and run from there:

[hadoop@hadoop01 ~]$ cd spark-2.4.4-bin-without-hadoop

A. Local mode

[hadoop@hadoop01 spark-2.4.4-bin-without-hadoop]$ bin/spark-submit --master local --class com.hadoop.sparkPi sparkapp.jar

Output:

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/spark-2.4.4-bin-without-hadoop/jars/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-3.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2019-10-04 11:12:09,551 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2019-10-04 11:12:09,777 INFO spark.SparkContext: Running Spark version 2.4.4

2019-10-04 11:12:09,801 INFO spark.SparkContext: Submitted application: sparkPi

2019-10-04 11:12:09,862 INFO spark.SecurityManager: Changing view acls to: hadoop

2019-10-04 11:12:09,862 INFO spark.SecurityManager: Changing modify acls to: hadoop

2019-10-04 11:12:09,862 INFO spark.SecurityManager: Changing view acls groups to:

2019-10-04 11:12:09,862 INFO spark.SecurityManager: Changing modify acls groups to:

2019-10-04 11:12:09,862 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()

2019-10-04 11:12:10,140 INFO util.Utils: Successfully started service 'sparkDriver' on port 34911.

2019-10-04 11:12:10,168 INFO spark.SparkEnv: Registering MapOutputTracker

2019-10-04 11:12:10,190 INFO spark.SparkEnv: Registering BlockManagerMaster

2019-10-04 11:12:10,191 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

2019-10-04 11:12:10,192 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

2019-10-04 11:12:10,204 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-f08f8fb2-3c19-4f99-b24e-df08f23cff23

2019-10-04 11:12:10,220 INFO memory.MemoryStore: MemoryStore started with capacity 1048.8 MB

2019-10-04 11:12:10,235 INFO spark.SparkEnv: Registering OutputCommitCoordinator

2019-10-04 11:12:10,300 INFO util.log: Logging initialized @2617ms

2019-10-04 11:12:10,353 INFO server.Server: jetty-9.3.z-SNAPSHOT, build timestamp: 2018-06-06T01:11:56+08:00, git hash: 84205aa28f11a4f31f2a3b86d1bba2cc8ab69827

2019-10-04 11:12:10,371 INFO server.Server: Started @2689ms

2019-10-04 11:12:10,385 INFO server.AbstractConnector: Started ServerConnector@3c2772d1{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

2019-10-04 11:12:10,385 INFO util.Utils: Successfully started service 'SparkUI' on port 4040.

2019-10-04 11:12:10,411 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@c3c4c1c{/jobs,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,411 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@9f6e406{/jobs/json,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,412 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7a94b64e{/jobs/job,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,413 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@12477988{/jobs/job/json,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,414 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2caf6912{/stages,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,415 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@73d69c0f{/stages/json,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,415 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@34237b90{/stages/stage,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,416 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@d400943{/stages/stage/json,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,417 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@22101c80{/stages/pool,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,417 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@31ff1390{/stages/pool/json,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,417 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@759d81f3{/storage,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,418 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@781a9412{/storage/json,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,418 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5a4c638d{/storage/rdd,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,419 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@13e698c7{/storage/rdd/json,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,419 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@aed0151{/environment,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,419 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@267bbe1a{/environment/json,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,420 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1f12e153{/executors,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,420 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@389562d6{/executors/json,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,421 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5a101b1c{/executors/threadDump,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,422 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2160e52a{/executors/threadDump/json,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,428 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@29f0802c{/static,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,429 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@779de014{/,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,431 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5c41d037{/api,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,432 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1450078a{/jobs/job/kill,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,433 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@c68a5f8{/stages/stage/kill,null,AVAILABLE,@Spark}

2019-10-04 11:12:10,436 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://hadoop01:4040

2019-10-04 11:12:10,467 INFO spark.SparkContext: Added JAR file:/home/hadoop/spark-2.4.4-bin-without-hadoop/sparkapp.jar at spark://hadoop01:34911/jars/sparkapp.jar with timestamp 1570158730467

2019-10-04 11:12:10,513 INFO executor.Executor: Starting executor ID driver on host localhost

2019-10-04 11:12:10,583 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 37776.

2019-10-04 11:12:10,583 INFO netty.NettyBlockTransferService: Server created on hadoop01:37776

2019-10-04 11:12:10,584 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

2019-10-04 11:12:10,606 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, hadoop01, 37776, None)

2019-10-04 11:12:10,611 INFO storage.BlockManagerMasterEndpoint: Registering block manager hadoop01:37776 with 1048.8 MB RAM, BlockManagerId(driver, hadoop01, 37776, None)

2019-10-04 11:12:10,614 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, hadoop01, 37776, None)

2019-10-04 11:12:10,616 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, hadoop01, 37776, None)

2019-10-04 11:12:10,745 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6aef4eb8{/metrics/json,null,AVAILABLE,@Spark}

2019-10-04 11:12:11,013 INFO spark.SparkContext: Starting job: reduce at sparkPi.scala:20

2019-10-04 11:12:11,036 INFO scheduler.DAGScheduler: Got job 0 (reduce at sparkPi.scala:20) with 2 output partitions

2019-10-04 11:12:11,036 INFO scheduler.DAGScheduler: Final stage: ResultStage 0 (reduce at sparkPi.scala:20)

2019-10-04 11:12:11,036 INFO scheduler.DAGScheduler: Parents of final stage: List()

2019-10-04 11:12:11,037 INFO scheduler.DAGScheduler: Missing parents: List()

2019-10-04 11:12:11,041 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at sparkPi.scala:16), which has no missing parents

2019-10-04 11:12:11,107 INFO memory.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 1904.0 B, free 1048.8 MB)

2019-10-04 11:12:11,139 INFO memory.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1254.0 B, free 1048.8 MB)

2019-10-04 11:12:11,142 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on hadoop01:37776 (size: 1254.0 B, free: 1048.8 MB)

2019-10-04 11:12:11,144 INFO spark.SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1161

2019-10-04 11:12:11,210 INFO scheduler.DAGScheduler: Submitting 2 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at sparkPi.scala:16) (first 15 tasks are for partitions Vector(0, 1))

2019-10-04 11:12:11,211 INFO scheduler.TaskSchedulerImpl: Adding task set 0.0 with 2 tasks

2019-10-04 11:12:11,255 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, executor driver, partition 0, PROCESS_LOCAL, 7866 bytes)

2019-10-04 11:12:11,262 INFO executor.Executor: Running task 0.0 in stage 0.0 (TID 0)

2019-10-04 11:12:11,271 INFO executor.Executor: Fetching spark://hadoop01:34911/jars/sparkapp.jar with timestamp 1570158730467

2019-10-04 11:12:11,346 INFO client.TransportClientFactory: Successfully created connection to hadoop01/192.168.1.100:34911 after 27 ms (0 ms spent in bootstraps)

2019-10-04 11:12:11,352 INFO util.Utils: Fetching spark://hadoop01:34911/jars/sparkapp.jar to /tmp/spark-c35e81e3-5419-4858-b25c-93fbbc73e431/userFiles-c7eb44d6-5f78-4f9e-bfdf-986881e946b4/fetchFileTemp656185270030476350.tmp

2019-10-04 11:12:11,390 INFO executor.Executor: Adding file:/tmp/spark-c35e81e3-5419-4858-b25c-93fbbc73e431/userFiles-c7eb44d6-5f78-4f9e-bfdf-986881e946b4/sparkapp.jar to class loader

2019-10-04 11:12:11,426 INFO executor.Executor: Finished task 0.0 in stage 0.0 (TID 0). 824 bytes result sent to driver

2019-10-04 11:12:11,429 INFO scheduler.TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, localhost, executor driver, partition 1, PROCESS_LOCAL, 7923 bytes)

2019-10-04 11:12:11,431 INFO executor.Executor: Running task 1.0 in stage 0.0 (TID 1)

2019-10-04 11:12:11,437 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 199 ms on localhost (executor driver) (1/2)

2019-10-04 11:12:11,438 INFO executor.Executor: Finished task 1.0 in stage 0.0 (TID 1). 824 bytes result sent to driver

2019-10-04 11:12:11,445 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 17 ms on localhost (executor driver) (2/2)

2019-10-04 11:12:11,447 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

2019-10-04 11:12:11,449 INFO scheduler.DAGScheduler: ResultStage 0 (reduce at sparkPi.scala:20) finished in 0.392 s

2019-10-04 11:12:11,456 INFO scheduler.DAGScheduler: Job 0 finished: reduce at sparkPi.scala:20, took 0.442551 s

Pi is roughly 3.1378

2019-10-04 11:12:11,466 INFO server.AbstractConnector: Stopped Spark@3c2772d1{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

2019-10-04 11:12:11,471 INFO ui.SparkUI: Stopped Spark web UI at http://hadoop01:4040

2019-10-04 11:12:11,481 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

2019-10-04 11:12:11,501 INFO memory.MemoryStore: MemoryStore cleared

2019-10-04 11:12:11,502 INFO storage.BlockManager: BlockManager stopped

2019-10-04 11:12:11,508 INFO storage.BlockManagerMaster: BlockManagerMaster stopped

2019-10-04 11:12:11,509 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

2019-10-04 11:12:11,518 INFO spark.SparkContext: Successfully stopped SparkContext

2019-10-04 11:12:11,520 INFO util.ShutdownHookManager: Shutdown hook called

2019-10-04 11:12:11,522 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-2edea92d-9604-43f3-99c1-8e541a518199

2019-10-04 11:12:11,527 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-c35e81e3-5419-4858-b25c-93fbbc73e431

To show only the result, run:

[hadoop@hadoop01 spark-2.4.4-bin-without-hadoop]$ bin/spark-submit --master local --class com.hadoop.sparkPi sparkapp.jar 2>&1 | grep "Pi is roughly"

Result: Pi is roughly 3.1384
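The two local runs above print 3.1378 and 3.1384 because the estimate is random. Each point lands inside the circle with probability p = Pi/4, so the estimator 4 × count / n has a standard error of 4 × sqrt(p(1 − p)/n). The sketch below is plain Scala that only evaluates this formula; the object name `PiStdError` and the helper `stdError` are made up for illustration. For the default n = 20000 it gives about 0.0116, so deviations of a few thousandths from Pi are expected.

```scala
object PiStdError {
  /** Standard error of the estimator 4 * count / n, where each point
    * lands inside the circle with probability p = Pi / 4. */
  def stdError(n: Int): Double = {
    val p = math.Pi / 4
    4 * math.sqrt(p * (1 - p) / n)
  }

  def main(args: Array[String]): Unit = {
    // n = 10000 * slices, with slices = 2 when no argument is passed
    println(f"n = 20000:   ${stdError(20000)}%.4f")
    // 100 times more points shrinks the error by a factor of 10
    println(f"n = 2000000: ${stdError(2000000)}%.4f")
  }
}
```

Because the error falls only with the square root of n, passing a larger slices argument to the job improves the estimate slowly.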

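B. Yarn-cluster mode (Hadoop and Spark must be started first)

[hadoop@hadoop01 spark-2.4.4-bin-without-hadoop]$ bin/spark-submit --master yarn-cluster --class com.hadoop.sparkPi sparkapp.jar

Note: the master values yarn-cluster and yarn-client are deprecated since Spark 2.0 in favour of --master yarn combined with --deploy-mode cluster or --deploy-mode client.

Output: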

2019-10-04 13:08:55,136 INFO yarn.Client:

client token: N/A

diagnostics: AM container is launched, waiting for AM container to Register with RM

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: default

start time: 1570165734049

final status: UNDEFINED

tracking URL: http://hadoop01:8088/proxy/application_1570165372810_0002/

user: hadoop

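The result is in stdout, under the logs reachable from the tracking URL:

b_1: Open http://hadoop01:50070 in a browser

b_2: Under logs, click the userlogs directory, e.g. /logs/userlogs/

b_3: Click the matching entry, e.g. application_1570165372810_0002 (the application ID from the output above)

b_4: Open stdout inside it to see the output

Output: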

Pi is roughly 3.1294

C. Yarn-client mode (Hadoop and Spark must be started first)

[hadoop@hadoop01 spark-2.4.4-bin-without-hadoop]$ bin/spark-submit --master yarn-client --class com.hadoop.sparkPi sparkapp.jar

II. Loan risk prediction with Spark MLlib

Create the project and edit the run configuration:

Edit Configuration -- Application

Name (Credit)

Main Class (com.hadoop.Gredit)

Program arguments (/home/hadoop/IdeaProjects/Gredit)

VM options (-Dspark.master=local -Dspark.app.name=Credit -server -XX:PermSize=128M -XX:MaxPermSize=256M)

Add the Spark dependency JARs:

File -- Project Structure -- Libraries -- + -- Java -- select all JARs under /home/hadoop/spark-2.4.4-bin-without-hadoop/jars -- OK

Copy UserGredit.csv into the /home/hadoop/IdeaProjects/Gredit/ directory

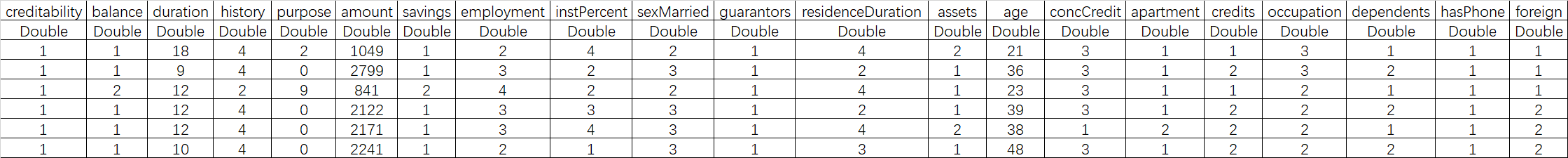

Contents of UserGredit.csv:

1,1,18,4,2,1049,1,2,4,2,1,4,2,21,3,1,1,3,1,1,1

1,1,9,4,0,2799,1,3,2,3,1,2,1,36,3,1,2,3,2,1,1

1,2,12,2,9,841,2,4,2,2,1,4,1,23,3,1,1,2,1,1,1

1,1,12,4,0,2122,1,3,3,3,1,2,1,39,3,1,2,2,2,1,2

1,1,12,4,0,2171,1,3,4,3,1,4,2,38,1,2,2,2,1,1,2

1,1,10,4,0,2241,1,2,1,3,1,3,1,48,3,1,2,2,2,1,2

Note: the complete user credit data set (UserGredit)
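Each row holds 21 comma-separated numeric fields. The program in this post converts them to Double and subtracts 1 from most of the coded fields. The sketch below reproduces that step in plain Scala, without Spark, on the first sample row; `ParseSketch`, `shifted` and `parseLine` are names invented for this illustration, and the index set simply mirrors the `- 1` terms in the post's `parseCredit`.

```scala
object ParseSketch {
  // Zero-based positions that the post's parseCredit shifts by -1
  val shifted: Set[Int] = Set(1, 6, 7, 9, 10, 11, 12, 14, 15, 16, 17, 18, 19, 20)

  /** Split one CSV line, convert every field to Double, and shift the coded fields. */
  def parseLine(line: String): Array[Double] =
    line.split(",").map(_.trim.toDouble).zipWithIndex.map {
      case (v, i) => if (shifted(i)) v - 1 else v
    }

  def main(args: Array[String]): Unit = {
    // First row of the sample shown above
    val first = "1,1,18,4,2,1049,1,2,4,2,1,4,2,21,3,1,1,3,1,1,1"
    println(parseLine(first).mkString(" "))
  }
}
```

Fields such as duration (18), amount (1049) and age (21) pass through unchanged, while a coded field such as balance goes from 1 to 0.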

Copy the test program into the editor (the file name needs to be changed):

package com.hadoop

// Spark core, SQL and machine-learning imports
import org.apache.spark._
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.SQLContext
import org.apache.spark.sql.functions._
import org.apache.spark.sql.types._
import org.apache.spark.sql._
import org.apache.spark.ml.classification.RandomForestClassifier
import org.apache.spark.ml.evaluation.BinaryClassificationEvaluator
import org.apache.spark.ml.feature.StringIndexer
import org.apache.spark.ml.feature.VectorAssembler
import org.apache.spark.ml.tuning.{ ParamGridBuilder, CrossValidator }
import org.apache.spark.ml.{ Pipeline, PipelineStage }
import org.apache.spark.mllib.evaluation.RegressionMetrics

// The Gredit application object
object Gredit {
  // Case class describing one credit record
  case class Credit(
    creditability: Double,
    balance: Double, duration: Double, history: Double, purpose: Double, amount: Double,
    savings: Double, employment: Double, instPercent: Double, sexMarried: Double, guarantors: Double,
    residenceDuration: Double, assets: Double, age: Double, concCredit: Double, apartment: Double,
    credits: Double, occupation: Double, dependents: Double, hasPhone: Double, foreign: Double
  )

  // Build a Credit from one parsed row; 1 is subtracted from most coded fields
  def parseCredit(line: Array[Double]): Credit = {
    Credit(
      line(0),
      line(1) - 1, line(2), line(3), line(4), line(5),
      line(6) - 1, line(7) - 1, line(8), line(9) - 1, line(10) - 1,
      line(11) - 1, line(12) - 1, line(13), line(14) - 1, line(15) - 1,
      line(16) - 1, line(17) - 1, line(18) - 1, line(19) - 1, line(20) - 1
    )
  }

  // Split each CSV line on commas and convert the fields from String to Double
  def parseRDD(rdd: RDD[String]): RDD[Array[Double]] = {
    rdd.map(_.split(",")).map(_.map(_.toDouble))
  }

  /** Entry point. */
  def main(args: Array[String]): Unit = {
    /*
     * Load UserGredit.csv as an RDD of String.
     * Map the RDD through parseRDD so that each line becomes an array of Double.
     * Map again through parseCredit so that each array becomes a Credit object.
     * toDF() converts the RDD[Credit] into a DataFrame with Credit's columns.
     */
    val conf = new SparkConf().setAppName("SparkDFebay")
    val sc = new SparkContext(conf)
    val sqlContext = new SQLContext(sc)
    import sqlContext._
    import sqlContext.implicits._

    val creditDF = parseRDD(sc.textFile("UserGredit.csv")).map(parseCredit).toDF().cache()
    // registerTempTable is deprecated since Spark 2.0; createOrReplaceTempView replaces it
    creditDF.createOrReplaceTempView("credit")
    // Print the schema of the DataFrame as a tree
    creditDF.printSchema
    // Show the DataFrame (first 20 rows by default)
    creditDF.show
    // Run a SQL query through sqlContext and show the result
    sqlContext.sql("SELECT creditability, avg(balance) as avgbalance, avg(amount) as avgamt, avg(duration) as avgdur FROM credit GROUP BY creditability ").show
    creditDF.describe("balance").show
    creditDF.groupBy("creditability").avg("balance").show

    // Names of the feature columns to combine into one feature vector
    val featureCols = Array("balance", "duration", "history", "purpose", "amount",
      "savings", "employment", "instPercent", "sexMarried", "guarantors",
      "residenceDuration", "assets", "age", "concCredit", "apartment",
      "credits", "occupation", "dependents", "hasPhone", "foreign")
    // Set the input and output column names; VectorAssembler assembles the feature columns
    val assembler = new VectorAssembler().setInputCols(featureCols).setOutputCol("features")
    // transform returns a new DataFrame with an added features column
    val df2 = assembler.transform(creditDF)
    df2.show
    // StringIndexer returns a DataFrame with creditability indexed into a new label column
    val labelIndexer = new StringIndexer().setInputCol("creditability").setOutputCol("label")
    val df3 = labelIndexer.fit(df2).transform(df2)
    df3.show
    // Split the data for training and testing: 70% trains the model, 30% tests it
    val splitSeed = 5043
    val Array(trainingData, testData) = df3.randomSplit(Array(0.7, 0.3), splitSeed)

    // Note: the algorithm part below will be explained in a later update
    val classifier = new RandomForestClassifier().setImpurity("gini").setMaxDepth(3).setNumTrees(20).setFeatureSubsetStrategy("auto").setSeed(5043)
    val model = classifier.fit(trainingData)
    val evaluator = new BinaryClassificationEvaluator().setLabelCol("label")
    val predictions = model.transform(testData)
    model.toDebugString
    // BinaryClassificationEvaluator's default metric is area under the ROC curve, not accuracy
    val areaUnderROC = evaluator.evaluate(predictions)
    println("area under ROC before pipeline fitting: " + areaUnderROC)
    val rm = new RegressionMetrics(
      predictions.select("prediction", "label").rdd.map(x =>
        (x(0).asInstanceOf[Double], x(1).asInstanceOf[Double]))
    )
    println("MSE: " + rm.meanSquaredError)
    println("MAE: " + rm.meanAbsoluteError)
    println("RMSE: " + rm.rootMeanSquaredError)
    println("R Squared: " + rm.r2)
    println("Explained Variance: " + rm.explainedVariance + "\n")

    val paramGrid = new ParamGridBuilder()
      .addGrid(classifier.maxBins, Array(25, 31))
      .addGrid(classifier.maxDepth, Array(5, 10))
      .addGrid(classifier.numTrees, Array(20, 60))
      .addGrid(classifier.impurity, Array("entropy", "gini"))
      .build()
    val steps: Array[PipelineStage] = Array(classifier)
    val pipeline = new Pipeline().setStages(steps)
    val cv = new CrossValidator()
      .setEstimator(pipeline)
      .setEvaluator(evaluator)
      .setEstimatorParamMaps(paramGrid)
      .setNumFolds(10)
    val pipelineFittedModel = cv.fit(trainingData)
    val predictions2 = pipelineFittedModel.transform(testData)
    val areaUnderROC2 = evaluator.evaluate(predictions2)
    println("area under ROC after pipeline fitting: " + areaUnderROC2)
    println(pipelineFittedModel.bestModel.asInstanceOf[org.apache.spark.ml.PipelineModel].stages(0))
    pipelineFittedModel
      .bestModel.asInstanceOf[org.apache.spark.ml.PipelineModel]
      .stages(0)
      .extractParamMap
    val rm2 = new RegressionMetrics(
      predictions2.select("prediction", "label").rdd.map(x =>
        (x(0).asInstanceOf[Double], x(1).asInstanceOf[Double]))
    )
    println("MSE: " + rm2.meanSquaredError)
    println("MAE: " + rm2.meanAbsoluteError)
    println("RMSE: " + rm2.rootMeanSquaredError)
    println("R Squared: " + rm2.r2)
    println("Explained Variance: " + rm2.explainedVariance + "\n")
  }
}
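The cross-validation step in the program is the expensive part, and its cost can be estimated by counting. The sketch below is plain Scala without Spark; it only enumerates the candidate values passed to `ParamGridBuilder` in the program, and the names `GridSize`, `combinations` and `numFolds` are chosen here for illustration. As far as I understand `CrossValidator`, it fits every parameter combination once per fold and then refits the best combination on the whole training set.

```scala
object GridSize {
  // Candidate values taken from the ParamGridBuilder calls in the program
  val maxBins = Seq(25, 31)
  val maxDepth = Seq(5, 10)
  val numTrees = Seq(20, 60)
  val impurity = Seq("entropy", "gini")

  /** Every combination of the four parameters (the Cartesian product). */
  val combinations: Seq[(Int, Int, Int, String)] =
    for (b <- maxBins; d <- maxDepth; t <- numTrees; i <- impurity) yield (b, d, t, i)

  // Value passed to setNumFolds in the program
  val numFolds = 10

  def main(args: Array[String]): Unit = {
    println(s"parameter combinations: ${combinations.size}")
    println(s"model fits during cross-validation: ${combinations.size * numFolds}")
  }
}
```

With 16 combinations and 10 folds that is 160 random-forest fits before the final refit, so on the full data set this step can take noticeably longer than the single `classifier.fit` call.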

First run: the program fails with this error:

Exception in thread "main" java.lang.IllegalArgumentException: System memory 425197568 must be at least 471859200.

In the main function, append .set("spark.testing.memory","2147480000") to the line val conf = new SparkConf().setAppName("SparkDFebay")

After the change: val conf = new SparkConf().setAppName("SparkDFebay").set("spark.testing.memory","2147480000")
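The two numbers in the error message can be reproduced with a little arithmetic. To my understanding, Spark 2.4's unified memory manager reserves 300 MB and requires the system memory to be at least 1.5 times that, and it takes the system memory from `spark.testing.memory` when that is set, otherwise from the JVM's maximum heap. The sketch below is plain Scala that mirrors only this check; it is not Spark's code, and the names `MemoryCheck`, `reservedBytes`, `minSystemMemory` and `passes` are made up for the example.

```scala
object MemoryCheck {
  // Assumption: Spark 2.4 reserves 300 MB and requires 1.5x that as system memory
  val reservedBytes: Long = 300L * 1024 * 1024
  val minSystemMemory: Long = math.ceil(reservedBytes * 1.5).toLong

  /** True if the given amount of system memory satisfies the minimum. */
  def passes(systemMemory: Long): Boolean = systemMemory >= minSystemMemory

  def main(args: Array[String]): Unit = {
    println(s"minimum required: $minSystemMemory bytes")
    println(s"JVM heap from the error (425197568): ${passes(425197568L)}")
    println(s"spark.testing.memory = 2147480000:   ${passes(2147480000L)}")
  }
}
```

The minimum works out to 471859200 bytes, the figure in the exception. Since `spark.testing.memory` only changes the number Spark checks against, giving the JVM a larger heap in the VM options (for example with -Xmx) is probably the cleaner fix outside of quick local experiments.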

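Second run: run the project again and check the result.

Output: there are so many INFO log lines that the result is hard to find, so the INFO logging should be hidden.

Fix: copy the default log configuration file from the Spark installation directory into the project's src directory and change the log level shown on the console.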

[hadoop@hadoop01 ~]$ cd spark-2.4.4-bin-without-hadoop/conf

[hadoop@hadoop01 conf]$ cp log4j.properties.template /home/hadoop/IdeaProjects/Gredit/src/

[hadoop@hadoop01 conf]$ cd /home/hadoop/IdeaProjects/Gredit/src/

[hadoop@hadoop01 src]$ mv log4j.properties.template log4j.properties

[hadoop@hadoop01 src]$ gedit log4j.properties

In the log configuration file, change the log level so that only ERROR messages are printed to the console.

Line to change in log4j.properties:

log4j.rootCategory=ERROR, console

Third run: run again and check the result:

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128M; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=256M; support was removed in 8.0

root

|-- creditability: double (nullable = false)

|-- balance: double (nullable = false)

|-- duration: double (nullable = false)

|-- history: double (nullable = false)

|-- purpose: double (nullable = false)

|-- amount: double (nullable = false)

|-- savings: double (nullable = false)

|-- employment: double (nullable = false)

|-- instPercent: double (nullable = false)

|-- sexMarried: double (nullable = false)

|-- guarantors: double (nullable = false)

|-- residenceDuration: double (nullable = false)

|-- assets: double (nullable = false)

|-- age: double (nullable = false)

|-- concCredit: double (nullable = false)

|-- apartment: double (nullable = false)

|-- credits: double (nullable = false)

|-- occupation: double (nullable = false)

|-- dependents: double (nullable = false)

|-- hasPhone: double (nullable = false)

|-- foreign: double (nullable = false)

+-------------+-------+--------+-------+-------+------+-------+----------+-----------+----------+----------+-----------------+------+----+----------+---------+-------+----------+----------+--------+-------+

|creditability|balance|duration|history|purpose|amount|savings|employment|instPercent|sexMarried|guarantors|residenceDuration|assets| age|concCredit|apartment|credits|occupation|dependents|hasPhone|foreign|

+-------------+-------+--------+-------+-------+------+-------+----------+-----------+----------+----------+-----------------+------+----+----------+---------+-------+----------+----------+--------+-------+

| 1.0| 0.0| 18.0| 4.0| 2.0|1049.0| 0.0| 1.0| 4.0| 1.0| 0.0| 3.0| 1.0|21.0| 2.0| 0.0| 0.0| 2.0| 0.0| 0.0| 0.0|

| 1.0| 0.0| 9.0| 4.0| 0.0|2799.0| 0.0| 2.0| 2.0| 2.0| 0.0| 1.0| 0.0|36.0| 2.0| 0.0| 1.0| 2.0| 1.0| 0.0| 0.0|

| 1.0| 1.0| 12.0| 2.0| 9.0| 841.0| 1.0| 3.0| 2.0| 1.0| 0.0| 3.0| 0.0|23.0| 2.0| 0.0| 0.0| 1.0| 0.0| 0.0| 0.0|

| 1.0| 0.0| 12.0| 4.0| 0.0|2122.0| 0.0| 2.0| 3.0| 2.0| 0.0| 1.0| 0.0|39.0| 2.0| 0.0| 1.0| 1.0| 1.0| 0.0| 1.0|

| 1.0| 0.0| 12.0| 4.0| 0.0|2171.0| 0.0| 2.0| 4.0| 2.0| 0.0| 3.0| 1.0|38.0| 0.0| 1.0| 1.0| 1.0| 0.0| 0.0| 1.0|

| 1.0| 0.0| 10.0| 4.0| 0.0|2241.0| 0.0| 1.0| 1.0| 2.0| 0.0| 2.0| 0.0|48.0| 2.0| 0.0| 1.0| 1.0| 1.0| 0.0| 1.0|

+-------------+-------+--------+-------+-------+------+-------+----------+-----------+----------+----------+-----------------+------+----+----------+---------+-------+----------+----------+--------+-------+

+-------------+-------------------+------+------------------+

|creditability| avgbalance|avgamt| avgdur|

+-------------+-------------------+------+------------------+

| 1.0|0.16666666666666666|1870.5|12.166666666666666|

+-------------+-------------------+------+------------------+

+-------+-------------------+

|summary| balance|

+-------+-------------------+

| count| 6|

| mean|0.16666666666666666|

| stddev| 0.408248290463863|

| min| 0.0|

| max| 1.0|

+-------+-------------------+

+-------------+-------------------+

|creditability| avg(balance)|

+-------------+-------------------+

| 1.0|0.16666666666666666|

+-------------+-------------------+

+-------------+-------+--------+-------+-------+------+-------+----------+-----------+----------+----------+-----------------+------+----+----------+---------+-------+----------+----------+--------+-------+--------------------+

|creditability|balance|duration|history|purpose|amount|savings|employment|instPercent|sexMarried|guarantors|residenceDuration|assets| age|concCredit|apartment|credits|occupation|dependents|hasPhone|foreign| features|

+-------------+-------+--------+-------+-------+------+-------+----------+-----------+----------+----------+-----------------+------+----+----------+---------+-------+----------+----------+--------+-------+--------------------+

| 1.0| 0.0| 18.0| 4.0| 2.0|1049.0| 0.0| 1.0| 4.0| 1.0| 0.0| 3.0| 1.0|21.0| 2.0| 0.0| 0.0| 2.0| 0.0| 0.0| 0.0|(20,[1,2,3,4,6,7,...|

| 1.0| 0.0| 9.0| 4.0| 0.0|2799.0| 0.0| 2.0| 2.0| 2.0| 0.0| 1.0| 0.0|36.0| 2.0| 0.0| 1.0| 2.0| 1.0| 0.0| 0.0|(20,[1,2,4,6,7,8,...|

| 1.0| 1.0| 12.0| 2.0| 9.0| 841.0| 1.0| 3.0| 2.0| 1.0| 0.0| 3.0| 0.0|23.0| 2.0| 0.0| 0.0| 1.0| 0.0| 0.0| 0.0|[1.0,12.0,2.0,9.0...|

| 1.0| 0.0| 12.0| 4.0| 0.0|2122.0| 0.0| 2.0| 3.0| 2.0| 0.0| 1.0| 0.0|39.0| 2.0| 0.0| 1.0| 1.0| 1.0| 0.0| 1.0|[0.0,12.0,4.0,0.0...|

| 1.0| 0.0| 12.0| 4.0| 0.0|2171.0| 0.0| 2.0| 4.0| 2.0| 0.0| 3.0| 1.0|38.0| 0.0| 1.0| 1.0| 1.0| 0.0| 0.0| 1.0|[0.0,12.0,4.0,0.0...|

| 1.0| 0.0| 10.0| 4.0| 0.0|2241.0| 0.0| 1.0| 1.0| 2.0| 0.0| 2.0| 0.0|48.0| 2.0| 0.0| 1.0| 1.0| 1.0| 0.0| 1.0|[0.0,10.0,4.0,0.0...|

+-------------+-------+--------+-------+-------+------+-------+----------+-----------+----------+----------+-----------------+------+----+----------+---------+-------+----------+----------+--------+-------+--------------------+

+-------------+-------+--------+-------+-------+------+-------+----------+-----------+----------+----------+-----------------+------+----+----------+---------+-------+----------+----------+--------+-------+--------------------+-----+

|creditability|balance|duration|history|purpose|amount|savings|employment|instPercent|sexMarried|guarantors|residenceDuration|assets| age|concCredit|apartment|credits|occupation|dependents|hasPhone|foreign| features|label|

+-------------+-------+--------+-------+-------+------+-------+----------+-----------+----------+----------+-----------------+------+----+----------+---------+-------+----------+----------+--------+-------+--------------------+-----+

| 1.0| 0.0| 18.0| 4.0| 2.0|1049.0| 0.0| 1.0| 4.0| 1.0| 0.0| 3.0| 1.0|21.0| 2.0| 0.0| 0.0| 2.0| 0.0| 0.0| 0.0|(20,[1,2,3,4,6,7,...| 0.0|

| 1.0| 0.0| 9.0| 4.0| 0.0|2799.0| 0.0| 2.0| 2.0| 2.0| 0.0| 1.0| 0.0|36.0| 2.0| 0.0| 1.0| 2.0| 1.0| 0.0| 0.0|(20,[1,2,4,6,7,8,...| 0.0|

| 1.0| 1.0| 12.0| 2.0| 9.0| 841.0| 1.0| 3.0| 2.0| 1.0| 0.0| 3.0| 0.0|23.0| 2.0| 0.0| 0.0| 1.0| 0.0| 0.0| 0.0|[1.0,12.0,2.0,9.0...| 0.0|

| 1.0| 0.0| 12.0| 4.0| 0.0|2122.0| 0.0| 2.0| 3.0| 2.0| 0.0| 1.0| 0.0|39.0| 2.0| 0.0| 1.0| 1.0| 1.0| 0.0| 1.0|[0.0,12.0,4.0,0.0...| 0.0|

| 1.0| 0.0| 12.0| 4.0| 0.0|2171.0| 0.0| 2.0| 4.0| 2.0| 0.0| 3.0| 1.0|38.0| 0.0| 1.0| 1.0| 1.0| 0.0| 0.0| 1.0|[0.0,12.0,4.0,0.0...| 0.0|

| 1.0| 0.0| 10.0| 4.0| 0.0|2241.0| 0.0| 1.0| 1.0| 2.0| 0.0| 2.0| 0.0|48.0| 2.0| 0.0| 1.0| 1.0| 1.0| 0.0| 1.0|[0.0,10.0,4.0,0.0...| 0.0|

+-------------+-------+--------+-------+-------+------+-------+----------+-----------+----------+----------+-----------------+------+----+----------+---------+-------+----------+----------+--------+-------+--------------------+-----+
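In the last table every row has creditability 1.0 but label 0.0. That is consistent with `StringIndexer`'s default ordering, which (as documented for its `frequencyDesc` setting) gives index 0.0 to the most frequent value. The sketch below imitates that rule in plain Scala; it is not Spark's implementation, `LabelIndexSketch` and `indexByFrequency` are hypothetical names, the alphabetical tie-break is my own choice, and the second input is made-up data.

```scala
object LabelIndexSketch {
  /** Map each distinct value to an index, most frequent value first. */
  def indexByFrequency(values: Seq[String]): Map[String, Double] =
    values.groupBy(identity).toSeq
      .sortBy { case (v, occurrences) => (-occurrences.size, v) } // ties broken by value
      .zipWithIndex
      .map { case ((v, _), i) => v -> i.toDouble }
      .toMap

  def main(args: Array[String]): Unit = {
    // The six sample rows all have creditability 1.0
    println(indexByFrequency(Seq.fill(6)("1.0")))
    // A made-up mix: three records with 1.0 and one with 0.0
    println(indexByFrequency(Seq("1.0", "1.0", "0.0", "1.0")))
  }
}
```

So the label column is an index by frequency rather than a copy of creditability, which is worth keeping in mind when reading predictions on the full data set.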
