Automatic Poetry Writing with TensorFlow

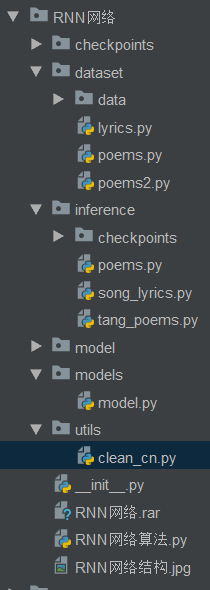

1. Directory structure

2. Entry point

    # coding=utf-8
    """
    Note: an RNN consumes sequential data.

    RNN network: built mainly from multiple LSTM cells and trained by unrolling
    the network through time with the BPTT (backpropagation through time) algorithm.

    LSTM: contains input, forget and output gates, and is a multi-input,
    multi-output structure.

    The LSTM gating mitigates the vanishing-gradient problem.
    """
    # import numpy as np
    # import tensorflow as tf
    # from .models.model import rnn_model
    # from .dataset.poems import process_poems, generate_batch
    import argparse
    import sys

    sys.path.append(r'D:\study\python-数据分析\深度学习\RNN网络\inference')


    def parse_args():
        """
        Parse the command-line arguments.

        :return: the parsed argument namespace
        """
        # Program description
        parser = argparse.ArgumentParser(description='Intelligence Poem and Lyric Writer.')

        help_ = 'you can set this value in terminal --write value can be poem or lyric.'
        parser.add_argument('-w', '--write', default='poem', choices=['poem', 'lyric'], help=help_)

        help_ = 'choose to train or generate.'
        # Train
        parser.add_argument('--train', dest='train', action='store_true', help=help_)
        # Generate (test)
        parser.add_argument('--no-train', dest='train', action='store_false', help=help_)
        parser.set_defaults(train=False)

        args_ = parser.parse_args()
        return args_


    if __name__ == '__main__':
        args = parse_args()
        if args.write == 'poem':
            from inference import tang_poems
            if args.train:
                tang_poems.main(True)   # train
            else:
                tang_poems.main(False)  # generate (test)
        elif args.write == 'lyric':
            from inference import song_lyrics
            print(args.train)
            if args.train:
                song_lyrics.main(True)
            else:
                song_lyrics.main(False)
        else:
            print('[INFO] write option can only be poem or lyric right now.')
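
The entry script registers `--train` and `--no-train` against the same destination, so whichever flag is given last decides the mode and the default is to generate. The sketch below reproduces just that flag setup and parses a few explicit argument lists instead of the real command line; `build_parser` is a hypothetical helper name used only for illustration.

```python
import argparse


def build_parser():
    # Same flag layout as the entry script, reduced to the essentials.
    parser = argparse.ArgumentParser(description='flag demo')
    parser.add_argument('-w', '--write', default='poem', choices=['poem', 'lyric'])
    # Both switches write to args.train; one stores True, the other False.
    parser.add_argument('--train', dest='train', action='store_true')
    parser.add_argument('--no-train', dest='train', action='store_false')
    parser.set_defaults(train=False)
    return parser


parser = build_parser()
default_args = parser.parse_args([])                        # no flags at all
train_args = parser.parse_args(['--train'])                 # train poems
lyric_args = parser.parse_args(['-w', 'lyric', '--no-train'])

print(default_args.write, default_args.train)
print(train_args.write, train_args.train)
print(lyric_args.write, lyric_args.train)
```

With this layout, running the script with no arguments generates a poem, and training has to be requested explicitly with `--train`.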

3. tang_poems.py

    # -*- coding: utf-8 -*-
    # file: tang_poems.py
    import collections
    import os
    import sys
    import numpy as np
    import tensorflow as tf
    from models.model import rnn_model
    from dataset.poems import process_poems, generate_batch
    import heapq

    tf.app.flags.DEFINE_integer('batch_size', 64, 'batch size.')
    tf.app.flags.DEFINE_float('learning_rate', 0.01, 'learning rate.')

    # set this to 'main.py' relative path
    tf.app.flags.DEFINE_string('checkpoints_dir', os.path.abspath('./checkpoints/poems/'), 'checkpoints save path.')
    tf.app.flags.DEFINE_string('file_path', os.path.abspath('./dataset/data/poems.txt'), 'file name of poems.')

    tf.app.flags.DEFINE_string('model_prefix', 'poems', 'model save prefix.')

    tf.app.flags.DEFINE_integer('epochs', 50, 'train how many epochs.')

    FLAGS = tf.app.flags.FLAGS

    start_token = 'G'
    end_token = 'E'


    def run_training():
        # Make sure the checkpoint directories exist
        if not os.path.exists(os.path.dirname(FLAGS.checkpoints_dir)):
            os.mkdir(os.path.dirname(FLAGS.checkpoints_dir))
        if not os.path.exists(FLAGS.checkpoints_dir):
            os.mkdir(FLAGS.checkpoints_dir)

        # 1. Preprocess the poem corpus
        poems_vector, word_to_int, vocabularies = process_poems(FLAGS.file_path)
        # 2. Build the batches used for training
        batches_inputs, batches_outputs = generate_batch(FLAGS.batch_size, poems_vector, word_to_int)

        input_data = tf.placeholder(tf.int32, [FLAGS.batch_size, None])
        output_targets = tf.placeholder(tf.int32, [FLAGS.batch_size, None])

        # 3. Build the model
        end_points = rnn_model(model='lstm', input_data=input_data, output_data=output_targets, vocab_size=len(
            vocabularies), rnn_size=128, num_layers=2, batch_size=64, learning_rate=FLAGS.learning_rate)

        saver = tf.train.Saver(tf.global_variables())
        init_op = tf.group(tf.global_variables_initializer(), tf.local_variables_initializer())

        # 4. Train
        with tf.Session() as sess:
            # sess = tf_debug.LocalCLIDebugWrapperSession(sess=sess)
            # sess.add_tensor_filter("has_inf_or_nan", tf_debug.has_inf_or_nan)
            sess.run(init_op)

            start_epoch = 0
            checkpoint = tf.train.latest_checkpoint(FLAGS.checkpoints_dir)
            if checkpoint:
                saver.restore(sess, checkpoint)
                print("[INFO] restore from the checkpoint {0}".format(checkpoint))
                start_epoch += int(checkpoint.split('-')[-1])
            print('[INFO] start training...')
            try:
                for epoch in range(start_epoch, FLAGS.epochs):
                    n = 0
                    n_chunk = len(poems_vector) // FLAGS.batch_size
                    for batch in range(n_chunk):
                        loss, _, _ = sess.run([
                            end_points['total_loss'],
                            end_points['last_state'],
                            end_points['train_op']
                        ], feed_dict={input_data: batches_inputs[n], output_targets: batches_outputs[n]})
                        n += 1
                        print('[INFO] Epoch: %d , batch: %d , training loss: %.6f' % (epoch, batch, loss))
                    if epoch % 6 == 0:
                        # Periodic checkpoints go to ./model/, which is where gen_poem() loads from;
                        # only the interrupt handler below writes to FLAGS.checkpoints_dir.
                        saver.save(sess, './model/', global_step=epoch)
                        # saver.save(sess, os.path.join(FLAGS.checkpoints_dir, FLAGS.model_prefix), global_step=epoch)
            except KeyboardInterrupt:
                print('[INFO] Interrupt manually, try saving checkpoint for now...')
                saver.save(sess, os.path.join(FLAGS.checkpoints_dir, FLAGS.model_prefix), global_step=epoch)
                print('[INFO] Last epoch were saved, next time will start from epoch {}.'.format(epoch))


    def to_word(predict, vocabs):
        # Sample an index in proportion to the predicted probabilities
        t = np.cumsum(predict)
        s = np.sum(predict)
        sample = int(np.searchsorted(t, np.random.rand() * s))
        # predict has one more entry than vocabs, so clamp with >= to avoid an IndexError
        if sample >= len(vocabs):
            sample = len(vocabs) - 1
        return vocabs[sample]


    def gen_poem(begin_word):
        batch_size = 1
        print('[INFO] loading corpus from %s' % FLAGS.file_path)
        poems_vector, word_int_map, vocabularies = process_poems(FLAGS.file_path)

        input_data = tf.placeholder(tf.int32, [batch_size, None])

        end_points = rnn_model(model='lstm', input_data=input_data, output_data=None, vocab_size=len(
            vocabularies), rnn_size=128, num_layers=2, batch_size=64, learning_rate=FLAGS.learning_rate)

        saver = tf.train.Saver(tf.global_variables())
        init_op = tf.group(tf.global_variables_initializer(), tf.local_variables_initializer())
        with tf.Session() as sess:
            sess.run(init_op)

            # checkpoint = tf.train.latest_checkpoint(FLAGS.checkpoints_dir)
            checkpoint = tf.train.latest_checkpoint('./model/')
            # saver.restore(sess, checkpoint)
            # Hard-coded to the checkpoint saved at epoch 24;
            # restore `checkpoint` instead to load the most recent one.
            saver.restore(sess, './model/-24')

            # Feed the start token to obtain the first prediction and state
            x = np.array([list(map(word_int_map.get, start_token))])

            [predict, last_state] = sess.run([end_points['prediction'], end_points['last_state']],
                                             feed_dict={input_data: x})
            if begin_word:
                word = begin_word
            else:
                word = to_word(predict, vocabularies)
            poem = ''
            while word != end_token:
                print('running')
                poem += word
                x = np.zeros((1, 1), dtype=np.int32)
                x[0, 0] = word_int_map[word]
                # Feed the previous state back in so generation continues the sequence
                [predict, last_state] = sess.run([end_points['prediction'], end_points['last_state']],
                                                 feed_dict={input_data: x, end_points['initial_state']: last_state})
                word = to_word(predict, vocabularies)
            # word = words[np.argmax(probs_)]
            return poem


    def pretty_print_poem(poem):
        # Print one sentence per line, skipping fragments of 10 characters or fewer
        poem_sentences = poem.split('。')
        for s in poem_sentences:
            if s != '' and len(s) > 10:
                print(s + '。')


    def main(is_train):
        if is_train:
            print('[INFO] train tang poem...')
            run_training()
        else:
            print('[INFO] write tang poem...')

            begin_word = input('Enter the first character: ')
            # begin_word = '我'
            poem2 = gen_poem(begin_word)
            pretty_print_poem(poem2)


    if __name__ == '__main__':
        tf.app.run()
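
`to_word` picks the next character by building a cumulative sum of the predicted probabilities and searching it for a uniform random threshold, which is inverse-CDF sampling. The following is a minimal pure-Python sketch of the same idea, using `itertools.accumulate` and `bisect.bisect_left` as stand-ins for `np.cumsum` and `np.searchsorted`; `sample_index` and the toy `weights` are illustrative names, not part of the project.

```python
import bisect
import collections
import itertools
import random


def sample_index(weights, rng=random):
    """Draw an index with probability proportional to weights[index]."""
    cumulative = list(itertools.accumulate(weights))  # running totals
    threshold = rng.random() * cumulative[-1]         # uniform in [0, total)
    index = bisect.bisect_left(cumulative, threshold)
    # Clamp defensively, mirroring the bounds check in to_word.
    return min(index, len(weights) - 1)


weights = [0.1, 0.7, 0.2]
rng = random.Random(0)  # fixed seed so the demo is repeatable
counts = collections.Counter(sample_index(weights, rng) for _ in range(2000))
print(sorted(counts.items()))
```

Sampling rather than always taking the arg-max (the commented-out line in `gen_poem`) keeps the generated poems from collapsing onto the same most likely characters every time.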

4. poems.py in inference

    import numpy as np
    import tensorflow as tf
    from models.model import rnn_model
    from dataset.poems import process_poems, generate_batch

    tf.app.flags.DEFINE_integer('batch_size', 64, 'batch size = ?')
    tf.app.flags.DEFINE_float('learning_rate', 0.01, 'learning_rate')
    tf.app.flags.DEFINE_string('check_pointss_dir', './model/', 'check_pointss_dir')
    tf.app.flags.DEFINE_string('file_path', './data/.txt', 'file_path')
    tf.app.flags.DEFINE_integer('epoch', 50, 'train epoch')

    start_token = 'G'
    end_token = 'E'
    FLAGS = tf.app.flags.FLAGS


    def run_training():
        poems_vector, word_to_int, vocabularies = process_poems(FLAGS.file_path)
        batch_inputs, batch_outputs = generate_batch(FLAGS.batch_size, poems_vector, word_to_int)

        input_data = tf.placeholder(tf.int32, [FLAGS.batch_size, None])
        output_targets = tf.placeholder(tf.int32, [FLAGS.batch_size, None])

        # Keyword names must match the rnn_model signature (input_data, rnn_size)
        end_points = rnn_model(model='lstm', input_data=input_data, output_data=output_targets,
                               vocab_size=len(vocabularies), rnn_size=128, num_layers=2,
                               batch_size=64, learning_rate=0.01)


    def main(is_train):
        if is_train:
            print('training')
            run_training()
        else:
            print('test')
            begin_word = input('word')


    if __name__ == '__main__':
        tf.app.run()

5. model.py

    # -*- coding: utf-8 -*-
    # file: model.py
    import tensorflow as tf
    import numpy as np


    def rnn_model(model, input_data, output_data, vocab_size, rnn_size=128, num_layers=2, batch_size=64,
                  learning_rate=0.01):
        """
        construct rnn seq2seq model.

        :param model: cell type, one of 'rnn', 'gru' or 'lstm'
        :param input_data: input data placeholder
        :param output_data: output data placeholder (None when generating)
        :param vocab_size: number of entries in the vocabulary
        :param rnn_size: number of hidden units per layer
        :param num_layers: number of stacked recurrent layers
        :param batch_size: batch size used for the initial state when training
        :param learning_rate: learning rate of the Adam optimizer
        :return: dict of end points (tensors and ops)
        """
        end_points = {}

        # 1. Choose the cell type
        if model == 'rnn':
            cell_fun = tf.contrib.rnn.BasicRNNCell
        elif model == 'gru':
            cell_fun = tf.contrib.rnn.GRUCell
        elif model == 'lstm':
            cell_fun = tf.contrib.rnn.BasicLSTMCell

        # Build one cell object per layer. Reusing a single object ([cell] * num_layers)
        # makes the layers share variables, which later TensorFlow 1.x releases reject.
        # state_is_tuple is left at its default because only the LSTM cell accepts it.
        cells = [cell_fun(rnn_size) for _ in range(num_layers)]
        cell = tf.contrib.rnn.MultiRNNCell(cells, state_is_tuple=True)

        # 2. Initialize the recurrent state
        if output_data is not None:
            initial_state = cell.zero_state(batch_size, tf.float32)
        else:
            initial_state = cell.zero_state(1, tf.float32)

        # 3. Keep the embedding lookup on the CPU
        with tf.device("/cpu:0"):
            embedding = tf.get_variable('embedding', initializer=tf.random_uniform(
                [vocab_size + 1, rnn_size], -1.0, 1.0))
            inputs = tf.nn.embedding_lookup(embedding, input_data)

        # [batch_size, ?, rnn_size] = [64, ?, 128]
        outputs, last_state = tf.nn.dynamic_rnn(cell, inputs, initial_state=initial_state)
        output = tf.reshape(outputs, [-1, rnn_size])

        # 4. Output projection
        weights = tf.Variable(tf.truncated_normal([rnn_size, vocab_size + 1]))
        bias = tf.Variable(tf.zeros(shape=[vocab_size + 1]))
        logits = tf.nn.bias_add(tf.matmul(output, weights), bias=bias)
        # [?, vocab_size+1]

        # 5. Loss and optimizer
        if output_data is not None:
            # output_data must be one-hot encoded
            labels = tf.one_hot(tf.reshape(output_data, [-1]), depth=vocab_size + 1)
            # labels shape: [?, vocab_size+1]
            loss = tf.nn.softmax_cross_entropy_with_logits(labels=labels, logits=logits)
            # loss shape: [?] (one value per time step)
            total_loss = tf.reduce_mean(loss)
            train_op = tf.train.AdamOptimizer(learning_rate).minimize(total_loss)

            end_points['initial_state'] = initial_state
            end_points['output'] = output
            end_points['train_op'] = train_op
            end_points['total_loss'] = total_loss
            end_points['loss'] = loss
            end_points['last_state'] = last_state
        else:
            prediction = tf.nn.softmax(logits)

            end_points['initial_state'] = initial_state
            end_points['last_state'] = last_state
            end_points['prediction'] = prediction

        return end_points
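
The loss step in `rnn_model` applies softmax cross-entropy to the logits of every time step and then averages the results. As a rough illustration of what that computes, here is a pure-Python sketch on plain lists; it is not the TensorFlow implementation, and `softmax`, `cross_entropy` and `mean_loss` are names made up for this example.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def cross_entropy(logits, target):
    """Negative log-probability assigned to the correct class index."""
    return -math.log(softmax(logits)[target])


def mean_loss(batch_logits, targets):
    """Average the per-step losses, as tf.reduce_mean does for the model."""
    losses = [cross_entropy(row, t) for row, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)


# Three time steps over a 4-symbol vocabulary with uninformative (all-zero) logits.
uniform_loss = mean_loss([[0.0] * 4] * 3, [0, 1, 3])
print(uniform_loss, math.log(4))
```

An untrained model that spreads probability evenly over a vocabulary of size V starts near a loss of ln V, which gives a useful baseline when reading the training log.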

6. poems.py in dataset

    # -*- coding: utf-8 -*-
    # file: poems.py
    import collections
    import os
    import sys
    import numpy as np

    start_token = 'G'
    end_token = 'E'


    def process_poems(file_name):
        """
        Preprocess the poem corpus.

        :param file_name: path of the corpus file
        :return: (poems as lists of ids, character-to-id map, vocabulary tuple)
        """
        # The collected poems
        poems = []
        with open(file_name, "r", encoding='utf-8', ) as f:
            for line in f.readlines():
                try:
                    title, content = line.strip().split(':')
                    content = content.replace(' ', '')
                    # Skip poems with unwanted symbols (half-width or full-width) or dirty data
                    if '_' in content or '(' in content or '(' in content or '《' in content or '[' in content or \
                            start_token in content or end_token in content:
                        continue
                    if len(content) < 5 or len(content) > 79:
                        continue
                    content = start_token + content + end_token
                    poems.append(content)
                except ValueError as e:
                    pass
        # Sort the poems by length (the key must use the lambda argument, not `line`)
        poems = sorted(poems, key=lambda l: len(l))

        # Count how often each character occurs
        all_words = []
        for poem in poems:
            all_words += [word for word in poem]
        # counter maps each character to its frequency
        counter = collections.Counter(all_words)
        count_pairs = sorted(counter.items(), key=lambda x: -x[1])
        words, _ = zip(*count_pairs)

        # Keep the most frequent characters (here all of them) and append a space for padding
        words = words[:len(words)] + (' ',)
        # Map each character to an integer id
        word_int_map = dict(zip(words, range(len(words))))
        poems_vector = [list(map(lambda word: word_int_map.get(word, len(words)), poem)) for poem in poems]

        return poems_vector, word_int_map, words


    def generate_batch(batch_size, poems_vec, word_to_int):
        # Train on batch_size (64) poems at a time
        n_chunk = len(poems_vec) // batch_size
        x_batches = []
        y_batches = []
        for i in range(n_chunk):
            start_index = i * batch_size
            end_index = start_index + batch_size

            batches = poems_vec[start_index:end_index]
            # Length of the longest poem in this batch
            length = max(map(len, batches))
            # Allocate a batch of that width, filled with the id of the space character
            x_data = np.full((batch_size, length), word_to_int[' '], np.int32)
            for row in range(batch_size):
                # Each row is one poem, copied over the padding
                x_data[row, :len(batches[row])] = batches[row]
            y_data = np.copy(x_data)
            # y is x shifted one position to the left (the next character)
            y_data[:, :-1] = x_data[:, 1:]
            """
            x_data             y_data
            [6,2,4,6,9]       [2,4,6,9,9]
            [1,4,2,8,5]       [4,2,8,5,5]
            """
            x_batches.append(x_data)
            y_batches.append(y_data)
        return x_batches, y_batches
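
`generate_batch` pads every poem in a batch to the length of the longest one and then builds the targets by shifting the inputs one step to the left, so the model learns to predict the next character. Below is a small pure-Python sketch of that padding and shifting on toy id lists; `make_batch` and `pad_id` are illustrative names, and the real code uses NumPy arrays and the id of the space character instead.

```python
def make_batch(poems, pad_id):
    """Pad id sequences to a common length and build next-character targets."""
    length = max(len(poem) for poem in poems)
    # Inputs: each poem followed by padding up to the longest poem.
    x_rows = [poem + [pad_id] * (length - len(poem)) for poem in poems]
    # Targets: inputs shifted left by one; the last column keeps its old value,
    # which matches y_data[:, :-1] = x_data[:, 1:] in generate_batch.
    y_rows = [row[1:] + row[-1:] for row in x_rows]
    return x_rows, y_rows


x_rows, y_rows = make_batch([[6, 2, 4, 6, 9], [1, 4, 2]], pad_id=0)
for x_row, y_row in zip(x_rows, y_rows):
    print(x_row, y_row)
```

Because `process_poems` sorts the poems by length first, neighbouring poems in a batch have similar lengths and little padding is wasted.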

7. clean_cn.py

    # -*- coding: utf-8 -*-
    # file: clean_cn.py
    """
    This script cleans a Chinese corpus.
    You can choose the cleaning level:

    level='all': remove every character that is not Chinese, including punctuation
    level='normal': produce a corpus for normal use, keeping letters and digits
    level='clean': remove everything except Chinese characters and Chinese punctuation

    Besides, if you want to remove complex (traditional) Chinese characters, set:
    simple_only=True
    """
    import numpy as np
    import os
    import string

    # Full-width (Chinese) punctuation and its half-width (English) counterparts
    cn_punctuation_set = [',', '。', '!', '?', '“', '”', '、']
    en_punctuation_set = [',', '.', '?', '!', '"', '"']


    def clean_cn_corpus(file_name, clean_level='all', simple_only=True, is_save=True):
        """
        clean Chinese corpus.

        :param file_name: path of the corpus file
        :param clean_level: 'all', 'normal' or 'clean'
        :param simple_only: declared for filtering complex characters (unused below)
        :param is_save: whether to write the cleaned corpus to disk
        :return: clean corpus in list type.
        """
        if os.path.dirname(file_name):
            base_dir = os.path.dirname(file_name)
        else:
            print('not set dir. please check')
            # Fall back to the current directory so base_dir is always defined
            base_dir = '.'

        save_file = os.path.join(base_dir, os.path.basename(file_name).split('.')[0] + '_cleaned.txt')
        with open(file_name, 'r', encoding='utf-8') as f:
            clean_content = []
            for l in f.readlines():
                l = l.strip()
                if l == '':
                    pass
                else:
                    l = list(l)
                    should_remove_words = []
                    for w in l:
                        if not should_reserve(w, clean_level):
                            should_remove_words.append(w)
                    clean_line = [c for c in l if c not in should_remove_words]
                    clean_line = ''.join(clean_line)
                    if clean_line != '':
                        clean_content.append(clean_line)
        if is_save:
            with open(save_file, 'w+', encoding='utf-8') as f:
                for l in clean_content:
                    f.write(l + '\n')
            print('[INFO] cleaned file have been saved to %s.' % save_file)
        return clean_content


    def should_reserve(w, clean_level):
        if w == ' ':
            return True
        else:
            if clean_level == 'all':
                # only reserve Chinese characters
                if w in cn_punctuation_set or w in string.punctuation or is_alphabet(w):
                    return False
                else:
                    return is_chinese(w)
            elif clean_level == 'normal':
                # reserve Chinese characters, English alphabet, number
                if is_chinese(w) or is_alphabet(w) or is_number(w):
                    return True
                elif w in cn_punctuation_set or w in en_punctuation_set:
                    return True
                else:
                    return False
            elif clean_level == 'clean':
                if is_chinese(w):
                    return True
                elif w in cn_punctuation_set:
                    return True
                else:
                    return False
            else:
                # Raising a plain string is a TypeError in Python 3; raise an exception instead
                raise ValueError("clean_level not support %s, please set for all, normal, clean" % clean_level)


    def is_chinese(uchar):
        """is chinese"""
        if u'\u4e00' <= uchar <= u'\u9fa5':
            return True
        else:
            return False


    def is_number(uchar):
        """is number"""
        if u'\u0030' <= uchar <= u'\u0039':
            return True
        else:
            return False


    def is_alphabet(uchar):
        """is alphabet"""
        if (u'\u0041' <= uchar <= u'\u005a') or (u'\u0061' <= uchar <= u'\u007a'):
            return True
        else:
            return False


    def semi_angle_to_sbc(uchar):
        """Convert a half-width character to full-width."""
        inside_code = ord(uchar)
        if inside_code < 0x0020 or inside_code > 0x7e:
            return uchar
        if inside_code == 0x0020:
            inside_code = 0x3000
        else:
            inside_code += 0xfee0
        return chr(inside_code)


    def sbc_to_semi_angle(uchar):
        """Convert a full-width character to half-width."""
        inside_code = ord(uchar)
        if inside_code == 0x3000:
            inside_code = 0x0020
        else:
            inside_code -= 0xfee0
        if inside_code < 0x0020 or inside_code > 0x7e:
            return uchar
        return chr(inside_code)
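
The two conversion helpers at the end of clean_cn.py rely on a fixed offset: printable ASCII characters 0x21 to 0x7E map to their full-width forms by adding 0xFEE0, and the space maps to the ideographic space U+3000. The sketch below restates that mapping in a compact, self-contained form and round-trips a short string; `to_full_width` and `to_half_width` are illustrative names, not the functions from the script.

```python
FULL_WIDTH_OFFSET = 0xFEE0  # distance between '!' (0x21) and its full-width form (0xFF01)


def to_full_width(ch):
    """Map one half-width ASCII character to its full-width form."""
    code = ord(ch)
    if code == 0x20:
        return chr(0x3000)  # the space has its own full-width code point
    if 0x21 <= code <= 0x7E:
        return chr(code + FULL_WIDTH_OFFSET)
    return ch  # anything else is returned unchanged


def to_half_width(ch):
    """Map one full-width character back to half-width ASCII."""
    code = ord(ch)
    if code == 0x3000:
        return ' '
    if 0xFF01 <= code <= 0xFF5E:
        return chr(code - FULL_WIDTH_OFFSET)
    return ch


text = 'AI, 2024!'
wide = ''.join(to_full_width(ch) for ch in text)
narrow = ''.join(to_half_width(ch) for ch in wide)
print(wide)
print(narrow)
```

Normalizing width this way before cleaning means a character such as a full-width comma and its ASCII counterpart are treated consistently by the punctuation checks.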
