HiBench成长笔记——(3) HiBench测试Spark

很多内容之前的博客已经提过,这里不再赘述,详细内容参照本系列前面的博客:https://www.cnblogs.com/ratels/p/10970905.html

创建并修改配置文件conf/spark.conf

cp conf/spark.conf.template conf/spark.conf

参考:https://github.com/Intel-bigdata/HiBench/blob/master/docs/run-sparkbench.md,设置属性为下列值

# Spark home hibench.spark.home /opt/cloudera/parcels/CDH--.cdh5./lib/spark # Spark master # standalone mode: spark://xxx:7077 # YARN mode: yarn-client hibench.spark.master yarn-client

执行脚本

bin/workloads/micro/wordcount/prepare/prepare.sh

返回信息

[root@node1 prepare]# ./prepare.sh patching args= Parsing conf: /home/cf/app/HiBench-master/conf/hadoop.conf Parsing conf: /home/cf/app/HiBench-master/conf/hibench.conf Parsing conf: /home/cf/app/HiBench-master/conf/spark.conf Parsing conf: /home/cf/app/HiBench-master/conf/workloads/micro/wordcount.conf probe -.cdh5./lib/hadoop/../../jars/hadoop-mapreduce-client-jobclient--cdh5.14.2-tests.jar start HadoopPrepareWordcount bench hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -rm -r -skipTrash hdfs://node1:8020/HiBench/Wordcount/Input Deleted hdfs://node1:8020/HiBench/Wordcount/Input Submit MapReduce Job: /opt/cloudera/parcels/CDH--.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn jar /opt/cloudera/parcels/CDH--.cdh5./lib/hadoop/../../jars/hadoop-mapreduce-examples--cdh5. -D mapreduce.randomtextwriter.bytespermap= -D mapreduce.job.maps= -D mapreduce.job.reduces= hdfs://node1:8020/HiBench/Wordcount/Input The job took seconds. finish HadoopPrepareWordcount bench

执行脚本

bin/workloads/micro/wordcount/spark/run.sh

返回信息

[root@node1 spark]# ./run.sh patching args= Parsing conf: /home/cf/app/HiBench-master/conf/hadoop.conf Parsing conf: /home/cf/app/HiBench-master/conf/hibench.conf Parsing conf: /home/cf/app/HiBench-master/conf/spark.conf Parsing conf: /home/cf/app/HiBench-master/conf/workloads/micro/wordcount.conf probe -.cdh5./lib/hadoop/../../jars/hadoop-mapreduce-client-jobclient--cdh5.14.2-tests.jar start ScalaSparkWordcount bench hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -rm -r -skipTrash hdfs://node1:8020/HiBench/Wordcount/Output Deleted hdfs://node1:8020/HiBench/Wordcount/Output hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -du -s hdfs://node1:8020/HiBench/Wordcount/Input Export env: SPARKBENCH_PROPERTIES_FILES=/home/cf/app/HiBench-master/report/wordcount/spark/conf/sparkbench/sparkbench.conf Export env: HADOOP_CONF_DIR=/etc/hadoop/conf.cloudera.yarn Submit Spark job: /opt/cloudera/parcels/CDH--.cdh5./lib/spark/bin/spark-submit --properties- --executor-cores --executor-memory 4g /home/cf/app/HiBench-master/sparkbench/assembly/target/sparkbench-assembly-7.1-SNAPSHOT-dist.jar hdfs://node1:8020/HiBench/Wordcount/Input hdfs://node1:8020/HiBench/Wordcount/Output // :: INFO remote.RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon. finish ScalaSparkWordcount bench

查看report/hibench.report

Type Date Time Input_data_size Duration(s) Throughput(bytes/s) Throughput/node HadoopWordcount -- :: ScalaSparkWordcount -- ::

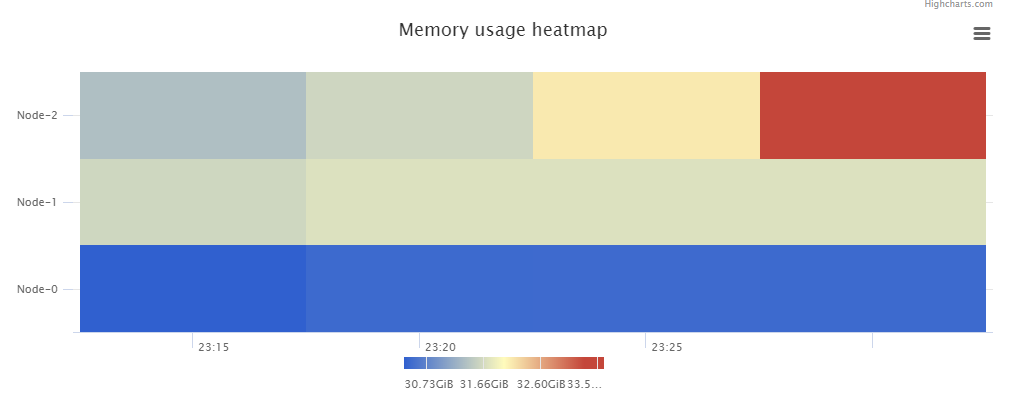

\report\wordcount\spark下有多个文件:monitor.log是原始日志,bench.log是scheduler.DAGScheduler和scheduler.TaskSetManager信息,monitor.html可视化了系统的性能信息,\conf\wordcount.conf、\conf\sparkbench\spark.conf和\conf\sparkbench\sparkbench.conf是本次任务的环境变量

monitor.html中包含了Memory usage heatmap等统计图:

根据官方文档 https://github.com/Intel-bigdata/HiBench/blob/master/docs/run-sparkbench.md ,还可以修改 hibench.scale.profile 调整测试的数据规模,修改 hibench.default.map.parallelism 和 hibench.default.shuffle.parallelism 调整并行化,修改hibench.yarn.executor.num、hibench.yarn.executor.cores、spark.executor.memory和spark.driver.memory控制Spark executor 的数量、核数、内存和driver的内存。

HiBench成长笔记——(3) HiBench测试Spark的更多相关文章

- HiBench成长笔记——(1) HiBench概述

测试分类 HiBench共计19个测试方向,可大致分为6个测试类别:分别是micro,ml(机器学习),sql,graph,websearch和streaming. 2.1 micro Benchma ...

- HiBench成长笔记——(4) HiBench测试Spark SQL

很多内容之前的博客已经提过,这里不再赘述,详细内容参照本系列前面的博客:https://www.cnblogs.com/ratels/p/10970905.html 和 https://www.cnb ...

- HiBench成长笔记——(6) HiBench测试结果分析

Scan Join Aggregation Scan Join Aggregation Scan Join Aggregation Scan Join Aggregation Scan Join Ag ...

- HiBench成长笔记——(5) HiBench-Spark-SQL-Scan源码分析

run.sh #!/bin/bash # Licensed to the Apache Software Foundation (ASF) under one or more # contributo ...

- HiBench成长笔记——(2) CentOS部署安装HiBench

安装Scala 使用spark-shell命令进入shell模式,查看spark版本和Scala版本: 下载Scala2.10.5 wget https://downloads.lightbend.c ...

- HiBench成长笔记——(7) 阅读《The HiBench Benchmark Suite: Characterization of the MapReduce-Based Data Analysis》

<The HiBench Benchmark Suite: Characterization of the MapReduce-Based Data Analysis>内容精选 We th ...

- HiBench成长笔记——(10) 分析源码execute_with_log.py

#!/usr/bin/env python2 # Licensed to the Apache Software Foundation (ASF) under one or more # contri ...

- HiBench成长笔记——(9) 分析源码monitor.py

monitor.py 是主监控程序,将监控数据写入日志,并统计监控数据生成HTML统计展示页面: #!/usr/bin/env python2 # Licensed to the Apache Sof ...

- HiBench成长笔记——(8) 分析源码workload_functions.sh

workload_functions.sh 是测试程序的入口,粘连了监控程序 monitor.py 和 主运行程序: #!/bin/bash # Licensed to the Apache Soft ...

随机推荐

- SqlCacheDependency 缓存数据库依赖

启用SQL SERVER 通知 aspnet_regsql.exe -S <Server> -U <Username> -P <Password> -ed -d N ...

- 【转】Node.js到底是用来做什么的

Node.js的到底是用来做什么的 在阐述之前我想放一个链接,这是国外的一个大神,对于node.js非常好的一篇介绍的文章,英文比较好的朋友可以直接去阅读,本文也很大程度上参考了这篇文章,也同时感谢知 ...

- 19年读100本书之第二本--《OKR工作法》-克里斯蒂娜 沃特克

0,一句话概括书的内容? OKR(objective key result),即目标与关键结果. 1,我从这本书能得到什么? 2,核心内容是什么? 3,我要怎么做?

- nginx防盗链处理模块referer和secure_link模块

使用场景:某网站听过URI引用你的页面:当用户在网站点击url时:http头部会通过referer头部,将该网站当前页面的url带上,告诉服务本次请求是由这个页面发起的 思路:通过referer模块, ...

- 吴裕雄 Bootstrap 前端框架开发——Bootstrap 排版:引用(Blockquote)

<!DOCTYPE html> <html> <head> <meta charset="utf-8"> <title> ...

- tomcat启动报错failed to start component

严重: A child container failed during start java.util.concurrent.ExecutionException: org.apache.catali ...

- Fiddler抓包(基本使用方法、web+app端抓包、篡改数据、模拟低速)

1.HTTP代理原理图 http服务器代理:既是web服务器,又是web客户端 接口vs端口: 接口:包含地址和端口 端口:类似于USB接口 地址:127.0.0.1,端口默认:8888 ...

- Centos7 之 MariaDB(Mysql) root密码忘记的解决办法

MariaDB(Mysql) root密码忘记的解决办法 1.首先先关闭mariadb数据库的服务 # 关闭mariadb服务命令(mysql的话命令就是将mariadb换成mysql) [root@ ...

- Spring Schedule 实现定时任务

很多时候我们都需要为系统建立一个定时任务来帮我们做一些事情,SpringBoot 已经帮我们实现好了一个,我们只需要直接使用即可,当然你也可以不用 SpringBoot 自带的定时任务,整合 Quar ...

- 设计模式课程 设计模式精讲 13-2 享元模式coding

1 代码演练 1.1 代码演练1 1 代码演练 1.1 代码演练1 需求: 每周由随机部门经历做报告: 重点关注: a 该案例是单例模式和享元模式共同使用 b 外部传入的department是外部状态 ...