运行一个nodejs服务,先发布为deployment,然后创建service,让集群外可以访问

问题来源

海口-老男人 17:42:43

就是我要运行一个nodejs服务,先发布为deployment,然后创建service,让集群外可以访问

旧报纸 17:43:35

也就是 你的需求为 一个app 用delployment 发布 然后service 然后 代理 对吧

解决方案:

部署traefik

发布deployment pod

创建service

traefik 代理service

外部访问

环境

docker 19.03.5

kubernetes 1.17.2

traefik部署

为什么选择 traefik,抛弃nginx:

https://www.php.cn/nginx/422461.html

特别说明:traefik 2.1 版本更新了灰色发布和流量复制功能 为容器/微服务而生

traefik 官网写的都很不错了 附带一篇 快速入门博文:https://www.qikqiak.com/post/traefik-2.1-101/

建议部署traefik 不要用上述链接的方案

注意:这里 Traefik 是部署在 assembly Namespace 下,如果不是需要修改下面部署文件中的 Namespace 属性。

traefik-crd.yaml

在 traefik v2.1 版本后,开始使用 CRD(Custom Resource Definition)来完成路由配置等,所以需要提前创建 CRD 资源。

# cat traefik-crd.yaml

##########################################################################

#Author: zisefeizhu

#QQ: 2********0

#Date: 2020-03-16

#FileName: traefik-crd.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): 2020 All rights reserved

###########################################################################

## IngressRoute

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: ingressroutes.traefik.containo.us

spec:

scope: Namespaced

group: traefik.containo.us

version: v1alpha1

names:

kind: IngressRoute

plural: ingressroutes

singular: ingressroute

---

## IngressRouteTCP

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: ingressroutetcps.traefik.containo.us

spec:

scope: Namespaced

group: traefik.containo.us

version: v1alpha1

names:

kind: IngressRouteTCP

plural: ingressroutetcps

singular: ingressroutetcp

---

## Middleware

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: middlewares.traefik.containo.us

spec:

scope: Namespaced

group: traefik.containo.us

version: v1alpha1

names:

kind: Middleware

plural: middlewares

singular: middleware

---

## TLSOption

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: tlsoptions.traefik.containo.us

spec:

scope: Namespaced

group: traefik.containo.us

version: v1alpha1

names:

kind: TLSOption

plural: tlsoptions

singular: tlsoption

---

## TraefikService

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: traefikservices.traefik.containo.us

spec:

scope: Namespaced

group: traefik.containo.us

version: v1alpha1

names:

kind: TraefikService

plural: traefikservices

singular: traefikservice

创建RBAC权限

Traefik 需要一定的权限,所以这里提前创建好 Traefik ServiceAccount 并分配一定的权限。

# cat traefik-rbac.yaml

##########################################################################

#Author: zisefeizhu

#QQ: 2********0

#Date: 2020-03-16

#FileName: traefik-rbac.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): 2020 All rights reserved

###########################################################################

## ServiceAccount

apiVersion: v1

kind: ServiceAccount

metadata:

namespace: kube-system

name: traefik-ingress-controller

---

## ClusterRole

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: traefik-ingress-controller

rules:

- apiGroups: [""]

resources: ["services","endpoints","secrets"]

verbs: ["get","list","watch"]

- apiGroups: ["extensions"]

resources: ["ingresses"]

verbs: ["get","list","watch"]

- apiGroups: ["extensions"]

resources: ["ingresses/status"]

verbs: ["update"]

- apiGroups: ["traefik.containo.us"]

resources: ["middlewares"]

verbs: ["get","list","watch"]

- apiGroups: ["traefik.containo.us"]

resources: ["ingressroutes"]

verbs: ["get","list","watch"]

- apiGroups: ["traefik.containo.us"]

resources: ["ingressroutetcps"]

verbs: ["get","list","watch"]

- apiGroups: ["traefik.containo.us"]

resources: ["tlsoptions"]

verbs: ["get","list","watch"]

- apiGroups: ["traefik.containo.us"]

resources: ["traefikservices"]

verbs: ["get","list","watch"]

---

## ClusterRoleBinding

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: traefik-ingress-controller

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: traefik-ingress-controller

subjects:

- kind: ServiceAccount

name: traefik-ingress-controller

namespace: kube-system

创建traefik配置文件

由于 Traefik 配置很多,通过 CLI 定义不是很方便,一般时候选择将其配置选项放到配置文件中,然后存入 ConfigMap,将其挂入 traefik 中。

traefik-config.yaml

# cat traefik-config.yaml

##########################################################################

#Author: zisefeizhu

#QQ: 2********0

#Date: 2020-03-16

#FileName: traefik-config.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): 2020 All rights reserved

###########################################################################

kind: ConfigMap

apiVersion: v1

metadata:

name: traefik-config

namespace: kube-system

data:

traefik.yaml: |-

ping: "" ## 启用 Ping

serversTransport:

insecureSkipVerify: true ## Traefik 忽略验证代理服务的 TLS 证书

api:

insecure: true ## 允许 HTTP 方式访问 API

dashboard: true ## 启用 Dashboard

debug: false ## 启用 Debug 调试模式

metrics:

prometheus: "" ## 配置 Prometheus 监控指标数据,并使用默认配置

entryPoints:

web:

address: ":80" ## 配置 80 端口,并设置入口名称为 web

websecure:

address: ":443" ## 配置 443 端口,并设置入口名称为 websecure

redis:

address: ":663"

providers:

kubernetesCRD: "" ## 启用 Kubernetes CRD 方式来配置路由规则

kubernetesIngress: "" ## 启动 Kubernetes Ingress 方式来配置路由规则

log:

filePath: "" ## 设置调试日志文件存储路径,如果为空则输出到控制台

level: error ## 设置调试日志级别

format: json ## 设置调试日志格式

accessLog:

filePath: "" ## 设置访问日志文件存储路径,如果为空则输出到控制台

format: json ## 设置访问调试日志格式

bufferingSize: 0 ## 设置访问日志缓存行数

filters:

#statusCodes: ["200"] ## 设置只保留指定状态码范围内的访问日志

retryAttempts: true ## 设置代理访问重试失败时,保留访问日志

minDuration: 20 ## 设置保留请求时间超过指定持续时间的访问日志

fields: ## 设置访问日志中的字段是否保留(keep 保留、drop 不保留)

defaultMode: keep ## 设置默认保留访问日志字段

names: ## 针对访问日志特别字段特别配置保留模式

ClientUsername: drop

headers: ## 设置 Header 中字段是否保留

defaultMode: keep ## 设置默认保留 Header 中字段

names: ## 针对 Header 中特别字段特别配置保留模式

User-Agent: redact

Authorization: drop

Content-Type: keep

关键配置部分

entryPoints:

web:

address: ":80" ## 配置 80 端口,并设置入口名称为 web

websecure:

address: ":443" ## 配置 443 端口,并设置入口名称为 websecure

redis:

address: ":6379" ## 配置 6379 端口,并设置入口名称为 redis

部署traefik

采用daemonset这种方式部署Traefik,所以需要提前给节点设置 Label,这样当程序部署时 Pod 会自动调度到设置 Label 的节点上

traefik-deploy.yaml

kubectl label nodes 20.0.0.202 IngressProxy=true

#cat traefik-deploy.yaml

##########################################################################

#Author: zisefeizhu

#QQ: 2********0

#Date: 2020-03-16

#FileName: traefik-deploy.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): 2020 All rights reserved

###########################################################################

apiVersion: v1

kind: Service

metadata:

name: traefik

namespace: kube-system

spec:

type: NodePort

ports:

- name: web

port: 80

- name: websecure

port: 443

- name: admin

port: 8080

- name: redis

port: 6379

selector:

app: traefik

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: traefik-ingress-controller

namespace: kube-system

labels:

app: traefik

spec:

selector:

matchLabels:

app: traefik

template:

metadata:

name: traefik

labels:

app: traefik

spec:

serviceAccountName: traefik-ingress-controller

terminationGracePeriodSeconds: 1

containers:

- image: traefik:v2.1.2

name: traefik-ingress-lb

ports:

- name: web

containerPort: 80

hostPort: 80 ## 将容器端口绑定所在服务器的 80 端口

- name: websecure

containerPort: 443

hostPort: 443 ## 将容器端口绑定所在服务器的 443 端口

- name: redis

containerPort: 6379

hostPort: 6379

- name: admin

containerPort: 8080 ## Traefik Dashboard 端口

resources:

limits:

cpu: 2000m

memory: 1024Mi

requests:

cpu: 1000m

memory: 1024Mi

securityContext:

capabilities:

drop:

- ALL

add:

- NET_BIND_SERVICE

args:

- --configfile=/config/traefik.yaml

volumeMounts:

- mountPath: "/config"

name: "config"

volumes:

- name: config

configMap:

name: traefik-config

tolerations: ## 设置容忍所有污点,防止节点被设置污点

- operator: "Exists"

nodeSelector: ## 设置node筛选器,在特定label的节点上启动

IngressProxy: "true"

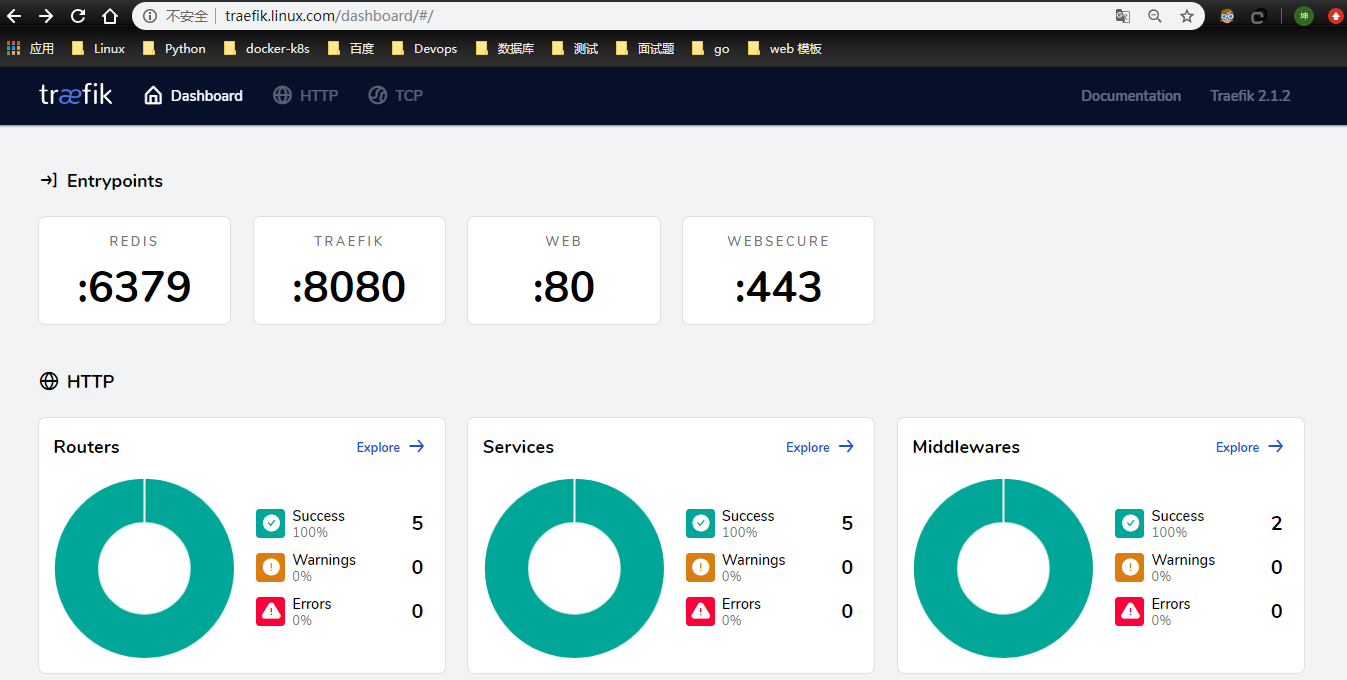

Traefik2.1 应用已经部署完成,但是想让外部访问 Kubernetes 内部服务,还需要配置路由规则,这里开启了 Traefik Dashboard 配置,所以首先配置 Traefik Dashboard 看板的路由规则,使外部能够访问 Traefik Dashboard。

部署traefik dashboard

# cat traefik-dashboard-route.yaml

##########################################################################

#Author: zisefeizhu

#QQ: 2********0

#Date: 2020-03-16

#FileName: traefik-dashboard-route.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): 2020 All rights reserved

###########################################################################

apiVersion: traefik.containo.us/v1alpha1

kind: IngressRoute

metadata:

name: traefik-dashboard-route

namespace: kube-system

spec:

entryPoints:

- web

routes:

- match: Host(`traefik.linux.com`)

kind: Rule

services:

- name: traefik

port: 8080

本地host解析

其实到这里 已经能基本看出traefik是如何进行代理的了

apiVersion: v1

kind: Service

metadata:

name: traefik

namespace: kube-system

spec:

type: NodePort

ports:

- name: web

port: 80

- name: websecure

port: 443

- name: admin

port: 8080

selector:

app: traefik

apiVersion: traefik.containo.us/v1alpha1

kind: IngressRoute

metadata:

name: traefik-dashboard-route

namespace: kube-system

spec:

entryPoints:

- web //入口

routes:

- match: Host(`traefik.linux.com`) //外部访问域名

kind: Rule

services:

- name: traefik //service.selector

port: 8080 //service.ports

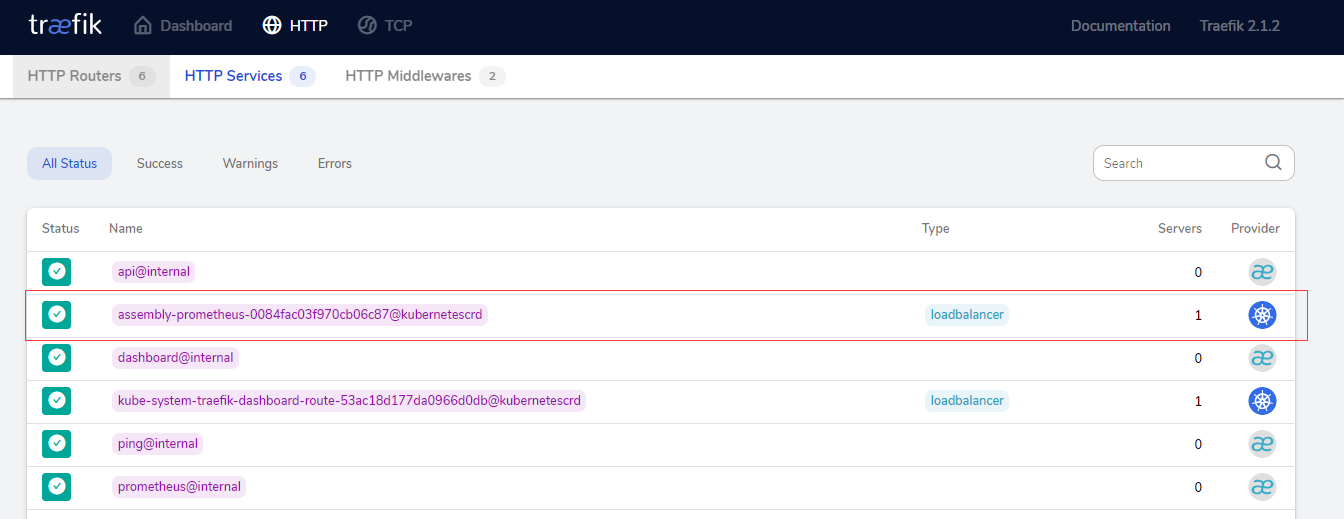

代理一个deployment pod

问题要求是: 部署一个app 用delployment 发布 然后service 然后 代理

端口是3009

那这里就用个应用实验吧 这里我随便用个prometheus 应用吧

[root@bs-k8s-master01 prometheus]# cat prometheus-cm.yaml

##########################################################################

#Author: zisefeizhu

#QQ: 2********0

#Date: 2020-03-20

#FileName: prometheus-cm.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): 2020 All rights reserved

###########################################################################

apiVersion: v1

kind: ConfigMap

metadata:

name: prometheus-config

namespace: assembly

data:

prometheus.yml: |

global:

scrape_interval: 15s

scrape_timeout: 15s

alerting:

alertmanagers:

- static_configs:

- targets: ["alertmanager-svc:9093"]

rule_files:

- /etc/prometheus/rules.yaml

scrape_configs:

- job_name: 'prometheus'

static_configs:

- targets: ['localhost:9090']

- job_name: 'traefik'

static_configs:

- targets: ['traefik.kube-system.svc.cluster.local:8080']

- job_name: "kubernetes-nodes"

kubernetes_sd_configs:

- role: node

relabel_configs:

- source_labels: [__address__]

regex: '(.*):10250'

replacement: '${1}:9100'

target_label: __address__

action: replace

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- job_name: 'kubernetes-kubelet'

kubernetes_sd_configs:

- role: node

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

insecure_skip_verify: true

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- job_name: "kubernetes-apiserver"

kubernetes_sd_configs:

- role: endpoints

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]

action: keep

regex: default;kubernetes;https

- job_name: "kubernetes-scheduler"

kubernetes_sd_configs:

- role: endpoints

- job_name: 'kubernetes-cadvisor'

kubernetes_sd_configs:

- role: node

scheme: https

tls_config:

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

relabel_configs:

- action: labelmap

regex: __meta_kubernetes_node_label_(.+)

- target_label: __address__

replacement: kubernetes.default.svc:443

- source_labels: [__meta_kubernetes_node_name]

regex: (.+)

target_label: __metrics_path__

replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

- job_name: 'kubernetes-service-endpoints'

kubernetes_sd_configs:

- role: endpoints

relabel_configs:

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]

action: keep

regex: true

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]

action: replace

target_label: __scheme__

regex: (https?)

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]

action: replace

target_label: __metrics_path__

regex: (.+)

- source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]

action: replace

target_label: __address__

regex: ([^:]+)(?::\d+)?;(\d+)

replacement: $1:$2

- action: labelmap

regex: __meta_kubernetes_service_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_service_name]

action: replace

target_label: kubernetes_name

rules.yaml: |

groups:

- name: test-rule

rules:

- alert: NodeMemoryUsage

expr: (sum(node_memory_MemTotal_bytes) - sum(node_memory_MemFree_bytes + node_memory_Buffers_bytes+node_memory_Cached_bytes)) / sum(node_memory_MemTotal_bytes) * 100 > 5

for: 2m

labels:

team: node

annotations:

summary: "{{$labels.instance}}: High Memory usage detected"

description: "{{$labels.instance}}: Memory usage is above 80% (current value is: {{ $value }}"

[root@bs-k8s-master01 prometheus]# cat prometheus-rbac.yaml

##########################################################################

#Author: zisefeizhu

#QQ: 2********0

#Date: 2020-03-20

#FileName: prometheus-rbac.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): 2020 All rights reserved

###########################################################################

apiVersion: v1

kind: ServiceAccount

metadata:

name: prometheus

namespace: assembly

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: prometheus

rules:

- apiGroups:

- ""

resources:

- nodes

- services

- endpoints

- pods

- nodes/proxy

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- configmaps

- nodes/metrics

verbs:

- get

- nonResourceURLs:

- /metrics

verbs:

- get

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: prometheus

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: prometheus

subjects:

- kind: ServiceAccount

name: prometheus

namespace: assembly

# cat prometheus-deploy.yaml

##########################################################################

#Author: zisefeizhu

#QQ: 2********0

#Date: 2020-03-20

#FileName: prometheus-deploy.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): 2020 All rights reserved

###########################################################################

apiVersion: apps/v1

kind: Deployment

metadata:

name: prometheus

namespace: assembly

labels:

app: prometheus

spec:

selector:

matchLabels:

app: prometheus

template:

metadata:

labels:

app: prometheus

spec:

imagePullSecrets:

- name: k8s-harbor-login

serviceAccountName: prometheus

containers:

- image: harbor.linux.com/prometheus/prometheus:v2.4.3

name: prometheus

command:

- "/bin/prometheus"

args:

- "--config.file=/etc/prometheus/prometheus.yml"

- "--storage.tsdb.path=/prometheus"

- "--storage.tsdb.retention=24h"

- "--web.enable-admin-api" # 控制对admin HTTP API的访问,其中包括删除时间序列等功能

- "--web.enable-lifecycle" # 支持热更新,直接执行localhost:9090/-/reload立即生效

ports:

- containerPort: 9090

protocol: TCP

name: http

volumeMounts:

- mountPath: "/prometheus"

subPath: prometheus

name: data

- mountPath: "/etc/prometheus"

name: config-volume

resources:

requests:

cpu: 100m

memory: 512Mi

limits:

cpu: 100m

memory: 512Mi

securityContext:

runAsUser: 0

volumes:

- name: data

persistentVolumeClaim:

claimName: prometheus-pvc

- configMap:

name: prometheus-config

name: config-volume

nodeSelector: ## 设置node筛选器,在特定label的节点上启动

prometheus: "true"

[root@bs-k8s-master01 prometheus]# cat prometheus-svc.yaml

##########################################################################

#Author: zisefeizhu

#QQ: 2********0

#Date: 2020-03-20

#FileName: prometheus-svc.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): 2020 All rights reserved

###########################################################################

apiVersion: v1

kind: Service

metadata:

name: prometheus

namespace: assembly

labels:

app: prometheus

spec:

selector:

app: prometheus

type: NodePort

ports:

- name: web

port: 9090

targetPort: http

[root@bs-k8s-master01 prometheus]# cat prometheus-ingressroute.yaml

##########################################################################

#Author: zisefeizhu

#QQ: 2********0

#Date: 2020-03-20

#FileName: prometheus-ingressroute.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): 2020 All rights reserved

###########################################################################

apiVersion: traefik.containo.us/v1alpha1

kind: IngressRoute

metadata:

name: prometheus

namespace: assembly

spec:

entryPoints:

- web

routes:

- match: Host(`prometheus.linux.com`)

kind: Rule

services:

- name: prometheus

port: 9090

验证

显然已经代理成功了 本地hosts解析

还是没得问题的

运行一个nodejs服务,先发布为deployment,然后创建service,让集群外可以访问的更多相关文章

- 【Azure微服务 Service Fabric 】使用az命令创建Service Fabric集群

问题描述 在使用Service Fabric的快速入门文档: 将 Windows 容器部署到 Service Fabric. 其中在创建Service Fabric时候,示例代码中使用的是PowerS ...

- 庐山真面目之十微服务架构 Net Core 基于 Docker 容器部署 Nginx 集群

庐山真面目之十微服务架构 Net Core 基于 Docker 容器部署 Nginx 集群 一.简介 前面的两篇文章,我们已经介绍了Net Core项目基于Docker容器部署在Linux服 ...

- k8s暴露集群内和集群外服务的方法

集群内服务 一般 pod 都是根据 service 资源来进行集群内的暴露,因为 k8s 在 pod 启动前就已经给调度节点上的 pod 分配好 ip 地址了,因此我们并不能提前知道提供服务的 pod ...

- 【Azure微服务 Service Fabric 】因证书过期导致Service Fabric集群挂掉(升级无法完成,节点不可用)

问题描述 创建Service Fabric时,证书在整个集群中是非常重要的部分,有着用户身份验证,节点之间通信,SF升级时的身份及授权认证等功能.如果证书过期则会导致节点受到影响集群无法正常工作. 当 ...

- 微服务之:从零搭建ocelot网关和consul集群

介绍 微服务中有关键的几项技术,其中网关和服务服务发现,服务注册相辅相成. 首先解释几个本次教程中需要的术语 网关 Gateway(API GW / API 网关),顾名思义,是企业 IT 在系统边界 ...

- Spark运行模式_spark自带cluster manager的standalone cluster模式(集群)

这种运行模式和"Spark自带Cluster Manager的Standalone Client模式(集群)"还是有很大的区别的.使用如下命令执行应用程序(前提是已经启动了spar ...

- Nginx(http协议代理 搭建虚拟主机 服务的反向代理 在反向代理中配置集群的负载均衡)

Nginx 简介 Nginx (engine x) 是一个高性能的 HTTP 和反向代理服务.Nginx 是由伊戈尔·赛索耶夫为俄罗斯访问量第二的 Rambler.ru 站点(俄文:Рамблер)开 ...

- 【微服务架构】SpringCloud之Eureka(注册中心集群篇)(三)

上一篇讲解了spring注册中心(eureka),但是存在一个单点故障的问题,一个注册中心远远无法满足实际的生产环境,那么我们需要多个注册中心进行集群,达到真正的高可用.今天我们实战来搭建一个Eure ...

- Spark运行模式_基于YARN的Resource Manager的Client模式(集群)

现在越来越多的场景,都是Spark跑在Hadoop集群中,所以为了做到资源能够均衡调度,会使用YARN来做为Spark的Cluster Manager,来为Spark的应用程序分配资源. 在执行Spa ...

随机推荐

- nop 配置阿里cdn 联通4g 页面显示不全 查看源代码发现被截断

开发中遇见特别诡异的问题, 项目使用nop框架,pavilion主题,之后配置阿里cdn,然后在联通4g的情况下苹果手机网页显示不完全,nop首页和产品详情页都是如此,排查过程: 1.阿里cdn设置了 ...

- 条件随机场 CRF

2019-09-29 15:38:26 问题描述:请解释一下NER任务中CRF层的作用. 问题求解: 在做NER任务的时候,神经网络学习到了文本间的信息,而CRF学习到了Tag间的信息. 加入CRF与 ...

- excel中存储的icount,赋值完之后

最近需要实现一个功能,为了确保每次函数运行的时候count是唯一的,所以想读取excel中存储的icount,赋值完之后对其进行+1操作,并存入excel文件,确保下次读取的count是新的,没有出现 ...

- 磐创AI GPU租用平台上线,1小时不到1块钱

>> 小白也能看懂的PyTorch从入门到精通系列 << 今天磐创AI GPU租赁平台上线了!!!为大家解决用GPU难的问题!一块10G显存的GPU,1小时租用费用不到1块钱, ...

- 线程 -- ThreadLocal

1,ThreadLocal 不是“本地线程”的意思,而是Thread 的局部变量.每个使用该变量的线程提供独立的变量副本,所以每一个线程都可以独立地改变自己的副本,而不会影响其它线程所对应的副本 2, ...

- 《Mathematical Analysis of Algorithms》中有关“选择第t大的数”的算法分析

开头废话 这个问题是Donald.E.Knuth在他发表的论文Mathematical Analysis of Algorithms中提到的,这里对他的算法分析过程给出了更详细的解释. 问题描述: 给 ...

- iOS 真机查看沙盒目录

iExplorer 的方法试的时候设备都无法检测到,建议放弃 启用iTunes文件共享,才能够看沙盒内的文件,只需要在plist文件中添加如下信息: <key>UIFileSharingE ...

- 【译】Java SE 14 Hotspot 虚拟机垃圾回收调优指南

原文链接:HotSpot Virtual Machine Garbage Collection Tuning Guide,基于Java SE 14. 本文主要包括以下内容: 优化目标与策略(Ergon ...

- WebView中Java与JavaScript的交互

原文首发于微信公众号:jzman-blog,欢迎关注交流! Android 开发过程中 WebView 的使用比较广泛,常用来加载网页,比如使用 WebView 加载新闻页面.使用 WebView 打 ...

- 苦涩的技术我该怎么学?Akka 实战

上次我们在“懵 B”的状态下,聊了聊 Actor 模型的理论知识.稍微再补充两句,如上图所示在 Actor 模型系统中,主要有互不依赖的 Actor 组成(图中圆圈),Actor 之间的通信是通过消息 ...