030:Cetus中间件和MHA读写分离

030:Cetus中间件和MHA读写分离

############################################

line:V1.1

mail: gczheng@139.com

date: 2018-08-30

############################################

一、主机环境

- 虚拟机配置

| CPU | 内存 | 硬盘 | OS版本 | MySQL版本 | MHA版本 | Cetus版本 |

|---|---|---|---|---|---|---|

| 2-core | 4G | 500G | CentOS 7.5.1804 | 5.7.18 | 0.57 | v1.0.0-44 |

- 主机信息

| 主机名 | IP地址 | Server_ID | MHA Manager | MHA Node | Cetus | 备注 |

|---|---|---|---|---|---|---|

| node05 | 192.168.222.175 | 部署 | 部署 | 部署 | 监控/MySQL主库的故障转移 | |

| node02 | 192.168.222.172 | 172 | - | 部署 | - | |

| node03 | 192.168.222.173 | 173 | - | 部署 | - | |

| node04 | 192.168.222.174 | 174 | - | 部署 | - |

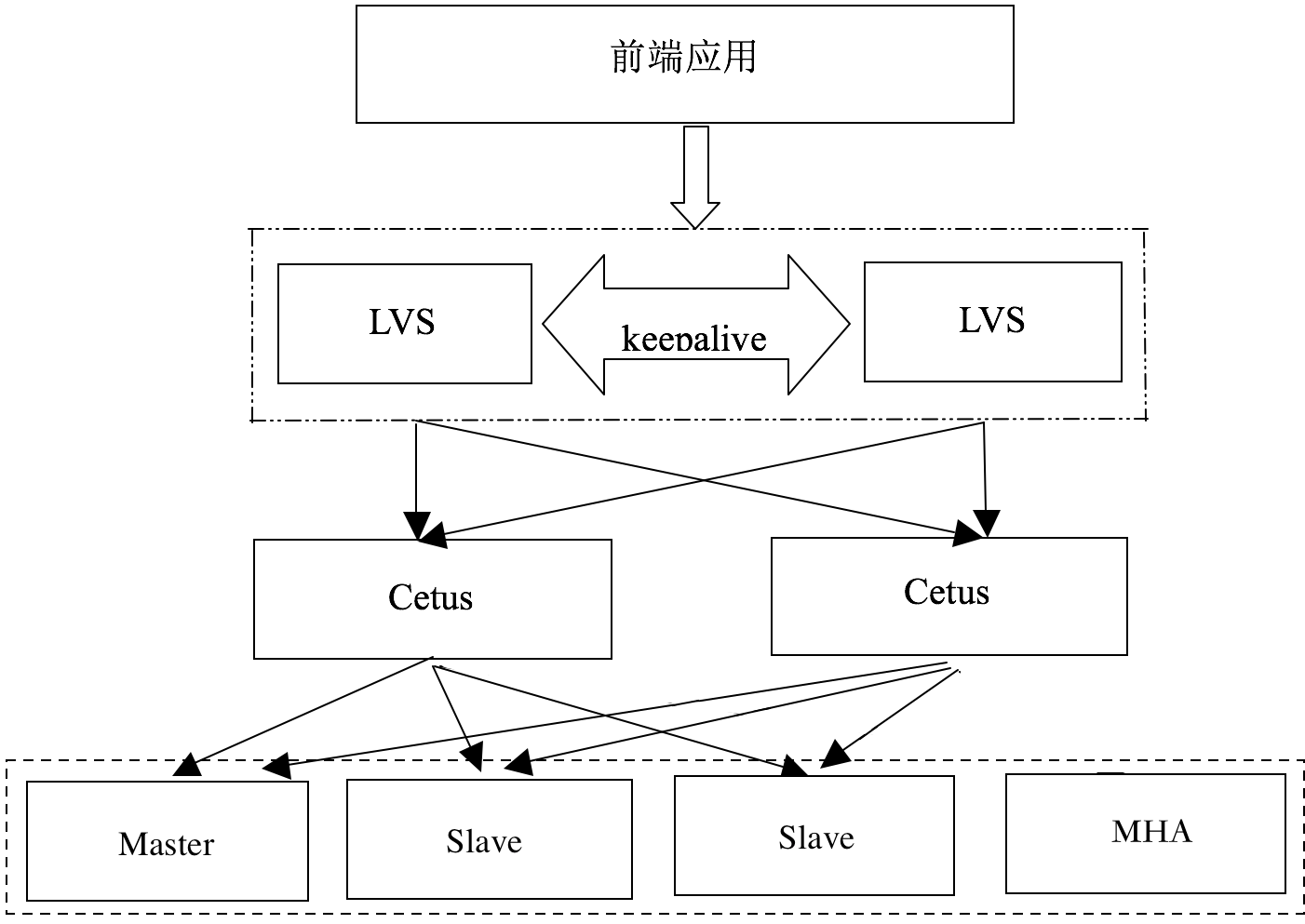

- 参考官方架构图

二、搭建主从

1、配置主从

- 搭建基于GTID主从复制环境

- 若开启主从延迟检测需创建库proxy_heart_beat和表tb_heartbeat

- 创建用户和密码(默认用户对tb_heartbeat有读写权限)

- 确认Cetus可以远程登录MySQL

0.主库创建同步帐号grant RELOAD,REPLICATION SLAVE, REPLICATION CLIENT on *.* to repl@'%' identified by password 'xxxxxx'; flush privileges;

1.mysqldump 出主库数据,增加 master-data=2;

2.导入从库;

3.change master to master_host="192.168.222.171", master_port=3306, master_user='repl',master_password='repl', master_auto_position=1;

4.start slave;

5.创建mysql cetus测试账号 grant all on *.* to gcdb@'%' identified by password 'xxxxxx'; flush privileges;

6.备库设置set global read_only=1;

7.创建延迟校库proxy_heart_beat和表tb_heartbeat

CREATE DATABASE proxy_heart_beat;

USE proxy_heart_beat;

CREATE TABLE tb_heartbeat ( p_id varchar(128) NOT NULL, p_ts timestamp(3) NOT NULL DEFAULT CURRENT_TIMESTAMP(3), PRIMARY KEY (p_id) ) ENGINE = InnoDB DEFAULT CHARSET = utf8;

8.创建测试库ttt

create database if not exists ttt;

create table ttt.t1(id int(4)primary key not null auto_increment,nums int(20) not null);

insert into ttt.t1(nums) values(1),(2),(3),(4),(5);

update ttt.t1 set nums=100 where id =3;

delete from ttt.t1 where id =4;

select * from ttt.t1;

9.安装sendmail服务

yum install sendmail -y

2、配置 hosts

- 添加hosts

cat <<EOF >/etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.222.171 node01.test.com node01

192.168.222.172 node02.test.com node02

192.168.222.173 node03.test.com node03

192.168.222.174 node04.test.com node04

192.168.222.175 node05.test.com node05

192.168.222.176 node06.test.com node06

EOF

3、配置免秘钥

- mha管理节点:

ssh-keygen -t rsa

ssh-copy-id -i /root/.ssh/id_rsa.pub "root@192.168.222.172"

ssh-copy-id -i /root/.ssh/id_rsa.pub "root@192.168.222.173"

ssh-copy-id -i /root/.ssh/id_rsa.pub "root@192.168.222.174"

- mysql节点各自生成公私钥,并将公钥拷贝给其他mysql节点

# 192.168.222.172

ssh-keygen -t rsa

ssh-copy-id -i /root/.ssh/id_rsa.pub "root@192.168.222.171"

ssh-copy-id -i /root/.ssh/id_rsa.pub "root@192.168.222.173"

ssh-copy-id -i /root/.ssh/id_rsa.pub "root@192.168.222.174"

# 192.168.222.173

ssh-keygen -t rsa

ssh-copy-id -i /root/.ssh/id_rsa.pub "root@192.168.222.172"

ssh-copy-id -i /root/.ssh/id_rsa.pub "root@192.168.222.171"

ssh-copy-id -i /root/.ssh/id_rsa.pub "root@192.168.222.174"

# 192.168.222.174

ssh-keygen -t rsa

ssh-copy-id -i /root/.ssh/id_rsa.pub "root@192.168.222.172"

ssh-copy-id -i /root/.ssh/id_rsa.pub "root@192.168.222.171"

ssh-copy-id -i /root/.ssh/id_rsa.pub "root@192.168.222.173"

- 测试ssh是否免密码登录

三、安装MHA和Cetus

1、下载包和安装依赖包

mha4mysql-manager-0.57.tar.gz和mha4mysql-node-0.57.tar.gz

cetus源码下载

git clone https://github.com/Lede-Inc/cetus.git

# 安装Cetus依赖包

yum -y install cmake gcc glib2-devel flex libevent-devel mysql-devel gperftools-libs

# 安装mha依赖包

yum -y install perl-DBD-MySQL perl-Config-Tiny perl-Log-Dispatch perl-Parallel-ForkManager perl-ExtUtils-CBuilder perl-ExtUtils-MakeMaker perl-CPAN perl-ExtUtils-Manifest

2、安装MHA Node

tar zxvf mha4mysql-node-0.57.tar.gz

cd mha4mysql-node-0.57

perl Makefile.PL

make && make install

-- Node安装完成后会在/usr/local/bin/下生成这些文件

#save_binary_logs : 保存和复制master的二进制日志。

#apply_diff_relay_logs : 识别差异的中继日志事件并应用于其它slave。

#filter_mysqlbinlog : 去除不必要的ROLLBACK事件(MHA已不再使用这个工具)。

#purge_relay_logs : 清除中继日志(不会阻塞SQL线程)。

3、安装MHA manager

- 在所有的 MySQL上安装

Node 节点;在 MHA 的管理节点安装manager 节点。

tar zvxf mha4mysql-manager-0.57.tar.gz

cd mha4mysql-manager-0.57

perl Makefile.PL

make && make install

-- Manage安装完成后会在/usr/local/bin/下生成这些文件

#masterha_check_ssh : 检查MHA的SSH配置。

#masterha_check_repl : 检查MySQL复制。

#masterha_manager : 启动MHA。

#masterha_check_status : 检测当前MHA运行状态。

#masterha_master_monitor : 监测master是否宕机。

#masterha_master_switch : 控制故障转移(自动或手动)。

#masterha_conf_host : 添加或删除配置的server信息。

cp -r /software/mha4mysql-manager-0.57/samples/scripts/* /usr/local/bin/

-- 拷贝/software/mha4mysql-manager-0.57/samples/scripts/下的脚本到/usr/local/bin/

#master_ip_failover :自动切换时vip管理的脚本,不是必须,如果我们使用keepalived的,我们可以自己编写脚本完成对vip的管理,比如监控mysql,如果mysql异常,我们停止keepalived就行,这样vip就会自动漂移

#master_ip_online_change:在线切换时vip的管理,不是必须,同样可以可以自行编写简单的shell完成

#power_manager:故障发生后关闭主机的脚本,不是必须

#send_report:因故障切换后发送报警的脚本,不是必须,可自行编写简单的shell完成。

4、安装Cetus

1.安装说明

Cetus利用自动化建构系统CMake进行编译安装,其中描述构建过程的构建文件CMakeLists.txt已经在源码中的主目录和子目录中,下载源码并解压后具体安装步骤如下:

- 创建编译目录:在源码主目录下创建独立的目录build,并转到该目录下

mkdir build/

cd build/

- 编译:利用cmake进行编译,指令如下

读写分离版本:

cmake ../ -DCMAKE_BUILD_TYPE=Debug -DCMAKE_INSTALL_PREFIX=/home/user/cetus_install -DSIMPLE_PARSER=ON

分库版本:

cmake ../ -DCMAKE_BUILD_TYPE=Debug -DCMAKE_INSTALL_PREFIX=/home/user/cetus_install -DSIMPLE_PARSER=OFF

其中CMAKE_BUILD_TYPE变量可以选择生成 debug 版和或release 版的程序,CMAKE_INSTALL_PREFIX变量确定软件的实际安装目录的绝对路径,安装目录建议以/home/user/日期.编译版本.分支.commit_id的方式命名;SIMPLE_PARSER变量确定软件的编译版本,设置为ON则编译读写分离版本,否则编译分库版本。

该过程会检查您的系统是否缺少一些依赖库和依赖软件,可以根据错误代码安装相应依赖。

- 安装:执行make install进行安装

make install

- 配置:Cetus运行前还需要编辑配置文件

cd /home/user/cetus_install/conf/

cp XXX.json.example XXX.json

cp XXX.conf.example XXX.conf

vi XXX.json

vi XXX.conf

配置文件在make insatll后存在示例文件,以.example结尾,目录为/home/user/cetus_install/conf/,包括用户设置文件(users.json)、变量处理配置文件(variables.json)、分库版本的分片规则配置文件(sharding.json)、读写分离版本的启动配置文件(proxy.conf)和分库版本的启动配置文件(shard.conf)。

根据具体编译安装的版本编辑相关配置文件,若使用读写分离功能则需配置users.json和proxy.conf,若使用sharding功能则需配置users.json、sharding.json和shard.conf,其中两个版本的variables.json均可选配。

配置文件的具体说明见Cetus 读写分离版配置文件说明和Cetus 分库(sharding)版配置文件说明。

- 启动:Cetus可以利用bin/cetus启动

读写分离版本:

bin/cetus --defaults-file=conf/proxy.conf [--conf-dir=/home/user/cetus_install/conf/]

分库版本:

bin/cetus --defaults-file=conf/shard.conf [--conf-dir=/home/user/cetus_install/conf/]

其中Cetus启动时可以添加命令行选项,--defaults-file选项用来加载启动配置文件(proxy.conf或者shard.conf),且在启动前保证启动配置文件的权限为660;--conf-dir是可选项,用来加载其他配置文件(.json文件),默认为当前目录下conf文件夹。

Cetus可起动守护进程后台运行,也可在进程意外终止自动启动一个新进程,可通过启动配置选项进行设置。

2.安装实施

#进入源码目录

[root@node05 software]# git clone https://github.com/Lede-Inc/cetus.git

[root@node05 software]# cd cetus/

[root@node05 cetus]# mkdir build/ && cd build

[root@node05 build]# cmake ../ -DCMAKE_BUILD_TYPE=Debug -DCMAKE_INSTALL_PREFIX=/usr/local/cetus -DSIMPLE_PARSER=ON

[root@node05 build]# make install

[root@node05 build]# ll /usr/local/cetus/

total 0

drwxr-xr-x 2 root root 19 Aug 31 09:29 bin

drwxr-xr-x 2 root root 143 Aug 31 09:29 conf

drwxr-xr-x 4 root root 210 Aug 31 09:29 lib

drwxr-xr-x 2 root root 19 Aug 31 09:29 libexec

drwxr-xr-x 2 root root 23 Aug 31 09:29 logs

[root@node05 build]# ll /usr/local/share/perl5/MHA/

total 388

-r--r--r-- 1 root root 12997 May 31 2015 BinlogHeaderParser.pm

-r--r--r-- 1 root root 18783 May 31 2015 BinlogManager.pm

-r--r--r-- 1 root root 2251 May 31 2015 BinlogPosFinderElp.pm

-r--r--r-- 1 root root 1648 May 31 2015 BinlogPosFinder.pm

-r--r--r-- 1 root root 3130 May 31 2015 BinlogPosFinderXid.pm

-r--r--r-- 1 root root 5684 May 31 2015 BinlogPosFindManager.pm

-r--r--r-- 1 root root 17480 May 31 2015 Config.pm

-r--r--r-- 1 root root 27019 May 31 2015 DBHelper.pm

-r--r--r-- 1 root root 3075 May 31 2015 FileStatus.pm

-r--r--r-- 1 root root 20370 May 31 2015 HealthCheck.pm

-r--r--r-- 1 root root 10560 May 31 2015 ManagerAdmin.pm

-r--r--r-- 1 root root 3679 May 31 2015 ManagerAdminWrapper.pm

-r--r--r-- 1 root root 3508 May 31 2015 ManagerConst.pm

-r--r--r-- 1 root root 4612 May 31 2015 ManagerUtil.pm

-r--r--r-- 1 root root 74140 May 31 2015 MasterFailover.pm

-r--r--r-- 1 root root 23654 May 31 2015 MasterMonitor.pm

-r--r--r-- 1 root root 23466 May 31 2015 MasterRotate.pm

-r--r--r-- 1 root root 1308 May 31 2015 NodeConst.pm

-r--r--r-- 1 root root 6689 May 31 2015 NodeUtil.pm

-r--r--r-- 1 root root 44325 May 31 2015 ServerManager.pm

-r--r--r-- 1 root root 33213 May 31 2015 Server.pm

-r--r--r-- 1 root root 6531 May 31 2015 SlaveUtil.pm

-r--r--r-- 1 root root 4874 May 31 2015 SSHCheck.pm

[root@node05 build]#

5、cetus替换mha部分文件

#使用 mha_ld/src 替换所有文件/usr/share/perl5/vendor_perl/MHA/目录的所有同名文件

[root@node05 build]# cd ../mha_ld/src/

[root@node05 src]# ls

BinlogHeaderParser.pm BinlogPosFindManager.pm HealthCheck.pm MasterFailover.pm NodeUtil.pm ServerManager.pm

BinlogManager.pm cetus.cnf ManagerAdmin.pm masterha_secondary_check ProxyManager.pm Server.pm

BinlogPosFinderElp.pm Config.pm ManagerAdminWrapper.pm MasterMonitor.pm sample.cnf SlaveUtil.pm

BinlogPosFinder.pm DBHelper.pm ManagerConst.pm MasterRotate.pm sendMail.sh SSHCheck.pm

BinlogPosFinderXid.pm FileStatus.pm ManagerUtil.pm NodeConst.pm sendmail.txt

[root@node05 src]# ls /usr/local/share/perl5/MHA/

BinlogHeaderParser.pm BinlogPosFinderXid.pm FileStatus.pm ManagerConst.pm MasterRotate.pm Server.pm

BinlogManager.pm BinlogPosFindManager.pm HealthCheck.pm ManagerUtil.pm NodeConst.pm SlaveUtil.pm

BinlogPosFinderElp.pm Config.pm ManagerAdmin.pm MasterFailover.pm NodeUtil.pm SSHCheck.pm

BinlogPosFinder.pm DBHelper.pm ManagerAdminWrapper.pm MasterMonitor.pm ServerManager.pm

[root@node05 src]# rsync /software/cetus/mha_ld/src/* /usr/local/share/perl5/MHA/

[root@node05 src]# ls /usr/local/share/perl5/MHA/

BinlogHeaderParser.pm BinlogPosFindManager.pm HealthCheck.pm MasterFailover.pm NodeUtil.pm ServerManager.pm

BinlogManager.pm cetus.cnf ManagerAdmin.pm masterha_secondary_check ProxyManager.pm Server.pm

BinlogPosFinderElp.pm Config.pm ManagerAdminWrapper.pm MasterMonitor.pm sample.cnf SlaveUtil.pm

BinlogPosFinder.pm DBHelper.pm ManagerConst.pm MasterRotate.pm sendMail.sh SSHCheck.pm

BinlogPosFinderXid.pm FileStatus.pm ManagerUtil.pm NodeConst.pm sendmail.txt

#使用 mha_ld/masterha_secondary_check替换masterha_secondary_check命令 which masterha_secondary_check

[root@node05 src]# which masterha_secondary_check

/usr/local/bin/masterha_secondary_check

[root@node05 src]# rsync /software/cetus/mha_ld/src/masterha_secondary_check /usr/local/bin/

[root@node05 src]# chmod +x /usr/local/bin/masterha_secondary_check

[root@node05 src]# ll /usr/local/bin/masterha_secondary_check

-r-xr-xr-x 1 root root 5186 Aug 31 11:48 /usr/local/bin/masterha_secondary_check

四、MHA和cetus配置

1、创建和修改配置文件

- 在manager节点上创建cetus配置文件(cetus.cnf)

[root@node05 src]# cp /software/cetus/mha_ld/cetus.cnf /etc/cetus.cnf

[root@node05 src]# vim /etc/cetus.cnf

[root@node05 src]# cat /etc/cetus.cnf

middle_ipport=192.168.222.175:23306

middle_user=admin

middle_pass=admin

- 在manager节点上创建cetus的user和proxy.配置文件(users.json和proxy.conf)

[root@node05 src]# cp /usr/local/cetus/conf/proxy.conf.example /usr/local/cetus/conf/proxy.conf

[root@node05 src]# cp /usr/local/cetus/conf/users.json.example /usr/local/cetus/conf/users.json

[root@node05 src]# vim /usr/local/cetus/conf/users.json

[root@node05 src]# cat /usr/local/cetus/conf/users.json

{

"users": [{

"user": "gcdb",

"client_pwd": "iforgot",

"server_pwd": "iforgot"

}, {

"user": "cetus_app1",

"client_pwd": "cetus_app1",

"server_pwd": "cetus_app1"

}]

}

[root@node05 src]# vim /usr/local/cetus/conf/proxy.conf

[root@node05 src]# cat /usr/local/cetus/conf/proxy.conf

[cetus]

# For mode-switch

daemon = true

# Loaded Plugins

plugins=proxy,admin

# Proxy Configuration, For example: MySQL master and salve host ip are both 192.0.0.1

proxy-address=192.168.222.175:13306

proxy-backend-addresses=192.168.222.172:3306

proxy-read-only-backend-addresses=192.168.222.173:3306,192.168.222.174:3306

# Admin Configuration

admin-address=192.168.222.175:23306

admin-username=admin

admin-password=admin

# Backend Configuration, use test db and username created

default-db=ttt

default-username=gcdb

default-pool-size=100

max-resp-size=10485760

long-query-time=100

# File and Log Configuration, put log in /data and marked by proxy port, /data/cetus needs to be created manually and has rw authority for cetus os user

max-open-files = 65536

pid-file = cetus6001.pid

plugin-dir=lib/cetus/plugins

log-file=/var/log/cetus.log

log-level=debug

# Check salve delay

disable-threads=false

check-slave-delay=true

slave-delay-down=5

slave-delay-recover=1

# For trouble

keepalive=true

verbose-shutdown=true

log-backtrace-on-crash=true

- 在manager节点创建全局配置文件(masterha_default.cnf)

[root@node05 software]# cat /etc/masterha_default.cnf

[server default]

#用于指定mha manager产生相关状态文件全路径

manager_workdir=/var/log/masterha

#指定mha manager的绝对路径的文件名日志文件

manager_log=/var/log/masterha/mha.log

# cetus porxy文件绝对路径

proxy_conf=/etc/cetus.cnf

# 登陆mysql数据库账户及密码

user=gcdb

password=iforgot

# ssh用户

ssh_user=root

ssh_port=22

# mysql数据库master节点binlog的位置,该参数用于当master节点死掉后通过ssh方式顺序读取binlog event,需要配置,因为master节点死掉后无法通过replication机制来自动获取binlog日志位置

master_binlog_dir=/r2/mysqldata

#master_binlog_dir= /r2/mysqldata

# 用于检测各节点间的连接性,此处详细可参考MHA parameters描述部分

secondary_check_script= masterha_secondary_check -s 192.168.222.173 -s 192.168.222.174

ping_interval=3

ping_type=select

remote_workdir=/tmp

#定义用于实现VIP漂移的脚本,后面的是shutdown以及report脚本

master_ip_failover_script=/usr/local/bin/master_ip_failover

#shutdown_script=/usr/local/bin/power_manager

shutdown_script=

report_script="/usr/local/share/perl5/MHA/sendMail.sh"

#MySQL用于复制的账号

repl_user=repl

repl_password=repl

- 在manager节点上创建配置文件(mha01.cnf)

[root@node05 software]# cat /etc/mha.cnf

[server default]

manager_log=/var/log/masterha/mha.log

manager_workdir=/var/log/masterha

[server1]

hostname=192.168.222.172

master_binlog_dir=/r2/mysqldata

port=3306

[server2]

hostname=192.168.222.173

master_binlog_dir=/r2/mysqldata

port=3306

[server3]

hostname=192.168.222.174

master_binlog_dir=/r2/mysqldata

port=3306

- 在manager节点上修改故障转移脚本(master_ip_failover)

[root@node05 mha4mysql-manager-0.57]# vim /usr/local/bin/master_ip_failover

#!/usr/bin/env perl

use strict;

use warnings FATAL => 'all';

use Getopt::Long;

use MHA::DBHelper;

my (

$command, $ssh_user, $orig_master_host, $orig_master_ip,

$orig_master_port, $new_master_host, $new_master_ip, $new_master_port

);

#添加切换vip

my $vip = '192.168.222.99/24';

my $key = '1';

my $ssh_start_vip = "/sbin/ifconfig ens224:$key $vip";

my $ssh_stop_vip = "/sbin/ifconfig ens224:$key down";

$ssh_user = "root";

GetOptions(

'command=s' => \$command,

'ssh_user=s' => \$ssh_user,

'orig_master_host=s' => \$orig_master_host,

'orig_master_ip=s' => \$orig_master_ip,

'orig_master_port=i' => \$orig_master_port,

'new_master_host=s' => \$new_master_host,

'new_master_ip=s' => \$new_master_ip,

'new_master_port=i' => \$new_master_port,

);

exit &main();

sub main {

print "\n\nIN SCRIPT TEST====$ssh_stop_vip==$ssh_start_vip===\n\n";

if ( $command eq "stop" || $command eq "stopssh" ) {

my $exit_code = 1;

eval {

print "Disabling the VIP on old master: $orig_master_host \n";

&stop_vip();

$exit_code = 0;

};

if ($@) {

warn "Got Error: $@\n";

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "start" ) {

my $exit_code = 10;

eval {

print "Enabling the VIP - $vip on the new master - $new_master_host \n";

&start_vip();

$exit_code = 0;

};

if ($@) {

warn $@;

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "status" ) {

print "Checking the Status of the script.. OK \n";

exit 0;

}

else {

&usage();

exit 1;

}

}

sub start_vip() {

`ssh $ssh_user\@$new_master_host \" $ssh_start_vip \"`;

}

sub stop_vip() {

return 0 unless ($ssh_user);

`ssh $ssh_user\@$orig_master_host \" $ssh_stop_vip \"`;

}

sub usage {

print

"Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";

}

2、检查 SSH/复制/MHA Manager 的配置

- 检查SSH 情况:masterha_check_ssh --conf=/etc/mha.cnf

[root@node05 src]# masterha_check_ssh --conf=/etc/mha.cnf

Fri Aug 31 13:55:51 2018 - [info] Reading default configuration from /etc/masterha_default.cnf..

Fri Aug 31 13:55:51 2018 - [info] Reading application default configuration from /etc/mha.cnf..

Fri Aug 31 13:55:51 2018 - [info] Reading server configuration from /etc/mha.cnf..

Fri Aug 31 13:55:51 2018 - [info] Starting SSH connection tests..

Fri Aug 31 13:55:52 2018 - [debug]

Fri Aug 31 13:55:51 2018 - [debug] Connecting via SSH from root@192.168.222.172(192.168.222.172:22) to root@192.168.222.173(192.168.222.173:22)..

Fri Aug 31 13:55:51 2018 - [debug] ok.

Fri Aug 31 13:55:51 2018 - [debug] Connecting via SSH from root@192.168.222.172(192.168.222.172:22) to root@192.168.222.174(192.168.222.174:22)..

Fri Aug 31 13:55:51 2018 - [debug] ok.

Fri Aug 31 13:55:52 2018 - [debug]

Fri Aug 31 13:55:51 2018 - [debug] Connecting via SSH from root@192.168.222.173(192.168.222.173:22) to root@192.168.222.172(192.168.222.172:22)..

Fri Aug 31 13:55:51 2018 - [debug] ok.

Fri Aug 31 13:55:51 2018 - [debug] Connecting via SSH from root@192.168.222.173(192.168.222.173:22) to root@192.168.222.174(192.168.222.174:22)..

Fri Aug 31 13:55:52 2018 - [debug] ok.

Fri Aug 31 13:55:53 2018 - [debug]

Fri Aug 31 13:55:52 2018 - [debug] Connecting via SSH from root@192.168.222.174(192.168.222.174:22) to root@192.168.222.172(192.168.222.172:22)..

Fri Aug 31 13:55:52 2018 - [debug] ok.

Fri Aug 31 13:55:52 2018 - [debug] Connecting via SSH from root@192.168.222.174(192.168.222.174:22) to root@192.168.222.173(192.168.222.173:22)..

Fri Aug 31 13:55:52 2018 - [debug] ok.

Fri Aug 31 13:55:53 2018 - [info] All SSH connection tests passed successfully.

[root@node05 src]#

- 检查复制情况:masterha_check_repl --conf=/etc/mha.cnf

[root@node05 src]# masterha_check_repl --conf=/etc/mha.cnf

Fri Aug 31 13:59:17 2018 - [info] Reading default configuration from /etc/masterha_default.cnf..

Fri Aug 31 13:59:17 2018 - [info] Reading application default configuration from /etc/mha.cnf..

Fri Aug 31 13:59:17 2018 - [info] Reading server configuration from /etc/mha.cnf..

Fri Aug 31 13:59:17 2018 - [info] MHA::MasterMonitor version 0.56.

Fri Aug 31 13:59:17 2018 - [info] g_workdir: /var/log/masterha

Fri Aug 31 13:59:17 2018 - [info] proxy_conf: /etc/cetus.cnf

Fri Aug 31 13:59:17 2018 - [info] -------------ManagerUtil::check_node_version-----------

Fri Aug 31 13:59:18 2018 - [info] GTID failover mode = 1

Fri Aug 31 13:59:18 2018 - [info] Dead Servers:

Fri Aug 31 13:59:18 2018 - [info] Alive Servers:

Fri Aug 31 13:59:18 2018 - [info] 192.168.222.172(192.168.222.172:3306)

Fri Aug 31 13:59:18 2018 - [info] 192.168.222.173(192.168.222.173:3306)

Fri Aug 31 13:59:18 2018 - [info] 192.168.222.174(192.168.222.174:3306)

Fri Aug 31 13:59:18 2018 - [info] Alive Slaves:

Fri Aug 31 13:59:18 2018 - [info] 192.168.222.173(192.168.222.173:3306) Version=5.7.18-log (oldest major version between slaves) log-bin:enabled

Fri Aug 31 13:59:18 2018 - [info] GTID ON

Fri Aug 31 13:59:18 2018 - [info] Replicating from 192.168.222.172(192.168.222.172:3306)

Fri Aug 31 13:59:18 2018 - [info] 192.168.222.174(192.168.222.174:3306) Version=5.7.18-log (oldest major version between slaves) log-bin:enabled

Fri Aug 31 13:59:18 2018 - [info] GTID ON

Fri Aug 31 13:59:18 2018 - [info] Replicating from 192.168.222.172(192.168.222.172:3306)

Fri Aug 31 13:59:18 2018 - [info] Current Alive Master: 192.168.222.172(192.168.222.172:3306)

Fri Aug 31 13:59:18 2018 - [info] Checking slave configurations..

Fri Aug 31 13:59:18 2018 - [info] Checking replication filtering settings..

Fri Aug 31 13:59:18 2018 - [info] binlog_do_db= , binlog_ignore_db=

Fri Aug 31 13:59:18 2018 - [info] Replication filtering check ok.

Fri Aug 31 13:59:18 2018 - [info] GTID (with auto-pos) is supported. Skipping all SSH and Node package checking.

Fri Aug 31 13:59:18 2018 - [info] Checking SSH publickey authentication settings on the current master..

Fri Aug 31 13:59:18 2018 - [info] HealthCheck: SSH to 192.168.222.172 is reachable.

Fri Aug 31 13:59:18 2018 - [info]

192.168.222.172(192.168.222.172:3306) (current master)

+--192.168.222.173(192.168.222.173:3306)

+--192.168.222.174(192.168.222.174:3306)

Fri Aug 31 13:59:18 2018 - [info] Checking replication health on 192.168.222.173..

Fri Aug 31 13:59:18 2018 - [info] ok.

Fri Aug 31 13:59:18 2018 - [info] Checking replication health on 192.168.222.174..

Fri Aug 31 13:59:18 2018 - [info] ok.

Fri Aug 31 13:59:18 2018 - [info] Checking master_ip_failover_script status:

Fri Aug 31 13:59:18 2018 - [info] /usr/local/bin/master_ip_failover --command=status --ssh_user=root --orig_master_host=192.168.222.172 --orig_master_ip=192.168.222.172 --orig_master_port=3306

IN SCRIPT TEST====/sbin/ifconfig ens224:1 down==/sbin/ifconfig ens224:1 192.168.222.99/24===

Checking the Status of the script.. OK

Fri Aug 31 13:59:18 2018 - [info] OK.

Fri Aug 31 13:59:18 2018 - [warning] shutdown_script is not defined.

Fri Aug 31 13:59:18 2018 - [info] Got exit code 0 (Not master dead).

MySQL Replication Health is OK.

[root@node05 src]#

- 检查MHA 状态:masterha_check_status --conf=/etc/mha.cnf

[root@node05 src]# masterha_check_status --conf=/etc/mha.cnf

mha is stopped(2:NOT_RUNNING).

- 检查masterha_secondary_check脚本中mysql客户端安装位置

[root@node05 software]# which mysql

/usr/local/mysql/bin/mysql

[root@node05 software]# grep -w /usr/bin/mysql /usr/local/bin/*

/usr/local/bin/masterha_secondary_check: . "/usr/bin/mysql -u$master_user -p$master_password -h$master_host -P$master_port "

[root@node05 software]# ln -s /usr/local/mysql/bin/mysql /usr/bin/mysql

[root@node05 software]# which mysql

/usr/bin/mysql

3、启动MHA manager

- session A

[root@node05 src]# mkdir -p /var/log/masterha/

- session B

[root@node05 mha4mysql-manager-0.57]# nohup masterha_manager --conf=/etc/masterha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/masterha/app1/manager.log 2>&1 &

[1] 19246

- session A

[root@node05 ~]# tail -f /var/log/masterha/app1/manager.log

IN SCRIPT TEST====/sbin/ifconfig ens224:1 down==/sbin/ifconfig ens224:1 192.168.222.99/24===

Checking the Status of the script.. OK

Fri Aug 31 14:06:45 2018 - [info] OK.

Fri Aug 31 14:06:45 2018 - [warning] shutdown_script is not defined.

Fri Aug 31 14:06:45 2018 - [info] Set master ping interval 3 seconds.

Fri Aug 31 14:06:45 2018 - [info] Set secondary check script: masterha_secondary_check -s 192.168.222.172 -s 192.168.222.173 -s 192.168.222.174

Fri Aug 31 14:06:45 2018 - [info] Starting ping health check on 192.168.222.172(192.168.222.172:3306)..

Fri Aug 31 14:06:45 2018 - [info] Ping(SELECT) succeeded, waiting until MySQL doesn't respond..

[root@node05 src]# cat /var/log/masterha/mha.log

Fri Aug 31 14:06:44 2018 - [info] Reading default configuration from /etc/masterha_default.cnf..

Fri Aug 31 14:06:44 2018 - [info] Reading application default configuration from /etc/mha.cnf..

Fri Aug 31 14:06:44 2018 - [info] Reading server configuration from /etc/mha.cnf..

Fri Aug 31 14:06:44 2018 - [info] MHA::MasterMonitor version 0.56.

Fri Aug 31 14:06:44 2018 - [info] g_workdir: /var/log/masterha

Fri Aug 31 14:06:44 2018 - [info] proxy_conf: /etc/cetus.cnf

Fri Aug 31 14:06:44 2018 - [info] -------------ManagerUtil::check_node_version-----------

Fri Aug 31 14:06:45 2018 - [info] GTID failover mode = 1

Fri Aug 31 14:06:45 2018 - [info] Dead Servers:

Fri Aug 31 14:06:45 2018 - [info] Alive Servers:

Fri Aug 31 14:06:45 2018 - [info] 192.168.222.172(192.168.222.172:3306)

Fri Aug 31 14:06:45 2018 - [info] 192.168.222.173(192.168.222.173:3306)

Fri Aug 31 14:06:45 2018 - [info] 192.168.222.174(192.168.222.174:3306)

Fri Aug 31 14:06:45 2018 - [info] Alive Slaves:

Fri Aug 31 14:06:45 2018 - [info] 192.168.222.173(192.168.222.173:3306) Version=5.7.18-log (oldest major version between slaves) log-bin:enabled

Fri Aug 31 14:06:45 2018 - [info] GTID ON

Fri Aug 31 14:06:45 2018 - [info] Replicating from 192.168.222.172(192.168.222.172:3306)

Fri Aug 31 14:06:45 2018 - [info] 192.168.222.174(192.168.222.174:3306) Version=5.7.18-log (oldest major version between slaves) log-bin:enabled

Fri Aug 31 14:06:45 2018 - [info] GTID ON

Fri Aug 31 14:06:45 2018 - [info] Replicating from 192.168.222.172(192.168.222.172:3306)

Fri Aug 31 14:06:45 2018 - [info] Current Alive Master: 192.168.222.172(192.168.222.172:3306)

Fri Aug 31 14:06:45 2018 - [info] Checking slave configurations..

Fri Aug 31 14:06:45 2018 - [info] Checking replication filtering settings..

Fri Aug 31 14:06:45 2018 - [info] binlog_do_db= , binlog_ignore_db=

Fri Aug 31 14:06:45 2018 - [info] Replication filtering check ok.

Fri Aug 31 14:06:45 2018 - [info] GTID (with auto-pos) is supported. Skipping all SSH and Node package checking.

Fri Aug 31 14:06:45 2018 - [info] Checking SSH publickey authentication settings on the current master..

Fri Aug 31 14:06:45 2018 - [info] HealthCheck: SSH to 192.168.222.172 is reachable.

Fri Aug 31 14:06:45 2018 - [info]

192.168.222.172(192.168.222.172:3306) (current master)

+--192.168.222.173(192.168.222.173:3306)

+--192.168.222.174(192.168.222.174:3306)

Fri Aug 31 14:06:45 2018 - [info] Checking master_ip_failover_script status:

Fri Aug 31 14:06:45 2018 - [info] /usr/local/bin/master_ip_failover --command=status --ssh_user=root --orig_master_host=192.168.222.172 --orig_master_ip=192.168.222.172 --orig_master_port=3306

IN SCRIPT TEST====/sbin/ifconfig ens224:1 down==/sbin/ifconfig ens224:1 192.168.222.99/24===

Checking the Status of the script.. OK

Fri Aug 31 14:06:45 2018 - [info] OK.

Fri Aug 31 14:06:45 2018 - [warning] shutdown_script is not defined.

Fri Aug 31 14:06:45 2018 - [info] Set master ping interval 3 seconds.

Fri Aug 31 14:06:45 2018 - [info] Set secondary check script: masterha_secondary_check -s 192.168.222.172 -s 192.168.222.173 -s 192.168.222.174

Fri Aug 31 14:06:45 2018 - [info] Starting ping health check on 192.168.222.172(192.168.222.172:3306)..

Fri Aug 31 14:06:45 2018 - [info] Ping(SELECT) succeeded, waiting until MySQL doesn't respond.. --表示已开启监听

- session B

[root@node05 software]# masterha_check_status --conf=/etc/mha.cnf

mha (pid:25386) is running(0:PING_OK), master:192.168.222.172

[root@node05 software]#

4、启动cetus

- 启动前保证启动配置文件的权限为660

[root@node05 src]# chmod 660 /usr/local/cetus/conf/*

[root@node05 src]# ll /usr/local/cetus/conf/

total 28

-rw-rw---- 1 root root 1053 Aug 31 12:12 proxy.conf

-rw-rw---- 1 root root 1011 Aug 31 09:21 proxy.conf.example

-rw-rw---- 1 root root 1199 Aug 31 09:21 shard.conf.example

-rw-rw---- 1 root root 1030 Aug 31 09:21 sharding.json.example

-rw-rw---- 1 root root 189 Aug 31 12:04 users.json

-rw-rw---- 1 root root 198 Aug 31 09:21 users.json.example

-rw-rw---- 1 root ro

- 守护进程模式启动Cetus

- session A

[root@node01 software]# ll mha*

-rw-r--r-- 1 root root 118521 Aug 28 15:41 mha4mysql-manager-0.57.tar.gz

-rw-r--r-- 1 root root 54484 Aug 28 15:41 mha4mysql-node-0.57.tar.gz

[root@node05 src]# /usr/local/cetus/bin/cetus --defaults-file=/usr/local/cetus/conf/proxy.conf

[root@node05 src]# ps -ef |grep cetusb

root 26580 1 0 14:23 ? 00:00:00 /usr/local/cetus/libexec/cetus --defaults-file=/usr/local/cetus/conf/proxy.conf

root 26581 26580 0 14:23 ? 00:00:00 /usr/local/cetus/libexec/cetus --defaults-file=/usr/local/cetus/conf/proxy.conf

root 26624 2272 0 14:23 pts/0 00:00:00 grep --color=auto cetus

[root@node05 src]#

- 查看Cetus状态

- session B

[root@node05 src]# mysql -ugcdb -piforgot -h192.168.222.175 -P13306

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 1

Server version: 5.7.18-log (cetus) MySQL Community Server (GPL)

Copyright (c) 2000, 2017, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

(gcdb@192.168.222.175) 14:26:55 [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| proxy_heart_beat |

| sys |

| ttt |

+--------------------+

6 rows in set (0.00 sec)

(gcdb@192.168.222.175) 14:27:02 [(none)]> exit

Bye

[root@node05 src]# mysql -uadmin -padmin -h192.168.222.175 -P23306

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 1

Server version: 5.7 admin

Copyright (c) 2000, 2017, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

(admin@192.168.222.175) 14:27:41 [(none)]> select conn_details from backends;select * from backends;

+-------------+----------+------------+------------+-------------+

| backend_ndx | username | idle_conns | used_conns | total_conns |

+-------------+----------+------------+------------+-------------+

| 0 | gcdb | 100 | 0 | 100 |

| 1 | gcdb | 100 | 0 | 100 |

| 2 | gcdb | 100 | 0 | 100 |

+-------------+----------+------------+------------+-------------+

3 rows in set (0.00 sec)

+-------------+----------------------+-------+------+-------------+------+------------+------------+-------------+

| backend_ndx | address | state | type | slave delay | uuid | idle_conns | used_conns | total_conns |

+-------------+----------------------+-------+------+-------------+------+------------+------------+-------------+

| 1 | 192.168.222.172:3306 | up | rw | NULL | NULL | 100 | 0 | 100 | --172,读写

| 2 | 192.168.222.173:3306 | up | ro | 426 | NULL | 100 | 0 | 100 | --173,只读

| 3 | 192.168.222.174:3306 | up | ro | 427 | NULL | 100 | 0 | 100 | --174,只读

+-------------+----------------------+-------+------+-------------+------+------------+------------+-------------+

3 rows in set (0.00 sec)

(admin@192.168.222.175) 14:58:37 [(none)]> cetus;

+--------------------------+---------------------+

| Status | Value |

+--------------------------+---------------------+

| Cetus version | v1.0.0-44-g5b1bd43 |

| Startup time | 2018-08-31 14:23:07 |

| Loaded modules | proxy admin |

| Idle backend connections | 300 |

| Used backend connections | 0 |

| Client connections | 4 |

| Query count | 5 |

| QPS (1min, 5min, 15min) | 0.00, 0.01, 0.00 |

| TPS (1min, 5min, 15min) | 0.00, 0.00, 0.00 |

+--------------------------+---------------------+

9 rows in set (0.00 sec)

(admin@192.168.222.175) 14:58:39 [(none)]>

5、验证cetus读写分离功能

- session A

[root@node05 software]# mysql -ugcdb -piforgot -h192.168.222.175 -P13306

(gcdb@192.168.222.175) 15:18:37 [(none)]> select sleep(10) from ttt.t1;

- session B

(admin@192.168.222.175) 15:18:44 [(none)]> select conn_details from backends;select * from backends;

+-------------+----------+------------+------------+-------------+

| backend_ndx | username | idle_conns | used_conns | total_conns |

+-------------+----------+------------+------------+-------------+

| 0 | gcdb | 100 | 0 | 100 |

| 1 | gcdb | 100 | 0 | 100 |

| 2 | gcdb | 99 | 1 | 100 | --读负载到backend_ndx=3这台

+-------------+----------+------------+------------+-------------+

3 rows in set (0.00 sec)

+-------------+----------------------+-------+------+-------------+------+------------+------------+-------------+

| backend_ndx | address | state | type | slave delay | uuid | idle_conns | used_conns | total_conns |

+-------------+----------------------+-------+------+-------------+------+------------+------------+-------------+

| 1 | 192.168.222.172:3306 | up | rw | NULL | NULL | 100 | 0 | 100 |

| 2 | 192.168.222.173:3306 | up | ro | 403 | NULL | 100 | 0 | 100 |

| 3 | 192.168.222.174:3306 | up | ro | 404 | NULL | 99 | 1 | 100 | --读负载到192.168.222.174

+-------------+----------------------+-------+------+-------------+------+------------+------------+-------------+

3 rows in set (0.00 sec)

(admin@192.168.222.175) 15:18:46 [(none)]>

- session A

(gcdb@192.168.222.175) 15:18:37 [(none)]> select sleep(10) from ttt.t1;

+-----------+

| sleep(10) |

+-----------+

| 0 |

| 0 |

| 0 |

| 0 |

+-----------+

4 rows in set (40.00 sec)

(gcdb@192.168.222.175) 15:19:20 [(none)]>

#再次执行查询看后端负载到那个服务器

(gcdb@192.168.222.175) 15:19:20 [(none)]> (gcdb@192.168.222.175) 15:19:20 [(none)]> select sleep(10) from ttt.t1;

+-----------+

| sleep(10) |

+-----------+

| 0 |

| 0 |

| 0 |

| 0 |

+-----------+

4 rows in set (40.01 sec)

- session B

(admin@192.168.222.175) 15:19:22 [(none)]> select conn_details from backends;select * from backends;

+-------------+----------+------------+------------+-------------+

| backend_ndx | username | idle_conns | used_conns | total_conns |

+-------------+----------+------------+------------+-------------+

| 0 | gcdb | 100 | 0 | 100 |

| 1 | gcdb | 99 | 1 | 100 | --读负载到backend_ndx=2这台

| 2 | gcdb | 100 | 0 | 100 |

+-------------+----------+------------+------------+-------------+

3 rows in set (0.00 sec)

+-------------+----------------------+-------+------+-------------+------+------------+------------+-------------+

| backend_ndx | address | state | type | slave delay | uuid | idle_conns | used_conns | total_conns |

+-------------+----------------------+-------+------+-------------+------+------------+------------+-------------+

| 1 | 192.168.222.172:3306 | up | rw | NULL | NULL | 100 | 0 | 100 |

| 2 | 192.168.222.173:3306 | up | ro | 783 | NULL | 99 | 1 | 100 | --读负载到192.168.222.173

| 3 | 192.168.222.174:3306 | up | ro | 432 | NULL | 100 | 0 | 100 |

+-------------+----------------------+-------+------+-------------+------+------------+------------+-------------+

3 rows in set (0.00 sec)

- 验证简单读写

(gcdb@192.168.222.175) 15:23:11 [(none)]> select sleep(5);insert into ttt.t1(nums) values(6),(7),(8),(9),(10);

+----------+

| sleep(5) |

+----------+

| 0 |

+----------+

1 row in set (5.00 sec)

Query OK, 5 rows affected (0.05 sec)

Records: 5 Duplicates: 0 Warnings: 0

(gcdb@192.168.222.175) 15:30:35 [(none)]> select * from ttt.t1;

+----+------+

| id | nums |

+----+------+

| 1 | 1 |

| 2 | 2 |

| 3 | 100 |

| 5 | 5 |

| 6 | 6 |

| 7 | 7 |

| 8 | 8 |

| 9 | 9 |

| 10 | 10 |

+----+------+

9 rows in set (0.01 sec)

(gcdb@192.168.222.175) 15:31:02 [(none)]>

五 MHA和Cetu联动切换

1、添加mail告警功能

- 修改配置

[root@node05 software]# vim /etc/mail.rc

#最后追加以下内容

set from=xxxxxx@163.com #此处设置发件人的信息

set smtp=smtp.163.com #此处配置邮件服务地址,因为邮箱是163的,所以此处配置为smtp.163.com

set smtp-auth-user=xxxxxx@163.com #此处配置发件人邮箱地址

set smtp-auth-password=xxxxxx #切记此处配置abc是客户端授权码,不是发件人邮箱地址密码

set smtp-auth=login #邮件认证方式

- 启动sendmail测试

[root@node05 ~]# systemctl start sendmail

[root@node05 ~]# mail -s "status" xxxxxx@139.com < /etc/hosts

- 修改sendmail脚本

[root@node05 ~]# chmod 755 /usr/local/share/perl5/MHA/sendMail.sh

[root@node05 ~]# ll /usr/local/share/perl5/MHA/sendMail.sh

-rwxr-xr-x 1 root root 593 Sep 3 09:27 /usr/local/share/perl5/MHA/sendMail.sh

[root@node05 ~]# cat /usr/local/share/perl5/MHA/sendMail.sh

#!/bin/bash

## author : cch

## desc: sendmail

############ variable part ########################

conf=/etc/mha.cnf

if [ $# -ne 1 ];then

mailsubject="mha--failover--`date +%Y%m%d%H%M`"

else

mailsubject=$1

fi

############# main #########################

find_flag=`cat $conf|grep -v '^#'|grep "manager_workdir"|awk -F= '{print $2}'|wc -l`

if [ ${find_flag} -eq 1 ];then

manager_workdir=`cat $conf|grep -v '^#'|grep "manager_workdir"|awk -F= '{print $2}'|sed 's/ //g'`

fi

#修改邮箱地址

mail -s "${mailsubject}" xxxx@139.com < ${manager_workdir}/sendmail.txt

echo `date` >> ./sendMail.log

[root@node05 ~]#

2、切换主库

模拟主库故障

1、node02 会话

[root@node02 scripts]# systemctl stop mysql

[root@node02 scripts]# ps -ef |grep mysql |grep -v grep

root 26319 2289 0 Aug31 pts/0 00:00:00 mysql -uroot -px xxxxx

[root@node02 scripts]#

2、node05 会话

切换前cetus中查看读写状态

- session B

(admin@192.168.222.175) 10:04:01 [(none)]> select * from backends;

+-------------+----------------------+-------+------+-------------+------+------------+------------+-------------+

| backend_ndx | address | state | type | slave delay | uuid | idle_conns | used_conns | total_conns |

+-------------+----------------------+-------+------+-------------+------+------------+------------+-------------+

| 1 | 192.168.222.172:3306 | up | rw | NULL | NULL | 100 | 0 | 100 |

| 2 | 192.168.222.173:3306 | up | ro | 423 | NULL | 100 | 0 | 100 |

| 3 | 192.168.222.174:3306 | up | ro | 423 | NULL | 100 | 0 | 100 |

+-------------+----------------------+-------+------+-------------+------+------------+------------+-------------+

3 rows in set (0.00 sec)

(admin@192.168.222.175) 10:10:44 [(none)]>

- session A

[root@node05 ~]# tailf /var/log/masterha/mha.log

Mon Sep 3 10:06:24 2018 - [warning] Got error on MySQL select ping: 2013 (Lost connection to MySQL server during query)

Mon Sep 3 10:06:24 2018 - [info] Executing secondary network check script: masterha_secondary_check -s 192.168.222.173 -s 192.168.222.174 --user=root --master_host=192.168.222.172 --master_ip=192.168.222.172 --master_port=3306 --master_user=gcdb --master_password=iforgot --ping_type=SELECT

Mon Sep 3 10:06:24 2018 - [info] Executing SSH check script: exit 0

Mon Sep 3 10:06:24 2018 - [info] HealthCheck: SSH to 192.168.222.172 is reachable.

Monitoring server 192.168.222.173 is reachable, Master is not reachable from 192.168.222.173. OK.

Monitoring server 192.168.222.174 is reachable, Master is not reachable from 192.168.222.174. OK.

Mon Sep 3 10:06:24 2018 - [info] Master is not reachable from all other monitoring servers. Failover should start.

Mon Sep 3 10:06:27 2018 - [warning] Got error on MySQL connect: 2003 (Can't connect to MySQL server on '192.168.222.172' (111))

Mon Sep 3 10:06:27 2018 - [warning] Connection failed 2 time(s)..

Mon Sep 3 10:06:30 2018 - [warning] Got error on MySQL connect: 2003 (Can't connect to MySQL server on '192.168.222.172' (111))

Mon Sep 3 10:06:30 2018 - [warning] Connection failed 3 time(s)..

Mon Sep 3 10:06:33 2018 - [warning] Got error on MySQL connect: 2003 (Can't connect to MySQL server on '192.168.222.172' (111))

Mon Sep 3 10:06:33 2018 - [warning] Connection failed 4 time(s)..

Mon Sep 3 10:06:33 2018 - [warning] Master is not reachable from health checker!

Mon Sep 3 10:06:33 2018 - [warning] Master 192.168.222.172(192.168.222.172:3306) is not reachable!

Mon Sep 3 10:06:33 2018 - [warning] SSH is reachable.

Mon Sep 3 10:06:33 2018 - [info] Connecting to a master server failed. Reading configuration file /etc/masterha_default.cnf and /etc/mha.cnf again, and trying to connect to all servers to check server status..

Mon Sep 3 10:06:33 2018 - [info] Reading default configuration from /etc/masterha_default.cnf..

Mon Sep 3 10:06:33 2018 - [info] Reading application default configuration from /etc/mha.cnf..

Mon Sep 3 10:06:33 2018 - [info] Reading server configuration from /etc/mha.cnf..

Mon Sep 3 10:06:34 2018 - [info] GTID failover mode = 1

Mon Sep 3 10:06:34 2018 - [info] Dead Servers:

Mon Sep 3 10:06:34 2018 - [info] 192.168.222.172(192.168.222.172:3306)

Mon Sep 3 10:06:34 2018 - [info] Alive Servers:

Mon Sep 3 10:06:34 2018 - [info] 192.168.222.173(192.168.222.173:3306)

Mon Sep 3 10:06:34 2018 - [info] 192.168.222.174(192.168.222.174:3306)

Mon Sep 3 10:06:34 2018 - [info] Alive Slaves:

Mon Sep 3 10:06:34 2018 - [info] 192.168.222.173(192.168.222.173:3306) Version=5.7.18-log (oldest major version between slaves) log-bin:enabled

Mon Sep 3 10:06:34 2018 - [info] GTID ON

Mon Sep 3 10:06:34 2018 - [info] Replicating from 192.168.222.172(192.168.222.172:3306)

Mon Sep 3 10:06:34 2018 - [info] 192.168.222.174(192.168.222.174:3306) Version=5.7.18-log (oldest major version between slaves) log-bin:enabled

Mon Sep 3 10:06:34 2018 - [info] GTID ON

Mon Sep 3 10:06:34 2018 - [info] Replicating from 192.168.222.172(192.168.222.172:3306)

Mon Sep 3 10:06:34 2018 - [info] Checking slave configurations..

Mon Sep 3 10:06:34 2018 - [info] read_only=1 is not set on slave 192.168.222.173(192.168.222.173:3306).

Mon Sep 3 10:06:34 2018 - [info] read_only=1 is not set on slave 192.168.222.174(192.168.222.174:3306).

Mon Sep 3 10:06:34 2018 - [info] Checking replication filtering settings..

Mon Sep 3 10:06:34 2018 - [info] Replication filtering check ok.

Mon Sep 3 10:06:34 2018 - [info] Master is down!

Mon Sep 3 10:06:34 2018 - [info] Terminating monitoring script.

Mon Sep 3 10:06:34 2018 - [info] Got exit code 20 (Master dead).

Mon Sep 3 10:06:34 2018 - [info] MHA::MasterFailover version 0.56.

Mon Sep 3 10:06:34 2018 - [info] g_interactive: 0

Mon Sep 3 10:06:34 2018 - [info] g_logfile: /var/log/masterha/mha.log

Mon Sep 3 10:06:34 2018 - [info] g_global_config_file: /etc/masterha_default.cnf

Mon Sep 3 10:06:34 2018 - [info] g_config_file: /etc/mha.cnf

Mon Sep 3 10:06:34 2018 - [info] g_proxy_config_file: /etc/cetus.cnf

Mon Sep 3 10:06:34 2018 - [info] Starting master failover.

Mon Sep 3 10:06:34 2018 - [info]

Mon Sep 3 10:06:34 2018 - [info] * Phase 1: Configuration Check Phase..

Mon Sep 3 10:06:34 2018 - [info]

Mon Sep 3 10:06:34 2018 - [info] -------------ManagerUtil::check_node_version-----------

Mon Sep 3 10:06:35 2018 - [info] GTID failover mode = 1

Mon Sep 3 10:06:35 2018 - [info] Dead Servers:

Mon Sep 3 10:06:35 2018 - [info] 192.168.222.172(192.168.222.172:3306)

Mon Sep 3 10:06:35 2018 - [info] Checking master reachability via MySQL(double check)...

Mon Sep 3 10:06:35 2018 - [info] ok.

Mon Sep 3 10:06:35 2018 - [info] Dead server: 192.168.222.172(192.168.222.172:3306)

Mon Sep 3 10:06:35 2018 - [info] deadaddr: 192.168.222.172:3306

Mon Sep 3 10:06:35 2018 - [info] Reading default configuration from /etc/masterha_default.cnf..

Mon Sep 3 10:06:35 2018 - [info] Reading application default configuration from /etc/mha.cnf..

Mon Sep 3 10:06:35 2018 - [info] Reading server configuration from /etc/mha.cnf..

Mon Sep 3 10:06:35 2018 - [info] [ProxyManager::setproxy] proxy_conf: /etc/cetus.cnf

Mon Sep 3 10:06:35 2018 - [info] type : failover

Mon Sep 3 10:06:35 2018 - [info] status : down

Mon Sep 3 10:06:35 2018 - [info] addr : 192.168.222.172:3306

Mon Sep 3 10:06:35 2018 - [info] dbtype : ro

Mon Sep 3 10:06:35 2018 - [info] Reading default configuration from /etc/masterha_default.cnf..

Mon Sep 3 10:06:35 2018 - [info] Reading application default configuration from /etc/mha.cnf..

Mon Sep 3 10:06:35 2018 - [info] Reading server configuration from /etc/mha.cnf..

Mon Sep 3 10:06:35 2018 - [info] [ProxyManager::setdb] proxy_conf: /etc/cetus.cnf

Mon Sep 3 10:06:35 2018 - [info] ** 192.168.222.175:23306 started, pid: 5144

Mon Sep 3 10:06:35 2018 - [info] exec command: /usr/bin/mysql -h192.168.222.175 -uadmin -P23306 -padmin -e " update backends set state='down' , type='ro' where address='192.168.222.172:3306';"

Mon Sep 3 10:06:35 2018 - [info] ......setdb.....

Mon Sep 3 10:06:35 2018 - [info] Alive Servers:

Mon Sep 3 10:06:35 2018 - [info] 192.168.222.173(192.168.222.173:3306)

Mon Sep 3 10:06:35 2018 - [info] 192.168.222.174(192.168.222.174:3306)

Mon Sep 3 10:06:35 2018 - [info] Alive Slaves:

Mon Sep 3 10:06:35 2018 - [info] 192.168.222.173(192.168.222.173:3306) Version=5.7.18-log (oldest major version between slaves) log-bin:enabled

Mon Sep 3 10:06:35 2018 - [info] GTID ON

Mon Sep 3 10:06:35 2018 - [info] Replicating from 192.168.222.172(192.168.222.172:3306)

Mon Sep 3 10:06:35 2018 - [info] 192.168.222.174(192.168.222.174:3306) Version=5.7.18-log (oldest major version between slaves) log-bin:enabled

Mon Sep 3 10:06:35 2018 - [info] GTID ON

Mon Sep 3 10:06:35 2018 - [info] Replicating from 192.168.222.172(192.168.222.172:3306)

Mon Sep 3 10:06:35 2018 - [info] Starting GTID based failover.

Mon Sep 3 10:06:35 2018 - [info]

Mon Sep 3 10:06:35 2018 - [info] ** Phase 1: Configuration Check Phase completed.

Mon Sep 3 10:06:35 2018 - [info]

Mon Sep 3 10:06:35 2018 - [info] * Phase 2: Dead Master Shutdown Phase..

Mon Sep 3 10:06:35 2018 - [info]

Mon Sep 3 10:06:35 2018 - [info] Forcing shutdown so that applications never connect to the current master..

Mon Sep 3 10:06:35 2018 - [info] Executing master IP deactivation script:

Mon Sep 3 10:06:35 2018 - [info] /usr/local/bin/master_ip_failover --orig_master_host=192.168.222.172 --orig_master_ip=192.168.222.172 --orig_master_port=3306 --command=stopssh --ssh_user=root

IN SCRIPT TEST====/sbin/ifconfig ens224:1 down==/sbin/ifconfig ens224:1 192.168.222.99/24===

Disabling the VIP on old master: 192.168.222.172

Mon Sep 3 10:06:35 2018 - [info] done.

Mon Sep 3 10:06:35 2018 - [warning] shutdown_script is not set. Skipping explicit shutting down of the dead master.

Mon Sep 3 10:06:35 2018 - [info] * Phase 2: Dead Master Shutdown Phase completed.

Mon Sep 3 10:06:35 2018 - [info]

Mon Sep 3 10:06:35 2018 - [info] * Phase 3: Master Recovery Phase..

Mon Sep 3 10:06:35 2018 - [info]

Mon Sep 3 10:06:35 2018 - [info] * Phase 3.1: Getting Latest Slaves Phase..

Mon Sep 3 10:06:35 2018 - [info]

Mon Sep 3 10:06:35 2018 - [info] The latest binary log file/position on all slaves is binlog.000006:3477689

Mon Sep 3 10:06:35 2018 - [info] Retrieved Gtid Set: d3f2e80d-acf1-11e8-869e-005056abaf95:8953-15333

Mon Sep 3 10:06:35 2018 - [info] Latest slaves (Slaves that received relay log files to the latest):

Mon Sep 3 10:06:35 2018 - [info] 192.168.222.173(192.168.222.173:3306) Version=5.7.18-log (oldest major version between slaves) log-bin:enabled

Mon Sep 3 10:06:35 2018 - [info] GTID ON

Mon Sep 3 10:06:35 2018 - [info] Replicating from 192.168.222.172(192.168.222.172:3306)

Mon Sep 3 10:06:35 2018 - [info] 192.168.222.174(192.168.222.174:3306) Version=5.7.18-log (oldest major version between slaves) log-bin:enabled

Mon Sep 3 10:06:35 2018 - [info] GTID ON

Mon Sep 3 10:06:35 2018 - [info] Replicating from 192.168.222.172(192.168.222.172:3306)

Mon Sep 3 10:06:35 2018 - [info] The oldest binary log file/position on all slaves is binlog.000006:3477689

Mon Sep 3 10:06:35 2018 - [info] Retrieved Gtid Set: d3f2e80d-acf1-11e8-869e-005056abaf95:8953-15333

Mon Sep 3 10:06:35 2018 - [info] Oldest slaves:

Mon Sep 3 10:06:35 2018 - [info] 192.168.222.173(192.168.222.173:3306) Version=5.7.18-log (oldest major version between slaves) log-bin:enabled

Mon Sep 3 10:06:35 2018 - [info] GTID ON

Mon Sep 3 10:06:35 2018 - [info] Replicating from 192.168.222.172(192.168.222.172:3306)

Mon Sep 3 10:06:35 2018 - [info] 192.168.222.174(192.168.222.174:3306) Version=5.7.18-log (oldest major version between slaves) log-bin:enabled

Mon Sep 3 10:06:35 2018 - [info] GTID ON

Mon Sep 3 10:06:35 2018 - [info] Replicating from 192.168.222.172(192.168.222.172:3306)

Mon Sep 3 10:06:35 2018 - [info]

Mon Sep 3 10:06:35 2018 - [info] * Phase 3.3: Determining New Master Phase..

Mon Sep 3 10:06:35 2018 - [info]

Mon Sep 3 10:06:35 2018 - [info] Searching new master from slaves..

Mon Sep 3 10:06:35 2018 - [info] Candidate masters from the configuration file:

Mon Sep 3 10:06:35 2018 - [info] Non-candidate masters:

Mon Sep 3 10:06:35 2018 - [info] New master is 192.168.222.173(192.168.222.173:3306)

Mon Sep 3 10:06:35 2018 - [info] Starting master failover..

Mon Sep 3 10:06:35 2018 - [info]

From:

192.168.222.172(192.168.222.172:3306) (current master)

+--192.168.222.173(192.168.222.173:3306)

+--192.168.222.174(192.168.222.174:3306)

To:

192.168.222.173(192.168.222.173:3306) (new master)

+--192.168.222.174(192.168.222.174:3306)

Mon Sep 3 10:06:35 2018 - [info]

Mon Sep 3 10:06:35 2018 - [info] * Phase 3.3: New Master Recovery Phase..

Mon Sep 3 10:06:35 2018 - [info]

Mon Sep 3 10:06:35 2018 - [info] Waiting all logs to be applied..

Mon Sep 3 10:06:35 2018 - [info] done.

Mon Sep 3 10:06:35 2018 - [info] Getting new master's binlog name and position..

Mon Sep 3 10:06:35 2018 - [info] binlog.000003:2361204

Mon Sep 3 10:06:35 2018 - [info] All other slaves should start replication from here. Statement should be: CHANGE MASTER TO MASTER_HOST='192.168.222.173', MASTER_PORT=3306, MASTER_AUTO_POSITION=1, MASTER_USER='repl', MASTER_PASSWORD='xxx';

Mon Sep 3 10:06:35 2018 - [info] Master Recovery succeeded. File:Pos:Exec_Gtid_Set: binlog.000003, 2361204, 75ee239c-aaa5-11e8-84db-005056aba7b5:1-2795,

d3f2e80d-acf1-11e8-869e-005056abaf95:1-15333

Mon Sep 3 10:06:35 2018 - [info] Executing master IP activate script:

Mon Sep 3 10:06:35 2018 - [info] /usr/local/bin/master_ip_failover --command=start --ssh_user=root --orig_master_host=192.168.222.172 --orig_master_ip=192.168.222.172 --orig_master_port=3306 --new_master_host=192.168.222.173 --new_master_ip=192.168.222.173 --new_master_port=3306 --new_master_user='gcdb' --new_master_password='iforgot'

Unknown option: new_master_user

Unknown option: new_master_password

IN SCRIPT TEST====/sbin/ifconfig ens224:1 down==/sbin/ifconfig ens224:1 192.168.222.99/24===

Enabling the VIP - 192.168.222.99/24 on the new master - 192.168.222.173

Mon Sep 3 10:06:36 2018 - [info] OK.

Mon Sep 3 10:06:36 2018 - [info] ** Finished master recovery successfully.

Mon Sep 3 10:06:36 2018 - [info] MHA::Server=HASH(0x274df80)->get_hostinfo()

Mon Sep 3 10:06:36 2018 - [info] before newmaster_addr: 192.168.222.173(192.168.222.173:3306)

Mon Sep 3 10:06:36 2018 - [info] Reading default configuration from /etc/masterha_default.cnf..

Mon Sep 3 10:06:36 2018 - [info] Reading application default configuration from /etc/mha.cnf..

Mon Sep 3 10:06:36 2018 - [info] Reading server configuration from /etc/mha.cnf..

Mon Sep 3 10:06:36 2018 - [info] [ProxyManager::setproxy] proxy_conf: /etc/cetus.cnf

Mon Sep 3 10:06:36 2018 - [info] type : setmaster

Mon Sep 3 10:06:36 2018 - [info] status : up

Mon Sep 3 10:06:36 2018 - [info] addr : 192.168.222.173:3306

Mon Sep 3 10:06:36 2018 - [info] dbtype : rw

Mon Sep 3 10:06:36 2018 - [info] Reading default configuration from /etc/masterha_default.cnf..

Mon Sep 3 10:06:36 2018 - [info] Reading application default configuration from /etc/mha.cnf..

Mon Sep 3 10:06:36 2018 - [info] Reading server configuration from /etc/mha.cnf..

Mon Sep 3 10:06:36 2018 - [info] [ProxyManager::setdb] proxy_conf: /etc/cetus.cnf

Mon Sep 3 10:06:36 2018 - [info] ** 192.168.222.175:23306 started, pid: 5157

Mon Sep 3 10:06:36 2018 - [info] exec command: /usr/bin/mysql -h192.168.222.175 -uadmin -P23306 -padmin -e " update backends set state='up' , type='rw' where address='192.168.222.173:3306';"

Mon Sep 3 10:06:36 2018 - [info] ......setdb.....

Mon Sep 3 10:06:36 2018 - [info] * Phase 3: Master Recovery Phase completed.

Mon Sep 3 10:06:36 2018 - [info]

Mon Sep 3 10:06:36 2018 - [info] * Phase 4: Slaves Recovery Phase..

Mon Sep 3 10:06:36 2018 - [info]

Mon Sep 3 10:06:36 2018 - [info]

Mon Sep 3 10:06:36 2018 - [info] * Phase 4.1: Starting Slaves in parallel..

Mon Sep 3 10:06:36 2018 - [info]

Mon Sep 3 10:06:36 2018 - [info] -- Slave recovery on host 192.168.222.174(192.168.222.174:3306) started, pid: 5159. Check tmp log /var/log/masterha/192.168.222.174_3306_20180903100634.log if it takes time..

Mon Sep 3 10:06:37 2018 - [info]

Mon Sep 3 10:06:37 2018 - [info] Log messages from 192.168.222.174 ...

Mon Sep 3 10:06:37 2018 - [info]

Mon Sep 3 10:06:36 2018 - [info] Resetting slave 192.168.222.174(192.168.222.174:3306) and starting replication from the new master 192.168.222.173(192.168.222.173:3306)..

Mon Sep 3 10:06:36 2018 - [info] Executed CHANGE MASTER.

Mon Sep 3 10:06:36 2018 - [info] Slave started.

Mon Sep 3 10:06:36 2018 - [info] gtid_wait(75ee239c-aaa5-11e8-84db-005056aba7b5:1-2795,

d3f2e80d-acf1-11e8-869e-005056abaf95:1-15333) completed on 192.168.222.174(192.168.222.174:3306). Executed 0 events.

Mon Sep 3 10:06:37 2018 - [info] End of log messages from 192.168.222.174.

Mon Sep 3 10:06:37 2018 - [info] -- Slave on host 192.168.222.174(192.168.222.174:3306) started.

Mon Sep 3 10:06:37 2018 - [info] All new slave servers recovered successfully.

Mon Sep 3 10:06:37 2018 - [info]

Mon Sep 3 10:06:37 2018 - [info] * Phase 5: New master cleanup phase..

Mon Sep 3 10:06:37 2018 - [info]

Mon Sep 3 10:06:37 2018 - [info] Resetting slave info on the new master..

Mon Sep 3 10:06:37 2018 - [info] 192.168.222.173: Resetting slave info succeeded.

Mon Sep 3 10:06:37 2018 - [info] Master failover to 192.168.222.173(192.168.222.173:3306) completed successfully.

Mon Sep 3 10:06:37 2018 - [info] Deleted server1 entry from /etc/mha.cnf .

Mon Sep 3 10:06:37 2018 - [info]

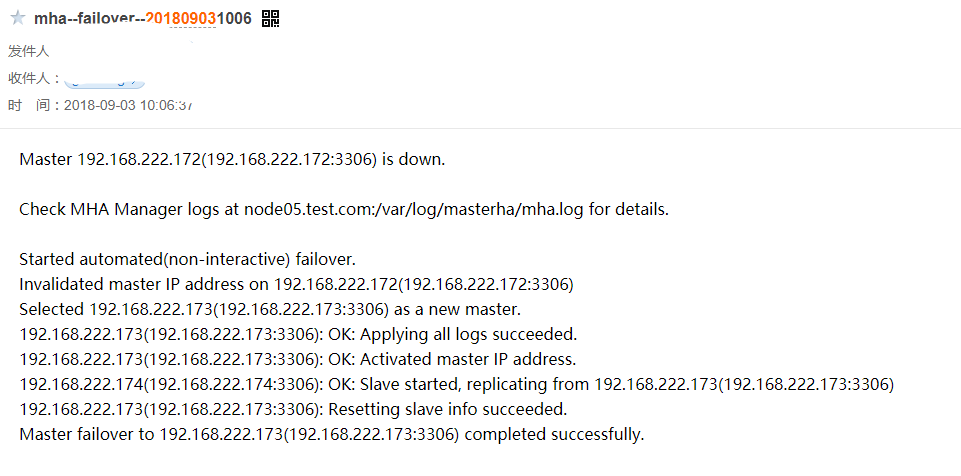

----- Failover Report -----

mha: MySQL Master failover 192.168.222.172(192.168.222.172:3306) to 192.168.222.173(192.168.222.173:3306) succeeded

Master 192.168.222.172(192.168.222.172:3306) is down.

Check MHA Manager logs at node05.test.com:/var/log/masterha/mha.log for details.

Started automated(non-interactive) failover.

Invalidated master IP address on 192.168.222.172(192.168.222.172:3306)

Selected 192.168.222.173(192.168.222.173:3306) as a new master.

192.168.222.173(192.168.222.173:3306): OK: Applying all logs succeeded.

192.168.222.173(192.168.222.173:3306): OK: Activated master IP address.

192.168.222.174(192.168.222.174:3306): OK: Slave started, replicating from 192.168.222.173(192.168.222.173:3306)

192.168.222.173(192.168.222.173:3306): Resetting slave info succeeded.

Master failover to 192.168.222.173(192.168.222.173:3306) completed successfully.

Mon Sep 3 10:06:37 2018 - [info] g_workdir: /var/log/masterha

Mon Sep 3 10:06:37 2018 - [info] sendmail: /var/log/masterha/sendmail.txt

Mon Sep 3 10:06:37 2018 - [info] Sending mail..

- session B

切换后Cetus中查看读写状态

(admin@192.168.222.175) 10:04:01 [(none)]> select * from backends;

+-------------+----------------------+-------+------+-------------+------+------------+------------+-------------+

| backend_ndx | address | state | type | slave delay | uuid | idle_conns | used_conns | total_conns |

+-------------+----------------------+-------+------+-------------+------+------------+------------+-------------+

| 1 | 192.168.222.172:3306 | down | ro | 15177 | NULL | 0 | 0 | 0 | --172,状态已经down

| 2 | 192.168.222.173:3306 | up | rw | NULL | NULL | 100 | 0 | 100 | --173,读写

| 3 | 192.168.222.174:3306 | up | ro | 402 | NULL | 100 | 0 | 100 |

+-------------+----------------------+-------+------+-------------+------+------------+------------+-------------+

3 rows in set (0.00 sec)

(admin@192.168.222.175) 10:10:44 [(none)]>

- session A

切换后mha.cnf 配置里面原主库会被清除掉

[root@node05 software]# cat /etc/mha.cnf

[server default]

manager_log=/var/log/masterha/mha.log

manager_workdir=/var/log/masterha

[server2]

hostname=192.168.222.173

master_binlog_dir=/r2/mysqldata

port=3306

[server3]

hostname=192.168.222.174

master_binlog_dir=/r2/mysqldata

port=3306

[root@node05 software]# ll /var/log/masterha/mha.failover.complete --mha成功执行切换,会生成该文件,如果重新构建MHA把这个文件删除掉

-rw-r--r-- 1 root root 0 Sep 3 10:06 /var/log/masterha/mha.failover.complete

3 、邮件告警

六 总结

1 、目录的树形结构

[root@node05 software]# tree -fp /usr/local/share/perl5/MHA/

/usr/local/share/perl5/MHA

├── [-r--r--r--] /usr/local/share/perl5/MHA/BinlogHeaderParser.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/BinlogManager.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/BinlogPosFinderElp.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/BinlogPosFinder.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/BinlogPosFinderXid.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/BinlogPosFindManager.pm

├── [-rw-r--r--] /usr/local/share/perl5/MHA/cetus.cnf

├── [-r--r--r--] /usr/local/share/perl5/MHA/Config.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/DBHelper.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/FileStatus.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/HealthCheck.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/ManagerAdmin.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/ManagerAdminWrapper.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/ManagerConst.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/ManagerUtil.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/MasterFailover.pm

├── [-rw-r--r--] /usr/local/share/perl5/MHA/masterha_secondary_check

├── [-r--r--r--] /usr/local/share/perl5/MHA/MasterMonitor.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/MasterRotate.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/NodeConst.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/NodeUtil.pm

├── [-rw-r--r--] /usr/local/share/perl5/MHA/ProxyManager.pm

├── [-rw-r--r--] /usr/local/share/perl5/MHA/sample.cnf

├── [-rwxr-xr-x] /usr/local/share/perl5/MHA/sendMail.sh

├── [-rw-r--r--] /usr/local/share/perl5/MHA/sendmail.txt

├── [-r--r--r--] /usr/local/share/perl5/MHA/ServerManager.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/Server.pm

├── [-r--r--r--] /usr/local/share/perl5/MHA/SlaveUtil.pm

└── [-r--r--r--] /usr/local/share/perl5/MHA/SSHCheck.pm

0 directories, 29 files

[root@node05 software]# tree -fp /var/log/masterha/

/var/log/masterha

├── [-rw-r--r--] /var/log/masterha/mha.failover.complete

├── [-rw-r--r--] /var/log/masterha/mha.log

└── [-rw-r--r--] /var/log/masterha/sendmail.txt

0 directories, 3 files

[root@node05 software]# tree -fp /etc/ |grep .cnf

├── [-rw-r--r--] /etc/cetus.cnf

├── [-rw-r--r--] /etc/masterha_default.cnf

├── [-rw-r--r--] /etc/mha.cnf

[root@node05 software]# tree -fp /usr/local/cetus/

[root@node05 software]# tree -fp /usr/local/cetus/conf/

/usr/local/cetus/conf

├── [-rw-rw----] /usr/local/cetus/conf/proxy.conf

├── [-rw-rw----] /usr/local/cetus/conf/proxy.conf.example

├── [-rw-rw----] /usr/local/cetus/conf/shard.conf.example

├── [-rw-rw----] /usr/local/cetus/conf/sharding.json.example

├── [-rw-rw----] /usr/local/cetus/conf/users.json

├── [-rw-rw----] /usr/local/cetus/conf/users.json.example

└── [-rw-rw----] /usr/local/cetus/conf/variables.json.example

2、mysql客户端问题

[root@node05 software]# grep -w /usr/bin/mysql /usr/local/bin/*

/usr/local/bin/masterha_secondary_check: . "/usr/bin/mysql -u$master_user -p$master_password -h$master_host -P$master_port "

See online reference (http://code.google.com/p/mysql-master-ha/wiki/Parameters#secondary_check_script) for details.

See online reference (http://code.google.com/p/mysql-master-ha/wiki/Parameters#secondary_check_script) for details.

[root@node05 software]# ln -s /usr/local/mysql/bin/mysql /usr/bin/mysql --做链接来解决

[root@node05 software]# which mysql

/usr/bin/mysql

3、master_ip_failover failed

Fri Aug 31 13:47:40 2018 - [info] /usr/local/bin/master_ip_failover --command=status --ssh_user=root --orig_master_host=192.168.222.172 --orig_master_ip=192.168.222.172 --orig_master_port=3306

Bareword "FIXME_xxx" not allowed while "strict subs" in use at /usr/local/bin/master_ip_failover line 93.

Execution of /usr/local/bin/master_ip_failover aborted due to compilation errors.

Fri Aug 31 13:47:40 2018 - [error][/usr/local/share/perl5/MHA/MasterMonitor.pm, ln267] Failed to get master_ip_failover_script status with return code 255:0.

Fri Aug 31 13:47:40 2018 - [error][/usr/local/share/perl5/MHA/MasterMonitor.pm, ln485] Error happened on checking configurations. at /usr/local/bin/masterha_check_repl line 48.

Fri Aug 31 13:47:40 2018 - [error][/usr/local/share/perl5/MHA/MasterMonitor.pm, ln585] Error happened on monitoring servers.

Fri Aug 31 13:47:40 2018 - [info] Got exit code 1 (Not master dead).

master_ip_failover里面添加切换vip

#添加切换vip

my $vip = '192.168.222.99/24';

my $key = '1';

my $ssh_start_vip = "/sbin/ifconfig ens224:$key $vip";

my $ssh_stop_vip = "/sbin/ifconfig ens224:$key down";

$ssh_user = "root";

4、多网卡cetus配置问题

[root@node05 software]# cat /etc/cetus.cnf

middle_ipport=192.168.222.175:23306 --要配置同一网段,且与proxy.conf文件里面admin-address的端口和ip相对应

5、重新构建MHA

- 删除mha.failover.complete文件

- mha.cnf 把主机信息重新添加

- masterha_default.cnf里面修改 masterha_secondary_check -s 连接测试

030:Cetus中间件和MHA读写分离的更多相关文章

- MySQL中间件之ProxySQL_读写分离/查询重写配置

MySQL中间件之ProxySQL_读写分离/查询重写配置 Posted on 2016-12-25 by mark blue, mark Leave a comment MySQL 1.闲扯几句 读 ...

- Mysql 高可用(MHA)-读写分离(Atlas)-分布式架构(Mycat)

Mysql 高可用(MHA)-读写分离(Atlas) 1. 搭建主从复制(一主两从) 1.1 准备环境 1 主库:10.0.0.51/db01 2 从库:10.0.0.52/db02,10.0.0.5 ...

- Mycat数据库中间件对Mysql读写分离和分库分表配置

Mycat是一个开源的分布式数据库系统,不同于oracle和mysql,Mycat并没有存储引擎,但是Mycat实现了mysql协议,前段用户可以把它当做一个Proxy.其核心功能是分表分库,即将一个 ...

- 数据库中间件之mycat读写分离

mycat核心概念 逻辑库 mycat中定义.管理的数据库 逻辑表 逻辑库中包含的需分库分表存储的表 datanode 数据节点(分片节点),逻辑表分片的存放节点 datahost 数据主机(节点主机 ...

- 基于MYCAT中间件实现MYSQL读写分离

基于mycat实现mysql读写分离 完成主从复制的配置 /* 主节点:192.168.47.101 从节点:192.168.47.102 */ /*mycat为同一网段客户端*/ /* 修改主节点基 ...

- MaxScale中间件部署数据库读写分离

操作系统:CentOS7 (Core) 数据库:MariaDB-10.2.6-linux-glibc_214-x86_64 MaxScale服务器:192.168.40.134 主服务器:192.16 ...

- mysql高可用架构之MHA,haproxy实现读写分离详解

MySQL高可用架构之MHA 一.运维人员需要掌握的MySQL技术: 1.基本SQL语句 2.基本的管理[库表数据的管理 权限的管理] 3.容灾 保证数据不丢失. 二.工作中MySQ ...

- 从零开发分布式数据库中间件 二、构建MyBatis的读写分离数据库中间件

在上一节 从零开发分布式数据库中间件 一.读写分离的数据库中间件 中,我们讲了如何通过ThreadLocal来指定每次访问的数据源,并通过jdbc的连接方式来切换数据源,那么这一节我们使用我们常用的数 ...

- Mysql - 读写分离与读负载均衡之Maxscale

一.概述 常见的高可用方案如MMM和MHA等都将重点放在主库上,一旦主库出现故障,通过这些方案能将主库故障进行转移. 本文将给大家介绍一款由mariadb公司出品的中间件Maxscale,该中间件能实 ...

随机推荐

- 【异常记录(六)】vs文件乱码:文件加载,使用Unicode(UTF-8)编码加载文件xxx时,有些字节已用Unicode替换字符替换。保存该文件将不会保留原始文件内容。

VS2013偶遇这种情况,页面汉字编码出现乱码. .... 按照网上查到的: 工具>选项>文本编辑器> 勾选了 然并卵,还是乱码... 其实炒鸡简单 用记事本打开另存为,选择 ...

- 线程池ThreadPoolExecutor里面4种拒绝策略

ThreadPoolExecutor类实现了ExecutorService接口和Executor接口,可以设置线程池corePoolSize,最大线程池大小,AliveTime,拒绝策略等.常用构造方 ...

- python 作用域,命名空间

def scope_test(): def do_local(): spam = "local spam" def do_nonlocal(): nonlocal spam spa ...

- [原][译][osgearth]样式表style中参数总结(OE官方文档翻译)

几何Geometry 高度Altitude 挤压Extrusion 图标Icon 模型Model 渲染Render 皮肤Skin 文本Text 覆盖Coverage 提示: 在SDK中,样式表的命名空 ...

- node 常用指令 node 扩展链接

node -v node 版本 npm -v npm版本号,npm是在安装nodejs时一同安装的nodejs包管理器 (注册.安装模块,和小乌龟有点像) npm list 当 ...

- vue 脚手架(二,项目依赖说明 package.json)

本文以转移至本人的个人博客,请多多关注! 本文以转移至本人的个人博客,请多多关注! 本文以转移至本人的个人博客,请多多关注! 本文以转移至本人的个人博客,请多多关注! 继续上一篇, 上一篇讲了 vue ...

- Java Spring-AOP的概述

2017-11-08 19:31:23 AOP为Aspect Oriented Programming的缩写,意为:面向切面编程,通过预编译方式和运行期动态代理实现程序功能的统一维护的一种技术.AOP ...

- hdu 3264 09 宁波 现场 E - Open-air shopping malls 计算几何 二分 圆相交面积 难度:1

Description The city of M is a famous shopping city and its open-air shopping malls are extremely at ...

- hdu 3689 杭州 10 现场 J - Infinite monkey theorem 概率dp kmp 难度:1

J - Infinite monkey theorem Time Limit:1000MS Memory Limit:32768KB 64bit IO Format:%I64d &am ...

- ADS1.2使用

ADS编译错误Error : A1163E: Unknown opcode ARM汇编指令不支持顶格写,否则不能识别,指令前加上空格即可. 使用for(;;;)//死循环,编译报错如下,说是该语句有错 ...