Stanford coursera Andrew Ng 机器学习课程第四周总结(附Exercise 3)

Introduction

Neural NetWork的由来

先考虑一个非线性分类,当特征数很少时,逻辑回归就可以完成了,但是当特征数变大时,高阶项将呈指数性增长,复杂度可想而知。如下图:对房屋进行高低档的分类,当特征值只有x1,x2,x3时,我们可以对它进行处理,分类。但是当特征数增长为x1,x2....x100时,分类器的效率就会很低了。

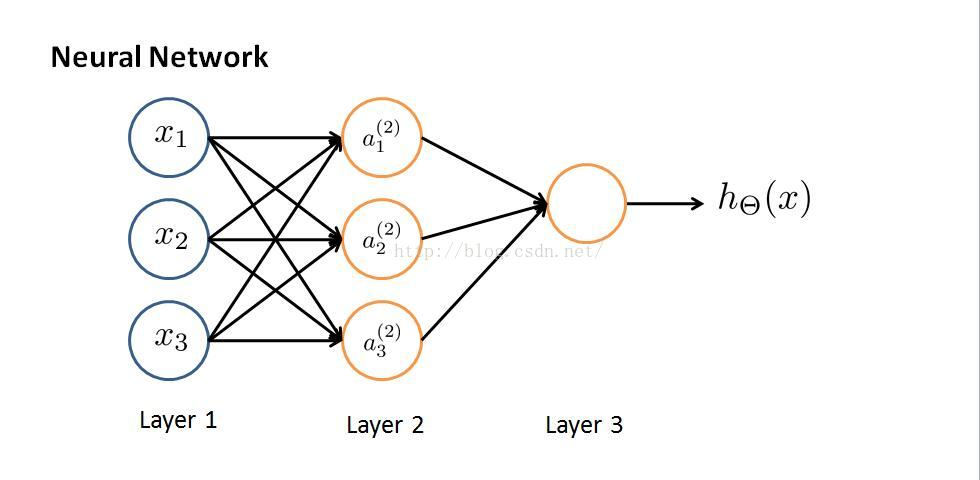

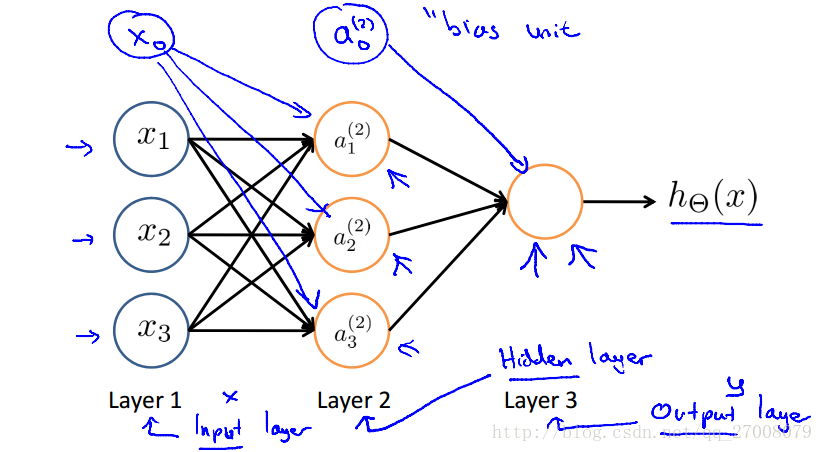

Neural NetWork模型

该图是最简单的神经网络,共有3层,输入层Layer1;隐藏层Layer2;输出层Layer3,每层都有多个激励函数ai(j).通过层与层之间的传递参数Θ得到最终的假设函数hΘ(x)。我们的目的是通过大量的输入样本x(作为第一层),训练层与层之间的传递参数(经常称为权重),使得假设函数尽可能的与实际输出值接近h(x)≈y(代价函数J尽可能的小)。

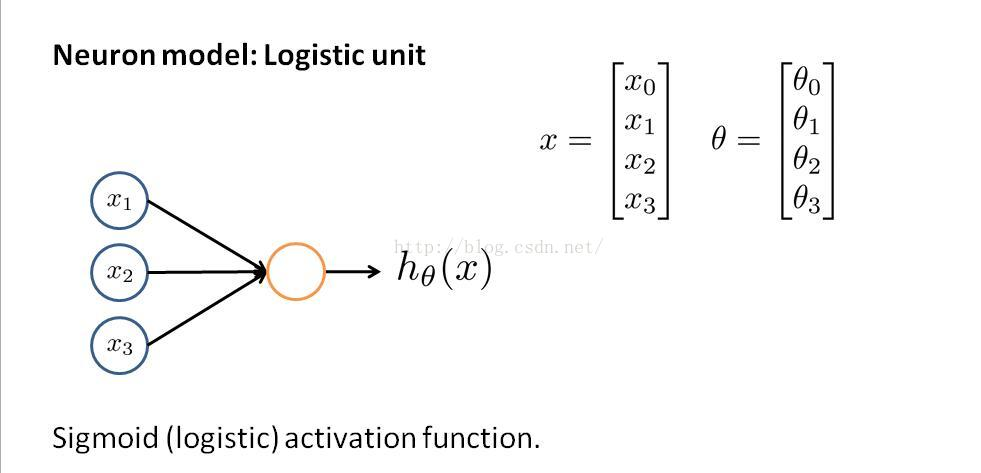

逻辑回归模型

很容易看出,逻辑回归是没有隐藏层的神经网络,层与层之间的传递函数就是θ。

Neural NetWork

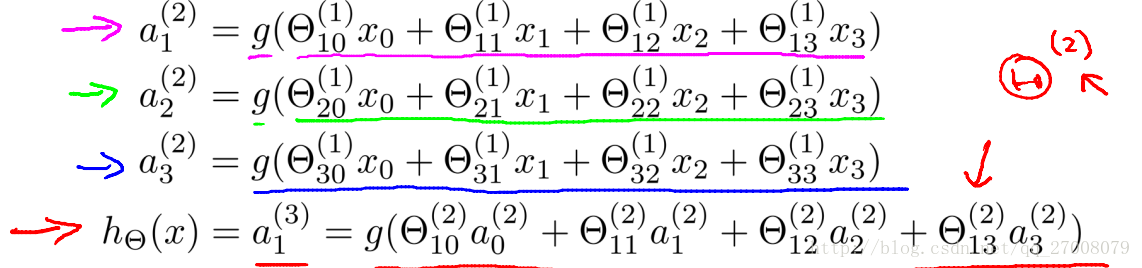

神经网络模型---正向传播

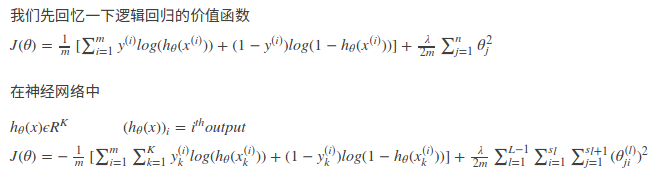

Cost function(代价函数)

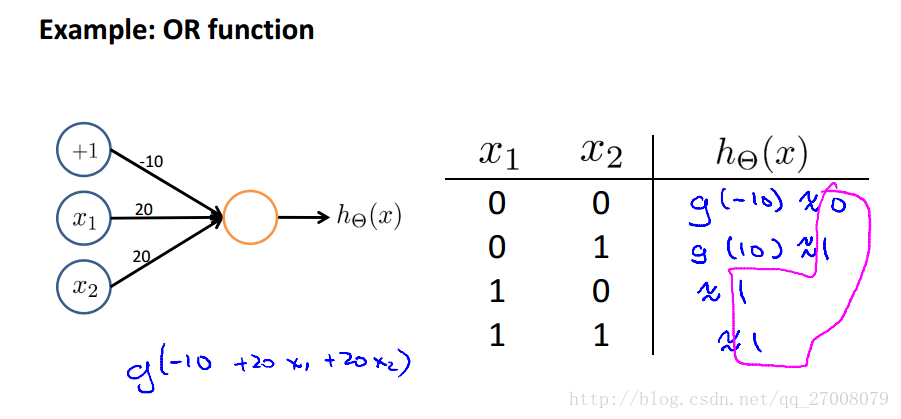

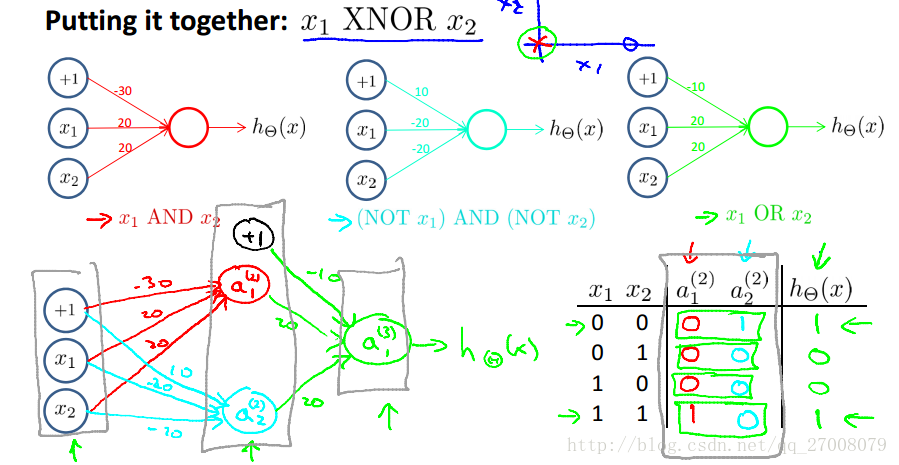

Examples and intuitions

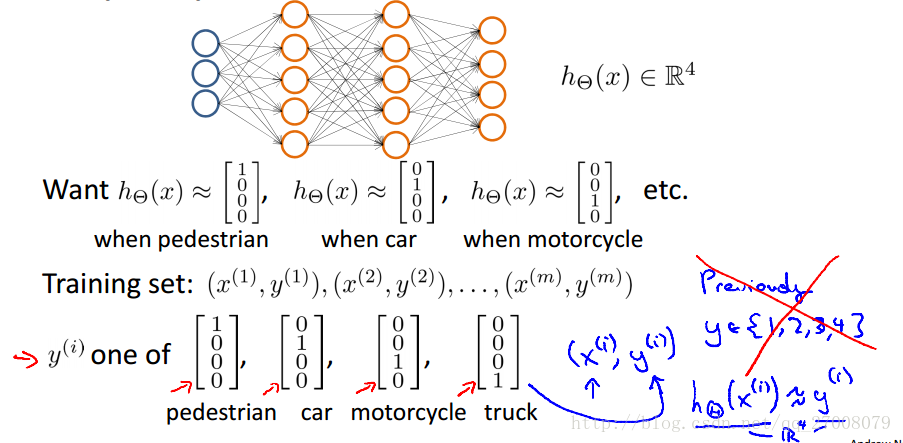

Multi-class classification

对于多分类问题,我们可以通过设置多个输出值来实现。

编程作业就是一个多分类问题——手写数字识别

输入的是手写的照片(数字0-9),5000组样本、每个像素点用20×20的点阵表示成一行,输入向量为5000×400的矩阵X,经过神经网络传递后,输出一个假设函数(列向量),取最大值所在的行号即为假设值(0-9中的一个)。也就是输出值y = 1,2,3,4,5.....10又有可能,为了方便数值运算,我们用10×1的列向量表示,譬如 y = 5,有

Exercises

这次的作业是用逻辑回归和神经网络来实现手写数字识别,比较下两者的准确性。

Logistic Regression

lrCostFunction.m

function [J, grad] = lrCostFunction(theta, X, y, lambda)

%LRCOSTFUNCTION Compute cost and gradient for logistic regression with

%regularization

% J = LRCOSTFUNCTION(theta, X, y, lambda) computes the cost of using

% theta as the parameter for regularized logistic regression and the

% gradient of the cost w.r.t. to the parameters. % Initialize some useful values

m = length(y); % number of training examples % You need to return the following variables correctly

J = 0;

grad = zeros(size(theta)); % ====================== YOUR CODE HERE ======================

% Instructions: Compute the cost of a particular choice of theta.

% You should set J to the cost.

% Compute the partial derivatives and set grad to the partial

% derivatives of the cost w.r.t. each parameter in theta

%

% Hint: The computation of the cost function and gradients can be

% efficiently vectorized. For example, consider the computation

%

% sigmoid(X * theta)

%

% Each row of the resulting matrix will contain the value of the

% prediction for that example. You can make use of this to vectorize

% the cost function and gradient computations.

%

% Hint: When computing the gradient of the regularized cost function,

% there're many possible vectorized solutions, but one solution

% looks like:

% grad = (unregularized gradient for logistic regression)

% temp = theta;

% temp(1) = 0; % because we don't add anything for j = 0

% grad = grad + YOUR_CODE_HERE (using the temp variable)

%

theta_reg=[0;theta(2:size(theta))]; J = (-y'*log(sigmoid(X*theta))-(1-y)'*log(1-sigmoid(X*theta)))/m + lambda/(2*m)*(theta_reg')*theta_reg; grad = X'*(sigmoid(X*theta)-y)/m + lambda/m*theta_reg; % ============================================================= grad = grad(:); end

oneVsAll.m

function [all_theta] = oneVsAll(X, y, num_labels, lambda)

%ONEVSALL trains multiple logistic regression classifiers and returns all

%the classifiers in a matrix all_theta, where the i-th row of all_theta

%corresponds to the classifier for label i

% [all_theta] = ONEVSALL(X, y, num_labels, lambda) trains num_labels

% logistic regression classifiers and returns each of these classifiers

% in a matrix all_theta, where the i-th row of all_theta corresponds

% to the classifier for label i % Some useful variables

m = size(X, 1);

n = size(X, 2); % You need to return the following variables correctly

all_theta = zeros(num_labels, n + 1); % Add ones to the X data matrix

X = [ones(m, 1) X]; % ====================== YOUR CODE HERE ======================

% Instructions: You should complete the following code to train num_labels

% logistic regression classifiers with regularization

% parameter lambda.

%

% Hint: theta(:) will return a column vector.

%

% Hint: You can use y == c to obtain a vector of 1's and 0's that tell you

% whether the ground truth is true/false for this class.

%

% Note: For this assignment, we recommend using fmincg to optimize the cost

% function. It is okay to use a for-loop (for c = 1:num_labels) to

% loop over the different classes.

%

% fmincg works similarly to fminunc, but is more efficient when we

% are dealing with large number of parameters.

%

% Example Code for fmincg:

%

% % Set Initial theta

% initial_theta = zeros(n + 1, 1);

%

% % Set options for fminunc

% options = optimset('GradObj', 'on', 'MaxIter', 50);

%

% % Run fmincg to obtain the optimal theta

% % This function will return theta and the cost

% [theta] = ...

% fmincg (@(t)(lrCostFunction(t, X, (y == c), lambda)), ...

% initial_theta, options);

% initial_theta = zeros(n + 1, 1); options = optimset('GradObj', 'on', 'MaxIter', 50); for c = 1:num_labels

all_theta(c,:) = fmincg (@(t)(lrCostFunction(t, X, (y == c), lambda)), initial_theta, options);

end % ========================================================================= end

predictOneVsAll.m

function p = predictOneVsAll(all_theta, X)

%PREDICT Predict the label for a trained one-vs-all classifier. The labels

%are in the range 1..K, where K = size(all_theta, 1).

% p = PREDICTONEVSALL(all_theta, X) will return a vector of predictions

% for each example in the matrix X. Note that X contains the examples in

% rows. all_theta is a matrix where the i-th row is a trained logistic

% regression theta vector for the i-th class. You should set p to a vector

% of values from 1..K (e.g., p = [1; 3; 1; 2] predicts classes 1, 3, 1, 2

% for 4 examples) m = size(X, 1);

num_labels = size(all_theta, 1); % You need to return the following variables correctly

p = zeros(size(X, 1), 1); % Add ones to the X data matrix

X = [ones(m, 1) X]; % ====================== YOUR CODE HERE ======================

% Instructions: Complete the following code to make predictions using

% your learned logistic regression parameters (one-vs-all).

% You should set p to a vector of predictions (from 1 to

% num_labels).

%

% Hint: This code can be done all vectorized using the max function.

% In particular, the max function can also return the index of the

% max element, for more information see 'help max'. If your examples

% are in rows, then, you can use max(A, [], 2) to obtain the max

% for each row.

% [maxx, p]=max(X*all_theta',[],2); % ========================================================================= end

Training Set Accuracy: 95.100000

下面是以三层bp神经网络处理的手写数字识别,其中权重矩阵已给出。

predict.m

function p = predict(Theta1, Theta2, X)

%PREDICT Predict the label of an input given a trained neural network

% p = PREDICT(Theta1, Theta2, X) outputs the predicted label of X given the

% trained weights of a neural network (Theta1, Theta2) % Useful values

m = size(X, 1);

num_labels = size(Theta2, 1); % You need to return the following variables correctly

p = zeros(size(X, 1), 1); % ====================== YOUR CODE HERE ======================

% Instructions: Complete the following code to make predictions using

% your learned neural network. You should set p to a

% vector containing labels between 1 to num_labels.

%

% Hint: The max function might come in useful. In particular, the max

% function can also return the index of the max element, for more

% information see 'help max'. If your examples are in rows, then, you

% can use max(A, [], 2) to obtain the max for each row.

%

X = [ones(m, 1) X]; temp=sigmoid(X*Theta1'); temp = [ones(m, 1) temp]; temp2=sigmoid(temp*Theta2'); [maxx, p]=max(temp2, [], 2); % ========================================================================= end

Training Set Accuracy: 97.520000

注意事项

1.X = [ones(m, 1) X];是确保矩阵维度一致。X0就是一行1

2.正则化时theta0要用0替代,处理如theta_reg=[0;theta(2:size(theta))];

Stanford coursera Andrew Ng 机器学习课程第四周总结(附Exercise 3)的更多相关文章

- Stanford coursera Andrew Ng 机器学习课程编程作业(Exercise 2)及总结

Exercise 1:Linear Regression---实现一个线性回归 关于如何实现一个线性回归,请参考:http://www.cnblogs.com/hapjin/p/6079012.htm ...

- Stanford coursera Andrew Ng 机器学习课程第二周总结(附Exercise 1)

Exercise 1:Linear Regression---实现一个线性回归 重要公式 1.h(θ)函数 2.J(θ)函数 思考一下,在matlab里面怎么表达?如下: 原理如下:(如果你懂了这道作 ...

- Stanford coursera Andrew Ng 机器学习课程编程作业(Exercise 1)

Exercise 1:Linear Regression---实现一个线性回归 在本次练习中,需要实现一个单变量的线性回归.假设有一组历史数据<城市人口,开店利润>,现需要预测在哪个城市中 ...

- 【原】Coursera—Andrew Ng机器学习—课程笔记 Lecture 15—Anomaly Detection异常检测

Lecture 15 Anomaly Detection 异常检测 15.1 异常检测问题的动机 Problem Motivation 异常检测(Anomaly detection)问题是机器学习算法 ...

- 【原】Coursera—Andrew Ng机器学习—课程笔记 Lecture 1_Introduction and Basic Concepts 介绍和基本概念

目录 1.1 欢迎1.2 机器学习是什么 1.2.1 机器学习定义 1.2.2 机器学习算法 - Supervised learning 监督学习 - Unsupervised learning 无 ...

- 【原】Coursera—Andrew Ng机器学习—课程笔记 Lecture 17—Large Scale Machine Learning 大规模机器学习

Lecture17 Large Scale Machine Learning大规模机器学习 17.1 大型数据集的学习 Learning With Large Datasets 如果有一个低方差的模型 ...

- 【原】Coursera—Andrew Ng机器学习—课程笔记 Lecture 16—Recommender Systems 推荐系统

Lecture 16 Recommender Systems 推荐系统 16.1 问题形式化 Problem Formulation 在机器学习领域,对于一些问题存在一些算法, 能试图自动地替你学习到 ...

- 【原】Coursera—Andrew Ng机器学习—课程笔记 Lecture 14—Dimensionality Reduction 降维

Lecture 14 Dimensionality Reduction 降维 14.1 降维的动机一:数据压缩 Data Compression 现在讨论第二种无监督学习问题:降维. 降维的一个作用是 ...

- 【原】Coursera—Andrew Ng机器学习—课程笔记 Lecture 12—Support Vector Machines 支持向量机

Lecture 12 支持向量机 Support Vector Machines 12.1 优化目标 Optimization Objective 支持向量机(Support Vector Machi ...

随机推荐

- Python开发【1.4数据类型】

1.数字 数字数据类型用于存储数值. 他们是不可改变的数据类型,这意味着改变数字数据类型会分配一个新的对象. # 创建对象 var1 = 1 var2 = 2 # 删除对象 del var1 del ...

- 跨线程访问UI控件时的Lambda表达式

工作中经常会用到跨线程访问UI控件的情况,由于.net本身机制,是不允许在非UI线程访问UI控件的,实际上跨线程访问UI控件还是 将访问UI的操作交给UI线程来处理的, 利用Control.Invok ...

- Android开发之接收系统广播消息

BroadcastReceiver除了接收用户所发送的广播消息之外.另一个重要的用途:接收系统广播. 假设应用须要在系统特定时刻运行某些操作,就能够通过监听系统广播来实现.Android的大量系统事件 ...

- HDU 5753Permutation Bo

Permutation Bo Time Limit: 2000/1000 MS (Java/Others) Memory Limit: 131072/131072 K (Java/Others) ...

- YTU 2639: 改错题:类中私有成员的访问

2639: 改错题:类中私有成员的访问 时间限制: 1 Sec 内存限制: 128 MB 提交: 431 解决: 297 题目描述 /* 改错题: 设计一个日期类和时间类,并编写全局函数displ ...

- 多台Mac电脑使用同一个apple开发者账号测试

因为公司有苹果一体机,家里有macbook和黑苹果台式机,多台电脑用同一个开发者账号,每次真机调试时都是选择直接reset,回到另外一台电脑,又要重新设置,太麻烦了.直到最近才设置三台电脑都可以,分享 ...

- LOJ 6089 小Y的背包计数问题 —— 前缀和优化DP

题目:https://loj.ac/problem/6089 对于 i <= √n ,设 f[i][j] 表示前 i 种,体积为 j 的方案数,那么 f[i][j] = ∑(1 <= k ...

- obs nginx-rtmp-module搭建流媒体服务器实现直播 ding

接下来我就简单跟大家介绍一下利用nginx来搭建流媒体服务器. 我选择的是腾讯云服务器 1.下载nginx-rtmp-module: nginx-rtmp-module的官方github地址:http ...

- Win7 64 位 vs2012 pthread 配置

1. 首先下载pthread,解压后我放在了e盘. 2. 然后用vs2012新建一个工程,然后右键项目属性,在配置属性->VC++目录->包含目录中输入E:\pthre ...

- Python 之reduce()函数

reduce()函数: reduce()函数也是Python内置的一个高阶函数.reduce()函数接收的参数和 map()类似,一个函数 f,一个list,但行为和 map()不同,reduce() ...