CUDA JPEG Encoding

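Based on NVIDIA's jpegNPP sample project, this post separates out a standalone JPEG encoder.

The original sample reuses the information read while decoding a JPEG image as the parameters for encoding, so a standalone encoder has to fill in all of that information itself.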

1. JPEG encoding pipeline

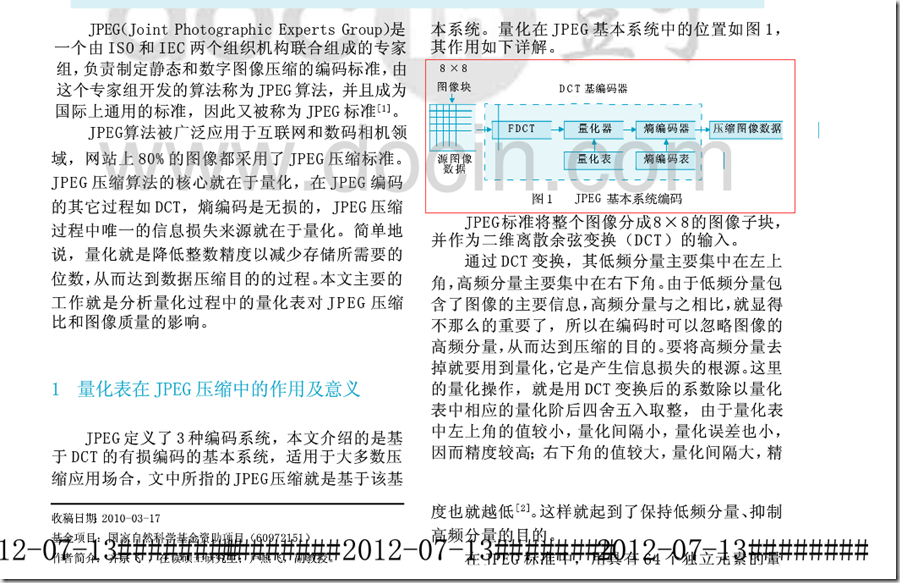

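The figure is taken from an article found online. The flowchart inside the red box shows the JPEG encoding pipeline; understanding it makes the code below easier to follow.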

2. Huffman tables and quantization tables

The standard Huffman tables and the standard quantization tables are used.

// Standard luminance quantization table

static unsigned char std_Y_QT[64] =

{

16, 11, 10, 16, 24, 40, 51, 61,

12, 12, 14, 19, 26, 58, 60, 55,

14, 13, 16, 24, 40, 57, 69, 56,

14, 17, 22, 29, 51, 87, 80, 62,

18, 22, 37, 56, 68, 109,103,77,

24, 35, 55, 64, 81, 104,113,92,

49, 64, 78, 87, 103,121,120,101,

72, 92, 95, 98, 112,100,103,99

};

// Standard chrominance quantization table

static unsigned char std_UV_QT[64] =

{

17, 18, 24, 47, 99, 99, 99, 99,

18, 21, 26, 66, 99, 99, 99, 99,

24, 26, 56, 99, 99, 99, 99, 99,

47, 66, 99 ,99, 99, 99, 99, 99,

99, 99, 99, 99, 99, 99, 99, 99,

99, 99, 99, 99, 99, 99, 99, 99,

99, 99, 99, 99, 99, 99, 99, 99,

99, 99, 99, 99, 99, 99, 99, 99

};

// Standard Huffman tables (cf. JPEG standard, section K.3)

static unsigned char STD_DC_Y_NRCODES[17]={0, 0, 1, 5, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0};

static unsigned char STD_DC_Y_VALUES[12] ={0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11};

static unsigned char STD_DC_UV_NRCODES[17]={0, 0, 3, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0};

static unsigned char STD_DC_UV_VALUES[12] ={0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11};

static unsigned char STD_AC_Y_NRCODES[17]={0,0,2,1,3,3,2,4,3,5,5,4,4,0,0,1,0x7D};

static unsigned char STD_AC_Y_VALUES[162]=

{

0x01, 0x02, 0x03, 0x00, 0x04, 0x11, 0x05, 0x12,

0x21, 0x31, 0x41, 0x06, 0x13, 0x51, 0x61, 0x07,

0x22, 0x71, 0x14, 0x32, 0x81, 0x91, 0xa1, 0x08,

0x23, 0x42, 0xb1, 0xc1, 0x15, 0x52, 0xd1, 0xf0,

0x24, 0x33, 0x62, 0x72, 0x82, 0x09, 0x0a, 0x16,

0x17, 0x18, 0x19, 0x1a, 0x25, 0x26, 0x27, 0x28,

0x29, 0x2a, 0x34, 0x35, 0x36, 0x37, 0x38, 0x39,

0x3a, 0x43, 0x44, 0x45, 0x46, 0x47, 0x48, 0x49,

0x4a, 0x53, 0x54, 0x55, 0x56, 0x57, 0x58, 0x59,

0x5a, 0x63, 0x64, 0x65, 0x66, 0x67, 0x68, 0x69,

0x6a, 0x73, 0x74, 0x75, 0x76, 0x77, 0x78, 0x79,

0x7a, 0x83, 0x84, 0x85, 0x86, 0x87, 0x88, 0x89,

0x8a, 0x92, 0x93, 0x94, 0x95, 0x96, 0x97, 0x98,

0x99, 0x9a, 0xa2, 0xa3, 0xa4, 0xa5, 0xa6, 0xa7,

0xa8, 0xa9, 0xaa, 0xb2, 0xb3, 0xb4, 0xb5, 0xb6,

0xb7, 0xb8, 0xb9, 0xba, 0xc2, 0xc3, 0xc4, 0xc5,

0xc6, 0xc7, 0xc8, 0xc9, 0xca, 0xd2, 0xd3, 0xd4,

0xd5, 0xd6, 0xd7, 0xd8, 0xd9, 0xda, 0xe1, 0xe2,

0xe3, 0xe4, 0xe5, 0xe6, 0xe7, 0xe8, 0xe9, 0xea,

0xf1, 0xf2, 0xf3, 0xf4, 0xf5, 0xf6, 0xf7, 0xf8,

0xf9, 0xfa

};

static unsigned char STD_AC_UV_NRCODES[17]={0,0,2,1,2,4,4,3,4,7,5,4,4,0,1,2,0x77};

static unsigned char STD_AC_UV_VALUES[162]=

{

0x00, 0x01, 0x02, 0x03, 0x11, 0x04, 0x05, 0x21,

0x31, 0x06, 0x12, 0x41, 0x51, 0x07, 0x61, 0x71,

0x13, 0x22, 0x32, 0x81, 0x08, 0x14, 0x42, 0x91,

0xa1, 0xb1, 0xc1, 0x09, 0x23, 0x33, 0x52, 0xf0,

0x15, 0x62, 0x72, 0xd1, 0x0a, 0x16, 0x24, 0x34,

0xe1, 0x25, 0xf1, 0x17, 0x18, 0x19, 0x1a, 0x26,

0x27, 0x28, 0x29, 0x2a, 0x35, 0x36, 0x37, 0x38,

0x39, 0x3a, 0x43, 0x44, 0x45, 0x46, 0x47, 0x48,

0x49, 0x4a, 0x53, 0x54, 0x55, 0x56, 0x57, 0x58,

0x59, 0x5a, 0x63, 0x64, 0x65, 0x66, 0x67, 0x68,

0x69, 0x6a, 0x73, 0x74, 0x75, 0x76, 0x77, 0x78,

0x79, 0x7a, 0x82, 0x83, 0x84, 0x85, 0x86, 0x87,

0x88, 0x89, 0x8a, 0x92, 0x93, 0x94, 0x95, 0x96,

0x97, 0x98, 0x99, 0x9a, 0xa2, 0xa3, 0xa4, 0xa5,

0xa6, 0xa7, 0xa8, 0xa9, 0xaa, 0xb2, 0xb3, 0xb4,

0xb5, 0xb6, 0xb7, 0xb8, 0xb9, 0xba, 0xc2, 0xc3,

0xc4, 0xc5, 0xc6, 0xc7, 0xc8, 0xc9, 0xca, 0xd2,

0xd3, 0xd4, 0xd5, 0xd6, 0xd7, 0xd8, 0xd9, 0xda,

0xe2, 0xe3, 0xe4, 0xe5, 0xe6, 0xe7, 0xe8, 0xe9,

0xea, 0xf2, 0xf3, 0xf4, 0xf5, 0xf6, 0xf7, 0xf8,

0xf9, 0xfa

};
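To make the role of the NRCODES and VALUES arrays concrete, here is a minimal host-side sketch of how canonical Huffman codes can be derived from them. The names `HuffCode`, `buildHuffmanCodes` and `countSymbols` are made up for this illustration and are not part of jpegNPP or NPP. It assumes a 16-entry count list (entry i is the number of codes of length i + 1), so the 17-entry arrays above would be passed starting from their second element.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// One Huffman code: the bit pattern and its length in bits.
struct HuffCode {
    uint16_t code;
    uint8_t  length;
};

// Total number of symbols declared by a 16-entry count list.
int countSymbols(const uint8_t bits[16])
{
    int total = 0;
    for (int i = 0; i < 16; ++i) total += bits[i];
    return total;
}

// Builds a table indexed by symbol value. Codes of the same length are
// consecutive integers; when moving to the next length the running code
// is shifted left by one bit (canonical Huffman assignment).
std::vector<HuffCode> buildHuffmanCodes(const uint8_t bits[16],
                                        const uint8_t* values,
                                        int numValues)
{
    std::vector<HuffCode> table(256, HuffCode{0, 0});
    uint16_t code = 0;
    int k = 0;  // index into values
    for (int len = 1; len <= 16; ++len) {
        for (int i = 0; i < bits[len - 1] && k < numValues; ++i) {
            table[values[k]] = HuffCode{code, static_cast<uint8_t>(len)};
            ++code;
            ++k;
        }
        code <<= 1;
    }
    return table;
}
```

A quick sanity check on any table is that `countSymbols` equals the length of the matching VALUES array: 12 for the DC tables and 162 for the AC tables.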

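2. RGB to YUV

The encoder takes YUV data as input, so the raw RGB data must first be converted to YUV. Note that the RGB input here is stored in planar form, BBBB...GGGG...RRRR...; for other RGB layouts, the index calculations in the kernel need to be adjusted.

There are many RGB-to-YUV formulas online; the one used here is:

Y = 0.299R + 0.587G + 0.114B

U = -0.147R - 0.289G + 0.436B

V = 0.615R - 0.515G - 0.100B

Code: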

#include "cuda_kernels.h"
#include <cstdio>

typedef unsigned char uint8;
typedef unsigned int uint32;
typedef int int32;

namespace cuda_common
{
    // Clamp while the value is still a float, then narrow to 8 bits.
    // Casting to unsigned char first would make the range check a no-op.
    __device__ unsigned char clip_value(float x, float min_val, float max_val)
    {
        return (unsigned char)fminf(fmaxf(x, min_val), max_val);
    }

    // Converts planar BGR (float) to full-resolution Y, u and v planes.
    extern "C"
    __global__ void kernel_rgb2yuv(float *src_img, unsigned char* Y, unsigned char* u, unsigned char* v,
        int src_width, int src_height, size_t yPitch)
    {
        const int x = blockIdx.x * blockDim.x + threadIdx.x;
        const int y = blockIdx.y * blockDim.y + threadIdx.y;

        if (x >= src_width || y >= src_height)
            return;

        // Planar layout: all B values, then all G values, then all R values.
        float B = src_img[y * src_width + x];
        float G = src_img[src_width * src_height + y * src_width + x];
        float R = src_img[src_width * src_height * 2 + y * src_width + x];

        Y[y * yPitch + x] = clip_value(0.299f * R + 0.587f * G + 0.114f * B, 0, 255);
        u[y * src_width + x] = clip_value(-0.147f * R - 0.289f * G + 0.436f * B + 128, 0, 255);
        v[y * src_width + x] = clip_value(0.615f * R - 0.515f * G - 0.100f * B + 128, 0, 255);

        // Alternative coefficients that were tried and left disabled:
        //Y[y * yPitch + x] = clip_value(0.257f * R + 0.504f * G + 0.098f * B + 16, 0, 255);
        //u[y * src_width + x] = clip_value(-0.148f * R - 0.291f * G + 0.439f * B + 128, 0, 255);
        //v[y * src_width + x] = clip_value(0.439f * R - 0.368f * G - 0.071f * B + 128, 0, 255);
    }

    // Bilinear resize of one chroma plane from src size to dst size.
    extern "C"
    __global__ void kernel_resize_UV(unsigned char* src_img, unsigned char *dst_img,
        int src_width, int src_height, int dst_width, int dst_height, int nPitch)
    {
        const int x = blockIdx.x * blockDim.x + threadIdx.x;
        const int y = blockIdx.y * blockDim.y + threadIdx.y;

        if (x >= dst_width || y >= dst_height)
            return;

        // Map the destination pixel center back into source coordinates.
        float fx = (x + 0.5f) * src_width / (float)dst_width - 0.5f;
        float fy = (y + 0.5f) * src_height / (float)dst_height - 0.5f;
        int ax = floor(fx);
        int ay = floor(fy);

        // Keep the 2x2 neighborhood inside the source image.
        if (ax < 0)
            ax = 0;
        else if (ax > src_width - 2)
            ax = src_width - 2;

        if (ay < 0)
            ay = 0;
        else if (ay > src_height - 2)
            ay = src_height - 2;

        int A = ax + ay * src_width;
        int B = ax + ay * src_width + 1;
        int C = ax + ay * src_width + src_width;
        int D = ax + ay * src_width + src_width + 1;

        float w1 = fx - ax;
        float w2 = 1 - w1;
        float w3 = fy - ay;
        float w4 = 1 - w3;

        float val = src_img[A] * w2 * w4 + src_img[B] * w1 * w4 + src_img[C] * w2 * w3 + src_img[D] * w1 * w3;
        dst_img[y * nPitch + x] = clip_value(val, 0, 255);
    }

    cudaError_t RGB2YUV(float* d_srcRGB, int src_width, int src_height,
        unsigned char* Y, size_t yPitch, int yWidth, int yHeight,
        unsigned char* U, size_t uPitch, int uWidth, int uHeight,
        unsigned char* V, size_t vPitch, int vWidth, int vHeight)
    {
        // Full-resolution chroma planes; resized into U and V below.
        unsigned char* u = NULL;
        unsigned char* v = NULL;
        cudaError_t cudaStatus;

        dim3 block(32, 16, 1);
        dim3 grid((src_width + (block.x - 1)) / block.x, (src_height + (block.y - 1)) / block.y, 1);
        dim3 grid1((uWidth + (block.x - 1)) / block.x, (uHeight + (block.y - 1)) / block.y, 1);
        dim3 grid2((vWidth + (block.x - 1)) / block.x, (vHeight + (block.y - 1)) / block.y, 1);

        cudaStatus = cudaMalloc((void**)&u, src_width * src_height * sizeof(unsigned char));
        if (cudaStatus != cudaSuccess) {
            fprintf(stderr, "cudaMalloc for u failed: %s\n", cudaGetErrorString(cudaStatus));
            goto Error;
        }
        cudaStatus = cudaMalloc((void**)&v, src_width * src_height * sizeof(unsigned char));
        if (cudaStatus != cudaSuccess) {
            fprintf(stderr, "cudaMalloc for v failed: %s\n", cudaGetErrorString(cudaStatus));
            goto Error;
        }

        kernel_rgb2yuv << < grid, block >> >(d_srcRGB, Y, u, v, src_width, src_height, yPitch);
        cudaStatus = cudaGetLastError();
        if (cudaStatus != cudaSuccess) {
            fprintf(stderr, "kernel_rgb2yuv launch failed: %s\n", cudaGetErrorString(cudaStatus));
            goto Error;
        }
        cudaStatus = cudaDeviceSynchronize();
        if (cudaStatus != cudaSuccess) {
            fprintf(stderr, "cudaDeviceSynchronize returned error code %d after launching kernel_rgb2yuv!\n", cudaStatus);
            goto Error;
        }

        kernel_resize_UV << < grid1, block >> >(u, U, src_width, src_height, uWidth, uHeight, uPitch);
        cudaStatus = cudaGetLastError();
        if (cudaStatus != cudaSuccess) {
            fprintf(stderr, "kernel_resize_UV launch failed: %s\n", cudaGetErrorString(cudaStatus));
            goto Error;
        }
        cudaStatus = cudaDeviceSynchronize();
        if (cudaStatus != cudaSuccess) {
            fprintf(stderr, "cudaDeviceSynchronize returned error code %d after launching kernel_resize_UV!\n", cudaStatus);
            goto Error;
        }

        kernel_resize_UV << < grid2, block >> >(v, V, src_width, src_height, vWidth, vHeight, vPitch);
        cudaStatus = cudaGetLastError();
        if (cudaStatus != cudaSuccess) {
            fprintf(stderr, "kernel_resize_UV launch failed: %s\n", cudaGetErrorString(cudaStatus));
            goto Error;
        }
        cudaStatus = cudaDeviceSynchronize();
        if (cudaStatus != cudaSuccess) {
            fprintf(stderr, "cudaDeviceSynchronize returned error code %d after launching kernel_resize_UV!\n", cudaStatus);
            goto Error;
        }

    Error:
        cudaFree(u);
        cudaFree(v);

        return cudaStatus;
    }
}

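The conversion takes two steps. The first applies the RGB-to-YUV formula and is handled by kernel_rgb2yuv. The second halves the width and height of the U and V planes and is handled by kernel_resize_UV. This second step affects the quality of the final compressed image. Bilinear interpolation is used here for the downscaling; the result is passable, but a clear color difference is still visible when compared with the original. I did not find a definitive description online of how U and V should be downscaled, so the approach here may differ from standard practice, and a better method is welcome.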
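One possible contributor to the color difference is the conversion matrix itself. JFIF files are expected to carry full-range YCbCr with BT.601 coefficients, whose chroma terms are scaled to fit in 8 bits. With the 0.615 coefficient above, pure red gives V = 0.615 * 255 + 128, roughly 285, which no longer fits in a byte. The following host-side sketch shows the JFIF-style conversion with the clamp applied before narrowing to 8 bits. The names `YCbCr`, `clampToByte` and `rgbToYCbCrJfif` are made up for this illustration, and whether this removes the color difference seen in this project has not been verified.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

struct YCbCr {
    uint8_t y, cb, cr;
};

// Round to nearest and clamp to [0, 255] while the value is still a float.
inline uint8_t clampToByte(float x)
{
    return static_cast<uint8_t>(std::min(255.0f, std::max(0.0f, std::round(x))));
}

// Full-range BT.601 conversion as used by JFIF; r, g, b are in [0, 255].
inline YCbCr rgbToYCbCrJfif(float r, float g, float b)
{
    YCbCr out;
    out.y  = clampToByte( 0.299f  * r + 0.587f  * g + 0.114f  * b);
    out.cb = clampToByte(-0.1687f * r - 0.3313f * g + 0.5f    * b + 128.0f);
    out.cr = clampToByte( 0.5f    * r - 0.4187f * g - 0.0813f * b + 128.0f);
    return out;
}
```

The same three expressions could replace the Y, u and v lines in a device kernel; only the coefficients and the order of clamping and casting differ from the formula quoted earlier.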

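3. JPEG compression

The jpegNPP sample decodes an image and then encodes it again. Separating out the decoder is fairly easy: just remove the encoding part. Separating out the encoder is harder, because the encoding parameters in the sample come directly from the image that was just decoded. A standalone encoder has to fill in those parameters itself, which is difficult without some knowledge of JPEG compression; fortunately there is plenty of material online.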

int jpegNPP(const char *szOutputFile, float* d_srcRGB, int img_width, int img_height)
{
    NppiDCTState *pDCTState;
    NPP_CHECK_NPP(nppiDCTInitAlloc(&pDCTState));

    // Headers and tables. The original sample parsed these from an input
    // JPEG on the host; here they are filled in by hand further down.
    FrameHeader oFrameHeader;
    QuantizationTable aQuantizationTables[4];
    Npp8u *pdQuantizationTables;
    NPP_CHECK_CUDA(cudaMalloc(&pdQuantizationTables, 64 * 4));

    HuffmanTable aHuffmanTables[4];
    HuffmanTable *pHuffmanDCTables = aHuffmanTables;
    HuffmanTable *pHuffmanACTables = &aHuffmanTables[2];
    ScanHeader oScanHeader;
    memset(&oFrameHeader, 0, sizeof(FrameHeader));
    memset(aQuantizationTables, 0, 4 * sizeof(QuantizationTable));
    memset(aHuffmanTables, 0, 4 * sizeof(HuffmanTable));
    memset(&oScanHeader, 0, sizeof(ScanHeader));
    int nMCUBlocksH = 0;
    int nMCUBlocksV = 0;

    int nRestartInterval = -1;

    NppiSize aSrcSize[3];
    Npp16s *apdDCT[3] = {0,0,0};
    Npp32s aDCTStep[3];

    Npp8u *apSrcImage[3] = {0,0,0};
    Npp32s aSrcImageStep[3];
    size_t aSrcPitch[3];

    /***************************
    *
    *   Output (encoding part)
    *
    ***************************/

    unsigned char STD_DC_Y_NRCODES[16] = { 0, 1, 5, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0 };
    unsigned char STD_DC_Y_VALUES[12] = { 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11 };

    unsigned char STD_DC_UV_NRCODES[16] = { 0, 3, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0 };
    unsigned char STD_DC_UV_VALUES[12] = { 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11 };

    unsigned char STD_AC_Y_NRCODES[16] = { 0, 2, 1, 3, 3, 2, 4, 3, 5, 5, 4, 4, 0, 0, 1, 0x7D };
    unsigned char STD_AC_Y_VALUES[162] =
    {

0x01, 0x02, 0x03, 0x00, 0x04, 0x11, 0x05, 0x12,

0x21, 0x31, 0x41, 0x06, 0x13, 0x51, 0x61, 0x07,

0x22, 0x71, 0x14, 0x32, 0x81, 0x91, 0xa1, 0x08,

0x23, 0x42, 0xb1, 0xc1, 0x15, 0x52, 0xd1, 0xf0,

0x24, 0x33, 0x62, 0x72, 0x82, 0x09, 0x0a, 0x16,

0x17, 0x18, 0x19, 0x1a, 0x25, 0x26, 0x27, 0x28,

0x29, 0x2a, 0x34, 0x35, 0x36, 0x37, 0x38, 0x39,

0x3a, 0x43, 0x44, 0x45, 0x46, 0x47, 0x48, 0x49,

0x4a, 0x53, 0x54, 0x55, 0x56, 0x57, 0x58, 0x59,

0x5a, 0x63, 0x64, 0x65, 0x66, 0x67, 0x68, 0x69,

0x6a, 0x73, 0x74, 0x75, 0x76, 0x77, 0x78, 0x79,

0x7a, 0x83, 0x84, 0x85, 0x86, 0x87, 0x88, 0x89,

0x8a, 0x92, 0x93, 0x94, 0x95, 0x96, 0x97, 0x98,

0x99, 0x9a, 0xa2, 0xa3, 0xa4, 0xa5, 0xa6, 0xa7,

0xa8, 0xa9, 0xaa, 0xb2, 0xb3, 0xb4, 0xb5, 0xb6,

0xb7, 0xb8, 0xb9, 0xba, 0xc2, 0xc3, 0xc4, 0xc5,

0xc6, 0xc7, 0xc8, 0xc9, 0xca, 0xd2, 0xd3, 0xd4,

0xd5, 0xd6, 0xd7, 0xd8, 0xd9, 0xda, 0xe1, 0xe2,

0xe3, 0xe4, 0xe5, 0xe6, 0xe7, 0xe8, 0xe9, 0xea,

0xf1, 0xf2, 0xf3, 0xf4, 0xf5, 0xf6, 0xf7, 0xf8,

0xf9, 0xfa

    };

    unsigned char STD_AC_UV_NRCODES[16] = { 0, 2, 1, 2, 4, 4, 3, 4, 7, 5, 4, 4, 0, 1, 2, 0x77 };
    unsigned char STD_AC_UV_VALUES[162] =
    {

0x00, 0x01, 0x02, 0x03, 0x11, 0x04, 0x05, 0x21,

0x31, 0x06, 0x12, 0x41, 0x51, 0x07, 0x61, 0x71,

0x13, 0x22, 0x32, 0x81, 0x08, 0x14, 0x42, 0x91,

0xa1, 0xb1, 0xc1, 0x09, 0x23, 0x33, 0x52, 0xf0,

0x15, 0x62, 0x72, 0xd1, 0x0a, 0x16, 0x24, 0x34,

0xe1, 0x25, 0xf1, 0x17, 0x18, 0x19, 0x1a, 0x26,

0x27, 0x28, 0x29, 0x2a, 0x35, 0x36, 0x37, 0x38,

0x39, 0x3a, 0x43, 0x44, 0x45, 0x46, 0x47, 0x48,

0x49, 0x4a, 0x53, 0x54, 0x55, 0x56, 0x57, 0x58,

0x59, 0x5a, 0x63, 0x64, 0x65, 0x66, 0x67, 0x68,

0x69, 0x6a, 0x73, 0x74, 0x75, 0x76, 0x77, 0x78,

0x79, 0x7a, 0x82, 0x83, 0x84, 0x85, 0x86, 0x87,

0x88, 0x89, 0x8a, 0x92, 0x93, 0x94, 0x95, 0x96,

0x97, 0x98, 0x99, 0x9a, 0xa2, 0xa3, 0xa4, 0xa5,

0xa6, 0xa7, 0xa8, 0xa9, 0xaa, 0xb2, 0xb3, 0xb4,

0xb5, 0xb6, 0xb7, 0xb8, 0xb9, 0xba, 0xc2, 0xc3,

0xc4, 0xc5, 0xc6, 0xc7, 0xc8, 0xc9, 0xca, 0xd2,

0xd3, 0xd4, 0xd5, 0xd6, 0xd7, 0xd8, 0xd9, 0xda,

0xe2, 0xe3, 0xe4, 0xe5, 0xe6, 0xe7, 0xe8, 0xe9,

0xea, 0xf2, 0xf3, 0xf4, 0xf5, 0xf6, 0xf7, 0xf8,

0xf9, 0xfa

    };

    // Fill in the Huffman tables.
    // nClassAndIdentifier: high nibble is the class (0 = DC, 1 = AC),
    // low nibble is the table id.
    aHuffmanTables[0].nClassAndIdentifier = 0;   // DC, table 0 (luma)
    memcpy(aHuffmanTables[0].aCodes, STD_DC_Y_NRCODES, 16);
    memcpy(aHuffmanTables[0].aTable, STD_DC_Y_VALUES, 12);

    aHuffmanTables[1].nClassAndIdentifier = 1;   // DC, table 1 (chroma)
    memcpy(aHuffmanTables[1].aCodes, STD_DC_UV_NRCODES, 16);
    memcpy(aHuffmanTables[1].aTable, STD_DC_UV_VALUES, 12);

    aHuffmanTables[2].nClassAndIdentifier = 16;  // 0x10: AC, table 0 (luma)
    memcpy(aHuffmanTables[2].aCodes, STD_AC_Y_NRCODES, 16);
    memcpy(aHuffmanTables[2].aTable, STD_AC_Y_VALUES, 162);

    aHuffmanTables[3].nClassAndIdentifier = 17;  // 0x11: AC, table 1 (chroma)
    memcpy(aHuffmanTables[3].aCodes, STD_AC_UV_NRCODES, 16);
    memcpy(aHuffmanTables[3].aTable, STD_AC_UV_VALUES, 162);

    // Standard luminance quantization table
    unsigned char std_Y_QT[64] =
    {

16, 11, 10, 16, 24, 40, 51, 61,

12, 12, 14, 19, 26, 58, 60, 55,

14, 13, 16, 24, 40, 57, 69, 56,

14, 17, 22, 29, 51, 87, 80, 62,

18, 22, 37, 56, 68, 109, 103, 77,

24, 35, 55, 64, 81, 104, 113, 92,

49, 64, 78, 87, 103, 121, 120, 101,

72, 92, 95, 98, 112, 100, 103, 99

    };

    // Standard chrominance quantization table
    unsigned char std_UV_QT[64] =
    {

17, 18, 24, 47, 99, 99, 99, 99,

18, 21, 26, 66, 99, 99, 99, 99,

24, 26, 56, 99, 99, 99, 99, 99,

47, 66, 99, 99, 99, 99, 99, 99,

99, 99, 99, 99, 99, 99, 99, 99,

99, 99, 99, 99, 99, 99, 99, 99,

99, 99, 99, 99, 99, 99, 99, 99,

99, 99, 99, 99, 99, 99, 99, 99

    };

    // Fill in the quantization tables
    aQuantizationTables[0].nPrecisionAndIdentifier = 0;
    memcpy(aQuantizationTables[0].aTable, std_Y_QT, 64);
    aQuantizationTables[1].nPrecisionAndIdentifier = 1;
    memcpy(aQuantizationTables[1].aTable, std_UV_QT, 64);

    NPP_CHECK_CUDA(cudaMemcpyAsync(pdQuantizationTables, aQuantizationTables[0].aTable, 64, cudaMemcpyHostToDevice));
    NPP_CHECK_CUDA(cudaMemcpyAsync(pdQuantizationTables + 64, aQuantizationTables[1].aTable, 64, cudaMemcpyHostToDevice));

    // Fill in the frame header
    oFrameHeader.nSamplePrecision = 8;
    oFrameHeader.nComponents = 3;
    oFrameHeader.aComponentIdentifier[0] = 1;
    oFrameHeader.aComponentIdentifier[1] = 2;
    oFrameHeader.aComponentIdentifier[2] = 3;
    oFrameHeader.aSamplingFactors[0] = 34;  // 0x22: H = 2, V = 2 (luma)
    oFrameHeader.aSamplingFactors[1] = 17;  // 0x11: H = 1, V = 1 (chroma)
    oFrameHeader.aSamplingFactors[2] = 17;  // 0x11: H = 1, V = 1 (chroma)
    oFrameHeader.aQuantizationTableSelector[0] = 0;
    oFrameHeader.aQuantizationTableSelector[1] = 1;
    oFrameHeader.aQuantizationTableSelector[2] = 1;
    oFrameHeader.nWidth = img_width;
    oFrameHeader.nHeight = img_height;

    // Largest horizontal and vertical sampling factors define the MCU size.
    for (int i = 0; i < oFrameHeader.nComponents; ++i)
    {
        nMCUBlocksV = max(nMCUBlocksV, oFrameHeader.aSamplingFactors[i] & 0x0f);
        nMCUBlocksH = max(nMCUBlocksH, oFrameHeader.aSamplingFactors[i] >> 4);
    }

    // Compute the padded size of each component and allocate its buffers.
    for (int i = 0; i < oFrameHeader.nComponents; ++i)
    {
        NppiSize oBlocks;
        NppiSize oBlocksPerMCU = { oFrameHeader.aSamplingFactors[i] >> 4, oFrameHeader.aSamplingFactors[i] & 0x0f };

        oBlocks.width = (int)ceil((oFrameHeader.nWidth + 7) / 8 *
            static_cast<float>(oBlocksPerMCU.width) / nMCUBlocksH);
        oBlocks.width = DivUp(oBlocks.width, oBlocksPerMCU.width) * oBlocksPerMCU.width;

        oBlocks.height = (int)ceil((oFrameHeader.nHeight + 7) / 8 *
            static_cast<float>(oBlocksPerMCU.height) / nMCUBlocksV);
        oBlocks.height = DivUp(oBlocks.height, oBlocksPerMCU.height) * oBlocksPerMCU.height;

        aSrcSize[i].width = oBlocks.width * 8;
        aSrcSize[i].height = oBlocks.height * 8;

        // Allocate Memory
        size_t nPitch;
        NPP_CHECK_CUDA(cudaMallocPitch(&apdDCT[i], &nPitch, oBlocks.width * 64 * sizeof(Npp16s), oBlocks.height));
        aDCTStep[i] = static_cast<Npp32s>(nPitch);

        NPP_CHECK_CUDA(cudaMallocPitch(&apSrcImage[i], &nPitch, aSrcSize[i].width, aSrcSize[i].height));
        aSrcPitch[i] = nPitch;
        aSrcImageStep[i] = static_cast<Npp32s>(nPitch);
    }

    // RGB2YUV
    cudaError_t cudaStatus = cuda_common::RGB2YUV(d_srcRGB, img_width, img_height,
        apSrcImage[0], aSrcPitch[0], aSrcSize[0].width, aSrcSize[0].height,
        apSrcImage[1], aSrcPitch[1], aSrcSize[1].width, aSrcSize[1].height,
        apSrcImage[2], aSrcPitch[2], aSrcSize[2].width, aSrcSize[2].height);
    NPP_CHECK_CUDA(cudaStatus);

    /**
    * Forward DCT, quantization and level shift part of the JPEG encoding.
    * Input is expected in 8x8 macro blocks and output is expected to be in 64x1
    * macro blocks. The new version of the primitive takes the ROI in image pixel size and
    * works with DCT coefficients that are in zig-zag order.
    */
    int k = 0;  // quantization table index: 0 = luma, 1 = chroma
    NPP_CHECK_NPP(nppiDCTQuantFwd8x8LS_JPEG_8u16s_C1R_NEW(apSrcImage[0], aSrcImageStep[0],
        apdDCT[0], aDCTStep[0],
        pdQuantizationTables + k * 64,
        aSrcSize[0],
        pDCTState));
    k = 1;
    NPP_CHECK_NPP(nppiDCTQuantFwd8x8LS_JPEG_8u16s_C1R_NEW(apSrcImage[1], aSrcImageStep[1],
        apdDCT[1], aDCTStep[1],
        pdQuantizationTables + k * 64,
        aSrcSize[1],
        pDCTState));

    NPP_CHECK_NPP(nppiDCTQuantFwd8x8LS_JPEG_8u16s_C1R_NEW(apSrcImage[2], aSrcImageStep[2],
        apdDCT[2], aDCTStep[2],
        pdQuantizationTables + k * 64,
        aSrcSize[2],
        pDCTState));

    // Huffman Encoding
    Npp8u *pdScan;
    Npp32s nScanLength;
    NPP_CHECK_CUDA(cudaMalloc(&pdScan, 4 << 20));

    Npp8u *pJpegEncoderTemp;
    Npp32s nTempSize;
    NPP_CHECK_NPP(nppiEncodeHuffmanGetSize(aSrcSize[0], 3, &nTempSize));
    NPP_CHECK_CUDA(cudaMalloc(&pJpegEncoderTemp, nTempSize));

    NppiEncodeHuffmanSpec *apHuffmanDCTable[3];
    NppiEncodeHuffmanSpec *apHuffmanACTable[3];

    /**
    * Allocates memory and creates a Huffman table in a format that is suitable for the encoder.
    * Component 0 (Y) uses table 0; components 1 and 2 (U, V) share table 1.
    */
    NPP_CHECK_NPP(nppiEncodeHuffmanSpecInitAlloc_JPEG(pHuffmanDCTables[0].aCodes, nppiDCTable, &apHuffmanDCTable[0]));
    NPP_CHECK_NPP(nppiEncodeHuffmanSpecInitAlloc_JPEG(pHuffmanACTables[0].aCodes, nppiACTable, &apHuffmanACTable[0]));
    NPP_CHECK_NPP(nppiEncodeHuffmanSpecInitAlloc_JPEG(pHuffmanDCTables[1].aCodes, nppiDCTable, &apHuffmanDCTable[1]));
    NPP_CHECK_NPP(nppiEncodeHuffmanSpecInitAlloc_JPEG(pHuffmanACTables[1].aCodes, nppiACTable, &apHuffmanACTable[1]));
    NPP_CHECK_NPP(nppiEncodeHuffmanSpecInitAlloc_JPEG(pHuffmanDCTables[1].aCodes, nppiDCTable, &apHuffmanDCTable[2]));
    NPP_CHECK_NPP(nppiEncodeHuffmanSpecInitAlloc_JPEG(pHuffmanACTables[1].aCodes, nppiACTable, &apHuffmanACTable[2]));

    /**
    * Huffman Encoding of the JPEG Encoding.
    * Input is expected to be 64x1 macro blocks and output is expected as byte stuffed huffman encoded JPEG scan.
    */
    Npp32s nSs = 0;
    Npp32s nSe = 63;
    Npp32s nH = 0;
    Npp32s nL = 0;
    NPP_CHECK_NPP(nppiEncodeHuffmanScan_JPEG_8u16s_P3R(apdDCT, aDCTStep,
        0, nSs, nSe, nH, nL,
        pdScan, &nScanLength,
        apHuffmanDCTable,
        apHuffmanACTable,
        aSrcSize,
        pJpegEncoderTemp));

    for (int i = 0; i < 3; ++i)
    {
        nppiEncodeHuffmanSpecFree_JPEG(apHuffmanDCTable[i]);
        nppiEncodeHuffmanSpecFree_JPEG(apHuffmanACTable[i]);
    }

    // Write JPEG (the 4 MB output buffer is a fixed size, as in the sample)
    unsigned char *pDstJpeg = new unsigned char[4 << 20];
    unsigned char *pDstOutput = pDstJpeg;

    writeMarker(0x0D8, pDstOutput);
    writeJFIFTag(pDstOutput);
    writeQuantizationTable(aQuantizationTables[0], pDstOutput);
    writeQuantizationTable(aQuantizationTables[1], pDstOutput);

    writeFrameHeader(oFrameHeader, pDstOutput);
    writeHuffmanTable(pHuffmanDCTables[0], pDstOutput);
    writeHuffmanTable(pHuffmanACTables[0], pDstOutput);
    writeHuffmanTable(pHuffmanDCTables[1], pDstOutput);
    writeHuffmanTable(pHuffmanACTables[1], pDstOutput);

    // Fill in the scan header
    oScanHeader.nComponents = 3;
    oScanHeader.aComponentSelector[0] = 1;
    oScanHeader.aComponentSelector[1] = 2;
    oScanHeader.aComponentSelector[2] = 3;
    oScanHeader.aHuffmanTablesSelector[0] = 0;   // 0x00: DC table 0, AC table 0
    oScanHeader.aHuffmanTablesSelector[1] = 17;  // 0x11: DC table 1, AC table 1
    oScanHeader.aHuffmanTablesSelector[2] = 17;  // 0x11: DC table 1, AC table 1
    oScanHeader.nSs = 0;
    oScanHeader.nSe = 63;
    oScanHeader.nA = 0;

    writeScanHeader(oScanHeader, pDstOutput);
    NPP_CHECK_CUDA(cudaMemcpy(pDstOutput, pdScan, nScanLength, cudaMemcpyDeviceToHost));
    pDstOutput += nScanLength;
    writeMarker(0x0D9, pDstOutput);

    {
        // Write result to file.
        std::ofstream outputFile(szOutputFile, ios::out | ios::binary);
        outputFile.write(reinterpret_cast<const char *>(pDstJpeg), static_cast<int>(pDstOutput - pDstJpeg));
    }

    // Cleanup
    delete [] pDstJpeg;

    cudaFree(pJpegEncoderTemp);
    cudaFree(pdQuantizationTables);
    cudaFree(pdScan);

    nppiDCTFree(pDCTState);

    for (int i = 0; i < 3; ++i)
    {
        cudaFree(apdDCT[i]);
        cudaFree(apSrcImage[i]);
    }

    return EXIT_SUCCESS;
}
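One detail worth checking about the quantization tables: std_Y_QT and std_UV_QT are listed row by row, the way the tables are printed in the JPEG standard, while a DQT segment in a JPEG file stores its 64 entries in zigzag order. The sketch below shows only the reordering itself. `kZigzag` and `toZigzagOrder` are names made up for this illustration; whether the table passed to the NPP DCT call and to writeQuantizationTable should be reordered this way is an assumption that needs to be checked against the NPP documentation and the sample's helper code.

```cpp
#include <cassert>
#include <cstdint>

// kZigzag[k] is the row-major index of the k-th coefficient in zigzag order.
static const uint8_t kZigzag[64] = {
     0,  1,  8, 16,  9,  2,  3, 10,
    17, 24, 32, 25, 18, 11,  4,  5,
    12, 19, 26, 33, 40, 48, 41, 34,
    27, 20, 13,  6,  7, 14, 21, 28,
    35, 42, 49, 56, 57, 50, 43, 36,
    29, 22, 15, 23, 30, 37, 44, 51,
    58, 59, 52, 45, 38, 31, 39, 46,
    53, 60, 61, 54, 47, 55, 62, 63
};

// Copies a row-major 8x8 table into zigzag order.
void toZigzagOrder(const uint8_t natural[64], uint8_t zigzag[64])
{
    for (int k = 0; k < 64; ++k) {
        zigzag[k] = natural[kZigzag[k]];
    }
}
```

For the luminance table, the zigzag-ordered sequence starts 16, 11, 12, 14, 12, 10, 16, 14.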

As the code shows, encoding follows the flowchart in section 1. The key steps are commented in the code.
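The part of the function that is easiest to get wrong is the plane size calculation driven by the sampling factors (34 is 0x22, 17 is 0x11, which gives 4:2:0 subsampling). The following host-side sketch restates that loop with integer arithmetic so the numbers can be checked by hand. `PlaneSize`, `divUp` and `paddedPlaneSize` are names made up for this illustration and are not part of jpegNPP.

```cpp
#include <cassert>

struct PlaneSize {
    int width;
    int height;
};

inline int divUp(int a, int b) { return (a + b - 1) / b; }

// samplingFactor packs H in the high nibble and V in the low nibble.
// maxH and maxV are the largest H and V over all components.
PlaneSize paddedPlaneSize(int imgWidth, int imgHeight,
                          int samplingFactor, int maxH, int maxV)
{
    const int h = samplingFactor >> 4;
    const int v = samplingFactor & 0x0f;

    // Number of 8x8 blocks covering the image, scaled to this component.
    int blocksW = divUp(divUp(imgWidth, 8) * h, maxH);
    int blocksH = divUp(divUp(imgHeight, 8) * v, maxV);

    // Round up to a whole number of blocks per MCU.
    blocksW = divUp(blocksW, h) * h;
    blocksH = divUp(blocksH, v) * v;

    return PlaneSize{blocksW * 8, blocksH * 8};
}
```

For a 1920x1080 image this gives a 1920x1088 luma plane and 960x544 chroma planes, so the chroma planes are exactly half the padded luma size in each direction.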

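4. Points to note

When moving the jpegNPP code into your own project, remember to link nppi.lib and nppc.lib. In the original project these two libraries are added in a less obvious place than where I usually add them; hopefully this helps others who run into the same problem.

5. Results

(1) Original RGB image

(2) Image after JPEG encoding

Because frames were skipped, the two images are not the same frame. On my machine the two images show a visible color difference. More interestingly, when the encoded jpg is opened in different programs, some display it correctly while others show a reddish tint.

Main code: http://download.csdn.net/download/qq_33892166/9924901

The code is modified from the jpegNPP sample that ships with CUDA. Anything missing can be found in the jpegNPP project, and the project configuration can be taken from it as well.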
