A Detailed Look at DiskLruCache

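As I recall, the DiskLruCache class comes from a Google open-source sample project called BitmapFun, whose purpose is to make loading bitmaps easier. The project can be found at https://developer.android.com/training/displaying-bitmaps/index.html, and it contains a DiskLruCache class that serves as the disk cache for images (BitmapFun's copy is itself taken from the Android platform's libcore, as the header comment of the BitmapFun source below states). Anyone familiar with caching knows that a cache usually has two tiers, memory and disk; the disk cache this class provides uses the LRU (Least Recently Used) algorithm. When the cache grows past its size limit, LRU evicts the entries that have gone unused the longest, so recently used data stays cached while data that hasn't been touched for a long time gets cleaned out. It works much like the human brain: knowledge you have looked at recently is remembered well, while things you haven't looked at for a long time may be forgotten. Enough preamble; on to the main content.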
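The LRU idea maps directly onto `java.util.LinkedHashMap` in access order, which is how DiskLruCache keeps its `lruEntries` (constructed with `(0, 0.75f, true)` in the listing below). The following is a minimal sketch under that assumption: the class names `LruDemo` and `LruMap` are hypothetical, and unlike DiskLruCache, which bounds the total number of bytes and evicts in `trimToSize()`, it simply bounds the number of entries through `removeEldestEntry`.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical demo class: a count-bounded LRU map built on LinkedHashMap.
public class LruDemo {
    static class LruMap<K, V> extends LinkedHashMap<K, V> {
        private final int maxEntries;

        LruMap(int maxEntries) {
            // Same arguments DiskLruCache passes for lruEntries: accessOrder = true,
            // so get() and put() move a key to the most-recently-used end.
            super(0, 0.75f, true);
            this.maxEntries = maxEntries;
        }

        @Override
        protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
            // The eldest (head) entry is the least recently used one; drop it once
            // the bound is exceeded. DiskLruCache instead bounds total bytes and
            // evicts in trimToSize().
            return size() > maxEntries;
        }
    }

    public static void main(String[] args) {
        LruMap<String, String> cache = new LruMap<>(2);
        cache.put("a", "1");
        cache.put("b", "2");
        cache.get("a");      // touch "a": now "b" is the least recently used
        cache.put("c", "3"); // exceeds the bound, so "b" is evicted
        System.out.println(cache.keySet()); // prints [a, c]
    }
}
```

Because reads reorder the map, "recently used" is decided by access, not by insertion time, which is exactly the property an LRU cache needs.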

1. Source Code Analysis

1.1 Source Code

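Diving straight into source-code analysis isn't my style, so I'll simply paste the source here and then summarize it based on an intuitive understanding. First, note that this class is not part of the public Android SDK (the listing below belongs to the platform-internal libcore.io package), so you need to download it and add it to your project yourself.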

/*

* Copyright (C) 2011 The Android Open Source Project

*

* Licensed under the Apache License, Version 2.0 (the "License");

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package libcore.io;

import java.io.BufferedWriter;

import java.io.Closeable;

import java.io.EOFException;

import java.io.File;

import java.io.FileInputStream;

import java.io.FileNotFoundException;

import java.io.FileOutputStream;

import java.io.FileWriter;

import java.io.FilterOutputStream;

import java.io.IOException;

import java.io.InputStream;

import java.io.InputStreamReader;

import java.io.OutputStream;

import java.io.OutputStreamWriter;

import java.io.Writer;

import java.nio.charset.Charsets;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.Iterator;

import java.util.LinkedHashMap;

import java.util.Map;

import java.util.concurrent.Callable;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.LinkedBlockingQueue;

import java.util.concurrent.ThreadPoolExecutor;

import java.util.concurrent.TimeUnit;

/**

* A cache that uses a bounded amount of space on a filesystem. Each cache

* entry has a string key and a fixed number of values. Values are byte

* sequences, accessible as streams or files. Each value must be between {@code

* 0} and {@code Integer.MAX_VALUE} bytes in length.

*

* <p>The cache stores its data in a directory on the filesystem. This

* directory must be exclusive to the cache; the cache may delete or overwrite

* files from its directory. It is an error for multiple processes to use the

* same cache directory at the same time.

*

* <p>This cache limits the number of bytes that it will store on the

* filesystem. When the number of stored bytes exceeds the limit, the cache will

* remove entries in the background until the limit is satisfied. The limit is

* not strict: the cache may temporarily exceed it while waiting for files to be

* deleted. The limit does not include filesystem overhead or the cache

* journal so space-sensitive applications should set a conservative limit.

*

* <p>Clients call {@link #edit} to create or update the values of an entry. An

* entry may have only one editor at one time; if a value is not available to be

* edited then {@link #edit} will return null.

* <ul>

* <li>When an entry is being <strong>created</strong> it is necessary to

* supply a full set of values; the empty value should be used as a

* placeholder if necessary.

* <li>When an entry is being <strong>edited</strong>, it is not necessary

* to supply data for every value; values default to their previous

* value.

* </ul>

* Every {@link #edit} call must be matched by a call to {@link Editor#commit}

* or {@link Editor#abort}. Committing is atomic: a read observes the full set

* of values as they were before or after the commit, but never a mix of values.

*

* <p>Clients call {@link #get} to read a snapshot of an entry. The read will

* observe the value at the time that {@link #get} was called. Updates and

* removals after the call do not impact ongoing reads.

*

* <p>This class is tolerant of some I/O errors. If files are missing from the

* filesystem, the corresponding entries will be dropped from the cache. If

* an error occurs while writing a cache value, the edit will fail silently.

* Callers should handle other problems by catching {@code IOException} and

* responding appropriately.

*/

public final class DiskLruCache implements Closeable {

static final String JOURNAL_FILE = "journal";

static final String JOURNAL_FILE_TMP = "journal.tmp";

static final String MAGIC = "libcore.io.DiskLruCache";

static final String VERSION_1 = "1";

static final long ANY_SEQUENCE_NUMBER = -1;

private static final String CLEAN = "CLEAN";

private static final String DIRTY = "DIRTY";

private static final String REMOVE = "REMOVE";

private static final String READ = "READ";

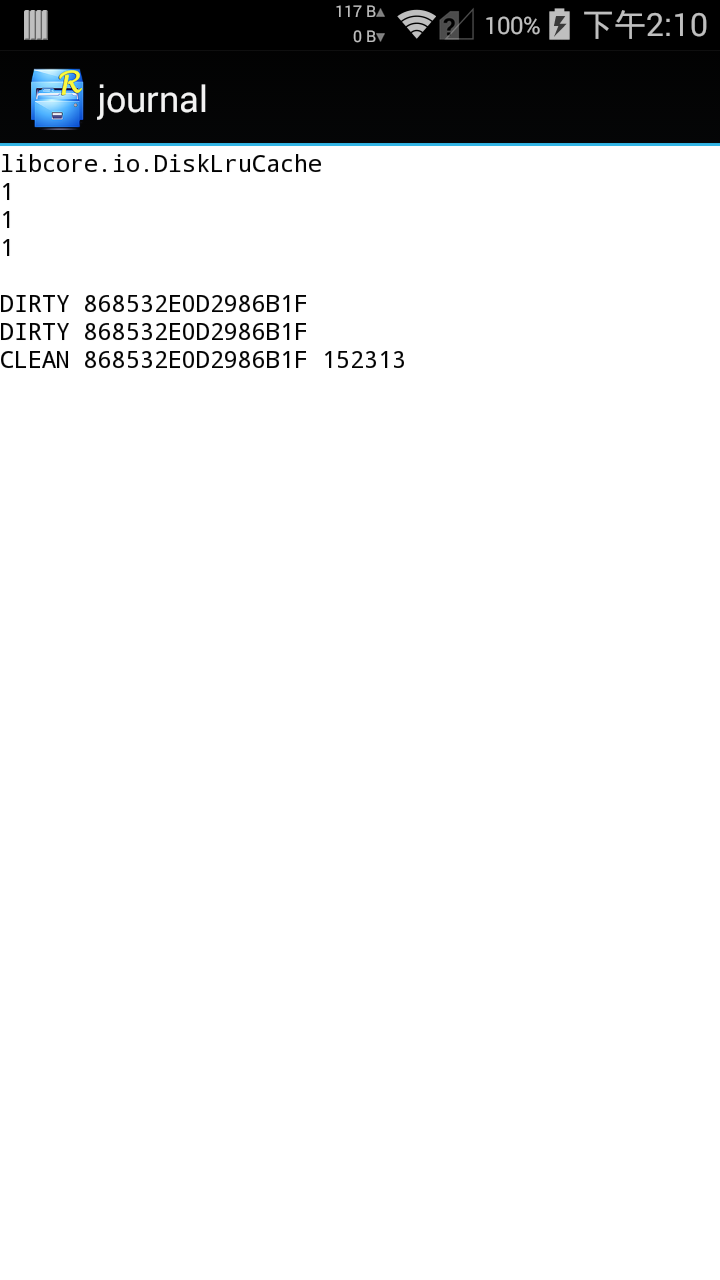

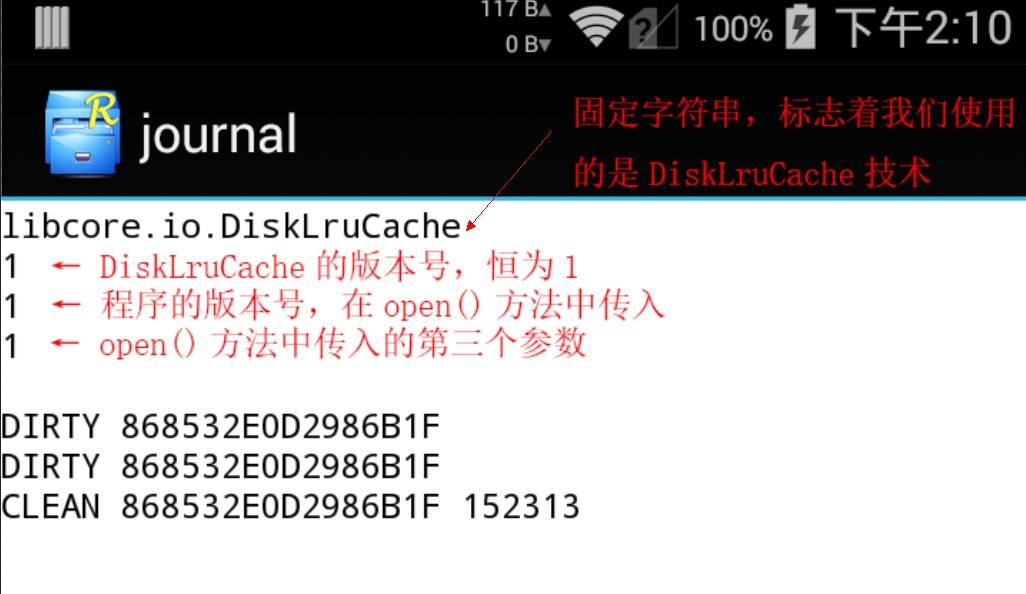

/*

* This cache uses a journal file named "journal". A typical journal file

* looks like this:

* libcore.io.DiskLruCache

* 1

* 100

* 2

*

* CLEAN 3400330d1dfc7f3f7f4b8d4d803dfcf6 832 21054

* DIRTY 335c4c6028171cfddfbaae1a9c313c52

* CLEAN 335c4c6028171cfddfbaae1a9c313c52 3934 2342

* REMOVE 335c4c6028171cfddfbaae1a9c313c52

* DIRTY 1ab96a171faeeee38496d8b330771a7a

* CLEAN 1ab96a171faeeee38496d8b330771a7a 1600 234

* READ 335c4c6028171cfddfbaae1a9c313c52

* READ 3400330d1dfc7f3f7f4b8d4d803dfcf6

*

* The first five lines of the journal form its header. They are the

* constant string "libcore.io.DiskLruCache", the disk cache's version,

* the application's version, the value count, and a blank line.

*

* Each of the subsequent lines in the file is a record of the state of a

* cache entry. Each line contains space-separated values: a state, a key,

* and optional state-specific values.

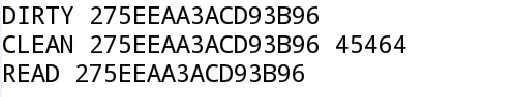

* o DIRTY lines track that an entry is actively being created or updated.

* Every successful DIRTY action should be followed by a CLEAN or REMOVE

* action. DIRTY lines without a matching CLEAN or REMOVE indicate that

* temporary files may need to be deleted.

* o CLEAN lines track a cache entry that has been successfully published

* and may be read. A publish line is followed by the lengths of each of

* its values.

* o READ lines track accesses for LRU.

* o REMOVE lines track entries that have been deleted.

*

* The journal file is appended to as cache operations occur. The journal may

* occasionally be compacted by dropping redundant lines. A temporary file named

* "journal.tmp" will be used during compaction; that file should be deleted if

* it exists when the cache is opened.

*/

private final File directory;

private final File journalFile;

private final File journalFileTmp;

private final int appVersion;

private final long maxSize;

private final int valueCount;

private long size = 0;

private Writer journalWriter;

private final LinkedHashMap<String, Entry> lruEntries

= new LinkedHashMap<String, Entry>(0, 0.75f, true);

private int redundantOpCount;

/**

* To differentiate between old and current snapshots, each entry is given

* a sequence number each time an edit is committed. A snapshot is stale if

* its sequence number is not equal to its entry's sequence number.

*/

private long nextSequenceNumber = 0;

/** This cache uses a single background thread to evict entries. */

private final ExecutorService executorService = new ThreadPoolExecutor(0, 1,

60L, TimeUnit.SECONDS, new LinkedBlockingQueue<Runnable>());

private final Callable<Void> cleanupCallable = new Callable<Void>() {

@Override public Void call() throws Exception {

synchronized (DiskLruCache.this) {

if (journalWriter == null) {

return null; // closed

}

trimToSize();

if (journalRebuildRequired()) {

rebuildJournal();

redundantOpCount = 0;

}

}

return null;

}

};

private DiskLruCache(File directory, int appVersion, int valueCount, long maxSize) {

this.directory = directory;

this.appVersion = appVersion;

this.journalFile = new File(directory, JOURNAL_FILE);

this.journalFileTmp = new File(directory, JOURNAL_FILE_TMP);

this.valueCount = valueCount;

this.maxSize = maxSize;

}

/**

* Opens the cache in {@code directory}, creating a cache if none exists

* there.

*

* @param directory a writable directory

* @param appVersion

* @param valueCount the number of values per cache entry. Must be positive.

* @param maxSize the maximum number of bytes this cache should use to store its data

* @throws IOException if reading or writing the cache directory fails

*/

public static DiskLruCache open(File directory, int appVersion, int valueCount, long maxSize)

throws IOException {

if (maxSize <= 0) {

throw new IllegalArgumentException("maxSize <= 0");

}

if (valueCount <= 0) {

throw new IllegalArgumentException("valueCount <= 0");

}

// prefer to pick up where we left off

DiskLruCache cache = new DiskLruCache(directory, appVersion, valueCount, maxSize);

if (cache.journalFile.exists()) {

try {

cache.readJournal();

cache.processJournal();

cache.journalWriter = new BufferedWriter(new FileWriter(cache.journalFile, true));

return cache;

} catch (IOException journalIsCorrupt) {

System.logW("DiskLruCache " + directory + " is corrupt: "

+ journalIsCorrupt.getMessage() + ", removing");

cache.delete();

}

}

// create a new empty cache

directory.mkdirs();

cache = new DiskLruCache(directory, appVersion, valueCount, maxSize);

cache.rebuildJournal();

return cache;

}

private void readJournal() throws IOException {

StrictLineReader reader = new StrictLineReader(new FileInputStream(journalFile),

Charsets.US_ASCII);

try {

String magic = reader.readLine();

String version = reader.readLine();

String appVersionString = reader.readLine();

String valueCountString = reader.readLine();

String blank = reader.readLine();

if (!MAGIC.equals(magic)

|| !VERSION_1.equals(version)

|| !Integer.toString(appVersion).equals(appVersionString)

|| !Integer.toString(valueCount).equals(valueCountString)

|| !"".equals(blank)) {

throw new IOException("unexpected journal header: ["

+ magic + ", " + version + ", " + valueCountString + ", " + blank + "]");

}

int lineCount = 0;

while (true) {

try {

readJournalLine(reader.readLine());

lineCount++;

} catch (EOFException endOfJournal) {

break;

}

}

redundantOpCount = lineCount - lruEntries.size();

} finally {

IoUtils.closeQuietly(reader);

}

}

private void readJournalLine(String line) throws IOException {

int firstSpace = line.indexOf(' ');

if (firstSpace == -1) {

throw new IOException("unexpected journal line: " + line);

}

int keyBegin = firstSpace + 1;

int secondSpace = line.indexOf(' ', keyBegin);

final String key;

if (secondSpace == -1) {

key = line.substring(keyBegin);

if (firstSpace == REMOVE.length() && line.startsWith(REMOVE)) {

lruEntries.remove(key);

return;

}

} else {

key = line.substring(keyBegin, secondSpace);

}

Entry entry = lruEntries.get(key);

if (entry == null) {

entry = new Entry(key);

lruEntries.put(key, entry);

}

if (secondSpace != -1 && firstSpace == CLEAN.length() && line.startsWith(CLEAN)) {

String[] parts = line.substring(secondSpace + 1).split(" ");

entry.readable = true;

entry.currentEditor = null;

entry.setLengths(parts);

} else if (secondSpace == -1 && firstSpace == DIRTY.length() && line.startsWith(DIRTY)) {

entry.currentEditor = new Editor(entry);

} else if (secondSpace == -1 && firstSpace == READ.length() && line.startsWith(READ)) {

// this work was already done by calling lruEntries.get()

} else {

throw new IOException("unexpected journal line: " + line);

}

}

/**

* Computes the initial size and collects garbage as a part of opening the

* cache. Dirty entries are assumed to be inconsistent and will be deleted.

*/

private void processJournal() throws IOException {

deleteIfExists(journalFileTmp);

for (Iterator<Entry> i = lruEntries.values().iterator(); i.hasNext(); ) {

Entry entry = i.next();

if (entry.currentEditor == null) {

for (int t = 0; t < valueCount; t++) {

size += entry.lengths[t];

}

} else {

entry.currentEditor = null;

for (int t = 0; t < valueCount; t++) {

deleteIfExists(entry.getCleanFile(t));

deleteIfExists(entry.getDirtyFile(t));

}

i.remove();

}

}

}

/**

* Creates a new journal that omits redundant information. This replaces the

* current journal if it exists.

*/

private synchronized void rebuildJournal() throws IOException {

if (journalWriter != null) {

journalWriter.close();

}

Writer writer = new BufferedWriter(new FileWriter(journalFileTmp));

writer.write(MAGIC);

writer.write("\n");

writer.write(VERSION_1);

writer.write("\n");

writer.write(Integer.toString(appVersion));

writer.write("\n");

writer.write(Integer.toString(valueCount));

writer.write("\n");

writer.write("\n");

for (Entry entry : lruEntries.values()) {

if (entry.currentEditor != null) {

writer.write(DIRTY + ' ' + entry.key + '\n');

} else {

writer.write(CLEAN + ' ' + entry.key + entry.getLengths() + '\n');

}

}

writer.close();

journalFileTmp.renameTo(journalFile);

journalWriter = new BufferedWriter(new FileWriter(journalFile, true));

}

private static void deleteIfExists(File file) throws IOException {

try {

Libcore.os.remove(file.getPath());

} catch (ErrnoException errnoException) {

if (errnoException.errno != OsConstants.ENOENT) {

throw errnoException.rethrowAsIOException();

}

}

}

/**

* Returns a snapshot of the entry named {@code key}, or null if it doesn't

* exist or is not currently readable. If a value is returned, it is moved to

* the head of the LRU queue.

*/

public synchronized Snapshot get(String key) throws IOException {

checkNotClosed();

validateKey(key);

Entry entry = lruEntries.get(key);

if (entry == null) {

return null;

}

if (!entry.readable) {

return null;

}

/*

* Open all streams eagerly to guarantee that we see a single published

* snapshot. If we opened streams lazily then the streams could come

* from different edits.

*/

InputStream[] ins = new InputStream[valueCount];

try {

for (int i = 0; i < valueCount; i++) {

ins[i] = new FileInputStream(entry.getCleanFile(i));

}

} catch (FileNotFoundException e) {

// a file must have been deleted manually!

return null;

}

redundantOpCount++;

journalWriter.append(READ + ' ' + key + '\n');

if (journalRebuildRequired()) {

executorService.submit(cleanupCallable);

}

return new Snapshot(key, entry.sequenceNumber, ins);

}

/**

* Returns an editor for the entry named {@code key}, or null if another

* edit is in progress.

*/

public Editor edit(String key) throws IOException {

return edit(key, ANY_SEQUENCE_NUMBER);

}

private synchronized Editor edit(String key, long expectedSequenceNumber) throws IOException {

checkNotClosed();

validateKey(key);

Entry entry = lruEntries.get(key);

if (expectedSequenceNumber != ANY_SEQUENCE_NUMBER

&& (entry == null || entry.sequenceNumber != expectedSequenceNumber)) {

return null; // snapshot is stale

}

if (entry == null) {

entry = new Entry(key);

lruEntries.put(key, entry);

} else if (entry.currentEditor != null) {

return null; // another edit is in progress

}

Editor editor = new Editor(entry);

entry.currentEditor = editor;

// flush the journal before creating files to prevent file leaks

journalWriter.write(DIRTY + ' ' + key + '\n');

journalWriter.flush();

return editor;

}

/**

* Returns the directory where this cache stores its data.

*/

public File getDirectory() {

return directory;

}

/**

* Returns the maximum number of bytes that this cache should use to store

* its data.

*/

public long maxSize() {

return maxSize;

}

/**

* Returns the number of bytes currently being used to store the values in

* this cache. This may be greater than the max size if a background

* deletion is pending.

*/

public synchronized long size() {

return size;

}

private synchronized void completeEdit(Editor editor, boolean success) throws IOException {

Entry entry = editor.entry;

if (entry.currentEditor != editor) {

throw new IllegalStateException();

}

// if this edit is creating the entry for the first time, every index must have a value

if (success && !entry.readable) {

for (int i = 0; i < valueCount; i++) {

if (!editor.written[i]) {

editor.abort();

throw new IllegalStateException("Newly created entry didn't create value for index " + i);

}

if (!entry.getDirtyFile(i).exists()) {

editor.abort();

System.logW("DiskLruCache: Newly created entry doesn't have file for index " + i);

return;

}

}

}

for (int i = 0; i < valueCount; i++) {

File dirty = entry.getDirtyFile(i);

if (success) {

if (dirty.exists()) {

File clean = entry.getCleanFile(i);

dirty.renameTo(clean);

long oldLength = entry.lengths[i];

long newLength = clean.length();

entry.lengths[i] = newLength;

size = size - oldLength + newLength;

}

} else {

deleteIfExists(dirty);

}

}

redundantOpCount++;

entry.currentEditor = null;

if (entry.readable | success) {

entry.readable = true;

journalWriter.write(CLEAN + ' ' + entry.key + entry.getLengths() + '\n');

if (success) {

entry.sequenceNumber = nextSequenceNumber++;

}

} else {

lruEntries.remove(entry.key);

journalWriter.write(REMOVE + ' ' + entry.key + '\n');

}

if (size > maxSize || journalRebuildRequired()) {

executorService.submit(cleanupCallable);

}

}

/**

* We only rebuild the journal when it will halve the size of the journal

* and eliminate at least 2000 ops.

*/

private boolean journalRebuildRequired() {

final int REDUNDANT_OP_COMPACT_THRESHOLD = 2000;

return redundantOpCount >= REDUNDANT_OP_COMPACT_THRESHOLD

&& redundantOpCount >= lruEntries.size();

}

/**

* Drops the entry for {@code key} if it exists and can be removed. Entries

* actively being edited cannot be removed.

*

* @return true if an entry was removed.

*/

public synchronized boolean remove(String key) throws IOException {

checkNotClosed();

validateKey(key);

Entry entry = lruEntries.get(key);

if (entry == null || entry.currentEditor != null) {

return false;

}

for (int i = 0; i < valueCount; i++) {

File file = entry.getCleanFile(i);

if (!file.delete()) {

throw new IOException("failed to delete " + file);

}

size -= entry.lengths[i];

entry.lengths[i] = 0;

}

redundantOpCount++;

journalWriter.append(REMOVE + ' ' + key + '\n');

lruEntries.remove(key);

if (journalRebuildRequired()) {

executorService.submit(cleanupCallable);

}

return true;

}

/**

* Returns true if this cache has been closed.

*/

public boolean isClosed() {

return journalWriter == null;

}

private void checkNotClosed() {

if (journalWriter == null) {

throw new IllegalStateException("cache is closed");

}

}

/**

* Force buffered operations to the filesystem.

*/

public synchronized void flush() throws IOException {

checkNotClosed();

trimToSize();

journalWriter.flush();

}

/**

* Closes this cache. Stored values will remain on the filesystem.

*/

public synchronized void close() throws IOException {

if (journalWriter == null) {

return; // already closed

}

for (Entry entry : new ArrayList<Entry>(lruEntries.values())) {

if (entry.currentEditor != null) {

entry.currentEditor.abort();

}

}

trimToSize();

journalWriter.close();

journalWriter = null;

}

private void trimToSize() throws IOException {

while (size > maxSize) {

Map.Entry<String, Entry> toEvict = lruEntries.eldest();

remove(toEvict.getKey());

}

}

/**

* Closes the cache and deletes all of its stored values. This will delete

* all files in the cache directory including files that weren't created by

* the cache.

*/

public void delete() throws IOException {

close();

IoUtils.deleteContents(directory);

}

private void validateKey(String key) {

if (key.contains(" ") || key.contains("\n") || key.contains("\r")) {

throw new IllegalArgumentException(

"keys must not contain spaces or newlines: \"" + key + "\"");

}

}

private static String inputStreamToString(InputStream in) throws IOException {

return Streams.readFully(new InputStreamReader(in, Charsets.UTF_8));

}

/**

* A snapshot of the values for an entry.

*/

public final class Snapshot implements Closeable {

private final String key;

private final long sequenceNumber;

private final InputStream[] ins;

private Snapshot(String key, long sequenceNumber, InputStream[] ins) {

this.key = key;

this.sequenceNumber = sequenceNumber;

this.ins = ins;

}

/**

* Returns an editor for this snapshot's entry, or null if either the

* entry has changed since this snapshot was created or if another edit

* is in progress.

*/

public Editor edit() throws IOException {

return DiskLruCache.this.edit(key, sequenceNumber);

}

/**

* Returns the unbuffered stream with the value for {@code index}.

*/

public InputStream getInputStream(int index) {

return ins[index];

}

/**

* Returns the string value for {@code index}.

*/

public String getString(int index) throws IOException {

return inputStreamToString(getInputStream(index));

}

@Override public void close() {

for (InputStream in : ins) {

IoUtils.closeQuietly(in);

}

}

}

/**

* Edits the values for an entry.

*/

public final class Editor {

private final Entry entry;

private final boolean[] written;

private boolean hasErrors;

private Editor(Entry entry) {

this.entry = entry;

this.written = (entry.readable) ? null : new boolean[valueCount];

}

/**

* Returns an unbuffered input stream to read the last committed value,

* or null if no value has been committed.

*/

public InputStream newInputStream(int index) throws IOException {

synchronized (DiskLruCache.this) {

if (entry.currentEditor != this) {

throw new IllegalStateException();

}

if (!entry.readable) {

return null;

}

return new FileInputStream(entry.getCleanFile(index));

}

}

/**

* Returns the last committed value as a string, or null if no value

* has been committed.

*/

public String getString(int index) throws IOException {

InputStream in = newInputStream(index);

return in != null ? inputStreamToString(in) : null;

}

/**

* Returns a new unbuffered output stream to write the value at

* {@code index}. If the underlying output stream encounters errors

* when writing to the filesystem, this edit will be aborted when

* {@link #commit} is called. The returned output stream does not throw

* IOExceptions.

*/

public OutputStream newOutputStream(int index) throws IOException {

synchronized (DiskLruCache.this) {

if (entry.currentEditor != this) {

throw new IllegalStateException();

}

if (!entry.readable) {

written[index] = true;

}

return new FaultHidingOutputStream(new FileOutputStream(entry.getDirtyFile(index)));

}

}

/**

* Sets the value at {@code index} to {@code value}.

*/

public void set(int index, String value) throws IOException {

Writer writer = null;

try {

writer = new OutputStreamWriter(newOutputStream(index), Charsets.UTF_8);

writer.write(value);

} finally {

IoUtils.closeQuietly(writer);

}

}

/**

* Commits this edit so it is visible to readers. This releases the

* edit lock so another edit may be started on the same key.

*/

public void commit() throws IOException {

if (hasErrors) {

completeEdit(this, false);

remove(entry.key); // the previous entry is stale

} else {

completeEdit(this, true);

}

}

/**

* Aborts this edit. This releases the edit lock so another edit may be

* started on the same key.

*/

public void abort() throws IOException {

completeEdit(this, false);

}

private class FaultHidingOutputStream extends FilterOutputStream {

private FaultHidingOutputStream(OutputStream out) {

super(out);

}

@Override public void write(int oneByte) {

try {

out.write(oneByte);

} catch (IOException e) {

hasErrors = true;

}

}

@Override public void write(byte[] buffer, int offset, int length) {

try {

out.write(buffer, offset, length);

} catch (IOException e) {

hasErrors = true;

}

}

@Override public void close() {

try {

out.close();

} catch (IOException e) {

hasErrors = true;

}

}

@Override public void flush() {

try {

out.flush();

} catch (IOException e) {

hasErrors = true;

}

}

}

}

private final class Entry {

private final String key;

/** Lengths of this entry's files. */

private final long[] lengths;

/** True if this entry has ever been published */

private boolean readable;

/** The ongoing edit or null if this entry is not being edited. */

private Editor currentEditor;

/** The sequence number of the most recently committed edit to this entry. */

private long sequenceNumber;

private Entry(String key) {

this.key = key;

this.lengths = new long[valueCount];

}

public String getLengths() throws IOException {

StringBuilder result = new StringBuilder();

for (long size : lengths) {

result.append(' ').append(size);

}

return result.toString();

}

/**

* Set lengths using decimal numbers like "10123".

*/

private void setLengths(String[] strings) throws IOException {

if (strings.length != valueCount) {

throw invalidLengths(strings);

}

try {

for (int i = 0; i < strings.length; i++) {

lengths[i] = Long.parseLong(strings[i]);

}

} catch (NumberFormatException e) {

throw invalidLengths(strings);

}

}

private IOException invalidLengths(String[] strings) throws IOException {

throw new IOException("unexpected journal line: " + Arrays.toString(strings));

}

public File getCleanFile(int i) {

return new File(directory, key + "." + i);

}

public File getDirtyFile(int i) {

return new File(directory, key + "." + i + ".tmp");

}

}

}
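The journal format described in the comment near the top of the class, together with `readJournalLine()` and `processJournal()`, is what lets the cache recover its state and LRU order after a restart. Below is a minimal sketch, not the library's code, that replays journal records the same way: REMOVE drops a key, every other record goes through `lruEntries.get()` (which in an access-ordered `LinkedHashMap` also refreshes the key's LRU position, so READ needs no extra work), CLEAN carries the value lengths, and any entry still DIRTY at the end is treated as an interrupted edit and discarded. The class name `JournalReplay`, its trimmed-down `Entry` and the short keys `a` to `d` are hypothetical; the sketch skips the header check, length validation and on-disk file deletion the real class performs.

```java
import java.util.Arrays;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;

// Hypothetical, simplified replay of DiskLruCache journal records (header already skipped).
public class JournalReplay {
    static final class Entry {
        long[] lengths = new long[0];
        boolean beingEdited; // stands in for "currentEditor != null"
    }

    // Access-ordered like DiskLruCache.lruEntries: iteration runs from least to most recently used.
    final LinkedHashMap<String, Entry> lruEntries = new LinkedHashMap<>(0, 0.75f, true);
    long size;
    int redundantOpCount;

    void replay(List<String> records) {
        for (String line : records) {
            String[] parts = line.split(" ");
            String state = parts[0];
            String key = parts[1];
            if (state.equals("REMOVE")) {
                lruEntries.remove(key);
                continue;
            }
            // get() also refreshes the key's LRU position, which is all a READ record needs.
            Entry entry = lruEntries.get(key);
            if (entry == null) {
                entry = new Entry();
                lruEntries.put(key, entry);
            }
            switch (state) {
                case "CLEAN": // published; the remaining fields are the value lengths
                    entry.beingEdited = false;
                    entry.lengths = new long[parts.length - 2];
                    for (int i = 2; i < parts.length; i++) {
                        entry.lengths[i - 2] = Long.parseLong(parts[i]);
                    }
                    break;
                case "DIRTY": // an edit was started
                    entry.beingEdited = true;
                    break;
                case "READ":
                    break;
                default:
                    throw new IllegalArgumentException("unexpected journal line: " + line);
            }
        }
        // Same formula as readJournal(): lines that no longer describe a live entry.
        redundantOpCount = records.size() - lruEntries.size();
        // Like processJournal(): drop interrupted edits, sum the sizes of the rest.
        for (Iterator<Entry> it = lruEntries.values().iterator(); it.hasNext(); ) {
            Entry e = it.next();
            if (e.beingEdited) {
                it.remove();
            } else {
                for (long length : e.lengths) {
                    size += length;
                }
            }
        }
    }

    public static void main(String[] args) {
        JournalReplay r = new JournalReplay();
        r.replay(Arrays.asList(
                "CLEAN a 832 21054",
                "DIRTY b",
                "CLEAN b 3934 2342",
                "REMOVE b",
                "DIRTY c",
                "CLEAN c 1600 234",
                "READ a",
                "DIRTY d")); // never committed, so it is discarded
        System.out.println(r.lruEntries.keySet()); // prints [c, a]
        System.out.println(r.size);                // prints 23720
        System.out.println(r.redundantOpCount);    // prints 5
    }
}
```

The `READ a` record moves `a` to the most-recently-used end, so `c` becomes the eldest entry, the first one `trimToSize()` would evict if the cache were over its limit.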

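Source of the newer BitmapFun version (per its header comment, a copy of the Jelly Bean libcore class adapted to build inside an app; for example, it defines its own UTF_8 charset and copyOfRange/readFully helpers instead of relying on platform-internal classes):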

/*

* Copyright (C) 2011 The Android Open Source Project

*

* Licensed under the Apache License, Version 2.0 (the "License");

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package com.example.android.displayingbitmaps.util;

import java.io.BufferedInputStream;

import java.io.BufferedWriter;

import java.io.Closeable;

import java.io.EOFException;

import java.io.File;

import java.io.FileInputStream;

import java.io.FileNotFoundException;

import java.io.FileOutputStream;

import java.io.FileWriter;

import java.io.FilterOutputStream;

import java.io.IOException;

import java.io.InputStream;

import java.io.InputStreamReader;

import java.io.OutputStream;

import java.io.OutputStreamWriter;

import java.io.Reader;

import java.io.StringWriter;

import java.io.Writer;

import java.lang.reflect.Array;

import java.nio.charset.Charset;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.Iterator;

import java.util.LinkedHashMap;

import java.util.Map;

import java.util.concurrent.Callable;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.LinkedBlockingQueue;

import java.util.concurrent.ThreadPoolExecutor;

import java.util.concurrent.TimeUnit;

/**

******************************************************************************

* Taken from the JB source code, can be found in:

* libcore/luni/src/main/java/libcore/io/DiskLruCache.java

* or direct link:

* https://android.googlesource.com/platform/libcore/+/android-4.1.1_r1/luni/src/main/java/libcore/io/DiskLruCache.java

******************************************************************************

*

* A cache that uses a bounded amount of space on a filesystem. Each cache

* entry has a string key and a fixed number of values. Values are byte

* sequences, accessible as streams or files. Each value must be between {@code

* 0} and {@code Integer.MAX_VALUE} bytes in length.

*

* <p>The cache stores its data in a directory on the filesystem. This

* directory must be exclusive to the cache; the cache may delete or overwrite

* files from its directory. It is an error for multiple processes to use the

* same cache directory at the same time.

*

* <p>This cache limits the number of bytes that it will store on the

* filesystem. When the number of stored bytes exceeds the limit, the cache will

* remove entries in the background until the limit is satisfied. The limit is

* not strict: the cache may temporarily exceed it while waiting for files to be

* deleted. The limit does not include filesystem overhead or the cache

* journal so space-sensitive applications should set a conservative limit.

*

* <p>Clients call {@link #edit} to create or update the values of an entry. An

* entry may have only one editor at one time; if a value is not available to be

* edited then {@link #edit} will return null.

* <ul>

* <li>When an entry is being <strong>created</strong> it is necessary to

* supply a full set of values; the empty value should be used as a

* placeholder if necessary.

* <li>When an entry is being <strong>edited</strong>, it is not necessary

* to supply data for every value; values default to their previous

* value.

* </ul>

* Every {@link #edit} call must be matched by a call to {@link Editor#commit}

* or {@link Editor#abort}. Committing is atomic: a read observes the full set

* of values as they were before or after the commit, but never a mix of values.

*

* <p>Clients call {@link #get} to read a snapshot of an entry. The read will

* observe the value at the time that {@link #get} was called. Updates and

* removals after the call do not impact ongoing reads.

*

* <p>This class is tolerant of some I/O errors. If files are missing from the

* filesystem, the corresponding entries will be dropped from the cache. If

* an error occurs while writing a cache value, the edit will fail silently.

* Callers should handle other problems by catching {@code IOException} and

* responding appropriately.

*/

public final class DiskLruCache implements Closeable {

static final String JOURNAL_FILE = "journal";

static final String JOURNAL_FILE_TMP = "journal.tmp";

static final String MAGIC = "libcore.io.DiskLruCache";

static final String VERSION_1 = "1";

static final long ANY_SEQUENCE_NUMBER = -1;

private static final String CLEAN = "CLEAN";

private static final String DIRTY = "DIRTY";

private static final String REMOVE = "REMOVE";

private static final String READ = "READ";

private static final Charset UTF_8 = Charset.forName("UTF-8");

private static final int IO_BUFFER_SIZE = 8 * 1024;

/*

* This cache uses a journal file named "journal". A typical journal file

* looks like this:

* libcore.io.DiskLruCache

* 1

* 100

* 2

*

* CLEAN 3400330d1dfc7f3f7f4b8d4d803dfcf6 832 21054

* DIRTY 335c4c6028171cfddfbaae1a9c313c52

* CLEAN 335c4c6028171cfddfbaae1a9c313c52 3934 2342

* REMOVE 335c4c6028171cfddfbaae1a9c313c52

* DIRTY 1ab96a171faeeee38496d8b330771a7a

* CLEAN 1ab96a171faeeee38496d8b330771a7a 1600 234

* READ 335c4c6028171cfddfbaae1a9c313c52

* READ 3400330d1dfc7f3f7f4b8d4d803dfcf6

*

* The first five lines of the journal form its header. They are the

* constant string "libcore.io.DiskLruCache", the disk cache's version,

* the application's version, the value count, and a blank line.

*

* Each of the subsequent lines in the file is a record of the state of a

* cache entry. Each line contains space-separated values: a state, a key,

* and optional state-specific values.

* o DIRTY lines track that an entry is actively being created or updated.

* Every successful DIRTY action should be followed by a CLEAN or REMOVE

* action. DIRTY lines without a matching CLEAN or REMOVE indicate that

* temporary files may need to be deleted.

* o CLEAN lines track a cache entry that has been successfully published

* and may be read. A publish line is followed by the lengths of each of

* its values.

* o READ lines track accesses for LRU.

* o REMOVE lines track entries that have been deleted.

*

* The journal file is appended to as cache operations occur. The journal may

* occasionally be compacted by dropping redundant lines. A temporary file named

* "journal.tmp" will be used during compaction; that file should be deleted if

* it exists when the cache is opened.

*/

private final File directory;

private final File journalFile;

private final File journalFileTmp;

private final int appVersion;

private final long maxSize;

private final int valueCount;

private long size = 0;

private Writer journalWriter;

private final LinkedHashMap<String, Entry> lruEntries

= new LinkedHashMap<String, Entry>(0, 0.75f, true);

private int redundantOpCount;

/**

* To differentiate between old and current snapshots, each entry is given

* a sequence number each time an edit is committed. A snapshot is stale if

* its sequence number is not equal to its entry's sequence number.

*/

private long nextSequenceNumber = 0;

/* From java.util.Arrays */

@SuppressWarnings("unchecked")

private static <T> T[] copyOfRange(T[] original, int start, int end) {

final int originalLength = original.length; // For exception priority compatibility.

if (start > end) {

throw new IllegalArgumentException();

}

if (start < 0 || start > originalLength) {

throw new ArrayIndexOutOfBoundsException();

}

final int resultLength = end - start;

final int copyLength = Math.min(resultLength, originalLength - start);

final T[] result = (T[]) Array

.newInstance(original.getClass().getComponentType(), resultLength);

System.arraycopy(original, start, result, 0, copyLength);

return result;

} /**

* Returns the remainder of 'reader' as a string, closing it when done.

*/

public static String readFully(Reader reader) throws IOException {

try {

StringWriter writer = new StringWriter();

char[] buffer = new char[1024];

int count;

while ((count = reader.read(buffer)) != -1) {

writer.write(buffer, 0, count);

}

return writer.toString();

} finally {

reader.close();

}

} /**

* Returns the ASCII characters up to but not including the next "\r\n", or

* "\n".

*

* @throws java.io.EOFException if the stream is exhausted before the next newline

* character.

*/

public static String readAsciiLine(InputStream in) throws IOException {

// TODO: support UTF-8 here instead

StringBuilder result = new StringBuilder(80);

while (true) {

int c = in.read();

if (c == -1) {

throw new EOFException();

} else if (c == '\n') {

break;

} result.append((char) c);

}

int length = result.length();

if (length > 0 && result.charAt(length - 1) == '\r') {

result.setLength(length - 1);

}

return result.toString();

} /**

* Closes 'closeable', ignoring any checked exceptions. Does nothing if 'closeable' is null.

*/

public static void closeQuietly(Closeable closeable) {

if (closeable != null) {

try {

closeable.close();

} catch (RuntimeException rethrown) {

throw rethrown;

} catch (Exception ignored) {

}

}

} /**

* Recursively delete everything in {@code dir}.

*/

// TODO: this should specify paths as Strings rather than as Files

public static void deleteContents(File dir) throws IOException {

File[] files = dir.listFiles();

if (files == null) {

throw new IllegalArgumentException("not a directory: " + dir);

}

for (File file : files) {

if (file.isDirectory()) {

deleteContents(file);

}

if (!file.delete()) {

throw new IOException("failed to delete file: " + file);

}

}

} /** This cache uses a single background thread to evict entries. */

private final ExecutorService executorService = new ThreadPoolExecutor(0, 1,

60L, TimeUnit.SECONDS, new LinkedBlockingQueue<Runnable>());

private final Callable<Void> cleanupCallable = new Callable<Void>() {

@Override public Void call() throws Exception {

synchronized (DiskLruCache.this) {

if (journalWriter == null) {

return null; // closed

}

trimToSize();

if (journalRebuildRequired()) {

rebuildJournal();

redundantOpCount = 0;

}

}

return null;

}

};

private DiskLruCache(File directory, int appVersion, int valueCount, long maxSize) {

this.directory = directory;

this.appVersion = appVersion;

this.journalFile = new File(directory, JOURNAL_FILE);

this.journalFileTmp = new File(directory, JOURNAL_FILE_TMP);

this.valueCount = valueCount;

this.maxSize = maxSize;

} /**

* Opens the cache in {@code directory}, creating a cache if none exists

* there.

*

* @param directory a writable directory

* @param appVersion

* @param valueCount the number of values per cache entry. Must be positive.

* @param maxSize the maximum number of bytes this cache should use to store

* @throws java.io.IOException if reading or writing the cache directory fails

*/

public static DiskLruCache open(File directory, int appVersion, int valueCount, long maxSize)

throws IOException {

if (maxSize <= 0) {

throw new IllegalArgumentException("maxSize <= 0");

}

if (valueCount <= 0) {

throw new IllegalArgumentException("valueCount <= 0");

} // prefer to pick up where we left off

DiskLruCache cache = new DiskLruCache(directory, appVersion, valueCount, maxSize);

if (cache.journalFile.exists()) {

try {

cache.readJournal();

cache.processJournal();

cache.journalWriter = new BufferedWriter(new FileWriter(cache.journalFile, true),

IO_BUFFER_SIZE);

return cache;

} catch (IOException journalIsCorrupt) {

// System.logW("DiskLruCache " + directory + " is corrupt: "

// + journalIsCorrupt.getMessage() + ", removing");

cache.delete();

}

} // create a new empty cache

directory.mkdirs();

cache = new DiskLruCache(directory, appVersion, valueCount, maxSize);

cache.rebuildJournal();

return cache;

} private void readJournal() throws IOException {

InputStream in = new BufferedInputStream(new FileInputStream(journalFile), IO_BUFFER_SIZE);

try {

String magic = readAsciiLine(in);

String version = readAsciiLine(in);

String appVersionString = readAsciiLine(in);

String valueCountString = readAsciiLine(in);

String blank = readAsciiLine(in);

if (!MAGIC.equals(magic)

|| !VERSION_1.equals(version)

|| !Integer.toString(appVersion).equals(appVersionString)

|| !Integer.toString(valueCount).equals(valueCountString)

|| !"".equals(blank)) {

throw new IOException("unexpected journal header: ["

+ magic + ", " + version + ", " + appVersionString + ", " + valueCountString + ", " + blank + "]");

} while (true) {

try {

readJournalLine(readAsciiLine(in));

} catch (EOFException endOfJournal) {

break;

}

}

} finally {

closeQuietly(in);

}

} private void readJournalLine(String line) throws IOException {

String[] parts = line.split(" ");

if (parts.length < 2) {

throw new IOException("unexpected journal line: " + line);

} String key = parts[1];

if (parts[0].equals(REMOVE) && parts.length == 2) {

lruEntries.remove(key);

return;

} Entry entry = lruEntries.get(key);

if (entry == null) {

entry = new Entry(key);

lruEntries.put(key, entry);

} if (parts[0].equals(CLEAN) && parts.length == 2 + valueCount) {

entry.readable = true;

entry.currentEditor = null;

entry.setLengths(copyOfRange(parts, 2, parts.length));

} else if (parts[0].equals(DIRTY) && parts.length == 2) {

entry.currentEditor = new Editor(entry);

} else if (parts[0].equals(READ) && parts.length == 2) {

// this work was already done by calling lruEntries.get()

} else {

throw new IOException("unexpected journal line: " + line);

}

} /**

* Computes the initial size and collects garbage as a part of opening the

* cache. Dirty entries are assumed to be inconsistent and will be deleted.

*/

private void processJournal() throws IOException {

deleteIfExists(journalFileTmp);

for (Iterator<Entry> i = lruEntries.values().iterator(); i.hasNext(); ) {

Entry entry = i.next();

if (entry.currentEditor == null) {

for (int t = 0; t < valueCount; t++) {

size += entry.lengths[t];

}

} else {

entry.currentEditor = null;

for (int t = 0; t < valueCount; t++) {

deleteIfExists(entry.getCleanFile(t));

deleteIfExists(entry.getDirtyFile(t));

}

i.remove();

}

}

} /**

* Creates a new journal that omits redundant information. This replaces the

* current journal if it exists.

*/

private synchronized void rebuildJournal() throws IOException {

if (journalWriter != null) {

journalWriter.close();

} Writer writer = new BufferedWriter(new FileWriter(journalFileTmp), IO_BUFFER_SIZE);

writer.write(MAGIC);

writer.write("\n");

writer.write(VERSION_1);

writer.write("\n");

writer.write(Integer.toString(appVersion));

writer.write("\n");

writer.write(Integer.toString(valueCount));

writer.write("\n");

writer.write("\n");

for (Entry entry : lruEntries.values()) {

if (entry.currentEditor != null) {

writer.write(DIRTY + ' ' + entry.key + '\n');

} else {

writer.write(CLEAN + ' ' + entry.key + entry.getLengths() + '\n');

}

} writer.close();

journalFileTmp.renameTo(journalFile);

journalWriter = new BufferedWriter(new FileWriter(journalFile, true), IO_BUFFER_SIZE);

} private static void deleteIfExists(File file) throws IOException {

// try {

// Libcore.os.remove(file.getPath());

// } catch (ErrnoException errnoException) {

// if (errnoException.errno != OsConstants.ENOENT) {

// throw errnoException.rethrowAsIOException();

// }

// }

if (file.exists() && !file.delete()) {

throw new IOException();

}

} /**

* Returns a snapshot of the entry named {@code key}, or null if it doesn't

* exist or is not currently readable. If a value is returned, it is moved to

* the head of the LRU queue.

*/

public synchronized Snapshot get(String key) throws IOException {

checkNotClosed();

validateKey(key);

Entry entry = lruEntries.get(key);

if (entry == null) {

return null;

} if (!entry.readable) {

return null;

} /*

* Open all streams eagerly to guarantee that we see a single published

* snapshot. If we opened streams lazily then the streams could come

* from different edits.

*/

InputStream[] ins = new InputStream[valueCount];

try {

for (int i = 0; i < valueCount; i++) {

ins[i] = new FileInputStream(entry.getCleanFile(i));

}

} catch (FileNotFoundException e) {

// a file must have been deleted manually!

return null;

} redundantOpCount++;

journalWriter.append(READ + ' ' + key + '\n');

if (journalRebuildRequired()) {

executorService.submit(cleanupCallable);

} return new Snapshot(key, entry.sequenceNumber, ins);

} /**

* Returns an editor for the entry named {@code key}, or null if another

* edit is in progress.

*/

public Editor edit(String key) throws IOException {

return edit(key, ANY_SEQUENCE_NUMBER);

} private synchronized Editor edit(String key, long expectedSequenceNumber) throws IOException {

checkNotClosed();

validateKey(key);

Entry entry = lruEntries.get(key);

if (expectedSequenceNumber != ANY_SEQUENCE_NUMBER

&& (entry == null || entry.sequenceNumber != expectedSequenceNumber)) {

return null; // snapshot is stale

}

if (entry == null) {

entry = new Entry(key);

lruEntries.put(key, entry);

} else if (entry.currentEditor != null) {

return null; // another edit is in progress

} Editor editor = new Editor(entry);

entry.currentEditor = editor; // flush the journal before creating files to prevent file leaks

journalWriter.write(DIRTY + ' ' + key + '\n');

journalWriter.flush();

return editor;

} /**

* Returns the directory where this cache stores its data.

*/

public File getDirectory() {

return directory;

} /**

* Returns the maximum number of bytes that this cache should use to store

* its data.

*/

public long maxSize() {

return maxSize;

} /**

* Returns the number of bytes currently being used to store the values in

* this cache. This may be greater than the max size if a background

* deletion is pending.

*/

public synchronized long size() {

return size;

} private synchronized void completeEdit(Editor editor, boolean success) throws IOException {

Entry entry = editor.entry;

if (entry.currentEditor != editor) {

throw new IllegalStateException();

} // if this edit is creating the entry for the first time, every index must have a value

if (success && !entry.readable) {

for (int i = 0; i < valueCount; i++) {

if (!entry.getDirtyFile(i).exists()) {

editor.abort();

throw new IllegalStateException("edit didn't create file " + i);

}

}

} for (int i = 0; i < valueCount; i++) {

File dirty = entry.getDirtyFile(i);

if (success) {

if (dirty.exists()) {

File clean = entry.getCleanFile(i);

dirty.renameTo(clean);

long oldLength = entry.lengths[i];

long newLength = clean.length();

entry.lengths[i] = newLength;

size = size - oldLength + newLength;

}

} else {

deleteIfExists(dirty);

}

} redundantOpCount++;

entry.currentEditor = null;

if (entry.readable || success) {

entry.readable = true;

journalWriter.write(CLEAN + ' ' + entry.key + entry.getLengths() + '\n');

if (success) {

entry.sequenceNumber = nextSequenceNumber++;

}

} else {

lruEntries.remove(entry.key);

journalWriter.write(REMOVE + ' ' + entry.key + '\n');

} if (size > maxSize || journalRebuildRequired()) {

executorService.submit(cleanupCallable);

}

} /**

* We only rebuild the journal when it will halve the size of the journal

* and eliminate at least 2000 ops.

*/

private boolean journalRebuildRequired() {

final int REDUNDANT_OP_COMPACT_THRESHOLD = 2000;

return redundantOpCount >= REDUNDANT_OP_COMPACT_THRESHOLD

&& redundantOpCount >= lruEntries.size();

} /**

* Drops the entry for {@code key} if it exists and can be removed. Entries

* actively being edited cannot be removed.

*

* @return true if an entry was removed.

*/

public synchronized boolean remove(String key) throws IOException {

checkNotClosed();

validateKey(key);

Entry entry = lruEntries.get(key);

if (entry == null || entry.currentEditor != null) {

return false;

} for (int i = 0; i < valueCount; i++) {

File file = entry.getCleanFile(i);

if (!file.delete()) {

throw new IOException("failed to delete " + file);

}

size -= entry.lengths[i];

entry.lengths[i] = 0;

} redundantOpCount++;

journalWriter.append(REMOVE + ' ' + key + '\n');

lruEntries.remove(key);

if (journalRebuildRequired()) {

executorService.submit(cleanupCallable);

} return true;

} /**

* Returns true if this cache has been closed.

*/

public boolean isClosed() {

return journalWriter == null;

} private void checkNotClosed() {

if (journalWriter == null) {

throw new IllegalStateException("cache is closed");

}

} /**

* Force buffered operations to the filesystem.

*/

public synchronized void flush() throws IOException {

checkNotClosed();

trimToSize();

journalWriter.flush();

} /**

* Closes this cache. Stored values will remain on the filesystem.

*/

public synchronized void close() throws IOException {

if (journalWriter == null) {

return; // already closed

}

for (Entry entry : new ArrayList<Entry>(lruEntries.values())) {

if (entry.currentEditor != null) {

entry.currentEditor.abort();

}

}

trimToSize();

journalWriter.close();

journalWriter = null;

} private void trimToSize() throws IOException {

while (size > maxSize) {

// Map.Entry<String, Entry> toEvict = lruEntries.eldest();

final Map.Entry<String, Entry> toEvict = lruEntries.entrySet().iterator().next();

remove(toEvict.getKey());

}

} /**

* Closes the cache and deletes all of its stored values. This will delete

* all files in the cache directory including files that weren't created by

* the cache.

*/

public void delete() throws IOException {

close();

deleteContents(directory);

} private void validateKey(String key) {

if (key.contains(" ") || key.contains("\n") || key.contains("\r")) {

throw new IllegalArgumentException(

"keys must not contain spaces or newlines: \"" + key + "\"");

}

} private static String inputStreamToString(InputStream in) throws IOException {

return readFully(new InputStreamReader(in, UTF_8));

} /**

* A snapshot of the values for an entry.

*/

public final class Snapshot implements Closeable {

private final String key;

private final long sequenceNumber;

private final InputStream[] ins;

private Snapshot(String key, long sequenceNumber, InputStream[] ins) {

this.key = key;

this.sequenceNumber = sequenceNumber;

this.ins = ins;

} /**

* Returns an editor for this snapshot's entry, or null if either the

* entry has changed since this snapshot was created or if another edit

* is in progress.

*/

public Editor edit() throws IOException {

return DiskLruCache.this.edit(key, sequenceNumber);

} /**

* Returns the unbuffered stream with the value for {@code index}.

*/

public InputStream getInputStream(int index) {

return ins[index];

} /**

* Returns the string value for {@code index}.

*/

public String getString(int index) throws IOException {

return inputStreamToString(getInputStream(index));

} @Override public void close() {

for (InputStream in : ins) {

closeQuietly(in);

}

}

} /**

* Edits the values for an entry.

*/

public final class Editor {

private final Entry entry;

private boolean hasErrors;

private Editor(Entry entry) {

this.entry = entry;

} /**

* Returns an unbuffered input stream to read the last committed value,

* or null if no value has been committed.

*/

public InputStream newInputStream(int index) throws IOException {

synchronized (DiskLruCache.this) {

if (entry.currentEditor != this) {

throw new IllegalStateException();

}

if (!entry.readable) {

return null;

}

return new FileInputStream(entry.getCleanFile(index));

}

} /**

* Returns the last committed value as a string, or null if no value

* has been committed.

*/

public String getString(int index) throws IOException {

InputStream in = newInputStream(index);

return in != null ? inputStreamToString(in) : null;

} /**

* Returns a new unbuffered output stream to write the value at

* {@code index}. If the underlying output stream encounters errors

* when writing to the filesystem, this edit will be aborted when

* {@link #commit} is called. The returned output stream does not throw

* IOExceptions.

*/

public OutputStream newOutputStream(int index) throws IOException {

synchronized (DiskLruCache.this) {

if (entry.currentEditor != this) {

throw new IllegalStateException();

}

return new FaultHidingOutputStream(new FileOutputStream(entry.getDirtyFile(index)));

}

} /**

* Sets the value at {@code index} to {@code value}.

*/

public void set(int index, String value) throws IOException {

Writer writer = null;

try {

writer = new OutputStreamWriter(newOutputStream(index), UTF_8);

writer.write(value);

} finally {

closeQuietly(writer);

}

} /**

* Commits this edit so it is visible to readers. This releases the

* edit lock so another edit may be started on the same key.

*/

public void commit() throws IOException {

if (hasErrors) {

completeEdit(this, false);

remove(entry.key); // the previous entry is stale

} else {

completeEdit(this, true);

}

} /**

* Aborts this edit. This releases the edit lock so another edit may be

* started on the same key.

*/

public void abort() throws IOException {

completeEdit(this, false);

} private class FaultHidingOutputStream extends FilterOutputStream {

private FaultHidingOutputStream(OutputStream out) {

super(out);

} @Override public void write(int oneByte) {

try {

out.write(oneByte);

} catch (IOException e) {

hasErrors = true;

}

} @Override public void write(byte[] buffer, int offset, int length) {

try {

out.write(buffer, offset, length);

} catch (IOException e) {

hasErrors = true;

}

} @Override public void close() {

try {

out.close();

} catch (IOException e) {

hasErrors = true;

}

} @Override public void flush() {

try {

out.flush();

} catch (IOException e) {

hasErrors = true;

}

}

}

} private final class Entry {

private final String key;

/** Lengths of this entry's files. */

private final long[] lengths;

/** True if this entry has ever been published */

private boolean readable;

/** The ongoing edit or null if this entry is not being edited. */

private Editor currentEditor;

/** The sequence number of the most recently committed edit to this entry. */

private long sequenceNumber;

private Entry(String key) {

this.key = key;

this.lengths = new long[valueCount];

} public String getLengths() throws IOException {

StringBuilder result = new StringBuilder();

for (long size : lengths) {

result.append(' ').append(size);

}

return result.toString();

} /**

* Set lengths using decimal numbers like "10123".

*/

private void setLengths(String[] strings) throws IOException {

if (strings.length != valueCount) {

throw invalidLengths(strings);

} try {

for (int i = 0; i < strings.length; i++) {

lengths[i] = Long.parseLong(strings[i]);

}

} catch (NumberFormatException e) {

throw invalidLengths(strings);

}

} private IOException invalidLengths(String[] strings) throws IOException {

throw new IOException("unexpected journal line: " + Arrays.toString(strings));

} public File getCleanFile(int i) {

return new File(directory, key + "." + i);

} public File getDirtyFile(int i) {

return new File(directory, key + "." + i + ".tmp");

}

}

}
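To make the journal format described in the comment near the top of this class more concrete, here is a minimal, simplified sketch of replaying such a journal into an access-ordered LinkedHashMap, which is roughly what `readJournal()`, `readJournalLine()` and `processJournal()` do together. `JournalReplay`, `replay` and `totalSize` are hypothetical names of my own; unlike the real code this sketch touches no files, and it simply ignores a READ for a key it has not seen (the real `readJournalLine()` would create an empty entry for it).

```java
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Set;

// Hypothetical helper: a simplified, file-free replay of a DiskLruCache journal.
class JournalReplay {
    static final String MAGIC = "libcore.io.DiskLruCache";

    /** Returns surviving entries, least recently used first, mapped to their value lengths. */
    static LinkedHashMap<String, long[]> replay(List<String> lines) {
        if (lines.size() < 5 || !MAGIC.equals(lines.get(0)) || !"1".equals(lines.get(1))
                || !"".equals(lines.get(4))) {
            throw new IllegalArgumentException("unexpected journal header");
        }
        int valueCount = Integer.parseInt(lines.get(3));
        // accessOrder = true: get()/put() on an existing key moves it to the most-recent end.
        LinkedHashMap<String, long[]> entries = new LinkedHashMap<>(16, 0.75f, true);
        Set<String> pendingEdits = new HashSet<>();
        for (String line : lines.subList(5, lines.size())) {
            String[] parts = line.split(" ");
            String state = parts[0];
            String key = parts[1];
            switch (state) {
                case "CLEAN": {
                    long[] lengths = new long[valueCount];
                    for (int i = 0; i < valueCount; i++) {
                        lengths[i] = Long.parseLong(parts[2 + i]);
                    }
                    entries.put(key, lengths);
                    pendingEdits.remove(key); // the edit was published
                    break;
                }
                case "DIRTY":
                    pendingEdits.add(key); // an edit has started
                    break;
                case "REMOVE":
                    entries.remove(key);
                    pendingEdits.remove(key);
                    break;
                case "READ":
                    entries.get(key); // only effect: bump the key to most recently used
                    break;
                default:
                    throw new IllegalArgumentException("unexpected journal line: " + line);
            }
        }
        // DIRTY without a matching CLEAN/REMOVE means the edit was interrupted: drop the entry.
        for (String key : pendingEdits) {
            entries.remove(key);
        }
        return entries;
    }

    /** Sum of all value lengths, i.e. the initial `size` the cache would compute. */
    static long totalSize(LinkedHashMap<String, long[]> entries) {
        long total = 0;
        for (long[] lengths : entries.values()) {
            for (long len : lengths) {
                total += len;
            }
        }
        return total;
    }
}
```

Fed the sample journal from that comment, the surviving entries are `1ab96a171faeeee38496d8b330771a7a` and `3400330d1dfc7f3f7f4b8d4d803dfcf6` (eldest first, because the final READ moves `3400330d...` to the most-recent end), totalling 23720 bytes; appending a trailing `DIRTY 3400330d...` line drops that entry, mirroring how `processJournal()` discards interrupted edits.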

Source of the old BitmapFun version: http://files.cnblogs.com/xiaoQLu/bitmapfun_old.rar

/*

* Copyright (C) 2012 The Android Open Source Project

*

* Licensed under the Apache License, Version 2.0 (the "License");

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package com.example.android.bitmapfun.util;

import android.content.Context;

import android.graphics.Bitmap;

import android.graphics.Bitmap.CompressFormat;

import android.graphics.BitmapFactory;

import android.os.Environment;

import android.util.Log;

import com.example.android.bitmapfun.BuildConfig;

import java.io.BufferedOutputStream;

import java.io.File;

import java.io.FileNotFoundException;

import java.io.FileOutputStream;

import java.io.FilenameFilter;

import java.io.IOException;

import java.io.OutputStream;

import java.io.UnsupportedEncodingException;

import java.net.URLEncoder;

import java.util.Collections;

import java.util.LinkedHashMap;

import java.util.Map;

import java.util.Map.Entry;

/**

* A simple disk LRU bitmap cache to illustrate how a disk cache would be used for bitmap caching. A

* much more robust and efficient disk LRU cache solution can be found in the ICS source code

* (libcore/luni/src/main/java/libcore/io/DiskLruCache.java) and is preferable to this simple

* implementation.

*/

public class DiskLruCache {

private static final String TAG = "DiskLruCache";

private static final String CACHE_FILENAME_PREFIX = "cache_";

private static final int MAX_REMOVALS = 4;

private static final int INITIAL_CAPACITY = 32;

private static final float LOAD_FACTOR = 0.75f;

private final File mCacheDir;

private int cacheSize = 0;

private int cacheByteSize = 0;

private final int maxCacheItemSize = 64; // 64 item default

private long maxCacheByteSize = 1024 * 1024 * 5; // 5MB default

private CompressFormat mCompressFormat = CompressFormat.JPEG;

private int mCompressQuality = 70;

private final Map<String, String> mLinkedHashMap =

Collections.synchronizedMap(new LinkedHashMap<String, String>(

INITIAL_CAPACITY, LOAD_FACTOR, true)); /**

* A filename filter to use to identify the cache filenames which have CACHE_FILENAME_PREFIX

* prepended.

*/

private static final FilenameFilter cacheFileFilter = new FilenameFilter() {

@Override

public boolean accept(File dir, String filename) {

return filename.startsWith(CACHE_FILENAME_PREFIX);

}

}; /**

* Used to fetch an instance of DiskLruCache.

*

* @param context

* @param cacheDir

* @param maxByteSize

* @return

*/

public static DiskLruCache openCache(Context context, File cacheDir, long maxByteSize) {

if (!cacheDir.exists()) {

cacheDir.mkdir();

} if (cacheDir.isDirectory() && cacheDir.canWrite()

&& Utils.getUsableSpace(cacheDir) > maxByteSize) {

return new DiskLruCache(cacheDir, maxByteSize);

} return null;

} /**

* Constructor that should not be called directly, instead use

* {@link DiskLruCache#openCache(Context, File, long)} which runs some extra checks before

* creating a DiskLruCache instance.

*

* @param cacheDir

* @param maxByteSize

*/

private DiskLruCache(File cacheDir, long maxByteSize) {

mCacheDir = cacheDir;

maxCacheByteSize = maxByteSize;

} /**

* Add a bitmap to the disk cache.

*

* @param key A unique identifier for the bitmap.

* @param data The bitmap to store.

*/

public void put(String key, Bitmap data) {

synchronized (mLinkedHashMap) {

if (mLinkedHashMap.get(key) == null) {

try {

final String file = createFilePath(mCacheDir, key);

if (writeBitmapToFile(data, file)) {

put(key, file);

flushCache();

}

} catch (final FileNotFoundException e) {

Log.e(TAG, "Error in put: " + e.getMessage());

} catch (final IOException e) {

Log.e(TAG, "Error in put: " + e.getMessage());

}

}

}

} private void put(String key, String file) {

mLinkedHashMap.put(key, file);

cacheSize = mLinkedHashMap.size();

cacheByteSize += new File(file).length();

} /**

* Flush the cache, removing oldest entries if the total size is over the specified cache size.

* Note that this isn't keeping track of stale files in the cache directory that aren't in the

* HashMap. If the images and keys in the disk cache change often then they probably won't ever

* be removed.

*/

private void flushCache() {

Entry<String, String> eldestEntry;

File eldestFile;

long eldestFileSize;

int count = 0; while (count < MAX_REMOVALS &&

(cacheSize > maxCacheItemSize || cacheByteSize > maxCacheByteSize)) {

eldestEntry = mLinkedHashMap.entrySet().iterator().next();

eldestFile = new File(eldestEntry.getValue());

eldestFileSize = eldestFile.length();

mLinkedHashMap.remove(eldestEntry.getKey());

eldestFile.delete();

cacheSize = mLinkedHashMap.size();

cacheByteSize -= eldestFileSize;

count++;

if (BuildConfig.DEBUG) {

Log.d(TAG, "flushCache - Removed cache file, " + eldestFile + ", "

+ eldestFileSize);

}

}

} /**

* Get an image from the disk cache.

*

* @param key The unique identifier for the bitmap

* @return The bitmap or null if not found

*/

public Bitmap get(String key) {

synchronized (mLinkedHashMap) {

final String file = mLinkedHashMap.get(key);

if (file != null) {

if (BuildConfig.DEBUG) {

Log.d(TAG, "Disk cache hit");

}

return BitmapFactory.decodeFile(file);

} else {

final String existingFile = createFilePath(mCacheDir, key);

if (new File(existingFile).exists()) {

put(key, existingFile);

if (BuildConfig.DEBUG) {

Log.d(TAG, "Disk cache hit (existing file)");

}

return BitmapFactory.decodeFile(existingFile);

}

}

return null;

}

} /**

* Checks if a specific key exist in the cache.

*

* @param key The unique identifier for the bitmap

* @return true if found, false otherwise

*/

public boolean containsKey(String key) {

// See if the key is in our HashMap

if (mLinkedHashMap.containsKey(key)) {

return true;

} // Now check if there's an actual file that exists based on the key

final String existingFile = createFilePath(mCacheDir, key);

if (new File(existingFile).exists()) {

// File found, add it to the HashMap for future use

put(key, existingFile);

return true;

}

return false;

} /**

* Removes all disk cache entries from this instance cache dir

*/

public void clearCache() {

DiskLruCache.clearCache(mCacheDir);

} /**

* Removes all disk cache entries from the application cache directory in the uniqueName

* sub-directory.

*

* @param context The context to use

* @param uniqueName A unique cache directory name to append to the app cache directory

*/

public static void clearCache(Context context, String uniqueName) {

File cacheDir = getDiskCacheDir(context, uniqueName);

clearCache(cacheDir);

} /**

* Removes all disk cache entries from the given directory. This should not be called directly,

* call {@link DiskLruCache#clearCache(Context, String)} or {@link DiskLruCache#clearCache()}

* instead.

*

* @param cacheDir The directory to remove the cache files from

*/

private static void clearCache(File cacheDir) {

final File[] files = cacheDir.listFiles(cacheFileFilter);

if (files == null) {

return; // cacheDir does not exist or is not a directory

}

for (int i=0; i<files.length; i++) {

files[i].delete();

}

} /**

* Get a usable cache directory (external if available, internal otherwise).

*

* @param context The context to use

* @param uniqueName A unique directory name to append to the cache dir

* @return The cache dir

*/

public static File getDiskCacheDir(Context context, String uniqueName) { // Check if media is mounted or storage is built-in, if so, try and use external cache dir

// otherwise use internal cache dir

final String cachePath =

Environment.MEDIA_MOUNTED.equals(Environment.getExternalStorageState()) ||

!Utils.isExternalStorageRemovable() ?

Utils.getExternalCacheDir(context).getPath() :

context.getCacheDir().getPath();

return new File(cachePath + File.separator + uniqueName);

} /**

* Creates a constant cache file path given a target cache directory and an image key.

*

* @param cacheDir

* @param key

* @return

*/

public static String createFilePath(File cacheDir, String key) {

try {

// Use URLEncoder to ensure we have a valid filename, a tad hacky but it will do for

// this example

return cacheDir.getAbsolutePath() + File.separator +

CACHE_FILENAME_PREFIX + URLEncoder.encode(key.replace("*", ""), "UTF-8");

} catch (final UnsupportedEncodingException e) {

Log.e(TAG, "createFilePath - " + e);

} return null;

} /**

* Create a constant cache file path using the current cache directory and an image key.

*

* @param key

* @return

*/

public String createFilePath(String key) {

return createFilePath(mCacheDir, key);

} /**

* Sets the target compression format and quality for images written to the disk cache.

*

* @param compressFormat

* @param quality

*/

public void setCompressParams(CompressFormat compressFormat, int quality) {

mCompressFormat = compressFormat;

mCompressQuality = quality;

} /**

* Writes a bitmap to a file. Call {@link DiskLruCache#setCompressParams(CompressFormat, int)}

* first to set the target bitmap compression and format.

*

* @param bitmap

* @param file

* @return

*/

private boolean writeBitmapToFile(Bitmap bitmap, String file)

throws IOException, FileNotFoundException {

OutputStream out = null;

try {

out = new BufferedOutputStream(new FileOutputStream(file), Utils.IO_BUFFER_SIZE);

return bitmap.compress(mCompressFormat, mCompressQuality, out);

} finally {

if (out != null) {

out.close();

}

}

}

}
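One practical difference between the two classes is how keys become file names. The journal-based class only rejects spaces and line breaks in `validateKey()`, yet `getCleanFile()` builds `new File(directory, key + "." + i)`, so a raw URL containing `/` would point into a subdirectory that does not exist. The old class URL-encodes the key in `createFilePath()`. The sketch below puts both rules side by side in a hypothetical class `CacheKeys`; encoding the key first, the way the old class does, is only one assumed way of producing keys the journal-based class will accept.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

// Hypothetical helper contrasting how the two DiskLruCache versions above treat keys.
class CacheKeys {
    static final String CACHE_FILENAME_PREFIX = "cache_"; // same prefix as the old class

    /** Same steps as the old createFilePath(): strip '*', URL-encode as UTF-8, add the prefix. */
    static String oldStyleFileName(String key) {
        try {
            return CACHE_FILENAME_PREFIX + URLEncoder.encode(key.replace("*", ""), "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new AssertionError("UTF-8 is always supported", e);
        }
    }

    /** Same rule as validateKey() in the journal-based class: no spaces or line breaks. */
    static boolean isValidJournalKey(String key) {
        return !(key.contains(" ") || key.contains("\n") || key.contains("\r"));
    }
}
```

For example, `oldStyleFileName("http://a.com/x y.png")` yields `cache_http%3A%2F%2Fa.com%2Fx+y.png`: the slashes become `%2F` and the space becomes `+`, so the result passes `isValidJournalKey` and maps to a single file inside the cache directory.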

Why paste three versions of the same class? Because each one differs in some way.

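The latest version of the source is available from many places, which doesn't matter here. The catch is that its code uses many other classes: to use it you must also import those related classes, and they in turn depend on one another, which is a real hassle. So I don't really recommend the first source. If you insist on using it, download the source I provide at the end of this article, where I have already imported the classes DiskLruCache depends on.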

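The new BitmapFun version is completely self-contained. It is essentially a trimmed-down version of the first source, a single class you can copy straight into your project. It does have one minor issue, though. The heart of this cache class is its journal: every DIRTY, CLEAN, REMOVE and READ operation is appended to it, so that when the cache is reopened it can reconstruct which entries are used often and which are rarely used, and evict selectively. (While the cache is running, the LRU order itself is held in the access-ordered LinkedHashMap `lruEntries`.) The problem lies in the journal itself. It is stored on disk, so reading or writing it means I/O, and I/O is notoriously slow; frequent I/O inevitably hurts performance. Looking deeper, reading images from disk (images are just the example here; any file type works) ought to happen on multiple threads, yet only one thread may touch the journal at a time, so access has to be guarded by a lock (here, `synchronized` on the cache instance). Doing I/O under a lock is certainly much safer, but efficiency drops again.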
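The code above does try to keep that journal I/O in check: READ lines go through a BufferedWriter with an 8 KB buffer (`IO_BUFFER_SIZE`), on the hot path only `edit()` flushes right away, and redundant lines are periodically compacted on the single background thread in `executorService` (still inside `synchronized (DiskLruCache.this)`). Below is a minimal sketch of just the compaction rule, pulled out into a hypothetical class `JournalCompaction` so it runs without the cache. The class and method names are mine; the threshold of 2000 and the line format come from `journalRebuildRequired()` and `rebuildJournal()`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical helper mirroring journalRebuildRequired() and rebuildJournal() above.
class JournalCompaction {
    static final int REDUNDANT_OP_COMPACT_THRESHOLD = 2000; // same value as journalRebuildRequired()

    /** Compact once there are >= 2000 redundant lines and at least as many as live entries. */
    static boolean rebuildRequired(int redundantOpCount, int liveEntries) {
        return redundantOpCount >= REDUNDANT_OP_COMPACT_THRESHOLD
                && redundantOpCount >= liveEntries;
    }

    /**
     * The compacted journal: the 5-line header, then one line per live entry in map order
     * (DIRTY if an edit is in progress, otherwise CLEAN followed by the value lengths).
     */
    static List<String> rebuild(int appVersion, int valueCount,
                                Map<String, long[]> entries, Set<String> editing) {
        List<String> out = new ArrayList<>();
        out.add("libcore.io.DiskLruCache");
        out.add("1");
        out.add(Integer.toString(appVersion));
        out.add(Integer.toString(valueCount));
        out.add("");
        for (Map.Entry<String, long[]> e : entries.entrySet()) {
            if (editing.contains(e.getKey())) {
                out.add("DIRTY " + e.getKey());
            } else {
                StringBuilder line = new StringBuilder("CLEAN ").append(e.getKey());
                for (long len : e.getValue()) {
                    line.append(' ').append(len);
                }
                out.add(line.toString());
            }
        }
        return out;
    }
}
```

Because the rule requires the redundant lines to at least match the number of live entries, a rebuild drops at least half of the journal's record lines, which is what the comment on `journalRebuildRequired()` means by "halve the size of the journal".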

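The old BitmapFun version uses no journal file at all. It keeps the access order only in memory, in an access-ordered LinkedHashMap, starting from the moment the app first accesses the cache on the sdcard. This avoids the frequent I/O and is a simple, workable approach, though the ordering is lost when the process exits. This code was extracted from BitmapFun, so the objects it caches are Bitmaps and it is not very general; if you want to use it, adapt it yourself.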
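The idea behind the old version can be sketched as a pure in-memory index: an access-ordered LinkedHashMap from key to file size, trimmed from the eldest end whenever an item-count or byte limit is exceeded, much like `flushCache()`. `InMemoryLruIndex` is a hypothetical name; unlike the original it has no `MAX_REMOVALS` cap per call and deletes no files, and, like the original, it forgets the ordering when the process exits.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of the journal-free approach: LRU order lives only in memory.
class InMemoryLruIndex {
    // accessOrder = true: iteration starts at the least recently used key
    private final LinkedHashMap<String, Long> sizes = new LinkedHashMap<>(32, 0.75f, true);
    private final int maxItems;
    private final long maxBytes;
    private long totalBytes = 0;

    InMemoryLruIndex(int maxItems, long maxBytes) {
        this.maxItems = maxItems;
        this.maxBytes = maxBytes;
    }

    /** Records a cached file's size and returns the keys evicted to stay within both limits. */
    synchronized List<String> put(String key, long bytes) {
        Long previous = sizes.put(key, bytes);
        if (previous != null) {
            totalBytes -= previous;
        }
        totalBytes += bytes;
        List<String> evicted = new ArrayList<>();
        Iterator<Map.Entry<String, Long>> it = sizes.entrySet().iterator();
        while ((sizes.size() > maxItems || totalBytes > maxBytes) && it.hasNext()) {
            Map.Entry<String, Long> eldest = it.next();
            totalBytes -= eldest.getValue();
            evicted.add(eldest.getKey());
            it.remove(); // a real cache would also delete the file here
        }
        return evicted;
    }

    /** A lookup counts as a use and moves the key to the most-recent end. */
    synchronized boolean touch(String key) {
        return sizes.get(key) != null;
    }

    synchronized List<String> keysEldestFirst() {
        return new ArrayList<>(sizes.keySet());
    }

    synchronized long totalBytes() {
        return totalBytes;
    }
}
```

With limits of 3 items and 100 bytes, putting `a` (40), then `b` (40), touching `a`, and putting `c` (40) evicts `b`: the touch made `b` the eldest, and the total had reached 120 bytes.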

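Summary: the first and second sources have exactly the same internal implementation; the second just consolidates the code so it is easy to drop into a project, so prefer the second. If you find that image loading is slow or inefficient with this class, you can replace it with the third source to improve efficiency. For image loading itself, I don't recommend BitmapFun; it is not very efficient.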

1.2 Analysis

Source: http://blog.csdn.net/linghu_java/article/details/8600053

Annotated source:

/*
* Copyright (C) 2011 The Android Open Source Project
*
* Licensed under the Apache License, Version 2.0 (the "License");
* you may not use this file except in compliance with the License.
* You may obtain a copy of the License at
*
* http://www.apache.org/licenses/LICENSE-2.0
*
* Unless required by applicable law or agreed to in writing, software
* distributed under the License is distributed on an "AS IS" BASIS,
* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
* See the License for the specific language governing permissions and
* limitations under the License.
*/

package com.example.android.bitmapfun.util;

import java.io.BufferedInputStream;
import java.io.BufferedWriter;
import java.io.Closeable;
import java.io.EOFException;
import java.io.File;
import java.io.FileInputStream;
import java.io.FileNotFoundException;
import java.io.FileOutputStream;
import java.io.FileWriter;
import java.io.FilterOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.io.OutputStreamWriter;
import java.io.Reader;
import java.io.StringWriter;
import java.io.Writer;
import java.lang.reflect.Array;
import java.nio.charset.Charset;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/**

******************************************************************************
* Taken from the JB source code, can be found in:
* libcore/luni/src/main/java/libcore/io/DiskLruCache.java
* or direct link:
* https://android.googlesource.com/platform/libcore/+/android-4.1.1_r1/luni/src/main/java/libcore/io/DiskLruCache.java
******************************************************************************
*
* A cache that uses a bounded amount of space on a filesystem. Each cache
* entry has a string key and a fixed number of values. Values are byte
* sequences, accessible as streams or files. Each value must be between {@code
* 0} and {@code Integer.MAX_VALUE} bytes in length.
* In short: a file cache with a bounded size. Each entry has one key and a fixed number
* of values; each value is a byte sequence accessed as a stream or a file, 0 to
* Integer.MAX_VALUE bytes long.
*
* <p>The cache stores its data in a directory on the filesystem. This
* directory must be exclusive to the cache; the cache may delete or overwrite
* files from its directory. It is an error for multiple processes to use the
* same cache directory at the same time.
* In short: data lives in a directory used exclusively by this cache, which may delete
* or overwrite files in it; two processes must not use the same directory at the same time.
*
* <p>This cache limits the number of bytes that it will store on the
* filesystem. When the number of stored bytes exceeds the limit, the cache will
* remove entries in the background until the limit is satisfied. The limit is
* not strict: the cache may temporarily exceed it while waiting for files to be
* deleted. The limit does not include filesystem overhead or the cache
* journal so space-sensitive applications should set a conservative limit.
* In short: once the size limit is exceeded, entries are removed in the background until
* it is met again. The limit is soft: the cache may briefly exceed it while files are being
* deleted. Filesystem overhead and the journal are not counted, so space-sensitive
* applications should choose a conservative limit.
*
* <p>Clients call {@link #edit} to create or update the values of an entry. An
* entry may have only one editor at one time; if a value is not available to be
* edited then {@link #edit} will return null.
* <ul>
* <li>When an entry is being <strong>created</strong> it is necessary to
* supply a full set of values; the empty value should be used as a
* placeholder if necessary.
* <li>When an entry is being <strong>edited</strong>, it is not necessary
* to supply data for every value; values default to their previous
* value.
* </ul>
* Every {@link #edit} call must be matched by a call to {@link Editor#commit}
* or {@link Editor#abort}. Committing is atomic: a read observes the full set
* of values as they were before or after the commit, but never a mix of values.
* In short: edit() creates or updates an entry; an entry can have only one editor at a time,
* and edit() returns null if the entry cannot be edited. A new entry needs all of its values
* (use an empty value as a placeholder); an edit of an existing entry may supply only some
* values, and the rest keep their previous contents. Every edit() must end with commit() or
* abort(). Commit is atomic: a reader sees either all the old values or all the new ones,
* never a mix.
*
* <p>Clients call {@link #get} to read a snapshot of an entry. The read will
* observe the value at the time that {@link #get} was called. Updates and
* removals after the call do not impact ongoing reads.
* In short: get() returns a snapshot of the entry as it was when get() was called;
* later updates or removals do not affect reads already in progress.
*
* <p>This class is tolerant of some I/O errors. If files are missing from the
* filesystem, the corresponding entries will be dropped from the cache. If
* an error occurs while writing a cache value, the edit will fail silently.
* Callers should handle other problems by catching {@code IOException} and
* responding appropriately.
* In short: some I/O errors are tolerated. If a file is missing, its entry is dropped;
* if writing a value fails, the edit fails silently. Callers should catch IOException
* to handle any other problems.
*/

public final class DiskLruCache implements Closeable {
static final String JOURNAL_FILE = "journal";
static final String JOURNAL_FILE_TMP = "journal.tmp";
static final String MAGIC = "libcore.io.DiskLruCache";
static final String VERSION_1 = "1";
static final long ANY_SEQUENCE_NUMBER = -1;
private static final String CLEAN = "CLEAN";
private static final String DIRTY = "DIRTY";
private static final String REMOVE = "REMOVE";
private static final String READ = "READ";
private static final Charset UTF_8 = Charset.forName("UTF-8");
private static final int IO_BUFFER_SIZE = 8 * 1024; // 8 KB

/*

* This cache uses a journal file named "journal". A typical journal file

* looks like this:

* libcore.io.DiskLruCache

* 1 //the disk cache's version

* 100 //the application's version

* 2 //value count

*

* //state key optional

* CLEAN 3400330d1dfc7f3f7f4b8d4d803dfcf6 832 21054