Big Data Series: Hive Data Warehouse Commands and JDBC Connection

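Hive blog series, continuously updated~~~

Big Data Series: How the Hive Data Warehouse Works

Big Data Series: Installing the Hive Data Warehouse

Big Data Series: Using Partitions in the Hive Data Warehouse

Big Data Series: Hive Data Warehouse Commands and JDBC Connection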

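This article covers how Hive is used, both from the command line and from Java via JDBC.

In the Hadoop project, HDFS solves distributed file storage and MapReduce solves distributed computation. An earlier post covered MapReduce (MR below) in the Hadoop ecosystem: Big Data Series: MapReduce, the Distributed Batch-Processing Engine, in Practice. HBase handles the storage and retrieval of one kind of data. However, querying files on HDFS or tables in HBase means hand-writing a pile of MapReduce classes. That is tedious, and only programmers who know MapReduce can do it. It is very inconvenient for business users and data scientists, who are used to working with an RDBMS through SQL. A way to query files and data with SQL is therefore needed, and that is the need Hive meets.

For example, the WordCount job that counts word frequencies with MR can be written as a single HQL statement: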

select word,count(*) as totalNum from t_word group by word order by totalNum desc
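To make it concrete what that one HQL statement computes, here is a small sketch of the same result in plain Java on an in-memory list. GROUP BY becomes one counter per distinct word, and ORDER BY ... DESC becomes a sort on the count. It only illustrates the query's semantics. It assumes t_word holds one word per row. The class name WordCountSketch and the sample words are hypothetical. Hive itself compiles the query into MR jobs rather than running anything like this.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

public class WordCountSketch {

    // Same result as: select word, count(*) as totalNum from t_word
    //                 group by word order by totalNum desc
    public static List<Map.Entry<String, Long>> countWords(List<String> words) {
        // GROUP BY word + count(*): one counter per distinct word
        Map<String, Long> counts = words.stream()
                .collect(Collectors.groupingBy(w -> w, Collectors.counting()));
        // ORDER BY totalNum DESC
        List<Map.Entry<String, Long>> result = new ArrayList<>(counts.entrySet());
        result.sort(Map.Entry.<String, Long>comparingByValue().reversed());
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical contents of t_word, one word per row
        List<String> words = Arrays.asList("hive", "hadoop", "hive", "hdfs", "hive", "hadoop");
        for (Map.Entry<String, Long> e : countWords(words)) {
            System.out.println(e.getKey() + "\t" + e.getValue());
        }
    }
}
```

With the sample words this prints hive (3), hadoop (2) and hdfs (1), in that order.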

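Typical Hive use cases:

1. Log analysis;

2. Counting a website's PV and UV over a time period;

3. Multi-dimensional data analysis;

4. Offline analysis of massive structured data;

5. Low-cost data analysis (no MR code to write).

Why partitions (Partition) matter in Hive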

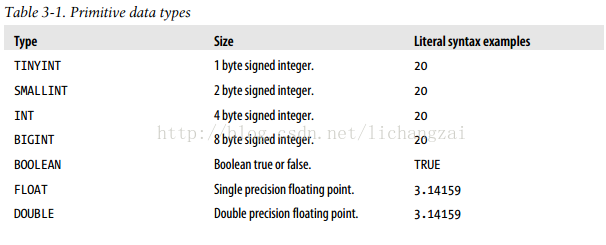

1. Hive data types

1.1 Primitive data types:

1.2 Hive collection types:

2. Hive command exercises:

Connecting to Hive:

beeline

!connect jdbc:hive2://master:10000/dbmfz mfz 111111

2.1 Commands using primitive data types

#Create the user table

create table if not EXISTS user_dimension(

uid String,

name string,

gender string,

birth date,

province string

) row format delimited

fields terminated by ',';

describe user_dimension;

show create table user_dimension;

#Create the brand table

create table if not EXISTS brand_dimension(

bid string,

category string,

brand string

)row format delimited

fields terminated by ',';

#Create the transaction (record) table

create table if not EXISTS record_dimension(

rid string,

uid string,

bid string,

price int,

source_province string,

target_province string,

site string,

express_number string,

express_company string,

trancation_date date

)row format delimited

fields terminated by ',';

show tables;

#Prepare the data files

user.data

brand.data

record.data

#Load the data

LOAD DATA LOCAL INPATH '/home/mfz/apache-hive-2.1.1-bin/hivedata/user.data' OVERWRITE INTO TABLE user_dimension;

LOAD DATA LOCAL INPATH '/home/mfz/apache-hive-2.1.1-bin/hivedata/brand.data' OVERWRITE INTO TABLE brand_dimension;

LOAD DATA LOCAL INPATH '/home/mfz/apache-hive-2.1.1-bin/hivedata/record.data' OVERWRITE INTO TABLE record_dimension;

#Verify

select * from user_dimension;

select * from brand_dimension;

select * from record_dimension;
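The tables above are declared with `row format delimited fields terminated by ','`. Each line of user.data is therefore split on commas, and the pieces are assigned to columns by position.

The sketch below is a simplified model of that mapping for user_dimension. It is not Hive's SerDe code. It assumes Hive's usual behaviour for text tables:

- missing trailing fields read as NULL;
- extra fields are ignored;
- a value that cannot be converted to the column type (here the DATE column) reads as NULL;
- `\N` is the default NULL marker.

The class name and the sample lines are hypothetical.

```java
import java.time.LocalDate;
import java.time.format.DateTimeParseException;
import java.util.Arrays;

public class DelimitedRowSketch {

    static final int COLUMNS = 5;            // uid, name, gender, birth, province
    static final int BIRTH_INDEX = 3;        // the only non-string column
    static final String NULL_MARKER = "\\N"; // backslash followed by N

    // Simplified model of reading one line of user.data into user_dimension
    public static Object[] parseUserRow(String line) {
        // limit -1 keeps trailing empty fields such as "u1,Tom,M,,"
        String[] parts = line.split(",", -1);
        Object[] row = new Object[COLUMNS];
        for (int i = 0; i < COLUMNS; i++) {
            // Missing trailing fields become NULL; fields beyond the last column are ignored
            String raw = i < parts.length ? parts[i] : null;
            if (NULL_MARKER.equals(raw)) {
                raw = null;
            }
            row[i] = (i == BIRTH_INDEX) ? toDate(raw) : raw;
        }
        return row;
    }

    // A value that cannot be converted to DATE is read as NULL instead of failing the query
    static LocalDate toDate(String raw) {
        if (raw == null) {
            return null;
        }
        try {
            return LocalDate.parse(raw); // expects yyyy-MM-dd
        } catch (DateTimeParseException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        // Hypothetical lines; the real user.data is not shown in the article
        System.out.println(Arrays.toString(parseUserRow("1,Tom,M,1990-05-01,Beijing")));
        System.out.println(Arrays.toString(parseUserRow("2,Ann,F,not-a-date")));
    }
}
```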

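#Load data that is already on HDFS (without LOCAL, the file is moved, not copied, into the table directory)

load data inpath 'user.data_HDFS_PATH' OVERWRITE INTO TABLE user_dimension;

#Queries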

select count(*) from record_dimension where trancation_date = '2017-09-01';

+-----+--+

| c0 |

+-----+--+

| |

+-----+--+
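The age query that follows approximates age in three steps: take `datediff(CURRENT_DATE, birth)` in days, divide by 365, and truncate to an int. The sketch below reproduces that arithmetic with java.time. It mirrors the assumption that Hive's `/` returns a DOUBLE by dividing by 365.0. For comparison, it also computes a calendar-exact age.

Because 365 ignores leap days, the approximation can report one year too many in the days just before a birthday. The class and method names are hypothetical.

```java
import java.time.LocalDate;
import java.time.Period;
import java.time.temporal.ChronoUnit;

public class AgeSketch {

    // Mirrors cast(datediff(CURRENT_DATE, birth)/365 as int)
    public static int approxAge(LocalDate birth, LocalDate today) {
        long days = ChronoUnit.DAYS.between(birth, today); // datediff(today, birth)
        return (int) (days / 365.0); // "/" yields a double; the cast truncates
    }

    // Calendar-exact age, for comparison
    public static int exactAge(LocalDate birth, LocalDate today) {
        return Period.between(birth, today).getYears();
    }

    public static void main(String[] args) {
        LocalDate birth = LocalDate.of(2000, 6, 15);   // hypothetical birth date
        LocalDate today = LocalDate.of(2020, 6, 14);   // the day before the 20th birthday
        System.out.println(approxAge(birth, today));   // 20 (7304 days / 365)
        System.out.println(exactAge(birth, today));    // 19
    }
}
```

For grouping spending into age bands, the approximation is usually good enough. If exact ages matter, compare the month and day of birth against the current date instead of dividing by 365.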

#Spending by age

select cast(datediff(CURRENT_DATE, birth)/365 as int) as age, sum(price) as totalPrice

from record_dimension rd

JOIN user_dimension ud on rd.uid = ud.uid

group by cast(datediff(CURRENT_DATE, birth)/365 as int)

order by totalPrice DESC;

+------+-------------+--+

| age | totalprice |

+------+-------------+--+

| | |

| | |

| | |

| | |

+------+-------------+--+

#Spending by brand

select brand,sum(price) as totalPrice

from record_dimension rd

join brand_dimension bd on bd.bid = rd.bid

group by bd.brand

order by totalPrice desc;

+------------+-------------+--+

| brand | totalprice |

+------------+-------------+--+

| SAMSUNG | |

| OPPO | |

| WULIANGYE | |

| DELL | |

| NIKE | |

+------------+-------------+--+

#Number of transactions per brand on 2017-09-01, in descending order

select brand,count(*) as sumCount

from record_dimension rd

join brand_dimension bd on bd.bid=rd.bid

where rd.trancation_date='2017-09-01'

group by bd.brand

order by sumCount desc;

+------------+-----------+--+

| brand | sumcount |

+------------+-----------+--+

| SAMSUNG | |

| WULIANGYE | |

| OPPO | |

| NIKE | |

| DELL | |

+------------+-----------+--+

#Product categories bought, by gender (total price and number of transactions)

select ud.gender as gender,bd.category shangping,sum(price) totalPrice,count(*) FROM record_dimension rd

join user_dimension ud on rd.uid = ud.uid

join brand_dimension bd on rd.bid = bd.bid

group by ud.gender,bd.category;

+---------+------------+-------------+-----+--+

| gender | shangping | totalprice | c3 |

+---------+------------+-------------+-----+--+

| F | telephone | | |

| M | computer | | |

| M | food | | |

| M | sport | | |

| M | telephone | | |

+---------+------------+-------------+-----+--+
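The last query joins record_dimension to user_dimension on uid and to brand_dimension on bid. It then aggregates per (gender, category).

The sketch below expresses the same logic as an in-memory hash join:

- the two dimension tables become lookup maps;
- each sale probes both maps;
- matching sales are accumulated into sum(price) and count(*).

It only illustrates the semantics of the inner joins and the GROUP BY. It is not how Hive executes the query. The class, fields and sample values are hypothetical.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class JoinAggregateSketch {

    // One row of record_dimension, reduced to the columns the query uses
    public static final class Sale {
        final String uid;
        final String bid;
        final int price;

        public Sale(String uid, String bid, int price) {
            this.uid = uid;
            this.bid = bid;
            this.price = price;
        }
    }

    // Returns "gender|category" -> {sum(price), count(*)}
    public static Map<String, long[]> totalsByGenderAndCategory(
            Map<String, String> genderByUid, Map<String, String> categoryByBid, List<Sale> sales) {
        Map<String, long[]> totals = new TreeMap<>();
        for (Sale s : sales) {
            String gender = genderByUid.get(s.uid);       // join user_dimension on uid
            String category = categoryByBid.get(s.bid);   // join brand_dimension on bid
            if (gender == null || category == null) {
                continue; // inner join: a sale without a match on either side is dropped
            }
            long[] acc = totals.computeIfAbsent(gender + "|" + category, k -> new long[2]);
            acc[0] += s.price; // sum(price)
            acc[1]++;          // count(*)
        }
        return totals;
    }

    public static void main(String[] args) {
        // Hypothetical rows, not the article's data
        Map<String, String> genders = new HashMap<>();
        genders.put("u1", "M");
        genders.put("u2", "F");
        Map<String, String> categories = new HashMap<>();
        categories.put("b1", "telephone");
        categories.put("b2", "computer");
        List<Sale> sales = Arrays.asList(
                new Sale("u1", "b1", 100), new Sale("u1", "b1", 50),
                new Sale("u2", "b2", 30), new Sale("u3", "b1", 10));
        totalsByGenderAndCategory(genders, categories, sales)
                .forEach((k, v) -> System.out.println(k + " sum=" + v[0] + " count=" + v[1]));
    }
}
```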

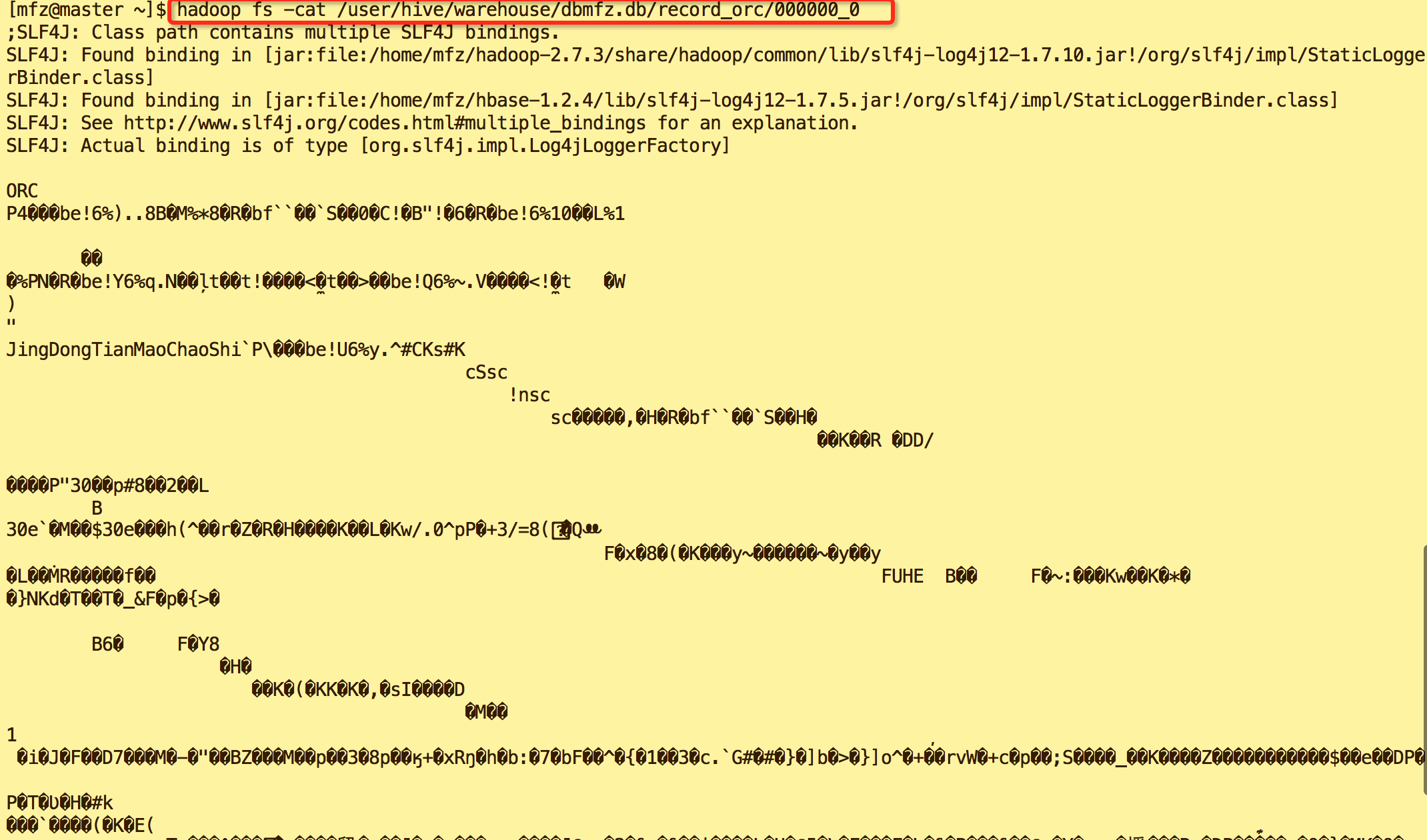

2.3. Commands using collection data types
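The employees table in this section stores its nested columns in a text file, using three delimiters:

- `\001` between fields;
- `\002` between collection items (array elements, map entries and struct members);
- `\003` between a map key and its value.

The sketch below parses one such line into Java collections to show how the delimiters nest. It is a simplified model that assumes well-formed input and exactly these delimiters; it is not Hive's own parser. The class name and the sample line are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class NestedDelimiterSketch {

    public static final String FIELD = "\u0001";   // fields terminated by '\001'
    public static final String ITEM = "\u0002";    // collection items terminated by '\002'
    public static final String MAP_KEY = "\u0003"; // map keys terminated by '\003'

    // Splits a level-2 value into items; an empty field gives an empty collection
    static List<String> items(String value) {
        return value.isEmpty() ? new ArrayList<String>() : Arrays.asList(value.split(ITEM, -1));
    }

    // Parses one employees.txt line: name, salary, subordinates, deductions, address
    public static Map<String, Object> parse(String line) {
        String[] f = line.split(FIELD, -1);
        Map<String, Object> row = new LinkedHashMap<>();
        row.put("name", f[0]);
        row.put("salary", f[1]);
        // array<string>: elements separated by the item delimiter
        row.put("subordinates", items(f[2]));
        // map<string,float>: entries separated by the item delimiter, key and value by the map-key delimiter
        Map<String, Float> deductions = new LinkedHashMap<>();
        for (String entry : items(f[3])) {
            String[] kv = entry.split(MAP_KEY, 2);
            deductions.put(kv[0], Float.parseFloat(kv[1]));
        }
        row.put("deductions", deductions);
        // struct<street,city,state,zip>: members separated by the item delimiter, in declaration order
        List<String> a = items(f[4]);
        Map<String, Object> address = new LinkedHashMap<>();
        address.put("street", a.get(0));
        address.put("city", a.get(1));
        address.put("state", a.get(2));
        address.put("zip", Integer.parseInt(a.get(3)));
        row.put("address", address);
        return row;
    }

    public static void main(String[] args) {
        // Hypothetical line in the employees.txt layout (the zip code is made up)
        String line = String.join(FIELD, "John Doe", "100000.0",
                "Mary Smith" + ITEM + "Todd Jones",
                "Federal Taxes" + MAP_KEY + "0.2" + ITEM + "State Taxes" + MAP_KEY + "0.05",
                "1 Michigan Ave." + ITEM + "Chicago" + ITEM + "IL" + ITEM + "60600");
        System.out.println(parse(line));
    }
}
```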

#Data file: employees.txt

create database practice2;

show databases;

use practice2;

create table if not EXISTS employees(

name string,

salary string,

subordinates array<String>,

deductions map<String,Float>,

address struct<street:string,city:string,state:string,zip:int>

)

row format delimited

fields terminated by '\001'

collection items terminated by '\002'

map keys terminated by '\003'

lines terminated by '\n'

stored as textfile;

describe employees;

+---------------+---------------------------------------------------------+----------+--+

| col_name | data_type | comment |

+---------------+---------------------------------------------------------+----------+--+

| name | string | |

| salary | string | |

| subordinates | array<string> | |

| deductions | map<string,float> | |

| address | struct<street:string,city:string,state:string,zip:int> | |

+---------------+---------------------------------------------------------+----------+--+

LOAD DATA LOCAL INPATH '/home/mfz/apache-hive-2.1.1-bin/hivedata/employees.txt' OVERWRITE INTO TABLE employees;

select * from employees;

+-------------------+-------------------+------------------------------+------------------------------------------------------------+------------------------------------------------------------------------------+--+

| employees.name | employees.salary | employees.subordinates | employees.deductions | employees.address |

+-------------------+-------------------+------------------------------+------------------------------------------------------------+------------------------------------------------------------------------------+--+

| John Doe | 100000.0 | ["Mary Smith","Todd Jones"] | {"Federal Taxes":0.2,"State Taxes":0.05,"Insurance":0.1} | {"street":"1 Michigan Ave.","city":"Chicago","state":"IL","zip":} |

| Mary Smith | 80000.0 | ["Bill King"] | {"Federal Taxes":0.2,"State Taxes":0.05,"Insurance":0.1} | {"street":"100 Ontario St.","city":"Chicago","state":"IL","zip":} |

| Todd Jones | 70000.0 | [] | {"Federal Taxes":0.15,"State Taxes":0.03,"Insurance":0.1} | {"street":"200 Chicago Ave.","city":"Oak Park","state":"IL","zip":} |

| Bill King | 60000.0 | [] | {"Federal Taxes":0.15,"State Taxes":0.03,"Insurance":0.1} | {"street":"300 Obscure Dr.","city":"Obscuria","state":"IL","zip":} |

| Boss Man | 200000.0 | ["John Doe","Fred Finance"] | {"Federal Taxes":0.3,"State Taxes":0.07,"Insurance":0.05} | {"street":"1 Pretentious Drive.","city":"Chicago","state":"IL","zip":} |

| Fred Finance | 150000.0 | ["Stacy Accountant"] | {"Federal Taxes":0.3,"State Taxes":0.07,"Insurance":0.05} | {"street":"2 Pretentious Drive.","city":"Chicago","state":"IL","zip":} |

| Stacy Accountant | 60000.0 | [] | {"Federal Taxes":0.15,"State Taxes":0.03,"Insurance":0.1} | {"street":"300 Main St.","city":"Naperville","state":"IL","zip":} |

+-------------------+-------------------+------------------------------+------------------------------------------------------------+------------------------------------------------------------------------------+--+

select * from employees where deductions['Federal Taxes']>0.2;

+-----------------+-------------------+------------------------------+------------------------------------------------------------+------------------------------------------------------------------------------+--+

| employees.name | employees.salary | employees.subordinates | employees.deductions | employees.address |

+-----------------+-------------------+------------------------------+------------------------------------------------------------+------------------------------------------------------------------------------+--+

| John Doe | 100000.0 | ["Mary Smith","Todd Jones"] | {"Federal Taxes":0.2,"State Taxes":0.05,"Insurance":0.1} | {"street":"1 Michigan Ave.","city":"Chicago","state":"IL","zip":} |

| Mary Smith | 80000.0 | ["Bill King"] | {"Federal Taxes":0.2,"State Taxes":0.05,"Insurance":0.1} | {"street":"100 Ontario St.","city":"Chicago","state":"IL","zip":} |

| Boss Man | 200000.0 | ["John Doe","Fred Finance"] | {"Federal Taxes":0.3,"State Taxes":0.07,"Insurance":0.05} | {"street":"1 Pretentious Drive.","city":"Chicago","state":"IL","zip":} |

| Fred Finance | 150000.0 | ["Stacy Accountant"] | {"Federal Taxes":0.3,"State Taxes":0.07,"Insurance":0.05} | {"street":"2 Pretentious Drive.","city":"Chicago","state":"IL","zip":} |

+-----------------+-------------------+------------------------------+------------------------------------------------------------+------------------------------------------------------------------------------+--+

#Find employees whose first subordinate is John Doe

select * from employees where subordinates[0] = 'John Doe';

+-----------------+-------------------+------------------------------+------------------------------------------------------------+------------------------------------------------------------------------------+--+

| employees.name | employees.salary | employees.subordinates | employees.deductions | employees.address |

+-----------------+-------------------+------------------------------+------------------------------------------------------------+------------------------------------------------------------------------------+--+

| Boss Man | 200000.0 | ["John Doe","Fred Finance"] | {"Federal Taxes":0.3,"State Taxes":0.07,"Insurance":0.05} | {"street":"1 Pretentious Drive.","city":"Chicago","state":"IL","zip":} |

+-----------------+-------------------+------------------------------+------------------------------------------------------------+------------------------------------------------------------------------------+--+

#Find managers, i.e. employees with more than 0 subordinates

select * from employees where size(subordinates) > 0;

+-----------------+-------------------+------------------------------+------------------------------------------------------------+------------------------------------------------------------------------------+--+

| employees.name | employees.salary | employees.subordinates | employees.deductions | employees.address |

+-----------------+-------------------+------------------------------+------------------------------------------------------------+------------------------------------------------------------------------------+--+

| John Doe | 100000.0 | ["Mary Smith","Todd Jones"] | {"Federal Taxes":0.2,"State Taxes":0.05,"Insurance":0.1} | {"street":"1 Michigan Ave.","city":"Chicago","state":"IL","zip":} |

| Mary Smith | 80000.0 | ["Bill King"] | {"Federal Taxes":0.2,"State Taxes":0.05,"Insurance":0.1} | {"street":"100 Ontario St.","city":"Chicago","state":"IL","zip":} |

| Boss Man | 200000.0 | ["John Doe","Fred Finance"] | {"Federal Taxes":0.3,"State Taxes":0.07,"Insurance":0.05} | {"street":"1 Pretentious Drive.","city":"Chicago","state":"IL","zip":} |

| Fred Finance | 150000.0 | ["Stacy Accountant"] | {"Federal Taxes":0.3,"State Taxes":0.07,"Insurance":0.05} | {"street":"2 Pretentious Drive.","city":"Chicago","state":"IL","zip":} |

+-----------------+-------------------+------------------------------+------------------------------------------------------------+------------------------------------------------------------------------------+--+

#Find employees whose address state is IL

select * from employees where address.state='IL';

+-------------------+-------------------+------------------------------+------------------------------------------------------------+------------------------------------------------------------------------------+--+

| employees.name | employees.salary | employees.subordinates | employees.deductions | employees.address |

+-------------------+-------------------+------------------------------+------------------------------------------------------------+------------------------------------------------------------------------------+--+

| John Doe | 100000.0 | ["Mary Smith","Todd Jones"] | {"Federal Taxes":0.2,"State Taxes":0.05,"Insurance":0.1} | {"street":"1 Michigan Ave.","city":"Chicago","state":"IL","zip":} |

| Mary Smith | 80000.0 | ["Bill King"] | {"Federal Taxes":0.2,"State Taxes":0.05,"Insurance":0.1} | {"street":"100 Ontario St.","city":"Chicago","state":"IL","zip":} |

| Todd Jones | 70000.0 | [] | {"Federal Taxes":0.15,"State Taxes":0.03,"Insurance":0.1} | {"street":"200 Chicago Ave.","city":"Oak Park","state":"IL","zip":} |

| Bill King | 60000.0 | [] | {"Federal Taxes":0.15,"State Taxes":0.03,"Insurance":0.1} | {"street":"300 Obscure Dr.","city":"Obscuria","state":"IL","zip":} |

| Boss Man | 200000.0 | ["John Doe","Fred Finance"] | {"Federal Taxes":0.3,"State Taxes":0.07,"Insurance":0.05} | {"street":"1 Pretentious Drive.","city":"Chicago","state":"IL","zip":} |

| Fred Finance | 150000.0 | ["Stacy Accountant"] | {"Federal Taxes":0.3,"State Taxes":0.07,"Insurance":0.05} | {"street":"2 Pretentious Drive.","city":"Chicago","state":"IL","zip":} |

| Stacy Accountant | 60000.0 | [] | {"Federal Taxes":0.15,"State Taxes":0.03,"Insurance":0.1} | {"street":"300 Main St.","city":"Naperville","state":"IL","zip":} |

+-------------------+-------------------+------------------------------+------------------------------------------------------------+------------------------------------------------------------------------------+--+

#Fuzzy match: city starting with Na

select * from employees where address.city like 'Na%';

+-------------------+-------------------+-------------------------+------------------------------------------------------------+-------------------------------------------------------------------------+--+

| employees.name | employees.salary | employees.subordinates | employees.deductions | employees.address |

+-------------------+-------------------+-------------------------+------------------------------------------------------------+-------------------------------------------------------------------------+--+

| Stacy Accountant | 60000.0 | [] | {"Federal Taxes":0.15,"State Taxes":0.03,"Insurance":0.1} | {"street":" Main St.","city":"Naperville","state":"IL","zip":60563} |

+-------------------+-------------------+-------------------------+------------------------------------------------------------+-------------------------------------------------------------------------+--+

#Regular-expression match

select * from employees where address.street rlike '^.*(Ontario|Chicago).*$';

+-----------------+-------------------+-------------------------+------------------------------------------------------------+---------------------------------------------------------------------------+--+

| employees.name | employees.salary | employees.subordinates | employees.deductions | employees.address |

+-----------------+-------------------+-------------------------+------------------------------------------------------------+---------------------------------------------------------------------------+--+

| Mary Smith | 80000.0 | ["Bill King"] | {"Federal Taxes":0.2,"State Taxes":0.05,"Insurance":0.1} | {"street":" Ontario St.","city":"Chicago","state":"IL","zip":60601} |

| Todd Jones | 70000.0 | [] | {"Federal Taxes":0.15,"State Taxes":0.03,"Insurance":0.1} | {"street":" Chicago Ave.","city":"Oak Park","state":"IL","zip":60700} |

+-----------------+-------------------+-------------------------+------------------------------------------------------------+---------------------------------------------------------------------------+--+
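Look at the `deductions['Federal Taxes']>0.2` query earlier in this section. John Doe and Mary Smith are returned even though their Federal Taxes value is exactly 0.2.

A likely explanation is a type mismatch:

- the map values are FLOAT, while the literal 0.2 is a DOUBLE;
- the stored value is therefore widened to double before the comparison;
- the float nearest to 0.2 is slightly larger than the double 0.2.

The Java sketch below shows the same widening. It assumes Hive compares the two types the way Java does. That assumption matches the output above but is not taken from Hive's source. The class name is hypothetical.

```java
public class FloatComparisonSketch {

    // deductions['Federal Taxes'] is a FLOAT; the literal 0.2 is a DOUBLE,
    // so the float is widened to double before the comparison (as in Java)
    public static boolean above(float storedValue, double literal) {
        return storedValue > literal;
    }

    public static void main(String[] args) {
        System.out.println((double) 0.2f);      // 0.20000000298023224
        System.out.println(above(0.2f, 0.2));   // true: why John Doe and Mary Smith match
        System.out.println(above(0.15f, 0.2));  // false
        System.out.println(above(0.3f, 0.2));   // true
    }
}
```

Comparing against a value of the same precision removes the surprise. In HQL that would be something like `deductions['Federal Taxes'] > cast(0.2 as float)`, though this is worth verifying on your Hive version. Alternatively, declare such columns as DOUBLE or DECIMAL.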

2.4 Commands using partitions (Partition)

#Create the stocks table

CREATE TABLE if not EXISTS stocks(

ymd date,

price_open FLOAT ,

price_high FLOAT ,

price_low FLOAT ,

price_close float,

volume int,

price_adj_close FLOAT

)partitioned by (exchanger string,symbol string)

row format delimited fields terminated by ',';

#Load the data

LOAD DATA LOCAL INPATH '/home/mfz/apache-hive-2.1.1-bin/hivedata/stocks.csv' OVERWRITE INTO TABLE stocks partition(exchanger="NASDAQ",symbol="AAPL");

#List the partitions of stocks

show partitions stocks;

+-------------------------------+--+

| partition |

+-------------------------------+--+

| exchanger=NASDAQ/symbol=AAPL |

+-------------------------------+--+

#Create more partitions and load data into each of them (the same stocks.csv is loaded every time)

LOAD DATA LOCAL INPATH '/home/mfz/apache-hive-2.1.1-bin/hivedata/stocks.csv' OVERWRITE INTO TABLE stocks partition(exchanger="NASDAQ",symbol="INTC");

LOAD DATA LOCAL INPATH '/home/mfz/apache-hive-2.1.1-bin/hivedata/stocks.csv' OVERWRITE INTO TABLE stocks partition(exchanger="NYSE",symbol="GE");

LOAD DATA LOCAL INPATH '/home/mfz/apache-hive-2.1.1-bin/hivedata/stocks.csv' OVERWRITE INTO TABLE stocks partition(exchanger="NYSE",symbol="IBM");

#Fetch the first rows of the partition exchanger='NASDAQ' and symbol='AAPL'

select * from stocks where exchanger='NASDAQ' and symbol='AAPL' limit 10;

+-------------+--------------------+--------------------+-------------------+---------------------+----------------+-------------------------+-------------------+----------------+--+

| stocks.ymd | stocks.price_open | stocks.price_high | stocks.price_low | stocks.price_close | stocks.volume | stocks.price_adj_close | stocks.exchanger | stocks.symbol |

+-------------+--------------------+--------------------+-------------------+---------------------+----------------+-------------------------+-------------------+----------------+--+

| -- | 195.69 | 197.88 | 194.0 | 194.12 | | 194.12 | NASDAQ | AAPL |

| -- | 192.63 | 196.0 | 190.85 | 195.46 | | 195.46 | NASDAQ | AAPL |

| -- | 196.73 | 198.37 | 191.57 | 192.05 | | 192.05 | NASDAQ | AAPL |

| -- | 195.17 | 200.2 | 194.42 | 199.23 | | 199.23 | NASDAQ | AAPL |

| -- | 195.91 | 196.32 | 193.38 | 195.86 | | 195.86 | NASDAQ | AAPL |

| -- | 192.37 | 196.0 | 191.3 | 194.73 | | 194.73 | NASDAQ | AAPL |

| -- | 201.08 | 202.2 | 190.25 | 192.06 | | 192.06 | NASDAQ | AAPL |

| -- | 204.93 | 205.5 | 198.7 | 199.29 | | 199.29 | NASDAQ | AAPL |

| -- | 206.85 | 210.58 | 199.53 | 207.88 | | 207.88 | NASDAQ | AAPL |

| -- | 205.95 | 213.71 | 202.58 | 205.94 | | 205.94 | NASDAQ | AAPL |

+-------------+--------------------+--------------------+-------------------+---------------------+----------------+-------------------------+-------------------+----------------+--+

#Count the rows in each partition

select exchanger,symbol,count(*) from stocks group by exchanger,symbol;

+------------+---------+-------+--+

| exchanger | symbol | c2 |

+------------+---------+-------+--+

| NASDAQ | AAPL | |

| NASDAQ | INTC | |

| NYSE | GE | |

| NYSE | IBM | |

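+------------+---------+-------+--+

#Highest price_high in each partition (identical for all four, because every partition was loaded from the same stocks.csv)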

select exchanger,symbol,max(price_high) from stocks group by exchanger,symbol;

+------------+---------+---------+--+

| exchanger | symbol | c2 |

+------------+---------+---------+--+

| NASDAQ | AAPL | 215.59 |

| NASDAQ | INTC | 215.59 |

| NYSE | GE | 215.59 |

| NYSE | IBM | 215.59 |

+------------+---------+---------+--+
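Each partition of stocks is a subdirectory of the table directory. Its name is built from the partition columns in declaration order, which is what `show partitions` printed as exchanger=NASDAQ/symbol=AAPL. A query that filters on partition columns only needs to read the matching directories; this is called partition pruning.

The sketch below models both ideas for simple equality predicates. It ignores the escaping Hive applies to special characters in partition values. The class name and the table location are assumptions.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class PartitionPruningSketch {

    // One partition spec; LinkedHashMap keeps the partition-column order (exchanger, symbol)
    public static Map<String, String> spec(String exchanger, String symbol) {
        Map<String, String> p = new LinkedHashMap<>();
        p.put("exchanger", exchanger);
        p.put("symbol", symbol);
        return p;
    }

    // Directory of a partition: <table location>/exchanger=NASDAQ/symbol=AAPL (no escaping)
    public static String partitionPath(String tableLocation, Map<String, String> spec) {
        StringBuilder sb = new StringBuilder(tableLocation);
        for (Map.Entry<String, String> e : spec.entrySet()) {
            sb.append('/').append(e.getKey()).append('=').append(e.getValue());
        }
        return sb.toString();
    }

    // Keeps the partitions that satisfy every equality predicate on partition columns;
    // only their directories would have to be scanned
    public static List<Map<String, String>> prune(List<Map<String, String>> partitions,
                                                  Map<String, String> predicate) {
        List<Map<String, String>> kept = new ArrayList<>();
        for (Map<String, String> p : partitions) {
            boolean matches = true;
            for (Map.Entry<String, String> e : predicate.entrySet()) {
                if (!e.getValue().equals(p.get(e.getKey()))) {
                    matches = false;
                    break;
                }
            }
            if (matches) {
                kept.add(p);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<Map<String, String>> all = new ArrayList<>();
        all.add(spec("NASDAQ", "AAPL"));
        all.add(spec("NASDAQ", "INTC"));
        all.add(spec("NYSE", "GE"));
        all.add(spec("NYSE", "IBM"));
        // Assumed table location; check yours with: describe formatted stocks
        String location = "/user/hive/warehouse/practice2.db/stocks";
        Map<String, String> where = new LinkedHashMap<>();
        where.put("exchanger", "NASDAQ");
        where.put("symbol", "AAPL");
        for (Map<String, String> p : prune(all, where)) {
            System.out.println(partitionPath(location, p));
        }
    }
}
```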

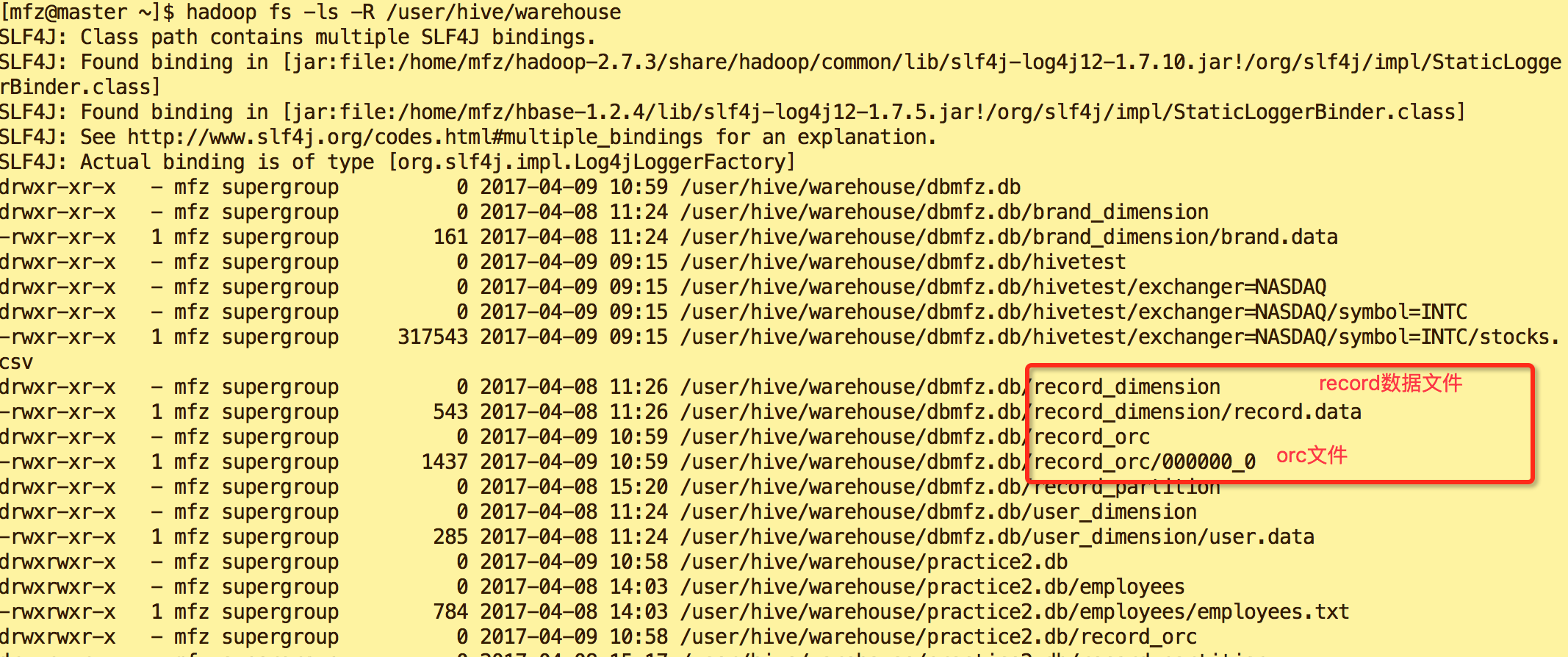

2.5 Working with Hive ORC files:

Higher compression ratio, better performance: optimizing Hive with the ORC file format

create table if not EXISTS record_orc(

rid string,

uid string,

bid string,

price int,

source_province string,

target_province string,

site string,

express_number string,

express_company string,

trancation_date date

)stored as orc;

show create table record_orc;

+---------------------------------------------------------------------+--+

| createtab_stmt |

+---------------------------------------------------------------------+--+

| CREATE TABLE `record_orc`( |

| `rid` string, |

| `uid` string, |

| `bid` string, |

| `price` int, |

| `source_province` string, |

| `target_province` string, |

| `site` string, |

| `express_number` string, |

| `express_company` string, |

| `trancation_date` date) |

| ROW FORMAT SERDE |

| 'org.apache.hadoop.hive.ql.io.orc.OrcSerde' |

| STORED AS INPUTFORMAT |

| 'org.apache.hadoop.hive.ql.io.orc.OrcInputFormat' |

| OUTPUTFORMAT |

| 'org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat' |

| LOCATION |

| 'hdfs://master:9000/user/hive/warehouse/practice2.db/record_orc' |

| TBLPROPERTIES ( |

| 'COLUMN_STATS_ACCURATE'='{\"BASIC_STATS\":\"true\"}', |

| 'numFiles'='', |

| 'numRows'='', |

| 'rawDataSize'='', |

| 'totalSize'='', |

| 'transient_lastDdlTime'='') |

+---------------------------------------------------------------------+--+

#Load the data

insert into table record_orc select * from record_dimension;

select * from record_orc;

+-----------------+-----------------+-----------------+-------------------+-----------------------------+-----------------------------+------------------+----------------------------+-----------------------------+-----------------------------+--+

| record_orc.rid | record_orc.uid | record_orc.bid | record_orc.price | record_orc.source_province | record_orc.target_province | record_orc.site | record_orc.express_number | record_orc.express_company | record_orc.trancation_date |

+-----------------+-----------------+-----------------+-------------------+-----------------------------+-----------------------------+------------------+----------------------------+-----------------------------+-----------------------------+--+

| | | | | HeiLongJiang | HuNan | TianMao | | ShenTong | -- |

| | | | | GuangDong | HuNan | JingDong | | ZhongTong | -- |

| | | | | JiangSu | Huan | TianMaoChaoShi | | Shunfeng | -- |

| | | | | TianJing | NeiMeiGu | JingDong | | YunDa | -- |

| | | | | ShangHai | Ning | TianMao | | YunDa | -- |

| | | | | HuBei | Aomen | JuHU | | ZhongTong | -- |

+-----------------+-----------------+-----------------+-------------------+-----------------------------+-----------------------------+------------------+----------------------------+-----------------------------+-----------------------------+--+

select * from record_dimension;

+-----------------------+-----------------------+-----------------------+-------------------------+-----------------------------------+-----------------------------------+------------------------+----------------------------------+-----------------------------------+-----------------------------------+--+

| record_dimension.rid | record_dimension.uid | record_dimension.bid | record_dimension.price | record_dimension.source_province | record_dimension.target_province | record_dimension.site | record_dimension.express_number | record_dimension.express_company | record_dimension.trancation_date |

+-----------------------+-----------------------+-----------------------+-------------------------+-----------------------------------+-----------------------------------+------------------------+----------------------------------+-----------------------------------+-----------------------------------+--+

| | | | | HeiLongJiang | HuNan | TianMao | | ShenTong | -- |

| | | | | GuangDong | HuNan | JingDong | | ZhongTong | -- |

| | | | | JiangSu | Huan | TianMaoChaoShi | | Shunfeng | -- |

| | | | | TianJing | NeiMeiGu | JingDong | | YunDa | -- |

| | | | | ShangHai | Ning | TianMao | | YunDa | -- |

| | | | | HuBei | Aomen | JuHU | | ZhongTong | -- |

+-----------------------+-----------------------+-----------------------+-------------------------+-----------------------------------+-----------------------------------+------------------------+----------------------------------+-----------------------------------+-----------------------------------+--+

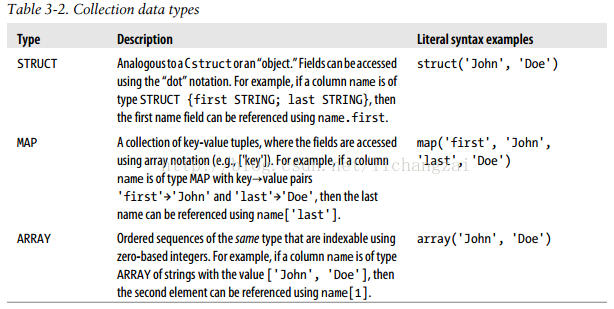

The query results of the two tables show little difference. Next, let's look at the files stored on HDFS:

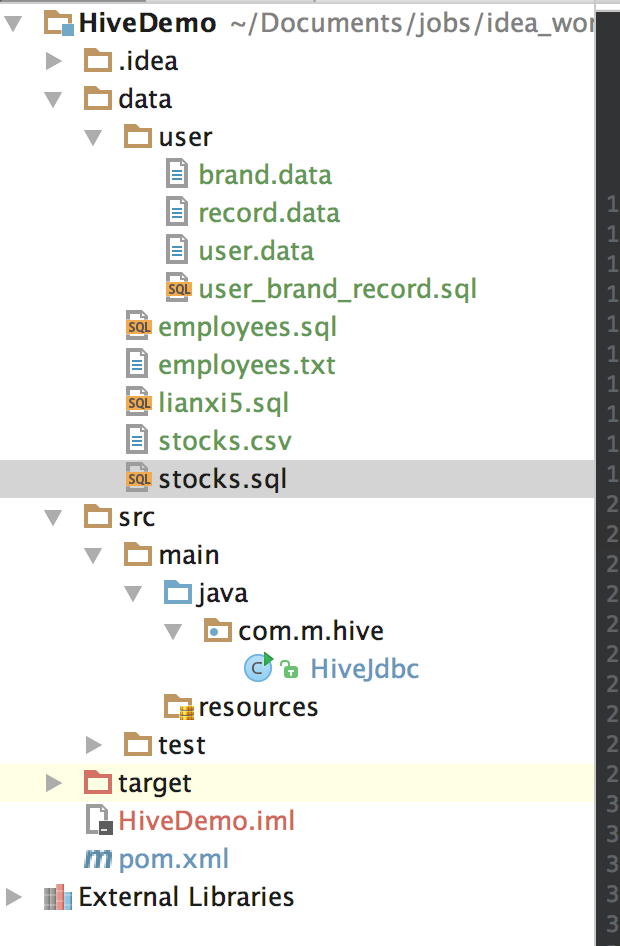

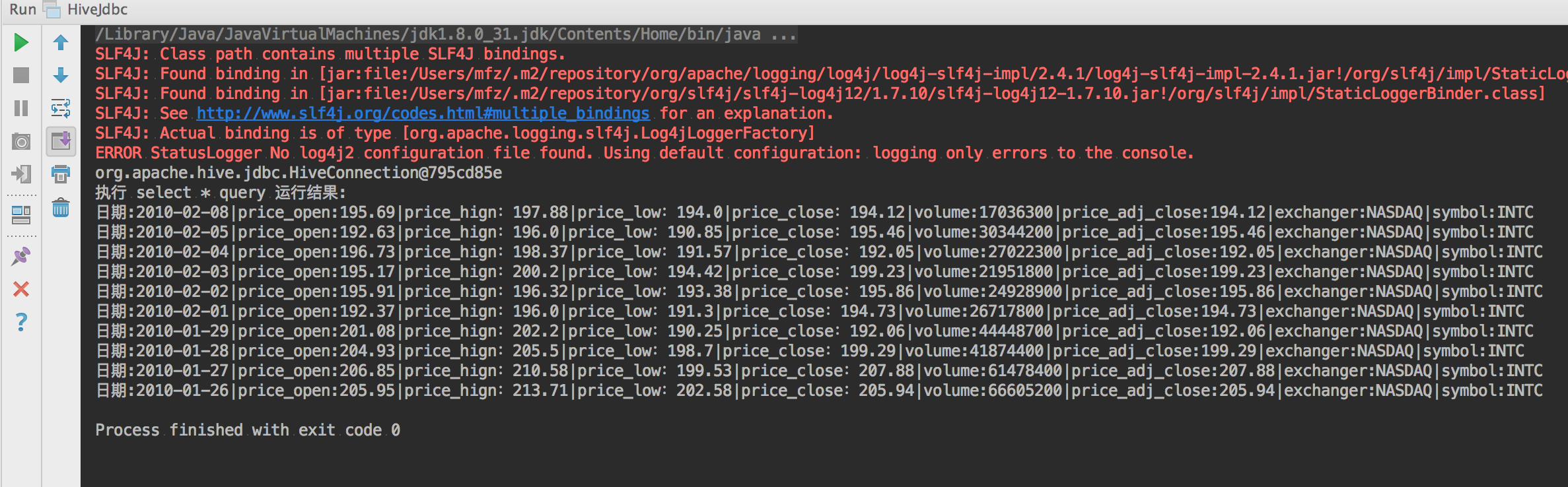

3. A basic example of connecting to HiveServer2 via JDBC

package com.m.hive;

import java.sql.*;

/**

* @author mengfanzhu

* @Package com.m.hive

* @Description:

* @date 17/4/3 11:57

*/

public class HiveJdbc {

private static String driverName = "org.apache.hive.jdbc.HiveDriver"; // JDBC driver class

private static String url = "jdbc:hive2://10.211.55.5:10000/dbmfz"; // HiveServer2 address + database name

private static String user = "mfz"; // user name

private static String password = ""; // password

public static void main(String[] args) {

Connection conn = null;

Statement stmt = null;

ResultSet res = null;

try {

conn = getConn();

System.out.println(conn);

stmt = conn.createStatement();

stmt.execute("drop table if exists hivetest"); // "if exists" avoids an error on the first run

stmt.execute("CREATE TABLE if not EXISTS hivetest(" +

"ymd date," +

"price_open FLOAT ," +

"price_high FLOAT ," +

"price_low FLOAT ," +

"price_close float," +

"volume int," +

"price_adj_close FLOAT" +

")partitioned by (exchanger string,symbol string)" +

"row format delimited fields terminated by ','");

stmt.execute("LOAD DATA LOCAL INPATH '/home/mfz/apache-hive-2.1.1-bin/hivedata/stocks.csv' " +

"OVERWRITE INTO TABLE hivetest partition(exchanger=\"NASDAQ\",symbol=\"INTC\")");

res = stmt.executeQuery("select * from hivetest limit 10");

System.out.println("Result of the select * query:");

while (res.next()) {

System.out.println(

"date:"+res.getString(1)+

"|price_open:"+res.getString(2)+

"|price_high:"+res.getString(3)+

"|price_low:"+res.getString(4)+

"|price_close:"+res.getString(5)+

"|volume:"+res.getString(6)+

"|price_adj_close:"+res.getString(7)+

"|exchanger:"+res.getString(8)+

"|symbol:"+res.getString(9));

}

} catch (ClassNotFoundException e) {

e.printStackTrace();

System.exit(1);

} catch (SQLException e) {

e.printStackTrace();

System.exit(1);

}finally {

try{

if(null!=res){

res.close();

}

if(null!=stmt){

stmt.close();

}

if(null!=conn){

conn.close();

}

}catch (Exception e){

e.printStackTrace();

}

}

}

private static Connection getConn() throws ClassNotFoundException,

SQLException {

Class.forName(driverName);

Connection conn = DriverManager.getConnection(url, user, password);

return conn;

}

}

Run result:

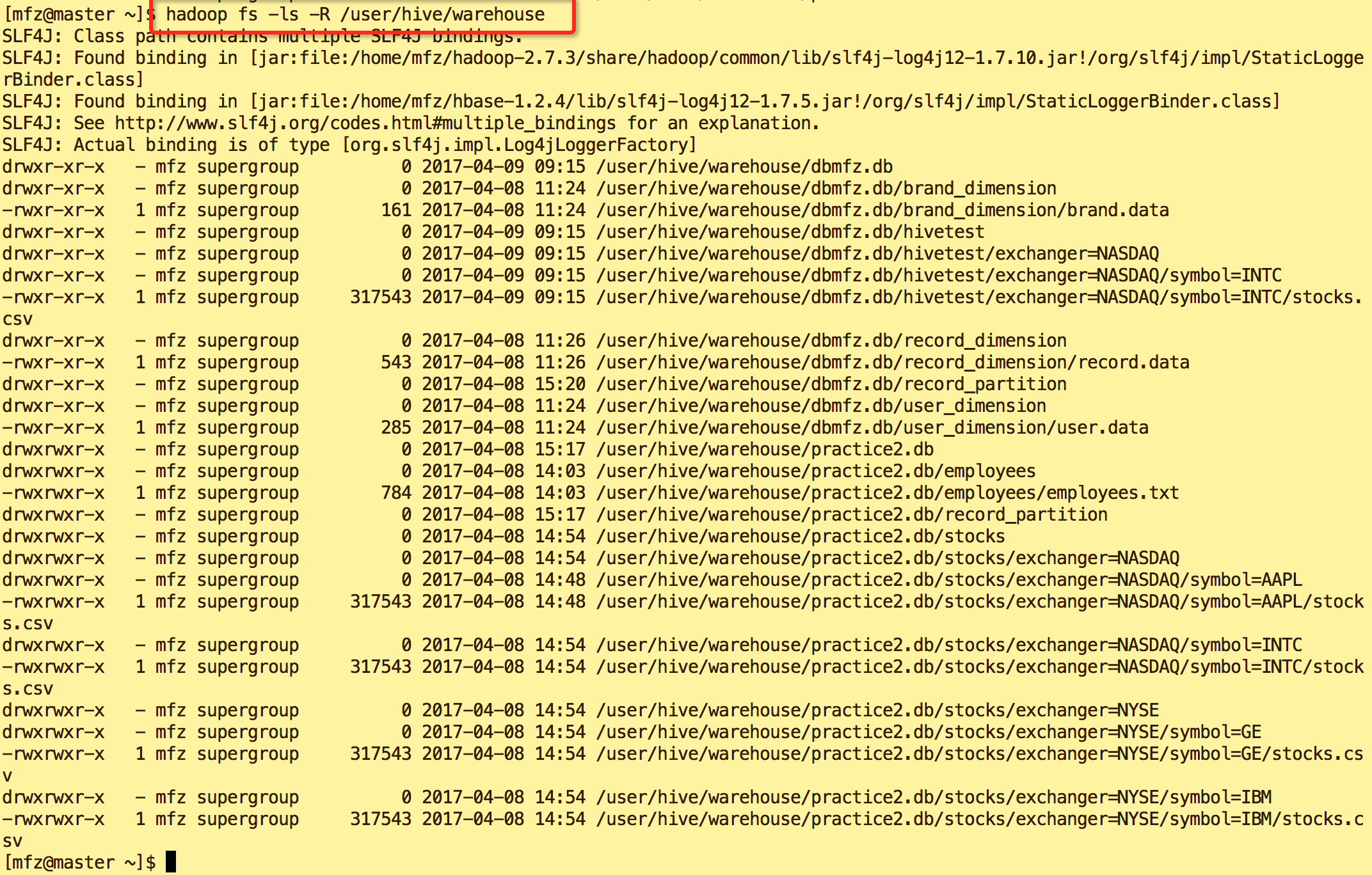
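The example above closes its ResultSet, Statement and Connection by hand in a finally block. The sketch below shows the same pattern with try-with-resources (Java 7+). That construct closes the resources automatically, in reverse order of opening. The sketch also reads every column by index, starting at 1.

It is an illustrative variant, not the author's code:

- it assumes the hive-jdbc driver is on the classpath at run time;
- it reuses the host, database and user from the example;
- its class and method names are hypothetical;
- the table name is concatenated into the SQL, so pass only trusted names.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

public class HiveJdbcSketch {

    // HiveServer2 URL: jdbc:hive2://<host>:<port>/<database>
    public static String hive2Url(String host, int port, String database) {
        return "jdbc:hive2://" + host + ":" + port + "/" + database;
    }

    // Prints the first `limit` rows of a trusted table name, reading every column by index
    public static void printRows(String url, String user, String password, String table, int limit)
            throws SQLException {
        // Resources declared here are closed automatically, last-opened first
        try (Connection conn = DriverManager.getConnection(url, user, password);
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery("select * from " + table + " limit " + limit)) {
            int columnCount = rs.getMetaData().getColumnCount();
            while (rs.next()) {
                StringBuilder line = new StringBuilder();
                for (int i = 1; i <= columnCount; i++) { // JDBC column indices start at 1
                    if (i > 1) {
                        line.append('|');
                    }
                    line.append(rs.getString(i));
                }
                System.out.println(line);
            }
        }
    }

    public static void main(String[] args) throws Exception {
        // Needs the hive-jdbc jar (and its dependencies) on the classpath
        Class.forName("org.apache.hive.jdbc.HiveDriver");
        printRows(hive2Url("10.211.55.5", 10000, "dbmfz"), "mfz", "", "hivetest", 10);
    }
}
```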

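After these operations, the files we worked with are visible on HDFS. They live under the directory set by this property, which we configured earlier in hive-site.xml: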

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

<description>location of default database for the warehouse</description>

</property>
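With that setting, a managed table created without an explicit LOCATION is stored under the warehouse directory. Tables in the default database sit directly under it. Tables in other databases go into a `<database>.db` subdirectory. That is why the SHOW CREATE TABLE output for record_orc earlier shows .../user/hive/warehouse/practice2.db/record_orc.

The helper below only spells out that naming convention. It leaves out the file-system prefix (hdfs://master:9000 in this setup), and its names are hypothetical.

```java
public class WarehousePathSketch {

    // Default location of a managed table created without LOCATION
    public static String tableLocation(String warehouseDir, String database, String table) {
        String base = warehouseDir.endsWith("/")
                ? warehouseDir.substring(0, warehouseDir.length() - 1)
                : warehouseDir;
        String db = database.toLowerCase();
        String tbl = table.toLowerCase(); // Hive stores database and table names in lower case
        if (db.equals("default")) {
            return base + "/" + tbl;            // default database: directly under the warehouse
        }
        return base + "/" + db + ".db/" + tbl;  // other databases: <db>.db subdirectory
    }

    public static void main(String[] args) {
        System.out.println(tableLocation("/user/hive/warehouse", "practice2", "record_orc"));
        System.out.println(tableLocation("/user/hive/warehouse", "default", "t_word"));
    }
}
```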

Done! The example project is on GitHub: https://github.com/fzmeng/HiveExample
