Flume startup error [ERROR - org.apache.flume.sink.hdfs. Hit max consecutive under-replication rotations (30); will not continue rolling files under this path due to under-replication: solution (illustrated walkthrough)

Previous post

Tips and lessons learned when using custom or built-in Flume interceptors (Interceptors) (illustrated walkthrough)

Problem details

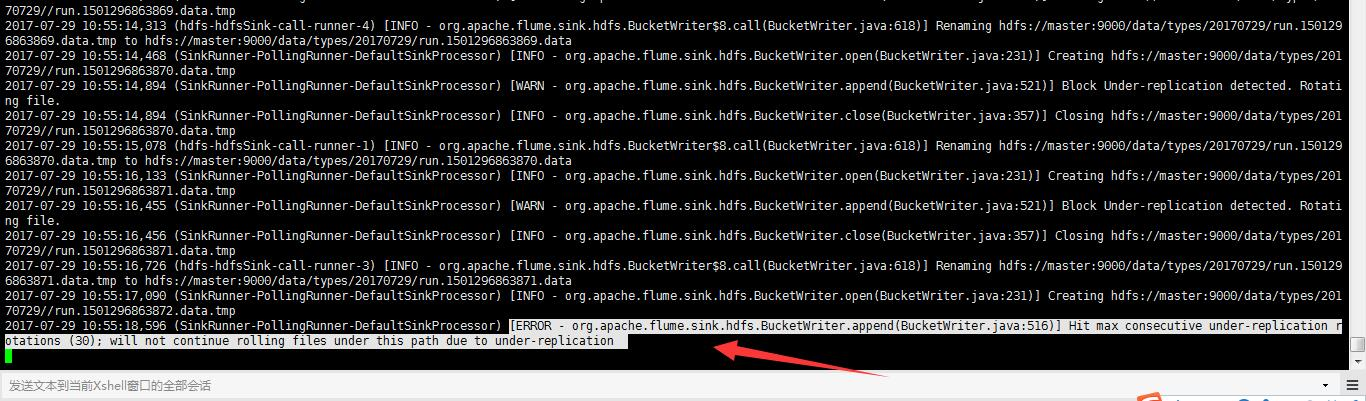

-- ::, (SinkRunner-PollingRunner-DefaultSinkProcessor) [WARN - org.apache.flume.sink.hdfs.BucketWriter.append(BucketWriter.java:)] Block Under-replication detected. Rotating file.

-- ::, (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.BucketWriter.close(BucketWriter.java:)] Closing hdfs://master:9000/data/types/20170729//run.1501298449107.data.tmp

-- ::, (hdfs-hdfsSink-call-runner-) [INFO - org.apache.flume.sink.hdfs.BucketWriter$.call(BucketWriter.java:)] Renaming hdfs://master:9000/data/types/20170729/run.1501298449107.data.tmp to hdfs://master:9000/data/types/20170729/run.1501298449107.data

-- ::, (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.BucketWriter.open(BucketWriter.java:)] Creating hdfs://master:9000/data/types/20170729//run.1501298449108.data.tmp

-- ::, (SinkRunner-PollingRunner-DefaultSinkProcessor) [WARN - org.apache.flume.sink.hdfs.BucketWriter.append(BucketWriter.java:)] Block Under-replication detected. Rotating file.

-- ::, (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.BucketWriter.close(BucketWriter.java:)] Closing hdfs://master:9000/data/types/20170729//run.1501298449108.data.tmp

-- ::, (hdfs-hdfsSink-call-runner-) [INFO - org.apache.flume.sink.hdfs.BucketWriter$.call(BucketWriter.java:)] Renaming hdfs://master:9000/data/types/20170729/run.1501298449108.data.tmp to hdfs://master:9000/data/types/20170729/run.1501298449108.data

-- ::, (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.BucketWriter.open(BucketWriter.java:)] Creating hdfs://master:9000/data/types/20170729//run.1501298449109.data.tmp

2017-07-29 11:22:21,869 (SinkRunner-PollingRunner-DefaultSinkProcessor) [ERROR - org.apache.flume.sink.hdfs.BucketWriter.append(BucketWriter.java:516)] Hit max consecutive under-replication rotations (30); will not continue rolling files under this path due to under-replication
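The log shows a repeating cycle. Each time the HDFS sink's BucketWriter finds the current block under-replicated, it closes the `.tmp` file, renames it, and opens a new one, so the data ends up spread over many tiny files. After 30 such rotations in a row, it logs the ERROR and stops rotating for that path. The Java class below is a simplified model of that counter, written only for illustration. It is not Flume's source, and the class and method names are hypothetical. The limit of 30 is taken from the log message.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified model (not Flume's source) of the consecutive under-replication guard. */
public class UnderReplicationRotationModel {
    private final int maxConsecUnderReplRotations; // 30 in the log above
    private int consecutiveUnderReplRotateCount = 0;
    public final List<String> log = new ArrayList<>();

    public UnderReplicationRotationModel(int maxConsecUnderReplRotations) {
        this.maxConsecUnderReplRotations = maxConsecUnderReplRotations;
    }

    /** Called once per append; returns true if the model rotates the file. */
    public boolean onAppend(boolean underReplicated) {
        if (!underReplicated) {
            consecutiveUnderReplRotateCount = 0; // a healthy block resets the streak
            return false; // normal size/count/interval rolls are not modelled here
        }
        boolean rotate;
        if (maxConsecUnderReplRotations > 0
                && consecutiveUnderReplRotateCount >= maxConsecUnderReplRotations) {
            rotate = false; // give up rotating for this path
            if (consecutiveUnderReplRotateCount == maxConsecUnderReplRotations) {
                log.add("ERROR Hit max consecutive under-replication rotations ("
                        + maxConsecUnderReplRotations + ")"); // logged only once
            }
        } else {
            rotate = true;
            log.add("WARN Block Under-replication detected. Rotating file.");
        }
        consecutiveUnderReplRotateCount++;
        return rotate;
    }

    public static void main(String[] args) {
        UnderReplicationRotationModel writer = new UnderReplicationRotationModel(30);
        int rotations = 0;
        for (int i = 0; i < 40; i++) {
            if (writer.onAppend(true)) {
                rotations++;
            }
        }
        System.out.println("rotations = " + rotations); // 30
        System.out.println("error lines = "
                + writer.log.stream().filter(s -> s.startsWith("ERROR")).count()); // 1
    }
}
```

In this model, once the block becomes healthy again, the streak resets and under-replication rotations can resume. Forty under-replicated appends in a row produce exactly 30 rotations and a single ERROR line.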

Solution

Synchronize the system clocks of all nodes with ntpdate, running it as root on each node:

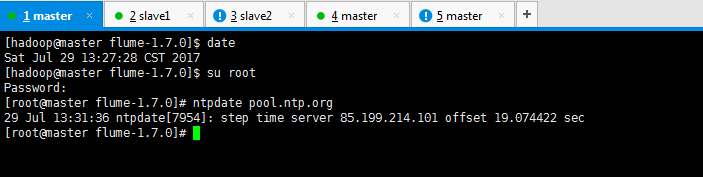

[hadoop@master flume-1.7.]$ su root

Password:

[root@master flume-1.7.]# ntpdate pool.ntp.org

Jul :: ntpdate[]: step time server 85.199.214.101 offset 19.074422 sec

[root@master flume-1.7.]#

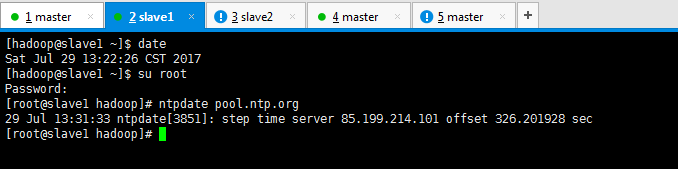

[hadoop@slave1 ~]$ su root

Password:

[root@slave1 hadoop]# ntpdate pool.ntp.org

Jul :: ntpdate[]: step time server 85.199.214.101 offset 326.201928 sec

[root@slave1 hadoop]#

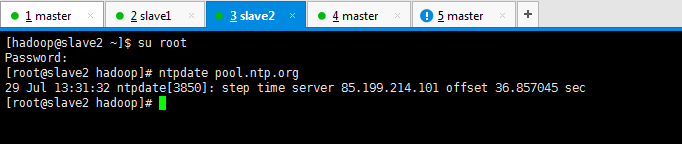

[hadoop@slave2 ~]$ su root

Password:

[root@slave2 hadoop]# ntpdate pool.ntp.org

Jul :: ntpdate[]: step time server 85.199.214.101 offset 36.857045 sec

[root@slave2 hadoop]#
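All three nodes stepped against the same server (85.199.214.101). Assuming the three runs happened close together in time, the difference between their offsets approximates how far the nodes' clocks disagreed with each other before the fix. Below is a small Java calculation over the offsets copied from the transcripts above. The class and method names are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Estimates mutual clock disagreement from per-node ntpdate offsets. */
public class ClockSkewFromNtpdate {
    /** Largest difference between any two offsets (seconds); 0 for fewer than two nodes. */
    public static double maxPairwiseSkew(Map<String, Double> offsetsSec) {
        if (offsetsSec.size() < 2) {
            return 0.0;
        }
        double min = Double.POSITIVE_INFINITY;
        double max = Double.NEGATIVE_INFINITY;
        for (double offset : offsetsSec.values()) {
            min = Math.min(min, offset);
            max = Math.max(max, offset);
        }
        return max - min;
    }

    public static void main(String[] args) {
        // Offsets reported by "ntpdate pool.ntp.org" on each node (same server for all three)
        Map<String, Double> offsets = new LinkedHashMap<>();
        offsets.put("master", 19.074422);
        offsets.put("slave1", 326.201928);
        offsets.put("slave2", 36.857045);
        System.out.printf("max skew before sync: %.6f s%n", maxPairwiseSkew(offsets));
    }
}
```

For these offsets the largest disagreement is between slave1 and master: 326.201928 - 19.074422 = 307.127506 seconds, or roughly five minutes.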

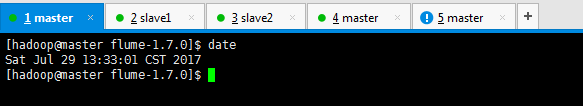

[hadoop@master flume-1.7.]$ date

Sat Jul :: CST

[hadoop@master flume-1.7.]$

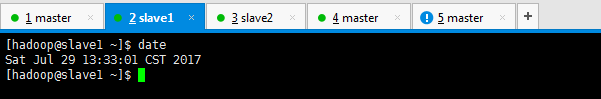

[hadoop@slave1 ~]$ date

Sat Jul :: CST

[hadoop@slave1 ~]$

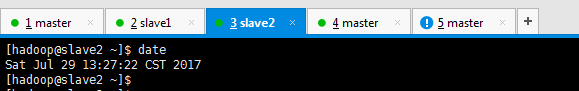

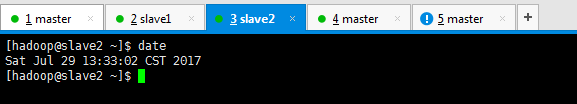

[hadoop@slave2 ~]$ date

Sat Jul :: CST

[hadoop@slave2 ~]$

Or, check the agent configuration. The full configuration used here:

#source的名字

agent1.sources = fileSource

# channels的名字,建议按照type来命名

agent1.channels = memoryChannel

# sink的名字,建议按照目标来命名

agent1.sinks = hdfsSink # 指定source使用的channel名字

agent1.sources.fileSource.channels = memoryChannel

# 指定sink需要使用的channel的名字,注意这里是channel

agent1.sinks.hdfsSink.channel = memoryChannel agent1.sources.fileSource.type = exec

agent1.sources.fileSource.command = tail -F /usr/local/log/server.log #------- fileChannel-1相关配置-------------------------

# channel类型 agent1.channels.memoryChannel.type = memory

agent1.channels.memoryChannel.capacity =

agent1.channels.memoryChannel.transactionCapacity =

agent1.channels.memoryChannel.byteCapacityBufferPercentage =

agent1.channels.memoryChannel.byteCapacity =

agent1.channels.memoryChannel.keep-alive =

agent1.channels.memoryChannel.capacity = #---------拦截器相关配置------------------

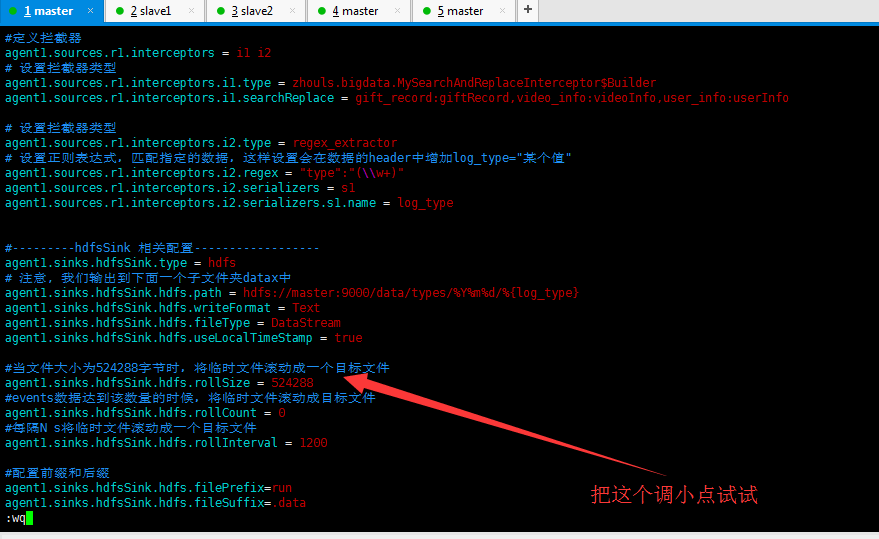

#定义拦截器

agent1.sources.r1.interceptors = i1 i2

# 设置拦截器类型

agent1.sources.r1.interceptors.i1.type = zhouls.bigdata.MySearchAndReplaceInterceptor$Builder

agent1.sources.r1.interceptors.i1.searchReplace = gift_record:giftRecord,video_info:videoInfo,user_info:userInfo # 设置拦截器类型

agent1.sources.r1.interceptors.i2.type = regex_extractor

# 设置正则表达式,匹配指定的数据,这样设置会在数据的header中增加log_type="某个值"

agent1.sources.r1.interceptors.i2.regex = "type":"(\\w+)"

agent1.sources.r1.interceptors.i2.serializers = s1

agent1.sources.r1.interceptors.i2.serializers.s1.name = log_type #---------hdfsSink 相关配置------------------

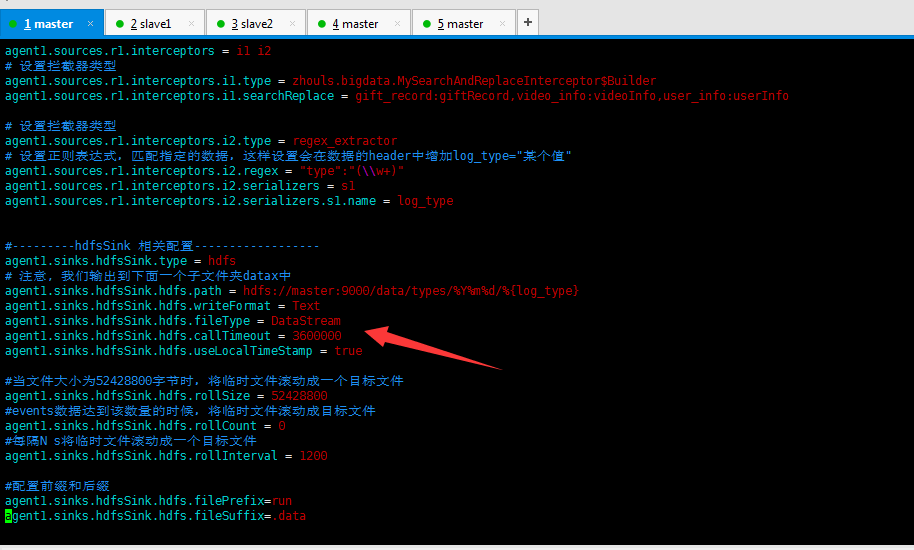

agent1.sinks.hdfsSink.type = hdfs

# 注意, 我们输出到下面一个子文件夹datax中

agent1.sinks.hdfsSink.hdfs.path = hdfs://master:9000/data/types/%Y%m%d/%{log_type}

agent1.sinks.hdfsSink.hdfs.writeFormat = Text

agent1.sinks.hdfsSink.hdfs.fileType = DataStream

agent1.sinks.hdfsSink.hdfs.callTimeout =

agent1.sinks.hdfsSink.hdfs.useLocalTimeStamp = true #当文件大小为52428800字节时,将临时文件滚动成一个目标文件

agent1.sinks.hdfsSink.hdfs.rollSize =

#events数据达到该数量的时候,将临时文件滚动成目标文件

agent1.sinks.hdfsSink.hdfs.rollCount =

#每隔N s将临时文件滚动成一个目标文件

agent1.sinks.hdfsSink.hdfs.rollInterval = #配置前缀和后缀

agent1.sinks.hdfsSink.hdfs.filePrefix=run

agent1.sinks.hdfsSink.hdfs.fileSuffix=.data
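The two interceptors feed the `%{log_type}` part of `hdfs.path`, and this can be sketched in Java. The sketch rests on some assumptions. `MySearchAndReplaceInterceptor` is the author's own class and its source is not shown, so it is modelled here as a plain string replacement of each `search:replace` pair. It runs before `regex_extractor` because the interceptors are listed as `i1 i2`. The class and method names and the sample event body are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Illustrative model of the i1 -> i2 interceptor chain (hypothetical names). */
public class InterceptorChainSketch {
    // Same pattern as i2.regex once the properties-file escaping (\\w -> \w) is undone
    private static final Pattern TYPE = Pattern.compile("\"type\":\"(\\w+)\"");

    /** Parses "search:replace,search:replace" into an ordered map; malformed pairs are skipped. */
    public static Map<String, String> parsePairs(String spec) {
        Map<String, String> pairs = new LinkedHashMap<>();
        for (String pair : spec.split(",")) {
            String[] kv = pair.split(":", 2);
            if (kv.length == 2) {
                pairs.put(kv[0].trim(), kv[1].trim());
            }
        }
        return pairs;
    }

    /** Assumed behaviour of the custom i1: replace every occurrence of each search string. */
    public static String searchReplace(String body, Map<String, String> pairs) {
        for (Map.Entry<String, String> e : pairs.entrySet()) {
            body = body.replace(e.getKey(), e.getValue());
        }
        return body;
    }

    /** Like i2: first capture group; Flume would set no header on a miss, modelled as "". */
    public static String extractLogType(String body) {
        Matcher m = TYPE.matcher(body);
        return m.find() ? m.group(1) : "";
    }

    /** Expands hdfs://master:9000/data/types/%Y%m%d/%{log_type} for one event. */
    public static String hdfsDir(String day, String logType) {
        return "hdfs://master:9000/data/types/" + day + "/" + logType;
    }

    public static void main(String[] args) {
        Map<String, String> pairs =
                parsePairs("gift_record:giftRecord,video_info:videoInfo,user_info:userInfo");
        String body = "{\"type\":\"gift_record\",\"uid\":1}"; // hypothetical event body
        String replaced = searchReplace(body, pairs);           // i1 runs first
        String logType = extractLogType(replaced);              // then i2
        System.out.println(hdfsDir("20170729", logType));
        // prints hdfs://master:9000/data/types/20170729/giftRecord
    }
}
```

With the sample body, `gift_record` is rewritten to `giftRecord` first, so the event lands under `.../20170729/giftRecord`. An event with no `"type"` field gets no `log_type` header. In this sketch that produces an empty last path segment, which would be consistent with the `20170729//run...` paths in the log above.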

Or,

restart the machines; the cause may also be a network problem.

Or,

for a more thorough fix, see:

https://stackoverflow.com/questions/22145899/flume-hdfs-sink-keeps-rolling-small-files
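A fix often suggested for this symptom is to set `hdfs.minBlockReplicas` on the HDFS sink. According to the Flume user guide, this is the minimum number of replicas per HDFS block. When it is unset, the value comes from the Hadoop configuration on the classpath, which means `dfs.replication` (3 by default). Suppose fewer DataNodes than that are in the write pipeline, for example only slave1 and slave2 acting as DataNodes with replication 3. In that case every block would look under-replicated.

The Java sketch below models only that comparison. The class, the `minReplicas`/`isUnderReplicated` helpers and the two-replica scenario are hypothetical, and the key assumes the sink name `hdfsSink` from the configuration above. It also shows why a `# comment` must go on its own line: Flume agent files use the Java properties format, and `java.util.Properties` keeps an inline `#` as part of the value.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

/** Simplified model of the under-replication threshold (hypothetical names). */
public class MinReplicasSketch {
    // Assumes the sink is named hdfsSink, as in the configuration above
    public static final String KEY = "agent1.sinks.hdfsSink.hdfs.minBlockReplicas";

    /** hdfs.minBlockReplicas if set, otherwise the Hadoop replication factor passed in. */
    public static int minReplicas(Properties agentConf, int dfsReplication) {
        String value = agentConf.getProperty(KEY);
        return value == null ? dfsReplication : Integer.parseInt(value.trim());
    }

    /** A block counts as under-replicated when fewer replicas than required are live. */
    public static boolean isUnderReplicated(int currentReplicas, int minReplicas) {
        return currentReplicas < minReplicas;
    }

    public static void main(String[] args) throws IOException {
        int dfsReplication = 3; // HDFS default replication factor
        int liveReplicas = 2;   // hypothetical: only two DataNodes in the write pipeline

        Properties unset = new Properties();
        System.out.println(isUnderReplicated(liveReplicas, minReplicas(unset, dfsReplication))); // true

        Properties fixed = new Properties();
        fixed.load(new StringReader(KEY + " = 1\n"));
        System.out.println(isUnderReplicated(liveReplicas, minReplicas(fixed, dfsReplication))); // false

        // An inline comment is NOT stripped: the value becomes "1 # one replica is enough"
        Properties inline = new Properties();
        inline.load(new StringReader(KEY + " = 1 # one replica is enough\n"));
        System.out.println(inline.getProperty(KEY));
    }
}
```

In the agent file this corresponds to the line `agent1.sinks.hdfsSink.hdfs.minBlockReplicas = 1` on its own, with any comment placed on a separate line above it.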
