openpose-opencv: multi-person pose estimation with the body (BODY_25) model

Introduction

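Besides the usual object detection and classification models, OpenCV's dnn module can also run OpenPose models. As with those models, training happens offline and OpenCV only loads the trained model for inference. I won't go into much detail here; let me start with the source code and data.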

Model used in the experiment: https://github.com/CMU-Perceptual-Computing-Lab/openpose

Implementation with the body (BODY_25) model:

```python
import cv2
import time
import numpy as np

# Input image and BODY_25 model files (paths from the author's machine)
image1 = cv2.imread("E:\\usb_test\\example\\yolov3\\OpenPose-Multi-Person\\111.jpg")
if image1 is None:
    raise FileNotFoundError("Could not read the input image")

protoFile = "E:\\usb_test\\example\\yolov3\\OpenPose-Multi-Person\\pose\\body_25\\pose_deploy.prototxt"
weightsFile = "E:\\usb_test\\example\\yolov3\\OpenPose-Multi-Person\\pose\\body_25\\pose_iter_584000.caffemodel"

# BODY_25 predicts 25 keypoints
nPoints = 25
```
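`nPoints = 25` counts only the keypoint heatmaps. The rest of the code reads heatmaps from channels 0-24 and PAFs (part affinity fields) from channels 26-77. Assuming channel 25 is the background map, the BODY_25 output should therefore have 78 channels. The sketch below checks that the `mapIdx` table defined further down uses each PAF channel exactly once. The helper names (`body25_channel_layout`, `MAP_IDX`) are hypothetical and are not part of OpenCV or OpenPose.

```python
# Assumed BODY_25 output layout: 25 keypoint heatmaps, 1 background map,
# then an (x, y) PAF channel pair for each of the 26 limbs.
N_POINTS = 25
N_PAIRS = 26


def body25_channel_layout(n_points=N_POINTS, n_pairs=N_PAIRS):
    """Return (heatmap channels, background channel, PAF channels)."""
    heatmaps = list(range(n_points))                              # 0..24
    background = n_points                                         # 25
    pafs = list(range(n_points + 1, n_points + 1 + 2 * n_pairs))  # 26..77
    return heatmaps, background, pafs


# Copy of the post's mapIdx table, used only for this check.
MAP_IDX = [[26, 27], [40, 41], [48, 49], [42, 43], [44, 45], [50, 51],
           [52, 53], [32, 33], [28, 29], [30, 31], [34, 35], [36, 37],
           [38, 39], [56, 57], [58, 59], [62, 63], [60, 61], [64, 65],
           [46, 47], [54, 55], [66, 67], [68, 69], [70, 71], [72, 73],
           [74, 75], [76, 77]]

heatmaps, background, pafs = body25_channel_layout()
total = len(heatmaps) + 1 + len(pafs)
used = sorted(c for pair in MAP_IDX for c in pair)

print(total)         # 78
print(used == pafs)  # True: each PAF channel is referenced exactly once
```

In the full script, `output.shape[1]` (printed after the forward pass) can be compared against `total` to confirm the assumed layout.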

```python
# BODY_25 output format: index -> keypoint name
keypointsMapping = ['Nose', 'Neck', 'RShoulder', 'RElbow', 'RWrist', 'LShoulder', 'LElbow', 'LWrist',
                    'MidHip', 'RHip', 'RKnee', 'RAnkle', 'LHip', 'LKnee', 'LAnkle', 'REye', 'LEye',
                    'REar', 'LEar', 'LBigToe', 'LSmallToe', 'LHeel', 'RBigToe', 'RSmallToe', 'RHeel']

# Pairs of keypoints that form a limb
POSE_PAIRS = [[1, 8], [1, 2], [1, 5], [2, 3], [3, 4], [5, 6],
              [6, 7], [8, 9], [9, 10], [10, 11], [8, 12], [12, 13],
              [13, 14], [1, 0], [0, 15], [15, 17], [0, 16], [16, 18],
              [2, 17], [5, 18], [14, 19], [19, 20], [14, 21], [11, 22],
              [22, 23], [11, 24]]

# Indices of the PAF channels corresponding to each entry of POSE_PAIRS,
# e.g. for POSE_PAIR (1,8) the PAFs are at indices (26,27) of the output;
# similarly (1,2) -> (40,41), (1,5) -> (48,49), and so on.
mapIdx = [[26, 27], [40, 41], [48, 49], [42, 43], [44, 45], [50, 51],
          [52, 53], [32, 33], [28, 29], [30, 31], [34, 35], [36, 37],
          [38, 39], [56, 57], [58, 59], [62, 63], [60, 61], [64, 65],
          [46, 47], [54, 55], [66, 67], [68, 69], [70, 71], [72, 73],
          [74, 75], [76, 77]]

# Drawing colors (25 entries)
colors = [[255, 0, 0], [255, 85, 0], [255, 170, 0],
          [255, 255, 0], [170, 255, 0], [85, 255, 0],
          [0, 255, 0], [0, 255, 85], [0, 255, 170],
          [0, 255, 255], [0, 170, 255], [0, 85, 255],
          [0, 0, 255], [85, 0, 255], [170, 0, 255],
          [255, 0, 255], [255, 0, 170], [255, 0, 85],
          [255, 170, 85], [255, 170, 170], [255, 170, 255],
          [255, 85, 85], [255, 85, 170], [255, 85, 255],
          [170, 170, 170]]


def getKeypoints(probMap, threshold=0.1):
    """Return the (x, y, score) peak of every blob in a keypoint heatmap."""
    mapSmooth = cv2.GaussianBlur(probMap, (3, 3), 0, 0)
    mapMask = np.uint8(mapSmooth > threshold)
    keypoints = []

    # Find the blobs. findContours returns 3 values in OpenCV 3.x and 2 in 4.x;
    # [-2] selects the contour list in both cases.
    contours = cv2.findContours(mapMask, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)[-2]

    # For each blob, find the maximum
    for cnt in contours:
        blobMask = np.zeros(mapMask.shape)
        blobMask = cv2.fillConvexPoly(blobMask, cnt, 1)
        maskedProbMap = mapSmooth * blobMask
        _, maxVal, _, maxLoc = cv2.minMaxLoc(maskedProbMap)
        keypoints.append(maxLoc + (probMap[maxLoc[1], maxLoc[0]],))

    return keypoints
```
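`getKeypoints()` treats every connected region above the threshold as one candidate (for example, one person's nose) and keeps that region's maximum. Below is a rough sketch of the same idea without OpenCV, assuming only NumPy. It substitutes a breadth-first flood fill with 4-connectivity for `cv2.findContours`/`cv2.fillConvexPoly` and skips the Gaussian smoothing. `find_peaks` is a hypothetical name, not an OpenCV function.

```python
from collections import deque

import numpy as np


def find_peaks(prob_map, threshold=0.1):
    """Return one (x, y, score) tuple per 4-connected blob above threshold."""
    mask = prob_map > threshold
    seen = np.zeros_like(mask, dtype=bool)
    h, w = mask.shape
    peaks = []
    for y0 in range(h):
        for x0 in range(w):
            if not mask[y0, x0] or seen[y0, x0]:
                continue
            # Flood-fill one blob and remember its highest pixel
            best = (x0, y0, prob_map[y0, x0])
            queue = deque([(y0, x0)])
            seen[y0, x0] = True
            while queue:
                y, x = queue.popleft()
                if prob_map[y, x] > best[2]:
                    best = (x, y, prob_map[y, x])
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            peaks.append((int(best[0]), int(best[1]), float(best[2])))
    return peaks


# Two separate blobs -> two keypoint candidates
demo = np.zeros((6, 8))
demo[1:3, 1:3] = [[0.3, 0.5], [0.4, 0.9]]
demo[4, 5:7] = [0.6, 0.2]
print(find_peaks(demo))  # [(2, 2, 0.9), (5, 4, 0.6)]
```

As in the post, the coordinates come back as (x, y), while the array is indexed as [row, column] = [y, x].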

```python
# Find valid connections between the joints of all persons present
def getValidPairs(output):
    valid_pairs = []
    invalid_pairs = []
    n_interp_samples = 15
    paf_score_th = 0.1
    conf_th = 0.7

    # Loop over every POSE_PAIR
    for k in range(len(mapIdx)):
        # A->B constitute a limb
        pafA = output[0, mapIdx[k][0], :, :]
        pafB = output[0, mapIdx[k][1], :, :]
        pafA = cv2.resize(pafA, (frameWidth, frameHeight))
        pafB = cv2.resize(pafB, (frameWidth, frameHeight))

        # Find the keypoints for the first and second joint of the limb
        candA = detected_keypoints[POSE_PAIRS[k][0]]
        candB = detected_keypoints[POSE_PAIRS[k][1]]
        nA = len(candA)
        nB = len(candB)

        # If keypoints for the joint pair are detected, check every joint in
        # candA against every joint in candB: compute the unit vector between
        # the two joints, sample the PAF at interpolated points between them,
        # and score the connection by how well the PAF aligns with that vector
        if nA != 0 and nB != 0:
            valid_pair = np.zeros((0, 3))
            for i in range(nA):
                max_j = -1
                maxScore = -1
                found = 0
                for j in range(nB):
                    # Find d_ij
                    d_ij = np.subtract(candB[j][:2], candA[i][:2])
                    norm = np.linalg.norm(d_ij)
                    if norm:
                        d_ij = d_ij / norm
                    else:
                        continue
                    # Find p(u)
                    interp_coord = list(zip(np.linspace(candA[i][0], candB[j][0], num=n_interp_samples),
                                            np.linspace(candA[i][1], candB[j][1], num=n_interp_samples)))
                    # Find L(p(u)); use m so the outer loop variable k is not shadowed
                    paf_interp = []
                    for m in range(len(interp_coord)):
                        x = int(round(interp_coord[m][0]))
                        y = int(round(interp_coord[m][1]))
                        paf_interp.append([pafA[y, x], pafB[y, x]])
                    # Find E
                    paf_scores = np.dot(paf_interp, d_ij)
                    avg_paf_score = sum(paf_scores) / len(paf_scores)

                    # The connection is valid if the fraction of interpolated
                    # vectors aligned with the PAF is higher than conf_th
                    if (len(np.where(paf_scores > paf_score_th)[0]) / n_interp_samples) > conf_th:
                        if avg_paf_score > maxScore:
                            max_j = j
                            maxScore = avg_paf_score
                            found = 1

                # Append the best connection for joint i to the list
                if found:
                    valid_pair = np.append(valid_pair, [[candA[i][3], candB[max_j][3], maxScore]], axis=0)

            # Append the detected connections to the global list
            valid_pairs.append(valid_pair)
        else:  # If no keypoints are detected
            print("No Connection : k = {}".format(k))
            invalid_pairs.append(k)
            valid_pairs.append([])

    return valid_pairs, invalid_pairs
```
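The scoring inside `getValidPairs()` can be isolated into a small function. The sketch below reuses the post's parameters (15 samples, PAF threshold 0.1, 70% of samples required) and its nearest-pixel lookup. It scores a single candidate limb against a synthetic PAF field, so it can be run without the network. `paf_score` and the synthetic field are hypothetical, used only for illustration.

```python
import numpy as np


def paf_score(paf_x, paf_y, a, b, n_samples=15, paf_th=0.1, conf_th=0.7):
    """Score a candidate limb from joint a=(x, y) to joint b=(x, y).

    Samples points on the segment, projects the PAF vector at each point
    onto the unit vector a->b, and accepts the limb only if more than
    conf_th of the samples exceed paf_th. Returns (is_valid, mean_score).
    """
    d = np.subtract(b, a).astype(float)
    norm = np.linalg.norm(d)
    if norm == 0:
        return False, 0.0
    d /= norm
    xs = np.linspace(a[0], b[0], num=n_samples)
    ys = np.linspace(a[1], b[1], num=n_samples)
    # Nearest-pixel lookup: rows are y, columns are x
    cols = np.rint(xs).astype(int)
    rows = np.rint(ys).astype(int)
    vecs = np.stack([paf_x[rows, cols], paf_y[rows, cols]], axis=1)
    scores = vecs @ d
    is_valid = np.count_nonzero(scores > paf_th) / n_samples > conf_th
    return bool(is_valid), float(scores.mean())


# Synthetic field in which every PAF vector points in +x
paf_x, paf_y = np.ones((20, 20)), np.zeros((20, 20))
print(paf_score(paf_x, paf_y, (2, 5), (15, 5)))  # (True, 1.0): horizontal limb
print(paf_score(paf_x, paf_y, (5, 2), (5, 15)))  # (False, 0.0): vertical limb
```

A limb that runs along the field passes; a perpendicular one scores zero and is rejected. In the post's loop, each joint in `candA` keeps only its best-scoring valid partner in `candB`.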

```python
# Create a list of keypoints belonging to each person:
# each detected valid pair assigns its joint(s) to a person
def getPersonwiseKeypoints(valid_pairs, invalid_pairs):
    # Each row holds 25 keypoint ids (-1 = missing); the last number is the overall score
    personwiseKeypoints = -1 * np.ones((0, 26))

    for k in range(len(mapIdx)):
        if k not in invalid_pairs:
            partAs = valid_pairs[k][:, 0]
            partBs = valid_pairs[k][:, 1]
            indexA, indexB = np.array(POSE_PAIRS[k])

            for i in range(len(valid_pairs[k])):
                found = 0
                person_idx = -1
                for j in range(len(personwiseKeypoints)):
                    if personwiseKeypoints[j][indexA] == partAs[i]:
                        person_idx = j
                        found = 1
                        break

                if found:
                    personwiseKeypoints[person_idx][indexB] = partBs[i]
                    personwiseKeypoints[person_idx][-1] += keypoints_list[partBs[i].astype(int), 2] + valid_pairs[k][i][2]

                # If partA is not in any existing person, create a new one
                # (the last two pairs, k = 24 and 25, never start a new person)
                elif not found and k < 24:
                    row = -1 * np.ones(26)
                    row[indexA] = partAs[i]
                    row[indexB] = partBs[i]
                    # Add the scores of the two keypoints and the PAF score
                    row[-1] = sum(keypoints_list[valid_pairs[k][i, :2].astype(int), 2]) + valid_pairs[k][i][2]
                    personwiseKeypoints = np.vstack([personwiseKeypoints, row])

    return personwiseKeypoints


frameWidth = image1.shape[1]
frameHeight = image1.shape[0]

net = cv2.dnn.readNetFromCaffe(protoFile, weightsFile)
# net.setPreferableBackend(cv2.dnn.DNN_BACKEND_OPENCV)
# net.setPreferableTarget(cv2.dnn.DNN_TARGET_OPENCL)

# Fix the input height and derive the width from the aspect ratio
inHeight = 360
inWidth = int((inHeight / frameHeight) * frameWidth)
inpBlob = cv2.dnn.blobFromImage(image1, 1.0 / 255, (inWidth, inHeight),
                                (0, 0, 0), swapRB=False, crop=False)
print("Input blob shape:", inpBlob.shape)

net.setInput(inpBlob)
t = time.time()  # time only the forward pass, not model loading
output = net.forward()
print("Output shape:", output.shape)
print("Time Taken in forward pass = {}".format(time.time() - t))

# Extract keypoint candidates from each heatmap and give each one a global id
detected_keypoints = []
keypoints_list = np.zeros((0, 3))
keypoint_id = 0
threshold = 0.1

for part in range(nPoints):
    probMap = output[0, part, :, :]
    probMap = cv2.resize(probMap, (frameWidth, frameHeight))
    keypoints = getKeypoints(probMap, threshold)
    print("Keypoints - {} : {}".format(keypointsMapping[part], keypoints))
    keypoints_with_id = []
    for i in range(len(keypoints)):
        keypoints_with_id.append(keypoints[i] + (keypoint_id,))
        keypoints_list = np.vstack([keypoints_list, keypoints[i]])
        keypoint_id += 1
    detected_keypoints.append(keypoints_with_id)

print("detected_keypoints", detected_keypoints)

# Draw all keypoint candidates
frameClone = image1.copy()
for i in range(nPoints):
    for j in range(len(detected_keypoints[i])):
        cv2.circle(frameClone, detected_keypoints[i][j][0:2], 3, colors[i], -1, cv2.LINE_AA)
cv2.imshow("Keypoints", frameClone)

# Connect keypoints into limbs and group the limbs by person
valid_pairs, invalid_pairs = getValidPairs(output)
personwiseKeypoints = getPersonwiseKeypoints(valid_pairs, invalid_pairs)

# Draw every limb of every person (26 limbs, 25 colors, so the colors wrap around)
for i in range(len(POSE_PAIRS)):
    for n in range(len(personwiseKeypoints)):
        index = personwiseKeypoints[n][np.array(POSE_PAIRS[i])]
        if -1 in index:
            continue
        B = np.int32(keypoints_list[index.astype(int), 0])
        A = np.int32(keypoints_list[index.astype(int), 1])
        cv2.line(frameClone, (int(B[0]), int(A[0])), (int(B[1]), int(A[1])),
                 colors[i % len(colors)], 2, cv2.LINE_AA)

cv2.imshow("Detected Pose", frameClone)
cv2.waitKey(0)
cv2.destroyAllWindows()
```
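`getPersonwiseKeypoints()` assembles people greedily: limb by limb, a connection is attached to whichever person already owns its first keypoint; otherwise it starts a new person. Here is a simplified pure-Python sketch of that grouping step on a hypothetical three-joint skeleton. Unlike the post, the running score only sums PAF scores (the post also adds keypoint confidences), and the `k < 24` rule is left out. `assemble_people` and the toy data are illustrative only.

```python
def assemble_people(pairs, limb_joints, n_joints):
    """Greedily group limb connections into people.

    pairs[k] lists (id_a, id_b, score) connections for limb k and
    limb_joints[k] = (joint_a, joint_b). Each person is a list of n_joints
    keypoint ids (-1 = missing) followed by a running score.
    """
    people = []
    for (joint_a, joint_b), connections in zip(limb_joints, pairs):
        for id_a, id_b, score in connections:
            # Attach the limb to whoever already owns keypoint id_a ...
            owner = next((p for p in people if p[joint_a] == id_a), None)
            if owner is not None:
                owner[joint_b] = id_b
                owner[-1] += score
            else:
                # ... otherwise the limb starts a new person
                person = [-1] * n_joints + [score]
                person[joint_a] = id_a
                person[joint_b] = id_b
                people.append(person)
    return people


# Toy skeleton: joint 0 = Neck, 1 = RShoulder, 2 = RElbow
limbs = [(0, 1), (1, 2)]
connections = [
    [(0, 2, 0.5), (1, 3, 0.5)],    # Neck-RShoulder: ids 0->2 and 1->3
    [(2, 4, 0.25), (3, 5, 0.25)],  # RShoulder-RElbow: ids 2->4 and 3->5
]
print(assemble_people(connections, limbs, n_joints=3))
# [[0, 2, 4, 0.75], [1, 3, 5, 0.75]]
```

Limbs are processed in a fixed order, which is why `POSE_PAIRS` begins with torso limbs connected to the neck: later limbs can then attach to people who already exist.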

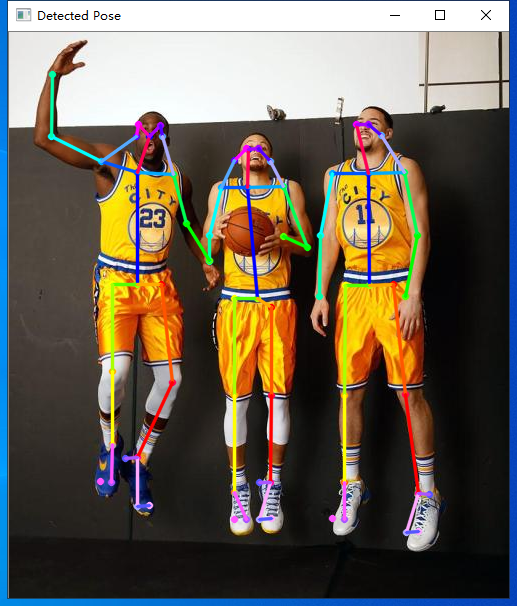

实验效果
