Notes on setting up a team k8s environment (initial plan; it has since been improved considerably, for example by adding Prometheus)


| hostname | OS | purpose | ip |

| ub2-citst001.abc.com | ubuntu16.04 | docker registry | 10.239.220.38 |

| centos-k8s001.abc.com | centos7.3 | haproxy+keepalived+etcd(leader) | 10.239.219.154 |

| centos-k8s002.abc.com | centos7.3 | haproxy+keepalived+etcd | 10.239.219.153 |

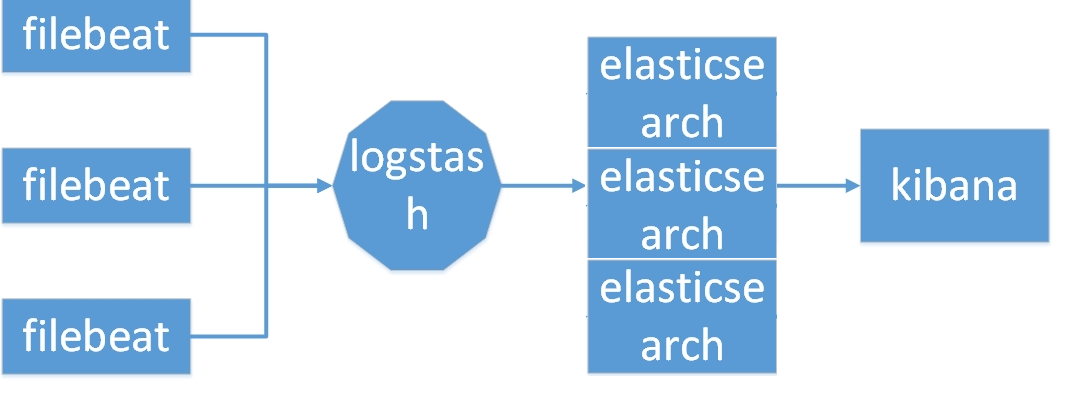

| centos-k8s003.abc.com | centos7.3 | etcd+nginx+ELK(elasticsearch,logstash,kibana) | 10.239.219.206 |

| centos-k8s004.abc.com | centos7.3 | k8s master (kube-apiserver、kube-controller-manager、kube-scheduler) | 10.239.219.207 |

| centos-k8s005.abc.com | centos7.3 |

k8s slave(kubeproxy,kubelet,docker,flanneld)+OSS service+ELK(elasticsearch+filebeat)

|

10.239.219.208 |

| centos-k8s006.abc.com | centos7.3 | k8s slave(kubeproxy,kubelet,docker,flanneld)+mysql master+OSS service+ELK(elasticsearch+filebeat) | 10.239.219.210 |

| centos-k8s007.abc.com | centos7.3 | k8s slave(kubeproxy,kubelet,docker,flanneld)+mysql slave+OSS service+ELK(elasticsearch+filebeat) | 10.239.219.209 |

If the hosts reach the internet through a proxy, add it to /etc/profile:

- vi /etc/profile
- export http_proxy=http://<proxy IP or domain>:<port>
- export https_proxy=http://<proxy IP or domain>:<port>

1. Back up the original apt sources

- sudo cp /etc/apt/sources.list /etc/apt/sources_init.list

Keep a copy of the old source list in case it is needed later.

2. Replace the sources

- sudo vi /etc/apt/sources.list

Replace the contents with the lines below. The release codename in each line must match the installed system: the original notes used wily (Ubuntu 15.10), but the Ubuntu 16.04 host in the table above needs xenial.

- deb http://mirrors.163.com/ubuntu/ xenial main restricted universe multiverse
- deb http://mirrors.163.com/ubuntu/ xenial-security main restricted universe multiverse
- deb http://mirrors.163.com/ubuntu/ xenial-updates main restricted universe multiverse
- deb http://mirrors.163.com/ubuntu/ xenial-proposed main restricted universe multiverse
- deb http://mirrors.163.com/ubuntu/ xenial-backports main restricted universe multiverse
- deb-src http://mirrors.163.com/ubuntu/ xenial main restricted universe multiverse
- deb-src http://mirrors.163.com/ubuntu/ xenial-security main restricted universe multiverse
- deb-src http://mirrors.163.com/ubuntu/ xenial-updates main restricted universe multiverse
- deb-src http://mirrors.163.com/ubuntu/ xenial-proposed main restricted universe multiverse
- deb-src http://mirrors.163.com/ubuntu/ xenial-backports main restricted universe multiverse

3. Update the package index

- sudo apt-get update

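- Docker installation on Ubuntu and CentOS:

Once Docker is installed, configure a registry mirror in /etc/docker/daemon.json:

- {
- "registry-mirrors": ["http://hub-mirror.c.163.com"]
- }

Restart Docker and pull a test image:

- systemctl restart docker
- docker pull hello-world

If the pull fails because the host sits behind a proxy, give the Docker daemon a proxy through a systemd drop-in file, then reload systemd and restart Docker:

- sudo mkdir -p /etc/systemd/system/docker.service.d
- vim /etc/systemd/system/docker.service.d/http-proxy.conf
- [Service]
- Environment="HTTP_PROXY=http://xxx.xxx.xxx.xxx:port"
- Environment="HTTPS_PROXY=http://xxx.xxx.xxx.xxx:port"

A successful pull prints output like this:

- Using default tag: latest
- latest: Pulling from library/hello-world
- d1725b59e92d: Pull complete
- Digest: sha256:0add3ace90ecb4adbf7777e9aacf18357296e799f81cabc9fde470971e499788
- Status: Downloaded newer image for hello-world:latest
- docker images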

Set up the private registry on the registry host:

- docker pull index.tenxcloud.com/docker_library/registry
- docker tag index.tenxcloud.com/docker_library/registry:latest registry
- docker images
- mkdir -p /docker/registry/
- docker run -d -p 5000:5000 --name registry --restart=always --privileged=true -v /docker/registry:/var/lib/registry registry

On the registry host, add the registry itself to insecure-registries in /etc/docker/daemon.json, then restart Docker:

- {
- "registry-mirrors": ["http://hub-mirror.c.163.com"],"insecure-registries":["<host IP or domain>:5000"]
- }
- systemctl restart docker

On every other machine that uses the private registry, make the same change, pointing at the registry's IP or domain name:

- {
- "registry-mirrors": ["http://hub-mirror.c.163.com"],"insecure-registries":["<private registry IP or domain>:5000"]
- }
- systemctl daemon-reload
- service docker restart
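The daemon.json content above differs between hosts only in the registry address, so it can be generated rather than typed by hand. A minimal sketch, assuming a hypothetical helper named render_daemon_json; the mirror URL and port 5000 are taken from the steps above, and writing the result to /etc/docker/daemon.json is left to the operator:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Docker): print a daemon.json that sets the
# registry mirror and marks the private registry as insecure (plain HTTP).
render_daemon_json() {
  local registry="$1"                               # private registry IP or domain
  local mirror="${2:-http://hub-mirror.c.163.com}"  # mirror used in this guide
  printf '{\n  "registry-mirrors": ["%s"],\n  "insecure-registries": ["%s:5000"]\n}\n' \
    "$mirror" "$registry"
}

# Print the file content for the registry host used in this guide.
render_daemon_json ub2-citst001.abc.com
```

To apply it, redirect the output to /etc/docker/daemon.json and restart Docker as described above.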

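etcd overview

etcd is a highly available distributed key-value store. It uses the Raft protocol as its consensus algorithm and is implemented in Go.

etcd is also a service discovery system with the following characteristics:

Simple: easy to install and configure, and it exposes an HTTP API that is easy to use

Secure: supports SSL certificate verification

Fast: according to the official benchmark data, a single instance handles 2k+ read operations per second

Reliable: uses the Raft algorithm to provide availability and consistency for distributed data

etcd use cases

Service discovery addresses one of the most common problems in distributed systems: how processes or services in the same cluster find each other and establish connections.

Solving the service discovery problem requires the following properties:

- A strongly consistent, highly available service storage directory. etcd, being built on the Raft algorithm, is exactly such a directory.
- A mechanism for registering services and monitoring their health.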

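Installing etcd

Install etcd on k8s001, k8s002 and k8s003 to form an etcd cluster. It can be installed directly on the hosts or deployed with Docker.

Host installation:

1. On each of the three machines, run: yum install etcd -y

2. The yum package puts its default configuration file at /etc/etcd/etcd.conf. Edit that file on each node as follows.

centos-k8s001: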


- # [member]
- # Node name
- ETCD_NAME=centos-k8s001
- # Data directory
- ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
- #ETCD_WAL_DIR=""
- #ETCD_SNAPSHOT_COUNT="10000"
- #ETCD_HEARTBEAT_INTERVAL="100"
- #ETCD_ELECTION_TIMEOUT="1000"
- # URLs to listen on for traffic from other etcd members
- ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"
- # URLs to listen on for client traffic
- ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379,http://0.0.0.0:4001"
- #ETCD_MAX_SNAPSHOTS="5"
- #ETCD_MAX_WALS="5"
- #ETCD_CORS=""
- #
- #[cluster]
- # Peer URL advertised to the other etcd members
- ETCD_INITIAL_ADVERTISE_PEER_URLS="http://centos-k8s001:2380"
- # if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."
- # Initial list of cluster members
- ETCD_INITIAL_CLUSTER="centos-k8s001=http://centos-k8s001:2380,centos-k8s002=http://centos-k8s002:2380,centos-k8s003=http://centos-k8s003:2380"
- # Initial cluster state; new means a newly created cluster
- ETCD_INITIAL_CLUSTER_STATE="new"
- # Initial cluster token
- ETCD_INITIAL_CLUSTER_TOKEN="mritd-etcd-cluster"
- # Client URLs advertised to clients
- ETCD_ADVERTISE_CLIENT_URLS="http://centos-k8s001:2379,http://centos-k8s001:4001"

centos-k8s002:


- # [member]

- ETCD_NAME=centos-k8s002

- ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

- #ETCD_WAL_DIR=""

- #ETCD_SNAPSHOT_COUNT="10000"

- #ETCD_HEARTBEAT_INTERVAL="100"

- #ETCD_ELECTION_TIMEOUT="1000"

- ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"

- ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379,http://0.0.0.0:4001"

- #ETCD_MAX_SNAPSHOTS="5"

- #ETCD_MAX_WALS="5"

- #ETCD_CORS=""

- #

- #[cluster]

- ETCD_INITIAL_ADVERTISE_PEER_URLS="http://centos-k8s002:2380"

- # if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."

- ETCD_INITIAL_CLUSTER="centos-k8s001=http://centos-k8s001:2380,centos-k8s002=http://centos-k8s002:2380,centos-k8s003=http://centos-k8s003:2380"

- ETCD_INITIAL_CLUSTER_STATE="new"

- ETCD_INITIAL_CLUSTER_TOKEN="mritd-etcd-cluster"

- ETCD_ADVERTISE_CLIENT_URLS="http://centos-k8s002:2379,http://centos-k8s002:4001"

centos-k8s003:


- # [member]

- ETCD_NAME=centos-k8s003

- ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

- #ETCD_WAL_DIR=""

- #ETCD_SNAPSHOT_COUNT="10000"

- #ETCD_HEARTBEAT_INTERVAL="100"

- #ETCD_ELECTION_TIMEOUT="1000"

- ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"

- ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379,http://0.0.0.0:4001"

- #ETCD_MAX_SNAPSHOTS="5"

- #ETCD_MAX_WALS="5"

- #ETCD_CORS=""

- #

- #[cluster]

- ETCD_INITIAL_ADVERTISE_PEER_URLS="http://centos-k8s003:2380"

- # if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."

- ETCD_INITIAL_CLUSTER="centos-k8s001=http://centos-k8s001:2380,centos-k8s002=http://centos-k8s002:2380,centos-k8s003=http://centos-k8s003:2380"

- ETCD_INITIAL_CLUSTER_STATE="new"

- ETCD_INITIAL_CLUSTER_TOKEN="mritd-etcd-cluster"

- ETCD_ADVERTISE_CLIENT_URLS="http://centos-k8s003:2379,http://centos-k8s003:4001"
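The three etcd.conf files differ only in the node name. As a sketch, the per-node settings can be rendered from a single template. The helper names render_etcd_conf and initial_cluster are hypothetical, and only the uncommented settings from the files above are emitted:

```shell
#!/usr/bin/env bash
# Node names taken from the host table in this guide.
NODES="centos-k8s001 centos-k8s002 centos-k8s003"

# Build the ETCD_INITIAL_CLUSTER value: name=http://name:2380, comma separated.
initial_cluster() {
  local out="" n
  for n in $NODES; do
    out="${out:+$out,}$n=http://$n:2380"
  done
  printf '%s\n' "$out"
}

# Print the active etcd.conf settings for one node (hypothetical helper).
render_etcd_conf() {
  local name="$1"
  cat <<EOF
ETCD_NAME=$name
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379,http://0.0.0.0:4001"
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://$name:2380"
ETCD_INITIAL_CLUSTER="$(initial_cluster)"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="mritd-etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="http://$name:2379,http://$name:4001"
EOF
}

# Show the configuration that would go to /etc/etcd/etcd.conf on centos-k8s002.
render_etcd_conf centos-k8s002
```

Generating the files this way keeps ETCD_INITIAL_CLUSTER identical on all members, which is the setting most easily mistyped when the files are edited by hand.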

3. Restart the etcd service on each node:

- systemctl restart etcd

4. Check that the cluster formed. The name field shows each member's configured name; the sample output uses etcd1 to etcd3, the names given in the Docker deployment further down.

- etcdctl member list
- 4cb07e7292111d83: name=etcd1 peerURLs=http://centos-k8s001:2380 clientURLs=http://centos-k8s001:2379,http://centos-k8s001:4001 isLeader=true
- 713da186acaefc5b: name=etcd2 peerURLs=http://centos-k8s002:2380 clientURLs=http://centos-k8s002:2379,http://centos-k8s002:4001 isLeader=false
- fabaedd18a2da8a7: name=etcd3 peerURLs=http://centos-k8s003:2380 clientURLs=http://centos-k8s003:2379,http://centos-k8s003:4001 isLeader=false
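The member list output can also be checked by a script instead of by eye. A sketch that parses the etcdctl output format shown above; the helper names leader_name and member_count are hypothetical, and the sample text is copied from that output rather than read from a live cluster:

```shell
#!/usr/bin/env bash
# Print the name= field of the member whose line says isLeader=true.
leader_name() {
  awk '/isLeader=true/ {
         for (i = 1; i <= NF; i++)
           if ($i ~ /^name=/) { sub(/^name=/, "", $i); print $i }
       }'
}

# Count member lines (every member line carries an isLeader= field).
member_count() {
  grep -c 'isLeader='
}

SAMPLE='4cb07e7292111d83: name=etcd1 peerURLs=http://centos-k8s001:2380 clientURLs=http://centos-k8s001:2379,http://centos-k8s001:4001 isLeader=true
713da186acaefc5b: name=etcd2 peerURLs=http://centos-k8s002:2380 clientURLs=http://centos-k8s002:2379,http://centos-k8s002:4001 isLeader=false
fabaedd18a2da8a7: name=etcd3 peerURLs=http://centos-k8s003:2380 clientURLs=http://centos-k8s003:2379,http://centos-k8s003:4001 isLeader=false'

echo "leader:  $(printf '%s\n' "$SAMPLE" | leader_name)"
echo "members: $(printf '%s\n' "$SAMPLE" | member_count)"
```

On a live node, pipe etcdctl member list into the same two functions; a healthy three-node cluster should report three members and exactly one leader.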

Docker deployment:

Pull the etcd image and push it to the private registry:

- docker pull quay.io/coreos/etcd
- docker tag quay.io/coreos/etcd ub2-citst001.abc.com:5000/quay.io/coreos/etcd
- docker push ub2-citst001.abc.com:5000/quay.io/coreos/etcd

On each etcd node, pull the image from the private registry and start the container:

- docker pull ub2-citst001.abc.com:5000/quay.io/coreos/etcd
- // start on centos-k8s001
- docker run -d --name etcd -p 2379:2379 -p 2380:2380 -p 4001:4001 --restart=always --volume=etcd-data:/etcd-data ub2-citst001.abc.com:5000/quay.io/coreos/etcd /usr/local/bin/etcd --data-dir=/etcd-data --name etcd1 --initial-advertise-peer-urls http://centos-k8s001:2380 --listen-peer-urls http://0.0.0.0:2380 --advertise-client-urls http://centos-k8s001:2379,http://centos-k8s001:4001 --listen-client-urls http://0.0.0.0:2379 --initial-cluster-state new --initial-cluster-token docker-etcd --initial-cluster etcd1=http://centos-k8s001:2380,etcd2=http://centos-k8s002:2380,etcd3=http://centos-k8s003:2380
- // start on centos-k8s002
- docker run -d --name etcd -p 2379:2379 -p 2380:2380 -p 4001:4001 --restart=always --volume=etcd-data:/etcd-data ub2-citst001.abc.com:5000/quay.io/coreos/etcd /usr/local/bin/etcd --data-dir=/etcd-data --name etcd2 --initial-advertise-peer-urls http://centos-k8s002:2380 --listen-peer-urls http://0.0.0.0:2380 --advertise-client-urls http://centos-k8s002:2379,http://centos-k8s002:4001 --listen-client-urls http://0.0.0.0:2379 --initial-cluster-state new --initial-cluster-token docker-etcd --initial-cluster etcd1=http://centos-k8s001:2380,etcd2=http://centos-k8s002:2380,etcd3=http://centos-k8s003:2380
- // start on centos-k8s003
- docker run -d --name etcd -p 2379:2379 -p 2380:2380 -p 4001:4001 --restart=always --volume=etcd-data:/etcd-data ub2-citst001.abc.com:5000/quay.io/coreos/etcd /usr/local/bin/etcd --data-dir=/etcd-data --name etcd3 --initial-advertise-peer-urls http://centos-k8s003:2380 --listen-peer-urls http://0.0.0.0:2380 --advertise-client-urls http://centos-k8s003:2379,http://centos-k8s003:4001 --listen-client-urls http://0.0.0.0:2379 --initial-cluster-state new --initial-cluster-token docker-etcd --initial-cluster etcd1=http://centos-k8s001:2380,etcd2=http://centos-k8s002:2380,etcd3=http://centos-k8s003:2380

Enter one of the containers and check the cluster:

- docker exec -it <container name or id> /bin/bash
- // now inside the container, run:
- etcdctl member list
- // output like the following means the etcd container cluster was deployed successfully
- 4cb07e7292111d83: name=etcd1 peerURLs=http://centos-k8s001:2380 clientURLs=http://centos-k8s001:2379,http://centos-k8s001:4001 isLeader=true
- 713da186acaefc5b: name=etcd2 peerURLs=http://centos-k8s002:2380 clientURLs=http://centos-k8s002:2379,http://centos-k8s002:4001 isLeader=false
- fabaedd18a2da8a7: name=etcd3 peerURLs=http://centos-k8s003:2380 clientURLs=http://centos-k8s003:2379,http://centos-k8s003:4001 isLeader=false
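The three docker run commands differ only in the member name and the hostname. A sketch that prints the command for a given node number without executing it; etcd_run_cmd is a hypothetical helper, and the flags are the ones used in the commands above:

```shell
#!/usr/bin/env bash
IMAGE="ub2-citst001.abc.com:5000/quay.io/coreos/etcd"
CLUSTER="etcd1=http://centos-k8s001:2380,etcd2=http://centos-k8s002:2380,etcd3=http://centos-k8s003:2380"

# Print (do not execute) the docker run command for node number 1, 2 or 3.
etcd_run_cmd() {
  local i="$1"
  local host="centos-k8s00$i"
  # printf repeats the '%s ' format for every argument, joining them with spaces.
  printf '%s ' \
    docker run -d --name etcd -p 2379:2379 -p 2380:2380 -p 4001:4001 \
    --restart=always --volume=etcd-data:/etcd-data "$IMAGE" \
    /usr/local/bin/etcd --data-dir=/etcd-data --name "etcd$i" \
    --initial-advertise-peer-urls "http://$host:2380" \
    --listen-peer-urls http://0.0.0.0:2380 \
    --advertise-client-urls "http://$host:2379,http://$host:4001" \
    --listen-client-urls http://0.0.0.0:2379 \
    --initial-cluster-state new --initial-cluster-token docker-etcd \
    --initial-cluster "$CLUSTER"
  printf '\n'
}

etcd_run_cmd 2
```

Review the printed line, then run it on the matching host.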

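kube-apiserver: serves the Kubernetes cluster API

kube-controller-manager: runs the controllers that keep cluster services in their desired state

kube-scheduler: schedules containers and assigns them to Nodes

kubelet: starts containers on a Node according to the container specs defined in the configuration

kube-proxy: provides the network proxy service

• Prerequisites

Run the following on the 4 machines shown in the figure above.

1. Make sure the epel-release repository is installed

- # yum -y install epel-release

2. Stop the firewall service and disable SELinux so that they do not conflict with the firewall rules Docker creates for containers.

- # systemctl stop firewalld
- # systemctl disable firewalld
- # setenforce 0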

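• Install and configure the Kubernetes Master

Run the following on the master.

1. Install kubernetes-master with yum (etcd already runs on centos-k8s001 to centos-k8s003)

- # yum -y install kubernetes-master

2. Edit the /etc/kubernetes/apiserver file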


- # The address on the local server to listen to.

- KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

- # The port on the local server to listen on.

- #KUBE_API_PORT="--port=8080"

- # Port minions listen on

- KUBELET_PORT="--kubelet-port=10250"

- # Comma separated list of nodes in the etcd cluster

- KUBE_ETCD_SERVERS="--etcd-servers=http://centos-k8s001:2379,http://centos-k8s002:2379,http://centos-k8s003:2379"

- # Address range to use for services

- KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

- # default admission control policies

- #KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

- KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,ResourceQuota"

- # Add your own!

- KUBE_API_ARGS=""

3. Start kube-apiserver, kube-controller-manager and kube-scheduler and enable them at boot. etcd is left out of the loop because it runs on centos-k8s001 to centos-k8s003, not on the master.

- for SERVICES in kube-apiserver kube-controller-manager kube-scheduler; do systemctl restart $SERVICES;systemctl enable $SERVICES;systemctl status $SERVICES ; done
- // To check whether a service is running, use systemctl status, for example:
- systemctl status kube-apiserver

4. Define the flannel network in etcd on centos-k8s001

- // If etcd is deployed as a container, first run:
- docker ps // find the etcd container id
- docker exec -it <container id> /bin/sh // enter the container, then run the commands below
- // If etcd is installed directly on the host, run this directly:
- etcdctl mk /atomic.io/network/config '{"Network":"172.17.0.0/16"}' // define the flannel network in etcd
- // Check the flannel network configuration from another etcd node,
- // for example on centos-k8s002:
- etcdctl get /atomic.io/network/config
- // Expected output:
- {"Network":"172.17.0.0/16"} // configured successfully

• Install and configure the Kubernetes Nodes

Run the following on centos-k8s005, centos-k8s006 and centos-k8s007.

1. Install flannel and kubernetes-node with yum

- # yum -y install flannel kubernetes-node

2. Point flannel at the etcd service by editing /etc/sysconfig/flanneld

- FLANNEL_ETCD="http://centos-k8s001:2379"
- FLANNEL_ETCD_KEY="/atomic.io/network"

3. Edit the /etc/kubernetes/config file

- KUBE_LOGTOSTDERR="--logtostderr=true"

- KUBE_LOG_LEVEL="--v=0"

- KUBE_ALLOW_PRIV="--allow-privileged=false"

- KUBE_MASTER="--master=http://centos-k8s004:8080"

4. Edit /etc/kubernetes/kubelet on each node as follows

centos-k8s005:

- KUBELET_ADDRESS="--address=0.0.0.0"
- KUBELET_PORT="--port=10250"
- KUBELET_HOSTNAME="--hostname-override=centos-k8s005" # set to this node's own hostname or IP
- KUBELET_API_SERVER="--api-servers=http://centos-k8s004:8080" # the API server on the master node

- KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

- KUBELET_ARGS=""

centos-k8s006:

- KUBELET_ADDRESS="--address=0.0.0.0"

- KUBELET_PORT="--port=10250"

- KUBELET_HOSTNAME="--hostname-override=centos-k8s006"

- KUBELET_API_SERVER="--api-servers=http://centos-k8s004:8080"

- KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

- KUBELET_ARGS=""

centos-k8s007:

- KUBELET_ADDRESS="--address=0.0.0.0"

- KUBELET_PORT="--port=10250"

- KUBELET_HOSTNAME="--hostname-override=centos-k8s007"

- KUBELET_API_SERVER="--api-servers=http://centos-k8s004:8080"

- KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

- KUBELET_ARGS=""
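The kubelet files for the three nodes are identical apart from --hostname-override. A sketch that renders the file content for each node; render_kubelet_conf is a hypothetical helper, and all values are the ones listed above:

```shell
#!/usr/bin/env bash
MASTER_URL="http://centos-k8s004:8080"   # API server on the master, as above

# Print the /etc/kubernetes/kubelet content for the given node name.
render_kubelet_conf() {
  local node="$1"
  cat <<EOF
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_PORT="--port=10250"
KUBELET_HOSTNAME="--hostname-override=$node"
KUBELET_API_SERVER="--api-servers=$MASTER_URL"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"
KUBELET_ARGS=""
EOF
}

# Print all three variants, separated by a comment line naming the node.
for node in centos-k8s005 centos-k8s006 centos-k8s007; do
  echo "# --- $node ---"
  render_kubelet_conf "$node"
done
```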

5. On every Node, start the kube-proxy, kubelet, docker and flanneld services and enable them at boot.

- # for SERVICES in kube-proxy kubelet docker flanneld;do systemctl restart $SERVICES;systemctl enable $SERVICES;systemctl status $SERVICES; done

• Verify that the cluster was installed successfully

Run the following command on the master, centos-k8s004:

- [root@centos-k8s004 ~]# kubectl get node

- NAME STATUS AGE

- centos-k8s005 Ready 38d

- centos-k8s006 Ready 38d

- centos-k8s007 Ready 37d
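To turn this visual check into a scripted one, the kubectl get node output can be filtered for nodes that are not Ready. A sketch, assuming the three-column output format shown above; not_ready_nodes is a hypothetical helper, and the sample text stands in for a live kubectl call:

```shell
#!/usr/bin/env bash
# Print the names of nodes whose STATUS column is anything other than Ready.
# Expects the header line first, as kubectl prints it.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

SAMPLE='NAME STATUS AGE
centos-k8s005 Ready 38d
centos-k8s006 Ready 38d
centos-k8s007 Ready 37d'

bad="$(printf '%s\n' "$SAMPLE" | not_ready_nodes)"
if [ -z "$bad" ]; then
  echo "all nodes Ready"
else
  echo "not ready: $bad"
fi
```

On the master, replace the sample with the output of kubectl get node.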


Build files for the MySQL master and slave images:

- `-- mysql-build_file

- |-- master

- | |-- docker-entrypoint.sh

- | |-- Dockerfile

- | `-- my.cnf

- `-- slave

- |-- docker-entrypoint.sh

- |-- Dockerfile

- `-- my.cnf

- # master/docker-entrypoint.sh:

- #!/bin/bash

- MYSQL="mysql -uroot -proot"

- sql="CREATE USER '$MYSQL_REPLICATION_USER'@'%' IDENTIFIED BY '$MYSQL_REPLICATION_PASSWORD'"

- $MYSQL -e "$sql"

- sql="GRANT REPLICATION SLAVE ON *.* TO '$MYSQL_REPLICATION_USER'@'%' IDENTIFIED BY '$MYSQL_REPLICATION_PASSWORD'"

- $MYSQL -e "$sql"

- sql="FLUSH PRIVILEGES"

- $MYSQL -e "$sql"

- # master/my.cnf :

- [mysqld]

- log-bin = mysql-bin

- server-id = 1

- character_set_server=utf8

- log_bin_trust_function_creators=1

- skip-name-resolve

- binlog_format = mixed

- relay-log = relay-bin

- relay-log-index = slave-relay-bin.index

- auto-increment-increment = 2

- auto-increment-offset = 1

- # master/Dockerfile:

- FROM mysql:5.6

- ENV http_proxy http://child-prc.abc.com:913

- ENV https_proxy https://child-prc.abc.com:913

- COPY my.cnf /etc/mysql/mysql.cnf

- COPY docker-entrypoint.sh /docker-entrypoint-initdb.d/

- #slave/docker-entrypoint.sh:

- #!/bin/bash

- MYSQL="mysql -uroot -proot"

- MYSQL_MASTER="mysql -uroot -proot -h$MYSQL_MASTER_SERVICE_HOST -P$MASTER_PORT"

- sql="stop slave"

- $MYSQL -e "$sql"

- sql="SHOW MASTER STATUS"

- result="$($MYSQL_MASTER -e "$sql")"

- dump_data=/master-condition.log

- echo -e "$result" > $dump_data

- var=$(cat /master-condition.log | grep mysql-bin)

- MASTER_LOG_FILE=$(echo $var | awk '{split($0,arr," ");print arr[1]}')

- MASTER_LOG_POS=$(echo $var | awk '{split($0,arr," ");print arr[2]}')

- sql="reset slave"

- $MYSQL -e "$sql"

- sql="CHANGE MASTER TO master_host='$MYSQL_MASTER_SERVICE_HOST', master_user='$MYSQL_REPLICATION_USER', master_password='$MYSQL_REPLICATION_PASSWORD', master_log_file='$MASTER_LOG_FILE', master_log_pos=$MASTER_LOG_POS, master_port=$MASTER_PORT"

- $MYSQL -e "$sql"

- sql="start slave"

- $MYSQL -e "$sql"

- #slave/my.cnf:

- [mysqld]

- log-bin = mysql-bin

- # server-id must be unique; it must not clash with any other MySQL instance

- server-id = 2

- character_set_server=utf8

- log_bin_trust_function_creators=1

- #slave/Dockerfile:

- FROM mysql:5.6

- ENV http_proxy http://child-prc.abc.com:913

- ENV https_proxy https://child-prc.abc.com:913

- COPY my.cnf /etc/mysql/mysql.cnf

- COPY docker-entrypoint.sh /docker-entrypoint-initdb.d/

- RUN touch /master-condition.log && chown -R mysql:mysql /master-condition.log
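The slave entrypoint script depends on pulling the binlog file name and position out of SHOW MASTER STATUS. A sketch of just that parsing step, run against sample text instead of a live master. The sample values (mysql-bin.000003, 120) and the helper name parse_master_status are made up for illustration:

```shell
#!/usr/bin/env bash
# Read SHOW MASTER STATUS output (header line plus one data line) on stdin
# and print "<binlog file> <position>" from the line that names the binlog.
parse_master_status() {
  awk '/mysql-bin/ { print $1, $2 }'
}

# Hypothetical sample; awk splits on tabs and spaces alike, so the
# tab-separated output of mysql -e is handled the same way.
SAMPLE='File Position Binlog_Do_DB Binlog_Ignore_DB
mysql-bin.000003 120'

# Split the parsed line into the two variables used by CHANGE MASTER TO.
read -r MASTER_LOG_FILE MASTER_LOG_POS <<EOF
$(printf '%s\n' "$SAMPLE" | parse_master_status)
EOF

echo "master_log_file='$MASTER_LOG_FILE', master_log_pos=$MASTER_LOG_POS"
```

Matching on the binlog prefix works only because log-bin is set to mysql-bin in my.cnf; a different prefix would need a different pattern.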


Build the images and push them to the private registry:

- cd /mysql-build_file/master
- docker build -t ub2-citst001.abc.com:5000/mysql-master .
- # Output like the following means the build succeeded:

- #Sending build context to Docker daemon 4.096kB

- #Step 1/5 : FROM mysql:5.6

- # ---> a46c2a2722b9

- #Step 2/5 : ENV http_proxy http://child-prc.abc.com:913

- # ---> Using cache

- # ---> 873859820af7

- #Step 3/5 : ENV https_proxy https://child-prc.abc.com:913

- # ---> Using cache

- # ---> b5391bed1bda

- #Step 4/5 : COPY my.cnf /etc/mysql/mysql.cnf

- # ---> Using cache

- # ---> ccbdced047a3

- #Step 5/5 : COPY docker-entrypoint.sh /docker-entrypoint-initdb.d/

- # ---> Using cache

- # ---> 81cfad9f0268

- #Successfully built 81cfad9f0268

- #Successfully tagged ub2-citst001.abc.com:5000/mysql-master

- cd /mysql-build_file/slave

- docker build -t ub2-citst001.abc.com:5000/mysql-slave .

- # List the images
- docker images
- # Push the images to the private registry:

- docker push ub2-citst001.abc.com:5000/mysql-slave

- docker push ub2-citst001.abc.com:5000/mysql-master

# Create a folder named file_for_k8s under the root directory to hold the YAML files

mkdir -p /file_for_k8s/MyCat

cd /file_for_k8s/MyCat/

mkdir master slave

cd master

# Create the mysql-master.yaml configuration file

touch mysql-master.yaml

# with the following content:

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: mysql-master
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: mysql-master
          release: stable
      template:
        metadata:
          labels:
            name: mysql-master
            app: mysql-master
            release: stable
        spec:
          containers:
          - name: mysql-master
            image: ub2-citst001.abc.com:5000/mysql-master
            volumeMounts:
            - name: mysql-config
              mountPath: /usr/data
            env:
            - name: MYSQL_ROOT_PASSWORD
              value: "root"
            - name: MYSQL_REPLICATION_USER
              value: "slave"
            - name: MYSQL_REPLICATION_PASSWORD
              value: "slave"
            ports:
            - containerPort: 3306
              #hostPort: 4000
              name: mysql-master
          volumes:
          - name: mysql-config
            hostPath:
              path: /localdisk/NFS/mysqlData/master/
          nodeSelector:
            kubernetes.io/hostname: centos-k8s006

---------- end ----------

# Create mysql-master-service.yaml

touch mysql-master-service.yaml

# with the following content:

    apiVersion: v1
    kind: Service
    metadata:
      name: mysql-master
      namespace: default
    spec:
      type: NodePort
      selector:
        app: mysql-master
        release: stable
      ports:
      - name: http
        port: 3306
        nodePort: 31306
        targetPort: 3306

---------- end ----------

cd ../slave/

# Create the mysql-slave.yaml manifest
touch mysql-slave.yaml

# with the following content:

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: mysql-slave
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql-slave
      release: stabel
  template:
    metadata:
      labels:
        app: mysql-slave
        name: mysql-slave
        release: stabel
    spec:
      containers:
      - name: mysql-slave
        image: ub2-citst001.abc.com:5000/mysql-slave
        volumeMounts:
        - name: mysql-config
          mountPath: /usr/data
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "root"
        - name: MYSQL_REPLICATION_USER
          value: "slave"
        - name: MYSQL_REPLICATION_PASSWORD
          value: "slave"
        - name: MYSQL_MASTER_SERVICE_HOST
          value: "mysql-master"
        - name: MASTER_PORT
          value: "3306"
        ports:
        - containerPort: 3306
          name: mysql-slave
      volumes:
      - name: mysql-config
        hostPath:
          path: /localdisk/NFS/mysqlData/slave/
      nodeSelector:
        kubernetes.io/hostname: centos-k8s007

-----------------------------------------------------------------------end-------------------------------------------------------------------

# Create mysql-slave-service.yaml
touch mysql-slave-service.yaml

# with the following content:

apiVersion: v1
kind: Service
metadata:
  name: mysql-slave
  namespace: default
spec:
  type: NodePort
  selector:
    app: mysql-slave
    release: stabel
  ports:
  - name: http
    port: 3306
    nodePort: 31307
    targetPort: 3306

-----------------------------------------------------------------------end-----------------------------------------------------------------
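A Service only routes traffic to pods whose labels contain every key/value pair of its selector. The manifests above spell the release label as "stabel" in every place, so the pairs still match. The sketch below illustrates that matching rule with plain dictionaries; the helper name `selector_matches` is made up for this example and is not part of any Kubernetes client.

```python
def selector_matches(selector, labels):
    """Return True when every key/value pair in selector is present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Values copied from the mysql-master Service and Deployment manifests.
service_selector = {"app": "mysql-master", "release": "stabel"}
pod_labels = {"name": "mysql-master", "app": "mysql-master", "release": "stabel"}

print(selector_matches(service_selector, pod_labels))

# Correcting the spelling in only one of the two files breaks the match.
corrected_selector = {"app": "mysql-master", "release": "stable"}
print(selector_matches(corrected_selector, pod_labels))
```

If the spelling is ever corrected, it has to change in the Deployment selector, the pod template labels and the Service selector together.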

# Create the MySQL master and slave instances:
cd ../master/
kubectl create -f mysql-master.yaml
kubectl create -f mysql-master-service.yaml
cd ../slave/
kubectl create -f mysql-slave.yaml
kubectl create -f mysql-slave-service.yaml

# Check the Deployment status (run on centos-k8s004)
[root@centos-k8s004 slave]# kubectl get deployment
NAME           DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
mysql-master   1         1         1            1           39d
mysql-slave    1         1         1            1           39d
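Whether AVAILABLE has caught up with DESIRED can also be checked mechanically. The sketch below parses a table in the column layout shown above (the layout printed by the kubectl version used here; newer versions print different columns). The function name and the sample text are illustrative only, and the sample sets mysql-slave to 0 available replicas on purpose.

```python
def unavailable_deployments(table_text):
    """Return names of deployments whose AVAILABLE count is below DESIRED."""
    lines = [line.split() for line in table_text.strip().splitlines() if line.strip()]
    header, rows = lines[0], lines[1:]
    desired = header.index("DESIRED")
    available = header.index("AVAILABLE")
    return [row[0] for row in rows if int(row[available]) < int(row[desired])]


# Made-up sample: mysql-slave is deliberately shown as not yet available.
sample = """
NAME           DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
mysql-master   1         1         1            1           39d
mysql-slave    1         1         1            0           39d
"""

print(unavailable_deployments(sample))
```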

# Check the Service status:
[root@centos-k8s004 slave]# kubectl get svc
NAME           CLUSTER-IP        EXTERNAL-IP   PORT(S)          AGE
kubernetes     192.168.0.1       <none>        443/TCP          5d
mysql-master   192.168.45.209    <nodes>       3306:31306/TCP   5h
mysql-slave    192.168.230.192   <nodes>       3306:31307/TCP   5h

# AVAILABLE may take 2 to 15 seconds to catch up with DESIRED, because the pods need time to start. If the two stay out of sync, or AVAILABLE stays at 0 for a long time, use the following command to inspect the details:

[root@centos-k8s004 master]# kubectl describe pod

Name: mysql-master-4291032429-0fzsr

Namespace: default

Node: centos-k8s006/10.239.219.210

Start Time: Wed, 31 Oct 2018 18:56:06 +0800

Labels: name=mysql-master

pod-template-hash=4291032429

Status: Running

IP: 172.17.44.2

Controllers: ReplicaSet/mysql-master-4291032429

Containers:

master:

Container ID: docker://674de0971fe2aa16c7926f345d8e8b2386278b14dedd826653e7347559737e28

Image: ub2-citst001.abc.com:5000/mysql-master

Image ID: docker-pullable://ub2-citst001.abc.com:5000/mysql-master@sha256:bc286c1374a3a5f18ae56bd785a771ffe0fad15567d56f8f67a615c606fb4e0d

Port: 3306/TCP

State: Running

Started: Wed, 31 Oct 2018 18:56:07 +0800

Ready: True

Restart Count: 0

Volume Mounts:

/usr/data from mysql-config (rw)

Environment Variables:

MYSQL_ROOT_PASSWORD: root

MYSQL_REPLICATION_USER: slave

MYSQL_REPLICATION_PASSWORD: slave

Conditions:

Type Status

Initialized True

Ready True

PodScheduled True

Volumes:

mysql-config:

Type: HostPath (bare host directory volume)

Path: /localdisk/NFS/mysqlData/master/

QoS Class: BestEffort

Tolerations: <none>

No events.

Name: mysql-slave-3654103728-0sxsm

Namespace: default

Node: centos-k8s007/10.239.219.209

Start Time: Wed, 31 Oct 2018 18:56:19 +0800

Labels: name=mysql-slave

pod-template-hash=3654103728

Status: Running

IP: 172.17.16.2

Controllers: ReplicaSet/mysql-slave-3654103728

Containers:

slave:

Container ID: docker://d52f4f1e57d6fa6a7c04f1a9ba63fa3f0af778df69a3190c4f35f755f225fb50

Image: ub2-citst001.abc.com:5000/mysql-slave

Image ID: docker-pullable://ub2-citst001.abc.com:5000/mysql-slave@sha256:6a1c7cbb27184b966d2557bf53860daa439b7afda3d4aa5498844d4e66f38f47

Port: 3306/TCP

State: Running

Started: Fri, 02 Nov 2018 13:49:48 +0800

Last State: Terminated

Reason: Completed

Exit Code: 0

Started: Wed, 31 Oct 2018 18:56:20 +0800

Finished: Fri, 02 Nov 2018 13:49:47 +0800

Ready: True

Restart Count: 1

Volume Mounts:

/usr/data from mysql-config (rw)

Environment Variables:

MYSQL_ROOT_PASSWORD: root

MYSQL_REPLICATION_USER: slave

MYSQL_REPLICATION_PASSWORD: slave

MYSQL_MASTER_SERVICE_HOST: centos-k8s006

MASTER_PORT: 4000

Conditions:

Type Status

Initialized True

Ready True

PodScheduled True

Volumes:

mysql-config:

Type: HostPath (bare host directory volume)

Path: /localdisk/NFS/mysqlData/slave/

QoS Class: BestEffort

Tolerations: <none>

No events.

# If the following error appears, fix it as described below:

Error syncing pod, skipping: failed to "StartContainer" for "POD" with ErrImagePull: "image pull failede:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)"

# Fix

The cause is fairly clear: the file /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt is missing. ls -l shows that it is a symlink to /etc/rhsm/ca/redhat-uep.pem, and that target does not exist. Search for the related packages with yum search *rhsm*, then install the python-rhsm-certificates package:

[root@centos-k8s004 master]# yum install python-rhsm-certificates -y

This runs into another problem; yum reports:

python-rhsm-certificates <= 1.20.3-1 is obsoleted by (installed) subscription-manager-rhsm-certificates-1.20.11-1.el7.centos.x86_64

The way out is to remove the subscription-manager-rhsm-certificates package with yum remove subscription-manager-rhsm-certificates -y, then download the python-rhsm-certificates package:

# wget http://mirror.centos.org/centos/7/os/x86_64/Packages/python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm

and install that rpm by hand:

# rpm -ivh python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm

/etc/rhsm/ca/redhat-uep.pem now exists.

On each node, run:

yum install *rhsm* -y

Then delete /etc/docker/seccomp.json, restart once more, and run:

# docker pull registry.access.redhat.com/rhel7/pod-infrastructure:latest

Finally, delete the rc, svc and pod objects created earlier and create them again; after a short while the pods start successfully.
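The root cause above is a dangling symlink: the link exists, but its target does not. A minimal sketch of how that condition can be detected is shown here. It runs in a temporary directory, and the two file names are only stand-ins for redhat-ca.crt and redhat-uep.pem; the helper name is made up for this example.

```python
import os
import tempfile


def is_dangling_symlink(path):
    """True when path is a symlink whose target does not exist."""
    # os.path.exists follows symlinks, so it is False for a broken link.
    return os.path.islink(path) and not os.path.exists(path)


with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "redhat-uep.pem")  # stand-in for the real target
    link = os.path.join(tmp, "redhat-ca.crt")     # stand-in for the real link
    os.symlink(target, link)
    before = is_dangling_symlink(link)   # target is still missing
    open(target, "w").close()            # simulate installing the certificate
    after = is_dangling_symlink(link)    # target now exists

print(before, after)
```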

# On centos-k8s006, docker ps lists the containers, which confirms that the mysql-master instance was created; mysql-slave can be checked the same way

[root@centos-k8s006 master]# docker ps

674de0971fe2 ub2-citst001.abc.com:5000/mysql-master "docker-entrypoint..." 5 weeks ago Up 5 weeks k8s_master.1fa78e47_mysql-master-4291032429-0fzsr_default_914f7535-dcfb-11e8-9eb8-005056a654f2_3462901b

220e4d37915d registry.access.redhat.com/rhel7/pod-infrastructure:latest "/usr/bin/pod" 5 weeks ago Up 5 weeks 0.0.0.0:4000->3306/tcp k8s_POD.62220e6f_mysql-master-4291032429-0fzsr_default_914f7535-dcfb-11e8-9eb8-005056a654f2_d0d62756

# The container can now be managed through kubectl
[root@centos-k8s006 master]# kubectl exec -it mysql-master-4291032429-0fzsr -- /bin/bash
root@mysql-master-4291032429-0fzsr:/#

# Create a database on the master and check whether it is replicated to the slave
root@mysql-master-4291032429-0fzsr:/# mysql -u root -proot
mysql> create database test_database character set utf8;
mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| test_database      |
| mysql              |
| performance_schema |
+--------------------+

# Leave the container:
Ctrl + p, then Ctrl + q

# Enter mysql-slave and list its databases:
[root@centos-k8s006 master]# kubectl exec -it mysql-slave-3654103728-0sxsm -- /bin/bash
root@mysql-slave-3654103728-0sxsm:/# mysql -u root -proot
mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| mysql              |
| performance_schema |
| test_database      |
+--------------------+

# test_database shows up on the slave, so replication works.
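The manual check above (create a database on the master, then look for it on the slave) can be sketched as a small helper that works with any Python DB-API connection. This is only an illustration: `list_databases`, `replicated`, and the `FakeConn`/`FakeCursor` stand-ins are invented for this example so that it runs without a database.

```python
def list_databases(conn):
    """Return the set of database names visible on a DB-API connection."""
    cur = conn.cursor()
    cur.execute("SHOW DATABASES")
    names = {row[0] for row in cur.fetchall()}
    cur.close()
    return names


def replicated(master_conn, slave_conn, db_name):
    """True when db_name exists on both the master and the slave."""
    return db_name in list_databases(master_conn) and db_name in list_databases(slave_conn)


class FakeCursor:
    """Stand-in cursor that returns a fixed list of database names."""

    def __init__(self, names):
        self._names = names

    def execute(self, sql):
        self.last_sql = sql

    def fetchall(self):
        return [(name,) for name in self._names]

    def close(self):
        pass


class FakeConn:
    """Stand-in connection used only to demonstrate the helper."""

    def __init__(self, names):
        self._names = names

    def cursor(self):
        return FakeCursor(self._names)


master = FakeConn(["information_schema", "test_database", "mysql", "performance_schema"])
slave = FakeConn(["information_schema", "mysql", "performance_schema", "test_database"])
print(replicated(master, slave, "test_database"))
```

Against the real cluster, the two fake connections would be replaced by real ones, for example PyMySQL connections to NodePorts 31306 and 31307 on a node address; that wiring is an assumption and is not shown in the original notes.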


beautifulsoup4==4.4.1

certifi==2017.4.17

cffi==1.11.5

chardet==3.0.2

configparser==3.5.0

cryptography==2.3.1

defusedxml==0.5.0

Django==1.11.15

django-auth-ldap==1.2.6

djangorestframework==3.8.2

djangorestframework-xml==1.3.0

enum34==1.1.6

Ghost.py==0.2.3

gitdb2==2.0.0

GitPython==2.1.1

idna==2.5

ipaddress==1.0.22

jira==1.0.10

multi-key-dict==2.0.3

numpy==1.15.2

oauthlib==2.0.2

ordereddict==1.1

pbr==0.11.1

pyasn1==0.4.4

pyasn1-modules==0.2.2

pycparser==2.18

PyMySQL==0.9.2

python-ldap==3.1.0

pytz==2018.5

requests==2.18.1

requests-oauthlib==0.8.0

requests-toolbelt==0.8.0

scipy==1.1.0

selenium==3.4.3

simplejson==3.16.0

six==1.10.0

smmap2==2.0.1

threadpool==1.3.2

urllib3==1.21.1

python-jenkins==0.4.13

uWSGI


~~~~~~~~~~~~~~~~

[global]
timeout = 60
index-url = http://pypi.douban.com/simple
trusted-host = pypi.douban.com

#!/bin/bash
touch /root/www2/oss2/log/touchforlogrotate
# sync the source tree from Perforce (requires the p4 client inside the image)
p4 sync
uwsgi --ini /root/uwsgi.ini
# uwsgi goes to the background (daemonize in uwsgi.ini), so keep the container's main process alive
tail -f /dev/null

[uwsgi]
socket=0.0.0.0:8001
chdir=/root/www2/oss2/
master=true
processes=4
threads=2
module=oss2.wsgi
touch-logreopen = /root/www2/oss2/log/touchforlogrotate
daemonize = /root/www2/oss2/log/log.log
wsgi-file =/root/www2/oss2/website/wsgi.py
py-autoreload=1

FROM centos
MAINTAINER by miaohenx
ENV http_proxy http://child-prc.abc.com:913/
ENV https_proxy https://child-prc.abc.com:913/
RUN mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup \
 && curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.163.com/.help/CentOS7-Base-163.repo \
 && yum makecache \
 && yum -y install epel-release zlib-devel bzip2-devel openssl-devel ncurses-devel sqlite-devel readline-devel tk-devel gcc make openldap-devel \
 && curl -O https://www.python.org/ftp/python/3.6.6/Python-3.6.6.tar.xz \
 && tar -xvJf Python-3.6.6.tar.xz \
 && cd Python-3.6.6 && ./configure --prefix=/usr/local/python3 && make && make install \
 && ln -s /usr/local/python3/bin/python3 /usr/bin/python3.6 \
 && ln -s /usr/local/python3/bin/python3 /usr/bin/python3 \
 && cd .. && rm -rf Python-3.6.*
RUN yum -y install python36-devel python36-setuptools && easy_install-3.6 pip && mkdir /root/.pip
COPY 1.txt /root/
COPY uwsgi.ini /root/
COPY www2 /root/www2/
COPY start_script.sh /root/
COPY pip.conf /root/.pip/
RUN pip3 install -r /root/1.txt && chmod +x /root/start_script.sh
EXPOSE 8000
ENTRYPOINT ["/root/start_script.sh"]

- docker build -t oss-server . # note the trailing dot (the build context)

- docker images

I. Advantages of Nginx:

1) It works at layer 7 of the network stack, so it can apply traffic-splitting policies to HTTP applications, for example by domain name or directory structure. Its regular-expression rules are more powerful and flexible than HAProxy's, which is one of the main reasons it is so widely used; on this point alone Nginx fits far more scenarios than LVS.

2) Nginx depends very little on network stability; in theory, if a backend can be pinged, Nginx can balance load to it. This is one of its strengths. LVS, by contrast, depends heavily on network stability.

3) Nginx is fairly simple to install and configure and convenient to test, and it logs essentially every error. LVS takes considerably longer to configure and test, and it depends more on the network.

4) It withstands high load stably; on decent hardware it generally supports tens of thousands of concurrent connections, although its load capacity is somewhat lower than that of LVS.

5) Nginx can detect failures inside a server through its port, for example from the status code a page returns or from a timeout, and it resubmits a failed request to another node. Its drawback is that it cannot check health through a URL. For example, if a user is uploading a file and the node handling the upload fails midway, Nginx switches the upload to another server and processes it again, whereas LVS simply drops the connection; for a very large or very important file, the user may be unhappy about that.

6) Nginx is not only an excellent load balancer and reverse proxy but also a powerful web application server. LNMP has been a very popular web stack in recent years and is stable under high traffic.

7) Nginx is increasingly mature as a reverse-proxy cache for the web and is faster than a traditional Squid server, so it is worth considering as a reverse-proxy accelerator.

8) Nginx can serve as a mid-tier reverse proxy, where it has almost no rival. The only comparable software is lighttpd, but lighttpd does not yet offer Nginx's full feature set, its configuration is less clear and readable, and its community material is far less active.

9) Nginx can also serve static pages and images, where its performance is likewise unrivaled. The Nginx community is very active and there are many third-party modules.

Disadvantages of Nginx:

1) Nginx supports only HTTP, HTTPS and email protocols, which narrows its range of application. (Releases from 1.9.0 onward can also proxy generic TCP through the stream module.)

2) Health checks of backend servers are port-based only; checks through a URL are not supported. Session persistence is not supported directly, but ip_hash can work around this.

II. LVS: uses Linux kernel clustering to implement a high-performance, highly available load balancer with good scalability, reliability and manageability.

Advantages of LVS:

1) Strong load capacity. It works at layer 4 and only dispatches requests, generating no traffic of its own. This makes it the best-performing load-balancing software, with low memory and CPU consumption.

2) Few configuration options, which is both a drawback and an advantage: with little to configure, it needs little attention, which greatly reduces the chance of human error.

3) Stable operation. It has strong load capacity and a complete hot-standby scheme such as LVS+Keepalived, although in our projects we mostly use LVS/DR+Keepalived.

4) No traffic. LVS only dispatches requests, and the traffic does not leave through LVS itself, so the balancer's I/O performance is not affected by heavy traffic.

5) Wide range of application. Because LVS works at layer 4, it can balance almost any application, including HTTP, databases and online chat rooms.

Disadvantages of LVS:

1) The software itself does not handle regular expressions, so it cannot separate dynamic and static content; many sites now need this, and this is where Nginx/HAProxy+Keepalived has the advantage.

2) For a large site, LVS/DR+Keepalived is complicated to implement, especially with Windows Server machines behind it; deployment, configuration and maintenance are all more complex. Nginx/HAProxy+Keepalived is much simpler by comparison.

III. Characteristics of HAProxy:

1) HAProxy also supports virtual hosts.

2) HAProxy makes up for some of Nginx's shortcomings: it supports session persistence and cookie-based routing, and it can check the state of a backend server by fetching a specified URL.

3) Like LVS, HAProxy is purely a load balancer. In terms of efficiency alone, HAProxy balances load faster than Nginx and also handles concurrency better.

4) HAProxy supports load balancing over TCP, so it can balance MySQL reads and health-check and balance the backend MySQL nodes; LVS+Keepalived can also be used to balance a MySQL master/slave setup.

5) HAProxy offers many balancing strategies. Its algorithms currently include the following 8:

1> roundrobin: simple round robin, which practically every load balancer provides;

2> static-rr: by weight; worth attention;

3> leastconn: the server with the fewest connections is served first; worth attention;

4> source: by the source IP of the request, similar to Nginx's ip_hash; we use it as one way to solve the session problem; worth attention;

5> uri: by the request URI;

6> url_param: by a URL parameter of the request ('balance url_param' requires a URL parameter name);

7> hdr(name): pins each HTTP request according to the named HTTP request header;

8> rdp-cookie(name): pins and hashes each TCP request according to cookie(name).
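To make the first few strategies concrete, here is a deliberately simplified Python sketch of roundrobin, leastconn and source. It only shows the selection idea; HAProxy's real implementations also handle weights, server state and their own hashing, none of which is modelled here. The connection counts and the client IP are made up, and the server names are taken from the backend hosts used later in this document.

```python
import itertools
import zlib


def roundrobin(servers):
    """Yield servers in turn, forever."""
    return itertools.cycle(servers)


def leastconn(active):
    """Pick the server with the fewest active connections."""
    return min(active, key=active.get)


def source(client_ip, servers):
    """Map a client IP to a fixed server through a stable hash."""
    return servers[zlib.crc32(client_ip.encode()) % len(servers)]


servers = ["centos-k8s005", "centos-k8s006", "centos-k8s007"]

rr = roundrobin(servers)
print([next(rr) for _ in range(4)])

# Made-up connection counts for illustration.
print(leastconn({"centos-k8s005": 12, "centos-k8s006": 3, "centos-k8s007": 7}))

# The same client IP always lands on the same server.
print(source("10.0.0.8", servers) == source("10.0.0.8", servers))
```

The source strategy is what gives session stickiness without cookies: as long as the server list does not change, a client keeps reaching the same backend.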

yum install -y haproxy keepalived # install haproxy and keepalived
vim /etc/keepalived/keepalived.conf # configure keepalived; replace the content with the following

! Configuration File for keepalived
global_defs {
    notification_email { # recipient addresses
        root@localhost
    }
    notification_email_from root@localhost # sender address
    smtp_server 127.0.0.1 # mail server address
    smtp_connect_timeout 30 # mail server connection timeout: 30 seconds
    router_id LVS_DEVEL # identifier of the machine running keepalived
}
vrrp_instance VI_1 { # defines a VRRP instance; the name is arbitrary
    state MASTER # role of this node: MASTER is the primary, BACKUP is the standby
    interface ens160 # network interface monitored for HA
    virtual_router_id 51 # virtual router ID; master and backup of the same VRRP instance must use the same ID
    priority 100 # node priority; the higher number wins, and the master must be higher than the backup
    advert_int 1 # interval of the sync check between master and backup, in seconds
    authentication { # authentication type and password shared by master and backup
        auth_type PASS
        auth_pass a23c7f32dfb519d6a5dc67a4b2ff8f5e
    }
    virtual_ipaddress {
        10.239.219.157 # virtual IP address
    }
}
vrrp_instance VI_2 {
    state BACKUP
    interface ens160
    virtual_router_id 52
    priority 99
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 56f7663077966379d4106e8ee30eb1a5
    }
    virtual_ipaddress {
        10.239.219.156
    }
}

! Configuration File for keepalived
global_defs {
    notification_email { # recipient addresses
        root@localhost
    }
    notification_email_from root@localhost # sender address
    smtp_server 127.0.0.1 # mail server address
    smtp_connect_timeout 30 # mail server connection timeout: 30 seconds
    router_id LVS_DEVEL # identifier of the machine running keepalived
}
vrrp_instance VI_1 { # defines a VRRP instance; the name is arbitrary
    state BACKUP # role of this node: MASTER is the primary, BACKUP is the standby
    interface ens160 # network interface monitored for HA
    virtual_router_id 51 # virtual router ID; master and backup of the same VRRP instance must use the same ID
    priority 99 # node priority; the higher number wins, and the master must be higher than the backup
    advert_int 1 # interval of the sync check between master and backup, in seconds
    authentication { # authentication type and password shared by master and backup
        auth_type PASS
        auth_pass a23c7f32dfb519d6a5dc67a4b2ff8f5e
    }
    virtual_ipaddress {
        10.239.219.157 # virtual IP address
    }
}
vrrp_instance VI_2 {
    state MASTER
    interface ens160
    virtual_router_id 52
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 56f7663077966379d4106e8ee30eb1a5
    }
    virtual_ipaddress {
        10.239.219.156
    }
}
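The two configurations mirror each other: each node is MASTER for one virtual IP and BACKUP for the other, so both nodes carry traffic and either can take over both addresses. The sketch below models only the priority rule (the live node with the highest priority holds the VIP); it ignores preemption settings, timers and tie-breaking. The node names `node1` and `node2` are placeholders, since the host names of the two load balancers are not given in this part of the notes.

```python
def vip_owner(priorities, alive):
    """Return the live node with the highest priority for one VRRP instance, or None."""
    candidates = {node: prio for node, prio in priorities.items() if node in alive}
    if not candidates:
        return None
    return max(candidates, key=candidates.get)


# Priorities copied from the two configurations; node names are placeholders.
instances = {
    "VI_1 (10.239.219.157)": {"node1": 100, "node2": 99},
    "VI_2 (10.239.219.156)": {"node1": 99, "node2": 100},
}

# First with both nodes up, then with node1 down.
for alive in ({"node1", "node2"}, {"node2"}):
    owners = {name: vip_owner(prios, alive) for name, prios in instances.items()}
    print(sorted(alive), owners)
```

With both nodes up, each VIP sits on a different node; when one node fails, the survivor holds both VIPs.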

# to have these messages end up in /var/log/haproxy.log you will

# need to:

#

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

#

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

# local2.* /var/log/haproxy.log

#

    log 127.0.0.1 local2 # archive the logs through rsyslog: add 'local2.* /var/log/haproxy' to /etc/rsyslog.conf, enable the four directives $ModLoad imudp, $UDPServerRun 514, $ModLoad imtcp and $InputTCPServerRun 514, then restart the rsyslog process

    chroot /var/lib/haproxy # working directory of the haproxy process

    pidfile /var/run/haproxy.pid # pid file

    maxconn 4000 # maximum number of concurrent connections

    user haproxy # user that runs haproxy

    group haproxy # group that runs haproxy

    daemon # run as a daemon, that is, in the background

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

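defaults # default settings section
    mode http # working mode; a build from source defaults to tcp
    log global # use the global log settings
    option httplog # log HTTP requests in detail
    option dontlognull # do not log connections that carry no data, such as health-check probes
    option http-server-close # actively close the server-side connection
    option forwardfor except 127.0.0.0/8 # pass the client IP on to the backend real servers
    option redispatch # with cookie-based session persistence, redirect the session to another server when the server holding it fails
    retries 3 # number of retries
    timeout http-request 10s # maximum time to wait for a complete HTTP request from the client
    timeout queue 1m # how long a request may wait in the queue
    timeout connect 10s # how long haproxy waits when connecting to a backend server
    timeout client 1m # inactivity timeout on the client side
    timeout server 1m # inactivity timeout on the server side
    timeout http-keep-alive 10s # keep-alive timeout
    timeout check 10s # health-check timeout
    maxconn 3000 # maximum number of connections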

#---------------------------------------------------------------------

# main frontend which proxys to the backends

#---------------------------------------------------------------------

frontend webserver *:8000 # OSS server
    acl url_static path_beg -i /static /images /javascript /stylesheets # match paths that begin with /static, /images and so on, case-insensitively
    acl url_static path_end -i .jpg .gif .png .css .js .html
    acl url_static hdr_beg(host) -i img. video. download. ftp. imgs. image.
    acl url_dynamic path_end .php .jsp
    use_backend static if url_static # requests that match the url_static ACL go to the backend real-server group named static
    use_backend dynamic if url_dynamic
    default_backend dynamic # requests that match none of the ACLs above go to the dynamic group

#---------------------------------------------------------------------

# static backend for serving up images, stylesheets and such

#---------------------------------------------------------------------

backend static # defines a backend real server group named static

balance roundrobin # round robin; supports dynamic weight changes and slow start

server static_1 centos-k8s005:8000 check inter 3000 fall 3 rise 1 maxconn 30000

server static_2 centos-k8s006:8000 check inter 3000 fall 3 rise 1 maxconn 30000

server static_3 centos-k8s007:8000 check inter 3000 fall 3 rise 1 maxconn 30000

# server static_Error :8080 backup check # requests are scheduled to this server only when every other server in the group is unavailable

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend dynamic

cookie cookie_name insert nocache # bind sessions to servers with an inserted cookie, and mark the response so that caches do not store it

balance roundrobin

server dynamic1 centos-k8s005:8000 check inter 3000 fall 3 rise 1 maxconn 1000 cookie dynamic1

server dynamic2 centos-k8s006:8000 check inter 3000 fall 3 rise 1 maxconn 1000 cookie dynamic2

server dynamic3 centos-k8s007:8000 check inter 3000 fall 3 rise 1 maxconn 1000 cookie dynamic3 # defines a server in the dynamic group named dynamic3: its health is checked every 3000 ms; after 3 consecutive failed checks the server is removed; while offline, a single successful check brings it back online; it accepts at most 1000 concurrent connections and its cookie value is dynamic3

frontend kibana *:5602 #Kibana

acl url_static path_beg -i /static /images /javascript /stylesheets # match paths beginning with /static, /images, etc., case-insensitively

acl url_static path_end -i .jpg .gif .png .css .js .html

acl url_static hdr_beg(host) -i img. video. download. ftp. imgs. image.

acl url_dynamic path_end .php .jsp

use_backend static2 if url_static # requests matching the url_static ACL are forwarded to the real server group named static2

use_backend dynamic2 if url_dynamic

default_backend dynamic2 # requests matching none of the ACLs above are forwarded to the dynamic2 group

#---------------------------------------------------------------------

# static backend for serving up images, stylesheets and such

#---------------------------------------------------------------------

backend static2 # defines a backend real server group named static2

balance roundrobin # round robin; supports dynamic weight changes and slow start

server static_1 centos-k8s003:5602 check inter 3000 fall 3 rise 1 maxconn 30000

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend dynamic2

cookie cookie_name insert nocache # bind sessions to servers with an inserted cookie, and mark the response so that caches do not store it

balance roundrobin

server dynamic1 centos-k8s003:5602 check inter 3000 fall 3 rise 1 maxconn 1000 cookie dynamic1

frontend dashboard *:8080 # kubernetes-dashboard (renamed from kibana, which is already the name of the frontend on port 5602)

acl url_static path_beg -i /static /images /javascript /stylesheets # match paths beginning with /static, /images, etc., case-insensitively

acl url_static path_end -i .jpg .gif .png .css .js .html

acl url_static hdr_beg(host) -i img. video. download. ftp. imgs. image.

acl url_dynamic path_end .php .jsp

use_backend static3 if url_static # requests matching the url_static ACL are forwarded to the real server group named static3

use_backend dynamic3 if url_dynamic

default_backend dynamic3 # requests matching none of the ACLs above are forwarded to the dynamic3 group

#---------------------------------------------------------------------

# static backend for serving up images, stylesheets and such

#---------------------------------------------------------------------

backend static3 # defines a backend real server group named static3

balance roundrobin # round robin; supports dynamic weight changes and slow start

server static_1 centos-k8s004:8080 check inter 3000 fall 3 rise 1 maxconn 30000

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend dynamic3

cookie cookie_name insert nocache # bind sessions to servers with an inserted cookie, and mark the response so that caches do not store it

balance roundrobin

server dynamic1 centos-k8s004:8080 check inter 3000 fall 3 rise 1 maxconn 1000 cookie dynamic1

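listen state # standalone section that needs no frontend: defines the haproxy statistics page

bind *:8001 # listening address

mode http # layer-7 HTTP mode: application-layer data is inspected, so layer-7 filtering, processing and rewriting are available

stats enable # enable the statistics page

stats hide-version # hide the version number on the statistics page

stats uri /haproxyadmin?stats # access path of the statistics page

stats auth admin:root # username:password authentication

stats admin if TRUE # enable the admin operations on the page (the condition TRUE always holds)

acl num1 src 10.239.0.0/16 # defines an ACL named num1 that matches source addresses in 10.239.0.0/16

tcp-request content accept if num1 # allow access when this rule matches

tcp-request content reject # reject all other access<wiz_code_mirror>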
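The `log 127.0.0.1 local2` line in the global section only produces a log file if rsyslog is set up as its comment describes. A minimal sketch of that fragment for `/etc/rsyslog.conf` follows, using the legacy directive syntax and the target path `/var/log/haproxy` named in that comment. Whether the TCP listener is actually needed is an assumption to check against your setup, since HAProxy sends to an IP log target over UDP.

```
# Accept syslog messages over UDP on port 514 (HAProxy's "log 127.0.0.1 local2" uses UDP)
$ModLoad imudp
$UDPServerRun 514

# Accept syslog messages over TCP on port 514 (listed in the original notes; likely optional for HAProxy)
$ModLoad imtcp
$InputTCPServerRun 514

# Write everything logged to the local2 facility into the HAProxy log file
local2.*    /var/log/haproxy
```

After editing the file, restart the rsyslog process so that the listeners and the `local2` rule take effect.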
