2.3 AutoEncoder

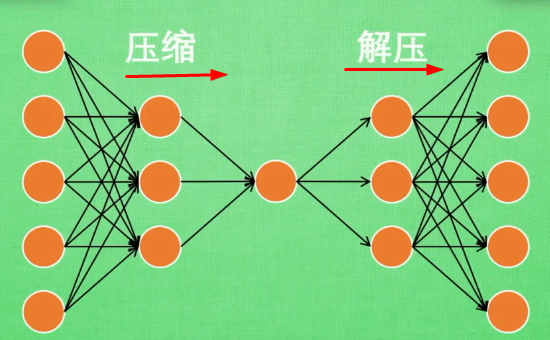

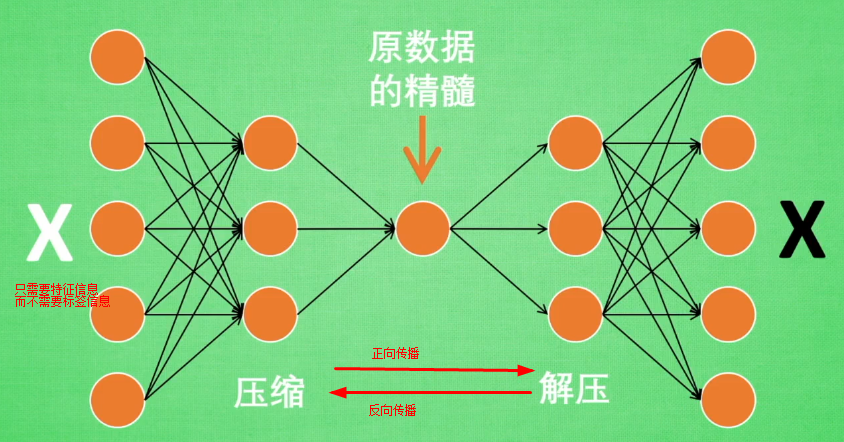

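An AutoEncoder consists of a compression step followed by a decompression step. It is an unsupervised dimensionality-reduction technique.

A neural network may have to take in a huge amount of information, and sometimes the input runs to tens of millions of values. Compression extracts the most representative information from the original image and shrinks the size of the input. The reduced data is then fed into the neural network, which makes learning much easier.

This is where an autoencoder comes in. It can be classed as unsupervised learning and, like PCA, it can reduce the dimensionality of the features.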
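To make the PCA comparison concrete, here is a minimal NumPy sketch (not part of the original code) that treats PCA as a linear encoder and decoder: `encode` compresses each sample to a few numbers, and `decode` reconstructs the input from them. The function names `pca_fit`, `encode`, and `decode` and the toy data are assumptions made for illustration.

```python
import numpy as np


def pca_fit(data, n_components):
    """Return the data mean and the top principal directions (as columns)."""
    mean = data.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(data - mean, full_matrices=False)
    return mean, vt[:n_components].T


def encode(data, mean, components):
    """Compress: project the centered data onto the principal directions."""
    return (data - mean) @ components


def decode(codes, mean, components):
    """Decompress: map the low-dimensional codes back to the input space."""
    return codes @ components.T + mean


rng = np.random.default_rng(0)
# Hypothetical toy data: 200 samples in 5-D that vary along only 2 directions.
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 5))
data = latent @ mixing

mean, components = pca_fit(data, n_components=2)
codes = encode(data, mean, components)
restored = decode(codes, mean, components)

print(codes.shape)  # (200, 2)
print("reconstruction MSE:", np.mean((data - restored) ** 2))
```

Because the toy data only varies along two directions, two components reconstruct it almost exactly. An autoencoder replaces these two linear maps with learned, nonlinear layers.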

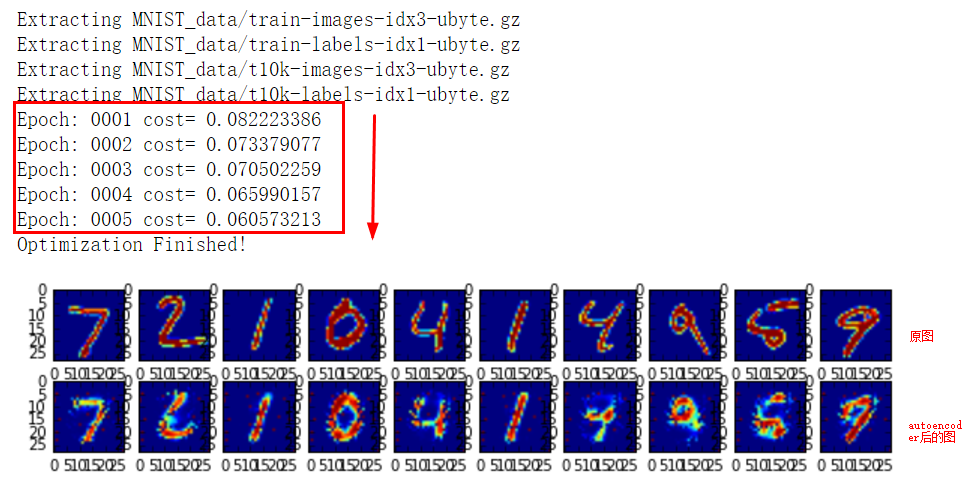

AutoEncoder code for handwritten digit recognition

    # Note: this listing targets the TensorFlow 1.x API (tf.placeholder, tf.Session).
    from __future__ import division, print_function, absolute_import

    import tensorflow as tf
    import numpy as np
    import matplotlib.pyplot as plt

    # Import MNIST data
    from tensorflow.examples.tutorials.mnist import input_data
    mnist = input_data.read_data_sets('MNIST_data', one_hot=False)

    # Visualize decoder setting
    # Parameters
    learning_rate = 0.01
    training_epochs = 5
    batch_size = 256
    display_step = 1
    examples_to_show = 10

    # Network Parameters
    n_input = 784  # MNIST data input (img shape: 28*28)

    # tf Graph input (only pictures)
    X = tf.placeholder("float", [None, n_input])

    # hidden layer settings
    n_hidden_1 = 256  # 1st layer num features
    n_hidden_2 = 128  # 2nd layer num features
    weights = {
        'encoder_h1': tf.Variable(tf.random_normal([n_input, n_hidden_1])),
        'encoder_h2': tf.Variable(tf.random_normal([n_hidden_1, n_hidden_2])),
        'decoder_h1': tf.Variable(tf.random_normal([n_hidden_2, n_hidden_1])),
        'decoder_h2': tf.Variable(tf.random_normal([n_hidden_1, n_input])),
    }
    biases = {
        'encoder_b1': tf.Variable(tf.random_normal([n_hidden_1])),
        'encoder_b2': tf.Variable(tf.random_normal([n_hidden_2])),
        'decoder_b1': tf.Variable(tf.random_normal([n_hidden_1])),
        'decoder_b2': tf.Variable(tf.random_normal([n_input])),
    }

    # Building the encoder
    def encoder(x):
        # Encoder hidden layer with sigmoid activation #1
        layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['encoder_h1']),
                                       biases['encoder_b1']))
        # Encoder hidden layer with sigmoid activation #2
        layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['encoder_h2']),
                                       biases['encoder_b2']))
        return layer_2

    # Building the decoder
    def decoder(x):
        # Decoder hidden layer with sigmoid activation #1
        layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['decoder_h1']),
                                       biases['decoder_b1']))
        # Decoder hidden layer with sigmoid activation #2
        layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['decoder_h2']),
                                       biases['decoder_b2']))
        return layer_2

    """
    # Visualize encoder setting
    # Parameters
    learning_rate = 0.01    # 0.01 this learning rate will be better! Tested
    training_epochs = 10
    batch_size = 256
    display_step = 1

    # Network Parameters
    n_input = 784  # MNIST data input (img shape: 28*28)

    # tf Graph input (only pictures)
    X = tf.placeholder("float", [None, n_input])

    # hidden layer settings
    n_hidden_1 = 128
    n_hidden_2 = 64
    n_hidden_3 = 10
    n_hidden_4 = 2  # 2D show

    weights = {
        'encoder_h1': tf.Variable(tf.truncated_normal([n_input, n_hidden_1])),
        'encoder_h2': tf.Variable(tf.truncated_normal([n_hidden_1, n_hidden_2])),
        'encoder_h3': tf.Variable(tf.truncated_normal([n_hidden_2, n_hidden_3])),
        'encoder_h4': tf.Variable(tf.truncated_normal([n_hidden_3, n_hidden_4])),
        'decoder_h1': tf.Variable(tf.truncated_normal([n_hidden_4, n_hidden_3])),
        'decoder_h2': tf.Variable(tf.truncated_normal([n_hidden_3, n_hidden_2])),
        'decoder_h3': tf.Variable(tf.truncated_normal([n_hidden_2, n_hidden_1])),
        'decoder_h4': tf.Variable(tf.truncated_normal([n_hidden_1, n_input])),
    }
    biases = {
        'encoder_b1': tf.Variable(tf.random_normal([n_hidden_1])),
        'encoder_b2': tf.Variable(tf.random_normal([n_hidden_2])),
        'encoder_b3': tf.Variable(tf.random_normal([n_hidden_3])),
        'encoder_b4': tf.Variable(tf.random_normal([n_hidden_4])),
        'decoder_b1': tf.Variable(tf.random_normal([n_hidden_3])),
        'decoder_b2': tf.Variable(tf.random_normal([n_hidden_2])),
        'decoder_b3': tf.Variable(tf.random_normal([n_hidden_1])),
        'decoder_b4': tf.Variable(tf.random_normal([n_input])),
    }

    def encoder(x):
        layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['encoder_h1']),
                                       biases['encoder_b1']))
        layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['encoder_h2']),
                                       biases['encoder_b2']))
        layer_3 = tf.nn.sigmoid(tf.add(tf.matmul(layer_2, weights['encoder_h3']),
                                       biases['encoder_b3']))
        layer_4 = tf.add(tf.matmul(layer_3, weights['encoder_h4']),
                         biases['encoder_b4'])
        return layer_4

    def decoder(x):
        layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['decoder_h1']),
                                       biases['decoder_b1']))
        layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['decoder_h2']),
                                       biases['decoder_b2']))
        layer_3 = tf.nn.sigmoid(tf.add(tf.matmul(layer_2, weights['decoder_h3']),
                                       biases['decoder_b3']))
        layer_4 = tf.nn.sigmoid(tf.add(tf.matmul(layer_3, weights['decoder_h4']),
                                       biases['decoder_b4']))
        return layer_4
    """

    # Construct model
    encoder_op = encoder(X)
    decoder_op = decoder(encoder_op)

    # Prediction
    y_pred = decoder_op
    # Targets (Labels) are the input data.
    y_true = X

    # Define loss and optimizer, minimize the squared error
    cost = tf.reduce_mean(tf.pow(y_true - y_pred, 2))
    optimizer = tf.train.AdamOptimizer(learning_rate).minimize(cost)

    # Launch the graph
    with tf.Session() as sess:
        # tf.initialize_all_variables() is no longer valid from
        # 2017-03-02 if using tensorflow >= 0.12
        if int((tf.__version__).split('.')[1]) < 12 and int((tf.__version__).split('.')[0]) < 1:
            init = tf.initialize_all_variables()
        else:
            init = tf.global_variables_initializer()
        sess.run(init)
        total_batch = int(mnist.train.num_examples/batch_size)
        # Training cycle
        for epoch in range(training_epochs):
            # Loop over all batches
            for i in range(total_batch):
                batch_xs, batch_ys = mnist.train.next_batch(batch_size)  # max(x) = 1, min(x) = 0
                # Run optimization op (backprop) and cost op (to get loss value)
                _, c = sess.run([optimizer, cost], feed_dict={X: batch_xs})
            # Display logs per epoch step
            if epoch % display_step == 0:
                print("Epoch:", '%04d' % (epoch+1),
                      "cost=", "{:.9f}".format(c))

        print("Optimization Finished!")

        # Applying encode and decode over test set
        encode_decode = sess.run(
            y_pred, feed_dict={X: mnist.test.images[:examples_to_show]})
        # Compare original images with their reconstructions
        f, a = plt.subplots(2, 10, figsize=(10, 2))
        for i in range(examples_to_show):
            a[0][i].imshow(np.reshape(mnist.test.images[i], (28, 28)))
            a[1][i].imshow(np.reshape(encode_decode[i], (28, 28)))
        plt.show()

        # encoder_result = sess.run(encoder_op, feed_dict={X: mnist.test.images})
        # plt.scatter(encoder_result[:, 0], encoder_result[:, 1], c=mnist.test.labels)
        # plt.colorbar()
        # plt.show()
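The network built above can be sanity-checked without TensorFlow. The following NumPy sketch assumes random weights and a fake batch of four images rather than trained parameters or real MNIST data. It pushes the batch through the same 784 → 256 → 128 → 256 → 784 stack of sigmoid layers and computes the same mean squared reconstruction error as `cost`. The helper names `sigmoid` and `dense_sigmoid` are illustrative.

```python
import numpy as np


def sigmoid(z):
    """Element-wise logistic function."""
    return 1.0 / (1.0 + np.exp(-z))


def dense_sigmoid(x, w, b):
    """One fully connected layer followed by a sigmoid, like each layer above."""
    return sigmoid(x @ w + b)


rng = np.random.default_rng(0)
n_input, n_hidden_1, n_hidden_2 = 784, 256, 128

# Layer widths for encoder (784 -> 256 -> 128) then decoder (128 -> 256 -> 784).
sizes = [n_input, n_hidden_1, n_hidden_2, n_hidden_1, n_input]
# Random, untrained weights and biases (assumption: stand-ins for tf.Variable values).
params = [(rng.normal(size=(n_in, n_out)), rng.normal(size=n_out))
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]

# Fake batch of 4 "images" with pixel values in [0, 1), like normalized MNIST.
batch = rng.random((4, n_input))

hidden = batch
shapes = []
for w, b in params:
    hidden = dense_sigmoid(hidden, w, b)
    shapes.append(hidden.shape)

# Same formula as tf.reduce_mean(tf.pow(y_true - y_pred, 2)).
cost = np.mean((batch - hidden) ** 2)

print(shapes)
print("untrained reconstruction cost:", cost)
```

The output of the last layer has the same shape as the input, which is what allows the input itself to serve as the training target.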

Using an AutoEncoder for PCA-like dimensionality reduction

Code:

    # Note: this listing targets the TensorFlow 1.x API (tf.placeholder, tf.Session).
    from __future__ import division, print_function, absolute_import

    import tensorflow as tf
    import numpy as np
    import matplotlib.pyplot as plt

    # Import MNIST data
    from tensorflow.examples.tutorials.mnist import input_data
    mnist = input_data.read_data_sets('MNIST_data', one_hot=False)

    """
    # Visualize decoder setting
    # Parameters
    learning_rate = 0.01
    training_epochs = 5
    batch_size = 256
    display_step = 1
    examples_to_show = 10

    # Network Parameters
    n_input = 784  # MNIST data input (img shape: 28*28)

    # tf Graph input (only pictures)
    X = tf.placeholder("float", [None, n_input])

    # hidden layer settings
    n_hidden_1 = 256  # 1st layer num features
    n_hidden_2 = 128  # 2nd layer num features
    weights = {
        'encoder_h1': tf.Variable(tf.random_normal([n_input, n_hidden_1])),
        'encoder_h2': tf.Variable(tf.random_normal([n_hidden_1, n_hidden_2])),
        'decoder_h1': tf.Variable(tf.random_normal([n_hidden_2, n_hidden_1])),
        'decoder_h2': tf.Variable(tf.random_normal([n_hidden_1, n_input])),
    }
    biases = {
        'encoder_b1': tf.Variable(tf.random_normal([n_hidden_1])),
        'encoder_b2': tf.Variable(tf.random_normal([n_hidden_2])),
        'decoder_b1': tf.Variable(tf.random_normal([n_hidden_1])),
        'decoder_b2': tf.Variable(tf.random_normal([n_input])),
    }

    # Building the encoder
    def encoder(x):
        # Encoder hidden layer with sigmoid activation #1
        layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['encoder_h1']),
                                       biases['encoder_b1']))
        # Encoder hidden layer with sigmoid activation #2
        layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['encoder_h2']),
                                       biases['encoder_b2']))
        return layer_2

    # Building the decoder
    def decoder(x):
        # Decoder hidden layer with sigmoid activation #1
        layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['decoder_h1']),
                                       biases['decoder_b1']))
        # Decoder hidden layer with sigmoid activation #2
        layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['decoder_h2']),
                                       biases['decoder_b2']))
        return layer_2
    """

    # Visualize encoder setting
    # Parameters
    learning_rate = 0.01    # 0.01 this learning rate will be better! Tested
    training_epochs = 10
    batch_size = 256
    display_step = 1

    # Network Parameters
    n_input = 784  # MNIST data input (img shape: 28*28)

    # tf Graph input (only pictures)
    X = tf.placeholder("float", [None, n_input])

    # hidden layer settings
    n_hidden_1 = 128
    n_hidden_2 = 64
    n_hidden_3 = 10
    n_hidden_4 = 2  # 2D show

    weights = {
        'encoder_h1': tf.Variable(tf.truncated_normal([n_input, n_hidden_1])),
        'encoder_h2': tf.Variable(tf.truncated_normal([n_hidden_1, n_hidden_2])),
        'encoder_h3': tf.Variable(tf.truncated_normal([n_hidden_2, n_hidden_3])),
        'encoder_h4': tf.Variable(tf.truncated_normal([n_hidden_3, n_hidden_4])),
        'decoder_h1': tf.Variable(tf.truncated_normal([n_hidden_4, n_hidden_3])),
        'decoder_h2': tf.Variable(tf.truncated_normal([n_hidden_3, n_hidden_2])),
        'decoder_h3': tf.Variable(tf.truncated_normal([n_hidden_2, n_hidden_1])),
        'decoder_h4': tf.Variable(tf.truncated_normal([n_hidden_1, n_input])),
    }
    biases = {
        'encoder_b1': tf.Variable(tf.random_normal([n_hidden_1])),
        'encoder_b2': tf.Variable(tf.random_normal([n_hidden_2])),
        'encoder_b3': tf.Variable(tf.random_normal([n_hidden_3])),
        'encoder_b4': tf.Variable(tf.random_normal([n_hidden_4])),
        'decoder_b1': tf.Variable(tf.random_normal([n_hidden_3])),
        'decoder_b2': tf.Variable(tf.random_normal([n_hidden_2])),
        'decoder_b3': tf.Variable(tf.random_normal([n_hidden_1])),
        'decoder_b4': tf.Variable(tf.random_normal([n_input])),
    }

    def encoder(x):
        layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['encoder_h1']),
                                       biases['encoder_b1']))
        layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['encoder_h2']),
                                       biases['encoder_b2']))
        layer_3 = tf.nn.sigmoid(tf.add(tf.matmul(layer_2, weights['encoder_h3']),
                                       biases['encoder_b3']))
        # The 2-D bottleneck is left linear (no sigmoid)
        layer_4 = tf.add(tf.matmul(layer_3, weights['encoder_h4']),
                         biases['encoder_b4'])
        return layer_4

    def decoder(x):
        layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['decoder_h1']),
                                       biases['decoder_b1']))
        layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['decoder_h2']),
                                       biases['decoder_b2']))
        layer_3 = tf.nn.sigmoid(tf.add(tf.matmul(layer_2, weights['decoder_h3']),
                                       biases['decoder_b3']))
        layer_4 = tf.nn.sigmoid(tf.add(tf.matmul(layer_3, weights['decoder_h4']),
                                       biases['decoder_b4']))
        return layer_4

    # Construct model
    encoder_op = encoder(X)
    decoder_op = decoder(encoder_op)

    # Prediction
    y_pred = decoder_op
    # Targets (Labels) are the input data.
    y_true = X

    # Define loss and optimizer, minimize the squared error
    cost = tf.reduce_mean(tf.pow(y_true - y_pred, 2))
    optimizer = tf.train.AdamOptimizer(learning_rate).minimize(cost)

    # Launch the graph
    with tf.Session() as sess:
        # tf.initialize_all_variables() is no longer valid from
        # 2017-03-02 if using tensorflow >= 0.12
        if int((tf.__version__).split('.')[1]) < 12 and int((tf.__version__).split('.')[0]) < 1:
            init = tf.initialize_all_variables()
        else:
            init = tf.global_variables_initializer()
        sess.run(init)
        total_batch = int(mnist.train.num_examples/batch_size)
        # Training cycle
        for epoch in range(training_epochs):
            # Loop over all batches
            for i in range(total_batch):
                batch_xs, batch_ys = mnist.train.next_batch(batch_size)  # max(x) = 1, min(x) = 0
                # Run optimization op (backprop) and cost op (to get loss value)
                _, c = sess.run([optimizer, cost], feed_dict={X: batch_xs})
            # Display logs per epoch step
            if epoch % display_step == 0:
                print("Epoch:", '%04d' % (epoch+1),
                      "cost=", "{:.9f}".format(c))

        print("Optimization Finished!")

        # # Applying encode and decode over test set
        # encode_decode = sess.run(
        #     y_pred, feed_dict={X: mnist.test.images[:examples_to_show]})
        # # Compare original images with their reconstructions
        # f, a = plt.subplots(2, 10, figsize=(10, 2))
        # for i in range(examples_to_show):
        #     a[0][i].imshow(np.reshape(mnist.test.images[i], (28, 28)))
        #     a[1][i].imshow(np.reshape(encode_decode[i], (28, 28)))
        # plt.show()

        # Encode the whole test set to 2-D and color each point by its digit label
        encoder_result = sess.run(encoder_op, feed_dict={X: mnist.test.images})
        plt.scatter(encoder_result[:, 0], encoder_result[:, 1], c=mnist.test.labels)
        plt.colorbar()
        plt.show()

Running this listing displays a 2-D scatter plot of the encoded test images, colored by digit label.
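In the encoder above, `layer_4` is the only layer without a sigmoid. One plausible reason (an interpretation, not something the original post states) is that a sigmoid would confine every 2-D code to the unit square, while a linear layer lets the codes spread out for plotting. The sketch below illustrates the difference on hypothetical pre-activation values drawn at random; `pre_activation` stands in for the result of `tf.matmul(layer_3, ...)` plus the bias and is not real model output.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for the 2-D pre-activation of the bottleneck layer.
pre_activation = rng.normal(scale=3.0, size=(1000, 2))

# Linear bottleneck (what the listing does): codes are used as-is.
linear_codes = pre_activation

# Sigmoid bottleneck (the alternative): every coordinate is squashed into (0, 1).
sigmoid_codes = 1.0 / (1.0 + np.exp(-pre_activation))

print("linear range: ", linear_codes.min(), linear_codes.max())
print("sigmoid range:", sigmoid_codes.min(), sigmoid_codes.max())
```

With a sigmoid, large positive or negative pre-activations pile up near 1 or 0, so clusters would be pressed against the edges of the plot.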
