JVM Series (5): GC Implementation Overview, Part 01

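One of Java's core features is automatic memory reclamation. It frees programmers from tedious manual memory management, yet most people seem to take it for granted. Most languages in mainstream use today no longer make programmers manage memory themselves, so it is easy to assume that this is simply what a language is supposed to do.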

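From one angle, today's programming languages can be roughly divided into those built on a multi-process concurrency model and those built on a multi-threaded concurrency model. The operating system can manage a process's resources directly, whereas threads exist only inside a process. Seen this way, memory reclamation might be simpler under the multi-process model. Running many processes often means processes are created and destroyed quickly, and once a process exits, the operating system reclaims its memory. This model is common among scripting languages.

In practice, though, things are not that simple. Under the multi-threaded model the process necessarily runs for a long time while threads are repeatedly created and destroyed, so the language runtime has to reclaim memory itself. But can a multi-process program not run for a long time too? Can it never need more space than the memory limit allows? Does it never hold space that should be freed? It faces exactly the same problems, so both models need memory reclamation. It is only natural that their implementations differ in sophistication.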

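There is plenty of material online on how Java GC works, its tuning parameters, best practices, and so on. Most of it amounts to black-box testing, but that is enough to handle day-to-day work with ease. When time allows, it is a pleasure to look at how a concrete GC is actually written in code.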

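1. A brief overview of garbage collection algorithms and collectors

As mentioned, many articles already cover this ground, so we only touch on it briefly here.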

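There are two main algorithms for deciding whether an object can be reclaimed. Reference counting keeps a per-object count that is incremented and decremented as references are added and removed. Reachability analysis runs a graph traversal from a set of GC roots.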
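The practical difference between the two shows up with reference cycles. The sketch below is a toy model: the `Graph` type and both functions are hypothetical, not HotSpot code. Objects are indices and references are edges.

- **Reference counting** frees an object when its count drops to zero, then cascades to the objects it referenced.
- **Reachability analysis** marks everything reachable from the roots and treats the rest as garbage.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy object graph: refs[i] lists the objects that object i points to.
struct Graph {
    std::vector<std::vector<std::size_t>> refs;
    std::vector<std::size_t> roots;  // e.g. stack slots and static fields
};

// Reference counting: free objects whose count is zero, then decrement
// the counts of everything they referenced, cascading as counts hit zero.
std::vector<bool> garbage_by_refcount(const Graph& g) {
    const std::size_t n = g.refs.size();
    std::vector<int> count(n, 0);
    for (std::size_t r : g.roots) ++count[r];
    for (const auto& out : g.refs)
        for (std::size_t t : out) ++count[t];

    std::vector<bool> freed(n, false);
    std::vector<std::size_t> work;
    for (std::size_t i = 0; i < n; ++i)
        if (count[i] == 0) work.push_back(i);
    while (!work.empty()) {
        std::size_t obj = work.back();
        work.pop_back();
        freed[obj] = true;
        for (std::size_t t : g.refs[obj])
            if (--count[t] == 0) work.push_back(t);
    }
    return freed;
}

// Reachability analysis: mark everything reachable from the roots;
// whatever stays unmarked is garbage, cycles included.
std::vector<bool> garbage_by_reachability(const Graph& g) {
    std::vector<bool> marked(g.refs.size(), false);
    std::vector<std::size_t> stack(g.roots.begin(), g.roots.end());
    while (!stack.empty()) {
        std::size_t obj = stack.back();
        stack.pop_back();
        if (marked[obj]) continue;
        marked[obj] = true;
        for (std::size_t t : g.refs[obj]) stack.push_back(t);
    }
    std::vector<bool> garbage(marked.size());
    for (std::size_t i = 0; i < marked.size(); ++i) garbage[i] = !marked[i];
    return garbage;
}
```

Take a graph with root {0}, an edge 0→1, a cycle 2↔3 and an unreferenced object 4. Reference counting frees only object 4 and leaks the cycle. Reachability analysis reports 2, 3 and 4 as garbage. This is the usual argument for the tracing approach that HotSpot's collectors take.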

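The reclamation itself can be described in terms of a few major algorithms: mark-sweep, copying, mark-compact, and combinations of these. How each works can largely be inferred from its name; consult detailed references for specifics.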
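Here is a sketch that contrasts mark-sweep with mark-compact. It uses a hypothetical slot-based heap with one object per slot; `Heap`, `Obj`, `mark`, `mark_sweep` and `mark_compact` are illustrative names, not HotSpot's.

- Both algorithms share the mark phase.
- Sweep frees dead slots in place and leaves holes behind.
- Sliding compaction computes a forwarding slot for every live object, rewrites all references, and then moves the live objects to the front.

Copying is the third approach; it is what the young-generation code later in this article uses when it evacuates eden and from into to.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <utility>
#include <vector>

// Toy heap: one object per slot; an empty optional is a free slot.
struct Obj {
    std::vector<std::size_t> refs;  // slot indices this object points to
};

struct Heap {
    std::vector<std::optional<Obj>> slots;
    std::vector<std::size_t> roots;
};

// Shared mark phase: depth-first traversal from the roots.
std::vector<bool> mark(const Heap& h) {
    std::vector<bool> marked(h.slots.size(), false);
    std::vector<std::size_t> stack(h.roots.begin(), h.roots.end());
    while (!stack.empty()) {
        std::size_t i = stack.back();
        stack.pop_back();
        if (marked[i]) continue;
        marked[i] = true;
        for (std::size_t t : h.slots[i]->refs) stack.push_back(t);
    }
    return marked;
}

// Mark-sweep: free dead slots in place. Live objects never move,
// so free space can end up fragmented.
void mark_sweep(Heap& h) {
    const std::vector<bool> marked = mark(h);
    for (std::size_t i = 0; i < h.slots.size(); ++i)
        if (!marked[i]) h.slots[i].reset();
}

// Mark-compact (sliding): compute each live object's new slot, rewrite all
// references, then slide live objects to the front in address order.
void mark_compact(Heap& h) {
    const std::vector<bool> marked = mark(h);
    std::vector<std::size_t> forward(h.slots.size(), 0);
    std::size_t next = 0;
    for (std::size_t i = 0; i < h.slots.size(); ++i)
        if (marked[i]) forward[i] = next++;

    for (std::size_t& r : h.roots) r = forward[r];
    for (std::size_t i = 0; i < h.slots.size(); ++i)
        if (marked[i])
            for (std::size_t& t : h.slots[i]->refs) t = forward[t];

    // forward[i] <= i, so moving in ascending order never overwrites a live object.
    for (std::size_t i = 0; i < h.slots.size(); ++i)
        if (marked[i] && forward[i] != i) h.slots[forward[i]] = std::move(h.slots[i]);
    for (std::size_t i = next; i < h.slots.size(); ++i) h.slots[i].reset();
}
```

Using a separate pass to compute forwarding slots before moving anything keeps the reference rewrite simple: every new address is known before any object moves.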

The concrete Java garbage collectors are:

- Serial/Serial Old
- ParNew
- Parallel Scavenge
- Parallel Old
- CMS (Concurrent Mark Sweep)
- G1
- ZGC

See other references for how each one works.

2. HotSpot garbage collector entry points, part 1

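During normal execution, garbage collection is triggered automatically whenever it is needed. For example, a Minor GC runs when the young generation runs out of space, and a Major GC runs when the old generation runs out of space.

To trigger a GC ourselves, we can call System.gc(), which delegates to Runtime.getRuntime().gc(). Note that this only requests a collection: as the code below shows, the request is ignored when DisableExplicitGC is set.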

// jdk/src/share/native/java/lang/Runtime.c

JNIEXPORT void JNICALL

Java_java_lang_Runtime_gc(JNIEnv *env, jobject this)

{

JVM_GC();

}

// jdk, hotspot: share/vm/prims/jvm.h

JNIEXPORT void JNICALL

JVM_GC(void);

// hotspot/src/share/vm/prims/jvm.cpp

JVM_ENTRY_NO_ENV(void, JVM_GC(void))

JVMWrapper("JVM_GC");

if (!DisableExplicitGC) {

// Dispatch to collect() of the configured collector's heap to reclaim memory

Universe::heap()->collect(GCCause::_java_lang_system_gc);

}

JVM_END

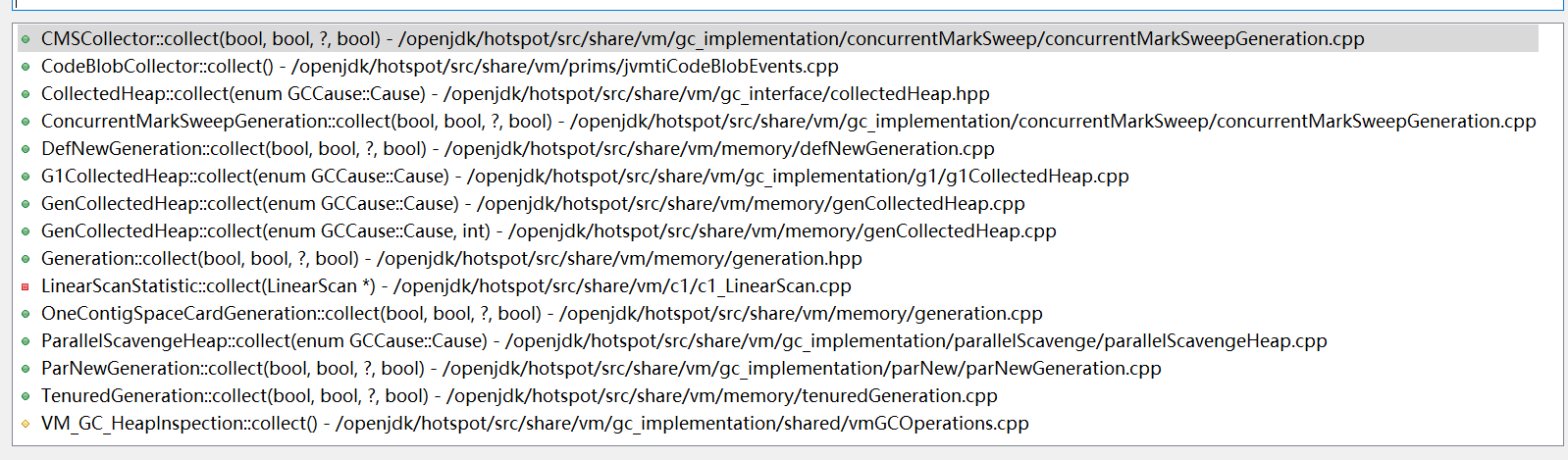
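The allocation-triggered case from the start of this section can be sketched with a bump-pointer eden. `EdenSketch` is a hypothetical toy, not a HotSpot class.

- Allocation advances a top pointer.
- When a request does not fit, a minor GC runs. A scavenge copies survivors out of eden, so eden is empty afterwards and the allocation is retried once.
- If the retry still fails, a real VM would look elsewhere (for example the old generation) or escalate to a full GC. The sketch just reports failure.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>

// Hypothetical bump-pointer eden; not HotSpot's EdenSpace.
class EdenSketch {
public:
    explicit EdenSketch(std::size_t capacity) : capacity_(capacity) {}

    // Returns the offset of the new block, or std::nullopt if even an
    // empty eden cannot hold it.
    std::optional<std::size_t> allocate(std::size_t bytes) {
        if (auto p = try_bump(bytes)) return p;
        minor_gc();              // eden is full: run a scavenge
        return try_bump(bytes);  // retry once in the now-empty eden
    }

    int minor_gc_count() const { return minor_gcs_; }

private:
    std::optional<std::size_t> try_bump(std::size_t bytes) {
        if (capacity_ - top_ < bytes) return std::nullopt;
        std::size_t start = top_;
        top_ += bytes;
        return start;
    }

    // Stand-in for a scavenge: survivors would be copied out, leaving eden empty.
    void minor_gc() {
        ++minor_gcs_;
        top_ = 0;
    }

    std::size_t capacity_;
    std::size_t top_ = 0;
    int minor_gcs_ = 0;
};
```

With a 100-byte eden, allocations of 60 and 30 bytes succeed without a GC. A following 20-byte request triggers one minor GC and then lands at offset 0.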

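Both heap() and collect() have many implementations. Which logic runs depends on the garbage collector that was selected.

Which heap() is in effect depends on external settings (the command-line GC flags). Let's look at where three concrete heaps come from: the default one, the Parallel Scavenge (PS) one, and the G1 one.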

// share/vm/memory/universe.hpp

// The particular choice of collected heap.

static CollectedHeap* heap() { return _collectedHeap; }

// share/vm/gc_implementation/parallelScavenge/parallelScavengeHeap.cpp

ParallelScavengeHeap* ParallelScavengeHeap::heap() {

assert(_psh != NULL, "Uninitialized access to ParallelScavengeHeap::heap()");

assert(_psh->kind() == CollectedHeap::ParallelScavengeHeap, "not a parallel scavenge heap");

return _psh;

}

// share/vm/gc_implementation/g1/g1CollectedHeap.cpp

G1CollectedHeap* G1CollectedHeap::heap() {

assert(_sh->kind() == CollectedHeap::G1CollectedHeap,

"not a garbage-first heap");

return _g1h;

}
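The way `Universe::heap()->collect(...)` ends up in different code can be sketched as ordinary virtual dispatch plus a one-time choice at startup. The classes and the `GcFlags` struct below are hypothetical stand-ins.

The order of checks follows `Universe::initialize_heap()`, shown later in this article:

1. Parallel first.
2. Then G1.
3. Otherwise a generational heap with a Serial or CMS policy.

The sketch ignores `UseAdaptiveSizePolicy` and `INCLUDE_ALL_GCS`.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-ins for the -XX flags checked in Universe::initialize_heap().
struct GcFlags {
    bool UseParallelGC = false;
    bool UseG1GC = false;
    bool UseSerialGC = false;
    bool UseConcMarkSweepGC = false;
};

// Plays the role of CollectedHeap: callers only see the virtual collect().
class HeapSketch {
public:
    virtual ~HeapSketch() = default;
    virtual std::string collect(const std::string& cause) = 0;
};

class ParallelScavengeHeapSketch : public HeapSketch {
public:
    std::string collect(const std::string& cause) override { return "PS collect: " + cause; }
};

class G1HeapSketch : public HeapSketch {
public:
    std::string collect(const std::string& cause) override { return "G1 collect: " + cause; }
};

// Generational heap parameterized by a collector policy name.
class GenHeapSketch : public HeapSketch {
public:
    explicit GenHeapSketch(std::string policy) : policy_(std::move(policy)) {}
    std::string collect(const std::string& cause) override {
        return "Gen(" + policy_ + ") collect: " + cause;
    }

private:
    std::string policy_;
};

// Chosen once at startup, in the same order of checks as initialize_heap().
std::unique_ptr<HeapSketch> create_heap(const GcFlags& f) {
    if (f.UseParallelGC) return std::make_unique<ParallelScavengeHeapSketch>();
    if (f.UseG1GC) return std::make_unique<G1HeapSketch>();
    if (!f.UseSerialGC && f.UseConcMarkSweepGC)
        return std::make_unique<GenHeapSketch>("ConcurrentMarkSweepPolicy");
    return std::make_unique<GenHeapSketch>("MarkSweepPolicy");  // Serial or default
}
```

With no flag set, the sketch ends in the generational `MarkSweepPolicy` branch, like the final `else` in `initialize_heap()`. In a real JVM, GC ergonomics may already have switched one of these flags on before this point.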

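The actual reclamation is done by collect(), and each collector has its own implementation. We look at four entry points:

1. DefNew, the default young-generation collection
2. ParNew, the multi-threaded young-generation collection
3. The generational heap, GenCollectedHeap
4. G1

We only look at the entry skeletons here and leave the details for later.

// 1. DefNew collection

// share/vm/memory/defNewGeneration.cpp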

void DefNewGeneration::collect(bool full,

bool clear_all_soft_refs,

size_t size,

bool is_tlab) {

assert(full || size > 0, "otherwise we don't want to collect");

GenCollectedHeap* gch = GenCollectedHeap::heap();

_gc_timer->register_gc_start();

DefNewTracer gc_tracer;

gc_tracer.report_gc_start(gch->gc_cause(), _gc_timer->gc_start());

_next_gen = gch->next_gen(this);

// If the next generation is too full to accommodate promotion

// from this generation, pass on collection; let the next generation

// do it.

if (!collection_attempt_is_safe()) {

if (Verbose && PrintGCDetails) {

gclog_or_tty->print(" :: Collection attempt not safe :: ");

}

gch->set_incremental_collection_failed(); // Slight lie: we did not even attempt one

return;

}

assert(to()->is_empty(), "Else not collection_attempt_is_safe");

init_assuming_no_promotion_failure();

GCTraceTime t1(GCCauseString("GC", gch->gc_cause()), PrintGC && !PrintGCDetails, true, NULL);

// Capture heap used before collection (for printing).

size_t gch_prev_used = gch->used();

gch->trace_heap_before_gc(&gc_tracer);

SpecializationStats::clear();

// These can be shared for all code paths

IsAliveClosure is_alive(this);

ScanWeakRefClosure scan_weak_ref(this);

age_table()->clear();

to()->clear(SpaceDecorator::Mangle);

gch->rem_set()->prepare_for_younger_refs_iterate(false);

assert(gch->no_allocs_since_save_marks(0),

"save marks have not been newly set.");

// Not very pretty.

CollectorPolicy* cp = gch->collector_policy();

FastScanClosure fsc_with_no_gc_barrier(this, false);

FastScanClosure fsc_with_gc_barrier(this, true);

KlassScanClosure klass_scan_closure(&fsc_with_no_gc_barrier,

gch->rem_set()->klass_rem_set());

set_promo_failure_scan_stack_closure(&fsc_with_no_gc_barrier);

FastEvacuateFollowersClosure evacuate_followers(gch, _level, this,

&fsc_with_no_gc_barrier,

&fsc_with_gc_barrier);

assert(gch->no_allocs_since_save_marks(0),

"save marks have not been newly set.");

int so = SharedHeap::SO_AllClasses | SharedHeap::SO_Strings | SharedHeap::SO_CodeCache;

gch->gen_process_strong_roots(_level,

true, // Process younger gens, if any,

// as strong roots.

true, // activate StrongRootsScope

true, // is scavenging

SharedHeap::ScanningOption(so),

&fsc_with_no_gc_barrier,

true, // walk *all* scavengable nmethods

&fsc_with_gc_barrier,

&klass_scan_closure);

// "evacuate followers".

evacuate_followers.do_void();

FastKeepAliveClosure keep_alive(this, &scan_weak_ref);

ReferenceProcessor* rp = ref_processor();

rp->setup_policy(clear_all_soft_refs);

const ReferenceProcessorStats& stats =

rp->process_discovered_references(&is_alive, &keep_alive, &evacuate_followers,

NULL, _gc_timer);

gc_tracer.report_gc_reference_stats(stats);

if (!_promotion_failed) {

// Swap the survivor spaces.

eden()->clear(SpaceDecorator::Mangle);

from()->clear(SpaceDecorator::Mangle);

if (ZapUnusedHeapArea) {

// This is now done here because of the piece-meal mangling which

// can check for valid mangling at intermediate points in the

// collection(s). When a minor collection fails to collect

// sufficient space resizing of the young generation can occur

// an redistribute the spaces in the young generation. Mangle

// here so that unzapped regions don't get distributed to

// other spaces.

to()->mangle_unused_area();

}

swap_spaces();

assert(to()->is_empty(), "to space should be empty now");

adjust_desired_tenuring_threshold();

// A successful scavenge should restart the GC time limit count which is

// for full GC's.

AdaptiveSizePolicy* size_policy = gch->gen_policy()->size_policy();

size_policy->reset_gc_overhead_limit_count();

if (PrintGC && !PrintGCDetails) {

gch->print_heap_change(gch_prev_used);

}

assert(!gch->incremental_collection_failed(), "Should be clear");

} else {

assert(_promo_failure_scan_stack.is_empty(), "post condition");

_promo_failure_scan_stack.clear(true); // Clear cached segments.

remove_forwarding_pointers();

if (PrintGCDetails) {

gclog_or_tty->print(" (promotion failed) ");

}

// Add to-space to the list of space to compact

// when a promotion failure has occurred. In that

// case there can be live objects in to-space

// as a result of a partial evacuation of eden

// and from-space.

swap_spaces(); // For uniformity wrt ParNewGeneration.

from()->set_next_compaction_space(to());

gch->set_incremental_collection_failed(); // Inform the next generation that a promotion failure occurred.

_next_gen->promotion_failure_occurred();

gc_tracer.report_promotion_failed(_promotion_failed_info); // Reset the PromotionFailureALot counters.

NOT_PRODUCT(Universe::heap()->reset_promotion_should_fail();)

}

// set new iteration safe limit for the survivor spaces

from()->set_concurrent_iteration_safe_limit(from()->top());

to()->set_concurrent_iteration_safe_limit(to()->top());

SpecializationStats::print();

// We need to use a monotonically non-decreasing time in ms

// or we will see time-warp warnings and os::javaTimeMillis()

// does not guarantee monotonicity.

jlong now = os::javaTimeNanos() / NANOSECS_PER_MILLISEC;

update_time_of_last_gc(now);

gch->trace_heap_after_gc(&gc_tracer);

gc_tracer.report_tenuring_threshold(tenuring_threshold());

_gc_timer->register_gc_end();

gc_tracer.report_gc_end(_gc_timer->gc_end(), _gc_timer->time_partitions());

}

// 2. ParNew collection

// share/vm/gc_implementation/parNew/parNewGeneration.cpp

void ParNewGeneration::collect(bool full,

bool clear_all_soft_refs,

size_t size,

bool is_tlab) {

assert(full || size > 0, "otherwise we don't want to collect");

// Get a pointer to the heap

GenCollectedHeap* gch = GenCollectedHeap::heap();

_gc_timer->register_gc_start();

assert(gch->kind() == CollectedHeap::GenCollectedHeap,

"not a CMS generational heap");

AdaptiveSizePolicy* size_policy = gch->gen_policy()->size_policy();

FlexibleWorkGang* workers = gch->workers();

assert(workers != NULL, "Need workgang for parallel work");

int active_workers =

AdaptiveSizePolicy::calc_active_workers(workers->total_workers(),

workers->active_workers(),

Threads::number_of_non_daemon_threads());

workers->set_active_workers(active_workers);

assert(gch->n_gens() == 2,

"Par collection currently only works with single older gen.");

_next_gen = gch->next_gen(this);

// Do we have to avoid promotion_undo?

if (gch->collector_policy()->is_concurrent_mark_sweep_policy()) {

set_avoid_promotion_undo(true);

}

// If the next generation is too full to accommodate worst-case promotion

// from this generation, pass on collection; let the next generation

// do it.

if (!collection_attempt_is_safe()) {

gch->set_incremental_collection_failed(); // slight lie, in that we did not even attempt one

return;

}

assert(to()->is_empty(), "Else not collection_attempt_is_safe");

ParNewTracer gc_tracer;

gc_tracer.report_gc_start(gch->gc_cause(), _gc_timer->gc_start());

gch->trace_heap_before_gc(&gc_tracer);

init_assuming_no_promotion_failure();

if (UseAdaptiveSizePolicy) {

set_survivor_overflow(false);

size_policy->minor_collection_begin();

}

GCTraceTime t1(GCCauseString("GC", gch->gc_cause()), PrintGC && !PrintGCDetails, true, NULL);

// Capture heap used before collection (for printing).

size_t gch_prev_used = gch->used();

SpecializationStats::clear();

age_table()->clear();

to()->clear(SpaceDecorator::Mangle);

gch->save_marks();

assert(workers != NULL, "Need parallel worker threads.");

int n_workers = active_workers;

// Set the correct parallelism (number of queues) in the reference processor

ref_processor()->set_active_mt_degree(n_workers);

// Always set the terminator for the active number of workers

// because only those workers go through the termination protocol.

ParallelTaskTerminator _term(n_workers, task_queues());

ParScanThreadStateSet thread_state_set(workers->active_workers(),

*to(), *this, *_next_gen, *task_queues(),

_overflow_stacks, desired_plab_sz(), _term);

ParNewGenTask tsk(this, _next_gen, reserved().end(), &thread_state_set);

gch->set_par_threads(n_workers);

gch->rem_set()->prepare_for_younger_refs_iterate(true);

// It turns out that even when we're using 1 thread, doing the work in a

// separate thread causes wide variance in run times. We can't help this

// in the multi-threaded case, but we special-case n=1 here to get

// repeatable measurements of the 1-thread overhead of the parallel code.

if (n_workers > 1) {

// Process the GC roots (reachability analysis) with the parallel workers

GenCollectedHeap::StrongRootsScope srs(gch);

workers->run_task(&tsk);

} else {

GenCollectedHeap::StrongRootsScope srs(gch);

tsk.work(0);

}

thread_state_set.reset(0 /* Bad value in debug if not reset */,

promotion_failed()); // Process (weak) reference objects found during scavenge.

ReferenceProcessor* rp = ref_processor();

IsAliveClosure is_alive(this);

ScanWeakRefClosure scan_weak_ref(this);

KeepAliveClosure keep_alive(&scan_weak_ref);

ScanClosure scan_without_gc_barrier(this, false);

ScanClosureWithParBarrier scan_with_gc_barrier(this, true);

set_promo_failure_scan_stack_closure(&scan_without_gc_barrier);

EvacuateFollowersClosureGeneral evacuate_followers(gch, _level,

&scan_without_gc_barrier, &scan_with_gc_barrier);

rp->setup_policy(clear_all_soft_refs);

// Can the mt_degree be set later (at run_task() time would be best)?

rp->set_active_mt_degree(active_workers);

ReferenceProcessorStats stats;

if (rp->processing_is_mt()) {

ParNewRefProcTaskExecutor task_executor(*this, thread_state_set);

stats = rp->process_discovered_references(&is_alive, &keep_alive,

&evacuate_followers, &task_executor,

_gc_timer);

} else {

thread_state_set.flush();

gch->set_par_threads(0); // 0 ==> non-parallel.

gch->save_marks();

stats = rp->process_discovered_references(&is_alive, &keep_alive,

&evacuate_followers, NULL,

_gc_timer);

}

gc_tracer.report_gc_reference_stats(stats);

if (!promotion_failed()) {

// Swap the survivor spaces.

eden()->clear(SpaceDecorator::Mangle);

from()->clear(SpaceDecorator::Mangle);

if (ZapUnusedHeapArea) {

// This is now done here because of the piece-meal mangling which

// can check for valid mangling at intermediate points in the

// collection(s). When a minor collection fails to collect

// sufficient space resizing of the young generation can occur

// an redistribute the spaces in the young generation. Mangle

// here so that unzapped regions don't get distributed to

// other spaces.

to()->mangle_unused_area();

}

swap_spaces();

// A successful scavenge should restart the GC time limit count which is

// for full GC's.

size_policy->reset_gc_overhead_limit_count();

assert(to()->is_empty(), "to space should be empty now");

adjust_desired_tenuring_threshold();

} else {

handle_promotion_failed(gch, thread_state_set, gc_tracer);

}

// set new iteration safe limit for the survivor spaces

from()->set_concurrent_iteration_safe_limit(from()->top());

to()->set_concurrent_iteration_safe_limit(to()->top());

if (ResizePLAB) {

plab_stats()->adjust_desired_plab_sz(n_workers);

}

// Print the GC log

if (PrintGC && !PrintGCDetails) {

gch->print_heap_change(gch_prev_used);

}

if (PrintGCDetails && ParallelGCVerbose) {

TASKQUEUE_STATS_ONLY(thread_state_set.print_termination_stats());

TASKQUEUE_STATS_ONLY(thread_state_set.print_taskqueue_stats());

}

if (UseAdaptiveSizePolicy) {

size_policy->minor_collection_end(gch->gc_cause());

size_policy->avg_survived()->sample(from()->used());

}

// We need to use a monotonically non-deccreasing time in ms

// or we will see time-warp warnings and os::javaTimeMillis()

// does not guarantee monotonicity.

jlong now = os::javaTimeNanos() / NANOSECS_PER_MILLISEC;

update_time_of_last_gc(now);

SpecializationStats::print();

rp->set_enqueuing_is_done(true);

if (rp->processing_is_mt()) {

ParNewRefProcTaskExecutor task_executor(*this, thread_state_set);

rp->enqueue_discovered_references(&task_executor);

} else {

rp->enqueue_discovered_references(NULL);

}

rp->verify_no_references_recorded();

gch->trace_heap_after_gc(&gc_tracer);

gc_tracer.report_tenuring_threshold(tenuring_threshold());

_gc_timer->register_gc_end();

gc_tracer.report_gc_end(_gc_timer->gc_end(), _gc_timer->time_partitions());

}

// 3. Generational heap (GenCollectedHeap) collection

// share/vm/memory/genCollectedHeap.cpp

void GenCollectedHeap::collect(GCCause::Cause cause) {

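// Decide whether to run a mostly-concurrent full GC instead: only with CMS, when the cause is _gc_locker (with GCLockerInvokesConcurrent) or System.gc() (with ExplicitGCInvokesConcurrent)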

if (should_do_concurrent_full_gc(cause)) {

#if INCLUDE_ALL_GCS

// mostly concurrent full collection

collect_mostly_concurrent(cause);

#else // INCLUDE_ALL_GCS

ShouldNotReachHere();

#endif // INCLUDE_ALL_GCS

} else {

#ifdef ASSERT

if (cause == GCCause::_scavenge_alot) {

// minor collection only

// Young-generation GC only

collect(cause, 0);

} else {

// Stop-the-world full collection

collect(cause, n_gens() - 1);

}

#else

// Stop-the-world full collection

collect(cause, n_gens() - 1);

#endif

}

}

void GenCollectedHeap::collect(GCCause::Cause cause, int max_level) {

// The caller doesn't have the Heap_lock

assert(!Heap_lock->owned_by_self(), "this thread should not own the Heap_lock");

MutexLocker ml(Heap_lock);

// Take Heap_lock, then enter the locked collection path

collect_locked(cause, max_level);

}

// this is the private collection interface

// The Heap_lock is expected to be held on entry.

void GenCollectedHeap::collect_locked(GCCause::Cause cause, int max_level) {

// Read the GC count while holding the Heap_lock

unsigned int gc_count_before = total_collections();

unsigned int full_gc_count_before = total_full_collections();

{

MutexUnlocker mu(Heap_lock); // give up heap lock, execute gets it back

// The whole GC is carried out by VM_GenCollectFull; its key part is the doit() method

VM_GenCollectFull op(gc_count_before, full_gc_count_before,

cause, max_level);

// Hand the operation to the VM thread

VMThread::execute(&op);

}

}

// 4. G1 collection

// share/vm/gc_implementation/g1/g1CollectedHeap.cpp

void G1CollectedHeap::collect(GCCause::Cause cause) {

assert_heap_not_locked();

unsigned int gc_count_before;

unsigned int old_marking_count_before;

bool retry_gc;

do {

retry_gc = false;

{

MutexLocker ml(Heap_lock);

// Read the GC count while holding the Heap_lock

gc_count_before = total_collections();

old_marking_count_before = _old_marking_cycles_started;

}

// Decide whether to start a concurrent marking cycle instead of a stop-the-world Full GC

if (should_do_concurrent_full_gc(cause)) {

// Schedule an initial-mark evacuation pause that will start a

// concurrent cycle. We're setting word_size to 0 which means that

// we are not requesting a post-GC allocation.

// VM_G1IncCollectionPause performs that initial-mark pause; it is not a Full GC

VM_G1IncCollectionPause op(gc_count_before,

0, /* word_size */

true, /* should_initiate_conc_mark */

g1_policy()->max_pause_time_ms(),

cause);

VMThread::execute(&op);

if (!op.pause_succeeded()) {

if (old_marking_count_before == _old_marking_cycles_started) {

retry_gc = op.should_retry_gc();

} else {

// A Full GC happened while we were trying to schedule the

// initial-mark GC. No point in starting a new cycle given

// that the whole heap was collected anyway.

}

if (retry_gc) {

if (GC_locker::is_active_and_needs_gc()) {

GC_locker::stall_until_clear();

}

}

}

} else {

if (cause == GCCause::_gc_locker

DEBUG_ONLY(|| cause == GCCause::_scavenge_alot)) { // Schedule a standard evacuation pause. We're setting word_size

// to 0 which means that we are not requesting a post-GC allocation.

VM_G1IncCollectionPause op(gc_count_before,

0, /* word_size */

false, /* should_initiate_conc_mark */

g1_policy()->max_pause_time_ms(),

cause);

VMThread::execute(&op);

} else {

// Schedule a Full GC.

VM_G1CollectFull op(gc_count_before, old_marking_count_before, cause);

VMThread::execute(&op);

}

}

} while (retry_gc);

}
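The DefNew and ParNew entry points above share the same copying scheme:

1. Live objects in eden and from are evacuated into to, or promoted to the old generation.
2. Eden and from are cleared.
3. `swap_spaces()` exchanges from and to.

Below is a minimal sketch under simplifying assumptions. All names are hypothetical.

- Objects are identified by integer ids.
- Survivor capacity is counted in objects.
- Old-to-young references are found by scanning the whole old list, standing in for the card table or remembered set.
- An explicit worklist replaces the scan pointer of a Cheney-style copy.

```cpp
#include <cassert>
#include <cstddef>
#include <unordered_map>
#include <unordered_set>
#include <utility>
#include <vector>

// Hypothetical toy model of a young-generation scavenge.
struct YoungObj {
    int id;
    int age;                // scavenges survived so far
    std::vector<int> refs;  // ids of referenced objects
};

struct Generations {
    std::vector<YoungObj> eden, from, to, old;
    std::size_t survivor_capacity;  // objects that fit in one survivor space
    int tenuring_threshold;
};

// Evacuate live young objects reachable from the roots (and from old-gen
// references), then empty eden/from and swap the survivor spaces.
void scavenge(Generations& g, const std::vector<int>& roots) {
    std::unordered_map<int, YoungObj> young;
    for (const auto& o : g.eden) young.emplace(o.id, o);
    for (const auto& o : g.from) young.emplace(o.id, o);

    std::vector<int> work(roots);
    // Old-to-young references act as extra roots (a remembered set's job).
    for (const auto& o : g.old)
        for (int r : o.refs) work.push_back(r);

    std::unordered_set<int> copied;
    while (!work.empty()) {
        int id = work.back();
        work.pop_back();
        auto it = young.find(id);
        if (it == young.end() || !copied.insert(id).second) continue;  // not young, or already moved
        YoungObj obj = it->second;
        for (int r : obj.refs) work.push_back(r);
        if (obj.age < g.tenuring_threshold && g.to.size() < g.survivor_capacity) {
            ++obj.age;
            g.to.push_back(std::move(obj));   // copy into the to-space
        } else {
            g.old.push_back(std::move(obj));  // promote (tenure) into the old generation
        }
    }
    g.eden.clear();
    g.from.clear();
    std::swap(g.from, g.to);  // survivors now live in `from`; `to` is empty again
}
```

Garbage is never touched: only reachable objects are copied, so the cost of a scavenge tracks the number of survivors rather than the size of eden. That is why a copying scheme suits a young generation where most objects die young.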

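These are the entry skeletons of several collectors, and they already reveal something of how collection is implemented. For the single-threaded collectors in particular, the basic approach is already visible; the details are left for the reader to work through.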
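One detail both young-generation entry points share is `adjust_desired_tenuring_threshold()`. As far as I understand JDK 8's `ageTable::compute_tenuring_threshold`, the idea is:

1. Accumulate survivor bytes by age, youngest first.
2. The first age at which the running total exceeds `TargetSurvivorRatio` percent (default 50) of survivor capacity becomes the new threshold.
3. Cap the result at `MaxTenuringThreshold` (default 15).

The function below is a hedged sketch of that idea, not a copy of the HotSpot source.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// sizes_by_age[a] = bytes of surviving objects of age a (index 0 unused).
// Returns the new tenuring threshold.
unsigned compute_tenuring_threshold(const std::vector<std::size_t>& sizes_by_age,
                                    std::size_t survivor_capacity,
                                    unsigned target_survivor_ratio = 50,   // TargetSurvivorRatio default
                                    unsigned max_tenuring_threshold = 15)  // MaxTenuringThreshold default
{
    const std::size_t desired = survivor_capacity * target_survivor_ratio / 100;
    std::size_t total = 0;
    unsigned age = 1;
    // Find the youngest age at which the cumulative survivor size exceeds the target.
    while (age < sizes_by_age.size()) {
        total += sizes_by_age[age];
        if (total > desired) break;
        ++age;
    }
    return age < max_tenuring_threshold ? age : max_tenuring_threshold;
}
```

Take a 1000-byte survivor space holding 200 bytes each of ages 1, 2 and 3:

- Ages 1 and 2 together use 400 bytes, under the 500-byte target.
- Adding age 3 exceeds the target, so the threshold becomes 3.

Objects that have reached age 3 are then promoted on the next scavenge.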

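Besides knowing where GC execution starts, we also need to know how a collector is selected and how it is initialized. Both happen during JVM startup:

// Universe initialization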

// share/vm/runtime/init.cpp

jint init_globals() {

HandleMark hm;

management_init();

bytecodes_init();

classLoader_init();

codeCache_init();

VM_Version_init();

os_init_globals();

stubRoutines_init1();

// Entry point of GC (heap) initialization

jint status = universe_init(); // dependent on codeCache_init and

// stubRoutines_init1 and metaspace_init.

if (status != JNI_OK)

return status;

interpreter_init(); // before any methods loaded

invocationCounter_init(); // before any methods loaded

marksweep_init();

accessFlags_init();

templateTable_init();

InterfaceSupport_init();

SharedRuntime::generate_stubs();

universe2_init(); // dependent on codeCache_init and stubRoutines_init1

referenceProcessor_init();

jni_handles_init();

#if INCLUDE_VM_STRUCTS

vmStructs_init();

#endif // INCLUDE_VM_STRUCTS

vtableStubs_init();

InlineCacheBuffer_init();

compilerOracle_init();

compilationPolicy_init();

compileBroker_init();

VMRegImpl::set_regName();

if (!universe_post_init()) {

return JNI_ERR;

}

javaClasses_init(); // must happen after vtable initialization

stubRoutines_init2(); // note: StubRoutines need 2-phase init

// All the flags that get adjusted by VM_Version_init and os::init_2

// have been set so dump the flags now.

if (PrintFlagsFinal) {

CommandLineFlags::printFlags(tty, false);

}

return JNI_OK;

}

// memory/universe.cpp

jint universe_init() {

assert(!Universe::_fully_initialized, "called after initialize_vtables");

guarantee(1 << LogHeapWordSize == sizeof(HeapWord),

"LogHeapWordSize is incorrect.");

guarantee(sizeof(oop) >= sizeof(HeapWord), "HeapWord larger than oop?");

guarantee(sizeof(oop) % sizeof(HeapWord) == 0,

"oop size is not not a multiple of HeapWord size");

TraceTime timer("Genesis", TraceStartupTime);

GC_locker::lock(); // do not allow gc during bootstrapping

JavaClasses::compute_hard_coded_offsets();

jint status = Universe::initialize_heap();

if (status != JNI_OK) {

return status;

}

Metaspace::global_initialize();

// Create memory for metadata. Must be after initializing heap for

// DumpSharedSpaces.

ClassLoaderData::init_null_class_loader_data();

// We have a heap so create the Method* caches before

// Metaspace::initialize_shared_spaces() tries to populate them.

Universe::_finalizer_register_cache = new LatestMethodCache();

Universe::_loader_addClass_cache = new LatestMethodCache();

Universe::_pd_implies_cache = new LatestMethodCache();

if (UseSharedSpaces) {

// Read the data structures supporting the shared spaces (shared

// system dictionary, symbol table, etc.). After that, access to

// the file (other than the mapped regions) is no longer needed, and

// the file is closed. Closing the file does not affect the

// currently mapped regions.

MetaspaceShared::initialize_shared_spaces();

StringTable::create_table();

} else {

SymbolTable::create_table();

StringTable::create_table();

ClassLoader::create_package_info_table();

}

return JNI_OK;

}

// hotspot/src/share/vm/memory/universe.cpp:

jint Universe::initialize_heap() {

if (UseParallelGC) {

#if INCLUDE_ALL_GCS

Universe::_collectedHeap = new ParallelScavengeHeap();

#else // INCLUDE_ALL_GCS

fatal("UseParallelGC not supported in this VM.");

#endif // INCLUDE_ALL_GCS

} else if (UseG1GC) {

#if INCLUDE_ALL_GCS

G1CollectorPolicy* g1p = new G1CollectorPolicy();

g1p->initialize_all();

G1CollectedHeap* g1h = new G1CollectedHeap(g1p);

Universe::_collectedHeap = g1h;

#else // INCLUDE_ALL_GCS

fatal("UseG1GC not supported in java kernel vm.");

#endif // INCLUDE_ALL_GCS

} else {

GenCollectorPolicy *gc_policy;

if (UseSerialGC) {

gc_policy = new MarkSweepPolicy();

} else if (UseConcMarkSweepGC) {

#if INCLUDE_ALL_GCS

if (UseAdaptiveSizePolicy) {

gc_policy = new ASConcurrentMarkSweepPolicy();

} else {

gc_policy = new ConcurrentMarkSweepPolicy();

}

#else // INCLUDE_ALL_GCS

fatal("UseConcMarkSweepGC not supported in this VM.");

#endif // INCLUDE_ALL_GCS

} else { // default old generation

gc_policy = new MarkSweepPolicy();

}

gc_policy->initialize_all();

Universe::_collectedHeap = new GenCollectedHeap(gc_policy);

}

jint status = Universe::heap()->initialize();

if (status != JNI_OK) {

return status;

}

#ifdef _LP64

if (UseCompressedOops) {

// Subtract a page because something can get allocated at heap base.

// This also makes implicit null checking work, because the

// memory+1 page below heap_base needs to cause a signal.

// See needs_explicit_null_check.

// Only set the heap base for compressed oops because it indicates

// compressed oops for pstack code.

bool verbose = PrintCompressedOopsMode || (PrintMiscellaneous && Verbose);

if (verbose) {

tty->cr();

tty->print("heap address: " PTR_FORMAT ", size: " SIZE_FORMAT " MB",

Universe::heap()->base(), Universe::heap()->reserved_region().byte_size()/M);

}

if (((uint64_t)Universe::heap()->reserved_region().end() > OopEncodingHeapMax)) {

// Can't reserve heap below 32Gb.

// keep the Universe::narrow_oop_base() set in Universe::reserve_heap()

Universe::set_narrow_oop_shift(LogMinObjAlignmentInBytes);

if (verbose) {

tty->print(", %s: "PTR_FORMAT,

narrow_oop_mode_to_string(HeapBasedNarrowOop),

Universe::narrow_oop_base());

}

} else {

Universe::set_narrow_oop_base(0);

if (verbose) {

tty->print(", %s", narrow_oop_mode_to_string(ZeroBasedNarrowOop));

}

#ifdef _WIN64

if (!Universe::narrow_oop_use_implicit_null_checks()) {

// Don't need guard page for implicit checks in indexed addressing

// mode with zero based Compressed Oops.

Universe::set_narrow_oop_use_implicit_null_checks(true);

}

#endif // _WIN64

if((uint64_t)Universe::heap()->reserved_region().end() > UnscaledOopHeapMax) {

// Can't reserve heap below 4Gb.

Universe::set_narrow_oop_shift(LogMinObjAlignmentInBytes);

} else {

Universe::set_narrow_oop_shift(0);

if (verbose) {

tty->print(", %s", narrow_oop_mode_to_string(UnscaledNarrowOop));

}

}

}

if (verbose) {

tty->cr();

tty->cr();

}

Universe::set_narrow_ptrs_base(Universe::narrow_oop_base());

}

// Universe::narrow_oop_base() is one page below the heap.

assert((intptr_t)Universe::narrow_oop_base() <= (intptr_t)(Universe::heap()->base() -

os::vm_page_size()) ||

Universe::narrow_oop_base() == NULL, "invalid value");

assert(Universe::narrow_oop_shift() == LogMinObjAlignmentInBytes ||

Universe::narrow_oop_shift() == 0, "invalid value");

#endif

// We will never reach the CATCH below since Exceptions::_throw will cause

// the VM to exit if an exception is thrown during initialization

if (UseTLAB) {

assert(Universe::heap()->supports_tlab_allocation(),

"Should support thread-local allocation buffers");

ThreadLocalAllocBuffer::startup_initialization();

}

return JNI_OK;

}
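The `UseCompressedOops` branch above picks one of three encodings, based on where the reserved heap ends. This sketch assumes 8-byte object alignment (`LogMinObjAlignmentInBytes == 3`), so the 4 GB and 32 GB limits correspond to `UnscaledOopHeapMax` and `OopEncodingHeapMax`.

| Mode | Heap end | Base | Shift |
|---|---|---|---|
| Unscaled | ≤ 4 GB | 0 | 0 |
| Zero-based | ≤ 32 GB | 0 | 3 |
| Heap-based | > 32 GB | one page below the heap | 3 |

A narrow oop decodes as `base + (narrow << shift)`. The types and functions are hypothetical. The assert in the original code only requires the heap-based base to be at or below one page under the heap start; the sketch uses exactly one page below.

```cpp
#include <cassert>
#include <cstdint>

enum class NarrowOopMode { Unscaled, ZeroBased, HeapBased };

struct NarrowOopConfig {
    NarrowOopMode mode;
    std::uint64_t base;
    int shift;
};

// Assumes 8-byte object alignment, i.e. LogMinObjAlignmentInBytes == 3.
constexpr int kLogMinObjAlignment = 3;
constexpr std::uint64_t kUnscaledOopHeapMax = std::uint64_t{1} << 32;                      // 4 GB
constexpr std::uint64_t kOopEncodingHeapMax = kUnscaledOopHeapMax << kLogMinObjAlignment;  // 32 GB

// Heap end above 32 GB needs a base, above 4 GB needs a shift,
// otherwise a narrow oop is simply the address itself.
NarrowOopConfig choose_narrow_oop_mode(std::uint64_t heap_base, std::uint64_t heap_end,
                                       std::uint64_t page_size) {
    if (heap_end > kOopEncodingHeapMax)
        return {NarrowOopMode::HeapBased, heap_base - page_size, kLogMinObjAlignment};
    if (heap_end > kUnscaledOopHeapMax)
        return {NarrowOopMode::ZeroBased, 0, kLogMinObjAlignment};
    return {NarrowOopMode::Unscaled, 0, 0};
}

// Assumes addr lies within 32 GB above c.base, as it does for a suitably sized heap.
std::uint32_t encode(const NarrowOopConfig& c, std::uint64_t addr) {
    return static_cast<std::uint32_t>((addr - c.base) >> c.shift);
}

std::uint64_t decode(const NarrowOopConfig& c, std::uint32_t narrow) {
    return c.base + (static_cast<std::uint64_t>(narrow) << c.shift);
}
```

Zero-based mode is attractive because decoding needs only a shift and no addition. That is why the code above prefers it whenever the heap ends below 32 GB.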

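3. A worked example of a garbage collector

In this part we follow the implementation of one garbage collector to see how a GC is carried out. This is of course not a full understanding of GC theory, but it is useful nonetheless.

First, GC operations generally run on a dedicated VM thread. In jstack output it appears as "VM Thread", and in the code it is VMThread; parallel collectors additionally use their own GC worker threads. The VM thread runs garbage collection as follows: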

// share/vm/runtime/vmThread.cpp

void VMThread::execute(VM_Operation* op) {

Thread* t = Thread::current();

if (!t->is_VM_thread()) {

SkipGCALot sgcalot(t); // avoid re-entrant attempts to gc-a-lot

// JavaThread or WatcherThread

bool concurrent = op->evaluate_concurrently();

// only blocking VM operations need to verify the caller's safepoint state:

if (!concurrent) {

t->check_for_valid_safepoint_state(true);

} // New request from Java thread, evaluate prologue

if (!op->doit_prologue()) {

return; // op was cancelled

} // Setup VM_operations for execution

op->set_calling_thread(t, Thread::get_priority(t));

// It does not make sense to execute the epilogue, if the VM operation object is getting

// deallocated by the VM thread.

bool execute_epilog = !op->is_cheap_allocated();

assert(!concurrent || op->is_cheap_allocated(), "concurrent => cheap_allocated");

// Get ticket number for non-concurrent VM operations

int ticket = 0;

if (!concurrent) {

ticket = t->vm_operation_ticket();

}

// Add VM operation to list of waiting threads. We are guaranteed not to block while holding the

// VMOperationQueue_lock, so we can block without a safepoint check. This allows vm operation requests

// to be queued up during a safepoint synchronization.

{

VMOperationQueue_lock->lock_without_safepoint_check();

bool ok = _vm_queue->add(op);

op->set_timestamp(os::javaTimeMillis());

VMOperationQueue_lock->notify();

VMOperationQueue_lock->unlock();

// VM_Operation got skipped

if (!ok) {

assert(concurrent, "can only skip concurrent tasks");

if (op->is_cheap_allocated()) delete op;

return;

}

}

if (!concurrent) {

// Wait for completion of request (non-concurrent)

// Note: only a JavaThread triggers the safepoint check when locking

MutexLocker mu(VMOperationRequest_lock);

while(t->vm_operation_completed_count() < ticket) {

VMOperationRequest_lock->wait(!t->is_Java_thread());

}

}

if (execute_epilog) {

op->doit_epilogue();

}

} else {

// invoked by VM thread; usually nested VM operation

assert(t->is_VM_thread(), "must be a VM thread");

VM_Operation* prev_vm_operation = vm_operation();

if (prev_vm_operation != NULL) {

// Check the VM operation allows nested VM operation. This normally not the case, e.g., the compiler

// does not allow nested scavenges or compiles.

if (!prev_vm_operation->allow_nested_vm_operations()) {

fatal(err_msg("Nested VM operation %s requested by operation %s",

op->name(), vm_operation()->name()));

}

op->set_calling_thread(prev_vm_operation->calling_thread(), prev_vm_operation->priority());

}

EventMark em("Executing %s VM operation: %s", prev_vm_operation ? "nested" : "", op->name());

// Release all internal handles after operation is evaluated

HandleMark hm(t);

_cur_vm_operation = op;

if (op->evaluate_at_safepoint() && !SafepointSynchronize::is_at_safepoint()) {

SafepointSynchronize::begin();

op->evaluate();

SafepointSynchronize::end();

} else {

op->evaluate();

} // Free memory if needed

if (op->is_cheap_allocated()) delete op;

_cur_vm_operation = prev_vm_operation;

}

}
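The request/execute protocol in `VMThread::execute()` can be reduced to a queue plus a completion counter. The sketch below is hypothetical (`MiniVMThread` is not a HotSpot class) and simplifies in several ways:

- One global ticket counter instead of per-thread tickets.
- A FIFO queue instead of HotSpot's VM operation queue.
- No safepoints.
- Only blocking operations.

As in the `else` branch of the real code, a call made on the VM thread itself (a nested operation) runs inline instead of being queued, which avoids a self-deadlock.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>

// Hypothetical miniature of the VMThread protocol (not HotSpot code).
class MiniVMThread {
public:
    MiniVMThread() : worker_([this] { loop(); }) {}

    ~MiniVMThread() {
        {
            std::lock_guard<std::mutex> lk(m_);
            stop_ = true;
        }
        queue_cv_.notify_one();
        worker_.join();
    }

    // Blocking execute: returns only after the VM thread has run `op`.
    void execute(std::function<void()> op) {
        if (std::this_thread::get_id() == worker_.get_id()) {
            op();  // nested operation requested by the VM thread itself: run inline
            return;
        }
        std::unique_lock<std::mutex> lk(m_);
        const unsigned long ticket = ++issued_;
        queue_.push_back(std::move(op));
        queue_cv_.notify_one();
        done_cv_.wait(lk, [&] { return completed_ >= ticket; });
    }

private:
    void loop() {
        std::unique_lock<std::mutex> lk(m_);
        for (;;) {
            queue_cv_.wait(lk, [&] { return stop_ || !queue_.empty(); });
            if (queue_.empty()) return;  // stop requested and nothing left to run
            std::function<void()> op = std::move(queue_.front());
            queue_.pop_front();
            lk.unlock();
            op();  // for safepoint operations HotSpot would stop Java threads around this
            lk.lock();
            ++completed_;
            done_cv_.notify_all();
        }
    }

    std::mutex m_;
    std::condition_variable queue_cv_;
    std::condition_variable done_cv_;
    std::deque<std::function<void()>> queue_;
    unsigned long issued_ = 0;
    unsigned long completed_ = 0;
    bool stop_ = false;
    std::thread worker_;  // declared last so the members above exist before it starts
};
```

Operations complete in the order they were queued, so `completed_ >= ticket` is enough for a requester to know its own operation has finished.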

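Looking back at GenCollectedHeap's collect method: it ultimately submits a VM_GenCollectFull operation to the VMThread, and the core of that operation is its doit() method. Generational collection is therefore executed on the VM thread.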

// vm/gc_implementation/shared/vmGCOperations.cpp

void VM_GenCollectFull::doit() {

SvcGCMarker sgcm(SvcGCMarker::FULL);

GenCollectedHeap* gch = GenCollectedHeap::heap();

GCCauseSetter gccs(gch, _gc_cause);

// Collect; must_clear_all_soft_refs() decides whether all soft references are cleared

gch->do_full_collection(gch->must_clear_all_soft_refs(), _max_level);

}

// memory/genCollectedHeap.cpp

void GenCollectedHeap::do_full_collection(bool clear_all_soft_refs,

int max_level) {

int local_max_level;

if (!incremental_collection_will_fail(false /* don't consult_young */) &&

gc_cause() == GCCause::_gc_locker) {

local_max_level = 0;

} else {

local_max_level = max_level;

}

// 具体回收动作

do_collection(true /* full */,

clear_all_soft_refs /* clear_all_soft_refs */,

0 /* size */,

false /* is_tlab */,

local_max_level /* max_level */);

// Hack XXX FIX ME !!!

// A scavenge may not have been attempted, or may have

// been attempted and failed, because the old gen was too full

// Fall back to a full GC that includes the old generation if the scavenge was skipped or failed

if (local_max_level == 0 && gc_cause() == GCCause::_gc_locker &&

incremental_collection_will_fail(false /* don't consult_young */)) {

if (PrintGCDetails) {

gclog_or_tty->print_cr("GC locker: Trying a full collection "

"because scavenge failed");

}

// This time allow the old gen to be collected as well

do_collection(true /* full */,

clear_all_soft_refs /* clear_all_soft_refs */,

0 /* size */,

false /* is_tlab */,

n_gens() - 1 /* max_level */);

}

}

// memory/genCollectedHeap.cpp

void GenCollectedHeap::do_collection(bool full,

bool clear_all_soft_refs,

size_t size,

bool is_tlab,

int max_level) {

bool prepared_for_verification = false;

ResourceMark rm;

DEBUG_ONLY(Thread* my_thread = Thread::current();)

assert(SafepointSynchronize::is_at_safepoint(), "should be at safepoint");

assert(my_thread->is_VM_thread() ||

my_thread->is_ConcurrentGC_thread(),

"incorrect thread type capability");

assert(Heap_lock->is_locked(),

"the requesting thread should have the Heap_lock");

guarantee(!is_gc_active(), "collection is not reentrant");

assert(max_level < n_gens(), "sanity check");

if (GC_locker::check_active_before_gc()) {

return; // GC is disabled (e.g. JNI GetXXXCritical operation)

}

const bool do_clear_all_soft_refs = clear_all_soft_refs ||

collector_policy()->should_clear_all_soft_refs();

ClearedAllSoftRefs casr(do_clear_all_soft_refs, collector_policy());

const size_t metadata_prev_used = MetaspaceAux::allocated_used_bytes();

print_heap_before_gc();

{

FlagSetting fl(_is_gc_active, true);

bool complete = full && (max_level == (n_gens()-1));

const char* gc_cause_prefix = complete ? "Full GC" : "GC";

gclog_or_tty->date_stamp(PrintGC && PrintGCDateStamps);

TraceCPUTime tcpu(PrintGCDetails, true, gclog_or_tty);

GCTraceTime t(GCCauseString(gc_cause_prefix, gc_cause()), PrintGCDetails, false, NULL);

gc_prologue(complete);

increment_total_collections(complete);

size_t gch_prev_used = used();

int starting_level = 0;

if (full) {

// Search for the oldest generation which will collect all younger

// generations, and start collection loop there.

for (int i = max_level; i >= 0; i--) {

if (_gens[i]->full_collects_younger_generations()) {

starting_level = i;

break;

}

}

}

bool must_restore_marks_for_biased_locking = false;

int max_level_collected = starting_level;

for (int i = starting_level; i <= max_level; i++) {

if (_gens[i]->should_collect(full, size, is_tlab)) {

// Collecting the oldest generation: this is a major (full) collection

if (i == n_gens() - 1) { // a major collection is to happen

if (!complete) {

// The full_collections increment was missed above.

increment_total_full_collections();

}

pre_full_gc_dump(NULL); // do any pre full gc dumps

}

// Timer for individual generations. Last argument is false: no CR

// FIXME: We should try to start the timing earlier to cover more of the GC pause

GCTraceTime t1(_gens[i]->short_name(), PrintGCDetails, false, NULL);

TraceCollectorStats tcs(_gens[i]->counters());

TraceMemoryManagerStats tmms(_gens[i]->kind(),gc_cause());

size_t prev_used = _gens[i]->used();

_gens[i]->stat_record()->invocations++;

_gens[i]->stat_record()->accumulated_time.start();

// Must be done anew before each collection because

// a previous collection will do mangling and will

// change top of some spaces.

record_gen_tops_before_GC();

if (PrintGC && Verbose) {

gclog_or_tty->print("level=%d invoke=%d size=" SIZE_FORMAT,

i,

_gens[i]->stat_record()->invocations,

size*HeapWordSize);

} if (VerifyBeforeGC && i >= VerifyGCLevel &&

total_collections() >= VerifyGCStartAt) {

HandleMark hm; // Discard invalid handles created during verification

if (!prepared_for_verification) {

prepare_for_verify();

prepared_for_verification = true;

}

Universe::verify(" VerifyBeforeGC:");

}

COMPILER2_PRESENT(DerivedPointerTable::clear()); if (!must_restore_marks_for_biased_locking &&

_gens[i]->performs_in_place_marking()) {

// We perform this mark word preservation work lazily

// because it's only at this point that we know whether we

// absolutely have to do it; we want to avoid doing it for

// scavenge-only collections where it's unnecessary

must_restore_marks_for_biased_locking = true;

BiasedLocking::preserve_marks();

} // Do collection work

{

// Note on ref discovery: For what appear to be historical reasons,

// GCH enables and disabled (by enqueing) refs discovery.

// In the future this should be moved into the generation's

// collect method so that ref discovery and enqueueing concerns

// are local to a generation. The collect method could return

// an appropriate indication in the case that notification on

// the ref lock was needed. This will make the treatment of

// weak refs more uniform (and indeed remove such concerns

// from GCH). XXX HandleMark hm; // Discard invalid handles created during gc

save_marks(); // save marks for all gens

// We want to discover references, but not process them yet.

// This mode is disabled in process_discovered_references if the

// generation does some collection work, or in

// enqueue_discovered_references if the generation returns

// without doing any work.

ReferenceProcessor* rp = _gens[i]->ref_processor();

// If the discovery of ("weak") refs in this generation is

// atomic wrt other collectors in this configuration, we

// are guaranteed to have empty discovered ref lists.

if (rp->discovery_is_atomic()) {

rp->enable_discovery(true /*verify_disabled*/, true /*verify_no_refs*/);

rp->setup_policy(do_clear_all_soft_refs);

} else {

// collect() below will enable discovery as appropriate

}

// 每个年代的对象各自回收

_gens[i]->collect(full, do_clear_all_soft_refs, size, is_tlab);

if (!rp->enqueuing_is_done()) {

rp->enqueue_discovered_references();

} else {

rp->set_enqueuing_is_done(false);

}

rp->verify_no_references_recorded();

}

max_level_collected = i; // Determine if allocation request was met.

if (size > 0) {

if (!is_tlab || _gens[i]->supports_tlab_allocation()) {

if (size*HeapWordSize <= _gens[i]->unsafe_max_alloc_nogc()) {

size = 0;

}

}

} COMPILER2_PRESENT(DerivedPointerTable::update_pointers()); _gens[i]->stat_record()->accumulated_time.stop(); update_gc_stats(i, full); if (VerifyAfterGC && i >= VerifyGCLevel &&

total_collections() >= VerifyGCStartAt) {

HandleMark hm; // Discard invalid handles created during verification

Universe::verify(" VerifyAfterGC:");

} if (PrintGCDetails) {

gclog_or_tty->print(":");

_gens[i]->print_heap_change(prev_used);

}

}

} // Update "complete" boolean wrt what actually transpired --

// for instance, a promotion failure could have led to

// a whole heap collection.

complete = complete || (max_level_collected == n_gens() - 1); if (complete) { // We did a "major" collection

// FIXME: See comment at pre_full_gc_dump call

post_full_gc_dump(NULL); // do any post full gc dumps

} if (PrintGCDetails) {

print_heap_change(gch_prev_used); // Print metaspace info for full GC with PrintGCDetails flag.

if (complete) {

MetaspaceAux::print_metaspace_change(metadata_prev_used);

}

} for (int j = max_level_collected; j >= 0; j -= 1) {

// Adjust generation sizes.

_gens[j]->compute_new_size();

} if (complete) {

// Delete metaspaces for unloaded class loaders and clean up loader_data graph

ClassLoaderDataGraph::purge();

MetaspaceAux::verify_metrics();

// Resize the metaspace capacity after full collections

MetaspaceGC::compute_new_size();

update_full_collections_completed();

} // Track memory usage and detect low memory after GC finishes

MemoryService::track_memory_usage(); gc_epilogue(complete); if (must_restore_marks_for_biased_locking) {

BiasedLocking::restore_marks();

}

} AdaptiveSizePolicy* sp = gen_policy()->size_policy();

AdaptiveSizePolicyOutput(sp, total_collections()); print_heap_after_gc(); #ifdef TRACESPINNING

ParallelTaskTerminator::print_termination_counts();

#endif

}
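Stripped of timers, logging, verification and reference processing, the generation loop above reduces to a small piece of control flow. The following C++ is a minimal toy model of that flow, not HotSpot code: `ToyGeneration`, `ToyCollectionResult` and `toy_do_collection` are hypothetical names, and the two booleans merely stand in for `full_collects_younger_generations()` and `should_collect(full, size, is_tlab)`.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for a HotSpot Generation: only the two predicates
// that drive do_collection's loop are modeled.
struct ToyGeneration {
  bool full_collects_younger;  // plays the role of full_collects_younger_generations()
  bool wants_collection;       // plays the role of should_collect(full, size, is_tlab)
};

struct ToyCollectionResult {
  std::vector<int> collected_levels;  // levels whose collect() would run
  bool complete;                      // true if the oldest generation counts as collected
};

// Mirrors do_collection's control flow: pick a starting level, collect every
// eligible level up to max_level, then re-derive "complete" from what happened.
ToyCollectionResult toy_do_collection(const std::vector<ToyGeneration>& gens,
                                      bool full, int max_level) {
  const int n_gens = static_cast<int>(gens.size());
  assert(max_level < n_gens && "sanity check");

  bool complete = full && (max_level == n_gens - 1);

  int starting_level = 0;
  if (full) {
    // Oldest generation whose full collection also covers the younger ones.
    for (int i = max_level; i >= 0; i--) {
      if (gens[i].full_collects_younger) {
        starting_level = i;
        break;
      }
    }
  }

  ToyCollectionResult result{{}, false};
  int max_level_collected = starting_level;
  for (int i = starting_level; i <= max_level; i++) {
    if (gens[i].wants_collection) {
      result.collected_levels.push_back(i);
      max_level_collected = i;
    }
  }

  // As in the original: a collection that reached the oldest generation is "complete".
  result.complete = complete || (max_level_collected == n_gens - 1);
  return result;
}
```

With two generations where only the old one reports `full_collects_younger`, a full collection starts directly at level 1, while a minor collection starts at level 0 and only ends up "complete" if the old generation also asks to be collected.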

```cpp
// memory/generation.cpp
void OneContigSpaceCardGeneration::collect(bool   full,
                                           bool   clear_all_soft_refs,
                                           size_t size,
                                           bool   is_tlab) {
  GenCollectedHeap* gch = GenCollectedHeap::heap();

  SpecializationStats::clear();
  // Temporarily expand the span of our ref processor, so
  // refs discovery is over the entire heap, not just this generation
  ReferenceProcessorSpanMutator
    x(ref_processor(), gch->reserved_region());

  STWGCTimer* gc_timer = GenMarkSweep::gc_timer();
  gc_timer->register_gc_start();

  SerialOldTracer* gc_tracer = GenMarkSweep::gc_tracer();
  gc_tracer->report_gc_start(gch->gc_cause(), gc_timer->gc_start());

  // Call into the mark-sweep algorithm (GenMarkSweep, which actually compacts)
  GenMarkSweep::invoke_at_safepoint(_level, ref_processor(), clear_all_soft_refs);

  gc_timer->register_gc_end();

  gc_tracer->report_gc_end(gc_timer->gc_end(), gc_timer->time_partitions());

  SpecializationStats::print();
}
```

```cpp
// memory/genMarkSweep.cpp
void GenMarkSweep::invoke_at_safepoint(int level, ReferenceProcessor* rp, bool clear_all_softrefs) {
  guarantee(level == 1, "We always collect both old and young.");
  assert(SafepointSynchronize::is_at_safepoint(), "must be at a safepoint");

  GenCollectedHeap* gch = GenCollectedHeap::heap();
#ifdef ASSERT
  if (gch->collector_policy()->should_clear_all_soft_refs()) {
    assert(clear_all_softrefs, "Policy should have been checked earlier");
  }
#endif

  // hook up weak ref data so it can be used during Mark-Sweep
  assert(ref_processor() == NULL, "no stomping");
  assert(rp != NULL, "should be non-NULL");
  _ref_processor = rp;
  rp->setup_policy(clear_all_softrefs);

  GCTraceTime t1(GCCauseString("Full GC", gch->gc_cause()), PrintGC && !PrintGCDetails, true, NULL);

  gch->trace_heap_before_gc(_gc_tracer);

  // When collecting the permanent generation Method*s may be moving,
  // so we either have to flush all bcp data or convert it into bci.
  CodeCache::gc_prologue();
  Threads::gc_prologue();

  // Increment the invocation count
  _total_invocations++;

  // Capture heap size before collection for printing.
  size_t gch_prev_used = gch->used();

  // Capture used regions for each generation that will be
  // subject to collection, so that card table adjustments can
  // be made intelligently (see clear / invalidate further below).
  gch->save_used_regions(level);

  allocate_stacks();

  // Phases 1-4:
  // 1. Mark live objects
  // 2. Calculate new addresses
  // 3. Update pointers
  // 4. Move objects to new positions
  mark_sweep_phase1(level, clear_all_softrefs);

  mark_sweep_phase2();

  // Don't add any more derived pointers during phase3
  COMPILER2_PRESENT(assert(DerivedPointerTable::is_active(), "Sanity"));
  COMPILER2_PRESENT(DerivedPointerTable::set_active(false));

  mark_sweep_phase3(level);

  mark_sweep_phase4();

  restore_marks();

  // Set saved marks for allocation profiler (and other things? -- dld)
  // (Should this be in general part?)
  gch->save_marks();

  deallocate_stacks();

  // If compaction completely evacuated all generations younger than this
  // one, then we can clear the card table. Otherwise, we must invalidate
  // it (consider all cards dirty). In the future, we might consider doing
  // compaction within generations only, and doing card-table sliding.
  bool all_empty = true;
  for (int i = 0; all_empty && i < level; i++) {
    Generation* g = gch->get_gen(i);
    all_empty = all_empty && gch->get_gen(i)->used() == 0;
  }
  GenRemSet* rs = gch->rem_set();
  Generation* old_gen = gch->get_gen(level);
  // Clear/invalidate below make use of the "prev_used_regions" saved earlier.
  if (all_empty) {
    // We've evacuated all generations below us.
    rs->clear_into_younger(old_gen);
  } else {
    // Invalidate the cards corresponding to the currently used
    // region and clear those corresponding to the evacuated region.
    rs->invalidate_or_clear(old_gen);
  }

  Threads::gc_epilogue();
  CodeCache::gc_epilogue();
  JvmtiExport::gc_epilogue();

  if (PrintGC && !PrintGCDetails) {
    gch->print_heap_change(gch_prev_used);
  }

  // refs processing: clean slate
  _ref_processor = NULL;

  // Update heap occupancy information which is used as
  // input to soft ref clearing policy at the next gc.
  Universe::update_heap_info_at_gc();

  // Update time of last gc for all generations we collected
  // (which currently is all the generations in the heap).
  // We need to use a monotonically non-decreasing time in ms
  // or we will see time-warp warnings and os::javaTimeMillis()
  // does not guarantee monotonicity.
  jlong now = os::javaTimeNanos() / NANOSECS_PER_MILLISEC;
  gch->update_time_of_last_gc(now);

  gch->trace_heap_after_gc(_gc_tracer);
}
```

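Whew, this is way too complex; I'll take it apart bit by bit when I have time. Broadly, it walks through the steps of a mark-sweep style collection (strictly speaking GenMarkSweep is a mark-compact: phases 2 to 4 compute new addresses, update pointers, and slide the live objects into place), records and prints GC information where needed, and updates the surrounding state. The most important parts are the phases, which can be expanded and read one by one below. Consider this just a starting point.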
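To get a feel for what the four phases do before reading HotSpot's versions, they can be run on a toy heap. The following is a minimal sketch of a sliding mark-compact in the spirit of the classic LISP2 algorithm, under loud assumptions: each `std::vector` slot is one "address", a reference is a slot index, and every name (`ToyObject`, `phase1_mark` through `phase4_move`, `toy_mark_compact`) is hypothetical. Generations, card tables, class unloading and reference processing are left out, and in HotSpot the forwarding address lives in the object header (hence the mark-word preservation and `restore_marks()` above), whereas here it is just a separate field.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical toy heap: a reference is the index of its target slot, kNull for none.
constexpr std::size_t kNull = static_cast<std::size_t>(-1);

struct ToyObject {
  int payload = 0;
  std::vector<std::size_t> refs;  // outgoing references (slot indices)
  bool marked = false;            // phase 1 mark bit
  std::size_t forward = kNull;    // phase 2 forwarding address
};

// Phase 1: mark everything reachable from the roots with an explicit stack
// instead of recursion.
void phase1_mark(std::vector<ToyObject>& heap, const std::vector<std::size_t>& roots) {
  std::vector<std::size_t> stack(roots.begin(), roots.end());
  while (!stack.empty()) {
    std::size_t i = stack.back();
    stack.pop_back();
    if (i == kNull || heap[i].marked) continue;
    heap[i].marked = true;
    for (std::size_t r : heap[i].refs) stack.push_back(r);
  }
}

// Phase 2: compute new addresses by sliding live objects toward slot 0 in their
// original order. Returns the new top (the number of live objects).
std::size_t phase2_compute_addresses(std::vector<ToyObject>& heap) {
  std::size_t free_slot = 0;
  for (ToyObject& obj : heap) {
    if (obj.marked) obj.forward = free_slot++;
  }
  return free_slot;
}

// Phase 3: redirect every root and every field of a live object to the
// forwarding address of its target. Objects have not moved yet.
void phase3_adjust_pointers(std::vector<ToyObject>& heap, std::vector<std::size_t>& roots) {
  for (std::size_t& r : roots) {
    if (r != kNull) r = heap[r].forward;
  }
  for (ToyObject& obj : heap) {
    if (!obj.marked) continue;
    for (std::size_t& r : obj.refs) {
      if (r != kNull) r = heap[r].forward;
    }
  }
}

// Phase 4: move each live object to its forwarding address. Destinations never
// exceed sources, so one ascending pass never clobbers an unmoved live object.
void phase4_move(std::vector<ToyObject>& heap, std::size_t new_top) {
  for (std::size_t i = 0; i < heap.size(); i++) {
    if (!heap[i].marked) continue;
    ToyObject moved = std::move(heap[i]);
    const std::size_t dest = moved.forward;
    assert(dest <= i);
    moved.marked = false;  // reset GC state for the next cycle
    moved.forward = kNull;
    heap[dest] = std::move(moved);
  }
  heap.resize(new_top);  // everything above the new top is now free space
}

// Runs the phases in the same order as invoke_at_safepoint.
void toy_mark_compact(std::vector<ToyObject>& heap, std::vector<std::size_t>& roots) {
  phase1_mark(heap, roots);
  const std::size_t new_top = phase2_compute_addresses(heap);
  phase3_adjust_pointers(heap, roots);
  phase4_move(heap, new_top);
}
```

Note the ordering constraint that also shows up in HotSpot: pointers are fixed up (phase 3) while objects still sit at their old addresses, and only then are objects moved (phase 4).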

```cpp
// genMarkSweep.cpp
void GenMarkSweep::mark_sweep_phase1(int level,
                                  bool clear_all_softrefs) {
  // Recursively traverse all live objects and mark them
  GCTraceTime tm("phase 1", PrintGC && Verbose, true, _gc_timer);
  trace(" 1");

  GenCollectedHeap* gch = GenCollectedHeap::heap();

  // Because follow_root_closure is created statically, cannot
  // use OopsInGenClosure constructor which takes a generation,
  // as the Universe has not been created when the static constructors
  // are run.
  follow_root_closure.set_orig_generation(gch->get_gen(level));

  // Need new claim bits before marking starts.
  ClassLoaderDataGraph::clear_claimed_marks();

  gch->gen_process_strong_roots(level,
                                false, // Younger gens are not roots.
                                true,  // activate StrongRootsScope
                                false, // not scavenging
                                SharedHeap::SO_SystemClasses,
                                &follow_root_closure,
                                true,  // walk code active on stacks
                                &follow_root_closure,
                                &follow_klass_closure);

  // Process reference objects found during marking
  {
    ref_processor()->setup_policy(clear_all_softrefs);
    const ReferenceProcessorStats& stats =
      ref_processor()->process_discovered_references(
        &is_alive, &keep_alive, &follow_stack_closure, NULL, _gc_timer);
    gc_tracer()->report_gc_reference_stats(stats);
  }

  // This is the point where the entire marking should have completed.
  assert(_marking_stack.is_empty(), "Marking should have completed");

  // Unload classes and purge the SystemDictionary.
  bool purged_class = SystemDictionary::do_unloading(&is_alive);

  // Unload nmethods.
  CodeCache::do_unloading(&is_alive, purged_class);

  // Prune dead klasses from subklass/sibling/implementor lists.
  Klass::clean_weak_klass_links(&is_alive);

  // Delete entries for dead interned strings.
  StringTable::unlink(&is_alive);

  // Clean up unreferenced symbols in symbol table.
  SymbolTable::unlink();

  gc_tracer()->report_object_count_after_gc(&is_alive);
}

void GenMarkSweep::mark_sweep_phase2() {
  // Now all live objects are marked, compute the new object addresses.

  // It is imperative that we traverse perm_gen LAST. If dead space is
  // allowed a range of dead object may get overwritten by a dead int
  // array. If perm_gen is not traversed last a Klass* may get
  // overwritten. This is fine since it is dead, but if the class has dead
  // instances we have to skip them, and in order to find their size we
  // need the Klass*!
  //
  // It is not required that we traverse spaces in the same order in
  // phase2, phase3 and phase4, but the ValidateMarkSweep live oops
  // tracking expects us to do so. See comment under phase4.

  GenCollectedHeap* gch = GenCollectedHeap::heap();

  GCTraceTime tm("phase 2", PrintGC && Verbose, true, _gc_timer);
  trace("2");

  gch->prepare_for_compaction();
}

void GenMarkSweep::mark_sweep_phase3(int level) {
  GenCollectedHeap* gch = GenCollectedHeap::heap();

  // Adjust the pointers to reflect the new locations
  GCTraceTime tm("phase 3", PrintGC && Verbose, true, _gc_timer);
  trace("3");

  // Need new claim bits for the pointer adjustment tracing.
  ClassLoaderDataGraph::clear_claimed_marks();

  // Because the closure below is created statically, we cannot
  // use OopsInGenClosure constructor which takes a generation,
  // as the Universe has not been created when the static constructors
  // are run.
  adjust_pointer_closure.set_orig_generation(gch->get_gen(level));

  gch->gen_process_strong_roots(level,
                                false, // Younger gens are not roots.
                                true,  // activate StrongRootsScope
                                false, // not scavenging
                                SharedHeap::SO_AllClasses,
                                &adjust_pointer_closure,
                                false, // do not walk code
                                &adjust_pointer_closure,
                                &adjust_klass_closure);

  // Now adjust pointers in remaining weak roots. (All of which should
  // have been cleared if they pointed to non-surviving objects.)
  CodeBlobToOopClosure adjust_code_pointer_closure(&adjust_pointer_closure,
                                                   /*do_marking=*/ false);
  gch->gen_process_weak_roots(&adjust_pointer_closure,
                              &adjust_code_pointer_closure);

  adjust_marks();
  GenAdjustPointersClosure blk;
  gch->generation_iterate(&blk, true);
}

void GenMarkSweep::mark_sweep_phase4() {
  // All pointers are now adjusted, move objects accordingly

  // It is imperative that we traverse perm_gen first in phase4. All
  // classes must be allocated earlier than their instances, and traversing
  // perm_gen first makes sure that all Klass*s have moved to their new
  // location before any instance does a dispatch through its klass!

  // The ValidateMarkSweep live oops tracking expects us to traverse spaces
  // in the same order in phase2, phase3 and phase4. We don't quite do that
  // here (perm_gen first rather than last), so we tell the validate code
  // to use a higher index (saved from phase2) when verifying perm_gen.
  GenCollectedHeap* gch = GenCollectedHeap::heap();

  GCTraceTime tm("phase 4", PrintGC && Verbose, true, _gc_timer);
  trace("4");

  GenCompactClosure blk;
  gch->generation_iterate(&blk, true);
}
```
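Back in `invoke_at_safepoint`, once phase 4 has returned, the collector decides whether the old generation's cards can simply be cleared (`clear_into_younger`) or must be conservatively dirtied (`invalidate_or_clear`). Below is a toy model of that decision only. Assumptions: the card vector covers exactly the old generation's previously used region, one card per fixed-size chunk, the first `used_cards` cards are occupied after compaction, and `Card` and `toy_update_cards_after_full_gc` are hypothetical; the real `GenRemSet` works on `MemRegion`s and its own card encoding.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical card states; HotSpot's actual card values are not modeled here.
enum class Card : std::uint8_t { Clean, Dirty };

// Toy version of the all_empty / clear / invalidate logic after compaction.
void toy_update_cards_after_full_gc(std::vector<Card>& old_cards,
                                    std::size_t used_cards,
                                    const std::vector<std::size_t>& young_used_bytes) {
  assert(used_cards <= old_cards.size());

  bool all_empty = true;
  for (std::size_t used : young_used_bytes) all_empty = all_empty && used == 0;

  for (std::size_t c = 0; c < old_cards.size(); c++) {
    if (all_empty || c >= used_cards) {
      // No young objects left, or the card's old objects were evacuated:
      // no old-to-young pointer can be recorded here.
      old_cards[c] = Card::Clean;
    } else {
      // Young objects survived, so any occupied old card may point into them.
      old_cards[c] = Card::Dirty;
    }
  }
}
```

Dirtying every occupied card is the conservative choice: the next young collection has to scan those cards, but it can never miss an old-to-young reference.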
