[ICLR'17] DEEPCODER: LEARNING TO WRITE PROGRAMS

Basic Information

- Authors: Matej Balog, Alexander L. Gaunt, Marc Brockschmidt, Sebastian Nowozin, Daniel Tarlow

- Publication: ICLR'17

- Description: Generate code based on input-output examples via neural network techniques

INDUCTIVE PROGRAM SYNTHESIS (IPS)

The Inductive Program Synthesis (IPS) problem is the following: given input-output examples, produce a program that has behavior consistent with the examples.

Building an IPS system requires solving two problems:

- Search problem: to find consistent programs we need to search over a suitable set of possible programs. We need to define the set (i.e., the program space) and the search procedure.

- Ranking problem: if there are multiple programs consistent with the input-output examples, which one do we return?
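The two problems above can be illustrated with a minimal sketch. The three-entry program space and the "shortest program wins" ranking rule are hypothetical choices made for illustration only; they are not taken from the paper.

```python
from typing import Callable, Dict, List, Optional, Tuple

Example = Tuple[List[int], List[int]]  # (input, expected output)

def is_consistent(program: Callable[[List[int]], List[int]],
                  examples: List[Example]) -> bool:
    """Return True if the program reproduces every expected output."""
    return all(program(inp) == out for inp, out in examples)

# Hypothetical toy program space: name -> (function, program length).
PROGRAM_SPACE: Dict[str, Tuple[Callable[[List[int]], List[int]], int]] = {
    "sort": (lambda xs: sorted(xs), 1),
    "reverse": (lambda xs: xs[::-1], 1),
    "sort_then_reverse": (lambda xs: sorted(xs)[::-1], 2),
}

def synthesize(examples: List[Example]) -> Optional[str]:
    """Search: keep every consistent program. Ranking: prefer the shortest."""
    consistent = [(length, name)
                  for name, (fn, length) in PROGRAM_SPACE.items()
                  if is_consistent(fn, examples)]
    return min(consistent)[1] if consistent else None

# Both "reverse" and "sort_then_reverse" fit this example; ranking picks one.
print(synthesize([([1, 2, 3], [3, 2, 1])]))
```

With a single example several programs can be consistent, which is why a ranking rule is needed; adding a second example such as `([3, 1, 2], [3, 2, 1])` leaves only `sort_then_reverse`.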

Domain Specific Languages (DSLs)

- DSLs are programming languages that are suitable for a specialized domain but are more restrictive than full-featured programming languages.

- Restricted DSLs can also enable more efficient special-purpose search algorithms.

- The choice of DSL also affects the difficulty of the ranking problem.

Search Techniques

Techniques for searching for programs consistent with input-output examples:

- Special-purpose algorithm

- Satisfiability Modulo Theories (SMT) solving

Ranking

LEARNING INDUCTIVE PROGRAM SYNTHESIS (LIPS)

The components of LIPS are:

a DSL specification,

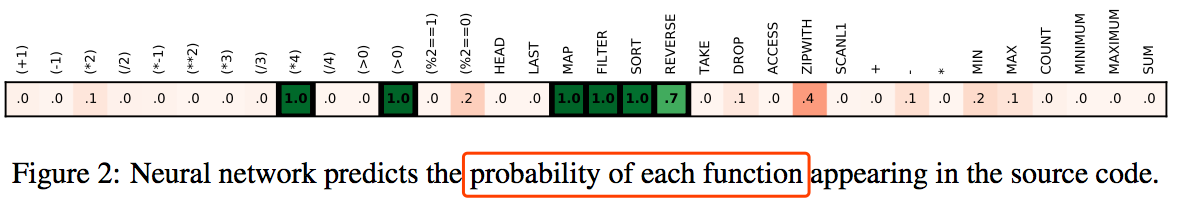

An attribute function A that maps programs P of the DSL to finite attribute vectors a = A(P). (Attribute vectors of different programs need not have equal length.) Attributes serve as the link between the machine learning and the search component of LIPS: the machine learning model predicts a distribution q(a | E), where E is the set of input-output examples, and the search procedure aims to search over programs P as ordered by q(A(P) | E). Thus an attribute is useful if it is both predictable from input-output examples, and if conditioning on its value significantly reduces the effective size of the search space.

Possible attributes are the (perhaps position-dependent) presence or absence of high-level functions (e.g., does the program contain or end in a call to SORT). Other possible attributes include control

flow templates (e.g., the number of loops and conditionals).a data-generation procedure,

Generate a dataset ((P(n), a(n), E(n)))Nn=1 of programs P(n) in the chosen DSL, their attributes a(n), and accompanying input-output examples E(n)).

a machine learning model that maps from input-output examples to program attributes,

Learn a distribution of attributes given input-output examples, q(a | E).

a search procedure that searches program space in an order guided by the model from (3).

Interface with an existing solver, using the predicted q(a | E) to guide the search.
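How attributes link the model to the search can be sketched as follows. This is an illustrative sketch under stated assumptions: the attribute vocabulary `FUNCTIONS`, the representation of a program as a tuple of function names, and the independence assumption used to turn per-attribute probabilities into a score q(A(P) | E) are all hypothetical simplifications.

```python
from math import prod
from typing import List, Sequence, Tuple

Program = Tuple[str, ...]

# Hypothetical attribute vocabulary: one binary attribute per function.
FUNCTIONS = ["SORT", "REVERSE", "SUM"]

def attributes(program: Program) -> List[int]:
    """A(P): binary vector marking which functions the program contains."""
    return [1 if f in program else 0 for f in FUNCTIONS]

def score(program: Program, q: Sequence[float]) -> float:
    """q(A(P) | E), assuming attributes are independent given the examples."""
    return prod(p if a else 1.0 - p for a, p in zip(attributes(program), q))

def search_order(programs: List[Program], q: Sequence[float]) -> List[Program]:
    """Visit candidate programs from most to least probable under q."""
    return sorted(programs, key=lambda prog: score(prog, q), reverse=True)

# q[i] stands in for a model's predicted probability that FUNCTIONS[i] is used.
q = [0.9, 0.8, 0.1]
candidates = [("SUM",), ("SORT", "REVERSE"), ("REVERSE",)]
print(search_order(candidates, q))
```

The search component never needs to know how q was produced; it only consumes the ordering, which is what lets the model be paired with different existing solvers.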

DEEPCODER: Instantiation of LIPS

- DSL AND ATTRIBUTES

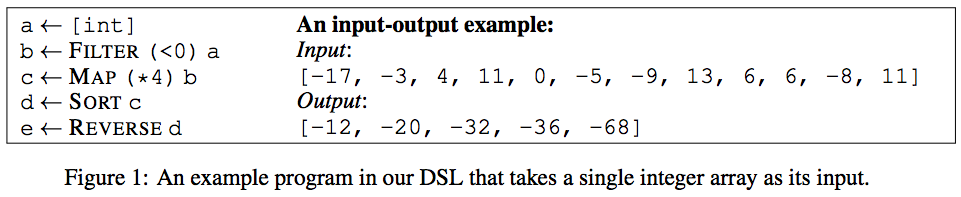

A program in our DSL is a sequence of function calls, where the result of each call initializes a fresh variable that is either a singleton integer or an integer array. Functions can be applied to any of the inputs or previously computed (intermediate) variables. The output of the program is the return value of the last function call, i.e., the last variable. See Fig. 1 for an example program of length T = 4 in our DSL.

Overall, our DSL contains the first-order functions HEAD, LAST, TAKE, DROP, ACCESS, MINIMUM, MAXIMUM, REVERSE, SORT, SUM, and the higher-order functions MAP, FILTER, COUNT, ZIPWITH, SCANL1.
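The execution model described above (each call creates a fresh variable, and the last variable is the output) can be sketched with a small interpreter. The Python semantics given to each function, the step encoding `(name, args)`, and the convention that an integer argument refers to a variable index are assumptions of this sketch; only a subset of the functions is covered and edge cases such as empty arrays are not handled.

```python
from typing import Callable, Dict, List, Sequence, Tuple, Union

Value = Union[int, List[int]]
Step = Tuple[str, tuple]  # (function name, arguments)

# A subset of the DSL functions, with assumed Python semantics.
PRIMITIVES: Dict[str, Callable[..., Value]] = {
    "HEAD": lambda xs: xs[0],
    "LAST": lambda xs: xs[-1],
    "TAKE": lambda n, xs: xs[:n],
    "DROP": lambda n, xs: xs[n:],
    "REVERSE": lambda xs: xs[::-1],
    "SORT": lambda xs: sorted(xs),
    "SUM": lambda xs: sum(xs),
    "MAP": lambda f, xs: [f(x) for x in xs],
    "FILTER": lambda f, xs: [x for x in xs if f(x)],
}

def run(program: Sequence[Step], inputs: Sequence[Value]) -> Value:
    """Execute a program; integer arguments are indices into the variable list."""
    variables: List[Value] = list(inputs)
    for name, args in program:
        resolved = [variables[a] if isinstance(a, int) else a for a in args]
        variables.append(PRIMITIVES[name](*resolved))  # fresh variable
    return variables[-1]  # output is the last variable

# A length-4 program: keep negatives, multiply by 4, sort, reverse.
program = [
    ("FILTER", (lambda x: x < 0, 0)),
    ("MAP", (lambda x: x * 4, 1)),
    ("SORT", (2,)),
    ("REVERSE", (3,)),
]
print(run(program, [[3, -1, -4, 2]]))  # [-4, -16]
```

Because every step may read any earlier variable, a program is a straight-line sequence without explicit control flow, which keeps enumeration over programs simple.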

- DATA GENERATION

- MACHINE LEARNING MODEL

- an encoder: a differentiable mapping from a set of M input-output examples generated by a single program to a latent real-valued vector, and

- a decoder: a differentiable mapping from the latent vector representing a set of M input-output examples to predictions of the ground truth program’s attributes.
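The encoder and decoder roles can be sketched as a forward pass in plain Python. Everything concrete here is an assumption for illustration: each example is taken to be already featurized as a fixed-length numeric vector, the encoder is a single sigmoid layer followed by average pooling over the M examples, the decoder is a single sigmoid layer giving one probability per attribute, and the weights are random rather than trained.

```python
import math
import random
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def dense(weights: Matrix, bias: Vector, vec: Vector,
          activation: Callable[[float], float]) -> Vector:
    """One fully connected layer: activation(W @ vec + b)."""
    return [activation(sum(w * x for w, x in zip(row, vec)) + b)
            for row, b in zip(weights, bias)]

def encode(examples: List[Vector], W: Matrix, b: Vector) -> Vector:
    """Map each example to a hidden vector, then average over the M examples."""
    hidden = [dense(W, b, ex, sigmoid) for ex in examples]
    return [sum(col) / len(hidden) for col in zip(*hidden)]

def decode(latent: Vector, V: Matrix, c: Vector) -> Vector:
    """Map the latent vector to one probability per program attribute."""
    return dense(V, c, latent, sigmoid)

def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

# Hypothetical sizes; untrained random weights stand in for learned parameters.
rng = random.Random(0)
FEATURES, HIDDEN, ATTRIBUTES = 6, 8, 4
W, b = random_matrix(HIDDEN, FEATURES, rng), [0.0] * HIDDEN
V, c = random_matrix(ATTRIBUTES, HIDDEN, rng), [0.0] * ATTRIBUTES

# Two examples, each a hypothetical concatenation of input and output values.
examples = [[3.0, 1.0, 2.0, 1.0, 2.0, 3.0], [5.0, 4.0, 0.0, 0.0, 4.0, 5.0]]
probs = decode(encode(examples, W, b), V, c)
print(probs)
```

Pooling by averaging is one simple way to make the latent vector independent of the order in which the examples are presented.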

- SEARCH

- Depth-first search (DFS)

- “Sort and add” enumeration

- Sketch
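One way to read the "Sort and add" idea is sketched below: sort functions by predicted probability, search using only the most probable ones, and whenever the search fails, add the next most probable function and restart. The toy function set, the restriction to chains of unary list functions, and the bounded enumeration standing in for a real solver are all assumptions of this sketch.

```python
from itertools import product
from typing import Callable, Dict, Iterator, List, Optional, Tuple

Example = Tuple[List[int], List[int]]

# Hypothetical toy function set over integer lists.
FUNCS: Dict[str, Callable[[List[int]], List[int]]] = {
    "SORT": lambda xs: sorted(xs),
    "REVERSE": lambda xs: xs[::-1],
    "DROP1": lambda xs: xs[1:],
    "DOUBLE": lambda xs: [2 * x for x in xs],
}

def run(names: Tuple[str, ...], xs: List[int]) -> List[int]:
    """Apply the named functions left to right."""
    for name in names:
        xs = FUNCS[name](xs)
    return xs

def enumerate_programs(active: List[str], max_len: int) -> Iterator[Tuple[str, ...]]:
    """All function chains over the active set, shortest first."""
    for length in range(1, max_len + 1):
        yield from product(active, repeat=length)

def sort_and_add(examples: List[Example], probs: Dict[str, float],
                 max_len: int = 3) -> Tuple[Optional[Tuple[str, ...]], List[str]]:
    """Grow the active set in order of predicted probability until a program fits."""
    ranked = sorted(probs, key=probs.get, reverse=True)
    for k in range(1, len(ranked) + 1):
        active = ranked[:k]
        for prog in enumerate_programs(active, max_len):
            if all(run(prog, inp) == out for inp, out in examples):
                return prog, active
    return None, ranked

# probs stands in for the model's per-function predictions.
probs = {"DOUBLE": 0.9, "SORT": 0.8, "REVERSE": 0.7, "DROP1": 0.1}
prog, active = sort_and_add([([3, 1, 2], [6, 4, 2])], probs)
print(prog, active)
```

In this run the unlikely function `DROP1` is never added to the active set, so the enumeration covers a much smaller space than it would with all four functions.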

- TRAINING LOSS FUNCTION

Negative cross entropy loss
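For binary attributes (a function is present or absent), a cross entropy loss can be sketched as below. Treating each attribute as an independent binary label, averaging over attributes, and clipping probabilities with `eps` are assumptions of this sketch rather than details stated above.

```python
import math
from typing import Sequence

def binary_cross_entropy(pred: Sequence[float], target: Sequence[int],
                         eps: float = 1e-12) -> float:
    """Mean cross entropy between predicted probabilities and 0/1 attributes."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return total / len(pred)

# Ground truth: first attribute present, second absent.
target = [1, 0]
print(binary_cross_entropy([0.9, 0.1], target))  # confident and correct: small loss
print(binary_cross_entropy([0.1, 0.9], target))  # confident and wrong: large loss
```

Minimizing this quantity pushes each predicted probability toward the corresponding ground truth attribute of the program that generated the examples.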

Implementation
