Organization SYMMETRIC MULTIPROCESSORS

COMPUTER ORGANIZATION AND ARCHITECTURE DESIGNING FOR PERFORMANCE NINTH EDITION

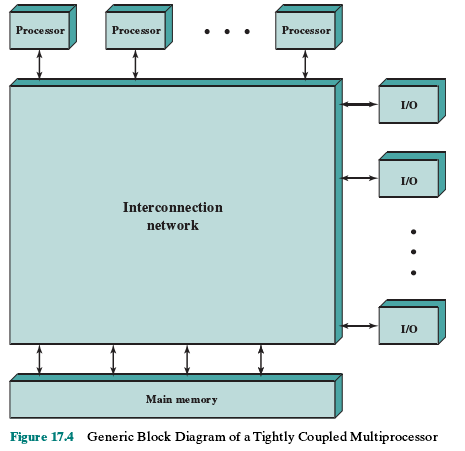

Figure 17.4 depicts in general terms the organization of a multiprocessor system. There are two or more processors. Each processor is self-contained, including a control unit, ALU, registers, and, typically, one or more levels of cache. Each processor has access to a shared main memory and the I/O devices through some form of interconnection mechanism. The processors can communicate with each other through memory (messages and status information left in common data areas). It may also be possible for processors to exchange signals directly. The memory is often organized so that multiple simultaneous accesses to separate blocks of memory are possible. In some configurations, each processor may also have its own private main memory and I/O channels in addition to the shared resources.
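Communication through a common data area can be sketched in software. The following is only an analogy, assuming two Python threads stand in for two processors and a lock-protected object stands in for the shared memory region; the names `Mailbox`, `post`, `wait_for_message`, and `run_demo` are hypothetical and do not come from the text.

```python
import threading


class Mailbox:
    """Hypothetical common data area that both 'processors' can access."""

    def __init__(self):
        self._lock = threading.Lock()
        self._ready = threading.Condition(self._lock)
        self._message = None

    def post(self, message):
        # Leave a message in the shared area and wake any waiting reader.
        with self._ready:
            self._message = message
            self._ready.notify_all()

    def wait_for_message(self, timeout=5.0):
        # Block until a message is present (or the timeout expires).
        with self._ready:
            self._ready.wait_for(lambda: self._message is not None,
                                 timeout=timeout)
            return self._message


def run_demo():
    mailbox = Mailbox()
    received = []

    def consumer():
        received.append(mailbox.wait_for_message())

    def producer():
        mailbox.post("status: job 7 done")

    workers = [threading.Thread(target=consumer),
               threading.Thread(target=producer)]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return received


if __name__ == "__main__":
    print(run_demo())
```

The lock plays the role that hardware arbitration plays for real shared memory: only one party updates the common area at a time.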

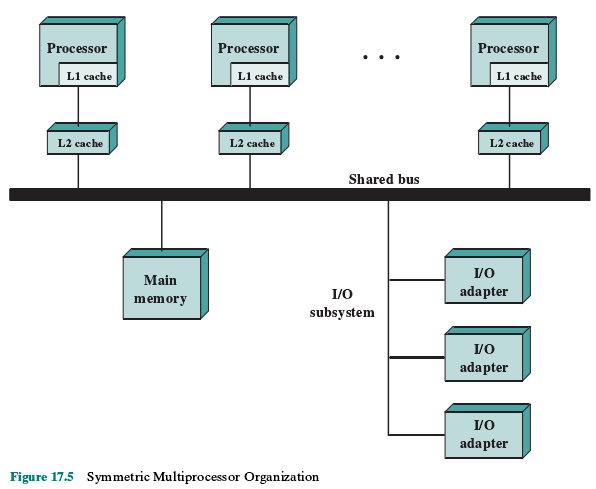

The most common organization for personal computers, workstations, and servers is the time-shared bus. The time-shared bus is the simplest mechanism for constructing a multiprocessor system (Figure 17.5). The structure and interfaces are basically the same as for a single-processor system that uses a bus interconnection. The bus consists of control, address, and data lines. To facilitate DMA transfers from I/O subsystems to processors, the following features are provided:

• Addressing: It must be possible to distinguish modules on the bus to determine the source and destination of data.

• Arbitration: Any I/O module can temporarily function as “master.” A mechanism is provided to arbitrate competing requests for bus control, using some sort of priority scheme.

• Time-sharing: When one module is controlling the bus, other modules are locked out and must, if necessary, suspend operation until bus access is achieved.
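Arbitration and time-sharing can be illustrated with a small model. This is a minimal sketch, assuming a fixed-priority scheme in which a lower number means higher priority; the text only says "some sort of priority scheme", so the policy, the class `BusArbiter`, and the module names are all hypothetical.

```python
class BusArbiter:
    """Toy fixed-priority arbiter (assumption: lower number wins)."""

    def __init__(self):
        self.master = None   # module currently controlling the bus
        self.pending = {}    # module name -> priority of its request

    def request(self, module, priority):
        # A module asks for control of the bus.
        self.pending[module] = priority

    def grant(self):
        # Time-sharing: if the bus is busy, waiting modules stay locked out.
        if self.master is None and self.pending:
            winner = min(self.pending, key=lambda m: (self.pending[m], m))
            del self.pending[winner]
            self.master = winner
        return self.master

    def release(self, module):
        if self.master != module:
            raise ValueError(f"{module} does not control the bus")
        self.master = None


def run_demo():
    arbiter = BusArbiter()
    arbiter.request("disk_dma", priority=2)
    arbiter.request("cpu0", priority=1)
    arbiter.request("net_dma", priority=3)
    order = []
    while arbiter.pending or arbiter.master is not None:
        master = arbiter.grant()
        order.append(master)      # the master performs its transfer here
        arbiter.release(master)
    return order


if __name__ == "__main__":
    print(run_demo())
```

Calling `grant()` while a master holds the bus returns the current master unchanged, which models the lockout described under time-sharing.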

These uniprocessor features are directly usable in an SMP organization. In this latter case, there are now multiple processors as well as multiple I/O processors all attempting to gain access to one or more memory modules via the bus.

The bus organization has several attractive features:

• Simplicity: This is the simplest approach to multiprocessor organization. The physical interface and the addressing, arbitration, and time-sharing logic of each processor remain the same as in a single-processor system.

• Flexibility: It is generally easy to expand the system by attaching more processors to the bus.

• Reliability: The bus is essentially a passive medium, and the failure of any attached device should not cause failure of the whole system.

The main drawback to the bus organization is performance. All memory references pass through the common bus. Thus, the bus cycle time limits the speed of the system. To improve performance, it is desirable to equip each processor with a cache memory. This should reduce the number of bus accesses dramatically. Typically, workstation and PC SMPs have two levels of cache, with the L1 cache internal (same chip as the processor) and the L2 cache either internal or external. Some processors now employ an L3 cache as well.
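A rough back-of-the-envelope model shows why caches matter here. The sketch below assumes every cache miss costs exactly one bus cycle and ignores write-backs and coherence traffic; the function name `bus_utilization` and all numbers are illustrative assumptions, not figures from the text.

```python
def bus_utilization(processors, refs_per_sec, hit_rate, bus_cycle_s):
    """Fraction of bus capacity demanded under a simplified model.

    Assumption: only cache misses reach the bus, one bus cycle each.
    A result above 1.0 means the bus is oversubscribed.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    misses_per_sec = processors * refs_per_sec * (1.0 - hit_rate)
    return misses_per_sec * bus_cycle_s


if __name__ == "__main__":
    # Illustrative values: 4 processors, 100 million references per second
    # each, and a 10 ns bus cycle.
    no_cache = bus_utilization(4, 100e6, 0.0, 10e-9)
    with_cache = bus_utilization(4, 100e6, 0.95, 10e-9)
    print(f"no cache:   {no_cache:.2f} of bus capacity")
    print(f"95% hits:   {with_cache:.2f} of bus capacity")
```

Under these assumed numbers, demand without caches is several times what the bus can supply, while a 95% hit rate brings it down to a fraction of capacity.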

The use of caches introduces some new design considerations. Because each local cache contains an image of a portion of memory, if a word is altered in one cache, it could conceivably invalidate a word in another cache. To prevent this, the other processors must be alerted that an update has taken place. This problem is known as the cache coherence problem and is typically addressed in hardware rather than by the operating system. We address this issue in Section 17.4.
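The idea of alerting other caches can be made concrete with a toy model. This is a minimal sketch of one possible approach, write-through with invalidation broadcast over the bus; it is not necessarily the scheme covered in Section 17.4, and the classes `Bus` and `Cache` are hypothetical.

```python
class Bus:
    """Toy shared bus connecting main memory and all caches."""

    def __init__(self):
        self.memory = {}   # address -> value in main memory
        self.caches = []   # every cache attached to the bus

    def attach(self, cache):
        self.caches.append(cache)

    def broadcast_invalidate(self, address, origin):
        # Alert every other cache that its copy of this word is stale.
        for cache in self.caches:
            if cache is not origin:
                cache.lines.pop(address, None)


class Cache:
    """Toy per-processor cache (assumption: write-through policy)."""

    def __init__(self, bus):
        self.bus = bus
        self.lines = {}    # address -> locally cached value
        bus.attach(self)

    def read(self, address):
        if address not in self.lines:            # miss: fetch over the bus
            self.lines[address] = self.bus.memory.get(address, 0)
        return self.lines[address]

    def write(self, address, value):
        self.bus.broadcast_invalidate(address, origin=self)
        self.lines[address] = value
        self.bus.memory[address] = value         # write through to memory


if __name__ == "__main__":
    bus = Bus()
    cache0, cache1 = Cache(bus), Cache(bus)
    bus.memory[0x10] = 1
    cache0.read(0x10)
    cache1.read(0x10)        # both caches now hold the word
    cache0.write(0x10, 2)    # cache1's copy is invalidated
    print(cache1.read(0x10)) # cache1 misses and refetches the new value
```

Without the invalidation step, `cache1` would keep returning the old value after `cache0` writes, which is exactly the inconsistency the coherence problem describes.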
