【Azure 存储服务】Hadoop集群中使用ADLS(Azure Data Lake Storage)过程中遇见执行PUT操作报错

问题描述

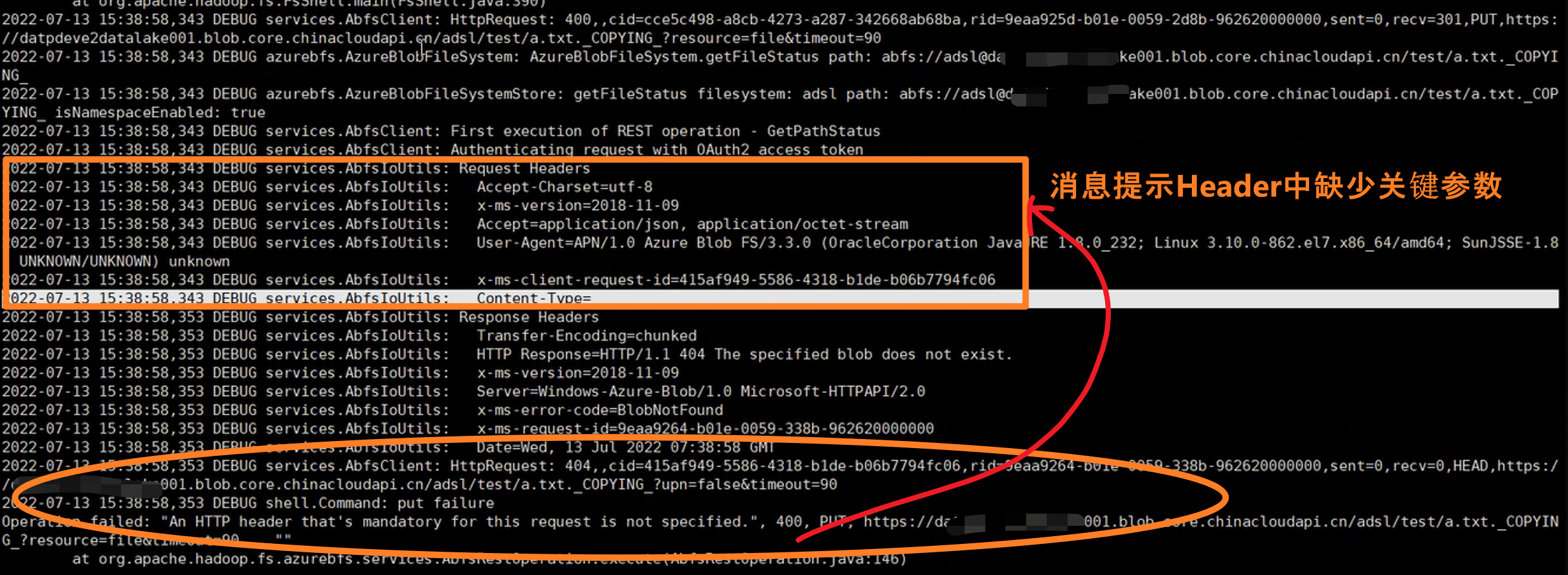

在Hadoop集中中,使用ADLS 作为数据源,在执行PUT操作(上传文件到ADLS中),遇见 400错误【put: Operation failed: "An HTTP header that's mandatory for this request is not specified.", 400】

启用Debug输出详细日志:

错误消息文本内容:

[hdfs@hadoop001 ~]$ hadoop fs -put a.txt abfs://adsl@xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/test/a.txt

22/07/13 15:46:05 DEBUG util.Shell: setsid exited with exit code 0

22/07/13 15:46:05 DEBUG conf.Configuration: parsing URL jar:file:/usr/hdp/3.1.4.0-315/hadoop/hadoop-common-3.1.1.3.1.4.0-315.jar!/core-default.xml

22/07/13 15:46:05 DEBUG conf.Configuration: parsing input stream sun.net.www.protocol.jar.JarURLConnection$JarURLInputStream@4fe3c938

22/07/13 15:46:05 DEBUG conf.Configuration: parsing URL file:/etc/hadoop/3.1.4.0-315/0/core-site.xml

22/07/13 15:46:05 DEBUG conf.Configuration: parsing input stream java.io.BufferedInputStream@467aecef

22/07/13 15:46:05 DEBUG security.SecurityUtil: Setting hadoop.security.token.service.use_ip to true

22/07/13 15:46:05 DEBUG security.Groups: Creating new Groups object

22/07/13 15:46:05 DEBUG util.NativeCodeLoader: Trying to load the custom-built native-hadoop library...

22/07/13 15:46:05 DEBUG util.NativeCodeLoader: Loaded the native-hadoop library

22/07/13 15:46:05 DEBUG security.JniBasedUnixGroupsMapping: Using JniBasedUnixGroupsMapping for Group resolution

22/07/13 15:46:05 DEBUG security.JniBasedUnixGroupsMappingWithFallback: Group mapping impl=org.apache.hadoop.security.JniBasedUnixGroupsMapping

22/07/13 15:46:05 DEBUG security.Groups: Group mapping impl=org.apache.hadoop.security.JniBasedUnixGroupsMappingWithFallback; cacheTimeout=300000; warningDeltaMs=5000

22/07/13 15:46:06 DEBUG core.Tracer: sampler.classes = ; loaded no samplers

22/07/13 15:46:06 DEBUG core.Tracer: span.receiver.classes = ; loaded no span receivers

22/07/13 15:46:06 DEBUG security.UserGroupInformation: hadoop login

22/07/13 15:46:06 DEBUG security.UserGroupInformation: hadoop login commit

22/07/13 15:46:06 DEBUG security.UserGroupInformation: using local user:UnixPrincipal: hdfs

22/07/13 15:46:06 DEBUG security.UserGroupInformation: Using user: "UnixPrincipal: hdfs" with name hdfs

22/07/13 15:46:06 DEBUG security.UserGroupInformation: User entry: "hdfs"

22/07/13 15:46:06 DEBUG security.UserGroupInformation: UGI loginUser:hdfs (auth:SIMPLE)

22/07/13 15:46:06 DEBUG core.Tracer: sampler.classes = ; loaded no samplers

22/07/13 15:46:06 DEBUG core.Tracer: span.receiver.classes = ; loaded no span receivers

22/07/13 15:46:06 DEBUG fs.FileSystem: Loading filesystems

22/07/13 15:46:06 DEBUG fs.FileSystem: file:// = class org.apache.hadoop.fs.LocalFileSystem from /usr/hdp/3.1.4.0-315/hadoop/hadoop-common-3.1.1.3.1.4.0-315.jar

22/07/13 15:46:06 DEBUG fs.FileSystem: viewfs:// = class org.apache.hadoop.fs.viewfs.ViewFileSystem from /usr/hdp/3.1.4.0-315/hadoop/hadoop-common-3.1.1.3.1.4.0-315.jar

22/07/13 15:46:06 DEBUG fs.FileSystem: har:// = class org.apache.hadoop.fs.HarFileSystem from /usr/hdp/3.1.4.0-315/hadoop/hadoop-common-3.1.1.3.1.4.0-315.jar

22/07/13 15:46:06 DEBUG fs.FileSystem: http:// = class org.apache.hadoop.fs.http.HttpFileSystem from /usr/hdp/3.1.4.0-315/hadoop/hadoop-common-3.1.1.3.1.4.0-315.jar

22/07/13 15:46:06 DEBUG fs.FileSystem: https:// = class org.apache.hadoop.fs.http.HttpsFileSystem from /usr/hdp/3.1.4.0-315/hadoop/hadoop-common-3.1.1.3.1.4.0-315.jar

22/07/13 15:46:06 DEBUG fs.FileSystem: hdfs:// = class org.apache.hadoop.hdfs.DistributedFileSystem from /usr/hdp/3.1.4.0-315/hadoop-hdfs/hadoop-hdfs-client-3.1.1.3.1.4.0-315.jar

22/07/13 15:46:06 DEBUG fs.FileSystem: webhdfs:// = class org.apache.hadoop.hdfs.web.WebHdfsFileSystem from /usr/hdp/3.1.4.0-315/hadoop-hdfs/hadoop-hdfs-client-3.1.1.3.1.4.0-315.jar

22/07/13 15:46:06 DEBUG fs.FileSystem: swebhdfs:// = class org.apache.hadoop.hdfs.web.SWebHdfsFileSystem from /usr/hdp/3.1.4.0-315/hadoop-hdfs/hadoop-hdfs-client-3.1.1.3.1.4.0-315.jar

22/07/13 15:46:06 DEBUG fs.FileSystem: gs:// = class com.google.cloud.hadoop.fs.gcs.GoogleHadoopFileSystem from /usr/hdp/3.1.4.0-315/hadoop-mapreduce/gcs-connector-1.9.10.3.1.4.0-315-shaded.jar

22/07/13 15:46:06 DEBUG fs.FileSystem: s3n:// = class org.apache.hadoop.fs.s3native.NativeS3FileSystem from /usr/hdp/3.1.4.0-315/hadoop-mapreduce/hadoop-aws-3.1.1.3.1.4.0-315.jar

22/07/13 15:46:06 DEBUG fs.FileSystem: Looking for FS supporting abfs

22/07/13 15:46:06 DEBUG fs.FileSystem: looking for configuration option fs.abfs.impl

22/07/13 15:46:06 DEBUG fs.FileSystem: Filesystem abfs defined in configuration option

22/07/13 15:46:06 DEBUG fs.FileSystem: FS for abfs is class org.apache.hadoop.fs.azurebfs.AzureBlobFileSystem

22/07/13 15:46:06 DEBUG azurebfs.AzureBlobFileSystem: Initializing AzureBlobFileSystem for abfs://adsl@xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/test/a.txt

22/07/13 15:46:06 DEBUG security.Groups: GroupCacheLoader - load.

22/07/13 15:46:06 WARN utils.SSLSocketFactoryEx: Failed to load OpenSSL. Falling back to the JSSE default.

22/07/13 15:46:06 DEBUG utils.SSLSocketFactoryEx: Removed Cipher - TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384

22/07/13 15:46:06 DEBUG utils.SSLSocketFactoryEx: Removed Cipher - TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256

22/07/13 15:46:06 DEBUG utils.SSLSocketFactoryEx: Removed Cipher - TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384

22/07/13 15:46:06 DEBUG utils.SSLSocketFactoryEx: Removed Cipher - TLS_RSA_WITH_AES_256_GCM_SHA384

22/07/13 15:46:06 DEBUG utils.SSLSocketFactoryEx: Removed Cipher - TLS_ECDH_ECDSA_WITH_AES_256_GCM_SHA384

22/07/13 15:46:06 DEBUG utils.SSLSocketFactoryEx: Removed Cipher - TLS_ECDH_RSA_WITH_AES_256_GCM_SHA384

22/07/13 15:46:06 DEBUG utils.SSLSocketFactoryEx: Removed Cipher - TLS_DHE_RSA_WITH_AES_256_GCM_SHA384

22/07/13 15:46:06 DEBUG utils.SSLSocketFactoryEx: Removed Cipher - TLS_DHE_DSS_WITH_AES_256_GCM_SHA384

22/07/13 15:46:06 DEBUG utils.SSLSocketFactoryEx: Removed Cipher - TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256

22/07/13 15:46:06 DEBUG utils.SSLSocketFactoryEx: Removed Cipher - TLS_RSA_WITH_AES_128_GCM_SHA256

22/07/13 15:46:06 DEBUG utils.SSLSocketFactoryEx: Removed Cipher - TLS_ECDH_ECDSA_WITH_AES_128_GCM_SHA256

22/07/13 15:46:06 DEBUG utils.SSLSocketFactoryEx: Removed Cipher - TLS_ECDH_RSA_WITH_AES_128_GCM_SHA256

22/07/13 15:46:06 DEBUG utils.SSLSocketFactoryEx: Removed Cipher - TLS_DHE_RSA_WITH_AES_128_GCM_SHA256

22/07/13 15:46:06 DEBUG utils.SSLSocketFactoryEx: Removed Cipher - TLS_DHE_DSS_WITH_AES_128_GCM_SHA256

22/07/13 15:46:06 DEBUG services.AbfsClientThrottlingIntercept: Client-side throttling is enabled for the ABFS file system.

22/07/13 15:46:06 DEBUG azurebfs.AzureBlobFileSystem: AzureBlobFileSystem.getFileStatus path: abfs://adsl@xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/test/a.txt

22/07/13 15:46:06 DEBUG azurebfs.AzureBlobFileSystem: ABFS authorizer is not initialized. No authorization check will be performed.

22/07/13 15:46:06 DEBUG azurebfs.AzureBlobFileSystemStore: Get root ACL status

22/07/13 15:46:06 DEBUG oauth2.AccessTokenProvider: AADToken: no token. Returning expiring=true

22/07/13 15:46:06 DEBUG oauth2.AccessTokenProvider: AAD Token is missing or expired: Calling refresh-token from abstract base class

22/07/13 15:46:06 DEBUG oauth2.AccessTokenProvider: AADToken: refreshing client-credential based token

22/07/13 15:46:06 DEBUG oauth2.AzureADAuthenticator: AADToken: starting to fetch token using client creds for client ID 0392543e-5eab-4de2-881b-9bd8a9fe9deb

22/07/13 15:46:06 DEBUG oauth2.AzureADAuthenticator: Requesting an OAuth token by POST to https://login.partner.microsoftonline.cn/fc54511d-de79-4bae-bfc9-3a42945d1b27/oauth2/token

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: Request Headers

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: Connection=close

22/07/13 15:46:06 DEBUG oauth2.AzureADAuthenticator: Response 200

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: Response Headers

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: HTTP Response=HTTP/1.1 200 OK

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: x-ms-ests-server=2.1.13156.10 - CNN2LR1 ProdSlices

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: X-Content-Type-Options=nosniff

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: Connection=close

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: Pragma=no-cache

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: P3P=CP="DSP CUR OTPi IND OTRi ONL FIN"

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: Date=Wed, 13 Jul 2022 07:46:06 GMT

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: Strict-Transport-Security=max-age=31536000; includeSubDomains

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: Cache-Control=no-store, no-cache

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: Set-Cookie=*cookie info*

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: Expires=-1

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: Content-Length=1427

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: X-XSS-Protection=0

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: x-ms-request-id=b63779e3-ec7a-4d78-a950-fc5cd47b2f01

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: Content-Type=application/json; charset=utf-8

22/07/13 15:46:06 DEBUG oauth2.AzureADAuthenticator: AADToken: fetched token with expiry Wed Jul 13 16:46:05 CST 2022

22/07/13 15:46:06 DEBUG services.AbfsClient: Authenticating request with OAuth2 access token

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: Request Headers

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: Accept-Charset=utf-8

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: x-ms-version=2018-11-09

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: Accept=application/json, application/octet-stream

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: User-Agent=Azure Blob FS/3.1.1.3.1.4.0-315 (JavaJRE 1.8.0_232; Linux 3.10.0-862.el7.x86_64; SunJSSE-1.8) User-Agent: APN/1.0 Hortonworks/1.0 HDP/3.1.4.0-315

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: x-ms-client-request-id=14467eed-4c13-4e36-9a5d-35603fe87d0a

22/07/13 15:46:06 DEBUG services.AbfsIoUtils: Content-Type=

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Response Headers

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: HTTP Response=HTTP/1.1 200 OK

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-lease-status=unlocked

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-version=2018-11-09

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Server=Windows-Azure-Blob/1.0 Microsoft-HTTPAPI/2.0

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-lease-state=available

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Last-Modified=Mon, 11 Jul 2022 08:15:08 GMT

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Date=Wed, 13 Jul 2022 07:46:06 GMT

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-blob-type=BlockBlob

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Accept-Ranges=bytes

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-server-encrypted=true

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-access-tier-inferred=true

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-meta-hdi_isfolder=true

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-access-tier=Hot

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: ETag="0x8DA631578216222"

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-creation-time=Mon, 11 Jul 2022 08:15:08 GMT

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Content-Length=0

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-request-id=4a211e55-f01e-0058-388c-9679fc000000

22/07/13 15:46:07 DEBUG services.AbfsClient: HttpRequest: 200,,cid=14467eed-4c13-4e36-9a5d-35603fe87d0a,rid=4a211e55-f01e-0058-388c-9679fc000000,sent=0,recv=0,HEAD,https://xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/adsl//?upn=false&action=getAccessControl&timeout=90

22/07/13 15:46:07 DEBUG azurebfs.AzureBlobFileSystemStore: getFileStatus filesystem: adsl path: abfs://adsl@xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/test/a.txt isNamespaceEnabled: true

22/07/13 15:46:07 DEBUG services.AbfsClient: Authenticating request with OAuth2 access token

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Request Headers

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Accept-Charset=utf-8

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-version=2018-11-09

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Accept=application/json, application/octet-stream

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: User-Agent=Azure Blob FS/3.1.1.3.1.4.0-315 (JavaJRE 1.8.0_232; Linux 3.10.0-862.el7.x86_64; SunJSSE-1.8) User-Agent: APN/1.0 Hortonworks/1.0 HDP/3.1.4.0-315

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-client-request-id=756615aa-bed9-4487-88ae-b69f859f0b51

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Content-Type=

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Response Headers

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Transfer-Encoding=chunked

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: HTTP Response=HTTP/1.1 404 The specified blob does not exist.

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-version=2018-11-09

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Server=Windows-Azure-Blob/1.0 Microsoft-HTTPAPI/2.0

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-error-code=BlobNotFound

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-request-id=4a211e7e-f01e-0058-5d8c-9679fc000000

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Date=Wed, 13 Jul 2022 07:46:06 GMT

22/07/13 15:46:07 DEBUG services.AbfsClient: HttpRequest: 404,,cid=756615aa-bed9-4487-88ae-b69f859f0b51,rid=4a211e7e-f01e-0058-5d8c-9679fc000000,sent=0,recv=0,HEAD,https://xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/adsl/test/a.txt?upn=false&timeout=90

22/07/13 15:46:07 DEBUG fs.FileSystem: Looking for FS supporting file

22/07/13 15:46:07 DEBUG fs.FileSystem: looking for configuration option fs.file.impl

22/07/13 15:46:07 DEBUG fs.FileSystem: Looking in service filesystems for implementation class

22/07/13 15:46:07 DEBUG fs.FileSystem: FS for file is class org.apache.hadoop.fs.LocalFileSystem

22/07/13 15:46:07 DEBUG azurebfs.AzureBlobFileSystem: AzureBlobFileSystem.getFileStatus path: abfs://adsl@xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/test

22/07/13 15:46:07 DEBUG azurebfs.AzureBlobFileSystem: ABFS authorizer is not initialized. No authorization check will be performed.

22/07/13 15:46:07 DEBUG azurebfs.AzureBlobFileSystemStore: getFileStatus filesystem: adsl path: abfs://adsl@xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/test isNamespaceEnabled: true

22/07/13 15:46:07 DEBUG services.AbfsClient: Authenticating request with OAuth2 access token

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Request Headers

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Accept-Charset=utf-8

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-version=2018-11-09

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Accept=application/json, application/octet-stream

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: User-Agent=Azure Blob FS/3.1.1.3.1.4.0-315 (JavaJRE 1.8.0_232; Linux 3.10.0-862.el7.x86_64; SunJSSE-1.8) User-Agent: APN/1.0 Hortonworks/1.0 HDP/3.1.4.0-315

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-client-request-id=b48f18e8-ba8e-4a44-956f-5ef889b828e5

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Content-Type=

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Response Headers

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: HTTP Response=HTTP/1.1 200 OK

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-lease-status=unlocked

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-version=2018-11-09

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Server=Windows-Azure-Blob/1.0 Microsoft-HTTPAPI/2.0

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-lease-state=available

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Last-Modified=Tue, 12 Jul 2022 10:03:44 GMT

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Date=Wed, 13 Jul 2022 07:46:06 GMT

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-blob-type=BlockBlob

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Accept-Ranges=bytes

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-server-encrypted=true

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-access-tier-inferred=true

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-meta-hdi_isfolder=true

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-access-tier=Hot

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Cache-Control=max-age=0

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: ETag="0x8DA63EDCE5D3F3C"

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-creation-time=Tue, 12 Jul 2022 10:03:44 GMT

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Content-Length=0

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-request-id=4a211e93-f01e-0058-718c-9679fc000000

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Content-Type=application/octet-stream

22/07/13 15:46:07 DEBUG services.AbfsClient: HttpRequest: 200,,cid=b48f18e8-ba8e-4a44-956f-5ef889b828e5,rid=4a211e93-f01e-0058-718c-9679fc000000,sent=0,recv=0,HEAD,https://xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/adsl/test?upn=false&timeout=90

22/07/13 15:46:07 DEBUG azurebfs.AzureBlobFileSystem: AzureBlobFileSystem.getFileStatus path: abfs://adsl@xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/test/a.txt._COPYING_

22/07/13 15:46:07 DEBUG azurebfs.AzureBlobFileSystem: ABFS authorizer is not initialized. No authorization check will be performed.

22/07/13 15:46:07 DEBUG azurebfs.AzureBlobFileSystemStore: getFileStatus filesystem: adsl path: abfs://adsl@xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/test/a.txt._COPYING_ isNamespaceEnabled: true

22/07/13 15:46:07 DEBUG services.AbfsClient: Authenticating request with OAuth2 access token

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Request Headers

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Accept-Charset=utf-8

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-version=2018-11-09

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Accept=application/json, application/octet-stream

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: User-Agent=Azure Blob FS/3.1.1.3.1.4.0-315 (JavaJRE 1.8.0_232; Linux 3.10.0-862.el7.x86_64; SunJSSE-1.8) User-Agent: APN/1.0 Hortonworks/1.0 HDP/3.1.4.0-315

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-client-request-id=8a6491b6-e13e-4d4e-be3b-8d183c727442

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Content-Type=

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Response Headers

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Transfer-Encoding=chunked

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: HTTP Response=HTTP/1.1 404 The specified blob does not exist.

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-version=2018-11-09

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Server=Windows-Azure-Blob/1.0 Microsoft-HTTPAPI/2.0

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-error-code=BlobNotFound

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-request-id=4a211ea5-f01e-0058-7f8c-9679fc000000

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Date=Wed, 13 Jul 2022 07:46:06 GMT

22/07/13 15:46:07 DEBUG services.AbfsClient: HttpRequest: 404,,cid=8a6491b6-e13e-4d4e-be3b-8d183c727442,rid=4a211ea5-f01e-0058-7f8c-9679fc000000,sent=0,recv=0,HEAD,https://xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/adsl/test/a.txt._COPYING_?upn=false&timeout=90

22/07/13 15:46:07 DEBUG azurebfs.AzureBlobFileSystem: AzureBlobFileSystem.create path: abfs://adsl@xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/test/a.txt._COPYING_ permission: { masked: rw-r--r--, unmasked: rw-rw-rw- } overwrite: true bufferSize: 33554432

22/07/13 15:46:07 DEBUG azurebfs.AzureBlobFileSystem: ABFS authorizer is not initialized. No authorization check will be performed.

22/07/13 15:46:07 DEBUG azurebfs.AzureBlobFileSystemStore: createFile filesystem: adsl path: abfs://adsl@xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/test/a.txt._COPYING_ overwrite: true permission: { masked: rw-r--r--, unmasked: rw-rw-rw- } umask: ----w--w- isNamespaceEnabled: true

22/07/13 15:46:07 DEBUG services.AbfsClient: Authenticating request with OAuth2 access token

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Request Headers

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-umask=0022

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Accept-Charset=utf-8

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-version=2018-11-09

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Accept=application/json, application/octet-stream

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: User-Agent=Azure Blob FS/3.1.1.3.1.4.0-315 (JavaJRE 1.8.0_232; Linux 3.10.0-862.el7.x86_64; SunJSSE-1.8) User-Agent: APN/1.0 Hortonworks/1.0 HDP/3.1.4.0-315

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-permissions=0644

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-client-request-id=bf71e98f-886d-4529-b62b-7898c655fadf

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Content-Type=

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Response Headers

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: HTTP Response=HTTP/1.1 400 An HTTP header that's mandatory for this request is not specified.

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-version=2018-11-09

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Server=Windows-Azure-Blob/1.0 Microsoft-HTTPAPI/2.0

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-error-code=MissingRequiredHeader

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Content-Length=301

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-request-id=4a211eae-f01e-0058-088c-9679fc000000

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Date=Wed, 13 Jul 2022 07:46:06 GMT

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Content-Type=application/xml

22/07/13 15:46:07 DEBUG services.AbfsHttpOperation: ExpectedError:

org.codehaus.jackson.JsonParseException: Unexpected character ('<' (code 60)): expected a valid value (number, String, array, object, 'true', 'false' or 'null')

at [Source: sun.net.www.protocol.http.HttpURLConnection$HttpInputStream@38145825; line: 1, column: 5]

at org.codehaus.jackson.JsonParser._constructError(JsonParser.java:1433)

at org.codehaus.jackson.impl.JsonParserMinimalBase._reportError(JsonParserMinimalBase.java:521)

at org.codehaus.jackson.impl.JsonParserMinimalBase._reportUnexpectedChar(JsonParserMinimalBase.java:442)

at org.codehaus.jackson.impl.Utf8StreamParser._handleUnexpectedValue(Utf8StreamParser.java:2090)

at org.codehaus.jackson.impl.Utf8StreamParser._nextTokenNotInObject(Utf8StreamParser.java:606)

at org.codehaus.jackson.impl.Utf8StreamParser.nextToken(Utf8StreamParser.java:492)

at org.apache.hadoop.fs.azurebfs.services.AbfsHttpOperation.processStorageErrorResponse(AbfsHttpOperation.java:379)

at org.apache.hadoop.fs.azurebfs.services.AbfsHttpOperation.processResponse(AbfsHttpOperation.java:285)

at org.apache.hadoop.fs.azurebfs.services.AbfsRestOperation.executeHttpOperation(AbfsRestOperation.java:172)

at org.apache.hadoop.fs.azurebfs.services.AbfsRestOperation.execute(AbfsRestOperation.java:125)

at org.apache.hadoop.fs.azurebfs.services.AbfsClient.createPath(AbfsClient.java:254)

at org.apache.hadoop.fs.azurebfs.AzureBlobFileSystemStore.createFile(AzureBlobFileSystemStore.java:342)

at org.apache.hadoop.fs.azurebfs.AzureBlobFileSystem.create(AzureBlobFileSystem.java:189)

at org.apache.hadoop.fs.FilterFileSystem.create(FilterFileSystem.java:193)

at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:1118)

at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:1098)

at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:987)

at org.apache.hadoop.fs.shell.CommandWithDestination$TargetFileSystem.create(CommandWithDestination.java:521)

at org.apache.hadoop.fs.shell.CommandWithDestination$TargetFileSystem.writeStreamToFile(CommandWithDestination.java:485)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyStreamToTarget(CommandWithDestination.java:408)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyFileToTarget(CommandWithDestination.java:343)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:278)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:263)

at org.apache.hadoop.fs.shell.Command.processPathInternal(Command.java:367)

at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:331)

at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:304)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPathArgument(CommandWithDestination.java:258)

at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:286)

at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:270)

at org.apache.hadoop.fs.shell.CommandWithDestination.processArguments(CommandWithDestination.java:229)

at org.apache.hadoop.fs.shell.CopyCommands$Put.processArguments(CopyCommands.java:295)

at org.apache.hadoop.fs.shell.FsCommand.processRawArguments(FsCommand.java:120)

at org.apache.hadoop.fs.shell.Command.run(Command.java:177)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:328)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:391)

22/07/13 15:46:07 DEBUG services.AbfsClient: HttpRequest: 400,,cid=bf71e98f-886d-4529-b62b-7898c655fadf,rid=4a211eae-f01e-0058-088c-9679fc000000,sent=0,recv=301,PUT,https://xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/adsl/test/a.txt._COPYING_?resource=file&timeout=90

22/07/13 15:46:07 DEBUG azurebfs.AzureBlobFileSystem: AzureBlobFileSystem.getFileStatus path: abfs://adsl@xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/test/a.txt._COPYING_

22/07/13 15:46:07 DEBUG azurebfs.AzureBlobFileSystem: ABFS authorizer is not initialized. No authorization check will be performed.

22/07/13 15:46:07 DEBUG azurebfs.AzureBlobFileSystemStore: getFileStatus filesystem: adsl path: abfs://adsl@xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/test/a.txt._COPYING_ isNamespaceEnabled: true

22/07/13 15:46:07 DEBUG services.AbfsClient: Authenticating request with OAuth2 access token

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Request Headers

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Accept-Charset=utf-8

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-version=2018-11-09

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Accept=application/json, application/octet-stream

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: User-Agent=Azure Blob FS/3.1.1.3.1.4.0-315 (JavaJRE 1.8.0_232; Linux 3.10.0-862.el7.x86_64; SunJSSE-1.8) User-Agent: APN/1.0 Hortonworks/1.0 HDP/3.1.4.0-315

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-client-request-id=4aeb3b46-587d-489c-a246-5d6d668a84a5

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Content-Type=

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Response Headers

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Transfer-Encoding=chunked

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: HTTP Response=HTTP/1.1 404 The specified blob does not exist.

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-version=2018-11-09

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Server=Windows-Azure-Blob/1.0 Microsoft-HTTPAPI/2.0

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-error-code=BlobNotFound

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: x-ms-request-id=4a211eb7-f01e-0058-108c-9679fc000000

22/07/13 15:46:07 DEBUG services.AbfsIoUtils: Date=Wed, 13 Jul 2022 07:46:06 GMT

22/07/13 15:46:07 DEBUG services.AbfsClient: HttpRequest: 404,,cid=4aeb3b46-587d-489c-a246-5d6d668a84a5,rid=4a211eb7-f01e-0058-108c-9679fc000000,sent=0,recv=0,HEAD,https://xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/adsl/test/a.txt._COPYING_?upn=false&timeout=90

put: Operation failed: "An HTTP header that's mandatory for this request is not specified.", 400, PUT, https://xxxxxxxxxxxxx.blob.core.chinacloudapi.cn/adsl/test/a.txt._COPYING_?resource=file&timeout=90, , ""

22/07/13 15:46:07 DEBUG azurebfs.AzureBlobFileSystem: AzureBlobFileSystem.close

22/07/13 15:46:07 DEBUG util.ShutdownHookManager: Completed shutdown in 0.004 seconds; Timeouts: 0

22/07/13 15:46:07 DEBUG util.ShutdownHookManager: ShutdownHookManger completed shutdown.

问题解答

虽然在Hadoop 中执行的 PUT指令如下:

./hadoop fs -put a.txt abfs://yourcontainername@youradlsname.blob.core.chinacloudapi.cn/test.txt

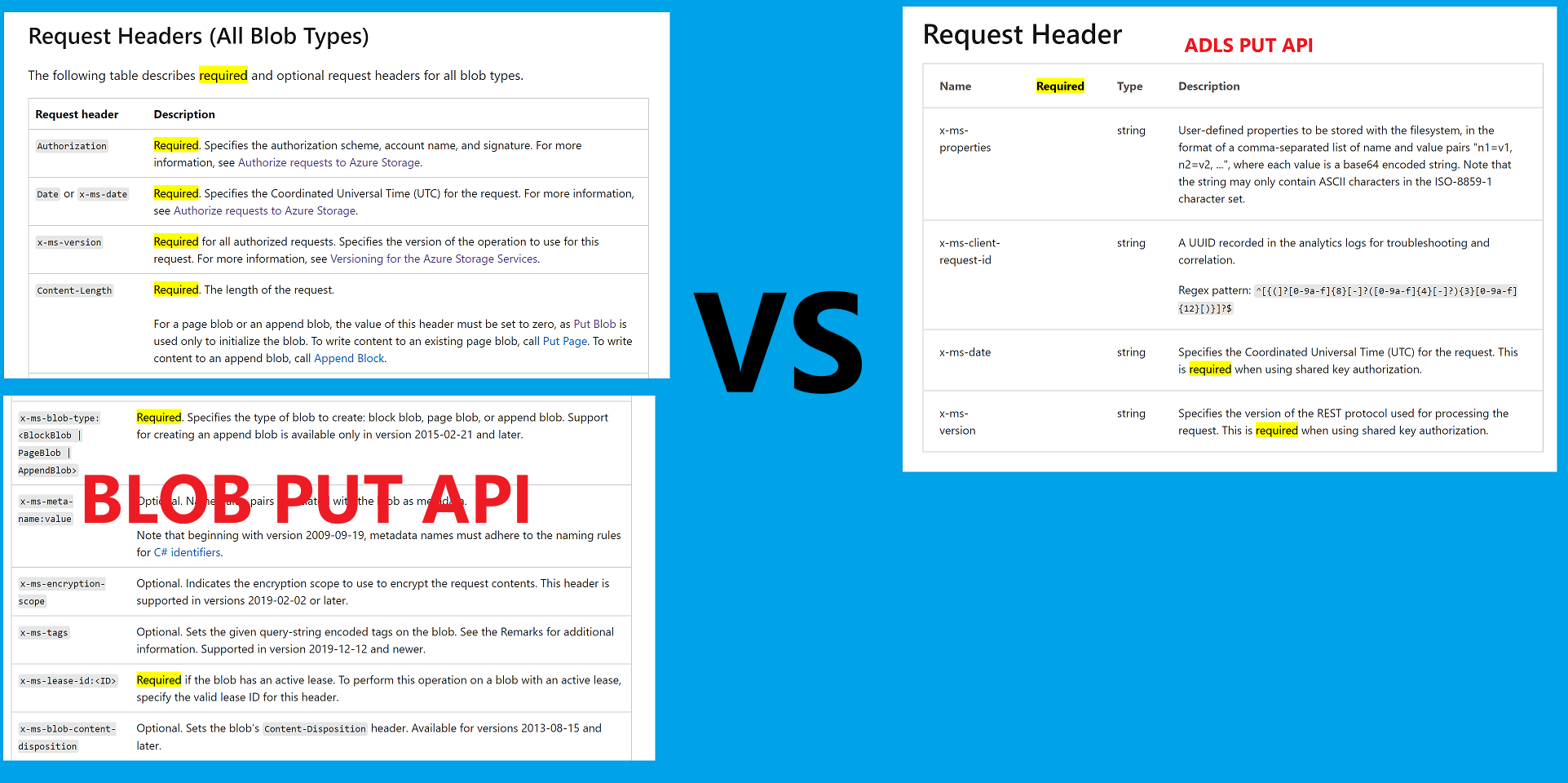

但实质上,也时发送的REST API来操作ADLS资源。 所以参考PUT Blob的接口文档:https://docs.microsoft.com/en-us/rest/api/storageservices/put-blob#request-headers-all-blob-types

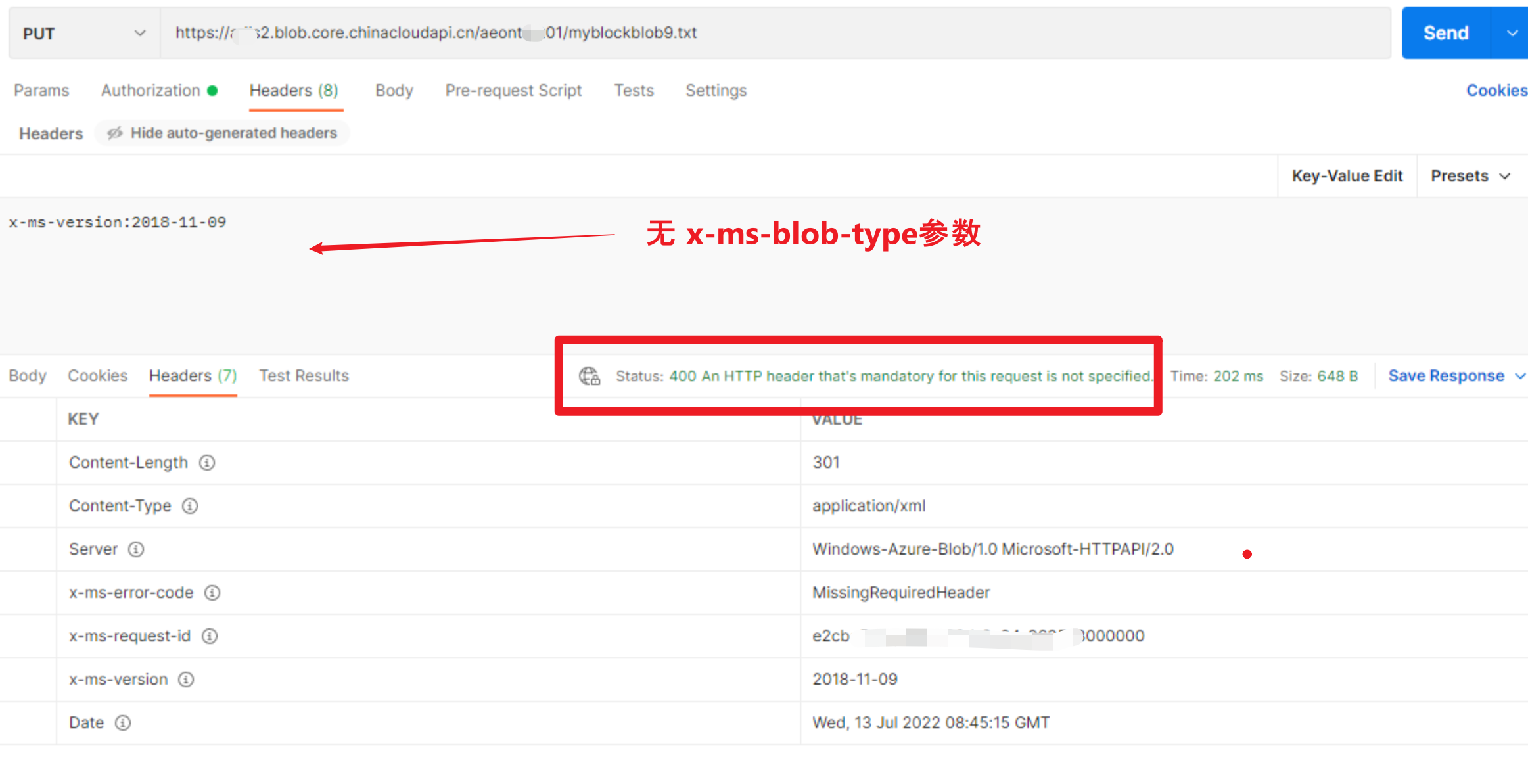

它必须的Header参数有:x-ms-version,x-ms-blob-type,x-ms-lease-id,Authorization,x-ms-date,Content-Length等。但是在Hadoop的日志中,我们只发现了 x-ms-version为 2018-11-09,缺少了x-ms-blob-type。

基于这一发现,我们通过Postman复现了同样的错误:

虽然找到了发生问题的根源,但是在Hadoop中,如何来解决呢? 为什么使用 -put , -ls 等指令都会出现 HTTP Header miss 的问题呢? 按照Hadoop + ADLS 组合设计分析,不可能出现这样的严重错误而不进行修复。

回想 ADLS Gen 2专为大数据操作而设计。并且还特别启用了新的终结点(常规Blob操作终结点为:youradlsname.blob.core.chinacloudapi.cn , ADLS操作的终结点为:youradlsname.dfs.core.chinacloudapi.cn)

是否时我们在指令中使用了错误的终结点呢?

对比REST API 文档中,常规Blob的PUT操作和ADLS Create File的PUT操作,发现 ADLS PUT操作根本就不需要 x-ms-version,x-ms-blob-type 这两个Header 为必须。

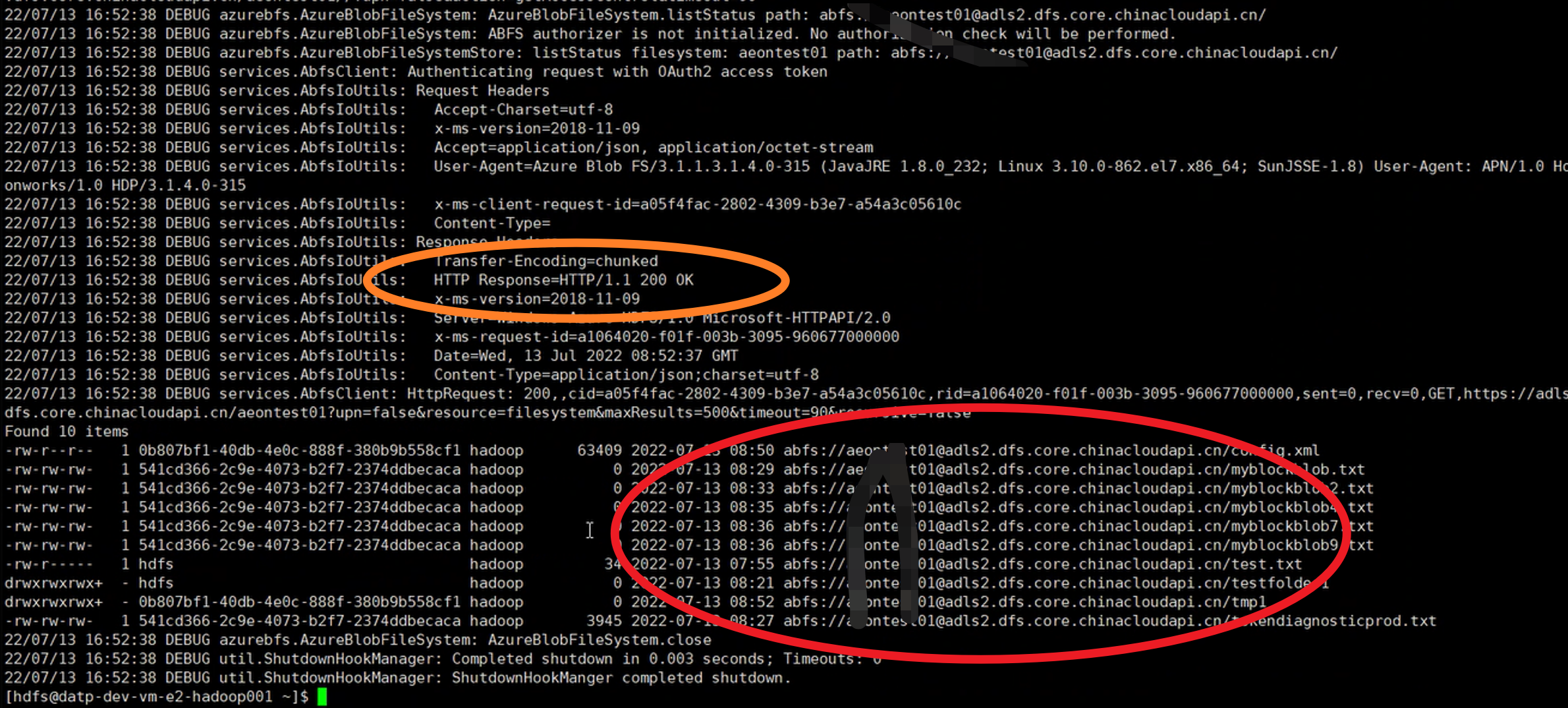

根据以上发现,在Hadoop put指令中修改 blob 为 dfs 测试。 问题完美解决!

以此次的错误,得出一个深刻的教训:当使用ADLS进行大数据相关操作时(如hadoop,databricks)一定一定要使用ADLS专用终结点:

xxxxxxx.dfs.core.chinacloudapi.cn

参考资料

Filesystem - Create:https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/filesystem/create

Put Blob: https://docs.microsoft.com/en-us/rest/api/storageservices/put-blob#request-headers-all-blob-types

[END]

【Azure 存储服务】Hadoop集群中使用ADLS(Azure Data Lake Storage)过程中遇见执行PUT操作报错的更多相关文章

- hadoop集群搭建详细教程

本文针对hadoop集群的搭建过程给予一个详细的介绍. 参考视频教程:https://www.bilibili.com/video/BV1tz4y127hX?p=1&share_medium= ...

- 大数据 -- Hadoop集群环境搭建

首先我们来认识一下HDFS, HDFS(Hadoop Distributed File System )Hadoop分布式文件系统.它其实是将一个大文件分成若干块保存在不同服务器的多个节点中.通过联网 ...

- 配置两个Hadoop集群Kerberos认证跨域互信

两个Hadoop集群开启Kerberos验证后,集群间不能够相互访问,需要实现Kerberos之间的互信,使用Hadoop集群A的客户端访问Hadoop集群B的服务(实质上是使用Kerberos Re ...

- hadoop集群namenode同时挂datanode

背景:(测试环境)只有两台机器一台namenode一台namenode,但集群只有一个结点感觉不出来效果,在namenode上挂一个datanode就有两个节点,弊端见最后 操作非常简单(添加独立节点 ...

- Hadoop集群搭建(五)~搭建集群

继上篇关闭防火墙之后,因为后面我们会管理一个集群,在VMware中不断切换不同节点,为了管理方便我选择xshell这个连接工具,大家也可以选择SecureCRT等工具. 本篇记录一下3台机器集群的搭建 ...

- Hadoop 集群配置记录小结

Hadoop集群配置往往按照网上教程就可以"配置成功",但是你自己在操作的时候会有很多奇奇怪怪的问题出现, 我在这里整理了一下常见的问题与处理方法: 1.配置/etc/hosts ...

- Databricks 第8篇:把Azure Data Lake Storage Gen2 (ADLS Gen 2)挂载到DBFS

DBFS使用dbutils实现存储服务的装载(mount.挂载),用户可以把Azure Data Lake Storage Gen2和Azure Blob Storage 账户装载到DBFS中.mou ...

- 使用Windows Azure的VM安装和配置CDH搭建Hadoop集群

本文主要内容是使用Windows Azure的VIRTUAL MACHINES和NETWORKS服务安装CDH (Cloudera Distribution Including Apache Hado ...

- 实战CentOS系统部署Hadoop集群服务

导读 Hadoop是一个由Apache基金会所开发的分布式系统基础架构,Hadoop实现了一个分布式文件系统(Hadoop Distributed File System),简称HDFS.HDFS有高 ...

随机推荐

- CentOS8更换yum源后出现同步仓库缓存失败的问题

1.错误情况更新yum时报错: 按照网上教程,更换阿里源.清华源都还是无法使用.可参考: centos8更换国内源(阿里源)_大山的博客-CSDN博客_centos8更换阿里源icon-default ...

- WPF样式和触发器

理解样式 样式可以定义通用的格式化特征集合. Style 类的属性 Setters.Triggers.Resources.BasedOn.TargetType <Style x:Key=&quo ...

- centos 7.0 下安装FFmpeg软件 过程

这几天由于需要编写一个语音识别功能,用到了百度语音识别接口,从web端或小程序端传上来的音频文件是aac或者mp3或者wav格式的,需要使用FFmpeg进行格式转换,以符合百度api的要求. 安装FF ...

- 一文了解RPC框架原理

点击上方"开源Linux",选择"设为星标" 回复"学习"获取独家整理的学习资料! 1.RPC框架的概念 RPC(Remote Proced ...

- learnByWork

2019.5.5(移动端页面) 1.页面的整体框架大小min-width: 300px~max-width: 560px: 2.具体大小单位用px: 3.网页布局用div不是table,在特殊情况,如 ...

- VUE3 之 Teleport - 这个系列的教程通俗易懂,适合新手

1. 概述 老话说的好:宰相肚里能撑船,但凡成功的人,都有一种博大的胸怀. 言归正传,今天我们来聊聊 VUE 中 Teleport 的使用. 2. Teleport 2.1 遮罩效果的实现 < ...

- Android 12(S) 图像显示系统 - SurfaceFlinger GPU合成/CLIENT合成方式 - 随笔1

必读: Android 12(S) 图像显示系统 - 开篇 一.前言 SurfaceFlinger中的图层选择GPU合成(CLIENT合成方式)时,会把待合成的图层Layers通过renderengi ...

- vue大型电商项目尚品汇(前台篇)day01

学完vue2还是决定先做一个比较经典,也比较大的项目来练练手好一点,vue3的知识不用那么着急,先把vue2用熟练了,vue3随时都能学. 这个项目确实很经典包含了登录注册.购物车电商网站该有的都有, ...

- 【算法】基数排序(Radix Sort)(十)

基数排序(Radix Sort) 基数排序是按照低位先排序,然后收集:再按照高位排序,然后再收集:依次类推,直到最高位.有时候有些属性是有优先级顺序的,先按低优先级排序,再按高优先级排序.最后的次序就 ...

- DML数据操作语言

DML数据操作语言 用来对数据库中表的数据记录进行更新.(增删改) 插入insert -- insert into 表(列名1,列名2,列名3...) values (值1,值2,值3...):向表中 ...