2019-ICLR-DARTS: Differentiable Architecture Search-论文阅读

DARTS

2019-ICLR-DARTS Differentiable Architecture Search

- Hanxiao Liu、Karen Simonyan、Yiming Yang

- GitHub:2.8k stars

- Citation:557

Motivation

Current NAS method:

- Computationally expensive: 2000/3000 GPU days

- Discrete search space, leads to a large number of architecture evaluations required.

Contribution

- Differentiable NAS method based on gradient decent.

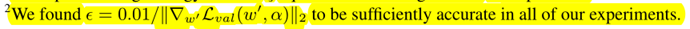

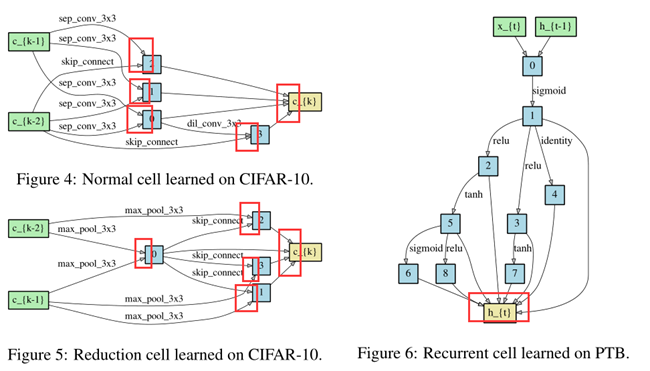

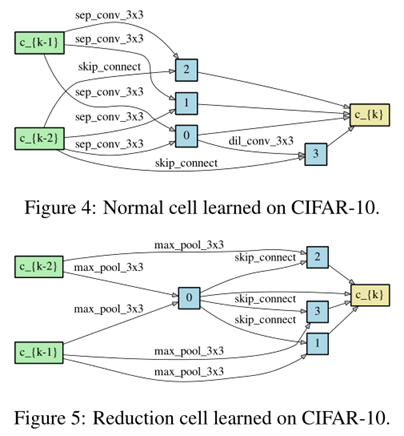

- Both CNN(CV) and RNN(NLP).

- SOTA results on CIFAR-10 and PTB.

- Efficiency: (2000 GPU days VS 4 GPU days)

- Transferable: cifar10 to ImageNet, (PTB to WikiText-2).

Method

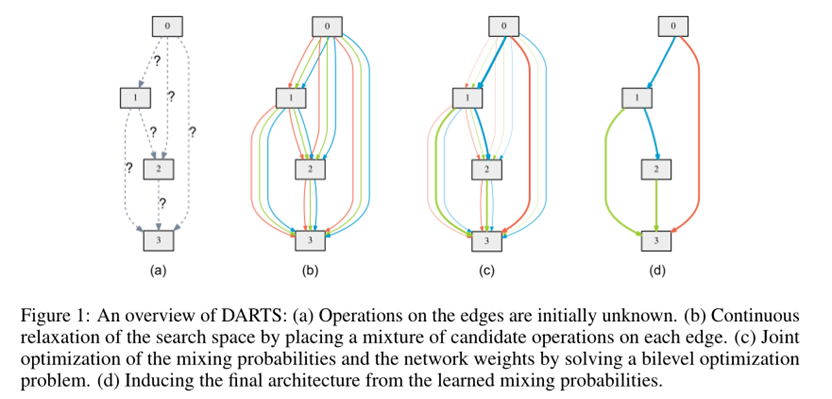

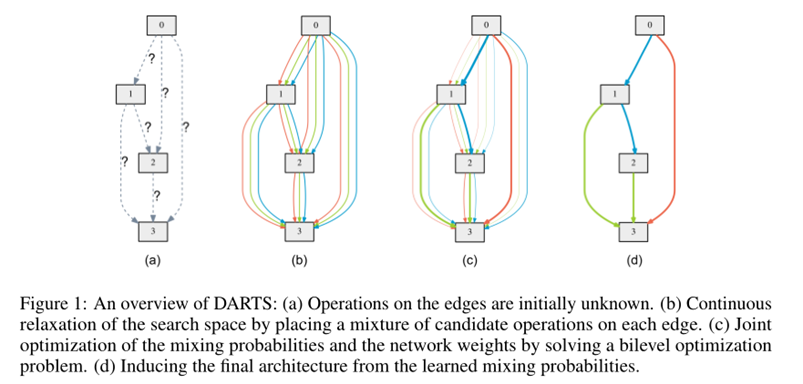

Search Space

Search for a cell as the building block of the final architecture.

The learned cell could either be stacked to form a CNN or recursively connected to form a RNN.

A cell is a DAG consisting of an ordered sequence of N nodes.

\(\bar{o}^{(i, j)}(x)=\sum_{o \in \mathcal{O}} \frac{\exp \left(\alpha_{o}^{(i, j)}\right)}{\sum_{o^{\prime} \in \mathcal{O}} \exp \left(\alpha_{o^{\prime}}^{(i, j)}\right)} o(x)\)

\(x^{(j)}=\sum_{i<j} o^{(i, j)}\left(x^{(i)}\right)\)

Optimization Target

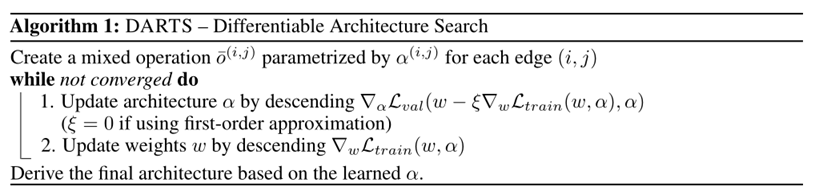

Our goal is to jointly learn the architecture α and the weights w within all the mixed operations (e.g. weights of the convolution filters).

\(\min _{\alpha} \mathcal{L}_{v a l}\left(w^{*}(\alpha), \alpha\right)\) ......(3)

s.t. \(\quad w^{*}(\alpha)=\operatorname{argmin}_{w} \mathcal{L}_{\text {train}}(w, \alpha)\) .......(4)

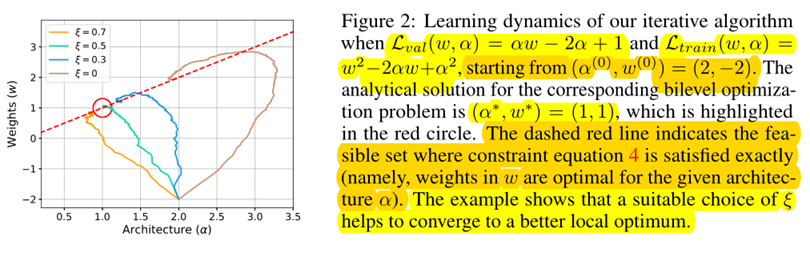

The idea is to approximate w∗(α) by adapting w using only a single training step, without solving the inner optimization (equation 4) completely by training until convergence.

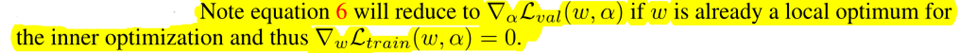

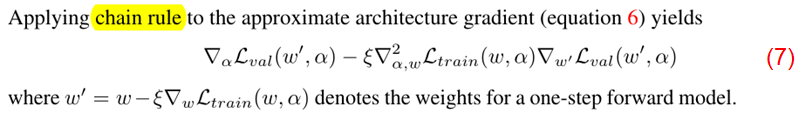

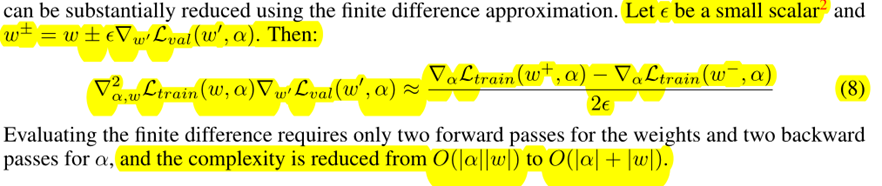

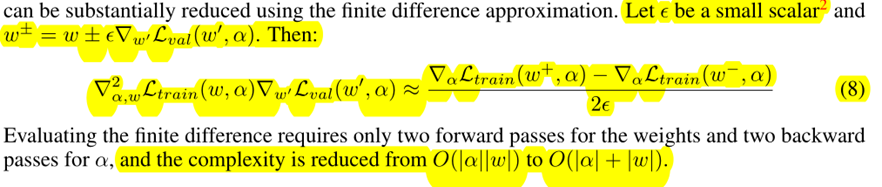

\(\nabla_{\alpha} \mathcal{L}_{v a l}\left(w^{*}(\alpha), \alpha\right)\) ......(5)

\(\approx \nabla_{\alpha} \mathcal{L}_{v a l}\left(w-\xi \nabla_{w} \mathcal{L}_{t r a i n}(w, \alpha), \alpha\right)\) ......(6)

- When ξ = 0, the second-order derivative in equation 7 will disappear.

- ξ = 0 as the first-order approximation,

- ξ > 0 as the second-order approximation.

Discrete Arch

To form each node in the discrete architecture, we retain the top-k strongest operations (from distinct nodes) among all non-zero candidate operations collected from all the previous nodes.

we use k = 2 for convolutional cells and k = 1 for recurrent cellsThe strength of an operation is defined as \(\frac{\exp \left(\alpha_{o}^{(i, j)}\right)}{\sum_{o^{\prime} \in \mathcal{O}} \exp \left(\alpha_{o^{\prime}}^{(i, j)}\right)}\)

Experiments

We include the following operations in O:

- 3 × 3 and 5 × 5 separable convolutions,

- 3 × 3 and 5 × 5 dilated separable convolutions,

- 3 × 3 max pooling,

- 3 × 3 average pooling,

- identity (skip connection?)

- zero.

All operations are of

- stride one (if applicable)

- the feature maps are padded to preserve their spatial resolution.

We use the

- ReLU-Conv-BN order for convolutional operations,

- Each separable convolution is always applied twice

- Our convolutional cell consists of N = 7 nodes, the output node is defined as the depthwise concatenation of all the intermediate nodes (input nodes excluded).

The first and second nodes of cell k are set equal to the outputs of cell k−2 and cell k−1

Cells located at the 1/3 and 2/3 of the total depth of the network are reduction cells, in which all the operations adjacent to the input nodes are of stride two.

The architecture encoding therefore is (αnormal, αreduce),

where αnormal is shared by all the normal cells

and αreduce is shared by all the reduction cells.

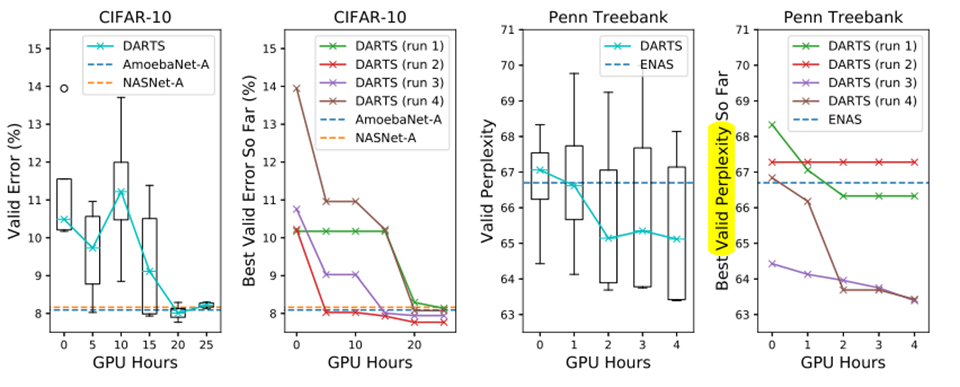

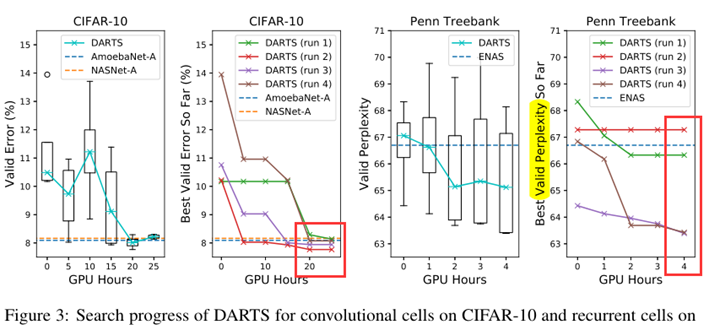

To determine the architecture for final evaluation, we run DARTS four times with different random seeds and pick the best cell based on its validation performance obtained by training from scratch for a short period (100 epochs on CIFAR-10 and 300 epochs on PTB).

This is particularly important for recurrent cells, as the optimization outcomes can be initialization-sensitive (Fig. 3)

Arch Evaluation

- To evaluate the selected architecture, we randomly initialize its weights (weights learned during the search process are discarded), train it from scratch, and report its performance on the test set.

- To evaluate the selected architecture, we randomly initialize its weights (weights learned during the search process are discarded), train it from scratch, and report its performance on the test set.

Result Analysis

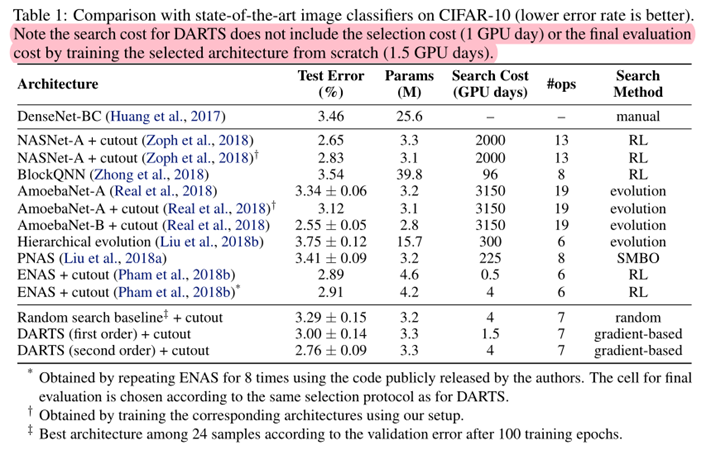

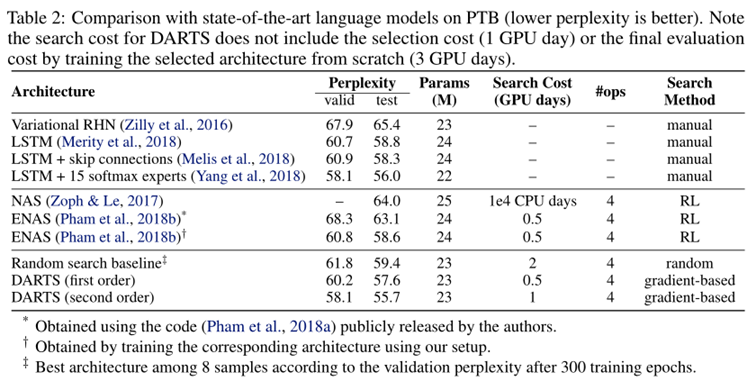

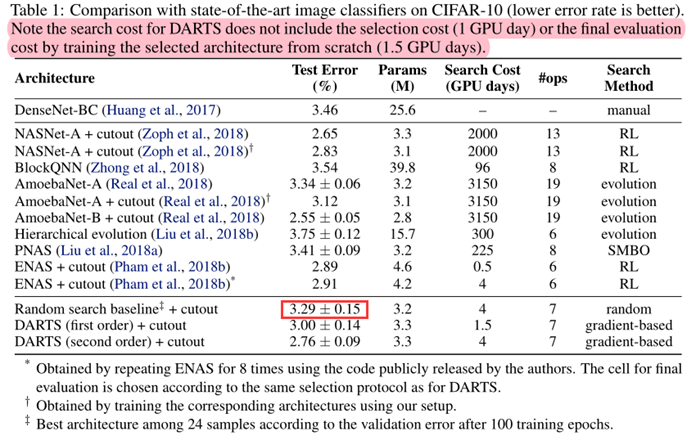

- DARTS achieved comparable results with the state of the art while using three orders of magnitude less computation resources.

- (i.e. 1.5 or 4 GPU days vs 2000 GPU days for NASNet and 3150 GPU days for AmoebaNet)

- The longer search time is due to the fact that we have repeated the search process four times for cell selection. This practice is less important for convolutional cells however, because the performance of discovered architectures does not strongly depend on initialization (Fig. 3).

- It is also interesting to note that random search is competitive for both convolutional and recurrent models, which reflects the importance of the search space design.

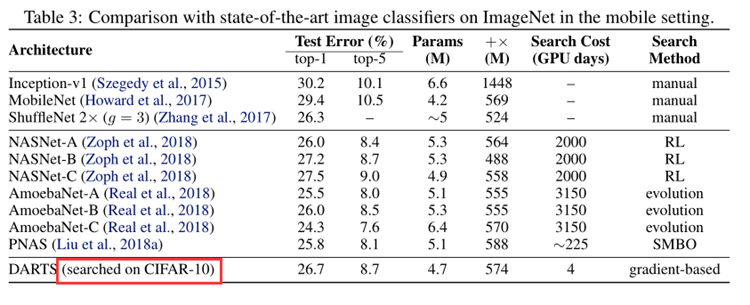

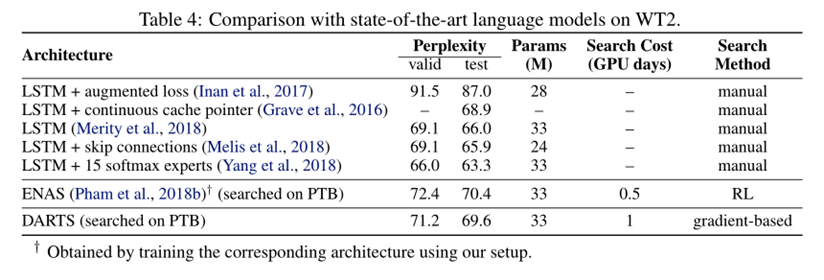

Results in Table 3 show that the cell learned on CIFAR-10 is indeed transferable to ImageNet.

The weaker transferability between PTB and WT2 (as compared to that between CIFAR-10 and ImageNet) could be explained by the relatively small size of the source dataset (PTB) for architecture search.

The issue of transferability could potentially be circumvented by directly optimizing the architecture on the task of interest.

Conclusion

- We presented DARTS, a simple yet efficient NAS algorithm for both CNN and RNN.

- SOTA

- efficiency improvement by several orders of magnitude.

Improve

- discrepancies between the continuous architecture encoding and the derived discrete architecture. (softmax…)

- It would also be interesting to investigate performance-aware architecture derivation schemes based on the shared parameters learned during the search process.

Appendix

2019-ICLR-DARTS: Differentiable Architecture Search-论文阅读的更多相关文章

- 论文笔记:DARTS: Differentiable Architecture Search

DARTS: Differentiable Architecture Search 2019-03-19 10:04:26accepted by ICLR 2019 Paper:https://arx ...

- 论文笔记系列-DARTS: Differentiable Architecture Search

Summary 我的理解就是原本节点和节点之间操作是离散的,因为就是从若干个操作中选择某一个,而作者试图使用softmax和relaxation(松弛化)将操作连续化,所以模型结构搜索的任务就转变成了 ...

- 论文笔记:Progressive Differentiable Architecture Search:Bridging the Depth Gap between Search and Evaluation

Progressive Differentiable Architecture Search:Bridging the Depth Gap between Search and Evaluation ...

- 2019-ICCV-PDARTS-Progressive Differentiable Architecture Search Bridging the Depth Gap Between Search and Evaluation-论文阅读

P-DARTS 2019-ICCV-Progressive Differentiable Architecture Search Bridging the Depth Gap Between Sear ...

- 论文笔记系列-Auto-DeepLab:Hierarchical Neural Architecture Search for Semantic Image Segmentation

Pytorch实现代码:https://github.com/MenghaoGuo/AutoDeeplab 创新点 cell-level and network-level search 以往的NAS ...

- Research Guide for Neural Architecture Search

Research Guide for Neural Architecture Search 2019-09-19 09:29:04 This blog is from: https://heartbe ...

- 小米造最强超分辨率算法 | Fast, Accurate and Lightweight Super-Resolution with Neural Architecture Search

本篇是基于 NAS 的图像超分辨率的文章,知名学术性自媒体 Paperweekly 在该文公布后迅速跟进,发表分析称「属于目前很火的 AutoML / Neural Architecture Sear ...

- 论文笔记系列-Neural Architecture Search With Reinforcement Learning

摘要 神经网络在多个领域都取得了不错的成绩,但是神经网络的合理设计却是比较困难的.在本篇论文中,作者使用 递归网络去省城神经网络的模型描述,并且使用 增强学习训练RNN,以使得生成得到的模型在验证集上 ...

- 论文笔记:Auto-DeepLab: Hierarchical Neural Architecture Search for Semantic Image Segmentation

Auto-DeepLab: Hierarchical Neural Architecture Search for Semantic Image Segmentation2019-03-18 14:4 ...

随机推荐

- 算法——Java实现栈

栈 定义: 栈是一种先进后出的数据结构,我们把允许插入和删除的一端称为栈顶,另一端称为栈底,不含任何元素的栈称为空栈 栈的java代码实现: 基于数组: import org.junit.jupite ...

- E - Tunnel Warfare HDU - 1540 F - Hotel G - 约会安排 HDU - 4553 区间合并

E - Tunnel Warfare HDU - 1540 对这个题目的思考:首先我们已经意识到这个是一个线段树,要利用线段树来解决问题,但是怎么解决呢,这个摧毁和重建的操作都很简单,但是这个查询怎么 ...

- idea撤销快捷键

Ctrl+z:撤销. Ctrl+shift+z:取消撤销.

- 近期总结的一些Java基础

1.面向过程:当需要实现一个功能的时候,每一个过程中的详细步骤和细节都要亲力亲为. 2.面向对象:当需要实现一个功能的时候,不关心详细的步骤细节,而是找人帮我做事. 3.类和对象的关系: a-类是 ...

- 【杂谈】Disruptor——RingBuffer问题整理(一)

纯CAS为啥比加锁要快? 同样是修改数据,一个采用加锁的方式保证原子性,一个采用CAS的方式保证原子性. 都是能够达到目的的,但是常用的锁(例如显式的Lock和隐式的synchonized),都会把获 ...

- 【Hadoop离线基础总结】Sqoop数据迁移

目录 Sqoop介绍 概述 版本 Sqoop安装及使用 Sqoop安装 Sqoop数据导入 导入关系表到Hive已有表中 导入关系表到Hive(自动创建Hive表) 将关系表子集导入到HDFS中 sq ...

- xml(3)

xml的解析方式:dom解析和sax解析 DOM解析 使用jaxp进行增删改查 1.创建DocumentBuilderFactory工厂 2.通过DocumentBuilderFactory工厂创建D ...

- [csu/coj 1079]树上路径查询 LCA

题意:询问树上从u到v的路径是否经过k 思路:把树dfs转化为有根树后,对于u,v的路径而言,设p为u,v的最近公共祖先,u到v的路径必定是可以看成两条路径的组合,u->p,v->p,这样 ...

- 初识spring boot maven管理--使用spring-boot-starter-parent

springboot官方推荐我们使用spring-boot-starter-parent,spring-boot-starter-parent包含了以下信息: 1.使用java6编译级别 2.使用ut ...

- html5 canvas 绘制上下浮动的字体

绘制上下浮动的字体主要思想为先绘制好需要的字体,每隔一定的时间将画布清空,然后再将字体位置改变再绘制上去 如此循环即可. (function(window) { var flowLogo = func ...