1. Vectors and Linear Combinations

1.1 Vectors

Take \(n\) separate numbers \(v_1, v_2, v_3, \ldots, v_n\). Together they produce an \(n\)-dimensional vector \(v\), which can be drawn as an arrow.

\[
v = \left[\begin{matrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{matrix}\right] = (v_1, v_2, \ldots, v_n)
\]

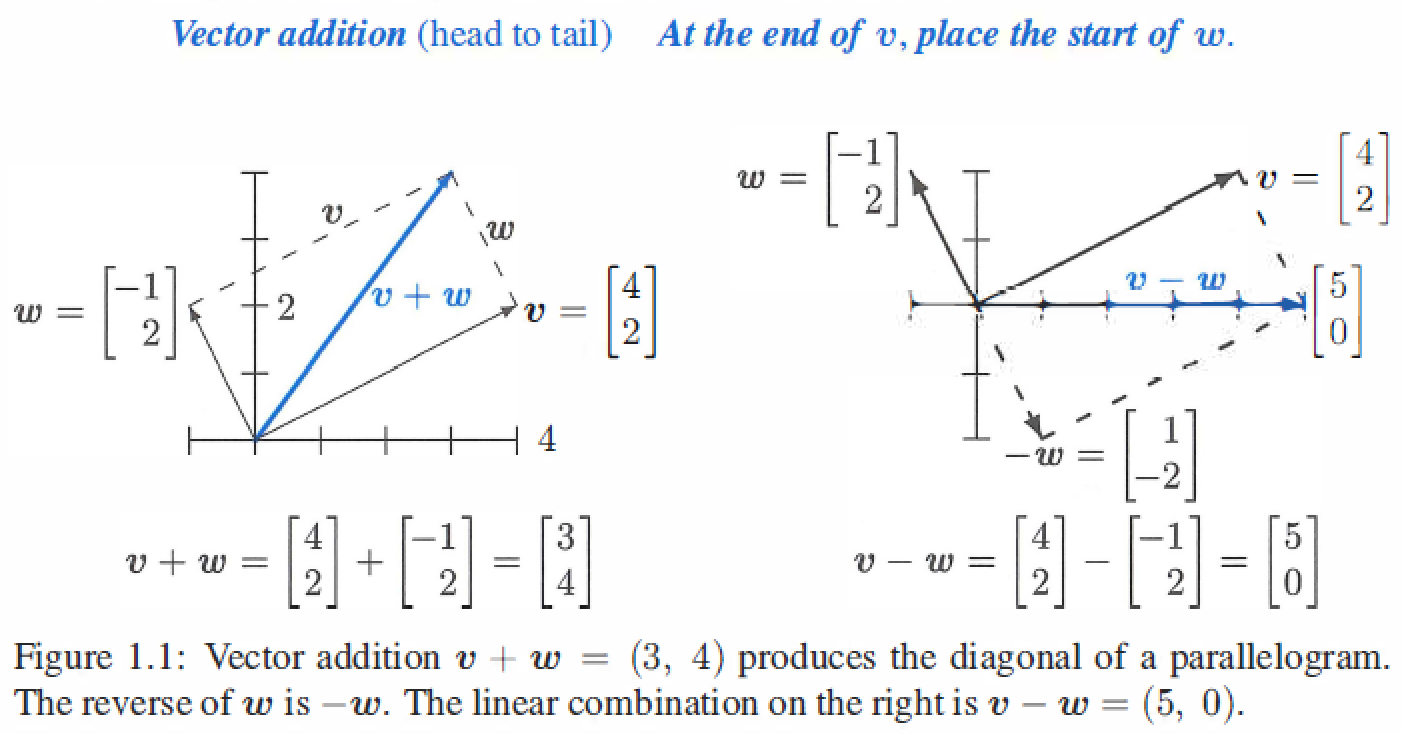

Two-dimensional vectors : \(v = \left[\begin{matrix} v_1 \\ v_2 \end{matrix}\right]\) and \(w = \left[\begin{matrix} w_1 \\ w_2 \end{matrix}\right]\)

- Vector Addition : \(v + w = \left[\begin{matrix} v_1 + w_1 \\ v_2 + w_2\end{matrix}\right]\)

- Scalar Multiplication : \(cv = \left[\begin{matrix} cv_1 \\ cv_2 \end{matrix}\right]\), where \(c\) is a scalar.
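The two operations above can be sketched in a few lines of Python. This is only an illustration: vectors are stored as plain lists, and the helper names `add` and `scale` are made up for this example rather than taken from any library.

```python
def add(v, w):
    """Component-wise sum v + w of two vectors with the same number of components."""
    if len(v) != len(w):
        raise ValueError("vectors must have the same number of components")
    return [vi + wi for vi, wi in zip(v, w)]


def scale(c, v):
    """Scalar multiple cv: every component of v is multiplied by c."""
    return [c * vi for vi in v]


v = [4, 2]
w = [-1, 2]
print(add(v, w))    # [3, 4]
print(scale(2, v))  # [8, 4]
```

Both helpers work for any number of components, not just two, because they loop over whatever components the lists contain.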

1.2 Linear Combinations

Multiply \(v\) by \(c\) and \(w\) by \(d\); the sum of \(cv\) and \(dw\) is a linear combination : \(cv + dw\).

We can visualize \(v + w\) using arrows, for example:

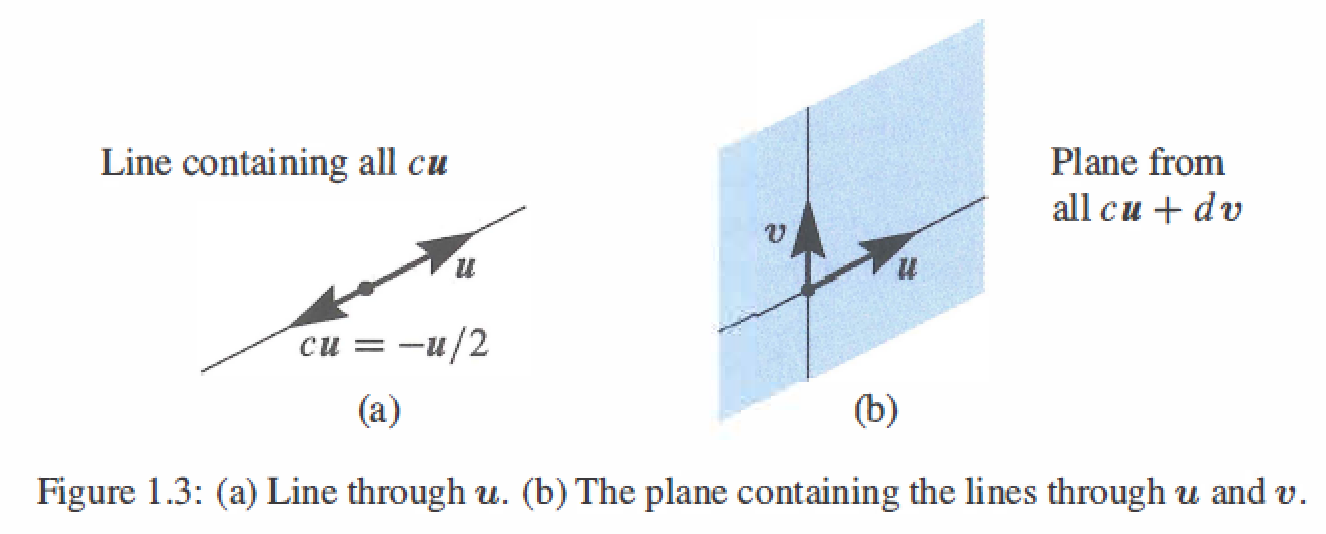

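The combinations can fill a line, a plane, or three-dimensional space:

- The combinations \(cu\) fill a line through the origin (for nonzero \(u\)).

- The combinations \(cu + dv\) fill a plane through the origin (when \(u\) and \(v\) do not lie on the same line).

- The combinations \(cu + dv + ew\) fill three-dimensional space (when \(w\) does not lie in the plane of \(u\) and \(v\)).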
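As a rough sketch of the idea, the hypothetical helper `linear_combination` below computes \(cu + dv + \ldots\) for any list of coefficients and vectors. The vectors `u`, `v`, `w` are chosen here only for illustration (the standard unit vectors in three dimensions).

```python
def linear_combination(coeffs, vectors):
    """Return coeffs[0]*vectors[0] + coeffs[1]*vectors[1] + ... as a list."""
    if len(coeffs) != len(vectors):
        raise ValueError("need exactly one coefficient per vector")
    n = len(vectors[0])
    result = [0] * n
    for c, vec in zip(coeffs, vectors):
        if len(vec) != n:
            raise ValueError("all vectors must have the same number of components")
        for i in range(n):
            result[i] += c * vec[i]
    return result


# Illustrative vectors (an assumption of this example, not from the notes).
u = [1, 0, 0]
v = [0, 1, 0]
w = [0, 0, 1]

print(linear_combination([2, 3], [u, v]))         # [2, 3, 0]
print(linear_combination([2, 3, -1], [u, v, w]))  # [2, 3, -1]
```

With only `u` and `v`, the third component stays 0 whatever the coefficients are, so those combinations remain in a plane. Adding `w` lets the combination reach any point of three-dimensional space.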

1.3 Lengths and Dot Products

Dot product / inner product : \(v \cdot w = v_1w_1 + v_2w_2\), where \(v = (v_1, v_2)\) and \(w = (w_1, w_2)\). The order does not matter: \(w \cdot v\) equals \(v \cdot w\).

Length : \(||v|| = \sqrt{v \cdot v} = (v_1^2 + v_2^2 + v_3^2 +...+ v_n^2)^{1/2}\)

Unit vector : for nonzero \(v\), \(u = v / ||v||\) is a unit vector in the same direction as \(v\), with length \(||u|| = 1\).
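The three definitions above (dot product, length, unit vector) translate almost directly into code. The following is a minimal sketch using only Python's standard library; the function names `dot`, `length`, and `unit` are chosen for this example.

```python
import math


def dot(v, w):
    """Dot product v . w = v_1*w_1 + v_2*w_2 + ... + v_n*w_n."""
    if len(v) != len(w):
        raise ValueError("vectors must have the same number of components")
    return sum(vi * wi for vi, wi in zip(v, w))


def length(v):
    """Length ||v|| = sqrt(v . v)."""
    return math.sqrt(dot(v, v))


def unit(v):
    """Unit vector v / ||v||; undefined for the zero vector."""
    norm = length(v)
    if norm == 0:
        raise ValueError("the zero vector has no direction")
    return [vi / norm for vi in v]


v = [3, 4]
print(dot(v, [1, 2]))  # 11
print(length(v))       # 5.0
print(unit(v))         # [0.6, 0.8]
```

Because of floating-point rounding, the length of `unit(v)` is only guaranteed to be very close to 1, so comparisons should use a tolerance such as `math.isclose`.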

Perpendicular vectors : \(v\) and \(w\) are perpendicular when \(v \cdot w = 0\).

Cosine Formula : if \(v\) and \(w\) are nonzero vectors, then \(\frac{v \cdot w}{||v|| \ ||w||} = \cos \theta\), where \(\theta\) is the angle between \(v\) and \(w\).

Schwarz Inequality : \(|v \cdot w| \leq ||v|| \ ||w||\)

Triangle Inequality : \(||v + w|| \leq ||v|| + ||w||\)
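The perpendicularity test, the cosine formula, and the two inequalities can be checked numerically. The sketch below is self-contained and uses only the standard library; the helper names and the sample vectors are assumptions made for this example.

```python
import math


def dot(v, w):
    """Dot product of two vectors with the same number of components."""
    return sum(vi * wi for vi, wi in zip(v, w))


def length(v):
    """Length ||v|| = sqrt(v . v)."""
    return math.sqrt(dot(v, v))


def cos_angle(v, w):
    """cos(theta) = (v . w) / (||v|| ||w||) for nonzero v and w."""
    c = dot(v, w) / (length(v) * length(w))
    # Rounding can push the ratio slightly outside [-1, 1]; clamp it.
    return max(-1.0, min(1.0, c))


v = [1, 0]
w = [1, 1]
theta = math.acos(cos_angle(v, w))
print(math.degrees(theta))  # approximately 45

# Perpendicular vectors have dot product zero.
print(dot([1, 2], [-2, 1]))  # 0

# Schwarz inequality: |v . w| <= ||v|| ||w||
print(abs(dot(v, w)) <= length(v) * length(w))  # True

# Triangle inequality: ||v + w|| <= ||v|| + ||w||
v_plus_w = [vi + wi for vi, wi in zip(v, w)]
print(length(v_plus_w) <= length(v) + length(w))  # True
```

A single pair of vectors does not prove either inequality, of course; the checks only illustrate what the statements say.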

1.4 Matrices

1. \(A = \left[ \begin{matrix} 1 & 2 \\ 3 & 4 \\ 5 & 6 \end{matrix}\right]\) is a 3 by 2 matrix : \(m = 3\) rows and \(n = 2\) columns.

2. \(Ax\) is a linear combination of the columns of \(A\); the equation \(Ax = b\) asks for the combination that produces \(b\).

3. Combination of the vectors : \(Ax = x_1\left[ \begin{matrix} 1 \\ -1 \\ 0 \end{matrix} \right] + x_2\left[ \begin{matrix} 0 \\ 1 \\ -1 \end{matrix} \right] + x_3\left[ \begin{matrix} 0 \\ 0 \\ 1 \end{matrix} \right] = \left[ \begin{matrix} x_1 \\ x_2-x_1 \\ x_3-x_2 \end{matrix} \right]\)

4. Matrix times vector : \(Ax = \left[ \begin{matrix} 1 & 0 & 0 \\ -1 & 1 & 0 \\ 0 & -1 & 1 \end{matrix} \right] \left[ \begin{matrix} x_1 \\ x_2 \\ x_3 \end{matrix} \right] = \left[ \begin{matrix} x_1 \\ x_2-x_1 \\ x_3-x_2 \end{matrix} \right]\)

5. Linear equations : \(Ax = b\) \(\rightarrow\) \(\begin{matrix} x_1 = b_1 \\ -x_1 + x_2 = b_2 \\ -x_2 + x_3 = b_3 \end{matrix}\)

6. Inverse solution : \(x = A^{-1}b\) \(\rightarrow\) \(\begin{matrix} x_1 = b_1 \\ x_2 = b_1 + b_2 \\ x_3 = b_1 + b_2 + b_3 \end{matrix}\), when \(A\) is an invertible matrix.

7. Independent columns : \(Ax = 0\) has only one solution, \(x = 0\); \(A\) is an invertible matrix and the column vectors of \(A\) are independent. (Example: \(u, v, w\) are independent; no combination except \(0u + 0v + 0w = 0\) gives \(b = 0\).)

8. Dependent columns : \(Cx = 0\) has many solutions; \(C\) is a singular matrix and the column vectors of \(C\) are dependent. (Example: \(u, v, w^*\) are dependent; combinations \(au + cv + dw^*\) with coefficients that are not all zero also give \(b = 0\).)
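The matrix facts listed above can be tried out with a small sketch. Matrices are stored as lists of rows, and the two helper functions are written for this example only. The matrix `A_inv` is read off from the inverse solution in the list. The third column of `C`, \(w^* = (-1, 0, 1)\), is an assumption of this example, since the notes do not spell out \(w^*\).

```python
def matvec_by_columns(A, x):
    """Ax as a combination of the columns of A: x[0]*col_0 + x[1]*col_1 + ..."""
    m = len(A)
    result = [0] * m
    for j, xj in enumerate(x):
        for i in range(m):
            result[i] += xj * A[i][j]  # add x_j times entry i of column j
    return result


def matvec_by_rows(A, x):
    """Ax computed row by row: each entry is (row of A) . x."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


# Difference matrix with columns u, v, w.
A = [[1, 0, 0],
     [-1, 1, 0],
     [0, -1, 1]]

# Sum matrix: x1 = b1, x2 = b1 + b2, x3 = b1 + b2 + b3.
A_inv = [[1, 0, 0],
         [1, 1, 0],
         [1, 1, 1]]

# Columns u, v, w* with w* = (-1, 0, 1) (assumed for illustration).
C = [[1, 0, -1],
     [-1, 1, 0],
     [0, -1, 1]]

x = [1, 4, 9]
b = matvec_by_columns(A, x)
print(b)                             # [1, 3, 5]
print(matvec_by_rows(A, x))          # [1, 3, 5]
print(matvec_by_rows(A_inv, b))      # [1, 4, 9]
print(matvec_by_rows(C, [1, 1, 1]))  # [0, 0, 0]
```

The column version and the row version give the same \(Ax\). Applying the sum matrix to \(b\) recovers \(x\), and the nonzero vector \((1, 1, 1)\) solves \(Cx = 0\), which shows that the columns of this `C` are dependent.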
