CoreCLR源码探索(八) JIT的工作原理(详解篇)

在上一篇我们对CoreCLR中的JIT有了一个基础的了解,

这一篇我们将更详细分析JIT的实现.

JIT的实现代码主要在https://github.com/dotnet/coreclr/tree/master/src/jit下,

要对一个的函数的JIT过程进行详细分析, 最好的办法是查看JitDump.

查看JitDump需要自己编译一个Debug版本的CoreCLR, windows可以看这里, linux可以看这里,

编译完以后定义环境变量COMPlus_JitDump=Main, Main可以换成其他函数的名称, 然后使用该Debug版本的CoreCLR执行程序即可.

JitDump的例子可以看这里, 包含了Debug模式和Release模式的输出.

接下来我们来结合代码一步步的看JIT中的各个过程.

以下的代码基于CoreCLR 1.1.0和x86/x64分析, 新版本可能会有变化.

(为什么是1.1.0? 因为JIT部分我看了半年时间, 开始看的时候2.0还未出来)

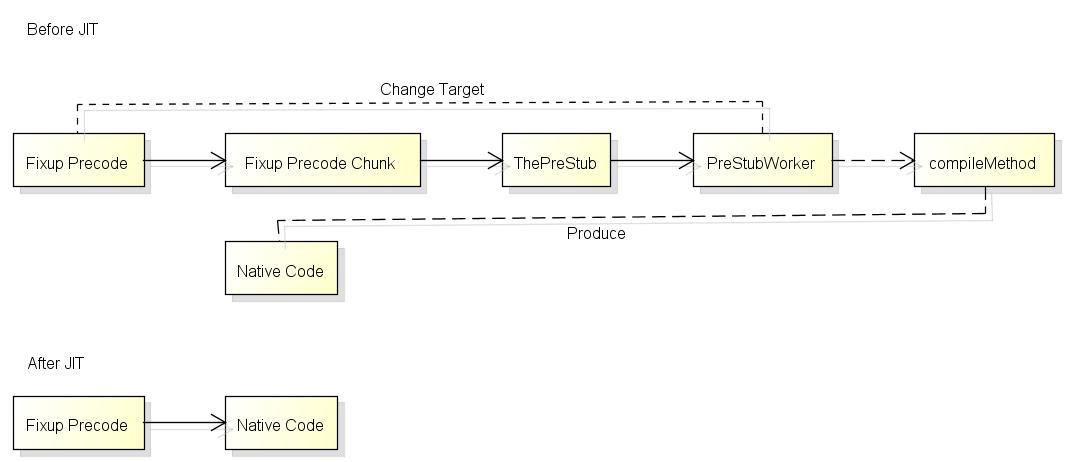

JIT的触发

在上一篇中我提到了, 触发JIT编译会在第一次调用函数时, 会从桩(Stub)触发:

这就是JIT Stub实际的样子, 函数第一次调用前Fixup Precode的状态:

Fixup Precode:

(lldb) di --frame --bytes

-> 0x7fff7c21f5a8: e8 2b 6c fe ff callq 0x7fff7c2061d8

0x7fff7c21f5ad: 5e popq %rsi

0x7fff7c21f5ae: 19 05 e8 23 6c fe sbbl %eax, -0x193dc18(%rip)

0x7fff7c21f5b4: ff 5e a8 lcalll *-0x58(%rsi)

0x7fff7c21f5b7: 04 e8 addb $-0x18, %al

0x7fff7c21f5b9: 1b 6c fe ff sbbl -0x1(%rsi,%rdi,8), %ebp

0x7fff7c21f5bd: 5e popq %rsi

0x7fff7c21f5be: 00 03 addb %al, (%rbx)

0x7fff7c21f5c0: e8 13 6c fe ff callq 0x7fff7c2061d8

0x7fff7c21f5c5: 5e popq %rsi

0x7fff7c21f5c6: b0 02 movb $0x2, %al

(lldb) di --frame --bytes

-> 0x7fff7c2061d8: e9 13 3f 9d 79 jmp 0x7ffff5bda0f0 ; PrecodeFixupThunk

0x7fff7c2061dd: cc int3

0x7fff7c2061de: cc int3

0x7fff7c2061df: cc int3

0x7fff7c2061e0: 49 ba 00 da d0 7b ff 7f 00 00 movabsq $0x7fff7bd0da00, %r10

0x7fff7c2061ea: 40 e9 e0 ff ff ff jmp 0x7fff7c2061d0

这两段代码只有第一条指令是相关的, 注意callq后面的5e 19 05, 这些并不是汇编指令而是函数的信息, 下面会提到.

接下来跳转到Fixup Precode Chunk, 从这里开始的代码所有函数都会共用:

Fixup Precode Chunk:

(lldb) di --frame --bytes

-> 0x7ffff5bda0f0 <PrecodeFixupThunk>: 58 popq %rax ; rax = 0x7fff7c21f5ad

0x7ffff5bda0f1 <PrecodeFixupThunk+1>: 4c 0f b6 50 02 movzbq 0x2(%rax), %r10 ; r10 = 0x05 (precode chunk index)

0x7ffff5bda0f6 <PrecodeFixupThunk+6>: 4c 0f b6 58 01 movzbq 0x1(%rax), %r11 ; r11 = 0x19 (methoddesc chunk index)

0x7ffff5bda0fb <PrecodeFixupThunk+11>: 4a 8b 44 d0 03 movq 0x3(%rax,%r10,8), %rax ; rax = 0x7fff7bdd5040 (methoddesc chunk)

0x7ffff5bda100 <PrecodeFixupThunk+16>: 4e 8d 14 d8 leaq (%rax,%r11,8), %r10 ; r10 = 0x7fff7bdd5108 (methoddesc)

0x7ffff5bda104 <PrecodeFixupThunk+20>: e9 37 ff ff ff jmp 0x7ffff5bda040 ; ThePreStub

这段代码的源代码在vm\amd64\unixasmhelpers.S:

LEAF_ENTRY PrecodeFixupThunk, _TEXT

pop rax // Pop the return address. It points right after the call instruction in the precode.

// Inline computation done by FixupPrecode::GetMethodDesc()

movzx r10,byte ptr [rax+2] // m_PrecodeChunkIndex

movzx r11,byte ptr [rax+1] // m_MethodDescChunkIndex

mov rax,qword ptr [rax+r10*8+3]

lea METHODDESC_REGISTER,[rax+r11*8]

// Tail call to prestub

jmp C_FUNC(ThePreStub)

LEAF_END PrecodeFixupThunk, _TEXT

popq %rax后rax会指向刚才callq后面的地址, 再根据后面储存的索引值可以得到编译函数的MethodDesc, 接下来跳转到The PreStub:

ThePreStub:

(lldb) di --frame --bytes

-> 0x7ffff5bda040 <ThePreStub>: 55 pushq %rbp

0x7ffff5bda041 <ThePreStub+1>: 48 89 e5 movq %rsp, %rbp

0x7ffff5bda044 <ThePreStub+4>: 53 pushq %rbx

0x7ffff5bda045 <ThePreStub+5>: 41 57 pushq %r15

0x7ffff5bda047 <ThePreStub+7>: 41 56 pushq %r14

0x7ffff5bda049 <ThePreStub+9>: 41 55 pushq %r13

0x7ffff5bda04b <ThePreStub+11>: 41 54 pushq %r12

0x7ffff5bda04d <ThePreStub+13>: 41 51 pushq %r9

0x7ffff5bda04f <ThePreStub+15>: 41 50 pushq %r8

0x7ffff5bda051 <ThePreStub+17>: 51 pushq %rcx

0x7ffff5bda052 <ThePreStub+18>: 52 pushq %rdx

0x7ffff5bda053 <ThePreStub+19>: 56 pushq %rsi

0x7ffff5bda054 <ThePreStub+20>: 57 pushq %rdi

0x7ffff5bda055 <ThePreStub+21>: 48 8d a4 24 78 ff ff ff leaq -0x88(%rsp), %rsp ; allocate transition block

0x7ffff5bda05d <ThePreStub+29>: 66 0f 7f 04 24 movdqa %xmm0, (%rsp) ; fill transition block

0x7ffff5bda062 <ThePreStub+34>: 66 0f 7f 4c 24 10 movdqa %xmm1, 0x10(%rsp) ; fill transition block

0x7ffff5bda068 <ThePreStub+40>: 66 0f 7f 54 24 20 movdqa %xmm2, 0x20(%rsp) ; fill transition block

0x7ffff5bda06e <ThePreStub+46>: 66 0f 7f 5c 24 30 movdqa %xmm3, 0x30(%rsp) ; fill transition block

0x7ffff5bda074 <ThePreStub+52>: 66 0f 7f 64 24 40 movdqa %xmm4, 0x40(%rsp) ; fill transition block

0x7ffff5bda07a <ThePreStub+58>: 66 0f 7f 6c 24 50 movdqa %xmm5, 0x50(%rsp) ; fill transition block

0x7ffff5bda080 <ThePreStub+64>: 66 0f 7f 74 24 60 movdqa %xmm6, 0x60(%rsp) ; fill transition block

0x7ffff5bda086 <ThePreStub+70>: 66 0f 7f 7c 24 70 movdqa %xmm7, 0x70(%rsp) ; fill transition block

0x7ffff5bda08c <ThePreStub+76>: 48 8d bc 24 88 00 00 00 leaq 0x88(%rsp), %rdi ; arg 1 = transition block*

0x7ffff5bda094 <ThePreStub+84>: 4c 89 d6 movq %r10, %rsi ; arg 2 = methoddesc

0x7ffff5bda097 <ThePreStub+87>: e8 44 7e 11 00 callq 0x7ffff5cf1ee0 ; PreStubWorker at prestub.cpp:958

0x7ffff5bda09c <ThePreStub+92>: 66 0f 6f 04 24 movdqa (%rsp), %xmm0

0x7ffff5bda0a1 <ThePreStub+97>: 66 0f 6f 4c 24 10 movdqa 0x10(%rsp), %xmm1

0x7ffff5bda0a7 <ThePreStub+103>: 66 0f 6f 54 24 20 movdqa 0x20(%rsp), %xmm2

0x7ffff5bda0ad <ThePreStub+109>: 66 0f 6f 5c 24 30 movdqa 0x30(%rsp), %xmm3

0x7ffff5bda0b3 <ThePreStub+115>: 66 0f 6f 64 24 40 movdqa 0x40(%rsp), %xmm4

0x7ffff5bda0b9 <ThePreStub+121>: 66 0f 6f 6c 24 50 movdqa 0x50(%rsp), %xmm5

0x7ffff5bda0bf <ThePreStub+127>: 66 0f 6f 74 24 60 movdqa 0x60(%rsp), %xmm6

0x7ffff5bda0c5 <ThePreStub+133>: 66 0f 6f 7c 24 70 movdqa 0x70(%rsp), %xmm7

0x7ffff5bda0cb <ThePreStub+139>: 48 8d a4 24 88 00 00 00 leaq 0x88(%rsp), %rsp

0x7ffff5bda0d3 <ThePreStub+147>: 5f popq %rdi

0x7ffff5bda0d4 <ThePreStub+148>: 5e popq %rsi

0x7ffff5bda0d5 <ThePreStub+149>: 5a popq %rdx

0x7ffff5bda0d6 <ThePreStub+150>: 59 popq %rcx

0x7ffff5bda0d7 <ThePreStub+151>: 41 58 popq %r8

0x7ffff5bda0d9 <ThePreStub+153>: 41 59 popq %r9

0x7ffff5bda0db <ThePreStub+155>: 41 5c popq %r12

0x7ffff5bda0dd <ThePreStub+157>: 41 5d popq %r13

0x7ffff5bda0df <ThePreStub+159>: 41 5e popq %r14

0x7ffff5bda0e1 <ThePreStub+161>: 41 5f popq %r15

0x7ffff5bda0e3 <ThePreStub+163>: 5b popq %rbx

0x7ffff5bda0e4 <ThePreStub+164>: 5d popq %rbp

0x7ffff5bda0e5 <ThePreStub+165>: 48 ff e0 jmpq *%rax

%rax should be patched fixup precode = 0x7fff7c21f5a8

(%rsp) should be the return address before calling "Fixup Precode"

看上去相当长但做的事情很简单, 它的源代码在vm\amd64\theprestubamd64.S:

NESTED_ENTRY ThePreStub, _TEXT, NoHandler

PROLOG_WITH_TRANSITION_BLOCK 0, 0, 0, 0, 0

//

// call PreStubWorker

//

lea rdi, [rsp + __PWTB_TransitionBlock] // pTransitionBlock*

mov rsi, METHODDESC_REGISTER

call C_FUNC(PreStubWorker)

EPILOG_WITH_TRANSITION_BLOCK_TAILCALL

TAILJMP_RAX

NESTED_END ThePreStub, _TEXT

它会备份寄存器到栈, 然后调用PreStubWorker这个函数, 调用完毕以后恢复栈上的寄存器,

再跳转到PreStubWorker的返回结果, 也就是打完补丁后的Fixup Precode的地址(0x7fff7c21f5a8).

PreStubWorker是C编写的函数, 它会调用JIT的编译函数, 然后对Fixup Precode打补丁.

打补丁时会读取前面的5e, 5e代表precode的类型是PRECODE_FIXUP, 打补丁的函数是FixupPrecode::SetTargetInterlocked.

打完补丁以后的Fixup Precode如下:

Fixup Precode:

(lldb) di --bytes -s 0x7fff7c21f5a8

0x7fff7c21f5a8: e9 a3 87 3a 00 jmp 0x7fff7c5c7d50

0x7fff7c21f5ad: 5f popq %rdi

0x7fff7c21f5ae: 19 05 e8 23 6c fe sbbl %eax, -0x193dc18(%rip)

0x7fff7c21f5b4: ff 5e a8 lcalll *-0x58(%rsi)

0x7fff7c21f5b7: 04 e8 addb $-0x18, %al

0x7fff7c21f5b9: 1b 6c fe ff sbbl -0x1(%rsi,%rdi,8), %ebp

0x7fff7c21f5bd: 5e popq %rsi

0x7fff7c21f5be: 00 03 addb %al, (%rbx)

0x7fff7c21f5c0: e8 13 6c fe ff callq 0x7fff7c2061d8

0x7fff7c21f5c5: 5e popq %rsi

0x7fff7c21f5c6: b0 02 movb $0x2, %al

下次再调用函数时就可以直接jmp到编译结果了.

JIT Stub的实现可以让运行时只编译实际会运行的函数, 这样可以大幅减少程序的启动时间, 第二次调用时的消耗(1个jmp)也非常的小.

注意调用虚方法时的流程跟上面的流程有一点不同, 虚方法的地址会保存在函数表中,

打补丁时会对函数表而不是Precode打补丁, 下次调用时函数表中指向的地址是编译后的地址, 有兴趣可以自己试试分析.

接下来我们看看PreStubWorker的内部处理.

JIT的入口点

PreStubWorker的源代码如下:

extern "C" PCODE STDCALL PreStubWorker(TransitionBlock * pTransitionBlock, MethodDesc * pMD)

{

PCODE pbRetVal = NULL;

BEGIN_PRESERVE_LAST_ERROR;

STATIC_CONTRACT_THROWS;

STATIC_CONTRACT_GC_TRIGGERS;

STATIC_CONTRACT_MODE_COOPERATIVE;

STATIC_CONTRACT_ENTRY_POINT;

MAKE_CURRENT_THREAD_AVAILABLE();

#ifdef _DEBUG

Thread::ObjectRefFlush(CURRENT_THREAD);

#endif

FrameWithCookie<PrestubMethodFrame> frame(pTransitionBlock, pMD);

PrestubMethodFrame * pPFrame = &frame;

pPFrame->Push(CURRENT_THREAD);

INSTALL_MANAGED_EXCEPTION_DISPATCHER;

INSTALL_UNWIND_AND_CONTINUE_HANDLER;

ETWOnStartup (PrestubWorker_V1,PrestubWorkerEnd_V1);

_ASSERTE(!NingenEnabled() && "You cannot invoke managed code inside the ngen compilation process.");

// Running the PreStubWorker on a method causes us to access its MethodTable

g_IBCLogger.LogMethodDescAccess(pMD);

// Make sure the method table is restored, and method instantiation if present

pMD->CheckRestore();

CONSISTENCY_CHECK(GetAppDomain()->CheckCanExecuteManagedCode(pMD));

// Note this is redundant with the above check but we do it anyway for safety

//

// This has been disabled so we have a better chance of catching these. Note that this check is

// NOT sufficient for domain neutral and ngen cases.

//

// pMD->EnsureActive();

MethodTable *pDispatchingMT = NULL;

if (pMD->IsVtableMethod())

{

OBJECTREF curobj = pPFrame->GetThis();

if (curobj != NULL) // Check for virtual function called non-virtually on a NULL object

{

pDispatchingMT = curobj->GetTrueMethodTable();

#ifdef FEATURE_ICASTABLE

if (pDispatchingMT->IsICastable())

{

MethodTable *pMDMT = pMD->GetMethodTable();

TypeHandle objectType(pDispatchingMT);

TypeHandle methodType(pMDMT);

GCStress<cfg_any>::MaybeTrigger();

INDEBUG(curobj = NULL); // curobj is unprotected and CanCastTo() can trigger GC

if (!objectType.CanCastTo(methodType))

{

// Apperantly ICastable magic was involved when we chose this method to be called

// that's why we better stick to the MethodTable it belongs to, otherwise

// DoPrestub() will fail not being able to find implementation for pMD in pDispatchingMT.

pDispatchingMT = pMDMT;

}

}

#endif // FEATURE_ICASTABLE

// For value types, the only virtual methods are interface implementations.

// Thus pDispatching == pMT because there

// is no inheritance in value types. Note the BoxedEntryPointStubs are shared

// between all sharable generic instantiations, so the == test is on

// canonical method tables.

#ifdef _DEBUG

MethodTable *pMDMT = pMD->GetMethodTable(); // put this here to see what the MT is in debug mode

_ASSERTE(!pMD->GetMethodTable()->IsValueType() ||

(pMD->IsUnboxingStub() && (pDispatchingMT->GetCanonicalMethodTable() == pMDMT->GetCanonicalMethodTable())));

#endif // _DEBUG

}

}

GCX_PREEMP_THREAD_EXISTS(CURRENT_THREAD);

pbRetVal = pMD->DoPrestub(pDispatchingMT);

UNINSTALL_UNWIND_AND_CONTINUE_HANDLER;

UNINSTALL_MANAGED_EXCEPTION_DISPATCHER;

{

HardwareExceptionHolder

// Give debugger opportunity to stop here

ThePreStubPatch();

}

pPFrame->Pop(CURRENT_THREAD);

POSTCONDITION(pbRetVal != NULL);

END_PRESERVE_LAST_ERROR;

return pbRetVal;

}

这个函数接收了两个参数,

第一个是TransitionBlock, 其实就是一个指向栈的指针, 里面保存了备份的寄存器,

第二个是MethodDesc, 是当前编译函数的信息, lldb中使用dumpmd pMD即可看到具体信息.

之后会调用MethodDesc::DoPrestub, 如果函数是虚方法则传入this对象类型的MethodTable.

MethodDesc::DoPrestub的源代码如下:

PCODE MethodDesc::DoPrestub(MethodTable *pDispatchingMT)

{

CONTRACT(PCODE)

{

STANDARD_VM_CHECK;

POSTCONDITION(RETVAL != NULL);

}

CONTRACT_END;

Stub *pStub = NULL;

PCODE pCode = NULL;

Thread *pThread = GetThread();

MethodTable *pMT = GetMethodTable();

// Running a prestub on a method causes us to access its MethodTable

g_IBCLogger.LogMethodDescAccess(this);

// A secondary layer of defense against executing code in inspection-only assembly.

// This should already have been taken care of by not allowing inspection assemblies

// to be activated. However, this is a very inexpensive piece of insurance in the name

// of security.

if (IsIntrospectionOnly())

{

_ASSERTE(!"A ReflectionOnly assembly reached the prestub. This should not have happened.");

COMPlusThrow(kInvalidOperationException, IDS_EE_CODEEXECUTION_IN_INTROSPECTIVE_ASSEMBLY);

}

if (ContainsGenericVariables())

{

COMPlusThrow(kInvalidOperationException, IDS_EE_CODEEXECUTION_CONTAINSGENERICVAR);

}

/************************** DEBUG CHECKS *************************/

/*-----------------------------------------------------------------

// Halt if needed, GC stress, check the sharing count etc.

*/

#ifdef _DEBUG

static unsigned ctr = 0;

ctr++;

if (g_pConfig->ShouldPrestubHalt(this))

{

_ASSERTE(!"PreStubHalt");

}

LOG((LF_CLASSLOADER, LL_INFO10000, "In PreStubWorker for %s::%s\n",

m_pszDebugClassName, m_pszDebugMethodName));

// This is a nice place to test out having some fatal EE errors. We do this only in a checked build, and only

// under the InjectFatalError key.

if (g_pConfig->InjectFatalError() == 1)

{

EEPOLICY_HANDLE_FATAL_ERROR(COR_E_EXECUTIONENGINE);

}

else if (g_pConfig->InjectFatalError() == 2)

{

EEPOLICY_HANDLE_FATAL_ERROR(COR_E_STACKOVERFLOW);

}

else if (g_pConfig->InjectFatalError() == 3)

{

TestSEHGuardPageRestore();

}

// Useful to test GC with the prestub on the call stack

if (g_pConfig->ShouldPrestubGC(this))

{

GCX_COOP();

GCHeap::GetGCHeap()->GarbageCollect(-1);

}

#endif // _DEBUG

STRESS_LOG1(LF_CLASSLOADER, LL_INFO10000, "Prestubworker: method %pM\n", this);

GCStress<cfg_any, EeconfigFastGcSPolicy, CoopGcModePolicy>::MaybeTrigger();

// Are we in the prestub because of a rejit request? If so, let the ReJitManager

// take it from here.

pCode = ReJitManager::DoReJitIfNecessary(this);

if (pCode != NULL)

{

// A ReJIT was performed, so nothing left for DoPrestub() to do. Return now.

//

// The stable entrypoint will either be a pointer to the original JITted code

// (with a jmp at the top to jump to the newly-rejitted code) OR a pointer to any

// stub code that must be executed first (e.g., a remoting stub), which in turn

// will call the original JITted code (which then jmps to the newly-rejitted

// code).

RETURN GetStableEntryPoint();

}

#ifdef FEATURE_PREJIT

// If this method is the root of a CER call graph and we've recorded this fact in the ngen image then we're in the prestub in

// order to trip any runtime level preparation needed for this graph (P/Invoke stub generation/library binding, generic

// dictionary prepopulation etc.).

GetModule()->RestoreCer(this);

#endif // FEATURE_PREJIT

#ifdef FEATURE_COMINTEROP

/************************** INTEROP *************************/

/*-----------------------------------------------------------------

// Some method descriptors are COMPLUS-to-COM call descriptors

// they are not your every day method descriptors, for example

// they don't have an IL or code.

*/

if (IsComPlusCall() || IsGenericComPlusCall())

{

pCode = GetStubForInteropMethod(this);

GetPrecode()->SetTargetInterlocked(pCode);

RETURN GetStableEntryPoint();

}

#endif // FEATURE_COMINTEROP

// workaround: This is to handle a punted work item dealing with a skipped module constructor

// due to appdomain unload. Basically shared code was JITted in domain A, and then

// this caused a link to another shared module with a module CCTOR, which was skipped

// or aborted in another appdomain we were trying to propagate the activation to.

//

// Note that this is not a fix, but that it just minimizes the window in which the

// issue can occur.

if (pThread->IsAbortRequested())

{

pThread->HandleThreadAbort();

}

/************************** CLASS CONSTRUCTOR ********************/

// Make sure .cctor has been run

if (IsClassConstructorTriggeredViaPrestub())

{

pMT->CheckRunClassInitThrowing();

}

/************************** BACKPATCHING *************************/

// See if the addr of code has changed from the pre-stub

#ifdef FEATURE_INTERPRETER

if (!IsReallyPointingToPrestub())

#else

if (!IsPointingToPrestub())

#endif

{

LOG((LF_CLASSLOADER, LL_INFO10000,

" In PreStubWorker, method already jitted, backpatching call point\n"));

RETURN DoBackpatch(pMT, pDispatchingMT, TRUE);

}

// record if remoting needs to intercept this call

BOOL fRemotingIntercepted = IsRemotingInterceptedViaPrestub();

BOOL fReportCompilationFinished = FALSE;

/************************** CODE CREATION *************************/

if (IsUnboxingStub())

{

pStub = MakeUnboxingStubWorker(this);

}

#ifdef FEATURE_REMOTING

else if (pMT->IsInterface() && !IsStatic() && !IsFCall())

{

pCode = CRemotingServices::GetDispatchInterfaceHelper(this);

GetOrCreatePrecode();

}

#endif // FEATURE_REMOTING

#if defined(FEATURE_SHARE_GENERIC_CODE)

else if (IsInstantiatingStub())

{

pStub = MakeInstantiatingStubWorker(this);

}

#endif // defined(FEATURE_SHARE_GENERIC_CODE)

else if (IsIL() || IsNoMetadata())

{

// remember if we need to backpatch the MethodTable slot

BOOL fBackpatch = !fRemotingIntercepted

&& !IsEnCMethod();

#ifdef FEATURE_PREJIT

//

// See if we have any prejitted code to use.

//

pCode = GetPreImplementedCode();

#ifdef PROFILING_SUPPORTED

if (pCode != NULL)

{

BOOL fShouldSearchCache = TRUE;

{

BEGIN_PIN_PROFILER(CORProfilerTrackCacheSearches());

g_profControlBlock.pProfInterface->

JITCachedFunctionSearchStarted((FunctionID) this,

&fShouldSearchCache);

END_PIN_PROFILER();

}

if (!fShouldSearchCache)

{

#ifdef FEATURE_INTERPRETER

SetNativeCodeInterlocked(NULL, pCode, FALSE);

#else

SetNativeCodeInterlocked(NULL, pCode);

#endif

_ASSERTE(!IsPreImplemented());

pCode = NULL;

}

}

#endif // PROFILING_SUPPORTED

if (pCode != NULL)

{

LOG((LF_ZAP, LL_INFO10000,

"ZAP: Using code" FMT_ADDR "for %s.%s sig=\"%s\" (token %x).\n",

DBG_ADDR(pCode),

m_pszDebugClassName,

m_pszDebugMethodName,

m_pszDebugMethodSignature,

GetMemberDef()));

TADDR pFixupList = GetFixupList();

if (pFixupList != NULL)

{

Module *pZapModule = GetZapModule();

_ASSERTE(pZapModule != NULL);

if (!pZapModule->FixupDelayList(pFixupList))

{

_ASSERTE(!"FixupDelayList failed");

ThrowHR(COR_E_BADIMAGEFORMAT);

}

}

#ifdef HAVE_GCCOVER

if (GCStress<cfg_instr_ngen>::IsEnabled())

SetupGcCoverage(this, (BYTE*) pCode);

#endif // HAVE_GCCOVER

#ifdef PROFILING_SUPPORTED

/*

* This notifies the profiler that a search to find a

* cached jitted function has been made.

*/

{

BEGIN_PIN_PROFILER(CORProfilerTrackCacheSearches());

g_profControlBlock.pProfInterface->

JITCachedFunctionSearchFinished((FunctionID) this, COR_PRF_CACHED_FUNCTION_FOUND);

END_PIN_PROFILER();

}

#endif // PROFILING_SUPPORTED

}

//

// If not, try to jit it

//

#endif // FEATURE_PREJIT

#ifdef FEATURE_READYTORUN

if (pCode == NULL)

{

Module * pModule = GetModule();

if (pModule->IsReadyToRun())

{

pCode = pModule->GetReadyToRunInfo()->GetEntryPoint(this);

if (pCode != NULL)

fReportCompilationFinished = TRUE;

}

}

#endif // FEATURE_READYTORUN

if (pCode == NULL)

{

NewHolder<COR_ILMETHOD_DECODER> pHeader(NULL);

// Get the information on the method

if (!IsNoMetadata())

{

COR_ILMETHOD* ilHeader = GetILHeader(TRUE);

if(ilHeader == NULL)

{

#ifdef FEATURE_COMINTEROP

// Abstract methods can be called through WinRT derivation if the deriving type

// is not implemented in managed code, and calls through the CCW to the abstract

// method. Throw a sensible exception in that case.

if (pMT->IsExportedToWinRT() && IsAbstract())

{

COMPlusThrowHR(E_NOTIMPL);

}

#endif // FEATURE_COMINTEROP

COMPlusThrowHR(COR_E_BADIMAGEFORMAT, BFA_BAD_IL);

}

COR_ILMETHOD_DECODER::DecoderStatus status = COR_ILMETHOD_DECODER::FORMAT_ERROR;

{

// Decoder ctor can AV on a malformed method header

AVInRuntimeImplOkayHolder AVOkay;

pHeader = new COR_ILMETHOD_DECODER(ilHeader, GetMDImport(), &status);

if(pHeader == NULL)

status = COR_ILMETHOD_DECODER::FORMAT_ERROR;

}

if (status == COR_ILMETHOD_DECODER::VERIFICATION_ERROR &&

Security::CanSkipVerification(GetModule()->GetDomainAssembly()))

{

status = COR_ILMETHOD_DECODER::SUCCESS;

}

if (status != COR_ILMETHOD_DECODER::SUCCESS)

{

if (status == COR_ILMETHOD_DECODER::VERIFICATION_ERROR)

{

// Throw a verification HR

COMPlusThrowHR(COR_E_VERIFICATION);

}

else

{

COMPlusThrowHR(COR_E_BADIMAGEFORMAT, BFA_BAD_IL);

}

}

#ifdef _VER_EE_VERIFICATION_ENABLED

static ConfigDWORD peVerify;

if (peVerify.val(CLRConfig::EXTERNAL_PEVerify))

Verify(pHeader, TRUE, FALSE); // Throws a VerifierException if verification fails

#endif // _VER_EE_VERIFICATION_ENABLED

} // end if (!IsNoMetadata())

// JIT it

LOG((LF_CLASSLOADER, LL_INFO1000000,

" In PreStubWorker, calling MakeJitWorker\n"));

// Create the precode eagerly if it is going to be needed later.

if (!fBackpatch)

{

GetOrCreatePrecode();

}

// Mark the code as hot in case the method ends up in the native image

g_IBCLogger.LogMethodCodeAccess(this);

pCode = MakeJitWorker(pHeader, 0, 0);

#ifdef FEATURE_INTERPRETER

if ((pCode != NULL) && !HasStableEntryPoint())

{

// We don't yet have a stable entry point, so don't do backpatching yet.

// But we do have to handle some extra cases that occur in backpatching.

// (Perhaps I *should* get to the backpatching code, but in a mode where we know

// we're not dealing with the stable entry point...)

if (HasNativeCodeSlot())

{

// We called "SetNativeCodeInterlocked" in MakeJitWorker, which updated the native

// code slot, but I think we also want to update the regular slot...

PCODE tmpEntry = GetTemporaryEntryPoint();

PCODE pFound = FastInterlockCompareExchangePointer(GetAddrOfSlot(), pCode, tmpEntry);

// Doesn't matter if we failed -- if we did, it's because somebody else made progress.

if (pFound != tmpEntry) pCode = pFound;

}

// Now we handle the case of a FuncPtrPrecode.

FuncPtrStubs * pFuncPtrStubs = GetLoaderAllocator()->GetFuncPtrStubsNoCreate();

if (pFuncPtrStubs != NULL)

{

Precode* pFuncPtrPrecode = pFuncPtrStubs->Lookup(this);

if (pFuncPtrPrecode != NULL)

{

// If there is a funcptr precode to patch, attempt to patch it. If we lose, that's OK,

// somebody else made progress.

pFuncPtrPrecode->SetTargetInterlocked(pCode);

}

}

}

#endif // FEATURE_INTERPRETER

} // end if (pCode == NULL)

} // end else if (IsIL() || IsNoMetadata())

else if (IsNDirect())

{

if (!GetModule()->GetSecurityDescriptor()->CanCallUnmanagedCode())

Security::ThrowSecurityException(g_SecurityPermissionClassName, SPFLAGSUNMANAGEDCODE);

pCode = GetStubForInteropMethod(this);

GetOrCreatePrecode();

}

else if (IsFCall())

{

// Get the fcall implementation

BOOL fSharedOrDynamicFCallImpl;

pCode = ECall::GetFCallImpl(this, &fSharedOrDynamicFCallImpl);

if (fSharedOrDynamicFCallImpl)

{

// Fake ctors share one implementation that has to be wrapped by prestub

GetOrCreatePrecode();

}

}

else if (IsArray())

{

pStub = GenerateArrayOpStub((ArrayMethodDesc*)this);

}

else if (IsEEImpl())

{

_ASSERTE(GetMethodTable()->IsDelegate());

pCode = COMDelegate::GetInvokeMethodStub((EEImplMethodDesc*)this);

GetOrCreatePrecode();

}

else

{

// This is a method type we don't handle yet

_ASSERTE(!"Unknown Method Type");

}

/************************** POSTJIT *************************/

#ifndef FEATURE_INTERPRETER

_ASSERTE(pCode == NULL || GetNativeCode() == NULL || pCode == GetNativeCode());

#else // FEATURE_INTERPRETER

// Interpreter adds a new possiblity == someone else beat us to installing an intepreter stub.

_ASSERTE(pCode == NULL || GetNativeCode() == NULL || pCode == GetNativeCode()

|| Interpreter::InterpretationStubToMethodInfo(pCode) == this);

#endif // FEATURE_INTERPRETER

// At this point we must have either a pointer to managed code or to a stub. All of the above code

// should have thrown an exception if it couldn't make a stub.

_ASSERTE((pStub != NULL) ^ (pCode != NULL));

/************************** SECURITY *************************/

// Lets check to see if we need declarative security on this stub, If we have

// security checks on this method or class then we need to add an intermediate

// stub that performs declarative checks prior to calling the real stub.

// record if security needs to intercept this call (also depends on whether we plan to use stubs for declarative security)

#if !defined( HAS_REMOTING_PRECODE) && defined (FEATURE_REMOTING)

/************************** REMOTING *************************/

// check for MarshalByRef scenarios ... we need to intercept

// Non-virtual calls on MarshalByRef types

if (fRemotingIntercepted)

{

// let us setup a remoting stub to intercept all the calls

Stub *pRemotingStub = CRemotingServices::GetStubForNonVirtualMethod(this,

(pStub != NULL) ? (LPVOID)pStub->GetEntryPoint() : (LPVOID)pCode, pStub);

if (pRemotingStub != NULL)

{

pStub = pRemotingStub;

pCode = NULL;

}

}

#endif // HAS_REMOTING_PRECODE

_ASSERTE((pStub != NULL) ^ (pCode != NULL));

#if defined(_TARGET_X86_) || defined(_TARGET_AMD64_)

//

// We are seeing memory reordering race around fixups (see DDB 193514 and related bugs). We get into

// situation where the patched precode is visible by other threads, but the resolved fixups

// are not. IT SHOULD NEVER HAPPEN according to our current understanding of x86/x64 memory model.

// (see email thread attached to the bug for details).

//

// We suspect that there may be bug in the hardware or that hardware may have shortcuts that may be

// causing grief. We will try to avoid the race by executing an extra memory barrier.

//

MemoryBarrier();

#endif

if (pCode != NULL)

{

if (HasPrecode())

GetPrecode()->SetTargetInterlocked(pCode);

else

if (!HasStableEntryPoint())

{

// Is the result an interpreter stub?

#ifdef FEATURE_INTERPRETER

if (Interpreter::InterpretationStubToMethodInfo(pCode) == this)

{

SetEntryPointInterlocked(pCode);

}

else

#endif // FEATURE_INTERPRETER

{

SetStableEntryPointInterlocked(pCode);

}

}

}

else

{

if (!GetOrCreatePrecode()->SetTargetInterlocked(pStub->GetEntryPoint()))

{

pStub->DecRef();

}

else

if (pStub->HasExternalEntryPoint())

{

// If the Stub wraps code that is outside of the Stub allocation, then we

// need to free the Stub allocation now.

pStub->DecRef();

}

}

#ifdef FEATURE_INTERPRETER

_ASSERTE(!IsReallyPointingToPrestub());

#else // FEATURE_INTERPRETER

_ASSERTE(!IsPointingToPrestub());

_ASSERTE(HasStableEntryPoint());

#endif // FEATURE_INTERPRETER

if (fReportCompilationFinished)

DACNotifyCompilationFinished(this);

RETURN DoBackpatch(pMT, pDispatchingMT, FALSE);

}

这个函数比较长, 我们只需要关注两个地方:

pCode = MakeJitWorker(pHeader, 0, 0);

MakeJitWorker会调用JIT编译函数, pCode是编译后的机器代码地址.

if (HasPrecode())

GetPrecode()->SetTargetInterlocked(pCode);

SetTargetInterlocked会对Precode打补丁, 第二次调用函数时会直接跳转到编译结果.

MakeJitWorker的源代码如下:

PCODE MethodDesc::MakeJitWorker(COR_ILMETHOD_DECODER* ILHeader, DWORD flags, DWORD flags2)

{

STANDARD_VM_CONTRACT;

BOOL fIsILStub = IsILStub(); // @TODO: understand the need for this special case

LOG((LF_JIT, LL_INFO1000000,

"MakeJitWorker(" FMT_ADDR ", %s) for %s:%s\n",

DBG_ADDR(this),

fIsILStub ? " TRUE" : "FALSE",

GetMethodTable()->GetDebugClassName(),

m_pszDebugMethodName));

PCODE pCode = NULL;

ULONG sizeOfCode = 0;

#ifdef FEATURE_INTERPRETER

PCODE pPreviousInterpStub = NULL;

BOOL fInterpreted = FALSE;

BOOL fStable = TRUE; // True iff the new code address (to be stored in pCode), is a stable entry point.

#endif

#ifdef FEATURE_MULTICOREJIT

MulticoreJitManager & mcJitManager = GetAppDomain()->GetMulticoreJitManager();

bool fBackgroundThread = (flags & CORJIT_FLG_MCJIT_BACKGROUND) != 0;

#endif

{

// Enter the global lock which protects the list of all functions being JITd

ListLockHolder pJitLock (GetDomain()->GetJitLock());

// It is possible that another thread stepped in before we entered the global lock for the first time.

pCode = GetNativeCode();

if (pCode != NULL)

{

#ifdef FEATURE_INTERPRETER

if (Interpreter::InterpretationStubToMethodInfo(pCode) == this)

{

pPreviousInterpStub = pCode;

}

else

#endif // FEATURE_INTERPRETER

goto Done;

}

const char *description = "jit lock";

INDEBUG(description = m_pszDebugMethodName;)

ListLockEntryHolder pEntry(ListLockEntry::Find(pJitLock, this, description));

// We have an entry now, we can release the global lock

pJitLock.Release();

// Take the entry lock

{

ListLockEntryLockHolder pEntryLock(pEntry, FALSE);

if (pEntryLock.DeadlockAwareAcquire())

{

if (pEntry->m_hrResultCode == S_FALSE)

{

// Nobody has jitted the method yet

}

else

{

// We came in to jit but someone beat us so return the

// jitted method!

// We can just fall through because we will notice below that

// the method has code.

// @todo: Note that we may have a failed HRESULT here -

// we might want to return an early error rather than

// repeatedly failing the jit.

}

}

else

{

// Taking this lock would cause a deadlock (presumably because we

// are involved in a class constructor circular dependency.) For

// instance, another thread may be waiting to run the class constructor

// that we are jitting, but is currently jitting this function.

//

// To remedy this, we want to go ahead and do the jitting anyway.

// The other threads contending for the lock will then notice that

// the jit finished while they were running class constructors, and abort their

// current jit effort.

//

// We don't have to do anything special right here since we

// can check HasNativeCode() to detect this case later.

//

// Note that at this point we don't have the lock, but that's OK because the

// thread which does have the lock is blocked waiting for us.

}

// It is possible that another thread stepped in before we entered the lock.

pCode = GetNativeCode();

#ifdef FEATURE_INTERPRETER

if (pCode != NULL && (pCode != pPreviousInterpStub))

#else

if (pCode != NULL)

#endif // FEATURE_INTERPRETER

{

goto Done;

}

SString namespaceOrClassName, methodName, methodSignature;

PCODE pOtherCode = NULL; // Need to move here due to 'goto GotNewCode'

#ifdef FEATURE_MULTICOREJIT

bool fCompiledInBackground = false;

// If not called from multi-core JIT thread,

if (! fBackgroundThread)

{

// Quick check before calling expensive out of line function on this method's domain has code JITted by background thread

if (mcJitManager.GetMulticoreJitCodeStorage().GetRemainingMethodCount() > 0)

{

if (MulticoreJitManager::IsMethodSupported(this))

{

pCode = mcJitManager.RequestMethodCode(this); // Query multi-core JIT manager for compiled code

// Multicore JIT manager starts background thread to pre-compile methods, but it does not back-patch it/notify profiler/notify DAC,

// Jumtp to GotNewCode to do so

if (pCode != NULL)

{

fCompiledInBackground = true;

#ifdef DEBUGGING_SUPPORTED

// Notify the debugger of the jitted function

if (g_pDebugInterface != NULL)

{

g_pDebugInterface->JITComplete(this, pCode);

}

#endif

goto GotNewCode;

}

}

}

}

#endif

if (fIsILStub)

{

// we race with other threads to JIT the code for an IL stub and the

// IL header is released once one of the threads completes. As a result

// we must be inside the lock to reliably get the IL header for the

// stub.

ILStubResolver* pResolver = AsDynamicMethodDesc()->GetILStubResolver();

ILHeader = pResolver->GetILHeader();

}

#ifdef MDA_SUPPORTED

MdaJitCompilationStart* pProbe = MDA_GET_ASSISTANT(JitCompilationStart);

if (pProbe)

pProbe->NowCompiling(this);

#endif // MDA_SUPPORTED

#ifdef PROFILING_SUPPORTED

// If profiling, need to give a chance for a tool to examine and modify

// the IL before it gets to the JIT. This allows one to add probe calls for

// things like code coverage, performance, or whatever.

{

BEGIN_PIN_PROFILER(CORProfilerTrackJITInfo());

// Multicore JIT should be disabled when CORProfilerTrackJITInfo is on

// But there could be corner case in which profiler is attached when multicore background thread is calling MakeJitWorker

// Disable this block when calling from multicore JIT background thread

if (!IsNoMetadata()

#ifdef FEATURE_MULTICOREJIT

&& (! fBackgroundThread)

#endif

)

{

g_profControlBlock.pProfInterface->JITCompilationStarted((FunctionID) this, TRUE);

// The profiler may have changed the code on the callback. Need to

// pick up the new code. Note that you have to be fully trusted in

// this mode and the code will not be verified.

COR_ILMETHOD *pilHeader = GetILHeader(TRUE);

new (ILHeader) COR_ILMETHOD_DECODER(pilHeader, GetMDImport(), NULL);

}

END_PIN_PROFILER();

}

#endif // PROFILING_SUPPORTED

#ifdef FEATURE_INTERPRETER

// We move the ETW event for start of JITting inward, after we make the decision

// to JIT rather than interpret.

#else // FEATURE_INTERPRETER

// Fire an ETW event to mark the beginning of JIT'ing

ETW::MethodLog::MethodJitting(this, &namespaceOrClassName, &methodName, &methodSignature);

#endif // FEATURE_INTERPRETER

#ifdef FEATURE_STACK_SAMPLING

#ifdef FEATURE_MULTICOREJIT

if (!fBackgroundThread)

#endif // FEATURE_MULTICOREJIT

{

StackSampler::RecordJittingInfo(this, flags, flags2);

}

#endif // FEATURE_STACK_SAMPLING

EX_TRY

{

pCode = UnsafeJitFunction(this, ILHeader, flags, flags2, &sizeOfCode);

}

EX_CATCH

{

// If the current thread threw an exception, but a competing thread

// somehow succeeded at JITting the same function (e.g., out of memory

// encountered on current thread but not competing thread), then go ahead

// and swallow this current thread's exception, since we somehow managed

// to successfully JIT the code on the other thread.

//

// Note that if a deadlock cycle is broken, that does not result in an

// exception--the thread would just pass through the lock and JIT the

// function in competition with the other thread (with the winner of the

// race decided later on when we do SetNativeCodeInterlocked). This

// try/catch is purely to deal with the (unusual) case where a competing

// thread succeeded where we aborted.

pOtherCode = GetNativeCode();

if (pOtherCode == NULL)

{

pEntry->m_hrResultCode = E_FAIL;

EX_RETHROW;

}

}

EX_END_CATCH(RethrowTerminalExceptions)

if (pOtherCode != NULL)

{

// Somebody finished jitting recursively while we were jitting the method.

// Just use their method & leak the one we finished. (Normally we hope

// not to finish our JIT in this case, as we will abort early if we notice

// a reentrant jit has occurred. But we may not catch every place so we

// do a definitive final check here.

pCode = pOtherCode;

goto Done;

}

_ASSERTE(pCode != NULL);

#ifdef HAVE_GCCOVER

if (GCStress<cfg_instr_jit>::IsEnabled())

{

SetupGcCoverage(this, (BYTE*) pCode);

}

#endif // HAVE_GCCOVER

#ifdef FEATURE_INTERPRETER

// Determine whether the new code address is "stable"...= is not an interpreter stub.

fInterpreted = (Interpreter::InterpretationStubToMethodInfo(pCode) == this);

fStable = !fInterpreted;

#endif // FEATURE_INTERPRETER

#ifdef FEATURE_MULTICOREJIT

// If called from multi-core JIT background thread, store code under lock, delay patching until code is queried from application threads

if (fBackgroundThread)

{

// Fire an ETW event to mark the end of JIT'ing

ETW::MethodLog::MethodJitted(this, &namespaceOrClassName, &methodName, &methodSignature, pCode, 0 /* ReJITID */);

#ifdef FEATURE_PERFMAP

// Save the JIT'd method information so that perf can resolve JIT'd call frames.

PerfMap::LogJITCompiledMethod(this, pCode, sizeOfCode);

#endif

mcJitManager.GetMulticoreJitCodeStorage().StoreMethodCode(this, pCode);

goto Done;

}

GotNewCode:

#endif

// If this function had already been requested for rejit (before its original

// code was jitted), then give the rejit manager a chance to jump-stamp the

// code we just compiled so the first thread entering the function will jump

// to the prestub and trigger the rejit. Note that the PublishMethodHolder takes

// a lock to avoid a particular kind of rejit race. See

// code:ReJitManager::PublishMethodHolder::PublishMethodHolder#PublishCode for

// details on the rejit race.

//

// Aside from rejit, performing a SetNativeCodeInterlocked at this point

// generally ensures that there is only one winning version of the native

// code. This also avoid races with profiler overriding ngened code (see

// matching SetNativeCodeInterlocked done after

// JITCachedFunctionSearchStarted)

#ifdef FEATURE_INTERPRETER

PCODE pExpected = pPreviousInterpStub;

if (pExpected == NULL) pExpected = GetTemporaryEntryPoint();

#endif

{

ReJitPublishMethodHolder publishWorker(this, pCode);

if (!SetNativeCodeInterlocked(pCode

#ifdef FEATURE_INTERPRETER

, pExpected, fStable

#endif

))

{

// Another thread beat us to publishing its copy of the JITted code.

pCode = GetNativeCode();

goto Done;

}

}

#ifdef FEATURE_INTERPRETER

// State for dynamic methods cannot be freed if the method was ever interpreted,

// since there is no way to ensure that it is not in use at the moment.

if (IsDynamicMethod() && !fInterpreted && (pPreviousInterpStub == NULL))

{

AsDynamicMethodDesc()->GetResolver()->FreeCompileTimeState();

}

#endif // FEATURE_INTERPRETER

// We succeeded in jitting the code, and our jitted code is the one that's going to run now.

pEntry->m_hrResultCode = S_OK;

#ifdef PROFILING_SUPPORTED

// Notify the profiler that JIT completed.

// Must do this after the address has been set.

// @ToDo: Why must we set the address before notifying the profiler ??

// Note that if IsInterceptedForDeclSecurity is set no one should access the jitted code address anyway.

{

BEGIN_PIN_PROFILER(CORProfilerTrackJITInfo());

if (!IsNoMetadata())

{

g_profControlBlock.pProfInterface->

JITCompilationFinished((FunctionID) this,

pEntry->m_hrResultCode,

TRUE);

}

END_PIN_PROFILER();

}

#endif // PROFILING_SUPPORTED

#ifdef FEATURE_MULTICOREJIT

if (! fCompiledInBackground)

#endif

#ifdef FEATURE_INTERPRETER

// If we didn't JIT, but rather, created an interpreter stub (i.e., fStable is false), don't tell ETW that we did.

if (fStable)

#endif // FEATURE_INTERPRETER

{

// Fire an ETW event to mark the end of JIT'ing

ETW::MethodLog::MethodJitted(this, &namespaceOrClassName, &methodName, &methodSignature, pCode, 0 /* ReJITID */);

#ifdef FEATURE_PERFMAP

// Save the JIT'd method information so that perf can resolve JIT'd call frames.

PerfMap::LogJITCompiledMethod(this, pCode, sizeOfCode);

#endif

}

#ifdef FEATURE_MULTICOREJIT

// If not called from multi-core JIT thread, not got code from storage, quick check before calling out of line function

if (! fBackgroundThread && ! fCompiledInBackground && mcJitManager.IsRecorderActive())

{

if (MulticoreJitManager::IsMethodSupported(this))

{

mcJitManager.RecordMethodJit(this); // Tell multi-core JIT manager to record method on successful JITting

}

}

#endif

if (!fIsILStub)

{

// The notification will only occur if someone has registered for this method.

DACNotifyCompilationFinished(this);

}

}

}

Done:

// We must have a code by now.

_ASSERTE(pCode != NULL);

LOG((LF_CORDB, LL_EVERYTHING, "MethodDesc::MakeJitWorker finished. Stub is" FMT_ADDR "\n",

DBG_ADDR(pCode)));

return pCode;

}

这个函数是线程安全的JIT函数,

如果多个线程编译同一个函数, 其中一个线程会执行编译, 其他线程会等待编译完成.

每个AppDomain会有一个锁的集合, 一个正在编译的函数拥有一个ListLockEntry对象,

函数首先会对集合上锁, 获取或者创建函数对应的ListLockEntry, 然后释放对集合的锁,

这个时候所有线程对同一个函数都会获取到同一个ListLockEntry, 然后再对ListLockEntry上锁.

上锁后调用非线程安全的JIT函数:

pCode = UnsafeJitFunction(this, ILHeader, flags, flags2, &sizeOfCode)

接下来还有几层调用才会到JIT主函数, 我只简单说明他们的处理:

这个函数会创建CEEJitInfo(JIT层给EE层反馈使用的类)的实例, 从函数信息中获取编译标志(是否以Debug模式编译),

调用CallCompileMethodWithSEHWrapper, 并且在相对地址溢出时禁止使用相对地址(fAllowRel32)然后重试编译.

CallCompileMethodWithSEHWrapper

这个函数会在try中调用invokeCompileMethod.

这个函数让当前线程进入Preemptive模式(GC可以不用挂起当前线程), 然后调用invokeCompileMethodHelper.

这个函数一般情况下会调用jitMgr->m_jit->compileMethod.

这个函数一般情况下会调用jitNativeCode.

创建和初始化Compiler的实例, 并调用pParam->pComp->compCompile(7参数版).

内联时也会从这个函数开始调用, 如果是内联则Compiler实例会在第一次创建后复用.

Compiler负责单个函数的整个JIT过程.

这个函数会对Compiler实例做出一些初始化处理, 然后调用Compiler::compCompileHelper.

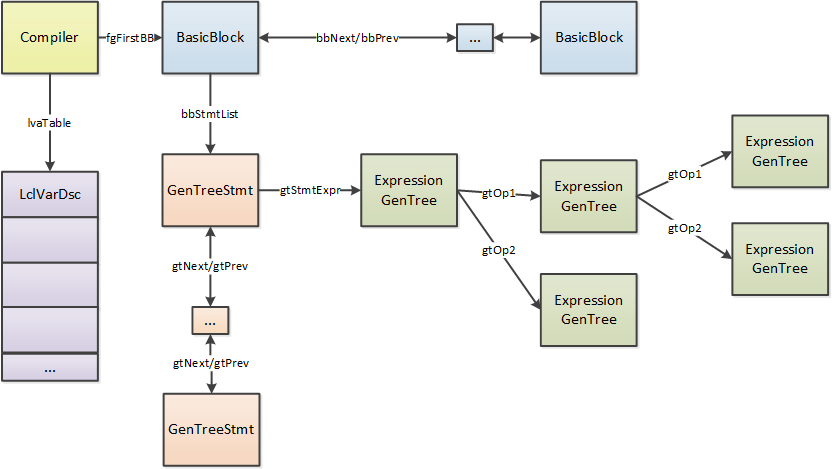

这个函数会先创建本地变量表lvaTable和BasicBlock的链表,

必要时添加一个内部使用的block(BB01), 然后解析IL代码添加更多的block, 具体将在下面说明.

然后调用compCompile(3参数版).

这就是JIT的主函数, 这个函数负责调用JIT各个阶段的工作, 具体将在下面说明.

创建本地变量表

compCompileHelper会调用lvaInitTypeRef,

lvaInitTypeRef会创建本地变量表, 源代码如下:

void Compiler::lvaInitTypeRef()

{

/* x86 args look something like this:

[this ptr] [hidden return buffer] [declared arguments]* [generic context] [var arg cookie]

x64 is closer to the native ABI:

[this ptr] [hidden return buffer] [generic context] [var arg cookie] [declared arguments]*

(Note: prior to .NET Framework 4.5.1 for Windows 8.1 (but not .NET Framework 4.5.1 "downlevel"),

the "hidden return buffer" came before the "this ptr". Now, the "this ptr" comes first. This

is different from the C++ order, where the "hidden return buffer" always comes first.)

ARM and ARM64 are the same as the current x64 convention:

[this ptr] [hidden return buffer] [generic context] [var arg cookie] [declared arguments]*

Key difference:

The var arg cookie and generic context are swapped with respect to the user arguments

*/

/* Set compArgsCount and compLocalsCount */

info.compArgsCount = info.compMethodInfo->args.numArgs;

// Is there a 'this' pointer

if (!info.compIsStatic)

{

info.compArgsCount++;

}

else

{

info.compThisArg = BAD_VAR_NUM;

}

info.compILargsCount = info.compArgsCount;

#ifdef FEATURE_SIMD

if (featureSIMD && (info.compRetNativeType == TYP_STRUCT))

{

var_types structType = impNormStructType(info.compMethodInfo->args.retTypeClass);

info.compRetType = structType;

}

#endif // FEATURE_SIMD

// Are we returning a struct using a return buffer argument?

//

const bool hasRetBuffArg = impMethodInfo_hasRetBuffArg(info.compMethodInfo);

// Possibly change the compRetNativeType from TYP_STRUCT to a "primitive" type

// when we are returning a struct by value and it fits in one register

//

if (!hasRetBuffArg && varTypeIsStruct(info.compRetNativeType))

{

CORINFO_CLASS_HANDLE retClsHnd = info.compMethodInfo->args.retTypeClass;

Compiler::structPassingKind howToReturnStruct;

var_types returnType = getReturnTypeForStruct(retClsHnd, &howToReturnStruct);

if (howToReturnStruct == SPK_PrimitiveType)

{

assert(returnType != TYP_UNKNOWN);

assert(returnType != TYP_STRUCT);

info.compRetNativeType = returnType;

// ToDo: Refactor this common code sequence into its own method as it is used 4+ times

if ((returnType == TYP_LONG) && (compLongUsed == false))

{

compLongUsed = true;

}

else if (((returnType == TYP_FLOAT) || (returnType == TYP_DOUBLE)) && (compFloatingPointUsed == false))

{

compFloatingPointUsed = true;

}

}

}

// Do we have a RetBuffArg?

if (hasRetBuffArg)

{

info.compArgsCount++;

}

else

{

info.compRetBuffArg = BAD_VAR_NUM;

}

/* There is a 'hidden' cookie pushed last when the

calling convention is varargs */

if (info.compIsVarArgs)

{

info.compArgsCount++;

}

// Is there an extra parameter used to pass instantiation info to

// shared generic methods and shared generic struct instance methods?

if (info.compMethodInfo->args.callConv & CORINFO_CALLCONV_PARAMTYPE)

{

info.compArgsCount++;

}

else

{

info.compTypeCtxtArg = BAD_VAR_NUM;

}

lvaCount = info.compLocalsCount = info.compArgsCount + info.compMethodInfo->locals.numArgs;

info.compILlocalsCount = info.compILargsCount + info.compMethodInfo->locals.numArgs;

/* Now allocate the variable descriptor table */

if (compIsForInlining())

{

lvaTable = impInlineInfo->InlinerCompiler->lvaTable;

lvaCount = impInlineInfo->InlinerCompiler->lvaCount;

lvaTableCnt = impInlineInfo->InlinerCompiler->lvaTableCnt;

// No more stuff needs to be done.

return;

}

lvaTableCnt = lvaCount * 2;

if (lvaTableCnt < 16)

{

lvaTableCnt = 16;

}

lvaTable = (LclVarDsc*)compGetMemArray(lvaTableCnt, sizeof(*lvaTable), CMK_LvaTable);

size_t tableSize = lvaTableCnt * sizeof(*lvaTable);

memset(lvaTable, 0, tableSize);

for (unsigned i = 0; i < lvaTableCnt; i++)

{

new (&lvaTable[i], jitstd::placement_t()) LclVarDsc(this); // call the constructor.

}

//-------------------------------------------------------------------------

// Count the arguments and initialize the respective lvaTable[] entries

//

// First the implicit arguments

//-------------------------------------------------------------------------

InitVarDscInfo varDscInfo;

varDscInfo.Init(lvaTable, hasRetBuffArg);

lvaInitArgs(&varDscInfo);

//-------------------------------------------------------------------------

// Finally the local variables

//-------------------------------------------------------------------------

unsigned varNum = varDscInfo.varNum;

LclVarDsc* varDsc = varDscInfo.varDsc;

CORINFO_ARG_LIST_HANDLE localsSig = info.compMethodInfo->locals.args;

for (unsigned i = 0; i < info.compMethodInfo->locals.numArgs;

i++, varNum++, varDsc++, localsSig = info.compCompHnd->getArgNext(localsSig))

{

CORINFO_CLASS_HANDLE typeHnd;

CorInfoTypeWithMod corInfoType =

info.compCompHnd->getArgType(&info.compMethodInfo->locals, localsSig, &typeHnd);

lvaInitVarDsc(varDsc, varNum, strip(corInfoType), typeHnd, localsSig, &info.compMethodInfo->locals);

varDsc->lvPinned = ((corInfoType & CORINFO_TYPE_MOD_PINNED) != 0);

varDsc->lvOnFrame = true; // The final home for this local variable might be our local stack frame

}

if ( // If there already exist unsafe buffers, don't mark more structs as unsafe

// as that will cause them to be placed along with the real unsafe buffers,

// unnecessarily exposing them to overruns. This can affect GS tests which

// intentionally do buffer-overruns.

!getNeedsGSSecurityCookie() &&

// GS checks require the stack to be re-ordered, which can't be done with EnC

!opts.compDbgEnC && compStressCompile(STRESS_UNSAFE_BUFFER_CHECKS, 25))

{

setNeedsGSSecurityCookie();

compGSReorderStackLayout = true;

for (unsigned i = 0; i < lvaCount; i++)

{

if ((lvaTable[i].lvType == TYP_STRUCT) && compStressCompile(STRESS_GENERIC_VARN, 60))

{

lvaTable[i].lvIsUnsafeBuffer = true;

}

}

}

if (getNeedsGSSecurityCookie())

{

// Ensure that there will be at least one stack variable since

// we require that the GSCookie does not have a 0 stack offset.

unsigned dummy = lvaGrabTempWithImplicitUse(false DEBUGARG("GSCookie dummy"));

lvaTable[dummy].lvType = TYP_INT;

}

#ifdef DEBUG

if (verbose)

{

lvaTableDump(INITIAL_FRAME_LAYOUT);

}

#endif

}

初始的本地变量数量是info.compArgsCount + info.compMethodInfo->locals.numArgs, 也就是IL中的参数数量+IL中的本地变量数量.

因为后面可能会添加更多的临时变量, 本地变量表的储存采用了length+capacity的方式,

本地变量表的指针是lvaTable, 当前长度是lvaCount, 最大长度是lvaTableCnt.

本地变量表的开头部分会先保存IL中的参数变量, 随后才是IL中的本地变量,

例如有3个参数, 2个本地变量时, 本地变量表是[参数0, 参数1, 参数2, 变量0, 变量1, 空, 空, 空, ... ].

此外如果对当前函数的编译是为了内联, 本地变量表会使用调用端(callsite)的对象.

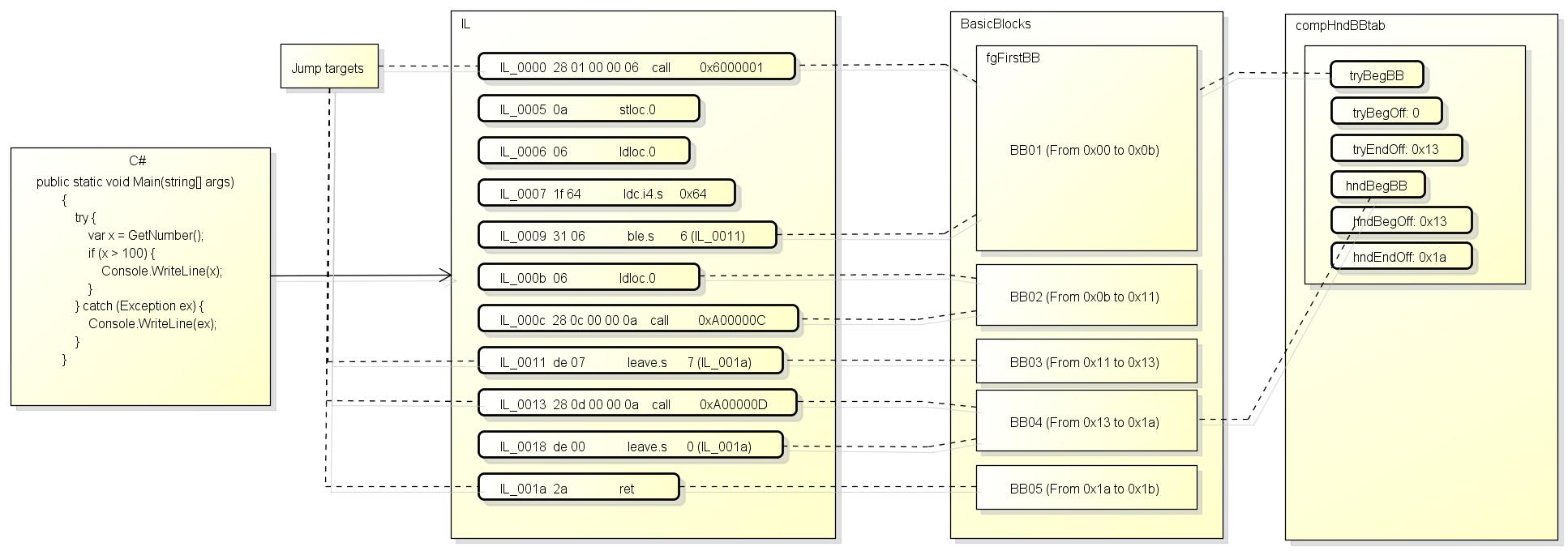

根据IL创建BasicBlock

在进入JIT的主函数之前, compCompileHelper会先解析IL并且根据指令创建BasicBlock.

在上一篇中也提到过,

BasicBlock是内部不包含跳转的逻辑块, 跳转指令原则只出现在block的最后, 同时跳转目标只能是block的开头.

创建BasicBlock的逻辑在函数fgFindBasicBlocks, 我们来看看它的源代码:

/*****************************************************************************

*

* Main entry point to discover the basic blocks for the current function.

*/

void Compiler::fgFindBasicBlocks()

{

#ifdef DEBUG

if (verbose)

{

printf("*************** In fgFindBasicBlocks() for %s\n", info.compFullName);

}

#endif

/* Allocate the 'jump target' vector

*

* We need one extra byte as we mark

* jumpTarget[info.compILCodeSize] with JT_ADDR

* when we need to add a dummy block

* to record the end of a try or handler region.

*/

BYTE* jumpTarget = new (this, CMK_Unknown) BYTE[info.compILCodeSize + 1];

memset(jumpTarget, JT_NONE, info.compILCodeSize + 1);

noway_assert(JT_NONE == 0);

/* Walk the instrs to find all jump targets */

fgFindJumpTargets(info.compCode, info.compILCodeSize, jumpTarget);

if (compDonotInline())

{

return;

}

unsigned XTnum;

/* Are there any exception handlers? */

if (info.compXcptnsCount > 0)

{

noway_assert(!compIsForInlining());

/* Check and mark all the exception handlers */

for (XTnum = 0; XTnum < info.compXcptnsCount; XTnum++)

{

DWORD tmpOffset;

CORINFO_EH_CLAUSE clause;

info.compCompHnd->getEHinfo(info.compMethodHnd, XTnum, &clause);

noway_assert(clause.HandlerLength != (unsigned)-1);

if (clause.TryLength <= 0)

{

BADCODE("try block length <=0");

}

/* Mark the 'try' block extent and the handler itself */

if (clause.TryOffset > info.compILCodeSize)

{

BADCODE("try offset is > codesize");

}

if (jumpTarget[clause.TryOffset] == JT_NONE)

{

jumpTarget[clause.TryOffset] = JT_ADDR;

}

tmpOffset = clause.TryOffset + clause.TryLength;

if (tmpOffset > info.compILCodeSize)

{

BADCODE("try end is > codesize");

}

if (jumpTarget[tmpOffset] == JT_NONE)

{

jumpTarget[tmpOffset] = JT_ADDR;

}

if (clause.HandlerOffset > info.compILCodeSize)

{

BADCODE("handler offset > codesize");

}

if (jumpTarget[clause.HandlerOffset] == JT_NONE)

{

jumpTarget[clause.HandlerOffset] = JT_ADDR;

}

tmpOffset = clause.HandlerOffset + clause.HandlerLength;

if (tmpOffset > info.compILCodeSize)

{

BADCODE("handler end > codesize");

}

if (jumpTarget[tmpOffset] == JT_NONE)

{

jumpTarget[tmpOffset] = JT_ADDR;

}

if (clause.Flags & CORINFO_EH_CLAUSE_FILTER)

{

if (clause.FilterOffset > info.compILCodeSize)

{

BADCODE("filter offset > codesize");

}

if (jumpTarget[clause.FilterOffset] == JT_NONE)

{

jumpTarget[clause.FilterOffset] = JT_ADDR;

}

}

}

}

#ifdef DEBUG

if (verbose)

{

bool anyJumpTargets = false;

printf("Jump targets:\n");

for (unsigned i = 0; i < info.compILCodeSize + 1; i++)

{

if (jumpTarget[i] == JT_NONE)

{

continue;

}

anyJumpTargets = true;

printf(" IL_%04x", i);

if (jumpTarget[i] & JT_ADDR)

{

printf(" addr");

}

if (jumpTarget[i] & JT_MULTI)

{

printf(" multi");

}

printf("\n");

}

if (!anyJumpTargets)

{

printf(" none\n");

}

}

#endif // DEBUG

/* Now create the basic blocks */

fgMakeBasicBlocks(info.compCode, info.compILCodeSize, jumpTarget);

if (compIsForInlining())

{

if (compInlineResult->IsFailure())

{

return;

}

bool hasReturnBlocks = false;

bool hasMoreThanOneReturnBlock = false;

for (BasicBlock* block = fgFirstBB; block != nullptr; block = block->bbNext)

{

if (block->bbJumpKind == BBJ_RETURN)

{

if (hasReturnBlocks)

{

hasMoreThanOneReturnBlock = true;

break;

}

hasReturnBlocks = true;

}

}

if (!hasReturnBlocks && !compInlineResult->UsesLegacyPolicy())

{

//

// Mark the call node as "no return". The inliner might ignore CALLEE_DOES_NOT_RETURN and

// fail inline for a different reasons. In that case we still want to make the "no return"

// information available to the caller as it can impact caller's code quality.

//

impInlineInfo->iciCall->gtCallMoreFlags |= GTF_CALL_M_DOES_NOT_RETURN;

}

compInlineResult->NoteBool(InlineObservation::CALLEE_DOES_NOT_RETURN, !hasReturnBlocks);

if (compInlineResult->IsFailure())

{

return;

}

noway_assert(info.compXcptnsCount == 0);

compHndBBtab = impInlineInfo->InlinerCompiler->compHndBBtab;

compHndBBtabAllocCount =

impInlineInfo->InlinerCompiler->compHndBBtabAllocCount; // we probably only use the table, not add to it.

compHndBBtabCount = impInlineInfo->InlinerCompiler->compHndBBtabCount;

info.compXcptnsCount = impInlineInfo->InlinerCompiler->info.compXcptnsCount;

if (info.compRetNativeType != TYP_VOID && hasMoreThanOneReturnBlock)

{

// The lifetime of this var might expand multiple BBs. So it is a long lifetime compiler temp.

lvaInlineeReturnSpillTemp = lvaGrabTemp(false DEBUGARG("Inline candidate multiple BBJ_RETURN spill temp"));

lvaTable[lvaInlineeReturnSpillTemp].lvType = info.compRetNativeType;

}

return;

}

/* Mark all blocks within 'try' blocks as such */

if (info.compXcptnsCount == 0)

{

return;

}

if (info.compXcptnsCount > MAX_XCPTN_INDEX)

{

IMPL_LIMITATION("too many exception clauses");

}

/* Allocate the exception handler table */

fgAllocEHTable();

/* Assume we don't need to sort the EH table (such that nested try/catch

* appear before their try or handler parent). The EH verifier will notice

* when we do need to sort it.

*/

fgNeedToSortEHTable = false;

verInitEHTree(info.compXcptnsCount);

EHNodeDsc* initRoot = ehnNext; // remember the original root since

// it may get modified during insertion

// Annotate BBs with exception handling information required for generating correct eh code

// as well as checking for correct IL

EHblkDsc* HBtab;

for (XTnum = 0, HBtab = compHndBBtab; XTnum < compHndBBtabCount; XTnum++, HBtab++)

{

CORINFO_EH_CLAUSE clause;

info.compCompHnd->getEHinfo(info.compMethodHnd, XTnum, &clause);

noway_assert(clause.HandlerLength != (unsigned)-1); // @DEPRECATED

#ifdef DEBUG

if (verbose)

{

dispIncomingEHClause(XTnum, clause);

}

#endif // DEBUG

IL_OFFSET tryBegOff = clause.TryOffset;

IL_OFFSET tryEndOff = tryBegOff + clause.TryLength;

IL_OFFSET filterBegOff = 0;

IL_OFFSET hndBegOff = clause.HandlerOffset;

IL_OFFSET hndEndOff = hndBegOff + clause.HandlerLength;

if (clause.Flags & CORINFO_EH_CLAUSE_FILTER)

{

filterBegOff = clause.FilterOffset;

}

if (tryEndOff > info.compILCodeSize)

{

BADCODE3("end of try block beyond end of method for try", " at offset %04X", tryBegOff);

}

if (hndEndOff > info.compILCodeSize)

{

BADCODE3("end of hnd block beyond end of method for try", " at offset %04X", tryBegOff);

}

HBtab->ebdTryBegOffset = tryBegOff;

HBtab->ebdTryEndOffset = tryEndOff;

HBtab->ebdFilterBegOffset = filterBegOff;

HBtab->ebdHndBegOffset = hndBegOff;

HBtab->ebdHndEndOffset = hndEndOff;

/* Convert the various addresses to basic blocks */

BasicBlock* tryBegBB = fgLookupBB(tryBegOff);

BasicBlock* tryEndBB =

fgLookupBB(tryEndOff); // note: this can be NULL if the try region is at the end of the function

BasicBlock* hndBegBB = fgLookupBB(hndBegOff);

BasicBlock* hndEndBB = nullptr;

BasicBlock* filtBB = nullptr;

BasicBlock* block;

//

// Assert that the try/hnd beginning blocks are set up correctly

//

if (tryBegBB == nullptr)

{

BADCODE("Try Clause is invalid");

}

if (hndBegBB == nullptr)

{

BADCODE("Handler Clause is invalid");

}

tryBegBB->bbFlags |= BBF_HAS_LABEL;

hndBegBB->bbFlags |= BBF_HAS_LABEL | BBF_JMP_TARGET;

#if HANDLER_ENTRY_MUST_BE_IN_HOT_SECTION

// This will change the block weight from 0 to 1

// and clear the rarely run flag

hndBegBB->makeBlockHot();

#else

hndBegBB->bbSetRunRarely(); // handler entry points are rarely executed

#endif

if (hndEndOff < info.compILCodeSize)

{

hndEndBB = fgLookupBB(hndEndOff);

}

if (clause.Flags & CORINFO_EH_CLAUSE_FILTER)

{

filtBB = HBtab->ebdFilter = fgLookupBB(clause.FilterOffset);

filtBB->bbCatchTyp = BBCT_FILTER;

filtBB->bbFlags |= BBF_HAS_LABEL | BBF_JMP_TARGET;

hndBegBB->bbCatchTyp = BBCT_FILTER_HANDLER;

#if HANDLER_ENTRY_MUST_BE_IN_HOT_SECTION

// This will change the block weight from 0 to 1

// and clear the rarely run flag

filtBB->makeBlockHot();

#else

filtBB->bbSetRunRarely(); // filter entry points are rarely executed

#endif

// Mark all BBs that belong to the filter with the XTnum of the corresponding handler

for (block = filtBB; /**/; block = block->bbNext)

{

if (block == nullptr)

{

BADCODE3("Missing endfilter for filter", " at offset %04X", filtBB->bbCodeOffs);

return;

}

// Still inside the filter

block->setHndIndex(XTnum);

if (block->bbJumpKind == BBJ_EHFILTERRET)

{

// Mark catch handler as successor.

block->bbJumpDest = hndBegBB;

assert(block->bbJumpDest->bbCatchTyp == BBCT_FILTER_HANDLER);

break;

}

}

if (!block->bbNext || block->bbNext != hndBegBB)

{

BADCODE3("Filter does not immediately precede handler for filter", " at offset %04X",

filtBB->bbCodeOffs);

}

}

else

{

HBtab->ebdTyp = clause.ClassToken;

/* Set bbCatchTyp as appropriate */

if (clause.Flags & CORINFO_EH_CLAUSE_FINALLY)

{

hndBegBB->bbCatchTyp = BBCT_FINALLY;

}

else

{

if (clause.Flags & CORINFO_EH_CLAUSE_FAULT)

{

hndBegBB->bbCatchTyp = BBCT_FAULT;

}

else

{

hndBegBB->bbCatchTyp = clause.ClassToken;

// These values should be non-zero value that will

// not collide with real tokens for bbCatchTyp

if (clause.ClassToken == 0)

{

BADCODE("Exception catch type is Null");

}

noway_assert(clause.ClassToken != BBCT_FAULT);

noway_assert(clause.ClassToken != BBCT_FINALLY);

noway_assert(clause.ClassToken != BBCT_FILTER);

noway_assert(clause.ClassToken != BBCT_FILTER_HANDLER);

}

}

}

/* Mark the initial block and last blocks in the 'try' region */

tryBegBB->bbFlags |= BBF_TRY_BEG | BBF_HAS_LABEL;

/* Prevent future optimizations of removing the first block */

/* of a TRY block and the first block of an exception handler */

tryBegBB->bbFlags |= BBF_DONT_REMOVE;

hndBegBB->bbFlags |= BBF_DONT_REMOVE;

hndBegBB->bbRefs++; // The first block of a handler gets an extra, "artificial" reference count.

if (clause.Flags & CORINFO_EH_CLAUSE_FILTER)

{

filtBB->bbFlags |= BBF_DONT_REMOVE;

filtBB->bbRefs++; // The first block of a filter gets an extra, "artificial" reference count.

}

tryBegBB->bbFlags |= BBF_DONT_REMOVE;

hndBegBB->bbFlags |= BBF_DONT_REMOVE;

//

// Store the info to the table of EH block handlers

//

HBtab->ebdHandlerType = ToEHHandlerType(clause.Flags);

HBtab->ebdTryBeg = tryBegBB;

HBtab->ebdTryLast = (tryEndBB == nullptr) ? fgLastBB : tryEndBB->bbPrev;

HBtab->ebdHndBeg = hndBegBB;

HBtab->ebdHndLast = (hndEndBB == nullptr) ? fgLastBB : hndEndBB->bbPrev;

//

// Assert that all of our try/hnd blocks are setup correctly.

//

if (HBtab->ebdTryLast == nullptr)

{

BADCODE("Try Clause is invalid");

}

if (HBtab->ebdHndLast == nullptr)

{

BADCODE("Handler Clause is invalid");

}

//

// Verify that it's legal

//

verInsertEhNode(&clause, HBtab);

} // end foreach handler table entry

fgSortEHTable();

// Next, set things related to nesting that depend on the sorting being complete.

for (XTnum = 0, HBtab = compHndBBtab; XTnum < compHndBBtabCount; XTnum++, HBtab++)

{

/* Mark all blocks in the finally/fault or catch clause */

BasicBlock* tryBegBB = HBtab->ebdTryBeg;

BasicBlock* hndBegBB = HBtab->ebdHndBeg;

IL_OFFSET tryBegOff = HBtab->ebdTryBegOffset;

IL_OFFSET tryEndOff = HBtab->ebdTryEndOffset;

IL_OFFSET hndBegOff = HBtab->ebdHndBegOffset;

IL_OFFSET hndEndOff = HBtab->ebdHndEndOffset;

BasicBlock* block;

for (block = hndBegBB; block && (block->bbCodeOffs < hndEndOff); block = block->bbNext)

{

if (!block->hasHndIndex())

{

block->setHndIndex(XTnum);

}

// All blocks in a catch handler or filter are rarely run, except the entry

if ((block != hndBegBB) && (hndBegBB->bbCatchTyp != BBCT_FINALLY))

{

block->bbSetRunRarely();

}

}

/* Mark all blocks within the covered range of the try */

for (block = tryBegBB; block && (block->bbCodeOffs < tryEndOff); block = block->bbNext)

{

/* Mark this BB as belonging to a 'try' block */

if (!block->hasTryIndex())

{

block->setTryIndex(XTnum);

}

#ifdef DEBUG

/* Note: the BB can't span the 'try' block */

if (!(block->bbFlags & BBF_INTERNAL))

{

noway_assert(tryBegOff <= block->bbCodeOffs);

noway_assert(tryEndOff >= block->bbCodeOffsEnd || tryEndOff == tryBegOff);

}

#endif

}

/* Init ebdHandlerNestingLevel of current clause, and bump up value for all

* enclosed clauses (which have to be before it in the table).

* Innermost try-finally blocks must precede outermost

* try-finally blocks.

*/

#if !FEATURE_EH_FUNCLETS

HBtab->ebdHandlerNestingLevel = 0;

#endif // !FEATURE_EH_FUNCLETS

HBtab->ebdEnclosingTryIndex = EHblkDsc::NO_ENCLOSING_INDEX;

HBtab->ebdEnclosingHndIndex = EHblkDsc::NO_ENCLOSING_INDEX;

noway_assert(XTnum < compHndBBtabCount);

noway_assert(XTnum == ehGetIndex(HBtab));

for (EHblkDsc* xtab = compHndBBtab; xtab < HBtab; xtab++)

{

#if !FEATURE_EH_FUNCLETS

if (jitIsBetween(xtab->ebdHndBegOffs(), hndBegOff, hndEndOff))

{

xtab->ebdHandlerNestingLevel++;

}

#endif // !FEATURE_EH_FUNCLETS

/* If we haven't recorded an enclosing try index for xtab then see

* if this EH region should be recorded. We check if the

* first offset in the xtab lies within our region. If so,

* the last offset also must lie within the region, due to

* nesting rules. verInsertEhNode(), below, will check for proper nesting.

*/

if (xtab->ebdEnclosingTryIndex == EHblkDsc::NO_ENCLOSING_INDEX)

{

bool begBetween = jitIsBetween(xtab->ebdTryBegOffs(), tryBegOff, tryEndOff);

if (begBetween)

{

// Record the enclosing scope link

xtab->ebdEnclosingTryIndex = (unsigned short)XTnum;

}

}

/* Do the same for the enclosing handler index.

*/

if (xtab->ebdEnclosingHndIndex == EHblkDsc::NO_ENCLOSING_INDEX)

{

bool begBetween = jitIsBetween(xtab->ebdTryBegOffs(), hndBegOff, hndEndOff);

if (begBetween)

{

// Record the enclosing scope link

xtab->ebdEnclosingHndIndex = (unsigned short)XTnum;

}

}

}

} // end foreach handler table entry

#if !FEATURE_EH_FUNCLETS

EHblkDsc* HBtabEnd;

for (HBtab = compHndBBtab, HBtabEnd = compHndBBtab + compHndBBtabCount; HBtab < HBtabEnd; HBtab++)

{

if (ehMaxHndNestingCount <= HBtab->ebdHandlerNestingLevel)

ehMaxHndNestingCount = HBtab->ebdHandlerNestingLevel + 1;

}

#endif // !FEATURE_EH_FUNCLETS

#ifndef DEBUG

if (tiVerificationNeeded)

#endif

{

// always run these checks for a debug build

verCheckNestingLevel(initRoot);

}

#ifndef DEBUG

// fgNormalizeEH assumes that this test has been passed. And Ssa assumes that fgNormalizeEHTable

// has been run. So do this unless we're in minOpts mode (and always in debug).

if (tiVerificationNeeded || !opts.MinOpts())

#endif

{

fgCheckBasicBlockControlFlow();

}

#ifdef DEBUG

if (verbose)

{

JITDUMP("*************** After fgFindBasicBlocks() has created the EH table\n");

fgDispHandlerTab();

}

// We can't verify the handler table until all the IL legality checks have been done (above), since bad IL

// (such as illegal nesting of regions) will trigger asserts here.

fgVerifyHandlerTab();

#endif

fgNormalizeEH();

}

fgFindBasicBlocks首先创建了一个byte数组, 长度跟IL长度一样(也就是一个IL偏移值会对应一个byte),

然后调用fgFindJumpTargets查找跳转目标, 以这段IL为例:

IL_0000 00 nop

IL_0001 16 ldc.i4.0

IL_0002 0a stloc.0

IL_0003 2b 0d br.s 13 (IL_0012)

IL_0005 00 nop

IL_0006 06 ldloc.0

IL_0007 28 0c 00 00 0a call 0xA00000C

IL_000c 00 nop

IL_000d 00 nop

IL_000e 06 ldloc.0

IL_000f 17 ldc.i4.1

IL_0010 58 add

IL_0011 0a stloc.0

IL_0012 06 ldloc.0

IL_0013 19 ldc.i4.3

IL_0014 fe 04 clt

IL_0016 0b stloc.1

IL_0017 07 ldloc.1

IL_0018 2d eb brtrue.s -21 (IL_0005)

IL_001a 2a ret

这段IL可以找到两个跳转目标:

Jump targets:

IL_0005

IL_0012

然后fgFindBasicBlocks会根据函数的例外信息找到更多的跳转目标, 例如try的开始和catch的开始都会被视为跳转目标.

注意fgFindJumpTargets在解析IL的后会判断是否值得内联, 内联相关的处理将在下面说明.

之后调用fgMakeBasicBlocks创建BasicBlock, fgMakeBasicBlocks在遇到跳转指令或者跳转目标时会开始一个新的block.

调用fgMakeBasicBlocks后, compiler中就有了BasicBlock的链表(从fgFirstBB开始), 每个节点对应IL中的一段范围.

在创建完BasicBlock后还会根据例外信息创建一个例外信息表compHndBBtab(也称EH表), 长度是compHndBBtabCount.

表中每条记录都有try开始的block, handler(catch, finally, fault)开始的block, 和外层的try序号(如果try嵌套了).

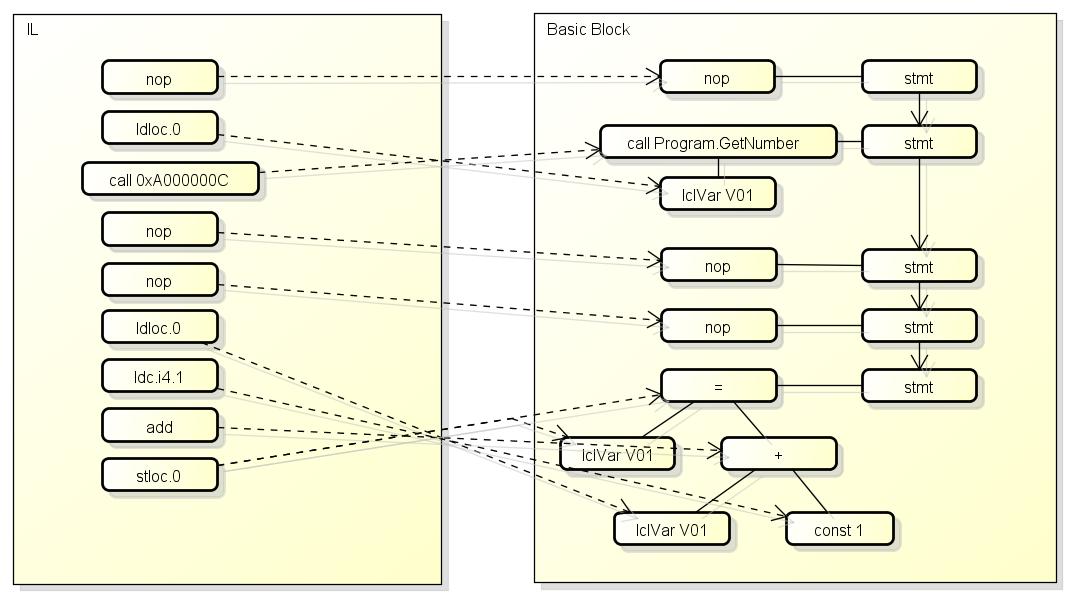

如下图所示:

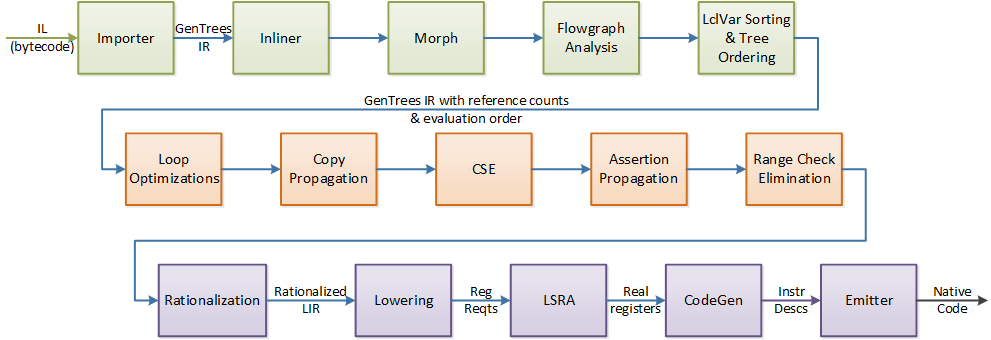

JIT主函数

compCompileHelper把BasicBlock划分好以后, 就会调用3参数版的Compiler::compCompile, 这个函数就是JIT的主函数.

Compiler::compCompile的源代码如下:

//*********************************************************************************************

// #Phases

//

// This is the most interesting 'toplevel' function in the JIT. It goes through the operations of

// importing, morphing, optimizations and code generation. This is called from the EE through the

// code:CILJit::compileMethod function.

//

// For an overview of the structure of the JIT, see:

// https://github.com/dotnet/coreclr/blob/master/Documentation/botr/ryujit-overview.md

//

void Compiler::compCompile(void** methodCodePtr, ULONG* methodCodeSize, CORJIT_FLAGS* compileFlags)

{

if (compIsForInlining())

{

// Notify root instance that an inline attempt is about to import IL

impInlineRoot()->m_inlineStrategy->NoteImport();

}

hashBv::Init(this);

VarSetOps::AssignAllowUninitRhs(this, compCurLife, VarSetOps::UninitVal());

/* The temp holding the secret stub argument is used by fgImport() when importing the intrinsic. */

if (info.compPublishStubParam)

{

assert(lvaStubArgumentVar == BAD_VAR_NUM);

lvaStubArgumentVar = lvaGrabTempWithImplicitUse(false DEBUGARG("stub argument"));

lvaTable[lvaStubArgumentVar].lvType = TYP_I_IMPL;

}

EndPhase(PHASE_PRE_IMPORT);

compFunctionTraceStart();

/* Convert the instrs in each basic block to a tree based intermediate representation */

fgImport();

assert(!fgComputePredsDone);

if (fgCheapPredsValid)

{

// Remove cheap predecessors before inlining; allowing the cheap predecessor lists to be inserted

// with inlined blocks causes problems.

fgRemovePreds();

}

if (compIsForInlining())

{

/* Quit inlining if fgImport() failed for any reason. */

if (compDonotInline())

{

return;

}

/* Filter out unimported BBs */

fgRemoveEmptyBlocks();

return;

}

assert(!compDonotInline());

EndPhase(PHASE_IMPORTATION);

// Maybe the caller was not interested in generating code

if (compIsForImportOnly())

{

compFunctionTraceEnd(nullptr, 0, false);

return;

}

#if !FEATURE_EH

// If we aren't yet supporting EH in a compiler bring-up, remove as many EH handlers as possible, so

// we can pass tests that contain try/catch EH, but don't actually throw any exceptions.

fgRemoveEH();

#endif // !FEATURE_EH

if (compileFlags->corJitFlags & CORJIT_FLG_BBINSTR)

{

fgInstrumentMethod();

}

// We could allow ESP frames. Just need to reserve space for

// pushing EBP if the method becomes an EBP-frame after an edit.

// Note that requiring a EBP Frame disallows double alignment. Thus if we change this

// we either have to disallow double alignment for E&C some other way or handle it in EETwain.

if (opts.compDbgEnC)

{

codeGen->setFramePointerRequired(true);

// Since we need a slots for security near ebp, its not possible

// to do this after an Edit without shifting all the locals.

// So we just always reserve space for these slots in case an Edit adds them

opts.compNeedSecurityCheck = true;

// We don't care about localloc right now. If we do support it,

// EECodeManager::FixContextForEnC() needs to handle it smartly

// in case the localloc was actually executed.

//

// compLocallocUsed = true;

}

EndPhase(PHASE_POST_IMPORT);

/* Initialize the BlockSet epoch */

NewBasicBlockEpoch();

/* Massage the trees so that we can generate code out of them */

fgMorph();

EndPhase(PHASE_MORPH);

/* GS security checks for unsafe buffers */

if (getNeedsGSSecurityCookie())

{

#ifdef DEBUG

if (verbose)

{

printf("\n*************** -GS checks for unsafe buffers \n");

}

#endif

gsGSChecksInitCookie();

if (compGSReorderStackLayout)

{

gsCopyShadowParams();

}

#ifdef DEBUG

if (verbose)

{

fgDispBasicBlocks(true);

printf("\n");

}

#endif

}

EndPhase(PHASE_GS_COOKIE);

/* Compute bbNum, bbRefs and bbPreds */

JITDUMP("\nRenumbering the basic blocks for fgComputePred\n");

fgRenumberBlocks();

noway_assert(!fgComputePredsDone); // This is the first time full (not cheap) preds will be computed.

fgComputePreds();

EndPhase(PHASE_COMPUTE_PREDS);

/* If we need to emit GC Poll calls, mark the blocks that need them now. This is conservative and can

* be optimized later. */

fgMarkGCPollBlocks();

EndPhase(PHASE_MARK_GC_POLL_BLOCKS);

/* From this point on the flowgraph information such as bbNum,

* bbRefs or bbPreds has to be kept updated */

// Compute the edge weights (if we have profile data)

fgComputeEdgeWeights();

EndPhase(PHASE_COMPUTE_EDGE_WEIGHTS);

#if FEATURE_EH_FUNCLETS

/* Create funclets from the EH handlers. */

fgCreateFunclets();

EndPhase(PHASE_CREATE_FUNCLETS);

#endif // FEATURE_EH_FUNCLETS

if (!opts.MinOpts() && !opts.compDbgCode)

{

optOptimizeLayout();

EndPhase(PHASE_OPTIMIZE_LAYOUT);

// Compute reachability sets and dominators.

fgComputeReachability();

}

// Transform each GT_ALLOCOBJ node into either an allocation helper call or

// local variable allocation on the stack.

ObjectAllocator objectAllocator(this);

objectAllocator.Run();

if (!opts.MinOpts() && !opts.compDbgCode)

{

/* Perform loop inversion (i.e. transform "while" loops into

"repeat" loops) and discover and classify natural loops

(e.g. mark iterative loops as such). Also marks loop blocks

and sets bbWeight to the loop nesting levels

*/

optOptimizeLoops();

EndPhase(PHASE_OPTIMIZE_LOOPS);

// Clone loops with optimization opportunities, and

// choose the one based on dynamic condition evaluation.

optCloneLoops();

EndPhase(PHASE_CLONE_LOOPS);

/* Unroll loops */

optUnrollLoops();

EndPhase(PHASE_UNROLL_LOOPS);

}

#ifdef DEBUG

fgDebugCheckLinks();

#endif

/* Create the variable table (and compute variable ref counts) */

lvaMarkLocalVars();

EndPhase(PHASE_MARK_LOCAL_VARS);

// IMPORTANT, after this point, every place where trees are modified or cloned

// the local variable reference counts must be updated

// You can test the value of the following variable to see if

// the local variable ref counts must be updated

//

assert(lvaLocalVarRefCounted == true);

if (!opts.MinOpts() && !opts.compDbgCode)

{

/* Optimize boolean conditions */

optOptimizeBools();

EndPhase(PHASE_OPTIMIZE_BOOLS);

// optOptimizeBools() might have changed the number of blocks; the dominators/reachability might be bad.

}

/* Figure out the order in which operators are to be evaluated */

fgFindOperOrder();

EndPhase(PHASE_FIND_OPER_ORDER);

// Weave the tree lists. Anyone who modifies the tree shapes after

// this point is responsible for calling fgSetStmtSeq() to keep the

// nodes properly linked.

// This can create GC poll calls, and create new BasicBlocks (without updating dominators/reachability).

fgSetBlockOrder();

EndPhase(PHASE_SET_BLOCK_ORDER);

// IMPORTANT, after this point, every place where tree topology changes must redo evaluation

// order (gtSetStmtInfo) and relink nodes (fgSetStmtSeq) if required.

CLANG_FORMAT_COMMENT_ANCHOR;

#ifdef DEBUG

// Now we have determined the order of evaluation and the gtCosts for every node.

// If verbose, dump the full set of trees here before the optimization phases mutate them