Appendix 012. Deploying Highly Available Kubernetes with Kubeadm

1 Introduction to kubeadm

1.1 Overview

1.2 kubeadm features

2 Deployment planning

2.1 Node planning

| Hostname | IP | Role | Services |
|---|---|---|---|
| k8smaster01 | 172.24.8.71 | Kubernetes master node | docker, etcd, kube-apiserver, kube-scheduler, kube-controller-manager, kubectl, kubelet, heapster, calico |
| k8smaster02 | 172.24.8.72 | Kubernetes master node | docker, etcd, kube-apiserver, kube-scheduler, kube-controller-manager, kubectl, kubelet, heapster, calico |
| k8smaster03 | 172.24.8.73 | Kubernetes master node | docker, etcd, kube-apiserver, kube-scheduler, kube-controller-manager, kubectl, kubelet, heapster, calico |
| k8snode01 | 172.24.8.74 | Kubernetes worker node 1 | docker, kubelet, kube-proxy, calico |
| k8snode02 | 172.24.8.75 | Kubernetes worker node 2 | docker, kubelet, kube-proxy, calico |
| k8snode03 | 172.24.8.76 | Kubernetes worker node 3 | docker, kubelet, kube-proxy, calico |

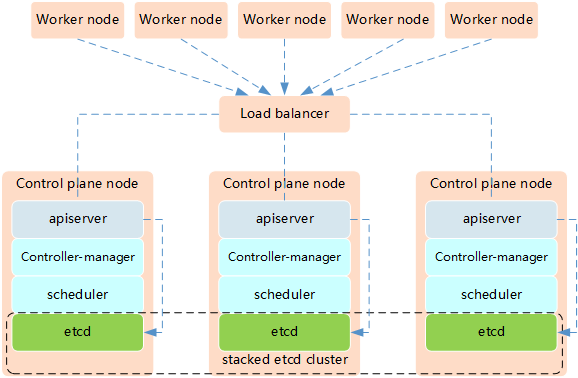

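- Stacked etcd (etcd co-located on the master nodes):
  - Requires fewer machines
  - Simple to deploy and easy to manage
  - Easy to scale horizontally
  - Higher risk: if one host fails, the cluster loses a master and an etcd member at the same time, which significantly reduces redundancy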

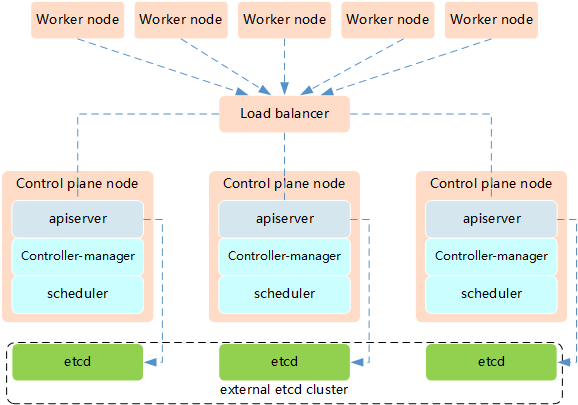

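- External etcd (etcd deployed separately):
  - Requires more machines (following etcd's odd-number rule, the control plane in this topology needs at least 6 hosts: 3 for etcd and 3 for the masters)
  - More complex to deploy: the etcd cluster and the master cluster must be managed separately
  - Decouples the control plane from etcd, so the cluster is less risky and more robust; losing a single master or etcd member has very little impact on the cluster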
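The odd-number rule follows from how etcd keeps quorum. An n-member cluster needs a strict majority, floor(n/2) + 1 members, to accept writes, so it can lose n - (floor(n/2) + 1) members. The POSIX sh helpers below are hypothetical names, not part of etcd or kubeadm, and only do that arithmetic. For example, they show that 4 members tolerate one failure, the same as 3.

```sh
#!/bin/sh
# etcd_quorum N: members that must be healthy for an N-member etcd cluster
# to accept writes (a strict majority, floor(N/2) + 1).
etcd_quorum() {
    echo $(( $1 / 2 + 1 ))
}

# etcd_fault_tolerance N: members that may fail while quorum is kept.
etcd_fault_tolerance() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

# Compare cluster sizes 1..7.
for n in 1 2 3 4 5 6 7; do
    printf '%d members: quorum %d, tolerates %d failure(s)\n' \
        "$n" "$(etcd_quorum "$n")" "$(etcd_fault_tolerance "$n")"
done
```

This is why both layouts use three etcd members: a fourth member costs a machine without adding fault tolerance.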

2.2 Initial preparation

[root@k8smaster01 ~]# vi k8sinit.sh
# Modify Author: xhy
# Modify Date: 2019-06-23 22:19
# Version:
#***************************************************************#
# Initialize the machine. This needs to be executed on every machine.

# Add host domain name.
cat >> /etc/hosts << EOF
172.24.8.71 k8smaster01
172.24.8.72 k8smaster02
172.24.8.73 k8smaster03
172.24.8.74 k8snode01
172.24.8.75 k8snode02
172.24.8.76 k8snode03
EOF

# Add docker user
useradd -m docker

# Disable SELinux (takes effect after the reboot at the end of this script).
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# Turn off and disable firewalld.
systemctl stop firewalld
systemctl disable firewalld

# Load br_netfilter first (now and at every boot) so that the
# net.bridge.* keys below exist when sysctl applies them.
modprobe br_netfilter
echo br_netfilter > /etc/modules-load.d/br_netfilter.conf

# Modify related kernel parameters & disable swap.
# Note: net.ipv4.tcp_tw_recycle was removed in kernel 4.12, so on the 5.4
# kernel installed below sysctl rejects that key (the others still apply).
cat > /etc/sysctl.d/k8s.conf << EOF
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.tcp_tw_recycle = 0
vm.swappiness = 0
vm.overcommit_memory = 1
vm.panic_on_oom = 0
net.ipv6.conf.all.disable_ipv6 = 1
EOF
sysctl -p /etc/sysctl.d/k8s.conf > /dev/null 2>&1
swapoff -a
sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# Add ipvs modules
cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
# nf_conntrack_ipv4 was merged into nf_conntrack in kernel 4.19
modprobe -- nf_conntrack_ipv4 || modprobe -- nf_conntrack
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules
bash /etc/sysconfig/modules/ipvs.modules

# Install rpm
yum install -y conntrack git ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget gcc gcc-c++ make openssl-devel

# Install Docker Compose
sudo curl -L "https://github.com/docker/compose/releases/download/1.25.0/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
sudo chmod +x /usr/local/bin/docker-compose

# Update kernel
rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
rpm -Uvh https://www.elrepo.org/elrepo-release-7.0-4.el7.elrepo.noarch.rpm
yum --disablerepo="*" --enablerepo="elrepo-kernel" install -y kernel-ml-5.4.1-1.el7.elrepo
sed -i 's/^GRUB_DEFAULT=.*/GRUB_DEFAULT=0/' /etc/default/grub
grub2-mkconfig -o /boot/grub2/grub.cfg
yum update -y

# Reboot the machine.
reboot
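The init script appends to /etc/hosts with `cat >>`, so running it twice duplicates every entry. Below is a minimal idempotent alternative, assuming a POSIX sh and awk. `add_host_entry` is a hypothetical helper: it appends an "IP name" line only when the name is not already mapped on a non-comment line.

```sh
#!/bin/sh
# add_host_entry FILE IP NAME: append "IP NAME" to FILE unless NAME is
# already mapped on a non-comment line, so re-running is harmless.
add_host_entry() {
    hosts_file=$1
    host_ip=$2
    host_name=$3
    if awk -v n="$host_name" '
        /^[ \t]*#/ { next }                        # ignore comment lines
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$hosts_file" 2>/dev/null
    then
        return 0                                   # already present
    fi
    printf '%s %s\n' "$host_ip" "$host_name" >> "$hosts_file"
}

# Usage with the hosts from the node plan:
#   while read -r ip name; do add_host_entry /etc/hosts "$ip" "$name"; done <<EOF
#   172.24.8.71 k8smaster01
#   172.24.8.72 k8smaster02
#   EOF
```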

2.3 Passwordless SSH (mutual trust) configuration

[root@k8smaster01 ~]# ssh-keygen -f ~/.ssh/id_rsa -N ''
[root@k8smaster01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@k8smaster01
[root@k8smaster01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@k8smaster02
[root@k8smaster01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@k8smaster03
[root@k8smaster01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@k8snode01
[root@k8smaster01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@k8snode02
[root@k8smaster01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@k8snode03
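The six `ssh-copy-id` commands differ only in the host name, so they can also be written as a loop. The sketch below assumes the host list from the node plan. `for_each_host` and the `DRY_RUN` switch are hypothetical names; with `DRY_RUN=1` the commands are only printed.

```sh
#!/bin/sh
# Hosts from the node plan; override by setting HOSTS before sourcing.
HOSTS=${HOSTS:-"k8smaster01 k8smaster02 k8smaster03 k8snode01 k8snode02 k8snode03"}

# for_each_host CMD [ARGS...]: run "CMD ARGS... root@HOST" for every host in
# $HOSTS, reporting failures without stopping. With DRY_RUN=1 the commands
# are only printed.
for_each_host() {
    for host in $HOSTS; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "$* root@$host"
        else
            "$@" "root@$host" || echo "failed: $host" >&2
        fi
    done
}

# Usage on k8smaster01, after ssh-keygen:
#   DRY_RUN=1 for_each_host ssh-copy-id -i ~/.ssh/id_rsa.pub   # preview
#   for_each_host ssh-copy-id -i ~/.ssh/id_rsa.pub
```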

3 Cluster deployment

3.1 Installing Docker

[root@k8smaster01 ~]# yum -y update
[root@k8smaster01 ~]# yum -y install yum-utils device-mapper-persistent-data lvm2
[root@k8smaster01 ~]# yum-config-manager \
  --add-repo \
  http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
[root@k8smaster01 ~]# yum list docker-ce --showduplicates | sort -r  # list the available versions
[root@k8smaster01 ~]# yum -y install docker-ce-18.09.9-3.el7.x86_64  # Kubernetes v1.15 is validated only with Docker versions up to 18.09
[root@k8smaster01 ~]# mkdir -p /etc/docker
[root@k8smaster01 ~]# cat > /etc/docker/daemon.json <<EOF  # use systemd as the cgroup driver
{
  "registry-mirrors": ["https://dbzucv6w.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}
EOF

[root@k8smaster01 ~]# systemctl restart docker
[root@k8smaster01 ~]# systemctl enable docker

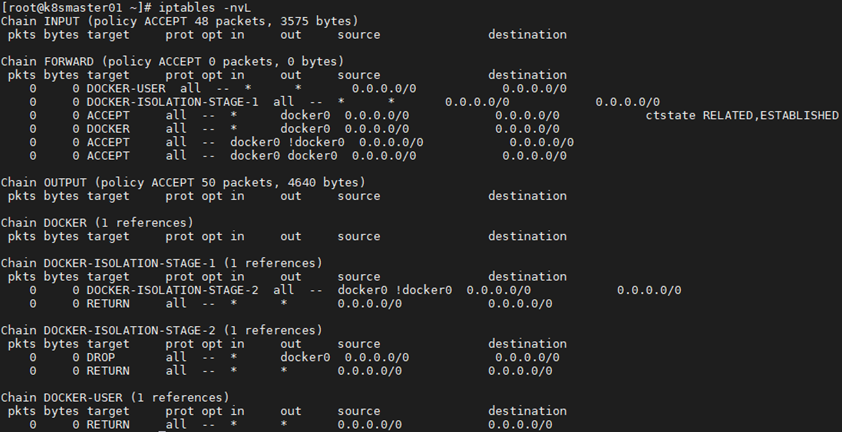

[root@k8smaster01 ~]# iptables -nvL  # confirm that the default policy of the FORWARD chain in the filter table is ACCEPT
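To check the FORWARD policy without reading the whole `iptables -nvL` listing, you can parse the chain header. This sketch assumes the usual header format, `Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)`. `forward_policy` is a hypothetical helper. Docker 1.13 and later set this policy to DROP. In that case, `iptables -P FORWARD ACCEPT` is a common way to reset it, but the change does not persist across reboots.

```sh
#!/bin/sh
# forward_policy: read "iptables -nvL" output (filter table) on stdin and
# print the default policy of the FORWARD chain, e.g. ACCEPT or DROP.
# Exits non-zero if no FORWARD chain header is found.
forward_policy() {
    awk '$1 == "Chain" && $2 == "FORWARD" { policy = $4; exit }
         END { if (policy == "") exit 1; print policy }'
}

# Usage on each node after Docker has started:
#   iptables -nvL | forward_policy
# If it prints DROP, one way to allow forwarding again (not persistent):
#   iptables -P FORWARD ACCEPT
```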

3.2 Related packages

3.3 Installation

[root@k8smaster01 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo  # configure the yum repository
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

1 [root@k8smaster01 ~]# yum search kubelet --showduplicates #查看相应版本

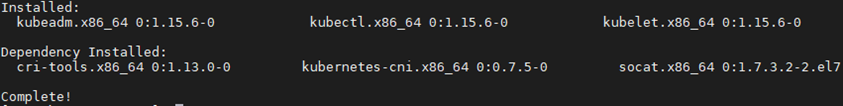

2 [root@k8smaster01 ~]# yum install -y kubeadm-1.15.6-0.x86_64 kubelet-1.15.6-0.x86_64 kubectl-1.15.6-0.x86_64 --disableexcludes=kubernetes

1 [root@k8smaster01 ~]# systemctl enable kubelet
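The install command passes `--disableexcludes=kubernetes`, but the repository file above has no `exclude=` line. The flag therefore has no effect, and any later `yum update` could upgrade kubelet, kubeadm and kubectl unexpectedly. The sketch below renders a repo file that pins them, assuming the same Aliyun mirror. `render_k8s_repo` is a hypothetical helper.

```sh
#!/bin/sh
# render_k8s_repo [BASEURL]: print a kubernetes.repo that also excludes the
# kube packages, so a plain "yum update" will not upgrade them; they are then
# installed or upgraded only when --disableexcludes=kubernetes is given.
render_k8s_repo() {
    base=${1:-https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/}
    cat <<EOF
[kubernetes]
name=Kubernetes
baseurl=$base
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl
EOF
}

# Usage:
#   render_k8s_repo > /etc/yum.repos.d/kubernetes.repo
#   yum install -y kubeadm-1.15.6-0 kubelet-1.15.6-0 kubectl-1.15.6-0 --disableexcludes=kubernetes
```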

3 Deploying high-availability components (I)

3.1 Installing Keepalived

[root@k8smaster01 ~]# wget https://www.keepalived.org/software/keepalived-2.0.19.tar.gz
[root@k8smaster01 ~]# tar -zxvf keepalived-2.0.19.tar.gz
[root@k8smaster01 ~]# cd keepalived-2.0.19/
[root@k8smaster01 keepalived-2.0.19]# ./configure --sysconf=/etc --prefix=/usr/local/keepalived
[root@k8smaster01 keepalived-2.0.19]# make && make install
[root@k8smaster01 keepalived-2.0.19]# systemctl enable keepalived && systemctl start keepalived

3.2 Creating the configuration files

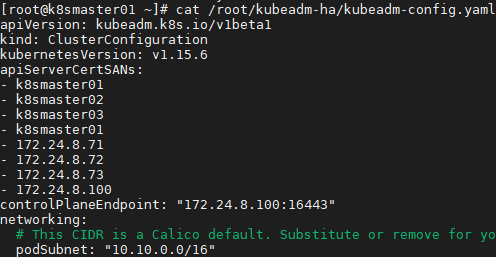

[root@k8smaster01 ~]# git clone https://github.com/cookeem/kubeadm-ha  # fetch the HA auto-configuration scripts from GitHub
[root@k8smaster01 ~]# vi /root/kubeadm-ha/kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: v1.15.6  # Kubernetes version to install
……
podSubnet: "10.10.0.0/16"  # Pod network CIDR and mask
……

[root@k8smaster01 ~]# cd kubeadm-ha/
[root@k8smaster01 kubeadm-ha]# vi create-config.sh
# master keepalived virtual ip address
export K8SHA_VIP=172.24.8.100
# master01 ip address
export K8SHA_IP1=172.24.8.71
# master02 ip address
export K8SHA_IP2=172.24.8.72
# master03 ip address
export K8SHA_IP3=172.24.8.73
# master keepalived virtual ip hostname
export K8SHA_VHOST=k8smaster01
# master01 hostname
export K8SHA_HOST1=k8smaster01
# master02 hostname
export K8SHA_HOST2=k8smaster02
# master03 hostname
export K8SHA_HOST3=k8smaster03
# master01 network interface name
export K8SHA_NETINF1=eth0
# master02 network interface name
export K8SHA_NETINF2=eth0
# master03 network interface name
export K8SHA_NETINF3=eth0
# keepalived auth_pass config
export K8SHA_KEEPALIVED_AUTH=412f7dc3bfed32194d1600c483e10ad1d
# calico reachable ip address
export K8SHA_CALICO_REACHABLE_IP=172.24.8.2
# kubernetes CIDR pod subnet
export K8SHA_CIDR=10.10.0.0

[root@k8smaster01 kubeadm-ha]# ./create-config.sh

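create-config.sh generates the following files:

- kubeadm-config.yaml: the kubeadm initialization configuration file, in the root directory (./) of the kubeadm-ha repository
- keepalived: the keepalived configuration files, in /etc/keepalived on each master node
- nginx-lb: the nginx-lb load-balancer configuration files, in /root/nginx-lb on each master node
- calico.yaml: the Calico network deployment file, in the ./calico directory of the kubeadm-ha repository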
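For reference, the core of a keepalived configuration that floats a VIP between the masters is a single VRRP instance. The hypothetical sketch below builds a minimal one from the create-config.sh variables. It is not the file create-config.sh actually writes, which also wires in check_apiserver.sh. Note that keepalived uses only the first 8 characters of `auth_pass`, and `virtual_router_id` must be identical on all three masters and unique on the local network.

```sh
#!/bin/sh
# render_keepalived_conf STATE PRIORITY: print a minimal keepalived.conf that
# floats the VIP across the masters. Hypothetical sketch only: the generated
# file from create-config.sh also runs check_apiserver.sh.
render_keepalived_conf() {
    cat <<EOF
vrrp_instance VI_1 {
    state $1
    interface ${K8SHA_NETINF1:-eth0}
    virtual_router_id 51
    priority $2
    authentication {
        auth_type PASS
        auth_pass ${K8SHA_KEEPALIVED_AUTH:-changeme}
    }
    virtual_ipaddress {
        ${K8SHA_VIP:-172.24.8.100}
    }
}
EOF
}

# Usage: MASTER on k8smaster01, BACKUP with lower priorities elsewhere.
#   render_keepalived_conf MASTER 100   # k8smaster01
#   render_keepalived_conf BACKUP 90    # k8smaster02
#   render_keepalived_conf BACKUP 80    # k8smaster03
```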

3.3 Starting Keepalived

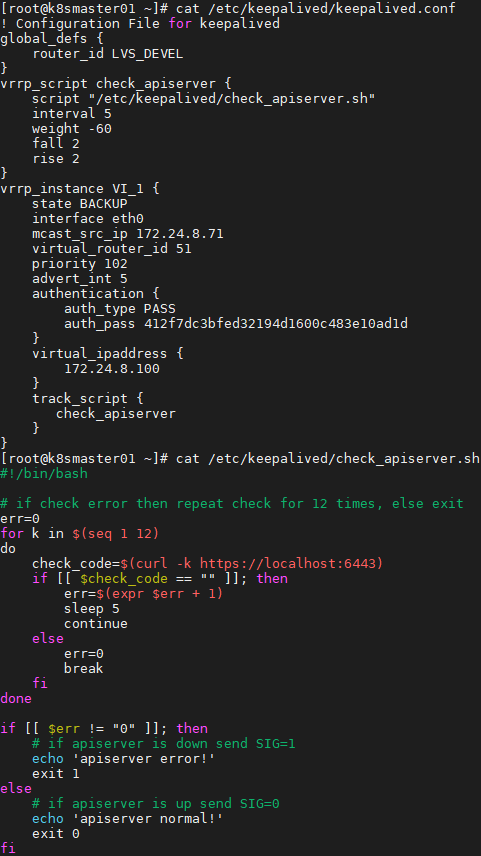

[root@k8smaster01 ~]# cat /etc/keepalived/keepalived.conf  # review the generated keepalived configuration
[root@k8smaster01 ~]# cat /etc/keepalived/check_apiserver.sh  # review the apiserver health-check script

[root@k8smaster01 ~]# systemctl restart keepalived.service
[root@k8smaster01 ~]# systemctl status keepalived.service
[root@k8smaster01 ~]# ping 172.24.8.100  # the VIP should now respond
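Pinging the VIP confirms that some master holds it, but not which one. The hypothetical helper below checks whether the local host currently owns the VIP, assuming the iproute2 output format of `ip -4 addr show`. Run it on each master: only the keepalived MASTER should match.

```sh
#!/bin/sh
# holds_vip VIP: read "ip -4 addr show" output on stdin and succeed if one
# of the listed interfaces carries exactly VIP (prefix length ignored).
holds_vip() {
    awk -v vip="$1" '$1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
                     END { exit !found }'
}

# Usage on each master; only the current keepalived MASTER should print yes:
#   if ip -4 addr show | holds_vip 172.24.8.100; then echo yes; else echo no; fi
```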

3.4 Starting Nginx

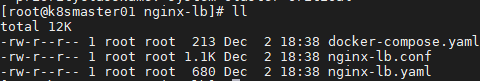

[root@k8smaster01 ~]# cd /root/nginx-lb/
[root@k8smaster01 nginx-lb]# docker-compose up -d  # start nginx-lb with docker-compose
[root@k8smaster01 nginx-lb]# docker-compose ps  # check that nginx-lb is running
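The nginx-lb.conf produced by create-config.sh is not reproduced here. As a rough idea of what such a TCP load balancer needs, the hypothetical sketch below renders an nginx `stream` configuration that listens on 16443 and spreads connections over the three kube-apiservers on 6443. It uses the K8SHA_IP* variables from create-config.sh as defaults. Presumably a port other than 6443 is used because nginx-lb runs on the masters themselves, where kube-apiserver already binds 6443. This is also why the kubeadm join commands target 172.24.8.100:16443.

```sh
#!/bin/sh
# render_nginx_lb_conf: print a minimal nginx stream (TCP) proxy config that
# listens on 16443 and balances across the kube-apiservers on 6443.
# Hypothetical sketch; the kubeadm-ha nginx-lb.conf may differ.
render_nginx_lb_conf() {
    cat <<EOF
worker_processes 1;
events {
    worker_connections 1024;
}
stream {
    upstream apiserver {
EOF
    for ip in ${K8SHA_IP1:-172.24.8.71} ${K8SHA_IP2:-172.24.8.72} ${K8SHA_IP3:-172.24.8.73}; do
        printf '        server %s:6443 max_fails=3 fail_timeout=30s;\n' "$ip"
    done
    cat <<EOF
    }
    server {
        listen 16443;
        proxy_connect_timeout 2s;
        proxy_pass apiserver;
    }
}
EOF
}
```

A rendered file can be syntax-checked with `nginx -t -c /path/to/file` before use.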

4 Initializing the cluster (masters)

4.1 Initializing the first master

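[root@k8smaster01 ~]# kubeadm --kubernetes-version=v1.15.6 config images list  # list the required images
k8s.gcr.io/kube-apiserver:v1.15.6
k8s.gcr.io/kube-controller-manager:v1.15.6
k8s.gcr.io/kube-scheduler:v1.15.6
k8s.gcr.io/kube-proxy:v1.15.6
k8s.gcr.io/pause:3.1
k8s.gcr.io/etcd:3.3.10
k8s.gcr.io/coredns:1.3.1

[root@k8smaster01 ~]# kubeadm --kubernetes-version=v1.15.6 config images pull  # pull the images Kubernetes needs

# If k8s.gcr.io is unreachable from the masters, pull the images on a host that can reach it
# (named VPN here), save them as tar files, copy the files to the master, and load them there:
[root@VPN ~]# docker pull k8s.gcr.io/kube-apiserver:v1.15.6
[root@VPN ~]# docker pull k8s.gcr.io/kube-controller-manager:v1.15.6
[root@VPN ~]# docker pull k8s.gcr.io/kube-scheduler:v1.15.6
[root@VPN ~]# docker pull k8s.gcr.io/kube-proxy:v1.15.6
[root@VPN ~]# docker pull k8s.gcr.io/pause:3.1
[root@VPN ~]# docker pull k8s.gcr.io/etcd:3.3.10
[root@VPN ~]# docker pull k8s.gcr.io/coredns:1.3.1
[root@VPN ~]# docker save -o kube-apiserver.tar k8s.gcr.io/kube-apiserver:v1.15.6
[root@VPN ~]# docker save -o kube-controller-manager.tar k8s.gcr.io/kube-controller-manager:v1.15.6
[root@VPN ~]# docker save -o kube-scheduler.tar k8s.gcr.io/kube-scheduler:v1.15.6
[root@VPN ~]# docker save -o kube-proxy.tar k8s.gcr.io/kube-proxy:v1.15.6
[root@VPN ~]# docker save -o pause.tar k8s.gcr.io/pause:3.1
[root@VPN ~]# docker save -o etcd.tar k8s.gcr.io/etcd:3.3.10
[root@VPN ~]# docker save -o coredns.tar k8s.gcr.io/coredns:1.3.1
[root@k8smaster01 ~]# docker load -i kube-apiserver.tar
[root@k8smaster01 ~]# docker load -i kube-controller-manager.tar
[root@k8smaster01 ~]# docker load -i kube-scheduler.tar
[root@k8smaster01 ~]# docker load -i kube-proxy.tar
[root@k8smaster01 ~]# docker load -i pause.tar
[root@k8smaster01 ~]# docker load -i etcd.tar
[root@k8smaster01 ~]# docker load -i coredns.tar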

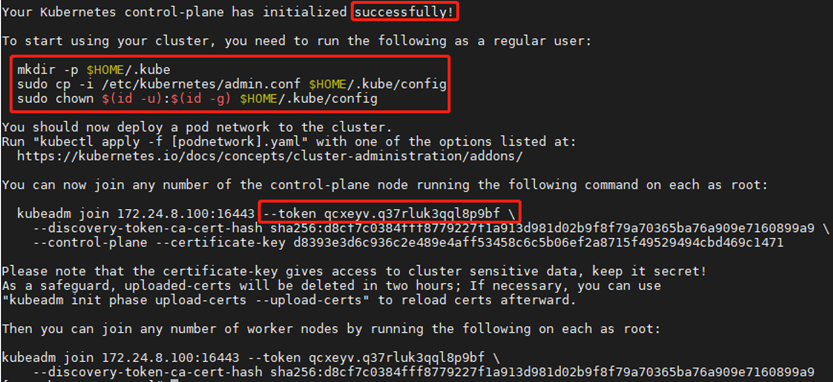

[root@k8smaster01 ~]# kubeadm init --config=/root/kubeadm-ha/kubeadm-config.yaml --upload-certs

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 172.24.8.100:16443 --token qcxeyv.q37rluk3qql8p9bf \
    --discovery-token-ca-cert-hash sha256:d8cf7c0384fff8779227f1a913d981d02b9f8f79a70365ba76a909e7160899a9 \
    --control-plane --certificate-key d8393e3d6c936c2e489e4aff53458c6c5b06ef2a8715f49529494cbd469c1471

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.24.8.100:16443 --token qcxeyv.q37rluk3qql8p9bf \
    --discovery-token-ca-cert-hash sha256:d8cf7c0384fff8779227f1a913d981d02b9f8f79a70365ba76a909e7160899a9

# If the token has expired, create a new one and recompute the CA certificate hash:
kubeadm token create
openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
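Bootstrap tokens expire after 24 hours by default, so the join command printed by `kubeadm init` eventually stops working. The hypothetical helper below assembles a fresh worker join command from a new token and CA hash, and rejects malformed values. On a master, `kubeadm token create --print-join-command` produces an equivalent command directly.

```sh
#!/bin/sh
# build_join_cmd ENDPOINT TOKEN CA_HASH: check the formats of a bootstrap
# token and a discovery CA hash, then print the worker join command.
build_join_cmd() {
    # Bootstrap tokens look like [a-z0-9]{6}.[a-z0-9]{16}.
    if ! printf '%s\n' "$2" | grep -Eqx '[a-z0-9]{6}\.[a-z0-9]{16}'; then
        echo "invalid token: $2" >&2
        return 1
    fi
    # The CA hash is a hex SHA-256 digest: 64 lowercase hex characters.
    if ! printf '%s\n' "$3" | grep -Eqx '[0-9a-f]{64}'; then
        echo "invalid CA hash: $3" >&2
        return 1
    fi
    echo "kubeadm join $1 --token $2 --discovery-token-ca-cert-hash sha256:$3"
}

# Usage on k8smaster01 with the two commands above:
#   TOKEN=$(kubeadm token create)
#   HASH=$(openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt \
#          | openssl rsa -pubin -outform der 2>/dev/null \
#          | openssl dgst -sha256 -hex | sed 's/^.* //')
#   build_join_cmd 172.24.8.100:16443 "$TOKEN" "$HASH"
```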

[root@k8smaster01 ~]# mkdir -p $HOME/.kube
[root@k8smaster01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/admin.conf
[root@k8smaster01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/admin.conf

[root@k8smaster01 ~]# cat << EOF >> ~/.bashrc  # set the KUBECONFIG environment variable
export KUBECONFIG=$HOME/.kube/admin.conf
EOF
[root@k8smaster01 ~]# source ~/.bashrc

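Main steps performed by kubeadm init:

- [kubelet-start] generates the kubelet configuration file "/var/lib/kubelet/config.yaml"
- [certificates] generates the required certificates
- [kubeconfig] generates the kubeconfig files
- [bootstraptoken] generates a token; record it, because it is needed later when adding nodes to the cluster with kubeadm join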

4.2 Adding the other master nodes

# Run the following on k8smaster02, and likewise on k8smaster03:
[root@k8smaster02 ~]# kubeadm join 172.24.8.100:16443 --token qcxeyv.q37rluk3qql8p9bf \
  --discovery-token-ca-cert-hash sha256:d8cf7c0384fff8779227f1a913d981d02b9f8f79a70365ba76a909e7160899a9 \
  --control-plane --certificate-key d8393e3d6c936c2e489e4aff53458c6c5b06ef2a8715f49529494cbd469c1471

[root@k8smaster02 ~]# mkdir -p $HOME/.kube
[root@k8smaster02 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/admin.conf
[root@k8smaster02 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/admin.conf
[root@k8smaster02 ~]# cat << EOF >> ~/.bashrc
export KUBECONFIG=$HOME/.kube/admin.conf
EOF
[root@k8smaster02 ~]# source ~/.bashrc

五 安装NIC插件

5.1 NIC插件介绍

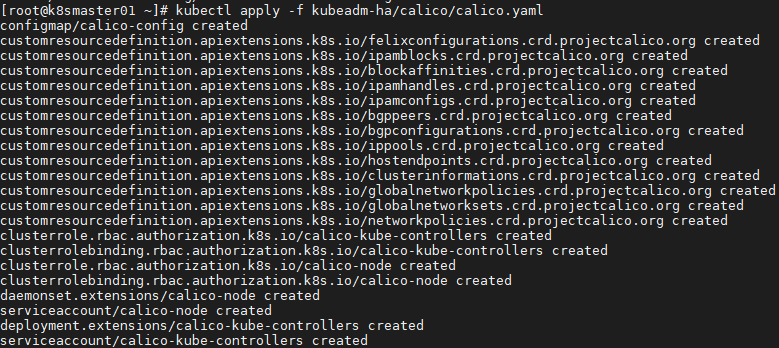

5.2 部署calico

[root@k8smaster01 ~]# docker pull calico/cni:v3.6.0
[root@k8smaster01 ~]# docker pull calico/node:v3.6.0
[root@k8smaster01 ~]# docker pull calico/kube-controllers:v3.6.0  # pulling the images in advance is recommended
[root@k8smaster01 ~]# kubectl apply -f kubeadm-ha/calico/calico.yaml

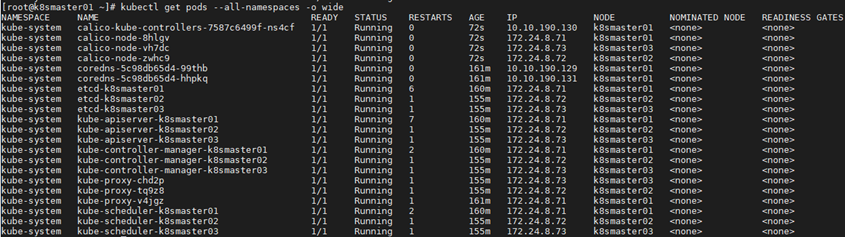

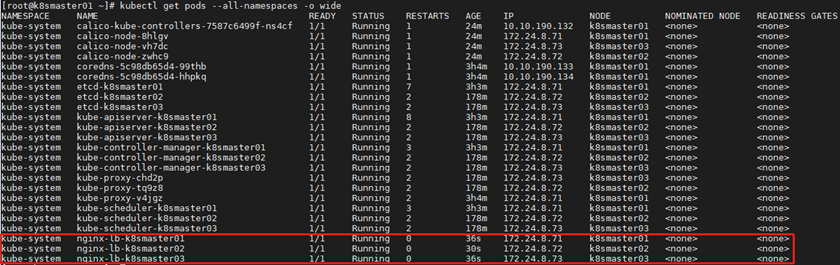

[root@k8smaster01 ~]# kubectl get pods --all-namespaces -o wide  # check the deployment

[root@k8smaster01 ~]# kubectl get nodes
NAME          STATUS   ROLES    AGE    VERSION
k8smaster01   Ready    master   174m   v1.15.6
k8smaster02   Ready    master   168m   v1.15.6
k8smaster03   Ready    master   168m   v1.15.6
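Instead of reading `kubectl get nodes` by eye, a script can check the STATUS column, for example while waiting for Calico to bring the nodes to Ready. This sketch assumes the default column layout shown above, with NAME first and STATUS second. `all_nodes_ready` is a hypothetical helper. A cordoned node shows `Ready,SchedulingDisabled` and is reported as not ready here.

```sh
#!/bin/sh
# all_nodes_ready: read "kubectl get nodes" output on stdin; succeed only if
# there is at least one node and every STATUS is exactly "Ready".
# Nodes that are not ready are listed on stdout.
all_nodes_ready() {
    awk 'NR == 1 { next }                     # skip the header line
         NF > 0 {
             total++
             if ($2 != "Ready") { print "not ready: " $1; bad++ }
         }
         END { exit (total == 0 || bad > 0) }'
}

# Usage:
#   kubectl get nodes | all_nodes_ready && echo "all nodes Ready"
```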

6 Deploying high-availability components (II)

6.1 Notes on high availability

[root@k8smaster01 ~]# systemctl stop kubelet
[root@k8smaster01 ~]# docker stop nginx-lb && docker rm nginx-lb  # remove the nginx-lb container started by docker-compose

[root@k8smaster01 ~]# export K8SHA_HOST1=k8smaster01
[root@k8smaster01 ~]# export K8SHA_HOST2=k8smaster02
[root@k8smaster01 ~]# export K8SHA_HOST3=k8smaster03
[root@k8smaster01 ~]# scp /root/nginx-lb/nginx-lb.conf root@${K8SHA_HOST1}:/etc/kubernetes/
[root@k8smaster01 ~]# scp /root/nginx-lb/nginx-lb.conf root@${K8SHA_HOST2}:/etc/kubernetes/
[root@k8smaster01 ~]# scp /root/nginx-lb/nginx-lb.conf root@${K8SHA_HOST3}:/etc/kubernetes/
[root@k8smaster01 ~]# scp /root/nginx-lb/nginx-lb.yaml root@${K8SHA_HOST1}:/etc/kubernetes/manifests/  # kubelet runs manifests in this directory as static Pods
[root@k8smaster01 ~]# scp /root/nginx-lb/nginx-lb.yaml root@${K8SHA_HOST2}:/etc/kubernetes/manifests/
[root@k8smaster01 ~]# scp /root/nginx-lb/nginx-lb.yaml root@${K8SHA_HOST3}:/etc/kubernetes/manifests/

[root@k8smaster01 ~]# systemctl restart kubelet docker  # restart these services on all master nodes

[root@k8smaster01 ~]# kubectl get pods --all-namespaces -o wide  # verify again

7 Adding worker nodes

7.1 Preparing the images

[root@k8snode01 ~]# docker load -i pause.tar

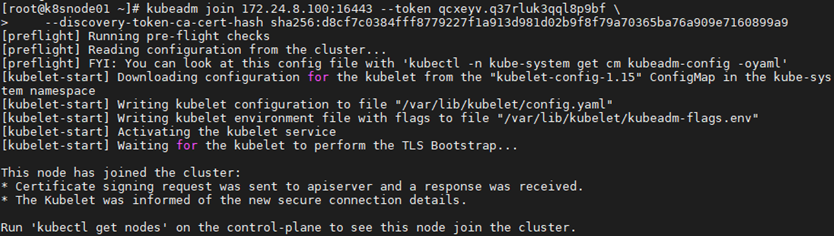

7.2 Joining the worker nodes

[root@k8snode01 ~]# systemctl enable kubelet.service
[root@k8snode01 ~]# kubeadm join 172.24.8.100:16443 --token qcxeyv.q37rluk3qql8p9bf \
  --discovery-token-ca-cert-hash sha256:d8cf7c0384fff8779227f1a913d981d02b9f8f79a70365ba76a909e7160899a9

# To reset a node (for example, before retrying a failed join); cni0 and flannel.1
# exist only if a flannel network was used on this node before:
[root@k8snode01 ~]# kubeadm reset
[root@k8snode01 ~]# ifconfig cni0 down
[root@k8snode01 ~]# ip link delete cni0
[root@k8snode01 ~]# ifconfig flannel.1 down
[root@k8snode01 ~]# ip link delete flannel.1
[root@k8snode01 ~]# rm -rf /var/lib/cni/

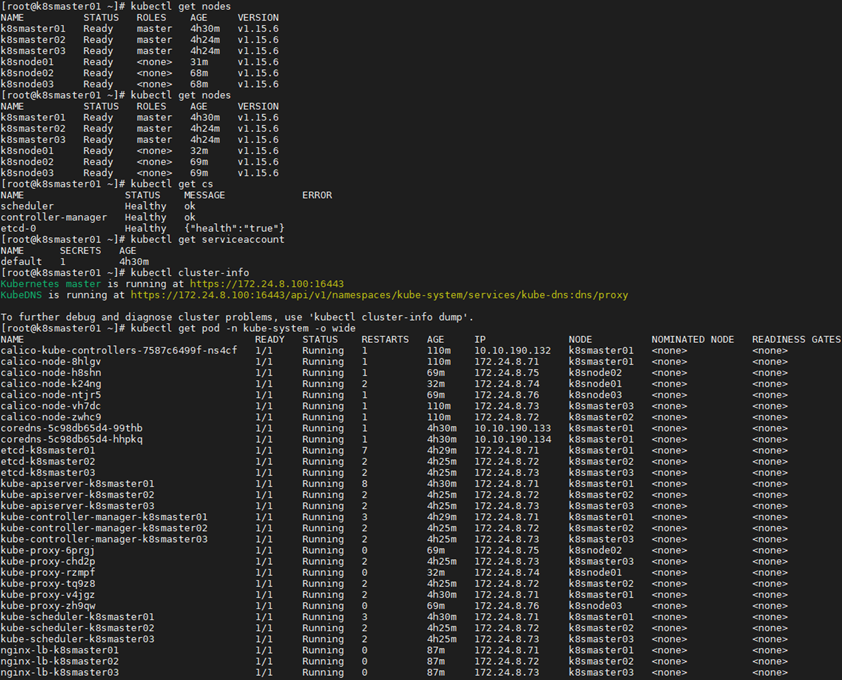

7.3 确认验证

1 [root@k8smaster01 ~]# kubectl get nodes #节点状态

2 [root@k8smaster01 ~]# kubectl get cs #组件状态

3 [root@k8smaster01 ~]# kubectl get serviceaccount #服务账户

4 [root@k8smaster01 ~]# kubectl cluster-info #集群信息

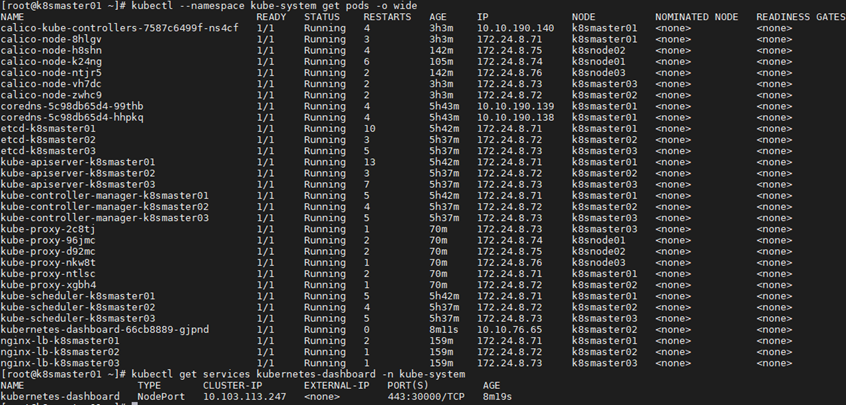

5 [root@k8smaster01 ~]# kubectl get pod -n kube-system -o wide #所有服务状态

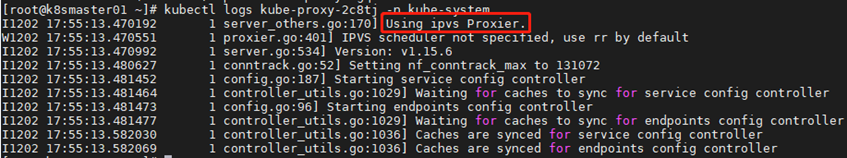

8 Enabling IPVS

8.1 Modifying the ConfigMap

[root@k8smaster01 ~]# kubectl edit cm kube-proxy -n kube-system  # change the proxy mode to ipvs
……
mode: "ipvs"
……
[root@k8smaster01 ~]# kubectl get pod -n kube-system | grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'  # delete the kube-proxy Pods so they are recreated in the new mode
[root@k8smaster01 ~]# kubectl get pod -n kube-system | grep kube-proxy  # check the kube-proxy Pods
[root@k8smaster01 ~]# kubectl logs kube-proxy-2c8tj -n kube-system  # check the log of any one kube-proxy Pod
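`kubectl edit` is interactive. A scripted variant, sketched below, pipes the ConfigMap through sed instead. `set_ipvs_mode` and `uses_ipvs` are hypothetical helpers. The Pod label `k8s-app=kube-proxy` is assumed from kubeadm's default kube-proxy DaemonSet. The log check looks for the line "Using ipvs Proxier", which kube-proxy typically prints when IPVS mode is active; the exact wording may differ between versions.

```sh
#!/bin/sh
# set_ipvs_mode: read the kube-proxy ConfigMap YAML on stdin and rewrite the
# quoted value of the "mode:" field (empty by default) to "ipvs",
# keeping its indentation and leaving every other line untouched.
set_ipvs_mode() {
    sed 's/^\([[:space:]]*mode:[[:space:]]*\)"[^"]*"/\1"ipvs"/'
}

# uses_ipvs: succeed if kube-proxy log text on stdin reports the IPVS proxier.
uses_ipvs() {
    grep -q 'Using ipvs Proxier'
}

# Possible non-interactive alternative to "kubectl edit":
#   kubectl -n kube-system get cm kube-proxy -o yaml | set_ipvs_mode | kubectl replace -f -
#   kubectl -n kube-system delete pod -l k8s-app=kube-proxy
#   kubectl -n kube-system logs <kube-proxy-pod> | uses_ipvs && echo "IPVS active"
```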

9 Testing the cluster

9.1 Creating a test Service

[root@k8smaster01 ~]# kubectl run nginx --replicas=2 --labels="run=load-balancer-example" --image=nginx --port=80  # in v1.15 this creates a Deployment (the generator is deprecated)
[root@k8smaster01 ~]# kubectl expose deployment nginx --type=NodePort --name=example-service  # expose the port
[root@k8smaster01 ~]# kubectl get service  # check the Service status
[root@k8smaster01 ~]# kubectl describe service example-service  # view the Service details

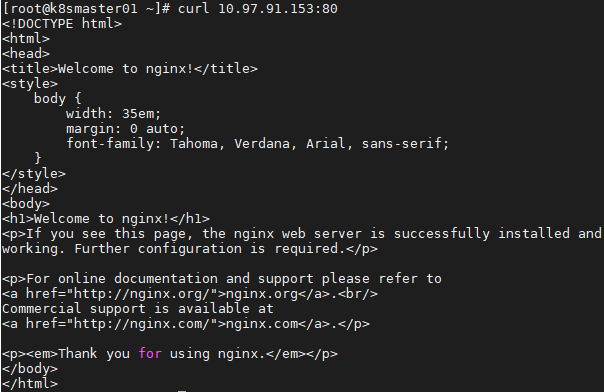

9.2 Testing access

[root@k8smaster01 ~]# curl 10.97.91.153:80  # access the Service's ClusterIP

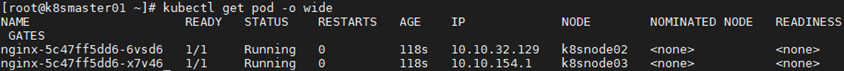

[root@k8smaster01 ~]# kubectl get pod -o wide  # look up the endpoint (Pod) IPs

[root@k8smaster01 ~]# curl 10.10.32.129:80  # access an endpoint directly; the result is the same as through the Service IP
[root@k8smaster01 ~]# curl 10.10.154.1:80
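The tests above go through the ClusterIP and Pod IPs, which are only reachable from the cluster nodes. Because example-service is of type NodePort, it can also be reached at any node's IP on the allocated NodePort. The hypothetical helper below extracts that port from `kubectl get service`, assuming the default columns (PORT(S) is the fifth, with values such as `80:31234/TCP`).

```sh
#!/bin/sh
# node_port SERVICE: read "kubectl get service" output on stdin and print the
# NodePort of SERVICE's first port; fail if it has no NodePort.
node_port() {
    awk -v svc="$1" '
        $1 == svc {
            split($5, ports, ",")      # PORT(S) may list several ports
            split(ports[1], p, "[:/]") # "80:31234/TCP" -> 80, 31234, TCP
            if (p[2] ~ /^[0-9]+$/) { print p[2]; found = 1 }
            exit
        }
        END { exit !found }'
}

# Usage: reach the test Service through a worker node's IP.
#   PORT=$(kubectl get service | node_port example-service)
#   curl "http://172.24.8.74:$PORT"
```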

10 Deploying the Dashboard

10.1 Labeling the nodes

[root@k8smaster01 ~]# kubectl label nodes k8smaster01 app=kube-system
[root@k8smaster01 ~]# kubectl label nodes k8smaster02 app=kube-system
[root@k8smaster01 ~]# kubectl label nodes k8smaster03 app=kube-system

10.2 Creating the certificate

[root@k8smaster01 ~]# cd /etc/kubernetes/pki/
[root@k8smaster01 pki]# openssl genrsa -out dashboard.key 2048  # generate a private key
[root@k8smaster01 pki]# openssl req -new -out dashboard.csr -key dashboard.key -subj "/CN=dashboard"  # create a CSR
[root@k8smaster01 pki]# openssl x509 -req -sha256 -in dashboard.csr -out dashboard.crt -signkey dashboard.key -days 365  # self-sign it for 365 days
[root@k8smaster01 pki]# openssl x509 -noout -text -in ./dashboard.crt  # inspect the certificate
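Before distributing the certificate, it is worth confirming that dashboard.crt was signed with dashboard.key. A common check compares the RSA modulus of the certificate with that of the key. The sketch below wraps this check in a hypothetical helper, assuming the openssl CLI and an RSA key like the one generated above.

```sh
#!/bin/sh
# cert_matches_key CRT KEY: succeed if the certificate's public key belongs
# to the private key, by comparing the RSA modulus of both (RSA keys only).
cert_matches_key() {
    crt_mod=$(openssl x509 -noout -modulus -in "$1") || return 1
    key_mod=$(openssl rsa -noout -modulus -in "$2" 2>/dev/null) || return 1
    [ "$crt_mod" = "$key_mod" ]
}

# Usage in /etc/kubernetes/pki:
#   cert_matches_key dashboard.crt dashboard.key && echo "certificate and key match"
```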

10.3 Distributing the certificate

[root@k8smaster01 pki]# scp dashboard.* root@k8smaster02:/etc/kubernetes/pki/
[root@k8smaster01 pki]# scp dashboard.* root@k8smaster03:/etc/kubernetes/pki/

10.4 Creating the Secret manually

[root@k8smaster01 ~]# ll /etc/kubernetes/pki/dashboard.*
-rw-r--r-- 1 root root 1.2K Dec 3 03:10 /etc/kubernetes/pki/dashboard.crt
-rw-r--r-- 1 root root  976 Dec 3 03:10 /etc/kubernetes/pki/dashboard.csr
-rw-r--r-- 1 root root 1.7K Dec 3 03:09 /etc/kubernetes/pki/dashboard.key
[root@k8smaster01 ~]# kubectl create secret generic kubernetes-dashboard-certs --from-file="/etc/kubernetes/pki/dashboard.crt,/etc/kubernetes/pki/dashboard.key" -n kube-system  # store the new certificate in the Secret the Dashboard mounts
[root@k8smaster01 ~]# kubectl get secret kubernetes-dashboard-certs -n kube-system -o yaml  # view the new certificate

10.5 Deploying the Dashboard

[root@k8smaster01 ~]# vim /root/kubeadm-ha/addons/kubernetes-dashboard/kubernetes-dashboard.yaml  # delete the following section, because the Secret was created manually above
# ------------------- Dashboard Secret ------------------- #

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

[root@k8smaster01 ~]# kubectl apply -f /root/kubeadm-ha/addons/kubernetes-dashboard/kubernetes-dashboard.yaml
[root@k8smaster01 ~]# kubectl get deployment kubernetes-dashboard -n kube-system
NAME                   READY   UP-TO-DATE   AVAILABLE   AGE
kubernetes-dashboard   1/1     1            1           10s
[root@k8smaster01 ~]# kubectl get pods -o wide --namespace kube-system
[root@k8smaster01 ~]# kubectl get services kubernetes-dashboard -n kube-system

10.6 Viewing the Dashboard parameters

[root@k8smaster01 ~]# kubectl exec --namespace kube-system -it kubernetes-dashboard-66cb8889-gjpnd -- /dashboard --help

11 Accessing the Dashboard

11.1 Importing the certificate

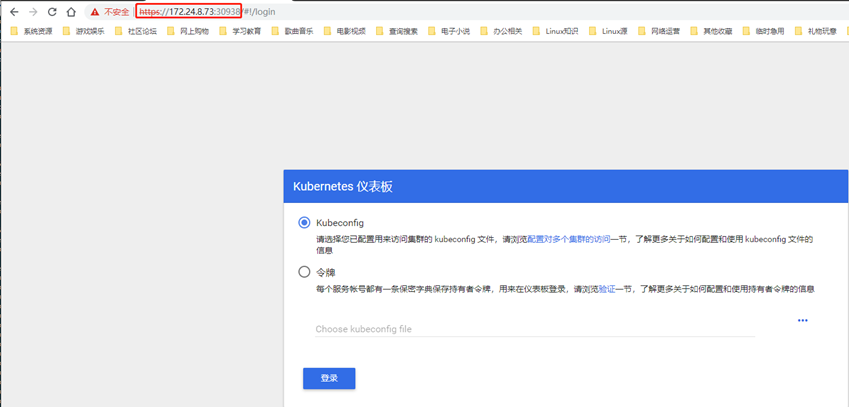

11.2 Creating a kubeconfig file

[root@k8smaster01 ~]# ADMIN_SECRET=$(kubectl get secrets -n kube-system | grep admin-user-token- | awk '{print $1}')
[root@k8smaster01 ~]# DASHBOARD_LOGIN_TOKEN=$(kubectl describe secret -n kube-system ${ADMIN_SECRET} | grep -E '^token' | awk '{print $2}')
[root@k8smaster01 ~]# kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.crt \
  --embed-certs=true \
  --server=https://172.24.8.100:16443 \
  --kubeconfig=dashboard_admin.kubeconfig  # set the cluster parameters
[root@k8smaster01 ~]# kubectl config set-credentials dashboard_user \
  --token=${DASHBOARD_LOGIN_TOKEN} \
  --kubeconfig=dashboard_admin.kubeconfig  # set the client credentials, using the token obtained above
[root@k8smaster01 ~]# kubectl config set-context default \
  --cluster=kubernetes \
  --user=dashboard_user \
  --kubeconfig=dashboard_admin.kubeconfig  # set the context parameters
[root@k8smaster01 ~]# kubectl config use-context default --kubeconfig=dashboard_admin.kubeconfig  # set the default context

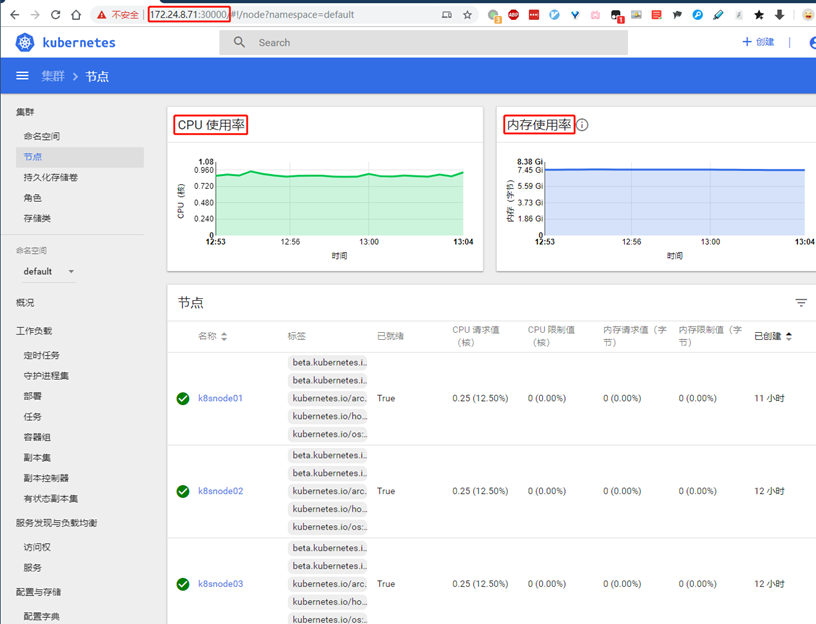

11.3 Testing access to the Dashboard

12 Deploying Heapster

12.1 Preparing the images

[root@VPN ~]# docker pull k8s.gcr.io/heapster-influxdb-amd64:v1.5.2
[root@VPN ~]# docker run -ti --rm --entrypoint "/bin/sh" k8s.gcr.io/heapster-influxdb-amd64:v1.5.2
/ # sed -i "s/localhost/127.0.0.1/g" /etc/config.toml  # edit the config inside the container
[root@VPN ~]# docker ps  # in a second terminal
CONTAINER ID   IMAGE                                       COMMAND     CREATED         STATUS         PORTS   NAMES
30b29cf20782   k8s.gcr.io/heapster-influxdb-amd64:v1.5.2   "/bin/sh"   5 minutes ago   Up 5 minutes           elastic_brahmagupta
[root@VPN ~]# docker commit elastic_brahmagupta k8s.gcr.io/heapster-influxdb-amd64:v1.5.2-fixed  # commit the modified container as a new image
[root@VPN ~]# docker save -o heapster-influxdb-fixed.tar k8s.gcr.io/heapster-influxdb-amd64:v1.5.2-fixed

[root@VPN ~]# docker pull k8s.gcr.io/heapster-amd64:v1.5.4
[root@VPN ~]# docker save -o heapster.tar k8s.gcr.io/heapster-amd64:v1.5.4

[root@k8smaster01 ~]# docker load -i heapster.tar
[root@k8smaster01 ~]# docker load -i heapster-influxdb-fixed.tar

12.2 Deploying Heapster

[root@k8smaster01 ~]# kubectl apply -f /root/kubeadm-ha/addons/heapster/

12.3 Verification

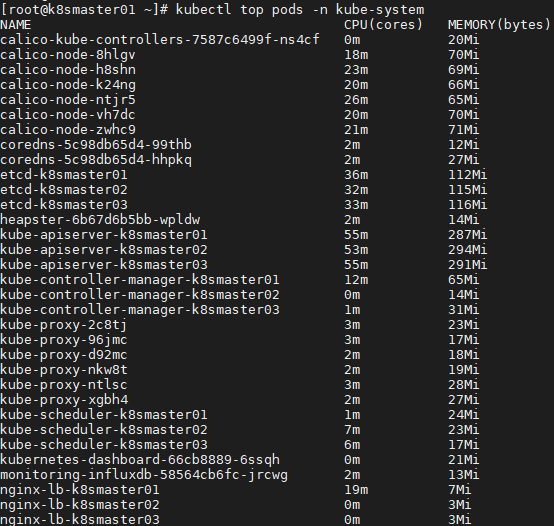

[root@k8smaster01 ~]# kubectl top pods -n kube-system

Reference: https://www.kubernetes.org.cn/4956.html
