Unity Shaderlab: Object Outlines (repost)

Reposted from https://willweissman.wordpress.com/tutorials/shaders/unity-shaderlab-object-outlines/

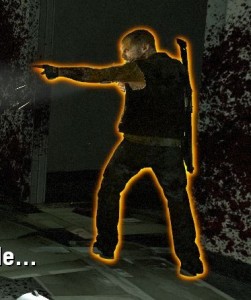

One of the simplest and most useful effects that isn’t already present in Unity is object outlines.

Screenshot from Left 4 Dead. Image Source: http://gamedev.stackexchange.com/questions/16391/how-can-i-reduce-aliasing-in-my-outline-glow-effect

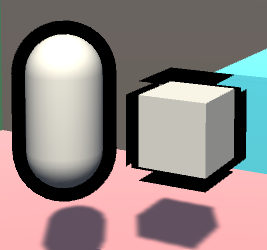

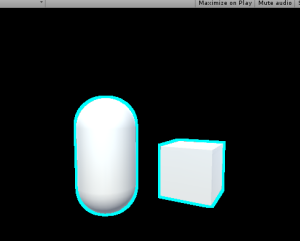

There are a couple of ways to do this, and the Unity Wiki covers one of them. However, the example demonstrated in the Wiki cannot make a “blurred” outline, and it requires smoothed normals for all vertices. If one of your outlined objects has a hard edge, you will get the following result:

The WRONG way to do outlines

While the capsule on the left looks fine, the cube on the right has artifacts. And to the beginners: the solution is NOT to apply smoothing groups or smooth normals out, or else it will mess with the lighting of the object. Instead, we need to do this as a simple post-processing effect. Here are the basic steps:

- Render the scene to a texture (render target)

- Render only the selected objects to another texture, in this case the capsule and box

- Draw a rectangle across the entire screen and put the texture on it with a custom shader

- The pixel/fragment shader for that rectangle will take samples from the previous texture, and add color to pixels which are near the object on that texture

- Blur the samples

Step 1: Render the scene to a texture

First things first, let’s make our C# script and attach it to the camera gameobject:

```csharp
using UnityEngine;
using System.Collections;

public class PostEffect : MonoBehaviour
{
    Camera AttachedCamera;
    public Shader Post_Outline;

    void Start ()
    {
        AttachedCamera = GetComponent<Camera>();
    }

    void OnRenderImage(RenderTexture source, RenderTexture destination)
    {

    }
}
```

OnRenderImage() works as follows: after the scene is rendered, every component attached to the camera that is drawing receives this message. It is passed a rendertexture containing the scene, along with a second rendertexture to write the output to. Nothing is drawn to the screen until something is written to that destination. So, that’s step 1 complete.

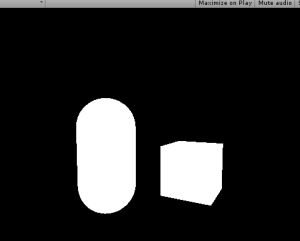

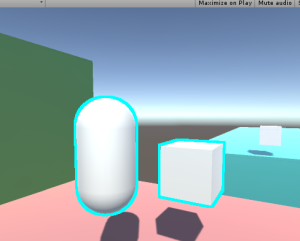

Here’s the scene by itself

Step 2: Render only the selected objects to another texture

There are again many ways to select certain objects to render, but I believe this is the cleanest way. We are going to create a shader that ignores lighting or depth testing, and just draws the object as pure white. Then we re-draw the outlined objects, but with this shader.

```shaderlab
//This shader goes on the objects themselves. It just draws the object as white.
Shader "Custom/DrawSimple"
{
    SubShader
    {
        ZWrite Off
        ZTest Always
        Lighting Off
        Pass
        {
            CGPROGRAM
            #pragma vertex VShader
            #pragma fragment FShader

            struct VertexToFragment
            {
                float4 pos:SV_POSITION;
            };

            //just get the position correct
            VertexToFragment VShader(VertexToFragment i)
            {
                VertexToFragment o;
                o.pos=mul(UNITY_MATRIX_MVP,i.pos);
                return o;
            }

            //return white
            half4 FShader():COLOR0
            {
                return half4(1,1,1,1);
            }

            ENDCG
        }
    }
}
```

Now, whenever the object is drawn with this shader, it will be white. We can make the object get drawn by using Unity’s Camera.RenderWithShader() function. So, our new camera code needs to render the objects that reside on a special layer, rendering them with this shader, to a texture. Because we can’t use the same camera to render twice in one frame, we need to make a new camera. We also need to handle our new RenderTexture, and work with binary briefly.

Our new C# code is as follows:

```csharp
using UnityEngine;
using System.Collections;

public class PostEffect : MonoBehaviour
{
    Camera AttachedCamera;
    public Shader Post_Outline;
    public Shader DrawSimple;
    Camera TempCam;
    // public RenderTexture TempRT;

    void Start ()
    {
        AttachedCamera = GetComponent<Camera>();
        TempCam = new GameObject().AddComponent<Camera>();
        TempCam.enabled = false;
    }

    void OnRenderImage(RenderTexture source, RenderTexture destination)
    {
        //set up a temporary camera
        TempCam.CopyFrom(AttachedCamera);
        TempCam.clearFlags = CameraClearFlags.Color;
        TempCam.backgroundColor = Color.black;

        //cull any layer that isn't the outline
        TempCam.cullingMask = 1 << LayerMask.NameToLayer("Outline");

        //make the temporary rendertexture
        RenderTexture TempRT = new RenderTexture(source.width, source.height, 0, RenderTextureFormat.R8);

        //put it to video memory
        TempRT.Create();

        //set the camera's target texture when rendering
        TempCam.targetTexture = TempRT;

        //render all objects this camera can render, but with our custom shader.
        TempCam.RenderWithShader(DrawSimple,"");

        //copy the temporary RT to the final image
        Graphics.Blit(TempRT, destination);

        //release the temporary RT
        TempRT.Release();
    }
}
```

Bitmasks

The line:

```csharp
TempCam.cullingMask = 1 << LayerMask.NameToLayer("Outline");
```

means that we are shifting the value 1 (binary: 00000000000000000000000000000001) a number of bits to the left, in this case as many bits as our layer’s index. Layers are numbered from 0: binary “01” selects layer 0, “010” selects layer 1, “0100” selects layer 2, and so on, up to a total of 32 layers (because an int has 32 bits). Unity uses these bits to mask what it draws; in other words, this is a bitmask.

So, if our “Outline” layer is layer 8, to draw it we need bit 8 set. We take a bit that we know to be at position 0 and shift it over to position 8. LayerMask.NameToLayer() returns the index of the layer (8), and the bit shift operator shifts the bit that many places (8), giving 256.

To the beginners: No, you cannot just set the layer mask to “8”. 8 in decimal is 1000 in binary, which as a bitmask has only bit 3 set, and would result in layer 3 being drawn.
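The bit arithmetic described above can be checked outside Unity. The following is a minimal plain C# sketch, not Unity code: `LayerMaskDemo`, `MaskForLayer` and `Contains` are hypothetical helper names, and the layer index 8 is simply the example value used in the text.

```csharp
using System;

public static class LayerMaskDemo
{
    // Builds a mask with only the bit for the given layer index set.
    public static int MaskForLayer(int layerIndex)
    {
        return 1 << layerIndex;
    }

    // True when the bit for the given layer index is set in the mask.
    public static bool Contains(int mask, int layerIndex)
    {
        return (mask & (1 << layerIndex)) != 0;
    }

    public static void Main()
    {
        int outlineLayer = 8; // assumed index of the "Outline" layer
        int mask = MaskForLayer(outlineLayer);

        Console.WriteLine(mask);                      // 256
        Console.WriteLine(Convert.ToString(mask, 2)); // 100000000
        Console.WriteLine(Contains(mask, 8));         // True
        Console.WriteLine(Contains(8, 8));            // False: the literal 8 is binary 1000
        Console.WriteLine(Contains(8, 3));            // True: the literal 8 selects layer 3

        // Masks for several layers are combined with bitwise OR.
        int both = MaskForLayer(8) | MaskForLayer(0);
        Console.WriteLine(both);                      // 257
    }
}
```

Testing a layer against a mask is a single bitwise AND, which is why one integer can describe any combination of the 32 layers.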

Q: Why do we even do bitmasks?

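A: For performance reasons. Checking whether a layer is in a mask is a single bitwise AND, and one 32-bit integer can select any combination of the 32 layers.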

Moving along…

Make sure that at render time, the objects you need outlined are on the outline layer. You could do this by changing the object’s layer in LateUpdate(), and setting it back in OnRenderObject(). But out of laziness, I’m just setting them to the outline layer in the editor.

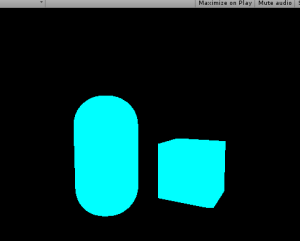

Objects to be outlined are rendered to a texture

The above screenshot shows what the scene should look like with our code. So that’s step 2; we rendered those objects to a texture.

Step 3: Draw a rectangle to the screen and put the texture on it with a custom shader.

Except that’s already what’s going on in the code: Graphics.Blit() copies a texture over to a rendertexture. It draws a full-screen quad (vertex coordinates 0,0, 0,1, 1,1, 1,0) and puts the texture on it.

And, you can pass in a custom shader for when it draws this.

So, let’s make a new shader:

```shaderlab
Shader "Custom/Post Outline"
{
    Properties
    {
        //Graphics.Blit() sets the "_MainTex" property to the texture passed in
        _MainTex("Main Texture",2D)="black"{}
    }
    SubShader
    {
        Pass
        {
            CGPROGRAM

            sampler2D _MainTex;
            #pragma vertex vert
            #pragma fragment frag
            #include "UnityCG.cginc"

            struct v2f
            {
                float4 pos : SV_POSITION;
                float2 uvs : TEXCOORD0;
            };

            v2f vert (appdata_base v)
            {
                v2f o;

                //Despite the fact that we are only drawing a quad to the screen, Unity requires us to multiply vertices by our MVP matrix, presumably to keep things working when inexperienced people try copying code from other shaders.
                o.pos = mul(UNITY_MATRIX_MVP,v.vertex);

                //Also, we need to fix the UVs to match our screen space coordinates. There is a Unity define for this that should normally be used.
                o.uvs = o.pos.xy / 2 + 0.5;

                return o;
            }

            half4 frag(v2f i) : COLOR
            {
                //return the texture we just looked up
                return tex2D(_MainTex,i.uvs.xy);
            }

            ENDCG

        } //end pass
    } //end subshader
}//end shader
```
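The UV fix-up in the vertex shader (`o.uvs = o.pos.xy / 2 + 0.5`) is a remap from one range to another. As a rough illustration in plain C#, assuming the projected quad spans -1 to 1 on each axis (that range is an assumption of this sketch, and `ClipToUv` is a hypothetical name):

```csharp
using System;

public static class ClipToUv
{
    // Mirrors `o.pos.xy / 2 + 0.5` for a single coordinate.
    public static float ToUv(float clip)
    {
        return clip / 2f + 0.5f;
    }

    public static void Main()
    {
        // Left/bottom edge of the quad maps to 0, the center to 0.5, the right/top edge to 1.
        Console.WriteLine(ToUv(-1f));
        Console.WriteLine(ToUv(0f));
        Console.WriteLine(ToUv(1f));
    }
}
```

So every fragment of the full-screen quad ends up with a UV between 0 and 1 that matches its position on screen.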

If we put this shader onto a new material, and pass that material into Graphics.Blit(), we can now re-draw our rendered texture with our custom shader.

```csharp
using UnityEngine;
using System.Collections;

public class PostEffect : MonoBehaviour
{
    Camera AttachedCamera;
    public Shader Post_Outline;
    public Shader DrawSimple;
    Camera TempCam;
    Material Post_Mat;
    // public RenderTexture TempRT;

    void Start ()
    {
        AttachedCamera = GetComponent<Camera>();
        TempCam = new GameObject().AddComponent<Camera>();
        TempCam.enabled = false;
        Post_Mat = new Material(Post_Outline);
    }

    void OnRenderImage(RenderTexture source, RenderTexture destination)
    {
        //set up a temporary camera
        TempCam.CopyFrom(AttachedCamera);
        TempCam.clearFlags = CameraClearFlags.Color;
        TempCam.backgroundColor = Color.black;

        //cull any layer that isn't the outline
        TempCam.cullingMask = 1 << LayerMask.NameToLayer("Outline");

        //make the temporary rendertexture
        RenderTexture TempRT = new RenderTexture(source.width, source.height, 0, RenderTextureFormat.R8);

        //put it to video memory
        TempRT.Create();

        //set the camera's target texture when rendering
        TempCam.targetTexture = TempRT;

        //render all objects this camera can render, but with our custom shader.
        TempCam.RenderWithShader(DrawSimple,"");

        //copy the temporary RT to the final image
        Graphics.Blit(TempRT, destination,Post_Mat);

        //release the temporary RT
        TempRT.Release();
    }
}
```

Which should lead to the results above, but the process is now using our own shader.

Step 4: add color to pixels which are near white pixels on the texture.

For this, we need to get the relevant texture coordinate of the pixel we are rendering, and look up all adjacent pixels for existing objects. If an object exists near our pixel, then we should draw a color at our pixel, as our pixel is within the outlined radius.

```shaderlab
Shader "Custom/Post Outline"
{
    Properties
    {
        _MainTex("Main Texture",2D)="black"{}
    }
    SubShader
    {
        Pass
        {
            CGPROGRAM

            sampler2D _MainTex;

            //<SamplerName>_TexelSize is a float4; x and y hold the size of one texel in UV space (1/width, 1/height).
            float4 _MainTex_TexelSize;

            #pragma vertex vert
            #pragma fragment frag
            #include "UnityCG.cginc"

            struct v2f
            {
                float4 pos : SV_POSITION;
                float2 uvs : TEXCOORD0;
            };

            v2f vert (appdata_base v)
            {
                v2f o;

                //Despite the fact that we are only drawing a quad to the screen, Unity requires us to multiply vertices by our MVP matrix, presumably to keep things working when inexperienced people try copying code from other shaders.
                o.pos = mul(UNITY_MATRIX_MVP,v.vertex);

                //Also, we need to fix the UVs to match our screen space coordinates. There is a Unity define for this that should normally be used.
                o.uvs = o.pos.xy / 2 + 0.5;

                return o;
            }

            half4 frag(v2f i) : COLOR
            {
                //arbitrary number of iterations for now
                int NumberOfIterations=9;

                //split texel size into smaller words
                float TX_x=_MainTex_TexelSize.x;
                float TX_y=_MainTex_TexelSize.y;

                //and a final intensity that increments based on surrounding intensities (must start at 0).
                float ColorIntensityInRadius=0;

                //for every iteration we need to do horizontally
                for(int k=0;k<NumberOfIterations;k+=1)
                {
                    //for every iteration we need to do vertically
                    for(int j=0;j<NumberOfIterations;j+=1)
                    {
                        //increase our output color by the pixels in the area
                        ColorIntensityInRadius+=tex2D(
                            _MainTex,
                            i.uvs.xy+float2
                            (
                                (k-NumberOfIterations/2)*TX_x,
                                (j-NumberOfIterations/2)*TX_y
                            )
                        ).r;
                    }
                }

                //output some intensity of teal
                return ColorIntensityInRadius*half4(0,1,1,1);
            }

            ENDCG

        } //end pass
    } //end subshader
}//end shader
```
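To see what the nested loops compute, the same neighborhood sum can be run on the CPU over a small grid. This is only a sketch in plain C#: `OutlineCpuSketch` and `NeighborSum` are hypothetical names, integer grid steps stand in for the texel-size offsets, and treating out-of-range samples as empty is an assumption (the GPU result depends on the texture's wrap mode).

```csharp
using System;

public static class OutlineCpuSketch
{
    // Sums the mask values in a size x size window centered on (x, y).
    // Samples outside the grid count as 0 (assumption of this sketch).
    public static int NeighborSum(int[,] mask, int x, int y, int size)
    {
        int height = mask.GetLength(0);
        int width = mask.GetLength(1);
        int sum = 0;
        for (int k = 0; k < size; k++)
        {
            for (int j = 0; j < size; j++)
            {
                int sx = x + (k - size / 2);
                int sy = y + (j - size / 2);
                if (sx >= 0 && sx < width && sy >= 0 && sy < height)
                {
                    sum += mask[sy, sx];
                }
            }
        }
        return sum;
    }

    public static void Main()
    {
        // 1 marks a texel covered by an outlined object.
        int[,] mask =
        {
            { 0, 0, 0, 0, 0 },
            { 0, 0, 0, 0, 0 },
            { 0, 0, 1, 0, 0 },
            { 0, 0, 0, 0, 0 },
            { 0, 0, 0, 0, 0 },
        };

        Console.WriteLine(NeighborSum(mask, 1, 1, 3)); // 1: the object is inside the 3x3 window
        Console.WriteLine(NeighborSum(mask, 0, 0, 3)); // 0: too far away, no outline here
        Console.WriteLine(NeighborSum(mask, 2, 2, 3)); // 1: the object's own texel
    }
}
```

A non-zero sum means an object lies within the window, so that texel receives outline color. The third case is the object's own texel, which is why the next step discards fragments that sit on top of the object.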

And then, if an object exists under the pixel, discard the pixel:

```shaderlab
//if something already exists underneath the fragment, discard the fragment.
if(tex2D(_MainTex,i.uvs.xy).r>0)
{
    discard;
}
```

And finally, add a blend mode to the shader:

```shaderlab
Blend SrcAlpha OneMinusSrcAlpha
```

And the resulting shader:

```shaderlab
Shader "Custom/Post Outline"
{
    Properties
    {
        _MainTex("Main Texture",2D)="white"{}
    }
    SubShader
    {
        Blend SrcAlpha OneMinusSrcAlpha
        Pass
        {
            CGPROGRAM

            sampler2D _MainTex;

            //<SamplerName>_TexelSize is a float4; x and y hold the size of one texel in UV space (1/width, 1/height).
            float4 _MainTex_TexelSize;

            #pragma vertex vert
            #pragma fragment frag
            #include "UnityCG.cginc"

            struct v2f
            {
                float4 pos : SV_POSITION;
                float2 uvs : TEXCOORD0;
            };

            v2f vert (appdata_base v)
            {
                v2f o;

                //Despite the fact that we are only drawing a quad to the screen, Unity requires us to multiply vertices by our MVP matrix, presumably to keep things working when inexperienced people try copying code from other shaders.
                o.pos = mul(UNITY_MATRIX_MVP,v.vertex);

                //Also, we need to fix the UVs to match our screen space coordinates. There is a Unity define for this that should normally be used.
                o.uvs = o.pos.xy / 2 + 0.5;

                return o;
            }

            half4 frag(v2f i) : COLOR
            {
                //arbitrary number of iterations for now
                int NumberOfIterations=9;

                //split texel size into smaller words
                float TX_x=_MainTex_TexelSize.x;
                float TX_y=_MainTex_TexelSize.y;

                //and a final intensity that increments based on surrounding intensities (must start at 0).
                float ColorIntensityInRadius=0;

                //if something already exists underneath the fragment, discard the fragment.
                if(tex2D(_MainTex,i.uvs.xy).r>0)
                {
                    discard;
                }

                //for every iteration we need to do horizontally
                for(int k=0;k<NumberOfIterations;k+=1)
                {
                    //for every iteration we need to do vertically
                    for(int j=0;j<NumberOfIterations;j+=1)
                    {
                        //increase our output color by the pixels in the area
                        ColorIntensityInRadius+=tex2D(
                            _MainTex,
                            i.uvs.xy+float2
                            (
                                (k-NumberOfIterations/2)*TX_x,
                                (j-NumberOfIterations/2)*TX_y
                            )
                        ).r;
                    }
                }

                //output some intensity of teal
                return ColorIntensityInRadius*half4(0,1,1,1);
            }

            ENDCG

        } //end pass
    } //end subshader
}//end shader
```

Step 5: Blur the samples

Now, at this point, we don’t have any form of blur or gradient. There also exists the problem of performance: If we want an outline that is 3 pixels thick, there are 3×3, or 9, texture lookups per pixel. If we want to increase the outline radius to 20 pixels, that is 20×20, or 400 texture lookups per pixel!

We can solve both of these problems with our upcoming method of blurring, which is very similar to how most gaussian blurs are performed. It is important to note that we are not doing a gaussian blur in this tutorial, as the method of weight calculation is different. I recommend that if you are experienced with shaders, you should do a gaussian blur here, but color it one single color.

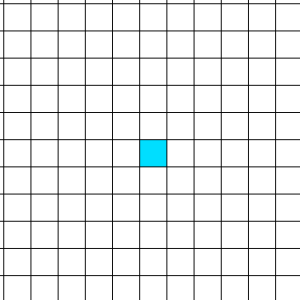

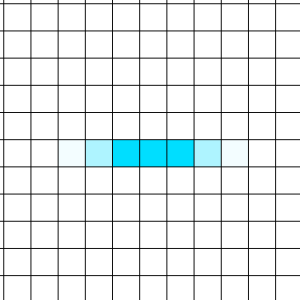

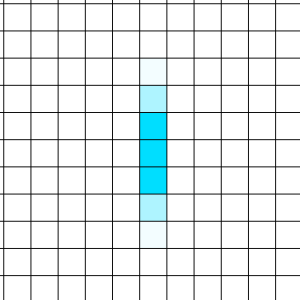

We start with a pixel:

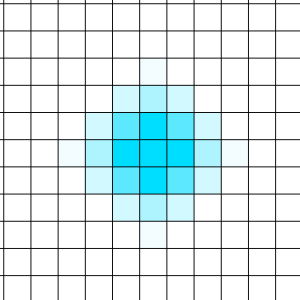

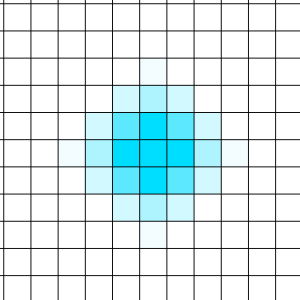

We can sample all of its neighboring pixels in a circle and weight them based on their distance to the sample input:

Which looks really good! But again, that’s a lot of samples once you reach a higher radius.

Fortunately, there’s a cool trick we can do.

We can blur the texel horizontally to a texture….

And then read from that new texture, and blur vertically…

Which leads to a blur as well!

And now, instead of a quadratic increase in samples with radius, the increase is linear. When the radius is 5 pixels, we blur 5 pixels horizontally, then 5 pixels vertically: 5+5 = 10 samples, compared to our other method, where 5×5 = 25.
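The claim that a horizontal pass followed by a vertical pass gives the same blur as the full square window can be checked on the CPU. The sketch below is plain C# using an unweighted average, like this tutorial's blur, not a Gaussian. `SeparableBlurSketch` and its methods are hypothetical names, and reading texels outside the image as 0 is an assumption of the sketch.

```csharp
using System;

public static class SeparableBlurSketch
{
    // Reads a texel, treating anything outside the image as 0 (empty).
    static float At(float[,] img, int y, int x)
    {
        bool inside = y >= 0 && y < img.GetLength(0) && x >= 0 && x < img.GetLength(1);
        return inside ? img[y, x] : 0f;
    }

    // One blur pass along a single axis: (dx, dy) is (1, 0) for horizontal, (0, 1) for vertical.
    public static float[,] Blur1D(float[,] img, int size, int dx, int dy)
    {
        int h = img.GetLength(0), w = img.GetLength(1);
        var result = new float[h, w];
        for (int y = 0; y < h; y++)
        {
            for (int x = 0; x < w; x++)
            {
                float sum = 0f;
                for (int k = 0; k < size; k++)
                {
                    int offset = k - size / 2;
                    sum += At(img, y + offset * dy, x + offset * dx);
                }
                result[y, x] = sum / size;
            }
        }
        return result;
    }

    // The single-pass version: averages the whole size x size window per texel.
    public static float[,] Blur2D(float[,] img, int size)
    {
        int h = img.GetLength(0), w = img.GetLength(1);
        var result = new float[h, w];
        for (int y = 0; y < h; y++)
        {
            for (int x = 0; x < w; x++)
            {
                float sum = 0f;
                for (int k = 0; k < size; k++)
                {
                    for (int j = 0; j < size; j++)
                    {
                        sum += At(img, y + (j - size / 2), x + (k - size / 2));
                    }
                }
                result[y, x] = sum / (size * size);
            }
        }
        return result;
    }

    // Largest absolute per-texel difference between two images of equal size.
    public static float MaxDifference(float[,] a, float[,] b)
    {
        float max = 0f;
        for (int y = 0; y < a.GetLength(0); y++)
        {
            for (int x = 0; x < a.GetLength(1); x++)
            {
                max = Math.Max(max, Math.Abs(a[y, x] - b[y, x]));
            }
        }
        return max;
    }

    public static void Main()
    {
        var mask = new float[9, 9];
        mask[4, 4] = 1f;
        mask[4, 5] = 1f;
        mask[3, 4] = 1f;

        int size = 5;
        var horizontal = Blur1D(mask, size, 1, 0);
        var twoPass = Blur1D(horizontal, size, 0, 1);
        var onePass = Blur2D(mask, size);

        // The two results agree up to floating-point rounding.
        Console.WriteLine(MaxDifference(twoPass, onePass) < 1e-5f);

        // Lookups per texel: size + size versus size * size.
        Console.WriteLine((size + size) + " vs " + (size * size)); // 10 vs 25
    }
}
```

The two-pass version needs an intermediate image to hold the horizontal result. In the shader, that role is played by a texture written between the two passes.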

To do this, we need to make 2 passes. Each pass will function like the shader code above, but remove one “for” loop. We also don’t bother with the discarding in the first pass, and instead leave it for the second.

The first pass also uses a single channel for the fragment shader; No colors are needed at that point.

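Because we are using two passes, we can’t simply use a blend mode over the existing scene data. Now, we need to do blending ourselves, instead of leaving it to the hardware blending operations. The second pass therefore samples the rendered scene from a second texture property, _SceneTex, which the C# script has to assign to the material before calling Graphics.Blit() (the scene is the source texture passed to OnRenderImage()).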

```shaderlab
Shader "Custom/Post Outline"
{
    Properties
    {
        _MainTex("Main Texture",2D)="black"{}
        _SceneTex("Scene Texture",2D)="black"{}
    }
    SubShader
    {
        Pass
        {
            CGPROGRAM

            sampler2D _MainTex;

            //<SamplerName>_TexelSize is a float4; x and y hold the size of one texel in UV space (1/width, 1/height).
            float4 _MainTex_TexelSize;

            #pragma vertex vert
            #pragma fragment frag
            #include "UnityCG.cginc"

            struct v2f
            {
                float4 pos : SV_POSITION;
                float2 uvs : TEXCOORD0;
            };

            v2f vert (appdata_base v)
            {
                v2f o;

                //Despite the fact that we are only drawing a quad to the screen, Unity requires us to multiply vertices by our MVP matrix, presumably to keep things working when inexperienced people try copying code from other shaders.
                o.pos = mul(UNITY_MATRIX_MVP,v.vertex);

                //Also, we need to fix the UVs to match our screen space coordinates. There is a Unity define for this that should normally be used.
                o.uvs = o.pos.xy / 2 + 0.5;

                return o;
            }

            half frag(v2f i) : COLOR
            {
                //arbitrary number of iterations for now
                int NumberOfIterations=20;

                //split texel size into smaller words
                float TX_x=_MainTex_TexelSize.x;

                //and a final intensity that increments based on surrounding intensities (must start at 0).
                float ColorIntensityInRadius=0;

                //for every iteration we need to do horizontally
                for(int k=0;k<NumberOfIterations;k+=1)
                {
                    //increase our output color by the pixels in the area
                    ColorIntensityInRadius+=tex2D(
                        _MainTex,
                        i.uvs.xy+float2
                        (
                            (k-NumberOfIterations/2)*TX_x,
                            0
                        )
                    ).r/NumberOfIterations;
                }

                //output the horizontally blurred intensity (single channel, no color yet)
                return ColorIntensityInRadius;
            }

            ENDCG

        } //end pass

        GrabPass{}

        Pass
        {
            CGPROGRAM

            sampler2D _MainTex;
            sampler2D _SceneTex;

            //we need to declare a sampler2D by the name of "_GrabTexture" that Unity can write to during GrabPass{}
            sampler2D _GrabTexture;

            //<SamplerName>_TexelSize is a float4; x and y hold the size of one texel in UV space (1/width, 1/height).
            float4 _GrabTexture_TexelSize;

            #pragma vertex vert
            #pragma fragment frag
            #include "UnityCG.cginc"

            struct v2f
            {
                float4 pos : SV_POSITION;
                float2 uvs : TEXCOORD0;
            };

            v2f vert (appdata_base v)
            {
                v2f o;

                //Despite the fact that we are only drawing a quad to the screen, Unity requires us to multiply vertices by our MVP matrix, presumably to keep things working when inexperienced people try copying code from other shaders.
                o.pos=mul(UNITY_MATRIX_MVP,v.vertex);

                //Also, we need to fix the UVs to match our screen space coordinates. There is a Unity define for this that should normally be used.
                o.uvs = o.pos.xy / 2 + 0.5;

                return o;
            }

            half4 frag(v2f i) : COLOR
            {
                //arbitrary number of iterations for now
                int NumberOfIterations=20;

                //split texel size into smaller words
                float TX_y=_GrabTexture_TexelSize.y;

                //and a final intensity that increments based on surrounding intensities.
                half ColorIntensityInRadius=0;

                //if something already exists underneath the fragment (in the original texture), output the unmodified scene color instead of an outline.
                if(tex2D(_MainTex,i.uvs.xy).r>0)
                {
                    return tex2D(_SceneTex,float2(i.uvs.x,1-i.uvs.y));
                }

                //for every iteration we need to do vertically
                for(int j=0;j<NumberOfIterations;j+=1)
                {
                    //increase our output color by the pixels in the area
                    ColorIntensityInRadius+= tex2D(
                        _GrabTexture,
                        float2(i.uvs.x,1-i.uvs.y)+float2
                        (
                            0,
                            (j-NumberOfIterations/2)*TX_y
                        )
                    ).r/NumberOfIterations;
                }

                //this is alpha blending, but we can't use HW blending unless we make a third pass, so this is probably cheaper.
                half4 outcolor=ColorIntensityInRadius*half4(0,1,1,1)*2+(1-ColorIntensityInRadius)*tex2D(_SceneTex,float2(i.uvs.x,1-i.uvs.y));
                return outcolor;
            }

            ENDCG

        } //end pass
    } //end subshader
}//end shader
```
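The last line of the second pass does the blending by hand. The same arithmetic can be sketched per channel in plain C#; `ManualBlendSketch` and `Blend` are hypothetical names, colors are plain float arrays rather than shader types, and the sketch does not clamp results above 1.

```csharp
using System;

public static class ManualBlendSketch
{
    // Mirrors: intensity * outlineColor * 2 + (1 - intensity) * sceneColor, per channel.
    public static float[] Blend(float intensity, float[] outline, float[] scene)
    {
        var result = new float[outline.Length];
        for (int c = 0; c < result.Length; c++)
        {
            result[c] = intensity * outline[c] * 2f + (1f - intensity) * scene[c];
        }
        return result;
    }

    public static void Main()
    {
        float[] teal = { 0f, 1f, 1f };
        float[] grey = { 0.5f, 0.5f, 0.5f };

        // Intensity 0: far from any outlined object, the scene color passes through unchanged.
        Console.WriteLine(string.Join(" ", Blend(0f, teal, grey)));

        // Intensity 0.25: a quarter of the blur window is filled, so teal is mixed in.
        Console.WriteLine(string.Join(" ", Blend(0.25f, teal, grey)));
    }
}
```

With intensity 0.25 the red channel is 0.75 × 0.5 = 0.375 and the green and blue channels are 0.5 + 0.375 = 0.875. The factor of 2 brightens the outline compared with a plain linear interpolation.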

And the final result:

Where you can go from here

First of all, the values, iterations, radius, color, etc are all hardcoded in the shader. You can set them as properties to be more code and designer friendly.

Second, my code has a falloff based on the number of filled texels in the area, and not the distance to each texel. You could create a gaussian kernel table and multiply the blurs with your values, which would run a little bit faster, but also remove the artifacts and uneven-ness you can see at the corners of the cube.

Don’t try to generate the gaussian kernel at runtime, though. That is super expensive.
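One way to follow that advice is to compute the weights once on the CPU and hand the finished table to the shader. Below is a minimal plain C# sketch of building such a table. `GaussianKernelSketch`, `Build`, and the radius and sigma values are all assumptions for illustration and not part of the original code.

```csharp
using System;

public static class GaussianKernelSketch
{
    // Returns 2 * radius + 1 weights sampled from a Gaussian and normalized to sum to 1.
    public static float[] Build(int radius, float sigma)
    {
        var weights = new float[2 * radius + 1];
        float total = 0f;
        for (int i = -radius; i <= radius; i++)
        {
            float w = (float)Math.Exp(-(i * i) / (2.0 * sigma * sigma));
            weights[i + radius] = w;
            total += w;
        }
        for (int i = 0; i < weights.Length; i++)
        {
            weights[i] /= total;
        }
        return weights;
    }

    public static void Main()
    {
        // Hypothetical values: a 7-tap kernel with sigma 2.
        float[] kernel = Build(3, 2f);
        foreach (float w in kernel)
        {
            Console.WriteLine(w);
        }
    }
}
```

Each blur pass would then multiply sample k by weight k instead of dividing every sample by NumberOfIterations. The table could be hardcoded in the shader, or uploaded once from the script, for example with Material.SetFloatArray() in Unity versions that provide it.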

I hope you learned a lot from this! If you have any questions or comments, please leave them below.
