Fundmentals in Stream Computing

Spark programs are structured on RDDs: they invole reading data from stable storage into the RDD format, performing a number of computations and

data transformations on the RDD, and writing the result RDD to stable storage on collecting to the driver. Thus, most of the power of Spark comes from

its transformation: operations that are defined on RDDs and return RDDs.

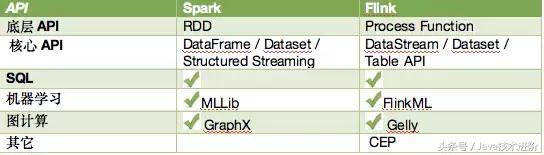

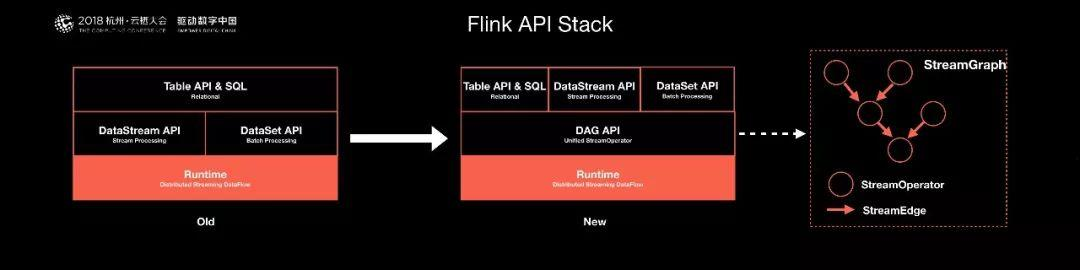

1. Need core underlying layer as basic fundmentals

2. Providing the API to high level

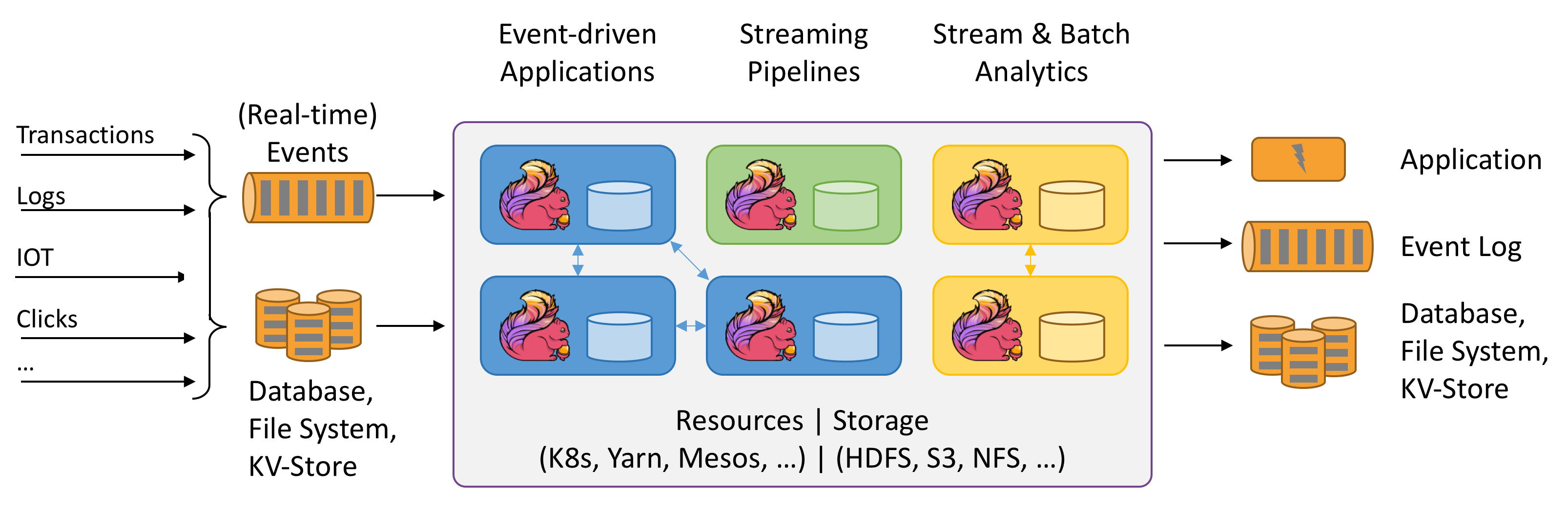

3. Stream computing = core underlying API + Distributed RPC + Computing Template + Cluster of executor

4.What will be computed, the Sequence of computed and definition of (K,V) are totally in hand of Users through the defined Computing Template.

5. We can say that Distributed Computing is a kind of platform to provide more Computing Template to operate the user data which is splited and distributed in cluster.

6. The ML/Bigdata SQL alike use these Stream API to do there jobs.

7. Remmeber that Stream Computing is a platform or runtime of operating distributed data with Computing Template (transformation API).

8. We can see a lot of common between StreamComputing and OS, which all provide the API to have operation on Data in Stream and on Hardeware in OS.

9.Stream Computing Runtime has API of Computing Template / Computing Generic; OS has API of Resource Operation on PC hardware.

Operators transform one or more DataStreams into a new DataStream. Programs can combine multiple transformations into sophisticated dataflow topologies.

| ransformation | Description |

|---|---|

| Map DataStream → DataStream |

Takes one element and produces one element. A map function that doubles the values of the input stream: |

| FlatMap DataStream → DataStream |

Takes one element and produces zero, one, or more elements. A flatmap function that splits sentences to words: |

| Filter DataStream → DataStream |

Evaluates a boolean function for each element and retains those for which the function returns true. A filter that filters out zero values: |

| KeyBy DataStream → KeyedStream |

Logically partitions a stream into disjoint partitions. All records with the same key are assigned to the same partition. Internally, keyBy() is implemented with hash partitioning. There are different ways to specify keys. This transformation returns a KeyedStream, which is, among other things, required to use keyed state. Attention A type cannot be a key if:

|

| Reduce KeyedStream → DataStream |

A "rolling" reduce on a keyed data stream. Combines the current element with the last reduced value and emits the new value. A reduce function that creates a stream of partial sums: |

| Fold KeyedStream → DataStream |

A "rolling" fold on a keyed data stream with an initial value. Combines the current element with the last folded value and emits the new value. A fold function that, when applied on the sequence (1,2,3,4,5), emits the sequence "start-1", "start-1-2", "start-1-2-3", ... |

| Aggregations KeyedStream → DataStream |

Rolling aggregations on a keyed data stream. The difference between min and minBy is that min returns the minimum value, whereas minBy returns the element that has the minimum value in this field (same for max and maxBy). |

| Window KeyedStream → WindowedStream |

Windows can be defined on already partitioned KeyedStreams. Windows group the data in each key according to some characteristic (e.g., the data that arrived within the last 5 seconds). See windows for a complete description of windows. |

| WindowAll DataStream → AllWindowedStream |

Windows can be defined on regular DataStreams. Windows group all the stream events according to some characteristic (e.g., the data that arrived within the last 5 seconds). See windows for a complete description of windows. WARNING: This is in many cases a non-parallel transformation. All records will be gathered in one task for the windowAll operator. |

| Window Apply WindowedStream → DataStream AllWindowedStream → DataStream |

Applies a general function to the window as a whole. Below is a function that manually sums the elements of a window. Note: If you are using a windowAll transformation, you need to use an AllWindowFunction instead. |

| Window Reduce WindowedStream → DataStream |

Applies a functional reduce function to the window and returns the reduced value. |

| Window Fold WindowedStream → DataStream |

Applies a functional fold function to the window and returns the folded value. The example function, when applied on the sequence (1,2,3,4,5), folds the sequence into the string "start-1-2-3-4-5": |

| Aggregations on windows WindowedStream → DataStream |

Aggregates the contents of a window. The difference between min and minBy is that min returns the minimum value, whereas minBy returns the element that has the minimum value in this field (same for max and maxBy). |

| Union DataStream* → DataStream |

Union of two or more data streams creating a new stream containing all the elements from all the streams. Note: If you union a data stream with itself you will get each element twice in the resulting stream. |

| Window Join DataStream,DataStream → DataStream |

Join two data streams on a given key and a common window. |

| Interval Join KeyedStream,KeyedStream → DataStream |

Join two elements e1 and e2 of two keyed streams with a common key over a given time interval, so that e1.timestamp + lowerBound <= e2.timestamp <= e1.timestamp + upperBound |

| Window CoGroup DataStream,DataStream → DataStream |

Cogroups two data streams on a given key and a common window. |

| Connect DataStream,DataStream → ConnectedStreams |

"Connects" two data streams retaining their types. Connect allowing for shared state between the two streams. |

| CoMap, CoFlatMap ConnectedStreams → DataStream |

Similar to map and flatMap on a connected data stream |

| Split DataStream → SplitStream |

Split the stream into two or more streams according to some criterion. |

| Select SplitStream → DataStream |

Select one or more streams from a split stream. |

| Iterate DataStream → IterativeStream → DataStream |

Creates a "feedback" loop in the flow, by redirecting the output of one operator to some previous operator. This is especially useful for defining algorithms that continuously update a model. The following code starts with a stream and applies the iteration body continuously. Elements that are greater than 0 are sent back to the feedback channel, and the rest of the elements are forwarded downstream. See iterations for a complete description. |

| Extract Timestamps DataStream → DataStream |

Extracts timestamps from records in order to work with windows that use event time semantics. See Event Time. |

Fundmentals in Stream Computing的更多相关文章

- [Note] Stream Computing

Stream Computing 概念对比 静态数据和流数据 静态数据,例如数据仓库中存放的大量历史数据,特点是不会发生更新,可以利用数据挖掘技术和 OLAP(On-Line Analytical P ...

- Stream computing

stream data 从广义上说,所有大数据的生成均可以看作是一连串发生的离散事件.这些离散的事件以时间轴为维度进行观看就形成了一条条事件流/数据流.不同于传统的离线数据,流数据是指由数千个数据源持 ...

- [Linux] 流 ( Stream )、管道 ( Pipeline ) 、Filter - 笔记

流 ( Stream ) 1. 流,是指可使用的数据元素一个序列. 2. 流,可以想象为是传送带上等待加工处理的物品,也可以想象为工厂流水线上的物品. 3. 流,可以是无限的数据. 4. 有一种功能, ...

- MapReduce的核心资料索引 [转]

转自http://prinx.blog.163.com/blog/static/190115275201211128513868/和http://www.cnblogs.com/jie46583173 ...

- 分布式系统(Distributed System)资料

这个资料关于分布式系统资料,作者写的太好了.拿过来以备用 网址:https://github.com/ty4z2008/Qix/blob/master/ds.md 希望转载的朋友,你可以不用联系我.但 ...

- 资源list:Github上关于大数据的开源项目、论文等合集

Awesome Big Data A curated list of awesome big data frameworks, resources and other awesomeness. Ins ...

- Mac OS X 背后的故事

Mac OS X 背后的故事 作者: 王越 来源: <程序员> 发布时间: 2013-01-22 10:55 阅读: 25840 次 推荐: 49 原文链接 [收藏] ...

- 想从事分布式系统,计算,hadoop等方面,需要哪些基础,推荐哪些书籍?--转自知乎

作者:廖君链接:https://www.zhihu.com/question/19868791/answer/88873783来源:知乎 分布式系统(Distributed System)资料 < ...

- 【原】Kryo序列化篇

Kryo是一个快速有效的对象图序列化Java库.它的目标是快速.高效.易使用.该项目适用于对象持久化到文件或数据库中或通过网络传输.Kryo还可以自动实现深浅的拷贝/克隆. 就是直接复制一个对象对象到 ...

随机推荐

- PHP将多维数组变成一维数组

function reduceArray($array) { $return = []; array_walk_recursive($array, function ($x) use (&$r ...

- vi基本状态

vi状态退出并保存:shift+ZZ vi readme.txt 进入VIM编辑器,可以新建文件也可以修改文件 如果这个文件,以前是没有的,则为新建,则下方有提示为新文件. 按ESC键 跳到命令模式, ...

- HDU - 5306 剪枝的线段树

题意:给定\(a[1...n]\),\(m\)次操作,0表示使\([L,R]\)中的值\(a[i]=min(a[i],x)\),其余的1是查最值2是查区间和 本题是吉利爷的2016论文题,1 2套路不 ...

- Python——付费/版权歌曲下载

很多歌曲需要版权或者付费才能收听 正确食用方法: 1.找到歌曲编号 2.输入编号并点击下载歌曲 # coding:utf8 # author:Jery # datetime:2019/4/13 23: ...

- Mysql技术内幕笔记

mysql由以下几个部分组成: 连接池组件 管理服务和工具组件 sql接口组价 查询分析器组价 优化器组价 缓存(cache)组价 插件式存储引擎 物理文件. 可以看出,MySQL数据库区别于其他数据 ...

- vuex到底是什么?

vuex到底是什么? 使用vue也有一段时间了,但是对vue的理解似乎还是停留在初始状态,究其原因,不得不说是自己没有深入进去,理解本质,导致开发效率低,永远停留在表面, 更坏的结果就是refresh ...

- dfs.replication、dfs.replication.min/max及dfs.safemode.threshold.pct

一.参数含义 dfs.replication:设置数据块应该被复制的份数: dfs.replication.min:所规定的数据块副本的最小份数: dfs.replication.max:所规定的数据 ...

- hadoop源码svn下载地址

1.apache开源框架

- Druid SQL 解析器概览

概览 Druid 的官方 wiki 对 SQL 解析器部分的讲解内容并不多,但虽然不多,也有利于完全没接触过 Druid 的人对 SQL 解析器有个初步的印象. 说到解析器,脑海里便很容易浮现 par ...

- Java生成二维码和解析二维码URL

二维码依赖jar包,zxing <!-- 二维码依赖 start --><dependency> <groupId>com.google.zxing</gro ...