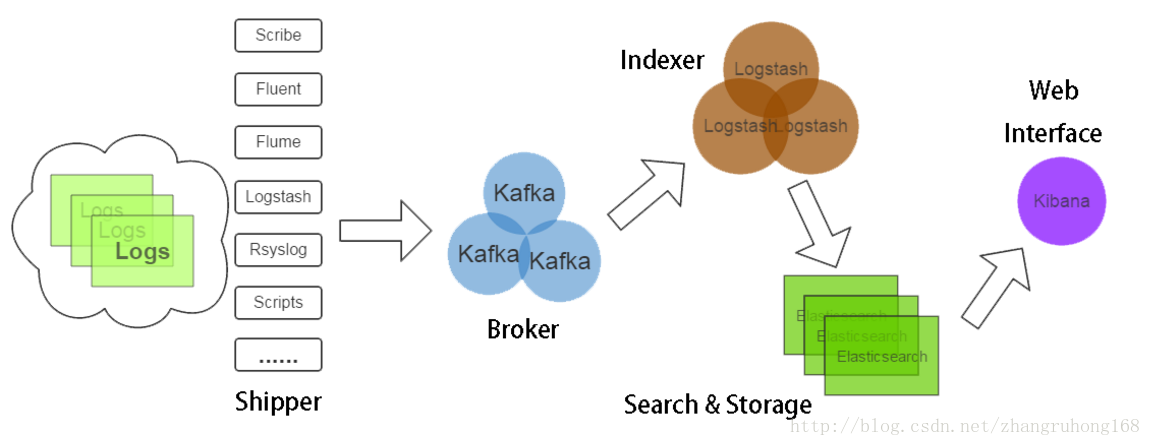

Sending Spring Cloud Sleuth Trace Logs to ELK via Kafka

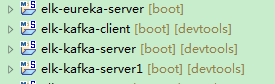

1. Project overview

elk-eureka-server is the service registry for the other three projects.

elk-kafka-client calls elk-kafka-server, which in turn calls elk-kafka-server1.
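To picture what tracing adds along this call chain, here is a toy sketch in plain Java. It is not Sleuth's implementation, and it simplifies how spans are created: Sleuth does this transparently through instrumentation. The class and method names are hypothetical; only the header names follow the B3 convention that Sleuth uses. The point it illustrates is that every hop logs the same trace ID while getting its own span ID.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.UUID;

/**
 * Toy model of trace propagation across client -> server -> server1.
 * Hypothetical names; a simplified illustration, not Sleuth's code.
 */
class TracePropagationSketch {

    static final String TRACE_ID = "X-B3-TraceId";
    static final String SPAN_ID = "X-B3-SpanId";
    static final String PARENT_SPAN_ID = "X-B3-ParentSpanId";

    /** Collected "log lines" so the result can be inspected. */
    static final List<String> LOG = new ArrayList<>();

    /** Returns a random 16-character hex id. */
    static String newId() {
        return UUID.randomUUID().toString().replace("-", "").substring(0, 16);
    }

    /** Entry point of the chain: no incoming headers, so a new trace starts. */
    static Map<String, String> startTrace() {
        Map<String, String> ctx = new HashMap<>();
        ctx.put(TRACE_ID, newId());
        ctx.put(SPAN_ID, newId());
        return ctx;
    }

    /** A downstream hop: keep the trace id, create a child span. */
    static Map<String, String> childOf(Map<String, String> incoming) {
        Map<String, String> ctx = new HashMap<>();
        ctx.put(TRACE_ID, incoming.get(TRACE_ID));
        ctx.put(PARENT_SPAN_ID, incoming.get(SPAN_ID));
        ctx.put(SPAN_ID, newId());
        return ctx;
    }

    /** Records a line of the form [service,traceId,spanId] message. */
    static void log(String service, Map<String, String> ctx, String message) {
        LOG.add("[" + service + "," + ctx.get(TRACE_ID) + "," + ctx.get(SPAN_ID) + "] " + message);
    }

    static String client() {
        Map<String, String> ctx = startTrace();
        log("ELK-KAFKA-CLIENT", ctx, "calling server");
        return server(ctx);
    }

    static String server(Map<String, String> incoming) {
        Map<String, String> ctx = childOf(incoming);
        log("ELK-KAFKA-SERVER", ctx, "calling server1");
        return server1(ctx);
    }

    static String server1(Map<String, String> incoming) {
        Map<String, String> ctx = childOf(incoming);
        log("ELK-KAFKA-SERVER1", ctx, "handling request");
        return "I am server1.";
    }

    public static void main(String[] args) {
        System.out.println(client());
        LOG.forEach(System.out::println);
    }
}
```

Running `main` prints the final response followed by three log lines, one per service. Searching for the shared trace ID is what later lets Kibana show all three entries of one request together.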

2. Create the Spring Boot project elk-eureka-server

The pom.xml is as follows:

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.carry.elk</groupId>
        <artifactId>elk-eureka-server</artifactId>
        <version>0.0.1-SNAPSHOT</version>
        <packaging>jar</packaging>

        <name>elk-eureka-server</name>
        <description>Demo project for Spring Boot</description>

        <parent>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-parent</artifactId>
            <version>2.0.3.RELEASE</version>
            <relativePath/> <!-- lookup parent from repository -->
        </parent>

        <properties>
            <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
            <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
            <java.version>1.8</java.version>
            <spring-cloud.version>Finchley.RELEASE</spring-cloud.version>
        </properties>

        <dependencies>
            <dependency>
                <groupId>org.springframework.cloud</groupId>
                <artifactId>spring-cloud-starter-netflix-eureka-server</artifactId>
            </dependency>

            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-test</artifactId>
                <scope>test</scope>
            </dependency>
        </dependencies>

        <dependencyManagement>
            <dependencies>
                <dependency>
                    <groupId>org.springframework.cloud</groupId>
                    <artifactId>spring-cloud-dependencies</artifactId>
                    <version>${spring-cloud.version}</version>
                    <type>pom</type>
                    <scope>import</scope>
                </dependency>
            </dependencies>
        </dependencyManagement>

        <build>
            <plugins>
                <plugin>
                    <groupId>org.springframework.boot</groupId>
                    <artifactId>spring-boot-maven-plugin</artifactId>
                </plugin>
            </plugins>
        </build>
    </project>

The application.yml is as follows:

    server:
      port: 8761

    eureka:
      instance:
        hostname: localhost
      client:
        registerWithEureka: false
        fetchRegistry: false
        serviceUrl:
          defaultZone: http://${eureka.instance.hostname}:${server.port}/eureka/

Add the @EnableEurekaServer annotation to the startup class ElkEurekaServerApplication.

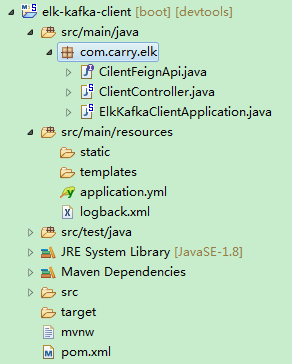

3. Create the Spring Boot project elk-kafka-client

The pom.xml is as follows:

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.carry.elk</groupId>
        <artifactId>elk-kafka-client</artifactId>
        <version>0.0.1-SNAPSHOT</version>
        <packaging>jar</packaging>

        <name>elk-kafka-client</name>
        <description>Demo project for Spring Boot</description>

        <parent>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-parent</artifactId>
            <version>2.0.3.RELEASE</version>
            <relativePath /> <!-- lookup parent from repository -->
        </parent>

        <properties>
            <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
            <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
            <java.version>1.8</java.version>
            <spring-cloud.version>Finchley.RELEASE</spring-cloud.version>
        </properties>

        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-web</artifactId>
            </dependency>
            <dependency>
                <groupId>org.springframework.cloud</groupId>
                <artifactId>spring-cloud-starter-netflix-eureka-client</artifactId>
            </dependency>
            <dependency>
                <groupId>org.springframework.cloud</groupId>
                <artifactId>spring-cloud-starter-openfeign</artifactId>
            </dependency>
            <dependency>
                <groupId>org.springframework.cloud</groupId>
                <artifactId>spring-cloud-starter-sleuth</artifactId>
            </dependency>
            <dependency>
                <groupId>net.logstash.logback</groupId>
                <artifactId>logstash-logback-encoder</artifactId>
                <version>4.11</version>
            </dependency>
            <dependency>
                <groupId>com.github.danielwegener</groupId>
                <artifactId>logback-kafka-appender</artifactId>
                <version>0.1.0</version>
                <scope>runtime</scope>
            </dependency>

            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-devtools</artifactId>
                <scope>runtime</scope>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-test</artifactId>
                <scope>test</scope>
            </dependency>
        </dependencies>

        <dependencyManagement>
            <dependencies>
                <dependency>
                    <groupId>org.springframework.cloud</groupId>
                    <artifactId>spring-cloud-dependencies</artifactId>
                    <version>${spring-cloud.version}</version>
                    <type>pom</type>
                    <scope>import</scope>
                </dependency>
            </dependencies>
        </dependencyManagement>

        <build>
            <plugins>
                <plugin>
                    <groupId>org.springframework.boot</groupId>
                    <artifactId>spring-boot-maven-plugin</artifactId>
                </plugin>
            </plugins>
        </build>
    </project>

The application.yml is as follows:

    server:
      port: 8080

    spring:
      application:
        name: ELK-KAFKA-CLIENT
      devtools:
        restart:
          enabled: true

    eureka:
      client:
        serviceUrl:
          defaultZone: http://localhost:8761/eureka/

Add a logback.xml file under the resources directory with the following content:

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration debug="false" scan="true" scanPeriod="1 seconds">
        <include resource="org/springframework/boot/logging/logback/base.xml" />
        <!-- <jmxConfigurator/> -->
        <contextName>logback</contextName>

        <property name="log.path" value="\logs\logback.log" />
        <property name="log.pattern"
                  value="%d{yyyy-MM-dd HH:mm:ss.SSS} -%5p ${PID} --- traceId:[%X{mdc_trace_id}] [%15.15t] %-40.40logger{39} : %m%n" />

        <appender name="file" class="ch.qos.logback.core.rolling.RollingFileAppender">
            <file>${log.path}</file>
            <encoder>
                <pattern>${log.pattern}</pattern>
            </encoder>
            <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
                <fileNamePattern>info-%d{yyyy-MM-dd}-%i.log</fileNamePattern>
                <timeBasedFileNamingAndTriggeringPolicy
                        class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                    <maxFileSize>10MB</maxFileSize>
                </timeBasedFileNamingAndTriggeringPolicy>
                <maxHistory>10</maxHistory>
            </rollingPolicy>
        </appender>

        <appender name="KafkaAppender" class="com.github.danielwegener.logback.kafka.KafkaAppender">
            <encoder class="com.github.danielwegener.logback.kafka.encoding.LayoutKafkaMessageEncoder">
                <layout class="net.logstash.logback.layout.LogstashLayout">
                    <includeContext>true</includeContext>
                    <includeCallerData>true</includeCallerData>
                    <customFields>{"system":"kafka"}</customFields>
                    <fieldNames class="net.logstash.logback.fieldnames.ShortenedFieldNames" />
                </layout>
                <charset>UTF-8</charset>
            </encoder>
            <!-- The Kafka topic must match the topic in the configuration file; otherwise Kafka stays silent and ignores you -->
            <topic>applog</topic>
            <keyingStrategy class="com.github.danielwegener.logback.kafka.keying.HostNameKeyingStrategy" />
            <deliveryStrategy class="com.github.danielwegener.logback.kafka.delivery.AsynchronousDeliveryStrategy" />
            <producerConfig>bootstrap.servers=192.168.68.110:9092,192.168.68.111:9092,192.168.68.112:9092</producerConfig>
        </appender>

        <!--
        <logger name="Application_ERROR">
            <appender-ref ref="KafkaAppender" />
        </logger>
        -->

        <root level="info">
            <appender-ref ref="KafkaAppender" />
        </root>
    </configuration>

Add the Feign interface CilentFeignApi with the following content:

    package com.carry.elk;

    import org.springframework.cloud.openfeign.FeignClient;
    import org.springframework.http.MediaType;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RequestMethod;

    @FeignClient("ELK-KAFKA-SERVER")
    public interface CilentFeignApi {

        @RequestMapping(value = "/server", method = RequestMethod.GET, consumes = MediaType.APPLICATION_JSON_VALUE, produces = MediaType.APPLICATION_JSON_VALUE)
        public String getString();

    }

Add ClientController with the following content:

    package com.carry.elk;

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class ClientController {

        // private final static Logger logger = LoggerFactory.getLogger("Application_ERROR");
        private final Logger logger = LoggerFactory.getLogger(this.getClass());

        @Autowired
        CilentFeignApi api;

        @GetMapping("/client")
        public String getString() {
            logger.info("Start calling Server");
            return api.getString();
        }

    }

Add the @EnableEurekaClient and @EnableFeignClients annotations to the startup class ElkKafkaClientApplication.

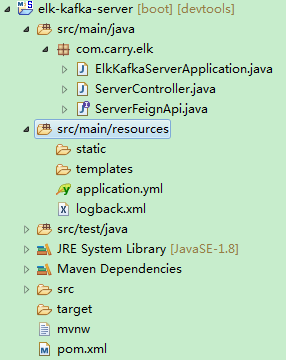

4. Create the Spring Boot project elk-kafka-server

This project is similar to elk-kafka-client; only the following changes are needed.

In application.yml, change the port to 8081 and spring.application.name to ELK-KAFKA-SERVER.

In the Feign interface (referenced as ServerFeignApi by the controller below), change the annotation to @FeignClient("ELK-KAFKA-SERVER1").

Change the Controller to:

    package com.carry.elk;

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class ServerController {

        // private final static Logger logger = LoggerFactory.getLogger("Application_ERROR");
        private final Logger logger = LoggerFactory.getLogger(this.getClass());

        @Autowired
        ServerFeignApi api;

        @GetMapping("/server")
        public String getString() {
            logger.info("Received call from client; calling Server1");
            return api.getString();
        }

    }
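For reference, the application.yml for elk-kafka-server would look roughly like the fragment below. This is a sketch that assumes the file is copied unchanged from elk-kafka-client apart from the two values named above (port and application name); the devtools and Eureka settings are carried over on that assumption.

```yaml
server:
  port: 8081                       # changed from 8080

spring:
  application:
    name: ELK-KAFKA-SERVER         # the name that CilentFeignApi targets
  devtools:
    restart:
      enabled: true

eureka:
  client:
    serviceUrl:
      defaultZone: http://localhost:8761/eureka/
```

The application name matters because Feign resolves `@FeignClient("ELK-KAFKA-SERVER")` through the name registered in Eureka.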

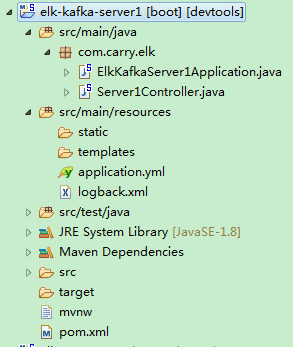

5. Create the Spring Boot project elk-kafka-server1

This project does not call any other project, so remove the Feign support.

In application.yml, change the port to 8082 and spring.application.name to ELK-KAFKA-SERVER1.

Change the Controller to:

    package com.carry.elk;

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class Server1Controller {

        // private final static Logger logger = LoggerFactory.getLogger("Application_ERROR");
        private final Logger logger = LoggerFactory.getLogger(this.getClass());

        @GetMapping("/server")
        public String getString() {
            logger.info("Received call from server");
            return "I am server1.";
        }

    }

项目已写完让我们来看看结果吧

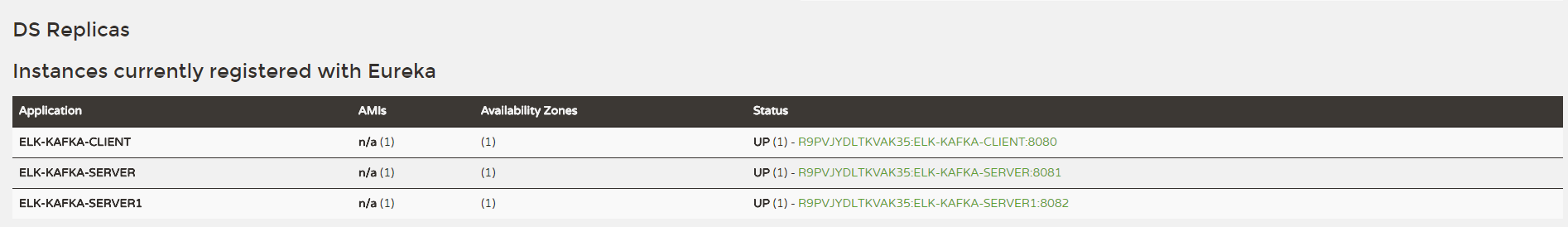

首先启动elk-eureka-server,然后依次启动elk-kafka-server1,elk-kafka-server,elk-kafka-client,浏览器中访问localhost:8761/,查看三个项目是否成功注册到eureka上去

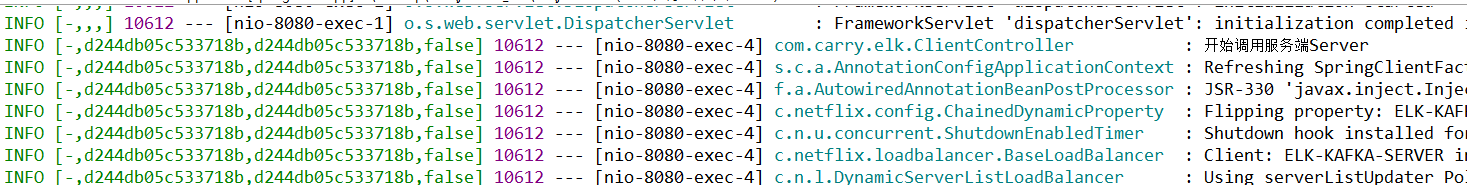

访问localhost:8080/client,查看项目后台日志

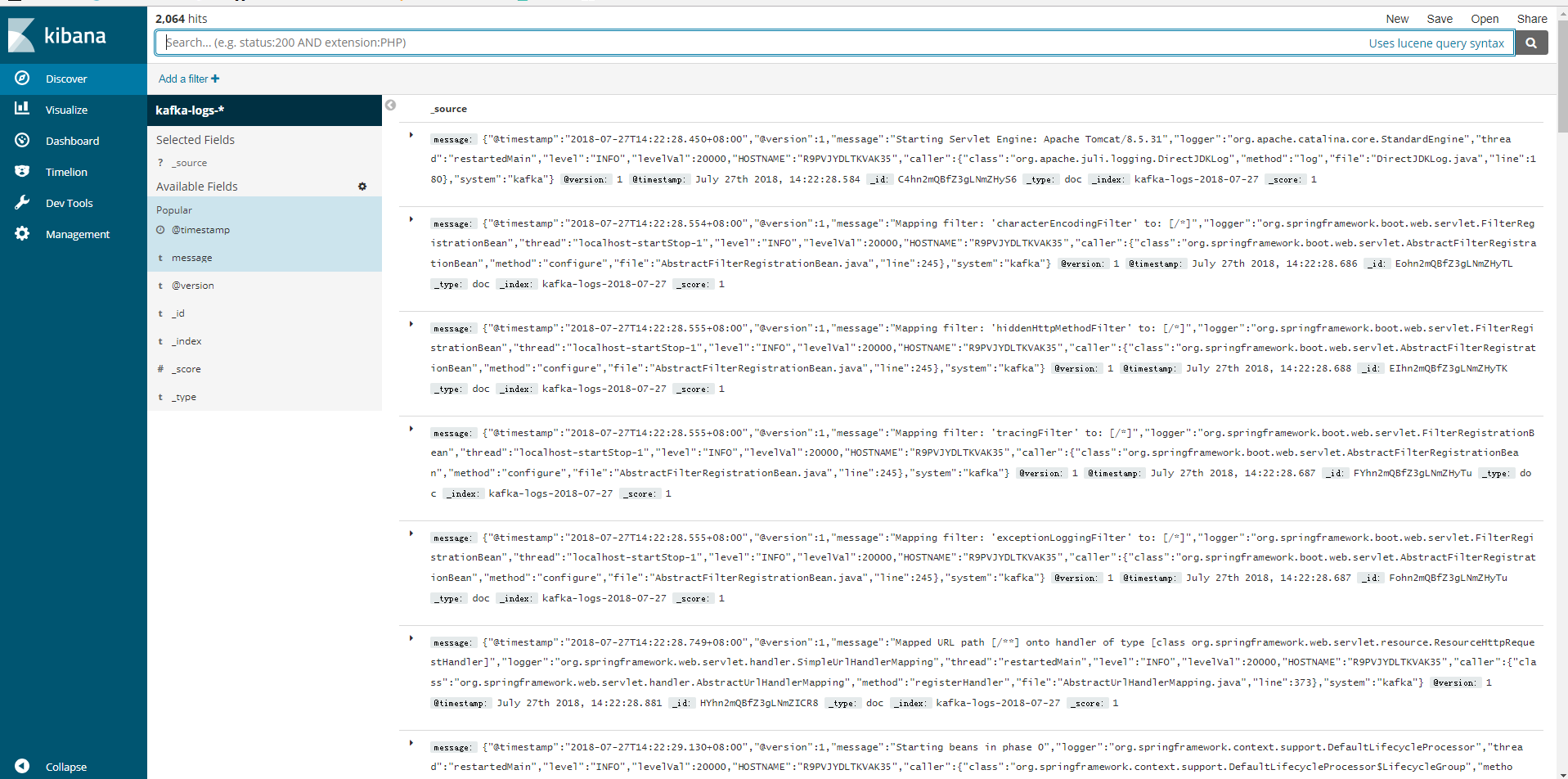

这时我们可以去Kibana上查询日志了,访问http://192.168.68.112:5601/,进入Management创建index pattern kafka-logs-*,返回Discover页面
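The article does not show the Logstash pipeline that moves events from Kafka into Elasticsearch. A minimal sketch of what it might look like is given below. The topic and broker list are taken from logback.xml and the index name is chosen to match the kafka-logs-* pattern; the Elasticsearch address is an assumption and must be replaced with the real one.

```conf
input {
  kafka {
    # Same brokers and topic as the KafkaAppender in logback.xml
    bootstrap_servers => "192.168.68.110:9092,192.168.68.111:9092,192.168.68.112:9092"
    topics => ["applog"]
    # LogstashLayout emits JSON, so decode each message as JSON
    codec => "json"
  }
}

output {
  elasticsearch {
    # Assumption: Elasticsearch address is not given in the article
    hosts => ["http://localhost:9200"]
    # Daily indices matched by the kafka-logs-* index pattern
    index => "kafka-logs-%{+YYYY.MM.dd}"
  }
}
```

If the topic here differs from the `<topic>` in logback.xml, no events reach Elasticsearch and Kibana shows nothing for the index pattern.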
