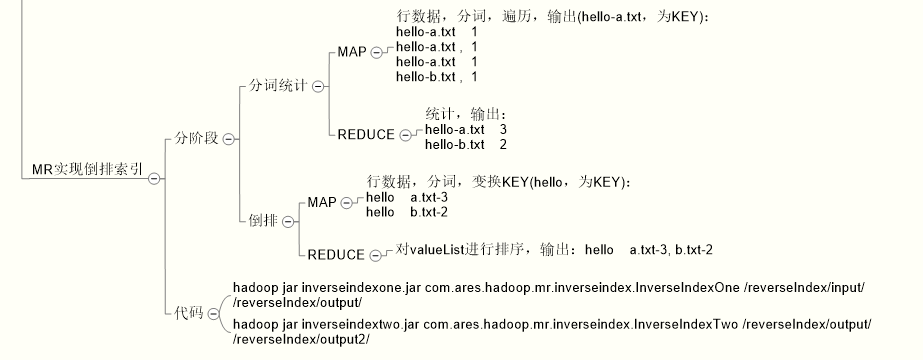

【Hadoop】Hadoop MR 如何实现倒排索引算法?

1、概念、方案

2、代码示例

InverseIndexOne

package com.ares.hadoop.mr.inverseindex; import java.io.IOException; import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.FileSplit;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.StringUtils;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

import org.apache.log4j.Logger; public class InverseIndexOne extends Configured implements Tool { private static final Logger LOGGER = Logger.getLogger(InverseIndexOne.class);

enum Counter {

LINESKIP

} public static class InverseIndexOneMapper

extends Mapper<LongWritable, Text, Text, LongWritable> { private String line;

private final static char separatorA = ' ';

private final static char separatorB = '-';

private String fileName; private Text text = new Text();

private final static LongWritable ONE = new LongWritable(1L); @Override

protected void map(LongWritable key, Text value,

Mapper<LongWritable, Text, Text, LongWritable>.Context context)

throws IOException, InterruptedException {

// TODO Auto-generated method stub

//super.map(key, value, context);

try {

line = value.toString();

String[] fields = StringUtils.split(line, separatorA); FileSplit fileSplit = (FileSplit) context.getInputSplit();

fileName = fileSplit.getPath().getName(); for (int i = ; i < fields.length; i++) {

text.set(fields[i] + separatorB + fileName);

context.write(text, ONE);

}

} catch (Exception e) {

// TODO: handle exception

LOGGER.error(e);

System.out.println(e);

context.getCounter(Counter.LINESKIP).increment();

return;

}

}

} public static class InverseIndexOneReducer

extends Reducer<Text, LongWritable, Text, LongWritable> {

private LongWritable result = new LongWritable(); @Override

protected void reduce(Text key, Iterable<LongWritable> values,

Reducer<Text, LongWritable, Text, LongWritable>.Context context)

throws IOException, InterruptedException {

// TODO Auto-generated method stub

//super.reduce(arg0, arg1, arg2);

long count = ;

for (LongWritable value : values) {

count += value.get();

}

result.set(count);

context.write(key, result);

}

} @Override

public int run(String[] args) throws Exception {

// TODO Auto-generated method stub

//return 0;

String errMsg = "InverseIndexOne: TEST STARTED...";

LOGGER.debug(errMsg);

System.out.println(errMsg); Configuration conf = new Configuration();

//FOR Eclipse JVM Debug

//conf.set("mapreduce.job.jar", "flowsum.jar");

Job job = Job.getInstance(conf); // JOB NAME

job.setJobName("InverseIndexOne"); // JOB MAPPER & REDUCER

job.setJarByClass(InverseIndexOne.class);

job.setMapperClass(InverseIndexOneMapper.class);

job.setReducerClass(InverseIndexOneReducer.class); // JOB PARTITION

//job.setPartitionerClass(FlowGroupPartition.class); // JOB REDUCE TASK NUMBER

//job.setNumReduceTasks(5); // MAP & REDUCE

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(LongWritable.class);

// MAP

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(LongWritable.class); // JOB INPUT & OUTPUT PATH

//FileInputFormat.addInputPath(job, new Path(args[0]));

FileInputFormat.setInputPaths(job, args[]);

Path output = new Path(args[]);

// FileSystem fs = FileSystem.get(conf);

// if (fs.exists(output)) {

// fs.delete(output, true);

// }

FileOutputFormat.setOutputPath(job, output); // VERBOSE OUTPUT

if (job.waitForCompletion(true)) {

errMsg = "InverseIndexOne: TEST SUCCESSFULLY...";

LOGGER.debug(errMsg);

System.out.println(errMsg);

return ;

} else {

errMsg = "InverseIndexOne: TEST FAILED...";

LOGGER.debug(errMsg);

System.out.println(errMsg);

return ;

}

} public static void main(String[] args) throws Exception {

if (args.length != ) {

String errMsg = "InverseIndexOne: ARGUMENTS ERROR";

LOGGER.error(errMsg);

System.out.println(errMsg);

System.exit(-);

} int result = ToolRunner.run(new Configuration(), new InverseIndexOne(), args);

System.exit(result);

}

}

InverseIndexTwo

package com.ares.hadoop.mr.inverseindex; import java.io.IOException; import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.StringUtils;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

import org.apache.log4j.Logger; public class InverseIndexTwo extends Configured implements Tool{

private static final Logger LOGGER = Logger.getLogger(InverseIndexOne.class);

enum Counter {

LINESKIP

} public static class InverseIndexTwoMapper extends

Mapper<LongWritable, Text, Text, Text> { private String line;

private final static char separatorA = '\t';

private final static char separatorB = '-'; private Text textKey = new Text();

private Text textValue = new Text(); @Override

protected void map(LongWritable key, Text value,

Mapper<LongWritable, Text, Text, Text>.Context context)

throws IOException, InterruptedException {

// TODO Auto-generated method stub

//super.map(key, value, context);

try {

line = value.toString();

String[] fields = StringUtils.split(line, separatorA);

String[] wordAndfileName = StringUtils.split(fields[], separatorB);

long count = Long.parseLong(fields[]);

String word = wordAndfileName[];

String fileName = wordAndfileName[]; textKey.set(word);

textValue.set(fileName + separatorB + count);

context.write(textKey, textValue);

} catch (Exception e) {

// TODO: handle exception

LOGGER.error(e);

System.out.println(e);

context.getCounter(Counter.LINESKIP).increment();

return;

}

}

} public static class InverseIndexTwoReducer extends

Reducer<Text, Text, Text, Text> { private Text textValue = new Text(); @Override

protected void reduce(Text key, Iterable<Text> values,

Reducer<Text, Text, Text, Text>.Context context)

throws IOException, InterruptedException {

// TODO Auto-generated method stub

//super.reduce(arg0, arg1, arg2);

StringBuilder index = new StringBuilder("");

// for (Text text : values) {

// if (condition) {

//

// }

// index.append(text.toString() + separatorA);

// }

String separatorA = "";

for (Text text : values) {

index.append(separatorA + text.toString());

separatorA = ",";

}

textValue.set(index.toString());

context.write(key, textValue);

}

} @Override

public int run(String[] args) throws Exception {

// TODO Auto-generated method stub

//return 0;

String errMsg = "InverseIndexTwo: TEST STARTED...";

LOGGER.debug(errMsg);

System.out.println(errMsg); Configuration conf = new Configuration();

//FOR Eclipse JVM Debug

//conf.set("mapreduce.job.jar", "flowsum.jar");

Job job = Job.getInstance(conf); // JOB NAME

job.setJobName("InverseIndexTwo"); // JOB MAPPER & REDUCER

job.setJarByClass(InverseIndexTwo.class);

job.setMapperClass(InverseIndexTwoMapper.class);

job.setReducerClass(InverseIndexTwoReducer.class); // JOB PARTITION

//job.setPartitionerClass(FlowGroupPartition.class); // JOB REDUCE TASK NUMBER

//job.setNumReduceTasks(5); // MAP & REDUCE

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

// MAP

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(Text.class); // JOB INPUT & OUTPUT PATH

//FileInputFormat.addInputPath(job, new Path(args[0]));

FileInputFormat.setInputPaths(job, args[]);

Path output = new Path(args[]);

// FileSystem fs = FileSystem.get(conf);

// if (fs.exists(output)) {

// fs.delete(output, true);

// }

FileOutputFormat.setOutputPath(job, output); // VERBOSE OUTPUT

if (job.waitForCompletion(true)) {

errMsg = "InverseIndexTwo: TEST SUCCESSFULLY...";

LOGGER.debug(errMsg);

System.out.println(errMsg);

return ;

} else {

errMsg = "InverseIndexTwo: TEST FAILED...";

LOGGER.debug(errMsg);

System.out.println(errMsg);

return ;

}

} public static void main(String[] args) throws Exception {

if (args.length != ) {

String errMsg = "InverseIndexOne: ARGUMENTS ERROR";

LOGGER.error(errMsg);

System.out.println(errMsg);

System.exit(-);

} int result = ToolRunner.run(new Configuration(), new InverseIndexTwo(), args);

System.exit(result);

} }

参考资料:

How to check if processing the last item in an Iterator?:http://stackoverflow.com/questions/9633991/how-to-check-if-processing-the-last-item-in-an-iterator

【Hadoop】Hadoop MR 如何实现倒排索引算法?的更多相关文章

- hadoop修改MR的提交的代码程序的副本数

hadoop修改MR的提交的代码程序的副本数 Under-Replicated Blocks的数量很多,有7万多个.hadoop fsck -blocks 检查发现有很多replica missing ...

- 腾讯公司数据分析岗位的hadoop工作 线性回归 k-means算法 朴素贝叶斯算法 SpringMVC组件 某公司的广告投放系统 KNN算法 社交网络模型 SpringMVC注解方式

腾讯公司数据分析岗位的hadoop工作 线性回归 k-means算法 朴素贝叶斯算法 SpringMVC组件 某公司的广告投放系统 KNN算法 社交网络模型 SpringMVC注解方式 某移动公司实时 ...

- Hadoop【MR开发规范、序列化】

Hadoop[MR开发规范.序列化] 目录 Hadoop[MR开发规范.序列化] 一.MapReduce编程规范 1.Mapper阶段 2.Reducer阶段 3.Driver阶段 二.WordCou ...

- [Hadoop]Hadoop章2 HDFS原理及读写过程

HDFS(Hadoop Distributed File System )Hadoop分布式文件系统. HDFS有很多特点: ① 保存多个副本,且提供容错机制,副本丢失或宕机自动恢复.默认存3份. ② ...

- hadoop hadoop install (1)

vmuser@vmuser-VirtualBox:~$ sudo useradd -m hadoop -s /bin/bash[sudo] vmuser 的密码: vmuser@vmuser-Virt ...

- MR案例:倒排索引

1.map阶段:将单词和URI组成Key值(如“MapReduce :1.txt”),将词频作为value. 利用MR框架自带的Map端排序,将同一文档的相同单词的词频组成列表,传递给Combine过 ...

- Hadoop hadoop 机架感知配置

机架感知脚本 使用python3编写机架感知脚本,报存到topology.py,给予执行权限 import sys import os DEFAULT_RACK="/default-rack ...

- hadoop之 mr输出到hbase

1.注意问题: 1.在开发过程中一定要导入hbase源码中的lib库否则出现如下错误 TableMapReducUtil 找不到什么-- 2.编码: import java.io.IOExceptio ...

- Hadoop案例(四)倒排索引(多job串联)与全局计数器

一. 倒排索引(多job串联) 1. 需求分析 有大量的文本(文档.网页),需要建立搜索索引 xyg pingping xyg ss xyg ss a.txt xyg pingping xyg pin ...

随机推荐

- 了解Spark源码的概况

本文旨在帮助那些想要对Spark有更深入了解的工程师们,了解Spark源码的概况,搭建Spark源码阅读环境,编译.调试Spark源码,为将来更深入地学习打下基础. 一.项目结构 在大型项目中,往往涉 ...

- 洛谷 P2114 [NOI2014]起床困难综合症 解题报告

P2114 [NOI2014]起床困难综合症 题目描述 21世纪,许多人得了一种奇怪的病:起床困难综合症,其临床表现为:起床难,起床后精神不佳.作为一名青春阳光好少年,atm一直坚持与起床困难综合症作 ...

- ROS内usb_cam包使用注意事项

1.查看摄像头支持的pixel-format: 方法: v4l2-ctl --list-formats-ext -d /dev/video0

- 基于node的cmd迷你天气查询工具

1.前几天网上看到的,于是自己小改了一下,更换了天气查询的接口,当作练习一下node. 2.收获挺大的,捣鼓了一天,终于学会了发布npm包. 3.接下来,就介绍一下这个 mini-tianqi 的主要 ...

- linux内核情景分析之execve()

用来描述用户态的cpu寄存器在内核栈中保存情况.可以获取用户空间的信息 struct pt_regs { long ebx; //可执行文件路径的指针(regs.ebx中 long ecx; //命令 ...

- windows安装scrapy

1.安装Twisted 直接pip install Twisted 然后报错 error: Microsoft Visual C++ 14.0 is required. Get it with &qu ...

- Kubernetes控制节点安装配置

#环境安装Centos 7 Linux release 7.3.1611网络: 互通配置主机名设置各个服务器的主机名hosts#查找kubernetes支持的docker版本Kubernetes v1 ...

- Dom4J读写xml

解析读取XML public static void main(String[] args) { //1获取SaxReader对象 SAXReader reader=new SAXReader(); ...

- c++和G++的区别

今天被g++坑死了.. 网上找了一段铭记:引自 http://www.cnblogs.com/dongsheng/archive/2012/10/22/2734670.html 1.输出double类 ...

- PhpStrom弹窗License activation 报 this license BIG3CLIK6F has been cancelled 错误的解决。

将“0.0.0.0 account.jetbrains.com”添加到hosts文件中