Sample code from the OpenACC book (Jacobi iteration), part 3

▶ Numerically solving the Poisson equation with Jacobi iteration
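For reference, the update in the code of this post can be read as the Jacobi iteration for the five-point discretization of the Poisson equation on a grid with spacings hx and wy. The following derivation is a sketch reconstructed from the code, not taken from the book; the symbol g for the right-hand side is an assumption introduced here, related to the code by fij = -g = -4, which matches the boundary function uval(x, y) = x*x + y*y.

```latex
% Five-point discretization of  \Delta u = g  at grid point (i, j)
\frac{u_{i-1,j} - 2u_{i,j} + u_{i+1,j}}{h_x^2}
  + \frac{u_{i,j-1} - 2u_{i,j} + u_{i,j+1}}{w_y^2} = g_{i,j}

% Solving for u_{i,j} and using the previous iterate on the right-hand side
u^{(k+1)}_{i,j} =
  \frac{-h_x^2 w_y^2\, g_{i,j}
        + w_y^2 \left(u^{(k)}_{i-1,j} + u^{(k)}_{i+1,j}\right)
        + h_x^2 \left(u^{(k)}_{i,j-1} + u^{(k)}_{i,j+1}\right)}
       {2\left(h_x^2 + w_y^2\right)}

% Correspondence with the code:
%   c1 = h_x^2 w_y^2,   c2 = 1 / (2 (h_x^2 + w_y^2)),   fij = -g_{i,j} = -4
```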

● Use a data construct to force u0 to be copied to the GPU and back exactly once each, and u1 to be copied to the GPU only once

#include <stdio.h>
#include <stdlib.h>
#include <math.h>
#include <time.h>
#include <openacc.h>

#if defined(_WIN32) || defined(_WIN64)
#include <C:\Program Files\PGI\win64\19.4\include\wrap\sys\timeb.h>
#define timestruct clock_t
#define gettime(a) (*(a) = clock())
#define usec(t1,t2) ((t2) - (t1))
#else
#include <sys/time.h>
#define gettime(a) gettimeofday(a, NULL)
#define usec(t1,t2) (((t2).tv_sec - (t1).tv_sec) * 1000000 + (t2).tv_usec - (t1).tv_usec)
typedef struct timeval timestruct;
#endif

inline float uval(float x, float y)
{
    return x * x + y * y;
}

int main()
{
    const int row = , col = ;
    const float height = 1.0, width = 2.0;
    const float hx = height / row, wy = width / col;
    const float fij = -4.0f;
    const float hx2 = hx * hx, wy2 = wy * wy, c1 = hx2 * wy2, c2 = 1.0f / (2.0 * (hx2 + wy2));
    const int maxIter = ;
    const int colPlus = col + 1;

    float *restrict u0 = (float *)malloc(sizeof(float)*(row + 1)*colPlus);
    float *restrict u1 = (float *)malloc(sizeof(float)*(row + 1)*colPlus);
    float *utemp = NULL;

    // Initialization: boundary values from uval, interior of u0 set to zero
    for (int ix = 0; ix <= row; ix++)
    {
        u0[ix*colPlus + 0] = u1[ix*colPlus + 0] = uval(ix * hx, 0.0f);
        u0[ix*colPlus + col] = u1[ix*colPlus + col] = uval(ix*hx, col * wy);
    }
    for (int jy = 0; jy <= col; jy++)
    {
        u0[jy] = u1[jy] = uval(0.0f, jy * wy);
        u0[row*colPlus + jy] = u1[row*colPlus + jy] = uval(row*hx, jy * wy);
    }
    for (int ix = 1; ix < row; ix++)
    {
        for (int jy = 1; jy < col; jy++)
            u0[ix*colPlus + jy] = 0.0f;
    }

    // Computation
    timestruct t1, t2;
    acc_init(acc_device_nvidia);
    gettime(&t1);
#pragma acc data copy(u0[0:(row + 1) * colPlus]) copyin(u1[0:(row + 1) * colPlus]) // data construct outside the loop, so the device data persists across iterations (kernel launches)
    {
        for (int iter = 0; iter < maxIter; iter++)
        {
#pragma acc kernels present(u0[0:((row + 1) * colPlus)], u1[0:((row + 1) * colPlus)]) // on every kernel launch, declare that u0 and u1 are already present so they are not copied again
            {
#pragma acc loop independent
                for (int ix = 1; ix < row; ix++)
                {
#pragma acc loop independent
                    for (int jy = 1; jy < col; jy++)
                    {
                        u1[ix*colPlus + jy] = (c1*fij + wy2 * (u0[(ix - 1)*colPlus + jy] + u0[(ix + 1)*colPlus + jy]) +
                            hx2 * (u0[ix*colPlus + jy - 1] + u0[ix*colPlus + jy + 1])) * c2;
                    }
                }
            }
            utemp = u0, u0 = u1, u1 = utemp;
        }
    }
    gettime(&t2);

    long long timeElapse = usec(t1, t2);
#if defined(_WIN32) || defined(_WIN64)
    printf("\nElapsed time: %13lld ms.\n", timeElapse);
#else
    printf("\nElapsed time: %13lld us.\n", timeElapse);
#endif
    free(u0);
    free(u1);
    acc_shutdown(acc_device_nvidia);
    //getchar();
    return 0;
}

● Output on Windows 10; with PGI_ACC_NOTIFY turned off the run takes as little as 67 ms

D:\Code\OpenACC>pgcc main.c -o main.exe -c99 -Minfo -acc

main:

, Memory zero idiom, loop replaced by call to __c_mzero4

, Generating copy(u0[:colPlus*(row+)])

Generating copyin(u1[:colPlus*(row+)])

, Generating present(u1[:colPlus*(row+)],u0[:colPlus*(row+)])

, Loop is parallelizable

, Loop is parallelizable

Generating Tesla code

, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */

, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */

, FMA (fused multiply-add) instruction(s) generated

uval:

, FMA (fused multiply-add) instruction(s) generated

D:\Code\OpenACC>main.exe

launch CUDA kernel file=D:\Code\OpenACC\main.c function=main line= device= threadid= num_gangs= num_workers= vector_length= grid=32x1024 block=32x4

...

launch CUDA kernel file=D:\Code\OpenACC\main.c function=main line= device= threadid= num_gangs= num_workers= vector_length= grid=32x1024 block=32x4

Elapsed time: ms.

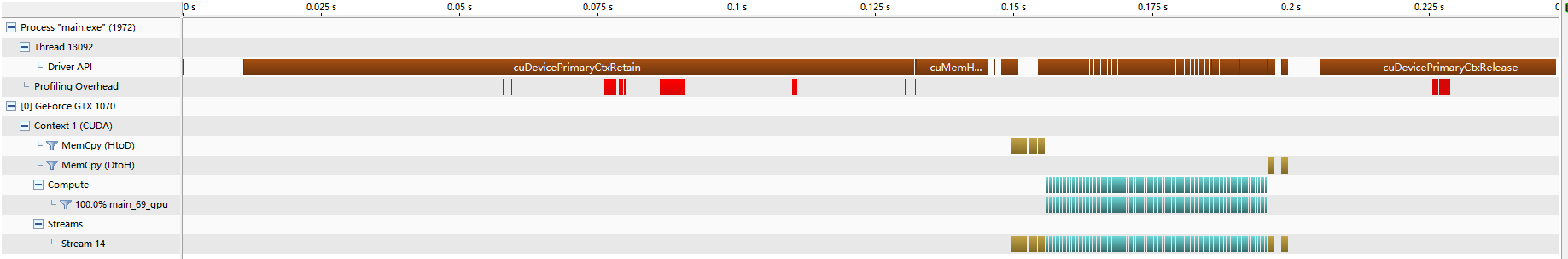

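● nvvp profile: most of the time goes to initializing the device. The computation already takes relatively little time, and the copies take even less, with only a small transfer at the start and at the end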

● Output on Ubuntu, including the data reported with PGI_ACC_TIME enabled

cuan@CUAN:~$ pgcc data.c -o data.exe -c99 -Minfo -acc

main:

, Memory zero idiom, loop replaced by call to __c_mzero4

, Generating copy(u0[:colPlus*(row+)])

Generating copyin(u1[:colPlus*(row+)])

, Generating present(utemp[:],u1[:colPlus*(row+)],u0[:colPlus*(row+)])

FMA (fused multiply-add) instruction(s) generated

, Loop is parallelizable

, Loop is parallelizable

Generating Tesla code

, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */

, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */

uval:

, FMA (fused multiply-add) instruction(s) generated

cuan@CUAN:~$ ./data.exe

launch CUDA kernel file=/home/cuan/data.c function=main line= device= threadid= num_gangs= num_workers= vector_length= grid=32x1024 block=32x4

...

launch CUDA kernel file=/home/cuan/data.c function=main line= device= threadid= num_gangs= num_workers= vector_length= grid=32x1024 block=32x4

Elapsed time: us.

Accelerator Kernel Timing data

/home/cuan/data.c

main NVIDIA devicenum=

time(us): ,

: data region reached times

: data copyin transfers:

device time(us): total=, max=, min=, avg=,

: data copyout transfers:

device time(us): total=, max=, min= avg=

: data region reached times

: compute region reached times

: kernel launched times

grid: [32x1024] block: [32x4]

device time(us): total=, max= min= avg=

elapsed time(us): total=, max=, min= avg=

● Moving the utemp pointer swap one loop level further in produced runtime error 715 or 719. See https://stackoverflow.com/questions/41366915/openacc-create-data-while-running-inside-a-kernels, whose gist concerns a memory leak

D:\Code\OpenACC>main.exe

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line=69 device=0 threadid=1 num_gangs=32768 num_workers=4 vector_length=32 grid=32x1024 block=32x4

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line=74 device=0 threadid=1 num_gangs=1 num_workers=1 vector_length=1 grid=1 block=1

call to cuStreamSynchronize returned error 715: Illegal instruction

call to cuMemFreeHost returned error 715: Illegal instruction

D:\Code\OpenACC>main.exe

launch CUDA kernel file=D:\Code\OpenACC\main.c function=main line= device= threadid= num_gangs= num_workers= vector_length= grid=32x1024 block=32x4

launch CUDA kernel file=D:\Code\OpenACC\main.c function=main line= device= threadid= num_gangs= num_workers= vector_length= grid= block=

Failing in Thread:

call to cuStreamSynchronize returned error : Launch failed (often invalid pointer dereference)

Failing in Thread:

call to cuMemFreeHost returned error : Launch failed (often invalid pointer dereference)

● Adding create(utemp) to the data construct, or declaring float *utemp locally at the point where the pointers are swapped, both produce runtime error 700

D:\Code\OpenACC>main.exe

launch CUDA kernel file=D:\Code\OpenACC\main.c function=main line= device= threadid= num_gangs= num_workers= vector_length= grid=32x1024 block=32x4

launch CUDA kernel file=D:\Code\OpenACC\main.c function=main line= device= threadid= num_gangs= num_workers= vector_length= grid= block=

Failing in Thread:

call to cuStreamSynchronize returned error : Illegal address during kernel execution

Failing in Thread:

call to cuMemFreeHost returned error : Illegal address during kernel execution
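One way to sidestep the pointer-swap problems described above is to leave the host pointers alone inside the data region and alternate the roles of the two arrays explicitly. The following is a minimal sketch under that assumption, not the book's or the author's code: the function names jacobi_sweep and jacobi_pingpong and the halfIter parameter are hypothetical. Each pass of the outer loop performs two sweeps (u0 to u1, then u1 to u0), so the result always ends up in u0, the array named in the copy clause.

```c
/* One Jacobi sweep: reads src, writes the interior points of dst.
   Both arrays are assumed to be present on the device already. */
static void jacobi_sweep(const float *restrict src, float *restrict dst,
                         int row, int col, float hx, float wy, float fij)
{
    const int colPlus = col + 1;
    const int n = (row + 1) * colPlus;
    const float hx2 = hx * hx, wy2 = wy * wy;
    const float c1 = hx2 * wy2, c2 = 1.0f / (2.0f * (hx2 + wy2));

#pragma acc kernels present(src[0:n], dst[0:n])
    {
#pragma acc loop independent
        for (int ix = 1; ix < row; ix++)
        {
#pragma acc loop independent
            for (int jy = 1; jy < col; jy++)
            {
                dst[ix * colPlus + jy] =
                    (c1 * fij
                     + wy2 * (src[(ix - 1) * colPlus + jy] + src[(ix + 1) * colPlus + jy])
                     + hx2 * (src[ix * colPlus + jy - 1] + src[ix * colPlus + jy + 1])) * c2;
            }
        }
    }
}

/* Runs 2 * halfIter sweeps without swapping any pointer; the result is in u0. */
void jacobi_pingpong(float *u0, float *u1, int row, int col,
                     float hx, float wy, float fij, int halfIter)
{
    const int n = (row + 1) * (col + 1);

#pragma acc data copy(u0[0:n]) copyin(u1[0:n])
    {
        for (int iter = 0; iter < halfIter; iter++)
        {
            jacobi_sweep(u0, u1, row, col, hx, wy, fij); /* u0 -> u1 */
            jacobi_sweep(u1, u0, row, col, hx, wy, fij); /* u1 -> u0 */
        }
    }
}
```

With the arrays initialized as in the program above, a call such as jacobi_pingpong(u0, u1, row, col, hx, wy, fij, maxIter / 2) would replace the iteration loop. The trade-off is that the total number of sweeps is always even. Without OpenACC support the pragmas are ignored and the code runs serially.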

▶ Restore the error control and add a reduction clause to measure the improvement per iteration

#include <stdio.h>
#include <stdlib.h>
#include <math.h>
#include <time.h>
#include <openacc.h>

#if defined(_WIN32) || defined(_WIN64)
#include <C:\Program Files\PGI\win64\19.4\include\wrap\sys\timeb.h>
#define timestruct clock_t
#define gettime(a) (*(a) = clock())
#define usec(t1,t2) ((t2) - (t1))
#else
#include <sys/time.h>
#define gettime(a) gettimeofday(a, NULL)
#define usec(t1,t2) (((t2).tv_sec - (t1).tv_sec) * 1000000 + (t2).tv_usec - (t1).tv_usec)
typedef struct timeval timestruct;
#define max(x,y) ((x) > (y) ? (x) : (y))
#endif

inline float uval(float x, float y)
{
    return x * x + y * y;
}

int main()
{
    const int row = , col = ;
    const float height = 1.0, width = 2.0;
    const float hx = height / row, wy = width / col;
    const float fij = -4.0f;
    const float hx2 = hx * hx, wy2 = wy * wy, c1 = hx2 * wy2, c2 = 1.0f / (2.0 * (hx2 + wy2)), errControl = 0.0f;
    const int maxIter = ;
    const int colPlus = col + 1;

    float *restrict u0 = (float *)malloc(sizeof(float)*(row + 1)*colPlus);
    float *restrict u1 = (float *)malloc(sizeof(float)*(row + 1)*colPlus);
    float *utemp = NULL;

    // Initialization: boundary values from uval, interior of u0 set to zero
    for (int ix = 0; ix <= row; ix++)
    {
        u0[ix*colPlus + 0] = u1[ix*colPlus + 0] = uval(ix * hx, 0.0f);
        u0[ix*colPlus + col] = u1[ix*colPlus + col] = uval(ix*hx, col * wy);
    }
    for (int jy = 0; jy <= col; jy++)
    {
        u0[jy] = u1[jy] = uval(0.0f, jy * wy);
        u0[row*colPlus + jy] = u1[row*colPlus + jy] = uval(row*hx, jy * wy);
    }
    for (int ix = 1; ix < row; ix++)
    {
        for (int jy = 1; jy < col; jy++)
            u0[ix*colPlus + jy] = 0.0f;
    }

    // Computation
    timestruct t1, t2;
    acc_init(acc_device_nvidia);
    gettime(&t1);
#pragma acc data copy(u0[0:(row + 1) * colPlus]) copyin(u1[0:(row + 1) * colPlus])
    {
        for (int iter = 0; iter < maxIter; iter++)
        {
            float uerr = 0.0f; // uerr must be declared up here, otherwise its value is undefined after leaving the block; the book gets this wrong
#pragma acc kernels present(u0[0:(row + 1) * colPlus]) present(u1[0:(row + 1) * colPlus])
            {
#pragma acc loop independent reduction(max:uerr) // reduction clause that tracks the improvement
                for (int ix = 1; ix < row; ix++)
                {
                    for (int jy = 1; jy < col; jy++)
                    {
                        u1[ix*colPlus + jy] = (c1*fij + wy2 * (u0[(ix - 1)*colPlus + jy] + u0[(ix + 1)*colPlus + jy]) +
                            hx2 * (u0[ix*colPlus + jy - 1] + u0[ix*colPlus + jy + 1])) * c2;
                        uerr = max(uerr, fabs(u0[ix * colPlus + jy] - u1[ix * colPlus + jy]));
                    }
                }
            }
            printf("\niter = %d, uerr = %e\n", iter, uerr);
            if (uerr < errControl)
                break;
            utemp = u0, u0 = u1, u1 = utemp;
        }
    }
    gettime(&t2);

    long long timeElapse = usec(t1, t2);
#if defined(_WIN32) || defined(_WIN64)
    printf("\nElapsed time: %13lld ms.\n", timeElapse);
#else
    printf("\nElapsed time: %13lld us.\n", timeElapse);
#endif
    free(u0);
    free(u1);
    acc_shutdown(acc_device_nvidia);
    //getchar();
    return 0;
}
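The reduction pattern used above can be isolated into a small helper. This is only an illustrative sketch: the function name max_abs_diff is hypothetical, and it uses a standalone parallel loop with its own copyin clauses rather than the data region of the program. As in the program, the reduction variable is declared before the compute construct so that the reduced value is defined once the construct ends.

```c
/* Largest absolute difference between two arrays of length n. */
float max_abs_diff(const float *a, const float *b, int n)
{
    float err = 0.0f; /* declared before the compute construct, read after it */

#pragma acc parallel loop reduction(max:err) copyin(a[0:n], b[0:n])
    for (int i = 0; i < n; i++)
    {
        float d = a[i] - b[i];
        if (d < 0.0f)
            d = -d;                 /* absolute value without libm */
        err = d > err ? d : err;    /* running maximum, combined across threads by the reduction */
    }
    return err;
}
```

Calling max_abs_diff(u0, u1, (row + 1) * colPlus) after a sweep would give the same quantity that uerr tracks, at the cost of an extra pass over the data.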

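● Output on Windows 10: the run takes twice as long as the version without error control. The nvvp profile shows no noticeable change, so it is omitted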

D:\Code\OpenACC>pgcc main.c -o main.exe -c99 -Minfo -acc

main:

, Memory zero idiom, loop replaced by call to __c_mzero4

, Generating copy(u0[:colPlus*(row+)])

Generating copyin(u1[:colPlus*(row+)])

, Generating present(u0[:colPlus*(row+)])

Generating implicit copy(uerr)

Generating present(u1[:colPlus*(row+)])

, Loop is parallelizable

, Loop is parallelizable

Generating Tesla code

, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */

, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */

Generating reduction(max:uerr) // the reduction now shows up in the compiler output

, FMA (fused multiply-add) instruction(s) generated

uval:

, FMA (fused multiply-add) instruction(s) generated

D:\Code\OpenACC>main.exe

iter = , uerr = 2.496107e+00

...

iter = , uerr = 2.202189e-02

Elapsed time: ms.

● Output on Ubuntu

cuan@CUAN:~$ pgcc data+reduction.c -o data+reduction.exe -c99 -Minfo -acc

main:

, Memory zero idiom, loop replaced by call to __c_mzero4

, Generating copyin(u1[:colPlus*(row+)])

Generating copy(u0[:colPlus*(row+)])

, FMA (fused multiply-add) instruction(s) generated

, Generating present(u0[:colPlus*(row+)])

Generating implicit copy(uerr)

Generating present(u1[:colPlus*(row+)])

, Loop is parallelizable

, Loop is parallelizable

Generating Tesla code

, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */

, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */

Generating reduction(max:uerr)

uval:

, FMA (fused multiply-add) instruction(s) generated

cuan@CUAN:~$ ./data+reduction.exe

launch CUDA kernel file=/home/cuan/data+reduction.c function=main line= device= threadid= num_gangs= num_workers= vector_length= grid=32x1024 block=32x4 shared memory=

launch CUDA kernel file=/home/cuan/data+reduction.c function=main line= device= threadid= num_gangs= num_workers= vector_length= grid= block= shared memory=

iter = , uerr = 2.496107e+00

launch CUDA kernel file=/home/cuan/data+reduction.c function=main line= device= threadid= num_gangs= num_workers= vector_length= grid=32x1024 block=32x4 shared memory=

launch CUDA kernel file=/home/cuan/data+reduction.c function=main line= device= threadid= num_gangs= num_workers= vector_length= grid= block= shared memory=

...

iter = , uerr = 2.214956e-02

launch CUDA kernel file=/home/cuan/data+reduction.c function=main line= device= threadid= num_gangs= num_workers= vector_length= grid=32x1024 block=32x4 shared memory=

launch CUDA kernel file=/home/cuan/data+reduction.c function=main line= device= threadid= num_gangs= num_workers= vector_length= grid= block= shared memory=

iter = , uerr = 2.202189e-02

Elapsed time: us.

Accelerator Kernel Timing data

/home/cuan/data+reduction.c

main NVIDIA devicenum=

time(us): ,

: data region reached times

: data copyin transfers:

device time(us): total=, max=, min=, avg=,

: data copyout transfers:

device time(us): total=, max=, min= avg=

: compute region reached times

: kernel launched times

grid: [32x1024] block: [32x4]

device time(us): total=, max= min= avg=

elapsed time(us): total=, max=, min= avg=

: reduction kernel launched times

grid: [] block: []

device time(us): total=, max= min= avg=

elapsed time(us): total=, max=, min= avg=

: data region reached times

: data copyin transfers:

device time(us): total= max= min= avg=

: data copyout transfers:

device time(us): total= max= min= avg=

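▶ Tried adding a collapse(2) clause to the loop directive of the computation, which merges the two smaller loops into one larger loop. The effect was not significant, so the results are omitted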
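For readers who want to repeat the collapse(2) experiment, a single sweep with the clause might look like the sketch below. It is an assumption about how the clause would be applied, not the code that was actually timed: the function name jacobi_sweep_collapsed is hypothetical, and the sketch uses a standalone parallel loop with its own data clauses instead of the kernels construct and data region used above. collapse(2) requires the two loops to be tightly nested, with no statements between them.

```c
/* One Jacobi sweep over the interior points, with both loops collapsed
   into a single iteration space of (row - 1) * (col - 1) points. */
void jacobi_sweep_collapsed(const float *restrict u0, float *restrict u1,
                            int row, int col, float hx, float wy, float fij)
{
    const int colPlus = col + 1;
    const int n = (row + 1) * colPlus;
    const float hx2 = hx * hx, wy2 = wy * wy;
    const float c1 = hx2 * wy2, c2 = 1.0f / (2.0f * (hx2 + wy2));

    /* copy (not copyout) for u1, so its boundary values survive the round trip */
#pragma acc parallel loop collapse(2) copyin(u0[0:n]) copy(u1[0:n])
    for (int ix = 1; ix < row; ix++)
        for (int jy = 1; jy < col; jy++)
            u1[ix * colPlus + jy] =
                (c1 * fij
                 + wy2 * (u0[(ix - 1) * colPlus + jy] + u0[(ix + 1) * colPlus + jy])
                 + hx2 * (u0[ix * colPlus + jy - 1] + u0[ix * colPlus + jy + 1])) * c2;
}
```

Inside the data region of the programs above, the copyin and copy clauses would be replaced by present, as in the kernels directives.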
