Natural Language 13_Stop words with NLTK


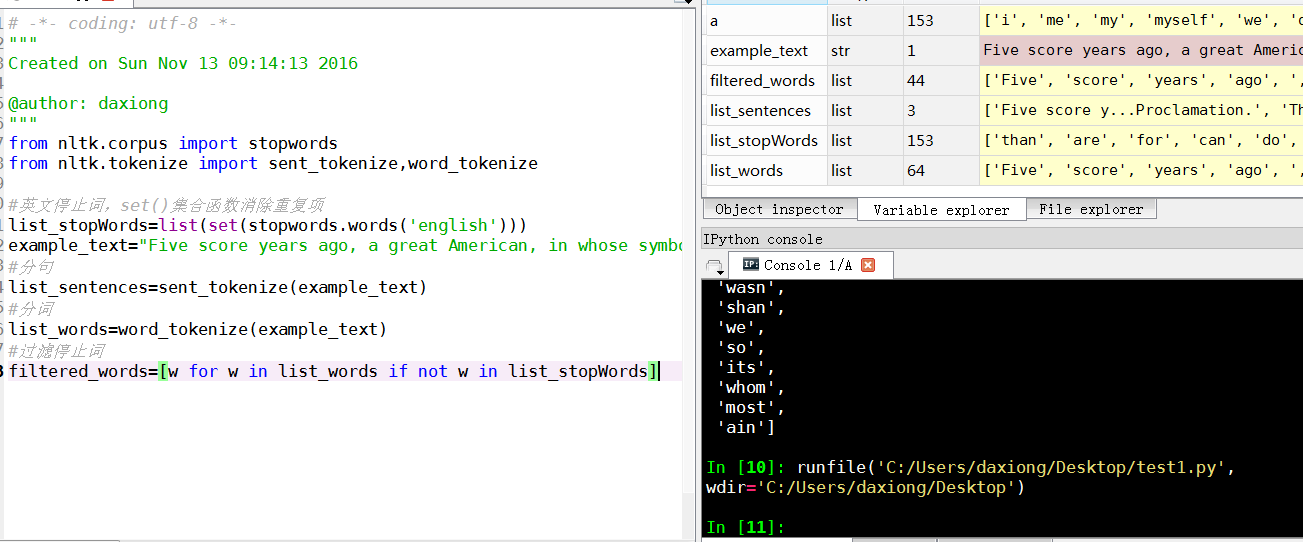

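```python
# -*- coding: utf-8 -*-
"""
Created on Sun Nov 13 09:14:13 2016 @author: daxiong
"""

# Requires the NLTK data packages, e.g. nltk.download('stopwords') and
# nltk.download('punkt') (newer NLTK releases may require 'punkt_tab').
from nltk.corpus import stopwords
from nltk.tokenize import sent_tokenize, word_tokenize

# English stop words; set() removes duplicates and gives fast membership tests
stop_words = set(stopwords.words('english'))

example_text = "Five score years ago, a great American, in whose symbolic shadow we stand today, signed the Emancipation Proclamation. This momentous decree came as a great beacon light of hope to millions of Negro slaves who had been seared in the flames of withering injustice. It came as a joyous daybreak to end the long night of bad captivity."

# Split the text into sentences
list_sentences = sent_tokenize(example_text)

# Split the text into words (tokens)
list_words = word_tokenize(example_text)

# Filter out stop words (case-sensitive: NLTK's list is lowercase,
# so capitalized tokens such as "This" and "It" are kept)
filtered_words = [w for w in list_words if w not in stop_words]

print(filtered_words)
```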
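The script above computes `list_sentences` but never uses it. One way to put it to work is to filter stop words sentence by sentence, so sentence boundaries survive for later steps. The sketch below is stdlib-only so it runs without NLTK's data files: the regex splitters are crude stand-ins for `sent_tokenize` and `word_tokenize`, `STOP_WORDS` is a hand-picked subset of NLTK's list, and, unlike the original filter, the comparison is done in lowercase. All function names are illustrative.

```python
import re

# Hypothetical subset of NLTK's English stop list (every entry appears in the
# list printed later in this post); use set(stopwords.words('english')) in practice.
STOP_WORDS = {"a", "the", "in", "of", "to", "it", "is", "as", "we", "who", "this", "had", "been"}


def naive_sentences(text):
    """Split after '.', '!' or '?' followed by whitespace (far cruder than sent_tokenize)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def naive_words(sentence):
    """Return runs of letters and apostrophes; punctuation is dropped."""
    return re.findall(r"[A-Za-z']+", sentence)


def filter_by_sentence(text, stop_words):
    """Return one list of non-stop words per sentence, comparing in lowercase."""
    return [
        [w for w in naive_words(s) if w.lower() not in stop_words]
        for s in naive_sentences(text)
    ]


text = "This momentous decree came as a great beacon light. It came as a joyous daybreak."
for words in filter_by_sentence(text, STOP_WORDS):
    print(words)
```

Keeping one list per sentence costs nothing extra and leaves room for sentence-level steps later, such as tagging or classification.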

Stop words with NLTK

The idea of Natural Language Processing is to do some form of analysis, or processing, where the machine can understand, at least to some level, what the text means, says, or implies.

This is obviously a massive challenge, but there are steps to doing it that anyone can follow. The main idea, however, is that computers simply do not, and will not, ever understand words directly. Humans don't either *shocker*. In humans, memory is broken down into electrical signals in the brain, in the form of neural groups that fire in patterns. There is a lot about the brain that remains unknown, but the more we break the human brain down into its basic elements, the more we find out how basic those elements really are. Well, it turns out computers store information in a very similar way! We need a way to get as close to that as possible if we're going to mimic how humans read and understand text. Generally, computers use numbers for everything, and we often see this directly in programming, where we use binary signals: True or False, which translate directly to 1 or 0, which in turn come from either the presence of an electrical signal (True, 1) or its absence (False, 0). To do this, we need a way to convert words to values: numbers, or signal patterns. The process of converting data into something a computer can understand is referred to as "pre-processing." One of the major forms of pre-processing is filtering out useless data. In natural language processing, useless words (data) are referred to as stop words.
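To make "convert words to values, in numbers" concrete, here is one minimal approach, assuming a simple integer-ID vocabulary (this tutorial does not build one): drop the stop words, then give every remaining distinct word a number. `build_vocabulary`, `encode`, and `STOP_WORDS` are illustrative names, not NLTK APIs.

```python
# Hypothetical tiny stop list; every entry also appears in NLTK's English list.
STOP_WORDS = {"the", "a", "is", "other"}


def build_vocabulary(tokens, stop_words):
    """Assign each distinct non-stop word an integer ID, in first-seen order."""
    vocab = {}
    for token in tokens:
        word = token.lower()
        if word in stop_words or word in vocab:
            continue
        vocab[word] = len(vocab)
    return vocab


def encode(tokens, vocab):
    """Replace each token with its ID, skipping anything not in the vocabulary."""
    return [vocab[t.lower()] for t in tokens if t.lower() in vocab]


tokens = "The cat saw the other cat".split()
vocab = build_vocabulary(tokens, STOP_WORDS)
print(vocab)                  # {'cat': 0, 'saw': 1}
print(encode(tokens, vocab))  # [0, 1, 0]
```

Removing stop words before assigning IDs keeps the vocabulary and the encoded sequences smaller, which is exactly the kind of saving stop-word filtering is meant to provide.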

We can immediately recognize for ourselves that some words carry more meaning than others. We can also see that some words are just plain useless filler words. We use them in the English language, for example, to "fluff" up a sentence so it does not sound so strange. One of the most common unofficial useless words is "umm." People stuff in "umm" frequently, some more than others. This word means nothing, unless of course we're searching for someone who may be lacking confidence, is confused, or hasn't practiced speaking much. We all do it; you can hear me saying "umm" or "uhh" in the videos plenty of ...uh... times. For most analysis, these words are useless.

We would not want these words taking up space in our database or taking up valuable processing time. As such, we call these words "stop words": they are useless, and we wish to do nothing with them. Another meaning of the term "stop words" can be more literal: words we stop on.

For example, you may wish to cease analysis completely and immediately if you detect words that are commonly used sarcastically. Sarcastic words or phrases vary by lexicon and corpus. For now, we'll consider stop words to be words that carry no meaning, and we want to remove them.
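The literal "words we stop on" idea amounts to an early exit. A minimal sketch, assuming a hypothetical trigger list (the tutorial does not provide a sarcasm lexicon) and matching single tokens only, not multi-word phrases:

```python
# Hypothetical trigger words; a real list would depend on your lexicon and corpus.
STOP_ON = {"totally", "obviously"}


def analyze_unless_triggered(tokens, stop_on):
    """Return the lowercased tokens, or None as soon as a trigger word appears."""
    result = []
    for token in tokens:
        word = token.lower()
        if word in stop_on:
            return None  # cease analysis of this text immediately
        result.append(word)
    return result


print(analyze_unless_triggered(["Great", "job"], STOP_ON))             # ['great', 'job']
print(analyze_unless_triggered(["Totally", "great", "job"], STOP_ON))  # None
```

Returning `None`, rather than a partial result, makes it explicit to the caller that the whole text was skipped.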

You can do this easily by storing a list of words that you consider to be stop words. NLTK starts you off with a set of words that it considers to be stop words; you can access it via the NLTK corpus with:

```python
from nltk.corpus import stopwords
print(set(stopwords.words('english')))
```

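Here is the list, printed as a set (newer NLTK releases may include additional words, and set order varies between runs):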

```
{'ourselves', 'hers', 'between', 'yourself', 'but', 'again', 'there',
'about', 'once', 'during', 'out', 'very', 'having', 'with', 'they',
'own', 'an', 'be', 'some', 'for', 'do', 'its', 'yours', 'such', 'into',
'of', 'most', 'itself', 'other', 'off', 'is', 's', 'am', 'or', 'who',
'as', 'from', 'him', 'each', 'the', 'themselves', 'until', 'below',
'are', 'we', 'these', 'your', 'his', 'through', 'don', 'nor', 'me',
'were', 'her', 'more', 'himself', 'this', 'down', 'should', 'our',
'their', 'while', 'above', 'both', 'up', 'to', 'ours', 'had', 'she',
'all', 'no', 'when', 'at', 'any', 'before', 'them', 'same', 'and',
'been', 'have', 'in', 'will', 'on', 'does', 'yourselves', 'then',
'that', 'because', 'what', 'over', 'why', 'so', 'can', 'did', 'not',
'now', 'under', 'he', 'you', 'herself', 'has', 'just', 'where', 'too',
'only', 'myself', 'which', 'those', 'i', 'after', 'few', 'whom', 't',
'being', 'if', 'theirs', 'my', 'against', 'a', 'by', 'doing', 'it',
'how', 'further', 'was', 'here', 'than'}
```
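Because the stop words are just a Python set, you can extend them with your own words, such as the "umm" and "uhh" fillers mentioned earlier. With NLTK that would be `set(stopwords.words('english')) | {"umm", "uhh"}`. The sketch below uses a few entries from the list above as a stand-in so it runs without NLTK's data files. The filler set is a hypothetical example.

```python
# A few entries copied from NLTK's English list above, standing in for
# set(stopwords.words('english')).
base_stop_words = {"i", "you", "the", "a", "was", "so", "and", "it"}

# Hypothetical custom additions: filler words from transcribed speech.
filler_words = {"umm", "uhh", "uh"}

# Set union keeps one copy of every word from both sets.
stop_words = base_stop_words | filler_words

transcript = "umm so I was uhh trying the code and uh it worked"
kept = [w for w in transcript.split() if w.lower() not in stop_words]
print(kept)  # ['trying', 'code', 'worked']
```

The same set arithmetic works in reverse: subtracting words (for example `stop_words - {"not", "no"}`) keeps negations for tasks where they carry meaning.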

Here is how you might use the stop_words set to remove the stop words from your text:

```python
from nltk.corpus import stopwords
from nltk.tokenize import word_tokenize

example_sent = "This is a sample sentence, showing off the stop words filtration."

stop_words = set(stopwords.words('english'))

word_tokens = word_tokenize(example_sent)

# One-line version with a list comprehension
filtered_sentence = [w for w in word_tokens if w not in stop_words]

# The same filter written as an explicit loop
filtered_sentence = []
for w in word_tokens:
    if w not in stop_words:
        filtered_sentence.append(w)

print(word_tokens)
print(filtered_sentence)
```

Our output here:

```
['This', 'is', 'a', 'sample', 'sentence', ',', 'showing', 'off', 'the', 'stop', 'words', 'filtration', '.']
['This', 'sample', 'sentence', ',', 'showing', 'stop', 'words', 'filtration', '.']
```
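Notice that "This" survives: every entry in NLTK's list is lowercase, and the comparison is case-sensitive. The punctuation tokens "," and "." survive as well. Here is a minimal sketch of a stricter filter that lowercases before the lookup and drops punctuation-only tokens. It uses a few entries from the list above in place of the full NLTK set, and the function names are illustrative.

```python
import string

# Entries taken from NLTK's English list above; use the full set in practice.
STOP_WORDS = {"this", "is", "a", "off", "the"}


def is_punctuation(token):
    """True if every character of the token is ASCII punctuation."""
    return all(ch in string.punctuation for ch in token)


def filter_tokens(tokens, stop_words):
    """Drop stop words (compared in lowercase) and punctuation-only tokens."""
    return [t for t in tokens
            if t.lower() not in stop_words and not is_punctuation(t)]


word_tokens = ['This', 'is', 'a', 'sample', 'sentence', ',', 'showing',
               'off', 'the', 'stop', 'words', 'filtration', '.']
print(filter_tokens(word_tokens, STOP_WORDS))
# ['sample', 'sentence', 'showing', 'stop', 'words', 'filtration']
```

Whether to discard punctuation depends on the task. For bag-of-words counts it is usually noise, but sentence-level steps may still need it.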

Our database thanks us. Another form of data pre-processing is 'stemming,' which is what we're going to be talking about next.
