HBase Basic Commands Summary

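I. Overview

This article introduces the basic HBase commands. I wrote it partly to record and summarize these commands and partly in the spirit of sharing, so that readers and I can improve together. The HBase version used in this article is HBase 1.2.0-cdh5.10.0.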

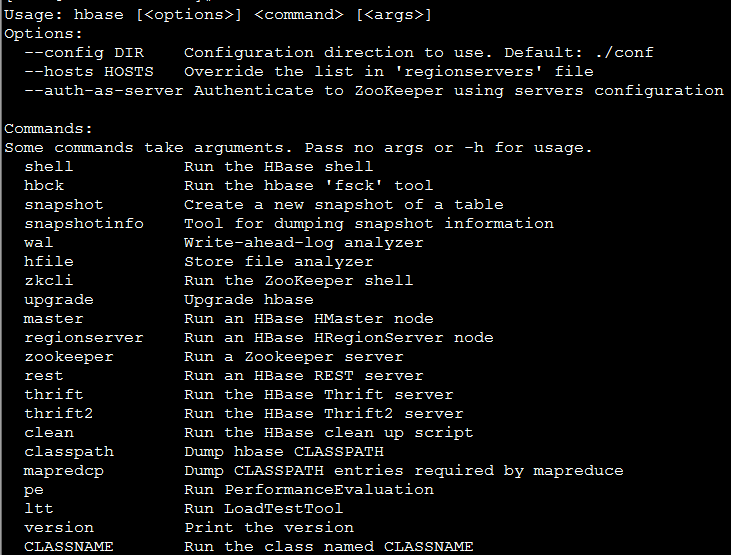

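II. HBase Tool Commands

The master, regionserver, zookeeper, rest, thrift, and thrift2 entries shown above are all startup commands, so they are not covered here. The commands I consider most important are introduced one by one below:

1. The hbase shell command

This command enters the HBase client, where you can operate on the HBase database: create, read, update, delete, and so on. The command covers a lot of ground, so this article only touches on it briefly and a later article will cover it in detail. Its built-in help is shown below: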

hbase(main):001:0> help

HBase Shell, version 1.2.0-cdh5.10.0, rUnknown, Fri Jan 20 12:13:18 PST 2017

Type 'help "COMMAND"', (e.g. 'help "get"' -- the quotes are necessary) for help on a specific command.

Commands are grouped. Type 'help "COMMAND_GROUP"', (e.g. 'help "general"') for help on a command group.

COMMAND GROUPS:

Group name: general

Commands: status, table_help, version, whoami

Group name: ddl

Commands: alter, alter_async, alter_status, create, describe, disable, disable_all, drop, drop_all, enable, enable_all, exists, get_table, is_disabled, is_enabled, list, locate_region, show_filters

Group name: namespace

Commands: alter_namespace, create_namespace, describe_namespace, drop_namespace, list_namespace, list_namespace_tables

Group name: dml

Commands: append, count, delete, deleteall, get, get_counter, get_splits, incr, put, scan, truncate, truncate_preserve

Group name: tools

Commands: assign, balance_switch, balancer, balancer_enabled, catalogjanitor_enabled, catalogjanitor_run, catalogjanitor_switch, close_region, compact, compact_mob, compact_rs, flush, major_compact, major_compact_mob, merge_region, move, normalize, normalizer_enabled, normalizer_switch, split, trace, unassign, wal_roll, zk_dump

Group name: replication

Commands: add_peer, append_peer_tableCFs, disable_peer, disable_table_replication, enable_peer, enable_table_replication, get_peer_config, list_peer_configs, list_peers, list_replicated_tables, remove_peer, remove_peer_tableCFs, set_peer_tableCFs, show_peer_tableCFs, update_peer_config

Group name: snapshots

Commands: clone_snapshot, delete_all_snapshot, delete_snapshot, list_snapshots, restore_snapshot, snapshot

Group name: configuration

Commands: update_all_config, update_config

Group name: quotas

Commands: list_quotas, set_quota

Group name: security

Commands: grant, list_security_capabilities, revoke, user_permission

Group name: procedures

Commands: abort_procedure, list_procedures

Group name: visibility labels

Commands: add_labels, clear_auths, get_auths, list_labels, set_auths, set_visibility

SHELL USAGE:

Quote all names in HBase Shell such as table and column names. Commas delimit command parameters. Type <RETURN> after entering a command to run it.

Dictionaries of configuration used in the creation and alteration of tables are Ruby Hashes. They look like this:

{'key1' => 'value1', 'key2' => 'value2', ...}

and are opened and closed with curley-braces. Key/values are delimited by the '=>' character combination. Usually keys are predefined constants such as NAME, VERSIONS, COMPRESSION, etc. Constants do not need to be quoted. Type 'Object.constants' to see a (messy) list of all constants in the environment.

If you are using binary keys or values and need to enter them in the shell, use double-quote'd hexadecimal representation. For example:

hbase> get 't1', "key\x03\x3f\xcd"

hbase> get 't1', "key\003\023\011"

hbase> put 't1', "test\xef\xff", 'f1:', "\x01\x33\x40"

The HBase shell is the (J)Ruby IRB with the above HBase-specific commands added.

For more on the HBase Shell, see http://hbase.apache.org/book.html
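The help text above says binary keys and values must be entered as double-quoted hexadecimal strings. The following is a minimal Python sketch of how such a literal could be produced from raw bytes. The function name to_shell_literal is hypothetical, and the choice to escape every byte that is not an ASCII letter or digit is a conservative assumption of this sketch, not a rule stated by HBase.

```python
import string

# Bytes kept verbatim; every other byte is written as \xNN.
# Escaping everything except letters and digits is an assumption made for safety.
_SAFE = set((string.ascii_letters + string.digits).encode("ascii"))


def to_shell_literal(data: bytes) -> str:
    """Render raw bytes as a double-quoted literal for the HBase shell."""
    parts = []
    for byte in data:
        if byte in _SAFE:
            parts.append(chr(byte))
        else:
            parts.append("\\x{:02x}".format(byte))
    return '"' + "".join(parts) + '"'


# Reproduces the row key used in the help example above.
print(to_shell_literal(b"key\x03\x3f\xcd"))
```

The printed literal can be pasted after a command such as get 't1', in the shell.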

2. The hbase hbck check command

[hbase@rhel1009167 root]$ hbase hbck -h

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

19/03/03 17:09:41 INFO Configuration.deprecation: fs.default.name is deprecated. Instead, use fs.defaultFS

Usage: fsck [opts] {only tables}

where [opts] are:

-help Display help options (this)

-details Display full report of all regions.

-timelag <timeInSeconds> Process only regions that have not experienced any metadata updates in the last <timeInSeconds> seconds.

-sleepBeforeRerun <timeInSeconds> Sleep this many seconds before checking if the fix worked if run with -fix

-summary Print only summary of the tables and status.

-metaonly Only check the state of the hbase:meta table.

-sidelineDir <hdfs://> HDFS path to backup existing meta.

-boundaries Verify that regions boundaries are the same between META and store files.

-exclusive Abort if another hbck is exclusive or fixing.

-disableBalancer Disable the load balancer.

Metadata Repair options: (expert features, use with caution!)

-fix Try to fix region assignments. This is for backwards compatiblity

-fixAssignments Try to fix region assignments. Replaces the old -fix

-fixMeta Try to fix meta problems. This assumes HDFS region info is good.

-noHdfsChecking Don't load/check region info from HDFS. Assumes hbase:meta region info is good. Won't check/fix any HDFS issue, e.g. hole, orphan, or overlap

-fixHdfsHoles Try to fix region holes in hdfs.

-fixHdfsOrphans Try to fix region dirs with no .regioninfo file in hdfs

-fixTableOrphans Try to fix table dirs with no .tableinfo file in hdfs (online mode only)

-fixHdfsOverlaps Try to fix region overlaps in hdfs.

-fixVersionFile Try to fix missing hbase.version file in hdfs.

-maxMerge <n> When fixing region overlaps, allow at most <n> regions to merge. (n=5 by default)

-sidelineBigOverlaps When fixing region overlaps, allow to sideline big overlaps

-maxOverlapsToSideline <n> When fixing region overlaps, allow at most <n> regions to sideline per group. (n=2 by default)

-fixSplitParents Try to force offline split parents to be online.

-ignorePreCheckPermission ignore filesystem permission pre-check

-fixReferenceFiles Try to offline lingering reference store files

-fixEmptyMetaCells Try to fix hbase:meta entries not referencing any region (empty REGIONINFO_QUALIFIER rows)

Datafile Repair options: (expert features, use with caution!)

-checkCorruptHFiles Check all Hfiles by opening them to make sure they are valid

-sidelineCorruptHFiles Quarantine corrupted HFiles. implies -checkCorruptHFiles

Metadata Repair shortcuts

-repair Shortcut for -fixAssignments -fixMeta -fixHdfsHoles -fixHdfsOrphans -fixHdfsOverlaps -fixVersionFile -sidelineBigOverlaps -fixReferenceFiles -fixTableLocks -fixOrphanedTableZnodes

-repairHoles Shortcut for -fixAssignments -fixMeta -fixHdfsHoles

Table lock options

-fixTableLocks Deletes table locks held for a long time (hbase.table.lock.expire.ms, 10min by default)

Table Znode options

-fixOrphanedTableZnodes Set table state in ZNode to disabled if table does not exists

Replication options

-fixReplication Deletes replication queues for removed peers
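To make the two shortcut options in the help output easier to reason about, here is a small Python sketch that expands them into the individual options they stand for. The flag lists are copied from the help output of this HBase version. The names HBCK_SHORTCUTS and expand_hbck_flags are hypothetical helpers, and other HBase versions may define the shortcuts differently.

```python
# Shortcut definitions copied from the `hbase hbck -h` output of HBase 1.2.0-cdh5.10.0.
HBCK_SHORTCUTS = {
    "-repair": [
        "-fixAssignments", "-fixMeta", "-fixHdfsHoles", "-fixHdfsOrphans",
        "-fixHdfsOverlaps", "-fixVersionFile", "-sidelineBigOverlaps",
        "-fixReferenceFiles", "-fixTableLocks", "-fixOrphanedTableZnodes",
    ],
    "-repairHoles": ["-fixAssignments", "-fixMeta", "-fixHdfsHoles"],
}


def expand_hbck_flags(flags):
    """Replace shortcut flags with the options they stand for, dropping duplicates."""
    expanded = []
    for flag in flags:
        # Non-shortcut flags pass through unchanged.
        for real in HBCK_SHORTCUTS.get(flag, [flag]):
            if real not in expanded:
                expanded.append(real)
    return expanded


print(expand_hbck_flags(["-repairHoles", "-fixHdfsOrphans"]))
```

Expanding a shortcut before running it is a simple way to review exactly which repairs would be attempted.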

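hbck is a tool that checks for region consistency and table integrity problems and repairs a corrupted HBase. It works in two basic modes: a read-only mode that identifies inconsistencies, and a multi-phase read-write repair mode. To check whether your HBase cluster is corrupted, run hbase hbck.

hbase hbck: this command prints many INFO log lines, most of which have been omitted here.

When the command finishes, it prints OK or tells you how many INCONSISTENCIES exist. An occasional inconsistency may be transient, so run the command several times before acting on it.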

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

19/03/03 10:51:44 INFO Configuration.deprecation: fs.default.name is deprecated. Instead, use fs.defaultFS

HBaseFsck command line options:

19/03/03 10:51:44 INFO util.HBaseFsck: Launching hbck

19/03/03 10:51:44 INFO zookeeper.RecoverableZooKeeper: Process identifier=hconnection-0x66fdec9 connecting to ZooKeeper ensemble=rhel1009161:2181,rhel1009179:2181,rhel1009167:2181

(environment variables and paths omitted here)

Version: 1.2.0-cdh5.10.0

Number of live region servers: 3

Number of dead region servers: 0

Master: rhel1009161,60000,1548150309236

Number of backup masters: 0

Average load: 12.666666666666666

Number of requests: 0

Number of regions: 38

Number of regions in transition: 0

19/03/03 10:51:44 INFO Configuration.deprecation: hadoop.native.lib is deprecated. Instead, use io.native.lib.available

19/03/03 10:51:44 INFO util.HBaseFsck: Loading regionsinfo from the hbase:meta table

Number of empty REGIONINFO_QUALIFIER rows in hbase:meta: 0

Number of Tables: 13

19/03/03 10:51:44 INFO util.HBaseFsck: Loading region directories from HDFS

.

19/03/03 10:51:45 INFO util.HBaseFsck: Loading region information from HDFS

Summary:

19/03/03 10:51:45 INFO zookeeper.ClientCnxn: EventThread shut down

Table CONSUMER_OFFSET is okay.

Number of regions: 1

Deployed on: rhel1009173,60020,1548150308701

Table scores is okay.

Number of regions: 1

Deployed on: rhel1009179,60020,1548150309012

Table hbase:meta is okay.

Number of regions: 1

Deployed on: rhel1009167,60020,1548150309349

Table SYSTEM.CATALOG is okay.

Number of regions: 1

Deployed on: rhel1009173,60020,1548150308701

Table logs is okay.

Number of regions: 25

Deployed on: rhel1009167,60020,1548150309349 rhel1009173,60020,1548150308701 rhel1009179,60020,1548150309012

Table test is okay.

Number of regions: 1

Deployed on: rhel1009173,60020,1548150308701

Table UNIFIED_TREATED_OFFSET is okay.

Number of regions: 1

Deployed on: rhel1009167,60020,1548150309349

Table hbase:namespace is okay.

Number of regions: 1

Deployed on: rhel1009167,60020,1548150309349

Table SYSTEM:SEQUENCE is okay.

Number of regions: 1

Deployed on: rhel1009179,60020,1548150309012

Table SYSTEM:FUNCTION is okay.

Number of regions: 1

Deployed on: rhel1009173,60020,1548150308701

Table SYSTEM.SEQUENCE is okay.

Number of regions: 1

Deployed on: rhel1009173,60020,1548150308701

Table SYSTEM.FUNCTION is okay.

Number of regions: 1

Deployed on: rhel1009167,60020,1548150309349

Table SYSTEM.STATS is okay.

Number of regions: 1

Deployed on: rhel1009179,60020,1548150309012

Table SYSTEM:STATS is okay.

Number of regions: 1

Deployed on: rhel1009167,60020,1548150309349

0 inconsistencies detected.

Status: OK
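Since the advice is to run the check several times and compare results, it can help to extract the verdict programmatically. The following Python sketch parses the summary lines shown in the output above (the "Table ... is okay." lines, the inconsistency count, and the final status). The function name parse_hbck_summary and the sample text are illustrative assumptions, and the sketch only recognizes the line formats visible in this article.

```python
import re


def parse_hbck_summary(output: str) -> dict:
    """Extract the per-table verdicts, inconsistency count, and status from hbck output."""
    tables_ok = re.findall(r"^Table (\S+) is okay\.[ \t]*$", output, flags=re.MULTILINE)
    count = re.search(r"^(\d+) inconsistencies detected\.[ \t]*$", output, flags=re.MULTILINE)
    status = re.search(r"^Status: (\w+)[ \t]*$", output, flags=re.MULTILINE)
    return {
        "tables_ok": tables_ok,
        "inconsistencies": int(count.group(1)) if count else None,
        "status": status.group(1) if status else None,
    }


# A shortened sample in the same format as the output above.
sample = """\
Table test is okay.
Number of regions: 1
Table hbase:meta is okay.
Number of regions: 1
0 inconsistencies detected.
Status: OK
"""
print(parse_hbck_summary(sample))
```

In practice the text would come from capturing the output of hbase hbck, for example with the subprocess module.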

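Operationally, if you want to report on hbck status regularly, the -details option reports more detail.

hbase hbck -details

Compared with the output above, the additional detail includes: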

Version: 1.2.0-cdh5.10.0

Number of live region servers: 3

rhel1009167,60020,1548150309349

rhel1009179,60020,1548150309012

rhel1009173,60020,1548150308701

Number of dead region servers: 0

Master: rhel1009161,60000,1548150309236

Number of backup masters: 0

Average load: 12.666666666666666

Number of requests: 0

Number of regions: 38

Number of regions in transition: 0

RegionServer: rhel1009167,60020,1548150309349 number of regions: 14

RegionServer: rhel1009173,60020,1548150308701 number of regions: 13

CONSUMER_OFFSET,,1542955855321.7fb40659f11f9aa40cc4e4592e7b0ee8. id: 1542955855321 encoded_name: 7fb40659f11f9aa40cc4e4592e7b0ee8 start: end:

SYSTEM.CATALOG,,1542868574952.c12de5a41e40941ead11d9c28610715b. id: 1542868574952 encoded_name: c12de5a41e40941ead11d9c28610715b start: end:

logs,06,1550475903153.5c01a9598f165dc920190e62095df2d9. id: 1550475903153 encoded_name: 5c01a9598f165dc920190e62095df2d9 start: 06 end: 07

logs,07,1550475903153.41822d6c6b7cd327899a702042891843. id: 1550475903153 encoded_name: 41822d6c6b7cd327899a702042891843 start: 07 end: 08

logs,08,1550475903153.b78167598124d3e26fc83284af4070fd. id: 1550475903153 encoded_name: b78167598124d3e26fc83284af4070fd start: 08 end: 09

logs,11,1550475903153.839eb42eeb4a24509b946f1e3479782e. id: 1550475903153 encoded_name: 839eb42eeb4a24509b946f1e3479782e start: 11 end: 12

logs,15,1550475903153.64d80dbb27469f8f8af220b2d0f5e6f9. id: 1550475903153 encoded_name: 64d80dbb27469f8f8af220b2d0f5e6f9 start: 15 end: 16

hbase:meta,,1.1588230740 id: 1 encoded_name: 1588230740 start: end:

logs,18,1550475903153.3c95183ef591ecd40eb2974235fd7fb3. id: 1550475903153 encoded_name: 3c95183ef591ecd40eb2974235fd7fb3 start: 18 end: 19

logs,01,1550475903153.90a78af1f727bc227fae4eb110bf9f81. id: 1550475903153 encoded_name: 90a78af1f727bc227fae4eb110bf9f81 start: 01 end: 02

logs,03,1550475903153.ed0a1db1482cdc32c5c81db7c13d0640. id: 1550475903153 encoded_name: ed0a1db1482cdc32c5c81db7c13d0640 start: 03 end: 04

logs,20,1550475903153.f1d80dd75b6081ad4e2c228943d3ea26. id: 1550475903153 encoded_name: f1d80dd75b6081ad4e2c228943d3ea26 start: 20 end: 21

logs,04,1550475903153.e0d0ffe01694d399d4561f7ccd8a4251. id: 1550475903153 encoded_name: e0d0ffe01694d399d4561f7ccd8a4251 start: 04 end: 05

logs,22,1550475903153.d25a8660a05bcc622b8d097de95de1d9. id: 1550475903153 encoded_name: d25a8660a05bcc622b8d097de95de1d9 start: 22 end: 23

test,,1551005576808.dea7c6c100149485017af503a5e4e1be. id: 1551005576808 encoded_name: dea7c6c100149485017af503a5e4e1be start: end:

SYSTEM:FUNCTION,,1542955835416.1c38cae8b41456637f964d304b5d4c12. id: 1542955835416 encoded_name: 1c38cae8b41456637f964d304b5d4c12 start: end:

SYSTEM.SEQUENCE,,1543905944331.9d1b8e635bff6f102f6449f52e831fa5. id: 1543905944331 encoded_name: 9d1b8e635bff6f102f6449f52e831fa5 start: end:

RegionServer: rhel1009179,60020,1548150309012 number of regions: 11

scores,,1543037693784.dde4190ffc736f98eaa9daa1ea05041d. id: 1543037693784 encoded_name: dde4190ffc736f98eaa9daa1ea05041d start: end:

logs,,1550475903153.e0cd325149800ef8530183f8bb3a0e3e. id: 1550475903153 encoded_name: e0cd325149800ef8530183f8bb3a0e3e start: end: 01

logs,02,1550475903153.e62cf30a617df4ec1ae0eabb2234ffcf. id: 1550475903153 encoded_name: e62cf30a617df4ec1ae0eabb2234ffcf start: 02 end: 03

logs,05,1550475903153.73f2d6e692b279f6195bb819868ef994. id: 1550475903153 encoded_name: 73f2d6e692b279f6195bb819868ef994 start: 05 end: 06

logs,13,1550475903153.a1198f2a30fa37cee7af8469c98d1b2b. id: 1550475903153 encoded_name: a1198f2a30fa37cee7af8469c98d1b2b start: 13 end: 14

logs,14,1550475903153.2434987f549f96afd8cf0d0350a0c75a. id: 1550475903153 encoded_name: 2434987f549f96afd8cf0d0350a0c75a start: 14 end: 15

logs,17,1550475903153.049ef09d700b5ccfe1f9dc81eb67b622. id: 1550475903153 encoded_name: 049ef09d700b5ccfe1f9dc81eb67b622 start: 17 end: 18

logs,19,1550475903153.abea5829dfde5fb41ffeb0850d9bf887. id: 1550475903153 encoded_name: abea5829dfde5fb41ffeb0850d9bf887 start: 19 end: 20

logs,23,1550475903153.94b3e1a2714d4ca430f420d278bcd521. id: 1550475903153 encoded_name: 94b3e1a2714d4ca430f420d278bcd521 start: 23 end: 24

logs,09,1550475903153.1748392dfa2bd60e2ef77e546ae2965c. id: 1550475903153 encoded_name: 1748392dfa2bd60e2ef77e546ae2965c start: 09 end: 10

SYSTEM:SEQUENCE,,1542955832695.6075b49813063999d52eb99a9698cb29. id: 1542955832695 encoded_name: 6075b49813063999d52eb99a9698cb29 start: end:

SYSTEM.STATS,,1543905945651.223f3fb04a9f65ccb1e7e1820411867c. id: 1543905945651 encoded_name: 223f3fb04a9f65ccb1e7e1820411867c start: end:

logs,10,1550475903153.749026a0500f23933466d8af3115cf2d. id: 1550475903153 encoded_name: 749026a0500f23933466d8af3115cf2d start: 10 end: 11

logs,12,1550475903153.fbfad1572d67c451f5daa1de87565cf1. id: 1550475903153 encoded_name: fbfad1572d67c451f5daa1de87565cf1 start: 12 end: 13

logs,16,1550475903153.64a37d3879353f6ea372c8ef296a5c22. id: 1550475903153 encoded_name: 64a37d3879353f6ea372c8ef296a5c22 start: 16 end: 17

logs,21,1550475903153.a4a1786a84ad60aa086e46d3209657c3. id: 1550475903153 encoded_name: a4a1786a84ad60aa086e46d3209657c3 start: 21 end: 22

logs,24,1550475903153.8ffca9986bd916c9e311a1ed681f818a. id: 1550475903153 encoded_name: 8ffca9986bd916c9e311a1ed681f818a start: 24 end:

UNIFIED_TREATED_OFFSET,,1542955848844.e6c6b20204132fdca2a037b8b2c145ee. id: 1542955848844 encoded_name: e6c6b20204132fdca2a037b8b2c145ee start: end:

hbase:namespace,,1542770225289.36bb8d029f2a478b59ffa7b5f2e2d7a9. id: 1542770225289 encoded_name: 36bb8d029f2a478b59ffa7b5f2e2d7a9 start: end:

SYSTEM.FUNCTION,,1543905946894.b0ca0860f46ab494a40e81ea3732e990. id: 1543905946894 encoded_name: b0ca0860f46ab494a40e81ea3732e990 start: end:

SYSTEM:STATS,,1542955834028.07cc65ad3379ccc1af28924cb8cb1d50. id: 1542955834028 encoded_name: 07cc65ad3379ccc1af28924cb8cb1d50 start: end:

Number of Tables: 13

Table: SYSTEM:STATS rw families: 1

Table: SYSTEM:FUNCTION rw families: 1

Table: SYSTEM.STATS rw families: 1

Table: hbase:namespace rw families: 1

Table: SYSTEM:SEQUENCE rw families: 1

Table: CONSUMER_OFFSET rw families: 1

Table: SYSTEM.SEQUENCE rw families: 1

Table: SYSTEM.FUNCTION rw families: 1

Table: SYSTEM.CATALOG rw families: 1

Table: scores rw families: 3

Table: test rw families: 1

Table: logs rw families: 1

Table: UNIFIED_TREATED_OFFSET rw families: 1

---- Table 'CONSUMER_OFFSET': region split map

: [ { meta => CONSUMER_OFFSET,,1542955855321.7fb40659f11f9aa40cc4e4592e7b0ee8., hdfs => hdfs://nameservice1/hbase/data/default/CONSUMER_OFFSET/7fb40659f11f9aa40cc4e4592e7b0ee8, deployed => rhel1009173,60020,1548150308701;CONSUMER_OFFSET,,1542955855321.7fb40659f11f9aa40cc4e4592e7b0ee8., replicaId => 0 }, ]

null:

---- Table 'CONSUMER_OFFSET': overlap groups

There are 0 overlap groups with 0 overlapping regions

---- Table 'scores': region split map

: [ { meta => scores,,1543037693784.dde4190ffc736f98eaa9daa1ea05041d., hdfs => hdfs://nameservice1/hbase/data/default/scores/dde4190ffc736f98eaa9daa1ea05041d, deployed => rhel1009179,60020,1548150309012;scores,,1543037693784.dde4190ffc736f98eaa9daa1ea05041d., replicaId => 0 }, ]

null:

---- Table 'scores': overlap groups

There are 0 overlap groups with 0 overlapping regions

---- Table 'hbase:meta': region split map

: [ { meta => hbase:meta,,1.1588230740, hdfs => hdfs://nameservice1/hbase/data/hbase/meta/1588230740, deployed => rhel1009167,60020,1548150309349;hbase:meta,,1.1588230740, replicaId => 0 }, ]

null:

---- Table 'hbase:meta': overlap groups

There are 0 overlap groups with 0 overlapping regions

---- Table 'SYSTEM.CATALOG': region split map

: [ { meta => SYSTEM.CATALOG,,1542868574952.c12de5a41e40941ead11d9c28610715b., hdfs => hdfs://nameservice1/hbase/data/default/SYSTEM.CATALOG/c12de5a41e40941ead11d9c28610715b, deployed => rhel1009173,60020,1548150308701;SYSTEM.CATALOG,,1542868574952.c12de5a41e40941ead11d9c28610715b., replicaId => 0 }, ]

null:

---- Table 'SYSTEM.CATALOG': overlap groups

There are 0 overlap groups with 0 overlapping regions

If there is too much output, you can also check a single table, for example:

hbase hbck test

Here test is the table name.

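3. The hbase hbck repair commands

If repeated checks keep reporting inconsistencies or other problems, apply a targeted repair. The repair options are:

hbase hbck -fixVersionFile: fixes a missing hbase.version file.

hbase hbck -fixAssignments: fixes regions that are not assigned, should not be assigned, or are assigned more than once. It applies in these cases:

the region is neither in the meta table nor on HDFS, but is in a regionserver's online region set;

the region has a record in the meta table and exists on HDFS, the table is not disabled, but no regionserver is serving the region;

the region has a record in the meta table and exists on HDFS, the table is disabled, but a regionserver is serving the region;

the region has a record in the meta table and exists on HDFS, the table is not disabled, but more than one regionserver is serving the region;

the region is in the meta table, exists on HDFS, and should be served, but the regionserver recorded in the meta table is not the one actually hosting it.

hbase hbck -fixAssignments -fixMeta: for a region that is in the meta table and in a regionserver's online region set but is missing from HDFS. -fixAssignments tells the regionserver to close the region, and -fixMeta deletes the region's record from the meta table.

hbase hbck -fixMeta -fixAssignments: for a region that has no record in the meta table and is not served by any regionserver, but exists on HDFS. -fixMeta adds the region's record to the meta table, and -fixAssignments assigns the region.

hbase hbck -fixMeta: if the region is missing from HDFS, deletes the corresponding record from the meta table; if the region exists on HDFS, adds the corresponding record to the meta table. It applies in these cases:

the region has no record in the meta table, exists on HDFS, and is being served by a regionserver;

the region has a record in the meta table, but is missing from HDFS and is not served by any regionserver.

hbase hbck -fixHdfsHoles: creates a new empty region to fill a hole in the key range, but does not assign the region and does not add it to the meta table. Because this option only fills the gap, it must be combined with -fixAssignments (to assign the region) and -fixMeta (to add the region's record to the meta table). Hence the combined option -repairHoles, which according to the help output above is a shortcut for -fixAssignments -fixMeta -fixHdfsHoles.

hbase hbck -fixHdfsOrphans: fixes region directories on HDFS that have no .regioninfo file.

hbase hbck -fixHdfsOverlaps: fixes overlapping regions on HDFS.

hbase hbck -repair: turns on most repair options at once. According to the help output for this version, it is a shortcut for -fixAssignments -fixMeta -fixHdfsHoles -fixHdfsOrphans -fixHdfsOverlaps -fixVersionFile -sidelineBigOverlaps -fixReferenceFiles -fixTableLocks -fixOrphanedTableZnodes.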

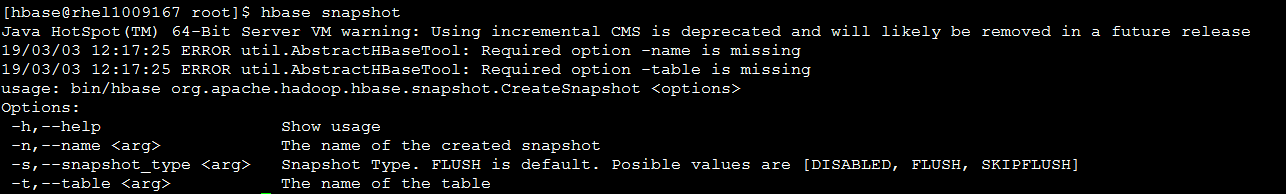
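The cases described in this section can be condensed into a lookup table keyed on where a region is present. The Python sketch below is a simplification under stated assumptions: it only models three yes/no facts (in the meta table, on HDFS, served by a regionserver), it assumes the table is enabled, and it ignores the multiple-assignment and wrong-server cases. The names FIX_TABLE and suggest_hbck_flags are hypothetical, and the output is a reading aid, not a substitute for inspecting the hbck report.

```python
# Keys are (in_meta, in_hdfs, deployed); values are the hbck options this article
# associates with that state. The table is assumed to be enabled.
FIX_TABLE = {
    (False, False, True): ["-fixAssignments"],              # only known to a regionserver
    (True, True, False): ["-fixAssignments"],               # should be served but is not
    (True, False, True): ["-fixAssignments", "-fixMeta"],   # close region, drop meta record
    (False, True, False): ["-fixMeta", "-fixAssignments"],  # add meta record, then assign
    (False, True, True): ["-fixMeta"],                      # add the missing meta record
    (True, False, False): ["-fixMeta"],                     # drop the stale meta record
}


def suggest_hbck_flags(in_meta, in_hdfs, deployed):
    """Return the options listed for this state, or an empty list if none apply."""
    return FIX_TABLE.get((bool(in_meta), bool(in_hdfs), bool(deployed)), [])


# A region that exists on HDFS but is unknown to meta and not served.
print(suggest_hbck_flags(in_meta=False, in_hdfs=True, deployed=False))
```

A region that is present in all three places, or in none, returns an empty list because under these simplified assumptions there is nothing to repair.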

4. The hbase snapshot command

This command creates an HBase snapshot:

Example: hbase snapshot -name test_snapshot -table test

This creates a snapshot of the table test and names it test_snapshot.

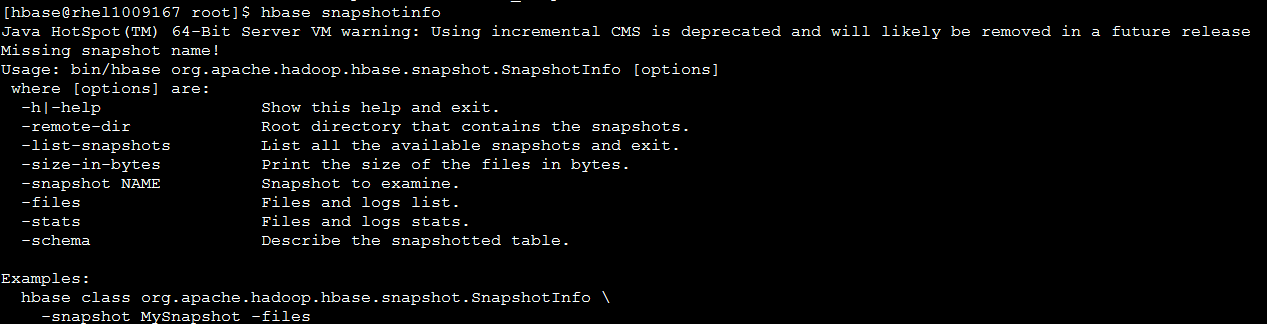

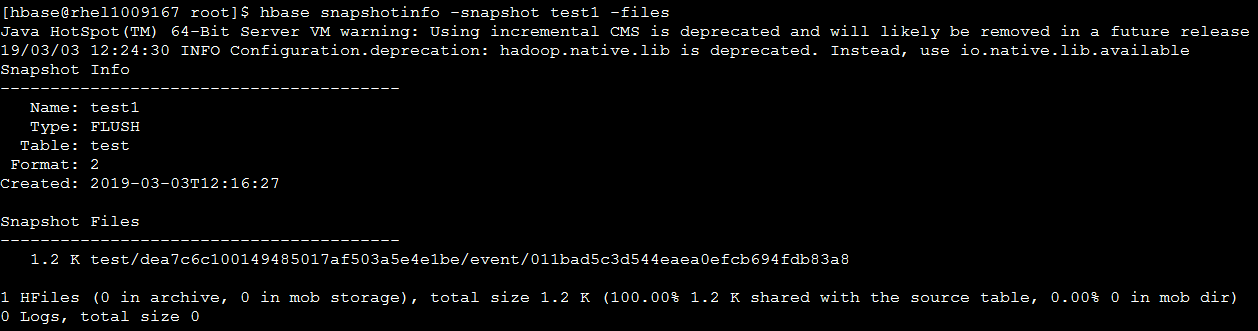

5. The hbase snapshotinfo command

This command displays information about an HBase snapshot.

Example: hbase snapshotinfo -snapshot test1 -files

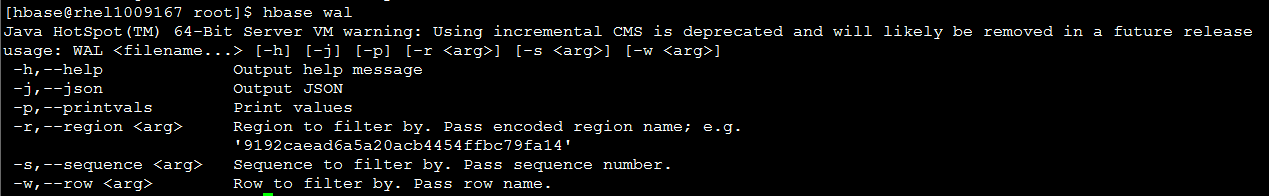

6. The hbase wal command

[hbase@rhel1009167 root]$ hbase wal --json --printvals /hbase/WALs/rhel1009167,60020,1548150309349/rhel1009167%2C60020%2C1548150309349..meta.1551602786730.meta

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

19/03/03 17:35:18 INFO Configuration.deprecation: fs.default.name is deprecated. Instead, use fs.defaultFS

[Writer Classes: ProtobufLogWriter

Cell Codec Class: org.apache.hadoop.hbase.regionserver.wal.WALCellCodec]

7. The hbase hfile command

[hbase@rhel1009167 root]$ hbase hfile

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

19/03/03 17:37:14 INFO Configuration.deprecation: fs.default.name is deprecated. Instead, use fs.defaultFS

usage: HFile [-a] [-b] [-e] [-f <arg> | -r <arg>] [-h] [-i] [-k] [-m] [-p] [-s] [-v] [-w <arg>]

-a,--checkfamily Enable family check

-b,--printblocks Print block index meta data

-e,--printkey Print keys

-f,--file <arg> File to scan. Pass full-path; e.g. hdfs://a:9000/hbase/hbase:meta/12/34

-h,--printblockheaders Print block headers for each block.

-i,--checkMobIntegrity Print all cells whose mob files are missing

-k,--checkrow Enable row order check; looks for out-of-order keys

-m,--printmeta Print meta data of file

-p,--printkv Print key/value pairs

-r,--region <arg> Region to scan. Pass region name; e.g. 'hbase:meta,,1'

-s,--stats Print statistics

-v,--verbose Verbose output; emits file and meta data delimiters

-w,--seekToRow <arg> Seek to this row and print all the kvs for this row only

Example: print the block index and metadata of a single HFile with -b -m -v -f:

hbase hfile -b -m -v -f /hbase/data/default/test/dea7c6c100149485017af503a5e4e1be/event/011bad5c3d544eaea0efcb694fdb83a8

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

19/03/03 17:44:58 INFO Configuration.deprecation: fs.default.name is deprecated. Instead, use fs.defaultFS

Scanning -> /hbase/data/default/test/dea7c6c100149485017af503a5e4e1be/event/011bad5c3d544eaea0efcb694fdb83a8

19/03/03 17:44:58 INFO hfile.CacheConfig: CacheConfig:disabled

19/03/03 17:44:58 INFO Configuration.deprecation: hadoop.native.lib is deprecated. Instead, use io.native.lib.available

Block index size as per heapsize: 392

reader=/hbase/data/default/test/dea7c6c100149485017af503a5e4e1be/event/011bad5c3d544eaea0efcb694fdb83a8,

compression=none,

cacheConf=CacheConfig:disabled,

firstKey=12345/event:2/1551515538823/Put,

lastKey=dea7c6c100149485017af503a5e4e1be/event:time/2/Put,

avgKeyLen=29,

avgValueLen=2,

entries=5,

length=1264

Trailer:

fileinfoOffset=398,

loadOnOpenDataOffset=288,

dataIndexCount=1,

metaIndexCount=0,

totalUncomressedBytes=1171,

entryCount=5,

compressionCodec=NONE,

uncompressedDataIndexSize=36,

numDataIndexLevels=1,

firstDataBlockOffset=0,

lastDataBlockOffset=0,

comparatorClassName=org.apache.hadoop.hbase.KeyValue$KeyComparator,

majorVersion=2,

minorVersion=3

Fileinfo:

BLOOM_FILTER_TYPE = ROW

DELETE_FAMILY_COUNT = \x00\x00\x00\x00\x00\x00\x00\x00

EARLIEST_PUT_TS = \x00\x00\x00\x00\x00\x00\x00\x02

KEY_VALUE_VERSION = \x00\x00\x00\x01

LAST_BLOOM_KEY = dea7c6c100149485017af503a5e4e1be

MAJOR_COMPACTION_KEY = \xFF

MAX_MEMSTORE_TS_KEY = \x00\x00\x00\x00\x00\x00\x00\x1D

MAX_SEQ_ID_KEY = 31

TIMERANGE = 2....1551515538823

hfile.AVG_KEY_LEN = 29

hfile.AVG_VALUE_LEN = 2

hfile.CREATE_TIME_TS = \x00\x00\x01i=\xBF\x8D|

hfile.LASTKEY = \x00 dea7c6c100149485017af503a5e4e1be\x05eventtime\x00\x00\x00\x00\x00\x00\x00\x02\x04

Mid-key: \x00\x0512345\x05event2\x00\x00\x01i=\x88U\x87\x04

Bloom filter:

BloomSize: 8

No of Keys in bloom: 3

Max Keys for bloom: 6

Percentage filled: 50%

Number of chunks: 1

Comparator: RawBytesComparator

Delete Family Bloom filter:

Not present

Block Index:

size=1

key=12345/event:2/1551515538823/Put

offset=0, dataSize=243

Scanned kv count -> 5

Example: print the key/value pairs and metadata of every HFile in a region with -p -m -v -r:

hbase hfile -p -m -v -r test,,1551005576808.dea7c6c100149485017af503a5e4e1be.

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

19/03/03 17:48:46 INFO Configuration.deprecation: fs.default.name is deprecated. Instead, use fs.defaultFS

region dir -> hdfs://nameservice1/hbase/data/default/test/dea7c6c100149485017af503a5e4e1be

19/03/03 17:48:46 INFO Configuration.deprecation: hadoop.native.lib is deprecated. Instead, use io.native.lib.available

Number of region files found -> 1

Found file[1] -> hdfs://nameservice1/hbase/data/default/test/dea7c6c100149485017af503a5e4e1be/event/011bad5c3d544eaea0efcb694fdb83a8

Scanning -> hdfs://nameservice1/hbase/data/default/test/dea7c6c100149485017af503a5e4e1be/event/011bad5c3d544eaea0efcb694fdb83a8

19/03/03 17:48:46 INFO hfile.CacheConfig: CacheConfig:disabled

K: 12345/event:2/1551515538823/Put/vlen=0/seqid=29 V:

K: 12345/event:3/1551237197857/Put/vlen=2/seqid=21 V: 56

K: 123456/event:2/1551238121803/Put/vlen=2/seqid=24 V: 4\x0A

K: 123456/event:4/1551238105260/Put/vlen=1/seqid=23 V: 2

K: dea7c6c100149485017af503a5e4e1be/event:time/2/Put/vlen=9/seqid=0 V: timestamp

Block index size as per heapsize: 392

reader=hdfs://nameservice1/hbase/data/default/test/dea7c6c100149485017af503a5e4e1be/event/011bad5c3d544eaea0efcb694fdb83a8,

compression=none,

cacheConf=CacheConfig:disabled,

firstKey=12345/event:2/1551515538823/Put,

lastKey=dea7c6c100149485017af503a5e4e1be/event:time/2/Put,

avgKeyLen=29,

avgValueLen=2,

entries=5,

length=1264

Trailer:

fileinfoOffset=398,

loadOnOpenDataOffset=288,

dataIndexCount=1,

metaIndexCount=0,

totalUncomressedBytes=1171,

entryCount=5,

compressionCodec=NONE,

uncompressedDataIndexSize=36,

numDataIndexLevels=1,

firstDataBlockOffset=0,

lastDataBlockOffset=0,

comparatorClassName=org.apache.hadoop.hbase.KeyValue$KeyComparator,

majorVersion=2,

minorVersion=3

Fileinfo:

BLOOM_FILTER_TYPE = ROW

DELETE_FAMILY_COUNT = \x00\x00\x00\x00\x00\x00\x00\x00

EARLIEST_PUT_TS = \x00\x00\x00\x00\x00\x00\x00\x02

KEY_VALUE_VERSION = \x00\x00\x00\x01

LAST_BLOOM_KEY = dea7c6c100149485017af503a5e4e1be

MAJOR_COMPACTION_KEY = \xFF

MAX_MEMSTORE_TS_KEY = \x00\x00\x00\x00\x00\x00\x00\x1D

MAX_SEQ_ID_KEY = 31

TIMERANGE = 2....1551515538823

hfile.AVG_KEY_LEN = 29

hfile.AVG_VALUE_LEN = 2

hfile.CREATE_TIME_TS = \x00\x00\x01i=\xBF\x8D|

hfile.LASTKEY = \x00 dea7c6c100149485017af503a5e4e1be\x05eventtime\x00\x00\x00\x00\x00\x00\x00\x02\x04

Mid-key: \x00\x0512345\x05event2\x00\x00\x01i=\x88U\x87\x04

Bloom filter:

BloomSize: 8

No of Keys in bloom: 3

Max Keys for bloom: 6

Percentage filled: 50%

Number of chunks: 1

Comparator: RawBytesComparator

Delete Family Bloom filter:

Not present

Scanned kv count -> 5
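The K: ... V: ... lines printed by the -p option follow a regular pattern: row, family:qualifier, timestamp, type, value length, and sequence id. The Python sketch below splits such a line into fields. It is written against the lines shown in this article only; the names KV_LINE and parse_kv_line are hypothetical, and the pattern assumes the family name contains no slash or colon.

```python
import re

# Pattern for lines such as:
#   K: 12345/event:3/1551237197857/Put/vlen=2/seqid=21 V: 56
KV_LINE = re.compile(
    r"^K: (?P<row>.+)/(?P<family>[^:/]+):(?P<qualifier>[^/]*)"
    r"/(?P<timestamp>\d+)/(?P<type>\w+)/vlen=(?P<vlen>\d+)/seqid=(?P<seqid>\d+)"
    r" V:(?: (?P<value>.*))?$"
)


def parse_kv_line(line):
    """Return the fields of one printed key/value line, or None if the line is not one."""
    match = KV_LINE.match(line.rstrip())
    if match is None:
        return None
    cell = match.groupdict()
    for key in ("timestamp", "vlen", "seqid"):
        cell[key] = int(cell[key])
    # An empty value is printed as a bare "V:".
    cell["value"] = cell["value"] or ""
    return cell


print(parse_kv_line("K: 12345/event:3/1551237197857/Put/vlen=2/seqid=21 V: 56"))
```

The value field holds the text exactly as printed, so escaped bytes such as \x0A stay escaped.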

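8. The hbase zkcli command

As the name suggests, this command enters the ZooKeeper client. Notably, if your HBase is configured with Kerberos, this is a convenient place to modify permissions. Other ZooKeeper commands are omitted here.

9. The hbase upgrade command

This command is used when upgrading HBase: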

hbase upgrade -h

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

usage: $bin/hbase upgrade -check [-dir DIR]|-execute

-check Run upgrade check; looks for HFileV1 under ${hbase.rootdir} or provided 'dir' directory.

-dir <arg> Relative path of dir to check for HFileV1s.

-execute Run upgrade; zk and hdfs must be up, hbase down

-h,--help Help

Read http://hbase.apache.org/book.html#upgrade0.96 before attempting upgrade

Example usage:

Run upgrade check; looks for HFileV1s under ${hbase.rootdir}:

$ bin/hbase upgrade -check

Run the upgrade:

$ bin/hbase upgrade -execute

hbase upgrade -check

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

19/03/03 18:07:48 INFO Configuration.deprecation: fs.default.name is deprecated. Instead, use fs.defaultFS

19/03/03 18:07:48 INFO util.HFileV1Detector: Target dir is: hdfs://nameservice1/hbase

19/03/03 18:07:48 INFO util.HFileV1Detector: Ignoring path: hdfs://nameservice1/hbase/.hbase-snapshot

19/03/03 18:07:48 INFO util.HFileV1Detector: Ignoring path: hdfs://nameservice1/hbase/.tmp

19/03/03 18:07:48 INFO util.HFileV1Detector: Ignoring path: hdfs://nameservice1/hbase/MasterProcWALs

19/03/03 18:07:48 INFO util.HFileV1Detector: Ignoring path: hdfs://nameservice1/hbase/WALs

19/03/03 18:07:48 INFO util.HFileV1Detector: Ignoring path: hdfs://nameservice1/hbase/archive

19/03/03 18:07:48 INFO util.HFileV1Detector: Ignoring path: hdfs://nameservice1/hbase/corrupt

19/03/03 18:07:48 INFO util.HFileV1Detector: Ignoring path: hdfs://nameservice1/hbase/data

19/03/03 18:07:48 INFO util.HFileV1Detector: Ignoring path: hdfs://nameservice1/hbase/hbase.id

19/03/03 18:07:48 INFO util.HFileV1Detector: Ignoring path: hdfs://nameservice1/hbase/hbase.version

19/03/03 18:07:48 INFO util.HFileV1Detector: Ignoring path: hdfs://nameservice1/hbase/oldWALs

19/03/03 18:07:48 INFO util.HFileV1Detector: Ignoring path: hdfs://nameservice1/hbase/splitWAL

19/03/03 18:07:48 INFO util.HFileV1Detector: Result:

19/03/03 18:07:48 INFO util.HFileV1Detector: Tables Processed:

19/03/03 18:07:48 INFO util.HFileV1Detector: Count of HFileV1: 0

19/03/03 18:07:48 INFO util.HFileV1Detector: Count of corrupted files: 0

19/03/03 18:07:48 INFO util.HFileV1Detector: Count of Regions with HFileV1: 0

19/03/03 18:07:48 INFO migration.UpgradeTo96: No HFileV1 found.
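The check run above ends with three "Count of ..." lines. As a rough illustration, the Python sketch below collects those counters from the log text and reports whether all of them are zero. The function names hfilev1_counts and check_is_clean are hypothetical, and the pattern is based only on the log lines shown in this article.

```python
import re


def hfilev1_counts(log_text):
    """Collect the 'Count of ...' counters printed by HFileV1Detector."""
    counts = {}
    pattern = r"HFileV1Detector: (Count of [^:]+): (\d+)"
    for label, value in re.findall(pattern, log_text):
        counts[label] = int(value)
    return counts


def check_is_clean(log_text):
    """True only if counters were found and every one of them is zero."""
    counts = hfilev1_counts(log_text)
    return bool(counts) and all(value == 0 for value in counts.values())


# Three lines copied from the output above.
sample = """\
19/03/03 18:07:48 INFO util.HFileV1Detector: Count of HFileV1: 0
19/03/03 18:07:48 INFO util.HFileV1Detector: Count of corrupted files: 0
19/03/03 18:07:48 INFO util.HFileV1Detector: Count of Regions with HFileV1: 0
"""
print(hfilev1_counts(sample))
print(check_is_clean(sample))
```

Returning False when no counters are found avoids treating an empty or truncated log as a clean result.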

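10. The hbase clean command

This command is dangerous in production because it deletes HBase data from ZooKeeper, HDFS, or both. Use it with caution.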

hbase clean -h

Usage: hbase clean (--cleanZk|--cleanHdfs|--cleanAll)

Options:

--cleanZk cleans hbase related data from zookeeper.

--cleanHdfs cleans hbase related data from hdfs.

--cleanAll cleans hbase related data from both zookeeper and hdfs.

11. The hbase pe command

This is HBase's built-in performance testing tool.

hbase pe -h

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

19/03/03 18:14:52 INFO Configuration.deprecation: hadoop.native.lib is deprecated. Instead, use io.native.lib.available

Usage: java org.apache.hadoop.hbase.PerformanceEvaluation \

<OPTIONS> [-D<property=value>]* <command> <nclients>

Options:

nomapred Run multiple clients using threads (rather than use mapreduce)

rows Rows each client runs. Default: 1048576

size Total size in GiB. Mutually exclusive with --rows. Default: 1.0.

sampleRate Execute test on a sample of total rows. Only supported by randomRead. Default: 1.0

traceRate Enable HTrace spans. Initiate tracing every N rows. Default: 0

table Alternate table name. Default: 'TestTable'

multiGet If >0, when doing RandomRead, perform multiple gets instead of single gets. Default: 0

compress Compression type to use (GZ, LZO, ...). Default: 'NONE'

flushCommits Used to determine if the test should flush the table. Default: false

writeToWAL Set writeToWAL on puts. Default: True

autoFlush Set autoFlush on htable. Default: False

oneCon all the threads share the same connection. Default: False

presplit Create presplit table. If a table with same name exists, it'll be deleted and recreated (instead of verifying count of its existing regions). Recommended for accurate perf analysis (see guide). Default: disabled

inmemory Tries to keep the HFiles of the CF inmemory as far as possible. Not guaranteed that reads are always served from memory. Default: false

usetags Writes tags along with KVs. Use with HFile V3. Default: false

numoftags Specify the no of tags that would be needed. This works only if usetags is true. Default: 1

filterAll Helps to filter out all the rows on the server side there by not returning any thing back to the client. Helps to check the server side performance. Uses FilterAllFilter internally.

latency Set to report operation latencies. Default: False

bloomFilter Bloom filter type, one of [NONE, ROW, ROWCOL]

blockEncoding Block encoding to use. Value should be one of [NONE, PREFIX, DIFF, FAST_DIFF, PREFIX_TREE]. Default: NONE

valueSize Pass value size to use: Default: 1000

valueRandom Set if we should vary value size between 0 and 'valueSize'; set on read for stats on size: Default: Not set.

valueZipf Set if we should vary value size between 0 and 'valueSize' in zipf form: Default: Not set.

period Report every 'period' rows: Default: opts.perClientRunRows / 10 = 104857

multiGet Batch gets together into groups of N. Only supported by randomRead. Default: disabled

addColumns Adds columns to scans/gets explicitly. Default: true

replicas Enable region replica testing. Defaults: 1.

splitPolicy Specify a custom RegionSplitPolicy for the table.

randomSleep Do a random sleep before each get between 0 and entered value. Defaults: 0

columns Columns to write per row. Default: 1

caching Scan caching to use. Default: 30

Note: -D properties will be applied to the conf used.

For example:

-Dmapreduce.output.fileoutputformat.compress=true

-Dmapreduce.task.timeout=60000

Command:

append Append on each row; clients overlap on keyspace so some concurrent operations

checkAndDelete CheckAndDelete on each row; clients overlap on keyspace so some concurrent operations

checkAndMutate CheckAndMutate on each row; clients overlap on keyspace so some concurrent operations

checkAndPut CheckAndPut on each row; clients overlap on keyspace so some concurrent operations

filterScan Run scan test using a filter to find a specific row based on it's value (make sure to use --rows=20)

increment Increment on each row; clients overlap on keyspace so some concurrent operations

randomRead Run random read test

randomSeekScan Run random seek and scan 100 test

randomWrite Run random write test

scan Run scan test (read every row)

scanRange10 Run random seek scan with both start and stop row (max 10 rows)

scanRange100 Run random seek scan with both start and stop row (max 100 rows)

scanRange1000 Run random seek scan with both start and stop row (max 1000 rows)

scanRange10000 Run random seek scan with both start and stop row (max 10000 rows)

sequentialRead Run sequential read test

sequentialWrite Run sequential write test

Args:

nclients Integer. Required. Total number of clients (and HRegionServers) running. 1 <= value <= 500

Examples:

To run a single client doing the default 1M sequentialWrites:

$ bin/hbase org.apache.hadoop.hbase.PerformanceEvaluation sequentialWrite 1

To run 10 clients doing increments over ten rows:

$ bin/hbase org.apache.hadoop.hbase.PerformanceEvaluation --rows=10 --nomapred increment 10
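
The defaults listed in the help output above fit together with a little arithmetic. The sketch below only recomputes numbers from those defaults (rows, valueSize, columns, period). The variable names are my own, and the byte count is a rough estimate that ignores row keys and per-cell overhead.

```shell
# Defaults copied from the PerformanceEvaluation help output above.
rows=1048576      # rows each client runs
value_size=1000   # bytes per value
columns=1         # columns written per row

# Integer division, which is why the help shows 104857 rather than 104857.6.
period=$(( rows / 10 ))

# Value bytes written by one client, ignoring row keys and per-cell overhead.
value_bytes=$(( rows * value_size * columns ))

echo "period=${period}"
echo "value_bytes=${value_bytes}"
```

With the defaults, one client therefore writes roughly 1 GB of values and reports progress every 104857 rows.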

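Examples:

The following are hbase pe tests.

Sequential write (about one million rows, the default)

hbase org.apache.hadoop.hbase.PerformanceEvaluation sequentialWrite 1

Sequential read (about one million rows)

hbase org.apache.hadoop.hbase.PerformanceEvaluation sequentialRead 1

Random write (about one million rows)

hbase org.apache.hadoop.hbase.PerformanceEvaluation randomWrite 1

Random read (about one million rows)

hbase org.apache.hadoop.hbase.PerformanceEvaluation randomRead 1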
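
The four tests above can also be generated from one small helper. This is a minimal sketch, not part of HBase: `pe_cmd` is a hypothetical function name, and `--rows=100000` is an arbitrary value chosen here to keep a run short. The flags `--nomapred` and `--rows` are taken from the help output shown earlier. The helper only prints the command lines, so they can be reviewed before anything touches a cluster.

```shell
# Hypothetical helper (not part of HBase): prints a PerformanceEvaluation
# command line instead of running it, so it can be reviewed first.
# Usage: pe_cmd <command> <nclients> <rows>
pe_cmd() {
  printf 'hbase org.apache.hadoop.hbase.PerformanceEvaluation --nomapred --rows=%s %s %s\n' "$3" "$1" "$2"
}

# Each write test is listed before its read test, since reads need existing data.
for t in sequentialWrite sequentialRead randomWrite randomRead; do
  pe_cmd "$t" 1 100000
done
```

Piping the printed lines to `sh` would run the tests in turn on a machine where `hbase` is on the PATH. Note that PerformanceEvaluation writes to the table named by the `table` option ('TestTable' by default), so it is best pointed at a test cluster.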

12, hbase ltt command

This command is also used to test HBase performance.

hbase ltt -h

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

19/03/03 18:19:36 INFO Configuration.deprecation: hadoop.native.lib is deprecated. Instead, use io.native.lib.available

usage: bin/hbase org.apache.hadoop.hbase.util.LoadTestTool <options>

Options:

-batchupdate Whether to use batch as opposed to separate updates for every column in a row

-bloom <arg> Bloom filter type, one of [NONE, ROW, ROWCOL]

-compression <arg> Compression type, one of [LZO, GZ, NONE, SNAPPY, LZ4]

-data_block_encoding <arg> Encoding algorithm (e.g. prefix compression) to use for data blocks in the test column

family, one of [NONE, PREFIX, DIFF, FAST_DIFF, PREFIX_TREE].

-deferredlogflush Enable deferred log flush.

-encryption <arg> Enables transparent encryption on the test table, one of [AES]

-families <arg> The name of the column families to use separated by comma

-generator <arg> The class which generates load for the tool. Any args for this class can be passed as

colon separated after class name

-h,--help Show usage

-in_memory Tries to keep the HFiles of the CF inmemory as far as possible. Not guaranteed that

reads are always served from inmemory

-init_only Initialize the test table only, don't do any loading

-key_window <arg> The 'key window' to maintain between reads and writes for concurrent write/read

workload. The default is 0.

-max_read_errors <arg> The maximum number of read errors to tolerate before terminating all reader threads.

The default is 10.

-mob_threshold <arg> Desired cell size to exceed in bytes that will use the MOB write path

-multiget_batchsize <arg> Whether to use multi-gets as opposed to separate gets for every column in a row

-multiput Whether to use multi-puts as opposed to separate puts for every column in a row

-num_keys <arg> The number of keys to read/write

-num_regions_per_server <arg> Desired number of regions per region server. Defaults to 5.

-num_tables <arg> A positive integer number. When a number n is speicfied, load test tool will load n

table parallely. -tn parameter value becomes table name prefix. Each table name is in

format <tn>_1...<tn>_n

-read <arg> <verify_percent>[:<#threads=20>]

-reader <arg> The class for executing the read requests

-region_replica_id <arg> Region replica id to do the reads from

-region_replication <arg> Desired number of replicas per region

-regions_per_server <arg> A positive integer number. When a number n is specified, load test tool will create the

test table with n regions per server

-skip_init Skip the initialization; assume test table already exists

-start_key <arg> The first key to read/write (a 0-based index). The default value is 0.

-tn <arg> The name of the table to read or write

-update <arg> <update_percent>[:<#threads=20>][:<#whether to ignore nonce collisions=0>]

-updater <arg> The class for executing the update requests

-write <arg> <avg_cols_per_key>:<avg_data_size>[:<#threads=20>]

-writer <arg> The class for executing the write requests

-zk <arg> ZK quorum as comma-separated host names without port numbers

-zk_root <arg> name of parent znode in zookeeper
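
As a rough illustration of how the options above combine, the sketch below prints a LoadTestTool command line that writes and then reads back a set of keys. `ltt_cmd` is a hypothetical helper name, and the table name `ltt_demo`, the key count and the thread count are arbitrary values I picked. Only flags that appear in the help output (`-tn`, `-num_keys`, `-write`, `-read`) are used.

```shell
# Hypothetical helper (not part of HBase): prints a LoadTestTool command line.
# Usage: ltt_cmd <table> <num_keys> <threads>
#   -write <avg_cols_per_key>:<avg_data_size>[:<#threads>]
#   -read  <verify_percent>[:<#threads>]
ltt_cmd() {
  printf 'hbase ltt -tn %s -num_keys %s -write 1:10:%s -read 100:%s\n' "$1" "$2" "$3" "$3"
}

# 1 column per key on average, 10 bytes of data on average, 100% of reads verified.
ltt_cmd ltt_demo 100000 10
```

The printed line is `hbase ltt -tn ltt_demo -num_keys 100000 -write 1:10:10 -read 100:10`. Running it against a real cluster creates the test table unless `-skip_init` is given.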
