RocketMQ (7): Exploring High Performance Through Thread Pools

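The previous article covered how to design and implement high-concurrency, high-performance applications and explained the underlying principles. It used RocketMQ's mappedFile implementation as an entry point to show how RocketMQ achieves high performance in practice. We also saw that mappedFile is just one small operating-system feature that RocketMQ exploits.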

Today we look at the second technique behind RocketMQ's high performance: its use of thread pools.

1. Overview of RocketMQ's thread model

Any discussion of multithreading usually comes down to locks and thread models.

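We will not dwell on locks. The basic principles are much the same everywhere, and you can consult online material or my earlier articles.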

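Thread models, on the other hand, vary: each application shapes its own according to its characteristics. So let's walk through the thread models found in RocketMQ.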

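RocketMQ consists of several components with different roles, and the thread model tends to differ with each component's characteristics. It is worth knowing a little about each of them so that we do not lose sight of the whole picture.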

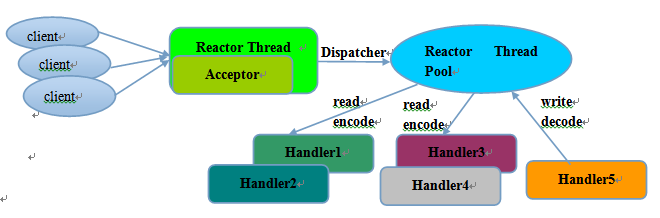

2. The common netty thread model

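First, all network communication in RocketMQ is built on netty, so its most basic thread model is netty's thread model, that is, the reactor model. (Note: the netty thread model diagrams below come from the internet.)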

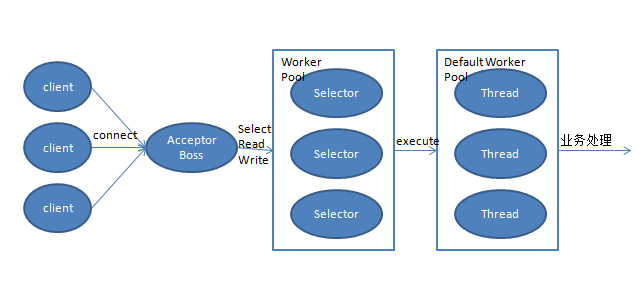

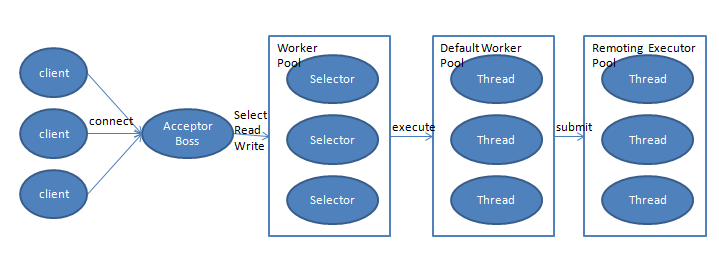

The common netty thread chain that RocketMQ actually uses is as follows:

1 acceptor -> n event.processor.selector -> serverWorkerThreads.worker -> business processing threads
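To make the hand-off between stages concrete, here is a minimal sketch that imitates the chain with plain `java.util.concurrent` pools instead of netty. The class name, the stage names, and the pool sizes (1, 3, 8, 4) are assumptions chosen for illustration; real netty event loops work differently internally, so treat this only as a picture of which kind of thread touches a request at each step.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class StagedThreadModelSketch {

    // Builds a fixed pool whose threads are named "<prefix><n>".
    static ExecutorService stage(String prefix, int threads) {
        AtomicInteger index = new AtomicInteger();
        return Executors.newFixedThreadPool(threads,
                r -> new Thread(r, prefix + index.incrementAndGet()));
    }

    // Records the name prefix of the current thread, e.g. "Boss_" for "Boss_1".
    static void record(List<String> hops) {
        String name = Thread.currentThread().getName();
        hops.add(name.substring(0, name.indexOf('_') + 1));
    }

    // Pushes one simulated request through the four stages and returns
    // the thread-name prefixes in the order the request visited them.
    static List<String> trace() {
        ExecutorService boss = stage("Boss_", 1);          // acceptor
        ExecutorService selector = stage("Selector_", 3);  // IO selectors
        ExecutorService codec = stage("Codec_", 8);        // worker (codec) threads
        ExecutorService business = stage("Business_", 4);  // business pool
        List<String> hops = new CopyOnWriteArrayList<>();
        CountDownLatch done = new CountDownLatch(1);

        boss.execute(() -> {
            record(hops);                     // accept the connection
            selector.execute(() -> {
                record(hops);                 // read bytes from the socket
                codec.execute(() -> {
                    record(hops);             // decode bytes into a command
                    business.execute(() -> {
                        record(hops);         // run the request processor
                        done.countDown();
                    });
                });
            });
        });

        try {
            done.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        boss.shutdown();
        selector.shutdown();
        codec.shutdown();
        business.shutdown();
        return hops;
    }

    public static void main(String[] args) {
        System.out.println(trace()); // [Boss_, Selector_, Codec_, Business_]
    }
}
```

Each stage only records its visit and passes the request on, which is the point of the chain: no stage blocks on the work of the next one.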

The code that sets netty up is as follows:

// org.apache.rocketmq.remoting.netty.NettyRemotingServer#start

@Override

public void start() {

this.defaultEventExecutorGroup = new DefaultEventExecutorGroup(

nettyServerConfig.getServerWorkerThreads(),

new ThreadFactory() {

private AtomicInteger threadIndex = new AtomicInteger(0);

@Override

public Thread newThread(Runnable r) {

return new Thread(r, "NettyServerCodecThread_" + this.threadIndex.incrementAndGet());

}

});

prepareSharableHandlers();

// core netty parameters: eventLoopGroupBoss, eventLoopGroupSelector

ServerBootstrap childHandler =

this.serverBootstrap.group(this.eventLoopGroupBoss, this.eventLoopGroupSelector)

.channel(useEpoll() ? EpollServerSocketChannel.class : NioServerSocketChannel.class)

.option(ChannelOption.SO_BACKLOG, 1024)

.option(ChannelOption.SO_REUSEADDR, true)

.option(ChannelOption.SO_KEEPALIVE, false)

.childOption(ChannelOption.TCP_NODELAY, true)

.childOption(ChannelOption.SO_SNDBUF, nettyServerConfig.getServerSocketSndBufSize())

.childOption(ChannelOption.SO_RCVBUF, nettyServerConfig.getServerSocketRcvBufSize())

.localAddress(new InetSocketAddress(this.nettyServerConfig.getListenPort()))

.childHandler(new ChannelInitializer<SocketChannel>() {

@Override

public void initChannel(SocketChannel ch) throws Exception {

ch.pipeline()

.addLast(defaultEventExecutorGroup, HANDSHAKE_HANDLER_NAME, handshakeHandler)

.addLast(defaultEventExecutorGroup,

encoder,

new NettyDecoder(),

new IdleStateHandler(0, 0, nettyServerConfig.getServerChannelMaxIdleTimeSeconds()),

connectionManageHandler,

serverHandler

);

}

});

if (nettyServerConfig.isServerPooledByteBufAllocatorEnable()) {

childHandler.childOption(ChannelOption.ALLOCATOR, PooledByteBufAllocator.DEFAULT);

}

try {

ChannelFuture sync = this.serverBootstrap.bind().sync();

InetSocketAddress addr = (InetSocketAddress) sync.channel().localAddress();

this.port = addr.getPort();

} catch (InterruptedException e1) {

throw new RuntimeException("this.serverBootstrap.bind().sync() InterruptedException", e1);

}

if (this.channelEventListener != null) {

this.nettyEventExecutor.start();

}

this.timer.scheduleAtFixedRate(new TimerTask() {

@Override

public void run() {

try {

NettyRemotingServer.this.scanResponseTable();

} catch (Throwable e) {

log.error("scanResponseTable exception", e);

}

}

}, 1000 * 3, 1000);

}

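This is the network IO thread model shared by every server-side role. The client side needs little explanation, since it follows the same netty programming model.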

When it comes to specific roles, however, the division of labor differs. Below we break it down role by role.

3. The nameserver thread model

The nameserver's business thread model is very simple because it has only one defaultProcessor. Its thread model is:

1 acceptor -> serverSelectorThreads io.processor -> serverWorkerThreads.worker(defaultEventExecutorGroup) -> serverWorkerThreads.executors(remotingExecutor)

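The nameserver's thread model is probably this simple because its business logic is very lightweight: it consists mostly of pure in-memory operations, which a handful of threads can handle.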

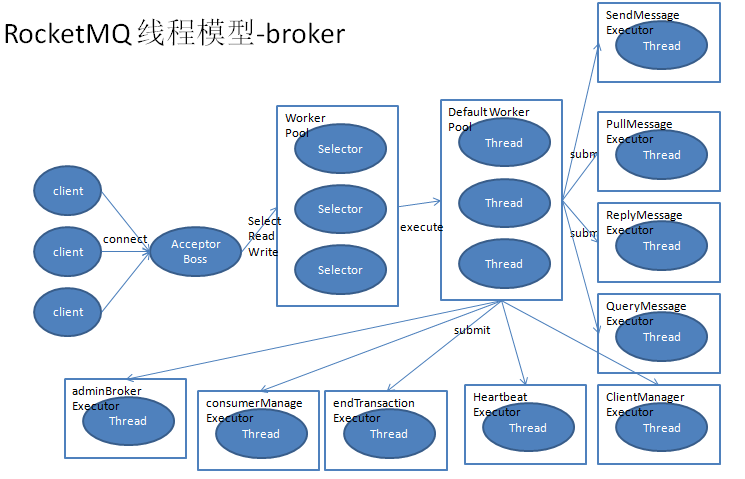

4. The broker thread model

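The broker's business thread model is considerably more complex. It has to handle many different kinds of requests, so it sets up many separate business thread pools, one for each type of work.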
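The benefit of one pool per request type is isolation: a backlog in one pool cannot starve another. The sketch below illustrates that idea with the JDK's `ThreadPoolExecutor` only. The class name, helper names, pool sizes, and queue capacities are assumptions for illustration and are not RocketMQ's `BrokerFixedThreadPoolExecutor`.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Future;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class IsolatedPoolsSketch {

    // A fixed-size pool (core == max) with a bounded queue and named threads.
    static ThreadPoolExecutor fixedPool(String prefix, int threads, int queueCapacity) {
        AtomicInteger index = new AtomicInteger();
        return new ThreadPoolExecutor(threads, threads, 60_000, TimeUnit.MILLISECONDS,
                new LinkedBlockingQueue<>(queueCapacity),
                r -> new Thread(r, prefix + index.incrementAndGet()));
    }

    static void awaitQuietly(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Blocks the only "pull" thread, then checks that a "send" task still runs
    // promptly on a thread of the separate "send" pool.
    static boolean sendSurvivesBlockedPull() {
        ThreadPoolExecutor send = fixedPool("SendMessageThread_", 1, 10);
        ThreadPoolExecutor pull = fixedPool("PullMessageThread_", 1, 10);
        CountDownLatch release = new CountDownLatch(1);
        try {
            pull.execute(() -> awaitQuietly(release)); // pull pool is now stuck
            Callable<String> whoAmI = () -> Thread.currentThread().getName();
            Future<String> result = send.submit(whoAmI);
            return result.get(2, TimeUnit.SECONDS).startsWith("SendMessageThread_");
        } catch (Exception e) {
            return false;
        } finally {
            release.countDown();
            send.shutdown();
            pull.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(sendSurvivesBlockedPull()); // true
    }
}
```

Had both task types shared the single blocked thread, the send task would have waited behind the stuck pull task; with separate pools it completes immediately.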

The concrete implementation can be seen in the broker's initialization code:

// org.apache.rocketmq.broker.BrokerController#initialize

public boolean initialize() throws CloneNotSupportedException {

boolean result = this.topicConfigManager.load();

result = result && this.consumerOffsetManager.load();

result = result && this.subscriptionGroupManager.load();

result = result && this.consumerFilterManager.load();

if (result) {

try {

this.messageStore =

new DefaultMessageStore(this.messageStoreConfig, this.brokerStatsManager, this.messageArrivingListener,

this.brokerConfig);

if (messageStoreConfig.isEnableDLegerCommitLog()) {

DLedgerRoleChangeHandler roleChangeHandler = new DLedgerRoleChangeHandler(this, (DefaultMessageStore) messageStore);

((DLedgerCommitLog)((DefaultMessageStore) messageStore).getCommitLog()).getdLedgerServer().getdLedgerLeaderElector().addRoleChangeHandler(roleChangeHandler);

}

this.brokerStats = new BrokerStats((DefaultMessageStore) this.messageStore);

//load plugin

MessageStorePluginContext context = new MessageStorePluginContext(messageStoreConfig, brokerStatsManager, messageArrivingListener, brokerConfig);

this.messageStore = MessageStoreFactory.build(context, this.messageStore);

this.messageStore.getDispatcherList().addFirst(new CommitLogDispatcherCalcBitMap(this.brokerConfig, this.consumerFilterManager));

} catch (IOException e) {

result = false;

log.error("Failed to initialize", e);

}

}

result = result && this.messageStore.load();

if (result) {

this.remotingServer = new NettyRemotingServer(this.nettyServerConfig, this.clientHousekeepingService);

NettyServerConfig fastConfig = (NettyServerConfig) this.nettyServerConfig.clone();

fastConfig.setListenPort(nettyServerConfig.getListenPort() - 2);

this.fastRemotingServer = new NettyRemotingServer(fastConfig, this.clientHousekeepingService);

this.sendMessageExecutor = new BrokerFixedThreadPoolExecutor(

this.brokerConfig.getSendMessageThreadPoolNums(),

this.brokerConfig.getSendMessageThreadPoolNums(),

1000 * 60,

TimeUnit.MILLISECONDS,

this.sendThreadPoolQueue,

new ThreadFactoryImpl("SendMessageThread_"));

this.pullMessageExecutor = new BrokerFixedThreadPoolExecutor(

this.brokerConfig.getPullMessageThreadPoolNums(),

this.brokerConfig.getPullMessageThreadPoolNums(),

1000 * 60,

TimeUnit.MILLISECONDS,

this.pullThreadPoolQueue,

new ThreadFactoryImpl("PullMessageThread_"));

this.replyMessageExecutor = new BrokerFixedThreadPoolExecutor(

this.brokerConfig.getProcessReplyMessageThreadPoolNums(),

this.brokerConfig.getProcessReplyMessageThreadPoolNums(),

1000 * 60,

TimeUnit.MILLISECONDS,

this.replyThreadPoolQueue,

new ThreadFactoryImpl("ProcessReplyMessageThread_"));

this.queryMessageExecutor = new BrokerFixedThreadPoolExecutor(

this.brokerConfig.getQueryMessageThreadPoolNums(),

this.brokerConfig.getQueryMessageThreadPoolNums(),

1000 * 60,

TimeUnit.MILLISECONDS,

this.queryThreadPoolQueue,

new ThreadFactoryImpl("QueryMessageThread_"));

this.adminBrokerExecutor =

Executors.newFixedThreadPool(this.brokerConfig.getAdminBrokerThreadPoolNums(), new ThreadFactoryImpl(

"AdminBrokerThread_"));

this.clientManageExecutor = new ThreadPoolExecutor(

this.brokerConfig.getClientManageThreadPoolNums(),

this.brokerConfig.getClientManageThreadPoolNums(),

1000 * 60,

TimeUnit.MILLISECONDS,

this.clientManagerThreadPoolQueue,

new ThreadFactoryImpl("ClientManageThread_"));

this.heartbeatExecutor = new BrokerFixedThreadPoolExecutor(

this.brokerConfig.getHeartbeatThreadPoolNums(),

this.brokerConfig.getHeartbeatThreadPoolNums(),

1000 * 60,

TimeUnit.MILLISECONDS,

this.heartbeatThreadPoolQueue,

new ThreadFactoryImpl("HeartbeatThread_", true));

this.endTransactionExecutor = new BrokerFixedThreadPoolExecutor(

this.brokerConfig.getEndTransactionThreadPoolNums(),

this.brokerConfig.getEndTransactionThreadPoolNums(),

1000 * 60,

TimeUnit.MILLISECONDS,

this.endTransactionThreadPoolQueue,

new ThreadFactoryImpl("EndTransactionThread_"));

this.consumerManageExecutor =

Executors.newFixedThreadPool(this.brokerConfig.getConsumerManageThreadPoolNums(), new ThreadFactoryImpl(

"ConsumerManageThread_"));

this.registerProcessor();

final long initialDelay = UtilAll.computeNextMorningTimeMillis() - System.currentTimeMillis();

final long period = 1000 * 60 * 60 * 24;

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

BrokerController.this.getBrokerStats().record();

} catch (Throwable e) {

log.error("schedule record error.", e);

}

}

}, initialDelay, period, TimeUnit.MILLISECONDS);

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

BrokerController.this.consumerOffsetManager.persist();

} catch (Throwable e) {

log.error("schedule persist consumerOffset error.", e);

}

}

}, 1000 * 10, this.brokerConfig.getFlushConsumerOffsetInterval(), TimeUnit.MILLISECONDS);

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

BrokerController.this.consumerFilterManager.persist();

} catch (Throwable e) {

log.error("schedule persist consumer filter error.", e);

}

}

}, 1000 * 10, 1000 * 10, TimeUnit.MILLISECONDS);

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

BrokerController.this.protectBroker();

} catch (Throwable e) {

log.error("protectBroker error.", e);

}

}

}, 3, 3, TimeUnit.MINUTES);

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

BrokerController.this.printWaterMark();

} catch (Throwable e) {

log.error("printWaterMark error.", e);

}

}

}, 10, 1, TimeUnit.SECONDS);

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

log.info("dispatch behind commit log {} bytes", BrokerController.this.getMessageStore().dispatchBehindBytes());

} catch (Throwable e) {

log.error("schedule dispatchBehindBytes error.", e);

}

}

}, 1000 * 10, 1000 * 60, TimeUnit.MILLISECONDS);

if (this.brokerConfig.getNamesrvAddr() != null) {

this.brokerOuterAPI.updateNameServerAddressList(this.brokerConfig.getNamesrvAddr());

log.info("Set user specified name server address: {}", this.brokerConfig.getNamesrvAddr());

} else if (this.brokerConfig.isFetchNamesrvAddrByAddressServer()) {

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

BrokerController.this.brokerOuterAPI.fetchNameServerAddr();

} catch (Throwable e) {

log.error("ScheduledTask fetchNameServerAddr exception", e);

}

}

}, 1000 * 10, 1000 * 60 * 2, TimeUnit.MILLISECONDS);

}

if (!messageStoreConfig.isEnableDLegerCommitLog()) {

if (BrokerRole.SLAVE == this.messageStoreConfig.getBrokerRole()) {

if (this.messageStoreConfig.getHaMasterAddress() != null && this.messageStoreConfig.getHaMasterAddress().length() >= 6) {

this.messageStore.updateHaMasterAddress(this.messageStoreConfig.getHaMasterAddress());

this.updateMasterHAServerAddrPeriodically = false;

} else {

this.updateMasterHAServerAddrPeriodically = true;

}

} else {

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

BrokerController.this.printMasterAndSlaveDiff();

} catch (Throwable e) {

log.error("schedule printMasterAndSlaveDiff error.", e);

}

}

}, 1000 * 10, 1000 * 60, TimeUnit.MILLISECONDS);

}

}

if (TlsSystemConfig.tlsMode != TlsMode.DISABLED) {

// Register a listener to reload SslContext

try {

fileWatchService = new FileWatchService(

new String[] {

TlsSystemConfig.tlsServerCertPath,

TlsSystemConfig.tlsServerKeyPath,

TlsSystemConfig.tlsServerTrustCertPath

},

new FileWatchService.Listener() {

boolean certChanged, keyChanged = false;

@Override

public void onChanged(String path) {

if (path.equals(TlsSystemConfig.tlsServerTrustCertPath)) {

log.info("The trust certificate changed, reload the ssl context");

reloadServerSslContext();

}

if (path.equals(TlsSystemConfig.tlsServerCertPath)) {

certChanged = true;

}

if (path.equals(TlsSystemConfig.tlsServerKeyPath)) {

keyChanged = true;

}

if (certChanged && keyChanged) {

log.info("The certificate and private key changed, reload the ssl context");

certChanged = keyChanged = false;

reloadServerSslContext();

}

}

private void reloadServerSslContext() {

((NettyRemotingServer) remotingServer).loadSslContext();

((NettyRemotingServer) fastRemotingServer).loadSslContext();

}

});

} catch (Exception e) {

log.warn("FileWatchService created error, can't load the certificate dynamically");

}

}

initialTransaction();

initialAcl();

initialRpcHooks();

}

return result;

}

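The broker's thread model is arguably the most complex, which follows from its business logic being the most complex and the most central. All of RocketMQ's data storage and reads happen on the broker, while the nameserver only plays a supporting management role. The broker therefore has to take care of its own availability, isolation, and validity maintenance. It does so by assigning different thread pools to isolate each type of business processing. The way it uses these pools is uniform: a Pair<T1, T2> wrapper class binds an executor and a processor together so that they can be used as a unit. As mentioned earlier, a registry maps every request code that can be handled to such a pair. At dispatch time the matching pair is looked up and the work is handed over with executor.submit().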
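The registry idea can be sketched in a few lines. The following is an illustrative stand-in, not RocketMQ's classes: `ProcessorTableSketch`, its nested `Pair`, the use of `Function<String, String>` as a "processor", and the request codes 10 and 99 are all assumptions made for the example.

```java
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.function.Function;

class ProcessorTableSketch {

    // Hypothetical stand-in for a Pair<T1, T2>: a processor plus the pool it runs on.
    static final class Pair<A, B> {
        final A object1;
        final B object2;

        Pair(A object1, B object2) {
            this.object1 = object1;
            this.object2 = object2;
        }
    }

    private final Map<Integer, Pair<Function<String, String>, ExecutorService>> table =
            new ConcurrentHashMap<>();
    private Pair<Function<String, String>, ExecutorService> defaultPair;

    void register(int code, Function<String, String> processor, ExecutorService executor) {
        table.put(code, new Pair<>(processor, executor));
    }

    void registerDefault(Function<String, String> processor, ExecutorService executor) {
        defaultPair = new Pair<>(processor, executor);
    }

    // Looks up the pair for the code, falls back to the default pair,
    // and submits the processing task to that pair's executor.
    Future<String> dispatch(int code, String body) {
        Pair<Function<String, String>, ExecutorService> matched = table.get(code);
        Pair<Function<String, String>, ExecutorService> pair =
                matched != null ? matched : defaultPair;
        if (pair == null) {
            return CompletableFuture.completedFuture("REQUEST_CODE_NOT_SUPPORTED");
        }
        Callable<String> task = () -> pair.object1.apply(body);
        return pair.object2.submit(task);
    }

    // Registers one specific processor and one default, then dispatches two codes.
    static String[] demo() {
        ExecutorService sendPool = Executors.newFixedThreadPool(2);
        ExecutorService defaultPool = Executors.newSingleThreadExecutor();
        ProcessorTableSketch server = new ProcessorTableSketch();
        server.register(10, body -> "send:" + body, sendPool);
        server.registerDefault(body -> "default:" + body, defaultPool);
        try {
            String known = server.dispatch(10, "hello").get(2, TimeUnit.SECONDS);
            String unknown = server.dispatch(99, "hello").get(2, TimeUnit.SECONDS);
            return new String[] {known, unknown};
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            sendPool.shutdown();
            defaultPool.shutdown();
        }
    }

    public static void main(String[] args) {
        String[] results = demo();
        System.out.println(results[0] + ", " + results[1]); // send:hello, default:hello
    }
}
```

A component that registers only a default pair, as the nameserver does with its single defaultProcessor, is simply the degenerate case of this table.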

// org.apache.rocketmq.remoting.netty.NettyRemotingAbstract#processRequestCommand

/**

* Process incoming request command issued by remote peer.

*

* @param ctx channel handler context.

* @param cmd request command.

*/

public void processRequestCommand(final ChannelHandlerContext ctx, final RemotingCommand cmd) {

// find the processor for this code; it is wrapped in a Pair<NettyRequestProcessor, ExecutorService>

final Pair<NettyRequestProcessor, ExecutorService> matched = this.processorTable.get(cmd.getCode());

final Pair<NettyRequestProcessor, ExecutorService> pair = null == matched ? this.defaultRequestProcessor : matched;

final int opaque = cmd.getOpaque();

if (pair != null) {

Runnable run = new Runnable() {

@Override

public void run() {

try {

doBeforeRpcHooks(RemotingHelper.parseChannelRemoteAddr(ctx.channel()), cmd);

final RemotingResponseCallback callback = new RemotingResponseCallback() {

@Override

public void callback(RemotingCommand response) {

doAfterRpcHooks(RemotingHelper.parseChannelRemoteAddr(ctx.channel()), cmd, response);

if (!cmd.isOnewayRPC()) {

if (response != null) {

response.setOpaque(opaque);

response.markResponseType();

try {

ctx.writeAndFlush(response);

} catch (Throwable e) {

log.error("process request over, but response failed", e);

log.error(cmd.toString());

log.error(response.toString());

}

} else {

}

}

}

};

if (pair.getObject1() instanceof AsyncNettyRequestProcessor) {

AsyncNettyRequestProcessor processor = (AsyncNettyRequestProcessor)pair.getObject1();

processor.asyncProcessRequest(ctx, cmd, callback);

} else {

NettyRequestProcessor processor = pair.getObject1();

RemotingCommand response = processor.processRequest(ctx, cmd);

callback.callback(response);

}

} catch (Throwable e) {

log.error("process request exception", e);

log.error(cmd.toString());

if (!cmd.isOnewayRPC()) {

final RemotingCommand response = RemotingCommand.createResponseCommand(RemotingSysResponseCode.SYSTEM_ERROR,

RemotingHelper.exceptionSimpleDesc(e));

response.setOpaque(opaque);

ctx.writeAndFlush(response);

}

}

}

};

if (pair.getObject1().rejectRequest()) {

final RemotingCommand response = RemotingCommand.createResponseCommand(RemotingSysResponseCode.SYSTEM_BUSY,

"[REJECTREQUEST]system busy, start flow control for a while");

response.setOpaque(opaque);

ctx.writeAndFlush(response);

return;

}

try {

final RequestTask requestTask = new RequestTask(run, ctx.channel(), cmd);

// object2 is the executor thread pool; simply submit() the task to it

pair.getObject2().submit(requestTask);

} catch (RejectedExecutionException e) {

if ((System.currentTimeMillis() % 10000) == 0) {

log.warn(RemotingHelper.parseChannelRemoteAddr(ctx.channel())

+ ", too many requests and system thread pool busy, RejectedExecutionException "

+ pair.getObject2().toString()

+ " request code: " + cmd.getCode());

}

if (!cmd.isOnewayRPC()) {

final RemotingCommand response = RemotingCommand.createResponseCommand(RemotingSysResponseCode.SYSTEM_BUSY,

"[OVERLOAD]system busy, start flow control for a while");

response.setOpaque(opaque);

ctx.writeAndFlush(response);

}

}

} else {

String error = " request type " + cmd.getCode() + " not supported";

final RemotingCommand response =

RemotingCommand.createResponseCommand(RemotingSysResponseCode.REQUEST_CODE_NOT_SUPPORTED, error);

response.setOpaque(opaque);

ctx.writeAndFlush(response);

log.error(RemotingHelper.parseChannelRemoteAddr(ctx.channel()) + error);

}

}

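So although the RocketMQ broker's thread model looks very complex, this unified framework keeps it from burdening the business code much. That is good design, and it is as it should be.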
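One detail of processRequestCommand worth isolating is how a full pool turns into a "system busy" reply instead of unbounded queuing. The sketch below reproduces just that mechanism with a JDK `ThreadPoolExecutor`. The class name, the one-thread/one-slot sizing, and the status strings are assumptions for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class BusyRejectionSketch {

    static void awaitQuietly(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Submits n blocking tasks to a pool with one thread and a one-slot queue
    // and returns the status the caller would see for each submission.
    static List<String> submitAll(int n) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0, TimeUnit.MILLISECONDS, new ArrayBlockingQueue<>(1));
        CountDownLatch gate = new CountDownLatch(1);
        List<String> statuses = new ArrayList<>();
        try {
            for (int i = 0; i < n; i++) {
                try {
                    pool.execute(() -> awaitQuietly(gate));
                    statuses.add("ACCEPTED");
                } catch (RejectedExecutionException e) {
                    // Thread busy and queue full: answer at once instead of waiting.
                    statuses.add("SYSTEM_BUSY");
                }
            }
        } finally {
            gate.countDown(); // let the blocked tasks finish
            pool.shutdown();
        }
        return statuses;
    }

    public static void main(String[] args) {
        System.out.println(submitAll(3)); // [ACCEPTED, ACCEPTED, SYSTEM_BUSY]
    }
}
```

The first task occupies the only thread, the second fills the queue, and the third is rejected by the default abort policy, which is the moment a server in this style would write back its busy response.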

5. The producer thread model

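As the producing client, the producer is mostly just a user of the system. It cannot and should not carry much complexity, so in theory its thread model should be very simple.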

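In fact, it does little more than use netty's features directly. One thing worth adding is that it distinguishes between synchronous and asynchronous sending. That difference, however, comes down to a callback method and does not affect the thread model.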
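A small sketch may help show why sync and async differ only in how the caller is notified. It keeps pending requests in a table keyed by a request id, much like the `responseTable` and `opaque` that appear in processResponseCommand later in this section, but everything else here (class names, the `Consumer<String>` callback, the string payloads) is an assumption for illustration.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Consumer;

class ResponseTableSketch {

    // One in-flight request; callback is null for a synchronous caller.
    static final class PendingRequest {
        final int opaque;
        final Consumer<String> callback;
        final CountDownLatch latch = new CountDownLatch(1);
        volatile String response;

        PendingRequest(int opaque, Consumer<String> callback) {
            this.opaque = opaque;
            this.callback = callback;
        }

        // Sync path: block until the response arrives or the timeout expires.
        String await(long timeoutMillis) throws InterruptedException {
            return latch.await(timeoutMillis, TimeUnit.MILLISECONDS) ? response : null;
        }
    }

    private final ConcurrentMap<Integer, PendingRequest> responseTable = new ConcurrentHashMap<>();
    private final AtomicInteger nextOpaque = new AtomicInteger();

    PendingRequest register(Consumer<String> callbackOrNull) {
        PendingRequest pending = new PendingRequest(nextOpaque.incrementAndGet(), callbackOrNull);
        responseTable.put(pending.opaque, pending);
        return pending;
    }

    // Called on the IO thread when a response with this opaque arrives.
    void onResponse(int opaque, String response) {
        PendingRequest pending = responseTable.remove(opaque);
        if (pending == null) {
            return; // no matching request, e.g. it already timed out
        }
        pending.response = response;
        if (pending.callback != null) {
            pending.callback.accept(response); // async: invoke the callback
        } else {
            pending.latch.countDown();         // sync: wake the waiting caller
        }
    }

    static String[] demo() {
        ResponseTableSketch client = new ResponseTableSketch();
        ExecutorService io = Executors.newSingleThreadExecutor(); // plays the IO thread
        try {
            PendingRequest sync = client.register(null);
            io.execute(() -> client.onResponse(sync.opaque, "sync-ok"));
            String syncResult = sync.await(2000);

            CompletableFuture<String> asyncResult = new CompletableFuture<>();
            PendingRequest async = client.register(asyncResult::complete);
            io.execute(() -> client.onResponse(async.opaque, "async-ok"));
            return new String[] {syncResult, asyncResult.get(2, TimeUnit.SECONDS)};
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            io.shutdown();
        }
    }

    public static void main(String[] args) {
        String[] results = demo();
        System.out.println(results[0] + ", " + results[1]); // sync-ok, async-ok
    }
}
```

Both paths share the same IO thread and the same table; only the last step of `onResponse` differs, so no extra threads are needed to support either mode.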

The client's use of netty looks like this:

@Override

public void start() {

this.defaultEventExecutorGroup = new DefaultEventExecutorGroup(

nettyClientConfig.getClientWorkerThreads(),

new ThreadFactory() {

private AtomicInteger threadIndex = new AtomicInteger(0);

@Override

public Thread newThread(Runnable r) {

return new Thread(r, "NettyClientWorkerThread_" + this.threadIndex.incrementAndGet());

}

});

Bootstrap handler = this.bootstrap.group(this.eventLoopGroupWorker).channel(NioSocketChannel.class)

.option(ChannelOption.TCP_NODELAY, true)

.option(ChannelOption.SO_KEEPALIVE, false)

.option(ChannelOption.CONNECT_TIMEOUT_MILLIS, nettyClientConfig.getConnectTimeoutMillis())

.option(ChannelOption.SO_SNDBUF, nettyClientConfig.getClientSocketSndBufSize())

.option(ChannelOption.SO_RCVBUF, nettyClientConfig.getClientSocketRcvBufSize())

.handler(new ChannelInitializer<SocketChannel>() {

@Override

public void initChannel(SocketChannel ch) throws Exception {

ChannelPipeline pipeline = ch.pipeline();

if (nettyClientConfig.isUseTLS()) {

if (null != sslContext) {

pipeline.addFirst(defaultEventExecutorGroup, "sslHandler", sslContext.newHandler(ch.alloc()));

log.info("Prepend SSL handler");

} else {

log.warn("Connections are insecure as SSLContext is null!");

}

}

// likewise, use the various codecs to handle the traffic

pipeline.addLast(

defaultEventExecutorGroup,

new NettyEncoder(),

new NettyDecoder(),

new IdleStateHandler(0, 0, nettyClientConfig.getClientChannelMaxIdleTimeSeconds()),

new NettyConnectManageHandler(),

// this handler mainly receives response messages from the server

new NettyClientHandler());

}

});

this.timer.scheduleAtFixedRate(new TimerTask() {

@Override

public void run() {

try {

NettyRemotingClient.this.scanResponseTable();

} catch (Throwable e) {

log.error("scanResponseTable exception", e);

}

}

}, 1000 * 3, 1000);

if (this.channelEventListener != null) {

this.nettyEventExecutor.start();

}

}

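// The above only initializes the netty environment; when it is actually used, a channel must be created for each remote address

// once created, the channel is put into channelTables so that it can be reused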

private Channel createChannel(final String addr) throws InterruptedException {

ChannelWrapper cw = this.channelTables.get(addr);

if (cw != null && cw.isOK()) {

return cw.getChannel();

}

if (this.lockChannelTables.tryLock(LOCK_TIMEOUT_MILLIS, TimeUnit.MILLISECONDS)) {

try {

boolean createNewConnection;

cw = this.channelTables.get(addr);

if (cw != null) {

if (cw.isOK()) {

return cw.getChannel();

} else if (!cw.getChannelFuture().isDone()) {

createNewConnection = false;

} else {

this.channelTables.remove(addr);

createNewConnection = true;

}

} else {

createNewConnection = true;

}

// call connect to create the TCP channel to the remote end

// and put it into channelTables

if (createNewConnection) {

ChannelFuture channelFuture = this.bootstrap.connect(RemotingHelper.string2SocketAddress(addr));

log.info("createChannel: begin to connect remote host[{}] asynchronously", addr);

cw = new ChannelWrapper(channelFuture);

this.channelTables.put(addr, cw);

}

} catch (Exception e) {

log.error("createChannel: create channel exception", e);

} finally {

this.lockChannelTables.unlock();

}

} else {

log.warn("createChannel: try to lock channel table, but timeout, {}ms", LOCK_TIMEOUT_MILLIS);

}

if (cw != null) {

// check that the connection is usable before returning it

ChannelFuture channelFuture = cw.getChannelFuture();

if (channelFuture.awaitUninterruptibly(this.nettyClientConfig.getConnectTimeoutMillis())) {

if (cw.isOK()) {

log.info("createChannel: connect remote host[{}] success, {}", addr, channelFuture.toString());

return cw.getChannel();

} else {

log.warn("createChannel: connect remote host[" + addr + "] failed, " + channelFuture.toString(), channelFuture.cause());

}

} else {

log.warn("createChannel: connect remote host[{}] timeout {}ms, {}", addr, this.nettyClientConfig.getConnectTimeoutMillis(),

channelFuture.toString());

}

}

return null;

}

// handling of responses sent back by the server

// org.apache.rocketmq.remoting.netty.NettyRemotingAbstract#processResponseCommand

/**

* Process response from remote peer to the previous issued requests.

*

* @param ctx channel handler context.

* @param cmd response command instance.

*/

public void processResponseCommand(ChannelHandlerContext ctx, RemotingCommand cmd) {

final int opaque = cmd.getOpaque();

// use opaque to look up the context saved when the request was sent

final ResponseFuture responseFuture = responseTable.get(opaque);

if (responseFuture != null) {

responseFuture.setResponseCommand(cmd);

responseTable.remove(opaque);

if (responseFuture.getInvokeCallback() != null) {

executeInvokeCallback(responseFuture);

} else {

responseFuture.putResponse(cmd);

responseFuture.release();

}

} else {

log.warn("receive response, but not matched any request, " + RemotingHelper.parseChannelRemoteAddr(ctx.channel()));

log.warn(cmd.toString());

}

}

The core message-sending code is as follows:

// org.apache.rocketmq.client.impl.producer.DefaultMQProducerImpl#sendDefaultImpl

private SendResult sendDefaultImpl(

Message msg,

final CommunicationMode communicationMode,

final SendCallback sendCallback,

final long timeout

) throws MQClientException, RemotingException, MQBrokerException, InterruptedException {

this.makeSureStateOK();

Validators.checkMessage(msg, this.defaultMQProducer);

final long invokeID = random.nextLong();

long beginTimestampFirst = System.currentTimeMillis();

long beginTimestampPrev = beginTimestampFirst;

long endTimestamp = beginTimestampFirst;

TopicPublishInfo topicPublishInfo = this.tryToFindTopicPublishInfo(msg.getTopic());

if (topicPublishInfo != null && topicPublishInfo.ok()) {

boolean callTimeout = false;

MessageQueue mq = null;

Exception exception = null;

SendResult sendResult = null;

int timesTotal = communicationMode == CommunicationMode.SYNC ? 1 + this.defaultMQProducer.getRetryTimesWhenSendFailed() : 1;

int times = 0;

String[] brokersSent = new String[timesTotal];

for (; times < timesTotal; times++) {

String lastBrokerName = null == mq ? null : mq.getBrokerName();

MessageQueue mqSelected = this.selectOneMessageQueue(topicPublishInfo, lastBrokerName);

if (mqSelected != null) {

mq = mqSelected;

brokersSent[times] = mq.getBrokerName();

try {

beginTimestampPrev = System.currentTimeMillis();

if (times > 0) {

//Reset topic with namespace during resend.

msg.setTopic(this.defaultMQProducer.withNamespace(msg.getTopic()));

}

long costTime = beginTimestampPrev - beginTimestampFirst;

if (timeout < costTime) {

callTimeout = true;

break;

}

sendResult = this.sendKernelImpl(msg, mq, communicationMode, sendCallback, topicPublishInfo, timeout - costTime);

endTimestamp = System.currentTimeMillis();

this.updateFaultItem(mq.getBrokerName(), endTimestamp - beginTimestampPrev, false);

switch (communicationMode) {

case ASYNC:

return null;

case ONEWAY:

return null;

case SYNC:

if (sendResult.getSendStatus() != SendStatus.SEND_OK) {

if (this.defaultMQProducer.isRetryAnotherBrokerWhenNotStoreOK()) {

continue;

}

}

return sendResult;

default:

break;

}

} catch (RemotingException e) {

endTimestamp = System.currentTimeMillis();

this.updateFaultItem(mq.getBrokerName(), endTimestamp - beginTimestampPrev, true);

log.warn(String.format("sendKernelImpl exception, resend at once, InvokeID: %s, RT: %sms, Broker: %s", invokeID, endTimestamp - beginTimestampPrev, mq), e);

log.warn(msg.toString());

exception = e;

continue;

} catch (MQClientException e) {

endTimestamp = System.currentTimeMillis();

this.updateFaultItem(mq.getBrokerName(), endTimestamp - beginTimestampPrev, true);

log.warn(String.format("sendKernelImpl exception, resend at once, InvokeID: %s, RT: %sms, Broker: %s", invokeID, endTimestamp - beginTimestampPrev, mq), e);

log.warn(msg.toString());

exception = e;

continue;

} catch (MQBrokerException e) {

endTimestamp = System.currentTimeMillis();

this.updateFaultItem(mq.getBrokerName(), endTimestamp - beginTimestampPrev, true);

log.warn(String.format("sendKernelImpl exception, resend at once, InvokeID: %s, RT: %sms, Broker: %s", invokeID, endTimestamp - beginTimestampPrev, mq), e);

log.warn(msg.toString());

exception = e;

switch (e.getResponseCode()) {

case ResponseCode.TOPIC_NOT_EXIST:

case ResponseCode.SERVICE_NOT_AVAILABLE:

case ResponseCode.SYSTEM_ERROR:

case ResponseCode.NO_PERMISSION:

case ResponseCode.NO_BUYER_ID:

case ResponseCode.NOT_IN_CURRENT_UNIT:

continue;

default:

if (sendResult != null) {

return sendResult;

}

throw e;

}

} catch (InterruptedException e) {

endTimestamp = System.currentTimeMillis();

this.updateFaultItem(mq.getBrokerName(), endTimestamp - beginTimestampPrev, false);

log.warn(String.format("sendKernelImpl exception, throw exception, InvokeID: %s, RT: %sms, Broker: %s", invokeID, endTimestamp - beginTimestampPrev, mq), e);

log.warn(msg.toString());

log.warn("sendKernelImpl exception", e);

log.warn(msg.toString());

throw e;

}

} else {

break;

}

}

if (sendResult != null) {

return sendResult;

}

String info = String.format("Send [%d] times, still failed, cost [%d]ms, Topic: %s, BrokersSent: %s",

times,

System.currentTimeMillis() - beginTimestampFirst,

msg.getTopic(),

Arrays.toString(brokersSent));

info += FAQUrl.suggestTodo(FAQUrl.SEND_MSG_FAILED);

MQClientException mqClientException = new MQClientException(info, exception);

if (callTimeout) {

throw new RemotingTooMuchRequestException("sendDefaultImpl call timeout");

}

if (exception instanceof MQBrokerException) {

mqClientException.setResponseCode(((MQBrokerException) exception).getResponseCode());

} else if (exception instanceof RemotingConnectException) {

mqClientException.setResponseCode(ClientErrorCode.CONNECT_BROKER_EXCEPTION);

} else if (exception instanceof RemotingTimeoutException) {

mqClientException.setResponseCode(ClientErrorCode.ACCESS_BROKER_TIMEOUT);

} else if (exception instanceof MQClientException) {

mqClientException.setResponseCode(ClientErrorCode.BROKER_NOT_EXIST_EXCEPTION);

}

throw mqClientException;

}

validateNameServerSetting();

throw new MQClientException("No route info of this topic: " + msg.getTopic() + FAQUrl.suggestTodo(FAQUrl.NO_TOPIC_ROUTE_INFO),

null).setResponseCode(ClientErrorCode.NOT_FOUND_TOPIC_EXCEPTION);

}

// org.apache.rocketmq.client.impl.producer.DefaultMQProducerImpl#sendKernelImpl

private SendResult sendKernelImpl(final Message msg,

final MessageQueue mq,

final CommunicationMode communicationMode,

final SendCallback sendCallback,

final TopicPublishInfo topicPublishInfo,

final long timeout) throws MQClientException, RemotingException, MQBrokerException, InterruptedException {

long beginStartTime = System.currentTimeMillis();

String brokerAddr = this.mQClientFactory.findBrokerAddressInPublish(mq.getBrokerName());

if (null == brokerAddr) {

tryToFindTopicPublishInfo(mq.getTopic());

brokerAddr = this.mQClientFactory.findBrokerAddressInPublish(mq.getBrokerName());

}

SendMessageContext context = null;

if (brokerAddr != null) {

brokerAddr = MixAll.brokerVIPChannel(this.defaultMQProducer.isSendMessageWithVIPChannel(), brokerAddr);

byte[] prevBody = msg.getBody();

try {

//for MessageBatch,ID has been set in the generating process

if (!(msg instanceof MessageBatch)) {

MessageClientIDSetter.setUniqID(msg);

}

boolean topicWithNamespace = false;

if (null != this.mQClientFactory.getClientConfig().getNamespace()) {

msg.setInstanceId(this.mQClientFactory.getClientConfig().getNamespace());

topicWithNamespace = true;

}

int sysFlag = 0;

boolean msgBodyCompressed = false;

if (this.tryToCompressMessage(msg)) {

sysFlag |= MessageSysFlag.COMPRESSED_FLAG;

msgBodyCompressed = true;

}

final String tranMsg = msg.getProperty(MessageConst.PROPERTY_TRANSACTION_PREPARED);

if (tranMsg != null && Boolean.parseBoolean(tranMsg)) {

sysFlag |= MessageSysFlag.TRANSACTION_PREPARED_TYPE;

}

if (hasCheckForbiddenHook()) {

CheckForbiddenContext checkForbiddenContext = new CheckForbiddenContext();

checkForbiddenContext.setNameSrvAddr(this.defaultMQProducer.getNamesrvAddr());

checkForbiddenContext.setGroup(this.defaultMQProducer.getProducerGroup());

checkForbiddenContext.setCommunicationMode(communicationMode);

checkForbiddenContext.setBrokerAddr(brokerAddr);

checkForbiddenContext.setMessage(msg);

checkForbiddenContext.setMq(mq);

checkForbiddenContext.setUnitMode(this.isUnitMode());

this.executeCheckForbiddenHook(checkForbiddenContext);

}

if (this.hasSendMessageHook()) {

context = new SendMessageContext();

context.setProducer(this);

context.setProducerGroup(this.defaultMQProducer.getProducerGroup());

context.setCommunicationMode(communicationMode);

context.setBornHost(this.defaultMQProducer.getClientIP());

context.setBrokerAddr(brokerAddr);

context.setMessage(msg);

context.setMq(mq);

context.setNamespace(this.defaultMQProducer.getNamespace());

String isTrans = msg.getProperty(MessageConst.PROPERTY_TRANSACTION_PREPARED);

if (isTrans != null && isTrans.equals("true")) {

context.setMsgType(MessageType.Trans_Msg_Half);

}

if (msg.getProperty("__STARTDELIVERTIME") != null || msg.getProperty(MessageConst.PROPERTY_DELAY_TIME_LEVEL) != null) {

context.setMsgType(MessageType.Delay_Msg);

}

this.executeSendMessageHookBefore(context);

}

SendMessageRequestHeader requestHeader = new SendMessageRequestHeader();

requestHeader.setProducerGroup(this.defaultMQProducer.getProducerGroup());

requestHeader.setTopic(msg.getTopic());

requestHeader.setDefaultTopic(this.defaultMQProducer.getCreateTopicKey());

requestHeader.setDefaultTopicQueueNums(this.defaultMQProducer.getDefaultTopicQueueNums());

requestHeader.setQueueId(mq.getQueueId());

requestHeader.setSysFlag(sysFlag);

requestHeader.setBornTimestamp(System.currentTimeMillis());

requestHeader.setFlag(msg.getFlag());

requestHeader.setProperties(MessageDecoder.messageProperties2String(msg.getProperties()));

requestHeader.setReconsumeTimes(0);

requestHeader.setUnitMode(this.isUnitMode());

requestHeader.setBatch(msg instanceof MessageBatch);

if (requestHeader.getTopic().startsWith(MixAll.RETRY_GROUP_TOPIC_PREFIX)) {

String reconsumeTimes = MessageAccessor.getReconsumeTime(msg);

if (reconsumeTimes != null) {

requestHeader.setReconsumeTimes(Integer.valueOf(reconsumeTimes));

MessageAccessor.clearProperty(msg, MessageConst.PROPERTY_RECONSUME_TIME);

}

String maxReconsumeTimes = MessageAccessor.getMaxReconsumeTimes(msg);

if (maxReconsumeTimes != null) {

requestHeader.setMaxReconsumeTimes(Integer.valueOf(maxReconsumeTimes));

MessageAccessor.clearProperty(msg, MessageConst.PROPERTY_MAX_RECONSUME_TIMES);

}

}

SendResult sendResult = null;

switch (communicationMode) {

case ASYNC:

Message tmpMessage = msg;

boolean messageCloned = false;

if (msgBodyCompressed) {

//If msg body was compressed, msgbody should be reset using prevBody.

//Clone new message using commpressed message body and recover origin massage.

//Fix bug:https://github.com/apache/rocketmq-externals/issues/66

tmpMessage = MessageAccessor.cloneMessage(msg);

messageCloned = true;

msg.setBody(prevBody);

}

if (topicWithNamespace) {

if (!messageCloned) {

tmpMessage = MessageAccessor.cloneMessage(msg);

messageCloned = true;

}

msg.setTopic(NamespaceUtil.withoutNamespace(msg.getTopic(), this.defaultMQProducer.getNamespace()));

}

long costTimeAsync = System.currentTimeMillis() - beginStartTime;

if (timeout < costTimeAsync) {

throw new RemotingTooMuchRequestException("sendKernelImpl call timeout");

}

sendResult = this.mQClientFactory.getMQClientAPIImpl().sendMessage(

brokerAddr,

mq.getBrokerName(),

tmpMessage,

requestHeader,

timeout - costTimeAsync,

communicationMode,

sendCallback,

topicPublishInfo,

this.mQClientFactory,

this.defaultMQProducer.getRetryTimesWhenSendAsyncFailed(),

context,

this);

break;

case ONEWAY:

case SYNC:

long costTimeSync = System.currentTimeMillis() - beginStartTime;

if (timeout < costTimeSync) {

throw new RemotingTooMuchRequestException("sendKernelImpl call timeout");

}

sendResult = this.mQClientFactory.getMQClientAPIImpl().sendMessage(

brokerAddr,

mq.getBrokerName(),

msg,

requestHeader,

timeout - costTimeSync,

communicationMode,

context,

this);

break;

default:

assert false;

break;

}

if (this.hasSendMessageHook()) {

context.setSendResult(sendResult);

this.executeSendMessageHookAfter(context);

}

return sendResult;

} catch (RemotingException e) {

if (this.hasSendMessageHook()) {

context.setException(e);

this.executeSendMessageHookAfter(context);

}

throw e;

} catch (MQBrokerException e) {

if (this.hasSendMessageHook()) {

context.setException(e);

this.executeSendMessageHookAfter(context);

}

throw e;

} catch (InterruptedException e) {

if (this.hasSendMessageHook()) {

context.setException(e);

this.executeSendMessageHookAfter(context);

}

throw e;

} finally {

msg.setBody(prevBody);

msg.setTopic(NamespaceUtil.withoutNamespace(msg.getTopic(), this.defaultMQProducer.getNamespace()));

}

}

throw new MQClientException("The broker[" + mq.getBrokerName() + "] not exist", null);

}

// org.apache.rocketmq.client.impl.MQClientAPIImpl#sendMessage

public SendResult sendMessage(

final String addr,

final String brokerName,

final Message msg,

final SendMessageRequestHeader requestHeader,

final long timeoutMillis,

final CommunicationMode communicationMode,

final SendMessageContext context,

final DefaultMQProducerImpl producer

) throws RemotingException, MQBrokerException, InterruptedException {

return sendMessage(addr, brokerName, msg, requestHeader, timeoutMillis, communicationMode, null, null, null, 0, context, producer);

}

public SendResult sendMessage(

final String addr,

final String brokerName,

final Message msg,

final SendMessageRequestHeader requestHeader,

final long timeoutMillis,

final CommunicationMode communicationMode,

final SendCallback sendCallback,

final TopicPublishInfo topicPublishInfo,

final MQClientInstance instance,

final int retryTimesWhenSendFailed,

final SendMessageContext context,

final DefaultMQProducerImpl producer

) throws RemotingException, MQBrokerException, InterruptedException {

long beginStartTime = System.currentTimeMillis();

RemotingCommand request = null;

String msgType = msg.getProperty(MessageConst.PROPERTY_MESSAGE_TYPE);

boolean isReply = msgType != null && msgType.equals(MixAll.REPLY_MESSAGE_FLAG);

if (isReply) {

if (sendSmartMsg) {

SendMessageRequestHeaderV2 requestHeaderV2 = SendMessageRequestHeaderV2.createSendMessageRequestHeaderV2(requestHeader);

request = RemotingCommand.createRequestCommand(RequestCode.SEND_REPLY_MESSAGE_V2, requestHeaderV2);

} else {

request = RemotingCommand.createRequestCommand(RequestCode.SEND_REPLY_MESSAGE, requestHeader);

}

} else {

if (sendSmartMsg || msg instanceof MessageBatch) {

SendMessageRequestHeaderV2 requestHeaderV2 = SendMessageRequestHeaderV2.createSendMessageRequestHeaderV2(requestHeader);

request = RemotingCommand.createRequestCommand(msg instanceof MessageBatch ? RequestCode.SEND_BATCH_MESSAGE : RequestCode.SEND_MESSAGE_V2, requestHeaderV2);

} else {

request = RemotingCommand.createRequestCommand(RequestCode.SEND_MESSAGE, requestHeader);

}

}

request.setBody(msg.getBody());

switch (communicationMode) {

case ONEWAY:

this.remotingClient.invokeOneway(addr, request, timeoutMillis);

return null;

case ASYNC:

final AtomicInteger times = new AtomicInteger();

long costTimeAsync = System.currentTimeMillis() - beginStartTime;

if (timeoutMillis < costTimeAsync) {

throw new RemotingTooMuchRequestException("sendMessage call timeout");

}

this.sendMessageAsync(addr, brokerName, msg, timeoutMillis - costTimeAsync, request, sendCallback, topicPublishInfo, instance,

retryTimesWhenSendFailed, times, context, producer);

return null;

case SYNC:

long costTimeSync = System.currentTimeMillis() - beginStartTime;

if (timeoutMillis < costTimeSync) {

throw new RemotingTooMuchRequestException("sendMessage call timeout");

}

return this.sendMessageSync(addr, brokerName, msg, timeoutMillis - costTimeSync, request);

default:

assert false;

break;

}

return null;

}
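The send path above keeps subtracting the time already spent from the caller's timeout (`timeout - costTimeSync`, `timeoutMillis - costTimeAsync`) before each downstream call. Below is a minimal sketch of that budgeting idea; `TimeoutBudget` and `remainingMillis` are hypothetical names used for illustration only, not RocketMQ API.

```java
// Hypothetical helper, not part of RocketMQ: tracks how much of the caller's
// timeout is left so that each nested call only receives the remainder.
final class TimeoutBudget {
    private final long startMillis;
    private final long timeoutMillis;

    TimeoutBudget(long startMillis, long timeoutMillis) {
        this.startMillis = startMillis;
        this.timeoutMillis = timeoutMillis;
    }

    // Returns the time left at nowMillis, or throws once the budget is spent,
    // mirroring the "timeout < costTime" checks in the listing.
    long remainingMillis(long nowMillis) {
        long cost = nowMillis - startMillis;
        if (timeoutMillis < cost) {
            throw new IllegalStateException("call timeout, cost " + cost + "ms");
        }
        return timeoutMillis - cost;
    }

    public static void main(String[] args) {
        long begin = System.currentTimeMillis();
        TimeoutBudget budget = new TimeoutBudget(begin, 3000);
        // Pretend 120 ms were spent on route lookup and hooks before the network call.
        System.out.println(budget.remainingMillis(begin + 120)); // prints 2880
    }
}
```

Passing the remainder down, rather than the original timeout, keeps the total wait bounded by what the caller asked for even when several layers each do some work first.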

The synchronous send is implemented as follows (the caller blocks and gets the write result directly):

// org.apache.rocketmq.client.impl.MQClientAPIImpl#sendMessageSync

private SendResult sendMessageSync(

final String addr,

final String brokerName,

final Message msg,

final long timeoutMillis,

final RemotingCommand request

) throws RemotingException, MQBrokerException, InterruptedException {

RemotingCommand response = this.remotingClient.invokeSync(addr, request, timeoutMillis);

assert response != null;

return this.processSendResponse(brokerName, msg, response);

}

// org.apache.rocketmq.remoting.netty.NettyRemotingClient#invokeSync

@Override

public RemotingCommand invokeSync(String addr, final RemotingCommand request, long timeoutMillis)

throws InterruptedException, RemotingConnectException, RemotingSendRequestException, RemotingTimeoutException {

long beginStartTime = System.currentTimeMillis();

final Channel channel = this.getAndCreateChannel(addr);

if (channel != null && channel.isActive()) {

try {

doBeforeRpcHooks(addr, request);

long costTime = System.currentTimeMillis() - beginStartTime;

if (timeoutMillis < costTime) {

throw new RemotingTimeoutException("invokeSync call timeout");

}

RemotingCommand response = this.invokeSyncImpl(channel, request, timeoutMillis - costTime);

doAfterRpcHooks(RemotingHelper.parseChannelRemoteAddr(channel), request, response);

return response;

} catch (RemotingSendRequestException e) {

log.warn("invokeSync: send request exception, so close the channel[{}]", addr);

this.closeChannel(addr, channel);

throw e;

} catch (RemotingTimeoutException e) {

if (nettyClientConfig.isClientCloseSocketIfTimeout()) {

this.closeChannel(addr, channel);

log.warn("invokeSync: close socket because of timeout, {}ms, {}", timeoutMillis, addr);

}

log.warn("invokeSync: wait response timeout exception, the channel[{}]", addr);

throw e;

}

} else {

this.closeChannel(addr, channel);

throw new RemotingConnectException(addr);

}

}

// org.apache.rocketmq.remoting.netty.NettyRemotingAbstract#invokeSyncImpl

public RemotingCommand invokeSyncImpl(final Channel channel, final RemotingCommand request,

final long timeoutMillis)

throws InterruptedException, RemotingSendRequestException, RemotingTimeoutException {

final int opaque = request.getOpaque();

try {

final ResponseFuture responseFuture = new ResponseFuture(channel, opaque, timeoutMillis, null, null);

this.responseTable.put(opaque, responseFuture);

final SocketAddress addr = channel.remoteAddress();

// Core: write the request to the channel, i.e. send it to the remote server

channel.writeAndFlush(request).addListener(new ChannelFutureListener() {

@Override

public void operationComplete(ChannelFuture f) throws Exception {

if (f.isSuccess()) {

responseFuture.setSendRequestOK(true);

return;

} else {

responseFuture.setSendRequestOK(false);

}

// On failure, clean up responseTable proactively

// putResponse() counts down the latch, which wakes the waiting caller

responseTable.remove(opaque);

responseFuture.setCause(f.cause());

responseFuture.putResponse(null);

log.warn("send a request command to channel <" + addr + "> failed.");

}

});

// Block until the response arrives or the timeout expires (implemented with a CountDownLatch)

RemotingCommand responseCommand = responseFuture.waitResponse(timeoutMillis);

if (null == responseCommand) {

if (responseFuture.isSendRequestOK()) {

throw new RemotingTimeoutException(RemotingHelper.parseSocketAddressAddr(addr), timeoutMillis,

responseFuture.getCause());

} else {

throw new RemotingSendRequestException(RemotingHelper.parseSocketAddressAddr(addr), responseFuture.getCause());

}

}

return responseCommand;

} finally {

this.responseTable.remove(opaque);

}

}
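The synchronous path boils down to three steps: register a future under the request id (`opaque`), write the request, then park the calling thread on a latch until the I/O side delivers the reply. The sketch below illustrates that pattern with plain JDK classes; `SyncCallSketch`, `PendingReply` and the echo "server" are made up for the example and are a simplification of `ResponseFuture` and `responseTable`, not a copy of them.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

// Illustrative stand-in for the ResponseFuture / responseTable pair.
final class SyncCallSketch {
    static final class PendingReply {
        private final CountDownLatch latch = new CountDownLatch(1);
        private volatile String reply;

        // Called by the I/O thread when the reply (or null on failure) arrives.
        void put(String value) {
            this.reply = value;
            latch.countDown();
        }

        // Called by the business thread; returns null if the wait times out.
        String await(long timeoutMillis) throws InterruptedException {
            latch.await(timeoutMillis, TimeUnit.MILLISECONDS);
            return reply;
        }
    }

    private final ConcurrentHashMap<Integer, PendingReply> table = new ConcurrentHashMap<>();

    // Registers the waiter, "sends" the request on another thread, then blocks.
    String call(int opaque, String request, long timeoutMillis) {
        PendingReply pending = new PendingReply();
        table.put(opaque, pending);
        try {
            // Assumption: a fake remote side that simply echoes the request back.
            new Thread(() -> onReply(opaque, "echo:" + request)).start();
            return pending.await(timeoutMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        } finally {
            table.remove(opaque); // always clean up, as invokeSyncImpl does
        }
    }

    // Reply path: find the waiter by request id and wake it up.
    void onReply(int opaque, String reply) {
        PendingReply pending = table.get(opaque);
        if (pending != null) {
            pending.put(reply);
        }
    }

    int pendingCount() {
        return table.size();
    }

    public static void main(String[] args) {
        SyncCallSketch client = new SyncCallSketch();
        System.out.println(client.call(1, "ping", 1000));
    }
}
```

The request id is what lets many business threads share one connection: each one waits on its own latch, and the single I/O thread routes replies by id.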

The core of the asynchronous send is as follows (the result arrives through an async callback, or is ignored):

// org.apache.rocketmq.client.impl.MQClientAPIImpl#sendMessageAsync

private void sendMessageAsync(

final String addr,

final String brokerName,

final Message msg,

final long timeoutMillis,

final RemotingCommand request,

final SendCallback sendCallback,

final TopicPublishInfo topicPublishInfo,

final MQClientInstance instance,

final int retryTimesWhenSendFailed,

final AtomicInteger times,

final SendMessageContext context,

final DefaultMQProducerImpl producer

) throws InterruptedException, RemotingException {

final long beginStartTime = System.currentTimeMillis();

this.remotingClient.invokeAsync(addr, request, timeoutMillis, new InvokeCallback() {

@Override

public void operationComplete(ResponseFuture responseFuture) {

long cost = System.currentTimeMillis() - beginStartTime;

RemotingCommand response = responseFuture.getResponseCommand();

if (null == sendCallback && response != null) {

try {

SendResult sendResult = MQClientAPIImpl.this.processSendResponse(brokerName, msg, response);

if (context != null && sendResult != null) {

context.setSendResult(sendResult);

context.getProducer().executeSendMessageHookAfter(context);

}

} catch (Throwable e) {

}

producer.updateFaultItem(brokerName, System.currentTimeMillis() - responseFuture.getBeginTimestamp(), false);

return;

}

if (response != null) {

try {

SendResult sendResult = MQClientAPIImpl.this.processSendResponse(brokerName, msg, response);

assert sendResult != null;

if (context != null) {

context.setSendResult(sendResult);

context.getProducer().executeSendMessageHookAfter(context);

}

try {

sendCallback.onSuccess(sendResult);

} catch (Throwable e) {

}

producer.updateFaultItem(brokerName, System.currentTimeMillis() - responseFuture.getBeginTimestamp(), false);

} catch (Exception e) {

producer.updateFaultItem(brokerName, System.currentTimeMillis() - responseFuture.getBeginTimestamp(), true);

onExceptionImpl(brokerName, msg, timeoutMillis - cost, request, sendCallback, topicPublishInfo, instance,

retryTimesWhenSendFailed, times, e, context, false, producer);

}

} else {

producer.updateFaultItem(brokerName, System.currentTimeMillis() - responseFuture.getBeginTimestamp(), true);

if (!responseFuture.isSendRequestOK()) {

MQClientException ex = new MQClientException("send request failed", responseFuture.getCause());

onExceptionImpl(brokerName, msg, timeoutMillis - cost, request, sendCallback, topicPublishInfo, instance,

retryTimesWhenSendFailed, times, ex, context, true, producer);

} else if (responseFuture.isTimeout()) {

MQClientException ex = new MQClientException("wait response timeout " + responseFuture.getTimeoutMillis() + "ms",

responseFuture.getCause());

onExceptionImpl(brokerName, msg, timeoutMillis - cost, request, sendCallback, topicPublishInfo, instance,

retryTimesWhenSendFailed, times, ex, context, true, producer);

} else {

MQClientException ex = new MQClientException("unknow reseaon", responseFuture.getCause());

onExceptionImpl(brokerName, msg, timeoutMillis - cost, request, sendCallback, topicPublishInfo, instance,

retryTimesWhenSendFailed, times, ex, context, true, producer);

}

}

}

});

}

// org.apache.rocketmq.remoting.netty.NettyRemotingClient#invokeAsync

@Override

public void invokeAsync(String addr, RemotingCommand request, long timeoutMillis, InvokeCallback invokeCallback)

throws InterruptedException, RemotingConnectException, RemotingTooMuchRequestException, RemotingTimeoutException,

RemotingSendRequestException {

long beginStartTime = System.currentTimeMillis();

final Channel channel = this.getAndCreateChannel(addr);

if (channel != null && channel.isActive()) {

try {

doBeforeRpcHooks(addr, request);

long costTime = System.currentTimeMillis() - beginStartTime;

if (timeoutMillis < costTime) {

throw new RemotingTooMuchRequestException("invokeAsync call timeout");

}

this.invokeAsyncImpl(channel, request, timeoutMillis - costTime, invokeCallback);

} catch (RemotingSendRequestException e) {

log.warn("invokeAsync: send request exception, so close the channel[{}]", addr);

this.closeChannel(addr, channel);

throw e;

}

} else {

this.closeChannel(addr, channel);

throw new RemotingConnectException(addr);

}

}

// org.apache.rocketmq.remoting.netty.NettyRemotingAbstract#invokeAsyncImpl

public void invokeAsyncImpl(final Channel channel, final RemotingCommand request, final long timeoutMillis,

final InvokeCallback invokeCallback)

throws InterruptedException, RemotingTooMuchRequestException, RemotingTimeoutException, RemotingSendRequestException {

long beginStartTime = System.currentTimeMillis();

final int opaque = request.getOpaque();

// Lets the user cap the number of in-flight async requests; the default of 65535 permits is effectively unlimited

boolean acquired = this.semaphoreAsync.tryAcquire(timeoutMillis, TimeUnit.MILLISECONDS);

if (acquired) {

final SemaphoreReleaseOnlyOnce once = new SemaphoreReleaseOnlyOnce(this.semaphoreAsync);

long costTime = System.currentTimeMillis() - beginStartTime;

if (timeoutMillis < costTime) {

once.release();

throw new RemotingTimeoutException("invokeAsyncImpl call timeout");

}

final ResponseFuture responseFuture = new ResponseFuture(channel, opaque, timeoutMillis - costTime, invokeCallback, once);

this.responseTable.put(opaque, responseFuture);

try {

// Return right after writing to the channel, which is what makes the call asynchronous

channel.writeAndFlush(request).addListener(new ChannelFutureListener() {

@Override

public void operationComplete(ChannelFuture f) throws Exception {

if (f.isSuccess()) {

responseFuture.setSendRequestOK(true);

return;

}

requestFail(opaque);

log.warn("send a request command to channel <{}> failed.", RemotingHelper.parseChannelRemoteAddr(channel));

}

});

} catch (Exception e) {

responseFuture.release();

log.warn("send a request command to channel <" + RemotingHelper.parseChannelRemoteAddr(channel) + "> Exception", e);

throw new RemotingSendRequestException(RemotingHelper.parseChannelRemoteAddr(channel), e);

}

} else {

if (timeoutMillis <= 0) {

throw new RemotingTooMuchRequestException("invokeAsyncImpl invoke too fast");

} else {

String info =

String.format("invokeAsyncImpl tryAcquire semaphore timeout, %dms, waiting thread nums: %d semaphoreAsyncValue: %d",

timeoutMillis,

this.semaphoreAsync.getQueueLength(),

this.semaphoreAsync.availablePermits()

);

log.warn(info);

throw new RemotingTimeoutException(info);

}

}

}
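Two details of `invokeAsyncImpl` are worth isolating: a semaphore bounds the number of in-flight async requests, and the permit is wrapped so that it can be released at most once even if several completion paths (reply, send failure, timeout scan) fire. Here is a minimal sketch of both, assuming nothing beyond the JDK; `AsyncLimiterSketch` and `ReleaseOnce` are illustrative names, with `ReleaseOnce` modeled on the idea behind `SemaphoreReleaseOnlyOnce`.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;

final class AsyncLimiterSketch {
    // Idempotent release: only the first call gives the permit back.
    static final class ReleaseOnce {
        private final AtomicBoolean released = new AtomicBoolean(false);
        private final Semaphore semaphore;

        ReleaseOnce(Semaphore semaphore) {
            this.semaphore = semaphore;
        }

        void release() {
            if (released.compareAndSet(false, true)) {
                semaphore.release();
            }
        }
    }

    private final Semaphore permits;

    AsyncLimiterSketch(int maxInFlight) {
        this.permits = new Semaphore(maxInFlight, true);
    }

    // Returns a handle to release when the request completes,
    // or null if no permit became free within waitMillis.
    ReleaseOnce tryBegin(long waitMillis) {
        try {
            if (permits.tryAcquire(waitMillis, TimeUnit.MILLISECONDS)) {
                return new ReleaseOnce(permits);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return null;
    }

    int available() {
        return permits.availablePermits();
    }

    public static void main(String[] args) {
        AsyncLimiterSketch limiter = new AsyncLimiterSketch(1);
        ReleaseOnce first = limiter.tryBegin(10);
        System.out.println(limiter.tryBegin(10) == null); // prints true: the only permit is taken
        first.release();
        first.release(); // second release is a no-op
        System.out.println(limiter.available()); // prints 1
    }
}
```

Without the once-only guard, a double release would silently raise the limit above its configured value.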

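The producer's thread model is in itself very simple; along the way we have merely taken the chance to see how the producer implements synchronous and asynchronous sends.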

6. The consumer's thread model

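The consumer and the producer are both clients, so in theory their thread models should be equally simple and largely the same. Their jobs differ, however, and so do their characteristics. Consider one point: when a producer sends a message, it retries across brokers, so the message may land on any one of them. What, then, must the consumer do when it consumes? Two things have to be guaranteed: the safety and the completeness of consumption, in other words the delivery semantics At Least Once / At Most Once / Exactly Once. In addition, rocketmq's consumption model offers both a so-called push model and a pull model, which brings back the question of synchronous versus asynchronous handling. (In fact, both are just the pull model in different disguises.)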
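To make the "push is pull in disguise" remark concrete, here is a deliberately tiny sketch: a queue of pull requests is drained in a loop, each pull fetches a batch, hands the messages to the user's listener, and puts the next pull request back on the queue. All names (`PushOverPullSketch`, `pullOnce`, the in-memory "broker" list) are assumptions made for illustration; the real client adds long polling, flow control and a separate consume thread pool.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Consumer;

final class PushOverPullSketch {
    private final List<String> broker;        // stand-in for a broker-side queue
    private final Consumer<String> listener;  // the user's "push" callback
    private final BlockingQueue<Long> pullRequests = new LinkedBlockingQueue<>();

    PushOverPullSketch(List<String> broker, Consumer<String> listener) {
        this.broker = broker;
        this.listener = listener;
        pullRequests.add(0L); // the first pull starts at offset 0
    }

    // One loop iteration: take a pull request, fetch up to batchSize messages,
    // invoke the listener, and re-enqueue a request for the next offset.
    // Returns false when the pull found nothing new.
    boolean pullOnce(int batchSize) {
        Long offset = pullRequests.poll();
        if (offset == null) {
            return false;
        }
        int from = offset.intValue();
        int to = Math.min(from + batchSize, broker.size());
        for (int i = from; i < to; i++) {
            listener.accept(broker.get(i));
        }
        pullRequests.add((long) to);
        return to > from;
    }

    public static void main(String[] args) {
        PushOverPullSketch consumer = new PushOverPullSketch(
            Arrays.asList("m1", "m2", "m3"), m -> System.out.println("consumed " + m));
        while (consumer.pullOnce(2)) {
            // keep pulling until a pull returns nothing
        }
    }
}
```

From the listener's point of view messages simply arrive, which is why the API feels like push even though every message was fetched by a pull.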

Let's take a brief look at the basic subscribe-and-consume flow:

// 1. Subscription configuration

// org.apache.rocketmq.client.impl.consumer.DefaultMQPushConsumerImpl#subscribe(java.lang.String, java.lang.String)

public void subscribe(String topic, String subExpression) throws MQClientException {

try {

SubscriptionData subscriptionData = FilterAPI.buildSubscriptionData(this.defaultMQPushConsumer.getConsumerGroup(),

topic, subExpression);

this.rebalanceImpl.getSubscriptionInner().put(topic, subscriptionData);

if (this.mQClientFactory != null) {

this.mQClientFactory.sendHeartbeatToAllBrokerWithLock();

}

} catch (Exception e) {

throw new MQClientException("subscription exception", e);

}

}

// 2. Start the consumer threads

// org.apache.rocketmq.client.consumer.DefaultMQPushConsumer#start

/**

* This method gets internal infrastructure readily to serve. Instances must call this method after configuration.

*

* @throws MQClientException if there is any client error.

*/

@Override

public void start() throws MQClientException {

setConsumerGroup(NamespaceUtil.wrapNamespace(this.getNamespace(), this.consumerGroup));

this.defaultMQPushConsumerImpl.start();

if (null != traceDispatcher) {

try {

traceDispatcher.start(this.getNamesrvAddr(), this.getAccessChannel());

} catch (MQClientException e) {

log.warn("trace dispatcher start failed ", e);

}

}

}

// org.apache.rocketmq.client.impl.consumer.DefaultMQPushConsumerImpl#start

public synchronized void start() throws MQClientException {

switch (this.serviceState) {

// This is the initial state

case CREATE_JUST:

log.info("the consumer [{}] start beginning. messageModel={}, isUnitMode={}", this.defaultMQPushConsumer.getConsumerGroup(),

this.defaultMQPushConsumer.getMessageModel(), this.defaultMQPushConsumer.isUnitMode());

this.serviceState = ServiceState.START_FAILED;

this.checkConfig();

this.copySubscription();

if (this.defaultMQPushConsumer.getMessageModel() == MessageModel.CLUSTERING) {

this.defaultMQPushConsumer.changeInstanceNameToPID();

}

this.mQClientFactory = MQClientManager.getInstance().getOrCreateMQClientInstance(this.defaultMQPushConsumer, this.rpcHook);

this.rebalanceImpl.setConsumerGroup(this.defaultMQPushConsumer.getConsumerGroup());

this.rebalanceImpl.setMessageModel(this.defaultMQPushConsumer.getMessageModel());

this.rebalanceImpl.setAllocateMessageQueueStrategy(this.defaultMQPushConsumer.getAllocateMessageQueueStrategy());

this.rebalanceImpl.setmQClientFactory(this.mQClientFactory);

this.pullAPIWrapper = new PullAPIWrapper(

mQClientFactory,

this.defaultMQPushConsumer.getConsumerGroup(), isUnitMode());

this.pullAPIWrapper.registerFilterMessageHook(filterMessageHookList);

if (this.defaultMQPushConsumer.getOffsetStore() != null) {

this.offsetStore = this.defaultMQPushConsumer.getOffsetStore();

} else {

switch (this.defaultMQPushConsumer.getMessageModel()) {

case BROADCASTING:

this.offsetStore = new LocalFileOffsetStore(this.mQClientFactory, this.defaultMQPushConsumer.getConsumerGroup());

break;

case CLUSTERING:

this.offsetStore = new RemoteBrokerOffsetStore(this.mQClientFactory, this.defaultMQPushConsumer.getConsumerGroup());

break;

default:

break;

}

this.defaultMQPushConsumer.setOffsetStore(this.offsetStore);

}

this.offsetStore.load();

if (this.getMessageListenerInner() instanceof MessageListenerOrderly) {

this.consumeOrderly = true;

this.consumeMessageService =

new ConsumeMessageOrderlyService(this, (MessageListenerOrderly) this.getMessageListenerInner());

} else if (this.getMessageListenerInner() instanceof MessageListenerConcurrently) {

this.consumeOrderly = false;

this.consumeMessageService =

new ConsumeMessageConcurrentlyService(this, (MessageListenerConcurrently) this.getMessageListenerInner());

}

// Start the background consume service

this.consumeMessageService.start();

boolean registerOK = mQClientFactory.registerConsumer(this.defaultMQPushConsumer.getConsumerGroup(), this);

if (!registerOK) {

this.serviceState = ServiceState.CREATE_JUST;

this.consumeMessageService.shutdown(defaultMQPushConsumer.getAwaitTerminationMillisWhenShutdown());

throw new MQClientException("The consumer group[" + this.defaultMQPushConsumer.getConsumerGroup()

+ "] has been created before, specify another name please." + FAQUrl.suggestTodo(FAQUrl.GROUP_NAME_DUPLICATE_URL),

null);

}

// Start the client instance, which starts the pull service, the rebalance service and the scheduled tasks

mQClientFactory.start();

log.info("the consumer [{}] start OK.", this.defaultMQPushConsumer.getConsumerGroup());

this.serviceState = ServiceState.RUNNING;

break;

case RUNNING:

case START_FAILED:

case SHUTDOWN_ALREADY:

throw new MQClientException("The PushConsumer service state not OK, maybe started once, "

+ this.serviceState

+ FAQUrl.suggestTodo(FAQUrl.CLIENT_SERVICE_NOT_OK),

null);

default:

break;

}

this.updateTopicSubscribeInfoWhenSubscriptionChanged();

this.mQClientFactory.checkClientInBroker();

this.mQClientFactory.sendHeartbeatToAllBrokerWithLock();

this.mQClientFactory.rebalanceImmediately();

}

// org.apache.rocketmq.client.impl.consumer.ConsumeMessageConcurrentlyService#ConsumeMessageConcurrentlyService

public ConsumeMessageConcurrentlyService(DefaultMQPushConsumerImpl defaultMQPushConsumerImpl,

MessageListenerConcurrently messageListener) {

this.defaultMQPushConsumerImpl = defaultMQPushConsumerImpl;

this.messageListener = messageListener;

this.defaultMQPushConsumer = this.defaultMQPushConsumerImpl.getDefaultMQPushConsumer();

this.consumerGroup = this.defaultMQPushConsumer.getConsumerGroup();

this.consumeRequestQueue = new LinkedBlockingQueue<Runnable>();

this.consumeExecutor = new ThreadPoolExecutor(

this.defaultMQPushConsumer.getConsumeThreadMin(),

this.defaultMQPushConsumer.getConsumeThreadMax(),

1000 * 60,

TimeUnit.MILLISECONDS,

this.consumeRequestQueue,

new ThreadFactoryImpl("ConsumeMessageThread_"));

this.scheduledExecutorService = Executors.newSingleThreadScheduledExecutor(new ThreadFactoryImpl("ConsumeMessageScheduledThread_"));

this.cleanExpireMsgExecutors = Executors.newSingleThreadScheduledExecutor(new ThreadFactoryImpl("CleanExpireMsgScheduledThread_"));

}

// org.apache.rocketmq.client.impl.consumer.ConsumeMessageConcurrentlyService#start

public void start() {

this.cleanExpireMsgExecutors.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

cleanExpireMsg();

}

}, this.defaultMQPushConsumer.getConsumeTimeout(), this.defaultMQPushConsumer.getConsumeTimeout(), TimeUnit.MINUTES);

}

// org.apache.rocketmq.client.impl.factory.MQClientInstance#start

public void start() throws MQClientException {

synchronized (this) {

switch (this.serviceState) {

case CREATE_JUST:

this.serviceState = ServiceState.START_FAILED;

// If not specified,looking address from name server

if (null == this.clientConfig.getNamesrvAddr()) {

this.mQClientAPIImpl.fetchNameServerAddr();

}

// Start request-response channel

this.mQClientAPIImpl.start();

// Start various schedule tasks

this.startScheduledTask();

// Start pull service

this.pullMessageService.start();

// Start rebalance service

this.rebalanceService.start();

// Start push service

this.defaultMQProducer.getDefaultMQProducerImpl().start(false);

log.info("the client factory [{}] start OK", this.clientId);

this.serviceState = ServiceState.RUNNING;

break;

case START_FAILED:

throw new MQClientException("The Factory object[" + this.getClientId() + "] has been created before, and failed.", null);

default:

break;

}

}

}
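One consequence of the `ConsumeMessageConcurrentlyService` constructor shown above is easy to miss: the consume executor is backed by an unbounded `LinkedBlockingQueue`, and a JDK `ThreadPoolExecutor` only grows beyond its core size when the queue rejects a task. With such a queue the pool therefore stays at the core size (`consumeThreadMin` here), and the maximum has no practical effect. The sketch below demonstrates this JDK behavior in isolation; `ConsumePoolSketch` and the sizes 2/8/20 are arbitrary values chosen for the example.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

final class ConsumePoolSketch {
    // Submits `tasks` blocking tasks to a (core, max) pool with an unbounded
    // queue and reports the largest number of threads the pool ever created.
    static int largestPoolSize(int core, int max, int tasks) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
            core, max, 60_000, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<Runnable>());
        CountDownLatch gate = new CountDownLatch(1);
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> {
                try {
                    gate.await(); // keep every worker busy until released
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        int largest = pool.getLargestPoolSize();
        gate.countDown();
        pool.shutdown();
        return largest;
    }

    public static void main(String[] args) {
        // 20 tasks, core 2, max 8: the extra tasks queue up instead of adding threads.
        System.out.println(largestPoolSize(2, 8, 20)); // prints 2
    }
}
```

When sizing consumer concurrency, this suggests treating the minimum thread count as the setting that actually governs parallelism for this pool.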

The background threads run as follows:

// org.apache.rocketmq.client.impl.consumer.PullMessageService#run

@Override

public void run() {

log.info(this.getServiceName() + " service started");

while (!this.isStopped()) {

try {

PullRequest pullRequest = this.pullRequestQueue.take();

this.pullMessage(pullRequest);

} catch (InterruptedException ignored) {

} catch (Exception e) {

log.error("Pull Message Service Run Method exception", e);

}

}

log.info(this.getServiceName() + " service end");

}

// org.apache.rocketmq.client.impl.consumer.DefaultMQPushConsumerImpl#executePullRequestImmediately

public void executePullRequestImmediately(final PullRequest pullRequest) {

this.mQClientFactory.getPullMessageService().executePullRequestImmediately(pullRequest);

}

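// org.apache.rocketmq.client.impl.consumer.DefaultMQPushConsumerImpl#pullMessage

// Pull the messages of the given MessageQueue from the server (broker)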

public void pullMessage(final PullRequest pullRequest) {

final ProcessQueue processQueue = pullRequest.getProcessQueue();

if (processQueue.isDropped()) {

log.info("the pull request[{}] is dropped.", pullRequest.toString());

return;

}

pullRequest.getProcessQueue().setLastPullTimestamp(System.currentTimeMillis());

try {

this.makeSureStateOK();

} catch (MQClientException e) {

log.warn("pullMessage exception, consumer state not ok", e);

this.executePullRequestLater(pullRequest, pullTimeDelayMillsWhenException);

return;

}

if (this.isPause()) {

log.warn("consumer was paused, execute pull request later. instanceName={}, group={}", this.defaultMQPushConsumer.getInstanceName(), this.defaultMQPushConsumer.getConsumerGroup());

this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_SUSPEND);

return;

}

long cachedMessageCount = processQueue.getMsgCount().get();

long cachedMessageSizeInMiB = processQueue.getMsgSize().get() / (1024 * 1024);

if (cachedMessageCount > this.defaultMQPushConsumer.getPullThresholdForQueue()) {

this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_FLOW_CONTROL);

if ((queueFlowControlTimes++ % 1000) == 0) {

log.warn(

"the cached message count exceeds the threshold {}, so do flow control, minOffset={}, maxOffset={}, count={}, size={} MiB, pullRequest={}, flowControlTimes={}",

this.defaultMQPushConsumer.getPullThresholdForQueue(), processQueue.getMsgTreeMap().firstKey(), processQueue.getMsgTreeMap().lastKey(), cachedMessageCount, cachedMessageSizeInMiB, pullRequest, queueFlowControlTimes);

}

return;

}

if (cachedMessageSizeInMiB > this.defaultMQPushConsumer.getPullThresholdSizeForQueue()) {

this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_FLOW_CONTROL);

if ((queueFlowControlTimes++ % 1000) == 0) {

log.warn(

"the cached message size exceeds the threshold {} MiB, so do flow control, minOffset={}, maxOffset={}, count={}, size={} MiB, pullRequest={}, flowControlTimes={}",

this.defaultMQPushConsumer.getPullThresholdSizeForQueue(), processQueue.getMsgTreeMap().firstKey(), processQueue.getMsgTreeMap().lastKey(), cachedMessageCount, cachedMessageSizeInMiB, pullRequest, queueFlowControlTimes);

}

return;

}

if (!this.consumeOrderly) {

if (processQueue.getMaxSpan() > this.defaultMQPushConsumer.getConsumeConcurrentlyMaxSpan()) {

this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_FLOW_CONTROL);

if ((queueMaxSpanFlowControlTimes++ % 1000) == 0) {

log.warn(

"the queue's messages, span too long, so do flow control, minOffset={}, maxOffset={}, maxSpan={}, pullRequest={}, flowControlTimes={}",

processQueue.getMsgTreeMap().firstKey(), processQueue.getMsgTreeMap().lastKey(), processQueue.getMaxSpan(),

pullRequest, queueMaxSpanFlowControlTimes);

}

return;

}

} else {

if (processQueue.isLocked()) {

if (!pullRequest.isLockedFirst()) {

final long offset = this.rebalanceImpl.computePullFromWhere(pullRequest.getMessageQueue());

boolean brokerBusy = offset < pullRequest.getNextOffset();

log.info("the first time to pull message, so fix offset from broker. pullRequest: {} NewOffset: {} brokerBusy: {}",

pullRequest, offset, brokerBusy);

if (brokerBusy) {

log.info("[NOTIFYME]the first time to pull message, but pull request offset larger than broker consume offset. pullRequest: {} NewOffset: {}",

pullRequest, offset);

}

pullRequest.setLockedFirst(true);

pullRequest.setNextOffset(offset);

}

} else {

this.executePullRequestLater(pullRequest, pullTimeDelayMillsWhenException);

log.info("pull message later because not locked in broker, {}", pullRequest);

return;

}

}

final SubscriptionData subscriptionData = this.rebalanceImpl.getSubscriptionInner().get(pullRequest.getMessageQueue().getTopic());

if (null == subscriptionData) {

this.executePullRequestLater(pullRequest, pullTimeDelayMillsWhenException);

log.warn("find the consumer's subscription failed, {}", pullRequest);

return;

}

final long beginTimestamp = System.currentTimeMillis();

PullCallback pullCallback = new PullCallback() {

@Override

public void onSuccess(PullResult pullResult) {

if (pullResult != null) {

pullResult = DefaultMQPushConsumerImpl.this.pullAPIWrapper.processPullResult(pullRequest.getMessageQueue(), pullResult,

subscriptionData);

switch (pullResult.getPullStatus()) {

case FOUND:

long prevRequestOffset = pullRequest.getNextOffset();

pullRequest.setNextOffset(pullResult.getNextBeginOffset());

long pullRT = System.currentTimeMillis() - beginTimestamp;

DefaultMQPushConsumerImpl.this.getConsumerStatsManager().incPullRT(pullRequest.getConsumerGroup(),

pullRequest.getMessageQueue().getTopic(), pullRT);

long firstMsgOffset = Long.MAX_VALUE;

if (pullResult.getMsgFoundList() == null || pullResult.getMsgFoundList().isEmpty()) {

DefaultMQPushConsumerImpl.this.executePullRequestImmediately(pullRequest);

} else {

firstMsgOffset = pullResult.getMsgFoundList().get(0).getQueueOffset();

DefaultMQPushConsumerImpl.this.getConsumerStatsManager().incPullTPS(pullRequest.getConsumerGroup(),

pullRequest.getMessageQueue().getTopic(), pullResult.getMsgFoundList().size());

boolean dispatchToConsume = processQueue.putMessage(pullResult.getMsgFoundList());

DefaultMQPushConsumerImpl.this.consumeMessageService.submitConsumeRequest(

pullResult.getMsgFoundList(),

processQueue,

pullRequest.getMessageQueue(),

dispatchToConsume);

if (DefaultMQPushConsumerImpl.this.defaultMQPushConsumer.getPullInterval() > 0) {

DefaultMQPushConsumerImpl.this.executePullRequestLater(pullRequest,

DefaultMQPushConsumerImpl.this.defaultMQPushConsumer.getPullInterval());

} else {

DefaultMQPushConsumerImpl.this.executePullRequestImmediately(pullRequest);

}

}

if (pullResult.getNextBeginOffset() < prevRequestOffset

|| firstMsgOffset < prevRequestOffset) {

log.warn(

"[BUG] pull message result maybe data wrong, nextBeginOffset: {} firstMsgOffset: {} prevRequestOffset: {}",

pullResult.getNextBeginOffset(),

firstMsgOffset,

prevRequestOffset);

}

break;

case NO_NEW_MSG:

pullRequest.setNextOffset(pullResult.getNextBeginOffset());

DefaultMQPushConsumerImpl.this.correctTagsOffset(pullRequest);

DefaultMQPushConsumerImpl.this.executePullRequestImmediately(pullRequest);

break;

case NO_MATCHED_MSG:

pullRequest.setNextOffset(pullResult.getNextBeginOffset());

DefaultMQPushConsumerImpl.this.correctTagsOffset(pullRequest);

DefaultMQPushConsumerImpl.this.executePullRequestImmediately(pullRequest);

break;

case OFFSET_ILLEGAL:

log.warn("the pull request offset illegal, {} {}",

pullRequest.toString(), pullResult.toString());

pullRequest.setNextOffset(pullResult.getNextBeginOffset());

pullRequest.getProcessQueue().setDropped(true);

DefaultMQPushConsumerImpl.this.executeTaskLater(new Runnable() {

@Override

public void run() {

try {

DefaultMQPushConsumerImpl.this.offsetStore.updateOffset(pullRequest.getMessageQueue(),

pullRequest.getNextOffset(), false);

DefaultMQPushConsumerImpl.this.offsetStore.persist(pullRequest.getMessageQueue());

DefaultMQPushConsumerImpl.this.rebalanceImpl.removeProcessQueue(pullRequest.getMessageQueue());

log.warn("fix the pull request offset, {}", pullRequest);

} catch (Throwable e) {

log.error("executeTaskLater Exception", e);

}

}

}, 10000);

break;

default:

break;

}

}

}

@Override

public void onException(Throwable e) {

if (!pullRequest.getMessageQueue().getTopic().startsWith(MixAll.RETRY_GROUP_TOPIC_PREFIX)) {

log.warn("execute the pull request exception", e);

}

DefaultMQPushConsumerImpl.this.executePullRequestLater(pullRequest, pullTimeDelayMillsWhenException);

}

};

boolean commitOffsetEnable = false;

long commitOffsetValue = 0L;

if (MessageModel.CLUSTERING == this.defaultMQPushConsumer.getMessageModel()) {

commitOffsetValue = this.offsetStore.readOffset(pullRequest.getMessageQueue(), ReadOffsetType.READ_FROM_MEMORY);

if (commitOffsetValue > 0) {

commitOffsetEnable = true;

}

}

String subExpression = null;

boolean classFilter = false;

SubscriptionData sd = this.rebalanceImpl.getSubscriptionInner().get(pullRequest.getMessageQueue().getTopic());

if (sd != null) {

if (this.defaultMQPushConsumer.isPostSubscriptionWhenPull() && !sd.isClassFilterMode()) {

subExpression = sd.getSubString();

}

classFilter = sd.isClassFilterMode();

}

int sysFlag = PullSysFlag.buildSysFlag(

commitOffsetEnable, // commitOffset

true, // suspend

subExpression != null, // subscription

classFilter // class filter

);

try {

this.pullAPIWrapper.pullKernelImpl(

pullRequest.getMessageQueue(),

subExpression,

subscriptionData.getExpressionType(),

subscriptionData.getSubVersion(),

pullRequest.getNextOffset(),

this.defaultMQPushConsumer.getPullBatchSize(),

sysFlag,

commitOffsetValue,

BROKER_SUSPEND_MAX_TIME_MILLIS,

CONSUMER_TIMEOUT_MILLIS_WHEN_SUSPEND,

CommunicationMode.ASYNC,

pullCallback

);

} catch (Exception e) {

log.error("pullKernelImpl exception", e);

this.executePullRequestLater(pullRequest, pullTimeDelayMillsWhenException);

}

}

// org.apache.rocketmq.client.impl.consumer.PullAPIWrapper#pullKernelImpl

public PullResult pullKernelImpl(

final MessageQueue mq,

final String subExpression,

final String expressionType,

final long subVersion,

final long offset,

final int maxNums,

final int sysFlag,

final long commitOffset,

final long brokerSuspendMaxTimeMillis,

final long timeoutMillis,

final CommunicationMode communicationMode,

final PullCallback pullCallback

) throws MQClientException, RemotingException, MQBrokerException, InterruptedException {

FindBrokerResult findBrokerResult =

this.mQClientFactory.findBrokerAddressInSubscribe(mq.getBrokerName(),

this.recalculatePullFromWhichNode(mq), false);

if (null == findBrokerResult) {

this.mQClientFactory.updateTopicRouteInfoFromNameServer(mq.getTopic());

findBrokerResult =

this.mQClientFactory.findBrokerAddressInSubscribe(mq.getBrokerName(),

this.recalculatePullFromWhichNode(mq), false);

}

if (findBrokerResult != null) {

{

// check version

if (!ExpressionType.isTagType(expressionType)

&& findBrokerResult.getBrokerVersion() < MQVersion.Version.V4_1_0_SNAPSHOT.ordinal()) {

throw new MQClientException("The broker[" + mq.getBrokerName() + ", "

+ findBrokerResult.getBrokerVersion() + "] does not upgrade to support for filter message by " + expressionType, null);

}

}

int sysFlagInner = sysFlag;

if (findBrokerResult.isSlave()) {

sysFlagInner = PullSysFlag.clearCommitOffsetFlag(sysFlagInner);

}

PullMessageRequestHeader requestHeader = new PullMessageRequestHeader();

requestHeader.setConsumerGroup(this.consumerGroup);

requestHeader.setTopic(mq.getTopic());

requestHeader.setQueueId(mq.getQueueId());

requestHeader.setQueueOffset(offset);

requestHeader.setMaxMsgNums(maxNums);

requestHeader.setSysFlag(sysFlagInner);

requestHeader.setCommitOffset(commitOffset);

requestHeader.setSuspendTimeoutMillis(brokerSuspendMaxTimeMillis);

requestHeader.setSubscription(subExpression);

requestHeader.setSubVersion(subVersion);

requestHeader.setExpressionType(expressionType);

String brokerAddr = findBrokerResult.getBrokerAddr();

if (PullSysFlag.hasClassFilterFlag(sysFlagInner)) {

brokerAddr = computPullFromWhichFilterServer(mq.getTopic(), brokerAddr);

}

PullResult pullResult = this.mQClientFactory.getMQClientAPIImpl().pullMessage(

brokerAddr,

requestHeader,

timeoutMillis,

communicationMode,

pullCallback);

return pullResult;

}

throw new MQClientException("The broker[" + mq.getBrokerName() + "] not exist", null);

}
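The listing above packs four booleans into one `sysFlag` integer, clears one of them when the request goes to a slave, and later tests another. The following is a minimal sketch of that bit-flag technique. The class `PullFlagSketch`, its method names, and the bit positions are assumptions chosen for illustration, not a copy of RocketMQ's `PullSysFlag`.

```java
/** Illustrative bit-flag helper (hypothetical; bit positions are assumed). */
final class PullFlagSketch {
    static final int COMMIT_OFFSET = 1;       // bit 0
    static final int SUSPEND       = 1 << 1;  // bit 1
    static final int SUBSCRIPTION  = 1 << 2;  // bit 2
    static final int CLASS_FILTER  = 1 << 3;  // bit 3

    /** Packs the four switches into a single int. */
    static int build(boolean commitOffset, boolean suspend,
                     boolean subscription, boolean classFilter) {
        int flag = 0;
        if (commitOffset) flag |= COMMIT_OFFSET;
        if (suspend)      flag |= SUSPEND;
        if (subscription) flag |= SUBSCRIPTION;
        if (classFilter)  flag |= CLASS_FILTER;
        return flag;
    }

    /** Clears only the commit-offset bit and leaves the others untouched. */
    static int clearCommitOffset(int flag) {
        return flag & ~COMMIT_OFFSET;
    }

    /** Tests whether the given bit is set. */
    static boolean has(int flag, int bit) {
        return (flag & bit) == bit;
    }

    public static void main(String[] args) {
        int flag = build(true, true, false, false);
        System.out.println(Integer.toBinaryString(flag));  // prints 11
        int onSlave = clearCommitOffset(flag);
        System.out.println(has(onSlave, COMMIT_OFFSET));   // prints false
        System.out.println(has(onSlave, SUSPEND));         // prints true
    }
}
```

Carrying the switches in one integer keeps the request header small and lets a new switch be added without changing the header layout.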

// org.apache.rocketmq.client.impl.MQClientAPIImpl#pullMessage

public PullResult pullMessage(

final String addr,

final PullMessageRequestHeader requestHeader,

final long timeoutMillis,

final CommunicationMode communicationMode,

final PullCallback pullCallback

) throws RemotingException, MQBrokerException, InterruptedException {

RemotingCommand request = RemotingCommand.createRequestCommand(RequestCode.PULL_MESSAGE, requestHeader);

switch (communicationMode) {

// ONEWAY is not supported for pull requests

case ONEWAY:

assert false;

return null;

case ASYNC:

this.pullMessageAsync(addr, request, timeoutMillis, pullCallback);

return null;

case SYNC:

return this.pullMessageSync(addr, request, timeoutMillis);

default:

assert false;

break;

}

return null;

}

// We only look at the asynchronous path

// org.apache.rocketmq.client.impl.MQClientAPIImpl#pullMessageAsync

private void pullMessageAsync(

final String addr,

final RemotingCommand request,

final long timeoutMillis,

final PullCallback pullCallback

) throws RemotingException, InterruptedException {

this.remotingClient.invokeAsync(addr, request, timeoutMillis, new InvokeCallback() {

@Override

public void operationComplete(ResponseFuture responseFuture) {

RemotingCommand response = responseFuture.getResponseCommand();

if (response != null) {

try {

PullResult pullResult = MQClientAPIImpl.this.processPullResponse(response);

assert pullResult != null;

pullCallback.onSuccess(pullResult);

} catch (Exception e) {

pullCallback.onException(e);

}

} else {

// No response received: report the failure through the pull callback

if (!responseFuture.isSendRequestOK()) {

pullCallback.onException(new MQClientException("send request failed to " + addr + ". Request: " + request, responseFuture.getCause()));

} else if (responseFuture.isTimeout()) {

pullCallback.onException(new MQClientException("wait response from " + addr + " timeout :" + responseFuture.getTimeoutMillis() + "ms" + ". Request: " + request,

responseFuture.getCause()));

} else {

pullCallback.onException(new MQClientException("unknown reason. addr: " + addr + ", timeoutMillis: " + timeoutMillis + ". Request: " + request, responseFuture.getCause()));

}

}

}

});

}
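The asynchronous path above hands a callback to the remoting layer and returns; the callback fires later, when a response arrives or the wait times out. One common way to build this is a table of pending requests keyed by request id. The sketch below shows that idea under stated assumptions: the class `PendingRequestsSketch` and all of its methods are hypothetical, responses are plain strings, and the actual network write is left out.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.TimeoutException;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.BiConsumer;

/** Hypothetical pending-request table; not RocketMQ's remoting code. */
final class PendingRequestsSketch {
    private final AtomicInteger nextId = new AtomicInteger();
    // request id -> callback that receives (response, error)
    private final Map<Integer, BiConsumer<String, Throwable>> pending = new ConcurrentHashMap<>();

    /** Registers the callback and returns at once; a real client would also write the request to the channel. */
    int sendAsync(String request, BiConsumer<String, Throwable> callback) {
        int id = nextId.incrementAndGet();
        pending.put(id, callback);
        return id;
    }

    /** Invoked by the I/O thread when a response carrying this id arrives. */
    boolean onResponse(int id, String response) {
        BiConsumer<String, Throwable> callback = pending.remove(id);
        if (callback == null) {
            return false; // unknown id, or the request was already completed
        }
        callback.accept(response, null);
        return true;
    }

    /** Invoked by a timer when the request has waited too long. */
    boolean onTimeout(int id) {
        BiConsumer<String, Throwable> callback = pending.remove(id);
        if (callback == null) {
            return false;
        }
        callback.accept(null, new TimeoutException("request " + id + " timed out"));
        return true;
    }

    int pendingCount() {
        return pending.size();
    }

    public static void main(String[] args) {
        PendingRequestsSketch client = new PendingRequestsSketch();
        int id = client.sendAsync("PULL_MESSAGE", (resp, err) ->
            System.out.println(err == null ? "got " + resp : "failed: " + err.getMessage()));
        client.onResponse(id, "32 messages"); // prints "got 32 messages"
        client.onTimeout(id);                 // no effect: the request already completed
    }
}
```

Because `remove` hands the callback to exactly one caller, a response and a timeout racing for the same id cannot both fire it.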

// Same as on the producer side

// org.apache.rocketmq.remoting.netty.NettyRemotingClient#invokeAsync

@Override

public void invokeAsync(String addr, RemotingCommand request, long timeoutMillis, InvokeCallback invokeCallback)

throws InterruptedException, RemotingConnectException, RemotingTooMuchRequestException, RemotingTimeoutException,

RemotingSendRequestException {

long beginStartTime = System.currentTimeMillis();

final Channel channel = this.getAndCreateChannel(addr);

if (channel != null && channel.isActive()) {

try {

doBeforeRpcHooks(addr, request);

long costTime = System.currentTimeMillis() - beginStartTime;

if (timeoutMillis < costTime) {

throw new RemotingTooMuchRequestException("invokeAsync call timeout");

}

// Same as the producer: returns right after channel.writeAndFlush()

this.invokeAsyncImpl(channel, request, timeoutMillis - costTime, invokeCallback);

} catch (RemotingSendRequestException e) {

log.warn("invokeAsync: send request exception, so close the channel[{}]", addr);

this.closeChannel(addr, channel);

throw e;

}

} else {

this.closeChannel(addr, channel);

throw new RemotingConnectException(addr);

}

}

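Which brokers' data each consumer node ends up pulling is decided by somewhat more involved logic, driven mainly by the configured allocation strategy. Interested readers can follow the rebalance code path.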
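To make the idea of an allocation strategy concrete, here is a minimal sketch of an even split of a topic's queues across the consumers of one group. It is not RocketMQ's implementation: the class `AverageAllocationSketch`, the method `allocate`, and the use of plain integer queue ids are assumptions for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical even-split allocation; names and types are illustrative only. */
final class AverageAllocationSketch {
    /**
     * Returns the queue ids assigned to consumerId. Both lists must be in the same
     * order on every node, so that each consumer computes the same split on its own.
     */
    static List<Integer> allocate(String consumerId, List<Integer> queueIds, List<String> consumerIds) {
        int index = consumerIds.indexOf(consumerId);
        if (index < 0 || queueIds.isEmpty()) {
            return new ArrayList<>();
        }
        int base = queueIds.size() / consumerIds.size();
        int extra = queueIds.size() % consumerIds.size();
        // The first `extra` consumers each take one additional queue.
        int start = index * base + Math.min(index, extra);
        int count = base + (index < extra ? 1 : 0);
        return new ArrayList<>(queueIds.subList(start, start + count));
    }

    public static void main(String[] args) {
        List<Integer> queues = Arrays.asList(0, 1, 2, 3, 4, 5, 6, 7);
        List<String> consumers = Arrays.asList("c0", "c1", "c2");
        System.out.println(allocate("c0", queues, consumers)); // prints [0, 1, 2]
        System.out.println(allocate("c1", queues, consumers)); // prints [3, 4, 5]
        System.out.println(allocate("c2", queues, consumers)); // prints [6, 7]
    }
}
```

No coordinator is needed in this scheme: as long as every node sees the same sorted queue list and consumer list, the independently computed shares do not overlap and together cover every queue.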

// Message consumption handling

// org.apache.rocketmq.client.impl.consumer.RebalanceService#run

@Override

public void run() {

log.info(this.getServiceName() + " service started");

while (!this.isStopped()) {

this.waitForRunning(waitInterval);

this.mqClientFactory.doRebalance();

}

log.info(this.getServiceName() + " service end");

}

// org.apache.rocketmq.client.impl.factory.MQClientInstance#doRebalance

public void doRebalance() {

for (Map.Entry<String, MQConsumerInner> entry : this.consumerTable.entrySet()) {

MQConsumerInner impl = entry.getValue();

if (impl != null) {

try {

impl.doRebalance();

} catch (Throwable e) {

log.error("doRebalance exception", e);

}

}

}

}

// org.apache.rocketmq.client.impl.consumer.DefaultMQPushConsumerImpl#doRebalance

@Override

public void doRebalance() {

if (!this.pause) {

this.rebalanceImpl.doRebalance(this.isConsumeOrderly());

}

}

// org.apache.rocketmq.client.impl.consumer.RebalanceImpl#doRebalance

public void doRebalance(final boolean isOrder) {

Map<String, SubscriptionData> subTable = this.getSubscriptionInner();

if (subTable != null) {

for (final Map.Entry<String, SubscriptionData> entry : subTable.entrySet()) {

final String topic = entry.getKey();

try {

this.rebalanceByTopic(topic, isOrder);

} catch (Throwable e) {

if (!topic.startsWith(MixAll.RETRY_GROUP_TOPIC_PREFIX)) {

log.warn("rebalanceByTopic Exception", e);

}

}

}

}

this.truncateMessageQueueNotMyTopic();

}

// org.apache.rocketmq.client.impl.consumer.RebalanceImpl#rebalanceByTopic

private void rebalanceByTopic(final String topic, final boolean isOrder) {

switch (messageModel) {

// Broadcasting: every node consumes all messages

case BROADCASTING: {

Set<MessageQueue> mqSet = this.topicSubscribeInfoTable.get(topic);

if (mqSet != null) {

boolean changed = this.updateProcessQueueTableInRebalance(topic, mqSet, isOrder);

if (changed) {

this.messageQueueChanged(topic, mqSet, mqSet);

log.info("messageQueueChanged {} {} {} {}",

consumerGroup,

topic,

mqSet,

mqSet);

}

} else {

log.warn("doRebalance, {}, but the topic[{}] not exist.", consumerGroup, topic);

}

break;

}

// Clustering: within one consumer group, each message is consumed by only one node

case CLUSTERING: {

Set<MessageQueue> mqSet = this.topicSubscribeInfoTable.get(topic);

List<String> cidAll = this.mQClientFactory.findConsumerIdList(topic, consumerGroup);

if (null == mqSet) {

if (!topic.startsWith(MixAll.RETRY_GROUP_TOPIC_PREFIX)) {