Spark记录-Spark作业调试

在本地IDE里直接运行spark程序操作远程集群

一般运行spark作业的方式有两种:

本机调试,通过设置master为local模式运行spark作业,这种方式一般用于调试,不用连接远程集群。

集群运行。一般本机调试通过后会将作业打成jar包通过spark-submit提交运行。生产环境一般使用这种方式。

操作方法

1.设置master

两种方式:

- 在程序中设置

SparkConf conf = new SparkConf()

.setAppName("helloworld")

.setMaster("spark://192.168.66.66:7077");- 在run configuration中设置

VM options中添加:

-Dspark.master="spark://192.168.66.66:7077"2.设置HDFS

在程序中使用HDFS路径,会出现文件系统不匹配hdfs,可以将集群中的hadoop配置中的core-site.xml和hdfs-site.xml拷贝到项目src/main/resources下

3.发送jar包

如果程序中使用了自定义的算子和依赖的jar包,需要将本项目jar包和依赖的jar包发送到集群中SPARK_HOME/jars目录下,可以用maven-assembly打成带依赖的jar包,spark的jars相当于mvn库。

注意集群中每个节点的jars目录下都要放自己的jar包。

可能遇到的问题

如果遇到了节点间通信问题,可能是jar包没有在所有节点放置好。

incompatible loaded等问题,是依赖的spark版本不匹配,修改dependency。

至此,就可以直接在IDE中运行了

实战操作

1.安装jdk1.8、idea2017,maven3、idea2017安装scala插件

2.新建maven项目-scala

3.配置pom.xml,下载依赖包

<?xml version="1.0" encoding="UTF-8"?>

<!--

Licensed to the Apache Software Foundation (ASF) under one

or more contributor license agreements. See the NOTICE file

distributed with this work for additional information

regarding copyright ownership. The ASF licenses this file

to you under the Apache License, Version 2.0 (the

"License"); you may not use this file except in compliance

with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing,

software distributed under the License is distributed on an

"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

KIND, either express or implied. See the License for the

specific language governing permissions and limitations

under the License.

-->

<!-- $Id: pom.xml 642118 2008-03-28 08:04:16Z reinhard $ -->

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd"> <modelVersion>4.0.0</modelVersion>

<packaging>war</packaging> <name>scala</name>

<groupId>scala</groupId>

<artifactId>scala</artifactId>

<version>1.0-SNAPSHOT</version> <build>

<plugins>

<plugin>

<groupId>org.mortbay.jetty</groupId>

<artifactId>maven-jetty-plugin</artifactId>

<version>6.1.7</version>

<configuration>

<connectors>

<connector implementation="org.mortbay.jetty.nio.SelectChannelConnector">

<port>8888</port>

<maxIdleTime>30000</maxIdleTime>

</connector>

</connectors>

<webAppSourceDirectory>${project.build.directory}/${pom.artifactId}-${pom.version}</webAppSourceDirectory>

<contextPath>/</contextPath>

</configuration>

</plugin>

</plugins>

</build>

<properties>

<scala.version>2.10.5</scala.version>

<hadoop.version>2.7.3</hadoop.version>

</properties> <repositories>

<repository>

<id>scala-tools.org</id>

<name>Scala-Tools Maven2 Repository</name>

<url>http://scala-tools.org/repo-releases</url>

</repository>

</repositories> <dependencies>

<!--dependency>

<groupId>scala</groupId>

<artifactId>[the artifact id of the block to be mounted]</artifactId>

<version>1.0-SNAPSHOT</version>

</dependency-->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.10</artifactId>

<version>1.6.3</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.10</artifactId>

<version>1.6.3</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming_2.10</artifactId>

<version>1.6.3</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>${hadoop.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>${hadoop.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>${hadoop.version}</version>

</dependency>

</dependencies> </project>

4.打开File-Project Structure-Modules,Sources-scala项目src-main新建一个scala目录,设置为Sources

5.打开File-Project Structure-Libraries,添加scala sdk(2.10.5)

6.从集群里复制一个hadoop程序到D盘,再到网上下载https://github.com/srccodes/hadoop-common-2.2.0-bin,解压合并bin文件夹即可,设置用户环境变量HADOOP_HOME。

7.返回主界面,在src-main-scala下新建一个object:sparkPi:

import scala.math.random

import org.apache.spark._ object sparkPi {

def main(args: Array[String]) {

System.setProperty("hadoop.home.dir", "D:\\hadoop")

val conf = new SparkConf().setAppName("Spark Pi").setMaster("spark://192.168.66.66:7077")

.set("spark.executor.memory.","1g")

.set("spark.serializer","org.apache.spark.serializer.KryoSerializer")

.setJars(Seq("D:\\workspace\\scala\\out\\scala.jar"))

val spark = new SparkContext(conf)

val slices = if (args.length > 0) args(0).toInt else 2

println("Time:" + spark.startTime)

val n = math.min(1000L * slices, Int.MaxValue).toInt // avoid overflow

val count = spark.parallelize(1 until n, slices).map { i =>

val x = random * 2 - 1

val y = random * 2 - 1

if (x*x + y*y < 1) 1 else 0

}.reduce(_ + _)

println("Pi is roughly " + 4.0 * count / n)

spark.stop()

}

}

8.打开File-Project Structure-Artifacts,添加JAR-from modules with dependencies,选择好主类-sparkPi,设置好Output,去除不必要的包;

9.Build-Build Artifacts-scala.jar

10.Run-Run sparkPi

"C:\Program Files\Java\jdk1.8.0_121\bin\java" "-javaagent:D:\Program Files (x86)\JetBrains\IntelliJ IDEA 173.3302.5\lib\idea_rt.jar=53908:D:\Program Files (x86)\JetBrains\IntelliJ IDEA 173.3302.5\bin" -Dfile.encoding=UTF-8 -classpath "C:\Program Files\Java\jdk1.8.0_121\jre\lib\charsets.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\deploy.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\ext\access-bridge-64.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\ext\cldrdata.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\ext\dnsns.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\ext\jaccess.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\ext\jfxrt.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\ext\localedata.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\ext\nashorn.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\ext\sunec.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\ext\sunjce_provider.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\ext\sunmscapi.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\ext\sunpkcs11.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\ext\zipfs.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\javaws.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\jce.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\jfr.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\jfxswt.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\jsse.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\management-agent.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\plugin.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\resources.jar;C:\Program Files\Java\jdk1.8.0_121\jre\lib\rt.jar;D:\workspace\scala\target\classes;C:\Users\xinfang\.m2\repository\org\scala-lang\scala-library\2.10.5\scala-library-2.10.5.jar;C:\Users\xinfang\.m2\repository\org\scala-lang\scala-reflect\2.10.5\scala-reflect-2.10.5.jar;C:\Users\xinfang\.m2\repository\org\apache\spark\spark-core_2.10\1.6.3\spark-core_2.10-1.6.3.jar;C:\Users\xinfang\.m2\repository\org\apache\avro\avro-mapred\1.7.7\avro-mapred-1.7.7-hadoop2.jar;C:\Users\xinfang\.m2\repository\org\apache\avro\avro-ipc\1.7.7\avro-ipc-1.7.7.jar;C:\Users\xinfang\.m2\repository\org\apache\avro\avro-ipc\1.7.7\avro-ipc-1.7.7-tests.jar;C:\Users\xinfang\.m2\repository\com\twitter\chill_2.10\0.5.0\chill_2.10-0.5.0.jar;C:\Users\xinfang\.m2\repository\com\esotericsoftware\kryo\kryo\2.21\kryo-2.21.jar;C:\Users\xinfang\.m2\repository\com\esotericsoftware\reflectasm\reflectasm\1.07\reflectasm-1.07-shaded.jar;C:\Users\xinfang\.m2\repository\com\esotericsoftware\minlog\minlog\1.2\minlog-1.2.jar;C:\Users\xinfang\.m2\repository\org\objenesis\objenesis\1.2\objenesis-1.2.jar;C:\Users\xinfang\.m2\repository\com\twitter\chill-java\0.5.0\chill-java-0.5.0.jar;C:\Users\xinfang\.m2\repository\org\apache\xbean\xbean-asm5-shaded\4.4\xbean-asm5-shaded-4.4.jar;C:\Users\xinfang\.m2\repository\org\apache\spark\spark-launcher_2.10\1.6.3\spark-launcher_2.10-1.6.3.jar;C:\Users\xinfang\.m2\repository\org\apache\spark\spark-network-common_2.10\1.6.3\spark-network-common_2.10-1.6.3.jar;C:\Users\xinfang\.m2\repository\org\apache\spark\spark-network-shuffle_2.10\1.6.3\spark-network-shuffle_2.10-1.6.3.jar;C:\Users\xinfang\.m2\repository\com\fasterxml\jackson\core\jackson-annotations\2.4.4\jackson-annotations-2.4.4.jar;C:\Users\xinfang\.m2\repository\org\apache\spark\spark-unsafe_2.10\1.6.3\spark-unsafe_2.10-1.6.3.jar;C:\Users\xinfang\.m2\repository\net\java\dev\jets3t\jets3t\0.7.1\jets3t-0.7.1.jar;C:\Users\xinfang\.m2\repository\org\apache\curator\curator-recipes\2.4.0\curator-recipes-2.4.0.jar;C:\Users\xinfang\.m2\repository\org\apache\curator\curator-framework\2.4.0\curator-framework-2.4.0.jar;C:\Users\xinfang\.m2\repository\org\eclipse\jetty\orbit\javax.servlet\3.0.0.v201112011016\javax.servlet-3.0.0.v201112011016.jar;C:\Users\xinfang\.m2\repository\org\apache\commons\commons-lang3\3.3.2\commons-lang3-3.3.2.jar;C:\Users\xinfang\.m2\repository\org\apache\commons\commons-math3\3.4.1\commons-math3-3.4.1.jar;C:\Users\xinfang\.m2\repository\com\google\code\findbugs\jsr305\1.3.9\jsr305-1.3.9.jar;C:\Users\xinfang\.m2\repository\org\slf4j\slf4j-api\1.7.10\slf4j-api-1.7.10.jar;C:\Users\xinfang\.m2\repository\org\slf4j\jul-to-slf4j\1.7.10\jul-to-slf4j-1.7.10.jar;C:\Users\xinfang\.m2\repository\org\slf4j\jcl-over-slf4j\1.7.10\jcl-over-slf4j-1.7.10.jar;C:\Users\xinfang\.m2\repository\log4j\log4j\1.2.17\log4j-1.2.17.jar;C:\Users\xinfang\.m2\repository\org\slf4j\slf4j-log4j12\1.7.10\slf4j-log4j12-1.7.10.jar;C:\Users\xinfang\.m2\repository\com\ning\compress-lzf\1.0.3\compress-lzf-1.0.3.jar;C:\Users\xinfang\.m2\repository\org\xerial\snappy\snappy-java\1.1.2.6\snappy-java-1.1.2.6.jar;C:\Users\xinfang\.m2\repository\net\jpountz\lz4\lz4\1.3.0\lz4-1.3.0.jar;C:\Users\xinfang\.m2\repository\org\roaringbitmap\RoaringBitmap\0.5.11\RoaringBitmap-0.5.11.jar;C:\Users\xinfang\.m2\repository\commons-net\commons-net\2.2\commons-net-2.2.jar;C:\Users\xinfang\.m2\repository\com\typesafe\akka\akka-remote_2.10\2.3.11\akka-remote_2.10-2.3.11.jar;C:\Users\xinfang\.m2\repository\com\typesafe\akka\akka-actor_2.10\2.3.11\akka-actor_2.10-2.3.11.jar;C:\Users\xinfang\.m2\repository\com\typesafe\config\1.2.1\config-1.2.1.jar;C:\Users\xinfang\.m2\repository\org\uncommons\maths\uncommons-maths\1.2.2a\uncommons-maths-1.2.2a.jar;C:\Users\xinfang\.m2\repository\com\typesafe\akka\akka-slf4j_2.10\2.3.11\akka-slf4j_2.10-2.3.11.jar;C:\Users\xinfang\.m2\repository\org\json4s\json4s-jackson_2.10\3.2.10\json4s-jackson_2.10-3.2.10.jar;C:\Users\xinfang\.m2\repository\org\json4s\json4s-core_2.10\3.2.10\json4s-core_2.10-3.2.10.jar;C:\Users\xinfang\.m2\repository\org\json4s\json4s-ast_2.10\3.2.10\json4s-ast_2.10-3.2.10.jar;C:\Users\xinfang\.m2\repository\org\scala-lang\scalap\2.10.0\scalap-2.10.0.jar;C:\Users\xinfang\.m2\repository\org\scala-lang\scala-compiler\2.10.0\scala-compiler-2.10.0.jar;C:\Users\xinfang\.m2\repository\com\sun\jersey\jersey-server\1.9\jersey-server-1.9.jar;C:\Users\xinfang\.m2\repository\asm\asm\3.1\asm-3.1.jar;C:\Users\xinfang\.m2\repository\com\sun\jersey\jersey-core\1.9\jersey-core-1.9.jar;C:\Users\xinfang\.m2\repository\org\apache\mesos\mesos\0.21.1\mesos-0.21.1-shaded-protobuf.jar;C:\Users\xinfang\.m2\repository\io\netty\netty-all\4.0.29.Final\netty-all-4.0.29.Final.jar;C:\Users\xinfang\.m2\repository\com\clearspring\analytics\stream\2.7.0\stream-2.7.0.jar;C:\Users\xinfang\.m2\repository\io\dropwizard\metrics\metrics-core\3.1.2\metrics-core-3.1.2.jar;C:\Users\xinfang\.m2\repository\io\dropwizard\metrics\metrics-jvm\3.1.2\metrics-jvm-3.1.2.jar;C:\Users\xinfang\.m2\repository\io\dropwizard\metrics\metrics-json\3.1.2\metrics-json-3.1.2.jar;C:\Users\xinfang\.m2\repository\io\dropwizard\metrics\metrics-graphite\3.1.2\metrics-graphite-3.1.2.jar;C:\Users\xinfang\.m2\repository\com\fasterxml\jackson\core\jackson-databind\2.4.4\jackson-databind-2.4.4.jar;C:\Users\xinfang\.m2\repository\com\fasterxml\jackson\core\jackson-core\2.4.4\jackson-core-2.4.4.jar;C:\Users\xinfang\.m2\repository\com\fasterxml\jackson\module\jackson-module-scala_2.10\2.4.4\jackson-module-scala_2.10-2.4.4.jar;C:\Users\xinfang\.m2\repository\org\scala-lang\scala-reflect\2.10.4\scala-reflect-2.10.4.jar;C:\Users\xinfang\.m2\repository\com\thoughtworks\paranamer\paranamer\2.6\paranamer-2.6.jar;C:\Users\xinfang\.m2\repository\org\apache\ivy\ivy\2.4.0\ivy-2.4.0.jar;C:\Users\xinfang\.m2\repository\oro\oro\2.0.8\oro-2.0.8.jar;C:\Users\xinfang\.m2\repository\org\tachyonproject\tachyon-client\0.8.2\tachyon-client-0.8.2.jar;C:\Users\xinfang\.m2\repository\org\tachyonproject\tachyon-underfs-hdfs\0.8.2\tachyon-underfs-hdfs-0.8.2.jar;C:\Users\xinfang\.m2\repository\org\tachyonproject\tachyon-underfs-s3\0.8.2\tachyon-underfs-s3-0.8.2.jar;C:\Users\xinfang\.m2\repository\org\tachyonproject\tachyon-underfs-local\0.8.2\tachyon-underfs-local-0.8.2.jar;C:\Users\xinfang\.m2\repository\net\razorvine\pyrolite\4.9\pyrolite-4.9.jar;C:\Users\xinfang\.m2\repository\net\sf\py4j\py4j\0.9\py4j-0.9.jar;C:\Users\xinfang\.m2\repository\org\spark-project\spark\unused\1.0.0\unused-1.0.0.jar;C:\Users\xinfang\.m2\repository\org\apache\spark\spark-sql_2.10\1.6.3\spark-sql_2.10-1.6.3.jar;C:\Users\xinfang\.m2\repository\org\apache\spark\spark-catalyst_2.10\1.6.3\spark-catalyst_2.10-1.6.3.jar;C:\Users\xinfang\.m2\repository\org\codehaus\janino\janino\2.7.8\janino-2.7.8.jar;C:\Users\xinfang\.m2\repository\org\codehaus\janino\commons-compiler\2.7.8\commons-compiler-2.7.8.jar;C:\Users\xinfang\.m2\repository\org\apache\parquet\parquet-column\1.7.0\parquet-column-1.7.0.jar;C:\Users\xinfang\.m2\repository\org\apache\parquet\parquet-common\1.7.0\parquet-common-1.7.0.jar;C:\Users\xinfang\.m2\repository\org\apache\parquet\parquet-encoding\1.7.0\parquet-encoding-1.7.0.jar;C:\Users\xinfang\.m2\repository\org\apache\parquet\parquet-generator\1.7.0\parquet-generator-1.7.0.jar;C:\Users\xinfang\.m2\repository\org\apache\parquet\parquet-hadoop\1.7.0\parquet-hadoop-1.7.0.jar;C:\Users\xinfang\.m2\repository\org\apache\parquet\parquet-format\2.3.0-incubating\parquet-format-2.3.0-incubating.jar;C:\Users\xinfang\.m2\repository\org\apache\parquet\parquet-jackson\1.7.0\parquet-jackson-1.7.0.jar;C:\Users\xinfang\.m2\repository\org\apache\spark\spark-streaming_2.10\1.6.3\spark-streaming_2.10-1.6.3.jar;C:\Users\xinfang\.m2\repository\org\apache\hadoop\hadoop-client\2.7.3\hadoop-client-2.7.3.jar;C:\Users\xinfang\.m2\repository\org\apache\hadoop\hadoop-mapreduce-client-app\2.7.3\hadoop-mapreduce-client-app-2.7.3.jar;C:\Users\xinfang\.m2\repository\org\apache\hadoop\hadoop-mapreduce-client-common\2.7.3\hadoop-mapreduce-client-common-2.7.3.jar;C:\Users\xinfang\.m2\repository\org\apache\hadoop\hadoop-yarn-client\2.7.3\hadoop-yarn-client-2.7.3.jar;C:\Users\xinfang\.m2\repository\org\apache\hadoop\hadoop-yarn-server-common\2.7.3\hadoop-yarn-server-common-2.7.3.jar;C:\Users\xinfang\.m2\repository\org\apache\hadoop\hadoop-mapreduce-client-shuffle\2.7.3\hadoop-mapreduce-client-shuffle-2.7.3.jar;C:\Users\xinfang\.m2\repository\org\apache\hadoop\hadoop-yarn-api\2.7.3\hadoop-yarn-api-2.7.3.jar;C:\Users\xinfang\.m2\repository\org\apache\hadoop\hadoop-mapreduce-client-core\2.7.3\hadoop-mapreduce-client-core-2.7.3.jar;C:\Users\xinfang\.m2\repository\org\apache\hadoop\hadoop-yarn-common\2.7.3\hadoop-yarn-common-2.7.3.jar;C:\Users\xinfang\.m2\repository\javax\xml\bind\jaxb-api\2.2.2\jaxb-api-2.2.2.jar;C:\Users\xinfang\.m2\repository\javax\xml\stream\stax-api\1.0-2\stax-api-1.0-2.jar;C:\Users\xinfang\.m2\repository\javax\activation\activation\1.1\activation-1.1.jar;C:\Users\xinfang\.m2\repository\com\sun\jersey\jersey-client\1.9\jersey-client-1.9.jar;C:\Users\xinfang\.m2\repository\org\apache\hadoop\hadoop-mapreduce-client-jobclient\2.7.3\hadoop-mapreduce-client-jobclient-2.7.3.jar;C:\Users\xinfang\.m2\repository\org\apache\hadoop\hadoop-annotations\2.7.3\hadoop-annotations-2.7.3.jar;C:\Users\xinfang\.m2\repository\org\apache\hadoop\hadoop-common\2.7.3\hadoop-common-2.7.3.jar;C:\Users\xinfang\.m2\repository\com\google\guava\guava\11.0.2\guava-11.0.2.jar;C:\Users\xinfang\.m2\repository\commons-cli\commons-cli\1.2\commons-cli-1.2.jar;C:\Users\xinfang\.m2\repository\xmlenc\xmlenc\0.52\xmlenc-0.52.jar;C:\Users\xinfang\.m2\repository\commons-httpclient\commons-httpclient\3.1\commons-httpclient-3.1.jar;C:\Users\xinfang\.m2\repository\commons-codec\commons-codec\1.4\commons-codec-1.4.jar;C:\Users\xinfang\.m2\repository\commons-io\commons-io\2.4\commons-io-2.4.jar;C:\Users\xinfang\.m2\repository\commons-collections\commons-collections\3.2.2\commons-collections-3.2.2.jar;C:\Users\xinfang\.m2\repository\javax\servlet\servlet-api\2.5\servlet-api-2.5.jar;C:\Users\xinfang\.m2\repository\org\mortbay\jetty\jetty\6.1.26\jetty-6.1.26.jar;C:\Users\xinfang\.m2\repository\org\mortbay\jetty\jetty-util\6.1.26\jetty-util-6.1.26.jar;C:\Users\xinfang\.m2\repository\javax\servlet\jsp\jsp-api\2.1\jsp-api-2.1.jar;C:\Users\xinfang\.m2\repository\com\sun\jersey\jersey-json\1.9\jersey-json-1.9.jar;C:\Users\xinfang\.m2\repository\org\codehaus\jettison\jettison\1.1\jettison-1.1.jar;C:\Users\xinfang\.m2\repository\com\sun\xml\bind\jaxb-impl\2.2.3-1\jaxb-impl-2.2.3-1.jar;C:\Users\xinfang\.m2\repository\org\codehaus\jackson\jackson-jaxrs\1.8.3\jackson-jaxrs-1.8.3.jar;C:\Users\xinfang\.m2\repository\org\codehaus\jackson\jackson-xc\1.8.3\jackson-xc-1.8.3.jar;C:\Users\xinfang\.m2\repository\commons-logging\commons-logging\1.1.3\commons-logging-1.1.3.jar;C:\Users\xinfang\.m2\repository\commons-lang\commons-lang\2.6\commons-lang-2.6.jar;C:\Users\xinfang\.m2\repository\commons-configuration\commons-configuration\1.6\commons-configuration-1.6.jar;C:\Users\xinfang\.m2\repository\commons-digester\commons-digester\1.8\commons-digester-1.8.jar;C:\Users\xinfang\.m2\repository\commons-beanutils\commons-beanutils\1.7.0\commons-beanutils-1.7.0.jar;C:\Users\xinfang\.m2\repository\commons-beanutils\commons-beanutils-core\1.8.0\commons-beanutils-core-1.8.0.jar;C:\Users\xinfang\.m2\repository\org\codehaus\jackson\jackson-core-asl\1.9.13\jackson-core-asl-1.9.13.jar;C:\Users\xinfang\.m2\repository\org\codehaus\jackson\jackson-mapper-asl\1.9.13\jackson-mapper-asl-1.9.13.jar;C:\Users\xinfang\.m2\repository\org\apache\avro\avro\1.7.4\avro-1.7.4.jar;C:\Users\xinfang\.m2\repository\com\google\protobuf\protobuf-java\2.5.0\protobuf-java-2.5.0.jar;C:\Users\xinfang\.m2\repository\com\google\code\gson\gson\2.2.4\gson-2.2.4.jar;C:\Users\xinfang\.m2\repository\org\apache\hadoop\hadoop-auth\2.7.3\hadoop-auth-2.7.3.jar;C:\Users\xinfang\.m2\repository\org\apache\httpcomponents\httpclient\4.2.5\httpclient-4.2.5.jar;C:\Users\xinfang\.m2\repository\org\apache\httpcomponents\httpcore\4.2.4\httpcore-4.2.4.jar;C:\Users\xinfang\.m2\repository\org\apache\directory\server\apacheds-kerberos-codec\2.0.0-M15\apacheds-kerberos-codec-2.0.0-M15.jar;C:\Users\xinfang\.m2\repository\org\apache\directory\server\apacheds-i18n\2.0.0-M15\apacheds-i18n-2.0.0-M15.jar;C:\Users\xinfang\.m2\repository\org\apache\directory\api\api-asn1-api\1.0.0-M20\api-asn1-api-1.0.0-M20.jar;C:\Users\xinfang\.m2\repository\org\apache\directory\api\api-util\1.0.0-M20\api-util-1.0.0-M20.jar;C:\Users\xinfang\.m2\repository\com\jcraft\jsch\0.1.42\jsch-0.1.42.jar;C:\Users\xinfang\.m2\repository\org\apache\curator\curator-client\2.7.1\curator-client-2.7.1.jar;C:\Users\xinfang\.m2\repository\org\apache\htrace\htrace-core\3.1.0-incubating\htrace-core-3.1.0-incubating.jar;C:\Users\xinfang\.m2\repository\org\apache\zookeeper\zookeeper\3.4.6\zookeeper-3.4.6.jar;C:\Users\xinfang\.m2\repository\org\apache\commons\commons-compress\1.4.1\commons-compress-1.4.1.jar;C:\Users\xinfang\.m2\repository\org\tukaani\xz\1.0\xz-1.0.jar;C:\Users\xinfang\.m2\repository\org\apache\hadoop\hadoop-hdfs\2.7.3\hadoop-hdfs-2.7.3.jar;C:\Users\xinfang\.m2\repository\commons-daemon\commons-daemon\1.0.13\commons-daemon-1.0.13.jar;C:\Users\xinfang\.m2\repository\io\netty\netty\3.6.2.Final\netty-3.6.2.Final.jar;C:\Users\xinfang\.m2\repository\xerces\xercesImpl\2.9.1\xercesImpl-2.9.1.jar;C:\Users\xinfang\.m2\repository\xml-apis\xml-apis\1.3.04\xml-apis-1.3.04.jar;C:\Users\xinfang\.m2\repository\org\fusesource\leveldbjni\leveldbjni-all\1.8\leveldbjni-all-1.8.jar" sparkPi

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

17/11/07 15:34:22 INFO SparkContext: Running Spark version 1.6.3

17/11/07 15:34:24 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/11/07 15:34:24 INFO SecurityManager: Changing view acls to: xinfang

17/11/07 15:34:24 INFO SecurityManager: Changing modify acls to: xinfang

17/11/07 15:34:24 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(xinfang); users with modify permissions: Set(xinfang)

17/11/07 15:34:26 INFO Utils: Successfully started service 'sparkDriver' on port 53931.

17/11/07 15:34:26 INFO Slf4jLogger: Slf4jLogger started

17/11/07 15:34:26 INFO Remoting: Starting remoting

17/11/07 15:34:27 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriverActorSystem@172.20.107.151:53944]

17/11/07 15:34:27 INFO Utils: Successfully started service 'sparkDriverActorSystem' on port 53944.

17/11/07 15:34:27 INFO SparkEnv: Registering MapOutputTracker

17/11/07 15:34:27 INFO SparkEnv: Registering BlockManagerMaster

17/11/07 15:34:27 INFO DiskBlockManager: Created local directory at C:\Users\xinfang\AppData\Local\Temp\blockmgr-d4ba2426-7f9b-47f1-800e-2aad1aa75f70

17/11/07 15:34:27 INFO MemoryStore: MemoryStore started with capacity 1122.0 MB

17/11/07 15:34:27 INFO SparkEnv: Registering OutputCommitCoordinator

17/11/07 15:34:27 INFO Utils: Successfully started service 'SparkUI' on port 4040.

17/11/07 15:34:27 INFO SparkUI: Started SparkUI at http://172.20.107.151:4040

17/11/07 15:34:27 INFO HttpFileServer: HTTP File server directory is C:\Users\xinfang\AppData\Local\Temp\spark-150f8c4e-c5df-4254-a9f0-a8158b4caff0\httpd-b0aaa30f-cd92-4fbd-b064-6284fa604359

17/11/07 15:34:27 INFO HttpServer: Starting HTTP Server

17/11/07 15:34:28 INFO Utils: Successfully started service 'HTTP file server' on port 53947.

17/11/07 15:34:28 INFO SparkContext: Added JAR D:\workspace\scala\out\scala.jar at http://172.20.107.151:53947/jars/scala.jar with timestamp 1510040068032

17/11/07 15:34:28 INFO AppClient$ClientEndpoint: Connecting to master spark://192.168.66.66:7077...

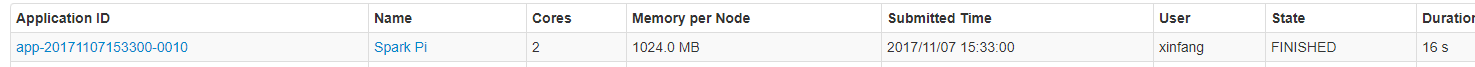

17/11/07 15:34:30 INFO SparkDeploySchedulerBackend: Connected to Spark cluster with app ID app-20171107153300-0010

17/11/07 15:34:30 INFO AppClient$ClientEndpoint: Executor added: app-20171107153300-0010/0 on worker-20171106165832-192.168.66.66-7078 (192.168.66.66:7078) with 2 cores

17/11/07 15:34:30 INFO SparkDeploySchedulerBackend: Granted executor ID app-20171107153300-0010/0 on hostPort 192.168.66.66:7078 with 2 cores, 1024.0 MB RAM

17/11/07 15:34:30 INFO AppClient$ClientEndpoint: Executor updated: app-20171107153300-0010/0 is now RUNNING

17/11/07 15:34:30 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 53967.

17/11/07 15:34:30 INFO NettyBlockTransferService: Server created on 53967

17/11/07 15:34:30 INFO BlockManagerMaster: Trying to register BlockManager

17/11/07 15:34:30 INFO BlockManagerMasterEndpoint: Registering block manager 172.20.107.151:53967 with 1122.0 MB RAM, BlockManagerId(driver, 172.20.107.151, 53967)

17/11/07 15:34:30 INFO BlockManagerMaster: Registered BlockManager

17/11/07 15:34:31 INFO SparkDeploySchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

Time:1510040062460

17/11/07 15:34:31 INFO SparkContext: Starting job: reduce at sparkPi.scala:17

17/11/07 15:34:31 INFO DAGScheduler: Got job 0 (reduce at sparkPi.scala:17) with 2 output partitions

17/11/07 15:34:31 INFO DAGScheduler: Final stage: ResultStage 0 (reduce at sparkPi.scala:17)

17/11/07 15:34:31 INFO DAGScheduler: Parents of final stage: List()

17/11/07 15:34:31 INFO DAGScheduler: Missing parents: List()

17/11/07 15:34:32 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at sparkPi.scala:13), which has no missing parents

17/11/07 15:34:32 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 1848.0 B, free 1122.0 MB)

17/11/07 15:34:32 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1206.0 B, free 1122.0 MB)

17/11/07 15:34:32 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.20.107.151:53967 (size: 1206.0 B, free: 1122.0 MB)

17/11/07 15:34:32 INFO SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1006

17/11/07 15:34:32 INFO DAGScheduler: Submitting 2 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at sparkPi.scala:13)

17/11/07 15:34:32 INFO TaskSchedulerImpl: Adding task set 0.0 with 2 tasks

17/11/07 15:34:36 INFO SparkDeploySchedulerBackend: Registered executor NettyRpcEndpointRef(null) (xinfang:53977) with ID 0

17/11/07 15:34:36 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, xinfang, partition 0,PROCESS_LOCAL, 2130 bytes)

17/11/07 15:34:36 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, xinfang, partition 1,PROCESS_LOCAL, 2130 bytes)

17/11/07 15:34:37 INFO BlockManagerMasterEndpoint: Registering block manager xinfang:64456 with 511.1 MB RAM, BlockManagerId(0, xinfang, 64456)

17/11/07 15:34:42 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on xinfang:64456 (size: 1206.0 B, free: 511.1 MB)

17/11/07 15:34:44 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 7589 ms on xinfang (1/2)

17/11/07 15:34:44 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 7654 ms on xinfang (2/2)

17/11/07 15:34:44 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

17/11/07 15:34:44 INFO DAGScheduler: ResultStage 0 (reduce at sparkPi.scala:17) finished in 11.849 s

17/11/07 15:34:44 INFO DAGScheduler: Job 0 finished: reduce at sparkPi.scala:17, took 12.553598 s

Pi is roughly 3.15

17/11/07 15:34:44 INFO SparkUI: Stopped Spark web UI at http://172.20.107.151:4040

17/11/07 15:34:44 INFO SparkDeploySchedulerBackend: Shutting down all executors

17/11/07 15:34:44 INFO SparkDeploySchedulerBackend: Asking each executor to shut down

17/11/07 15:34:44 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

17/11/07 15:34:44 INFO MemoryStore: MemoryStore cleared

17/11/07 15:34:44 INFO BlockManager: BlockManager stopped

17/11/07 15:34:44 INFO BlockManagerMaster: BlockManagerMaster stopped

17/11/07 15:34:44 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

17/11/07 15:34:44 INFO SparkContext: Successfully stopped SparkContext

17/11/07 15:34:44 INFO RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

17/11/07 15:34:44 INFO RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

17/11/07 15:34:44 INFO ShutdownHookManager: Shutdown hook called

17/11/07 15:34:44 INFO ShutdownHookManager: Deleting directory C:\Users\xinfang\AppData\Local\Temp\spark-150f8c4e-c5df-4254-a9f0-a8158b4caff0\httpd-b0aaa30f-cd92-4fbd-b064-6284fa604359

17/11/07 15:34:44 INFO ShutdownHookManager: Deleting directory C:\Users\xinfang\AppData\Local\Temp\spark-150f8c4e-c5df-4254-a9f0-a8158b4caff0 Process finished with exit code 0

Spark记录-Spark作业调试的更多相关文章

- Spark记录-spark编程介绍

Spark核心编程 Spark 核心是整个项目的基础.它提供了分布式任务调度,调度和基本的 I/O 功能.Spark 使用一种称为RDD(弹性分布式数据集)一个专门的基础数据结构,是整个机器分区数据的 ...

- Spark记录-Spark性能优化解决方案

Spark性能优化的10大问题及其解决方案 问题1:reduce task数目不合适解决方式:需根据实际情况调节默认配置,调整方式是修改参数spark.default.parallelism.通常,r ...

- Spark记录-spark介绍

Apache Spark是一个集群计算设计的快速计算.它是建立在Hadoop MapReduce之上,它扩展了 MapReduce 模式,有效地使用更多类型的计算,其中包括交互式查询和流处理.这是一个 ...

- Spark记录-Spark On YARN内存分配(转载)

Spark On YARN内存分配(转载) 说明 按照Spark应用程序中的driver分布方式不同,Spark on YARN有两种模式: yarn-client模式.yarn-cluster模式. ...

- Spark记录-Spark性能优化(开发、资源、数据、shuffle)

开发调优篇 原则一:避免创建重复的RDD 通常来说,我们在开发一个Spark作业时,首先是基于某个数据源(比如Hive表或HDFS文件)创建一个初始的RDD:接着对这个RDD执行某个算子操作,然后得到 ...

- Spark记录-Spark on Yarn框架

一.客户端进行操作 1.根据yarnConf来初始化yarnClient,并启动yarnClient2.创建客户端Application,并获取Application的ID,进一步判断集群中的资源是否 ...

- Spark记录-Spark on mesos配置

1.安装mesos #用centos6的源yum安装 # rpm -Uvh http://repos.mesosphere.io/el/6/noarch/RPMS/mesosphere-el-repo ...

- Spark记录-spark与storm比对与选型(转载)

大数据实时处理平台市场上产品众多,本文着重讨论spark与storm的比对,最后结合适用场景进行选型. 一.spark与storm的比较 比较点 Storm Spark Streaming 实时计算模 ...

- Spark记录-spark报错Unable to load native-hadoop library for your platform

解决方案一: #cp $HADOOP_HOME/lib/native/libhadoop.so $JAVA_HOME/jre/lib/amd64 #源码编译snappy---./configure ...

随机推荐

- 一款基于Zigbee技术的智慧鱼塘系统研究与设计

在现代鱼塘养鱼中,主要困扰渔农的就是养殖成本问题.而鱼塘养殖成本最高的就是养殖的人工费,喂养的饲料费和鱼塘中高达几千瓦增氧机的消耗的电费.实现鱼塘自动化养殖将会很好地解决上述问题,大大提高渔农的经济效 ...

- linux下SpringBoot Jar包自启脚本配置

今天整理服务器上SpringBoot项目发现是自启的,于是想看看实现.翻看离职同事的交接文档发现一个***.service文件内容如下 [Unit] Description=sgfront After ...

- DevOps on AWS之Cloudformation概念介绍篇

Cloudformation的相关概念 AWS cloudformation是一项典型的(IAC)基础架构即代码服务..通过编写模板对亚马逊云服务的资源进行调用和编排.借助cloudformation ...

- Bitmap 位图 Java实现

一.结构思想 以 bit 作为存储单位进行布尔值存取的数据结构. 表现为:给定第i位,该bit为1则表示true,为0则表示false. 二.使用场景及优点 适用于对布尔或0.1值进行(大量)存取的场 ...

- dtcp格式定义

common name type optional comment id string y Content id version string y DTCP version. "1.0&qu ...

- ubuntu 下配置 开发环境

1. apache: sudo apt-get install apache2 安装好输入网址测试所否成功: http://localhost 2. mongo 已经安装好了 版本:2.4.8 ref ...

- 20135220谈愈敏Blog1_计算机是如何工作的

计算机是如何工作的 存储程序计算机工作模型 冯诺依曼体系结构 从硬件角度来看:CPU和内存,由总线连接,CPU中有一个名为IP的寄存器,总是指向内存的某一块:CS,代码段,执行命令时就取IP指向的一条 ...

- LINUX内核分析第五周学习总结——扒开应用系统的三层皮(下)

LINUX内核分析第五周学习总结——扒开应用系统的三层皮(下) 张忻(原创作品转载请注明出处) <Linux内核分析>MOOC课程http://mooc.study.163.com/cou ...

- Alpha版使用说明

1引言 1 .1编写目的 针对我们发布的alpha版本做出安装和使用说明,使参与内测的人员及用户了解软件的使用方法和相关内容. 1 .2参考资料 <javaWeb程序设计基础><di ...

- ElasticSearch 2 (31) - 信息聚合系列之时间处理

ElasticSearch 2 (31) - 信息聚合系列之时间处理 摘要 如果说搜索是 Elasticsearch 里最受欢迎的功能,那么按时间创建直方图一定排在第二位.为什么需要使用时间直方图? ...