WebRTC video display module: video_render

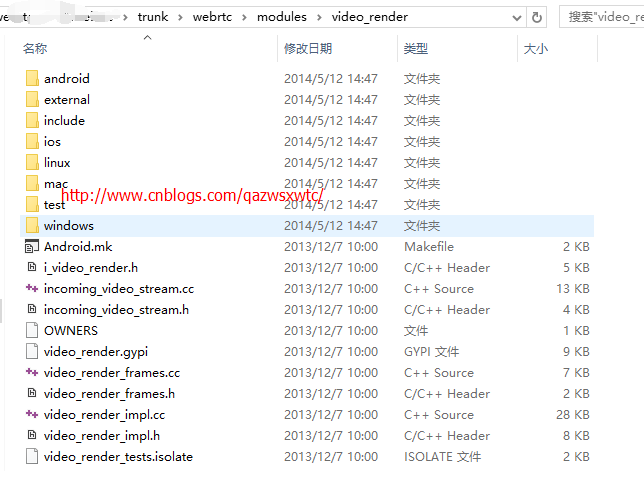

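In the previous post I briefly introduced the cross-platform video capture module that WebRTC provides. This post gives a similarly brief introduction to WebRTC's cross-platform video display module, video_render. The module's source tree is shown in the figure above.

As the figure shows, video_render supports Android, iOS, Linux, Mac, and Windows. To use it, we only need to build this module on its own, or extract it from the WebRTC source tree.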
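Every platform is reached through one factory, `VideoRender::CreateVideoRender()`, whose last argument selects the backend. The sketch below shows the general shape of such a factory. It is not WebRTC's implementation: the renderer classes are hypothetical, and the local `RenderType` enum only borrows a few names from `VideoRenderType` (`kRenderDefault`, `kRenderWindows`, `kRenderX11`, `kRenderExternal`).

```cpp
#include <memory>
#include <string>

// Hypothetical stand-in for a few webrtc::VideoRenderType values.
enum class RenderType { kDefault, kWindows, kX11, kExternal };

// Hypothetical common interface implemented by each platform backend.
class Renderer {
 public:
  virtual ~Renderer() = default;
  virtual std::string Name() const = 0;
};

class WindowsRenderer : public Renderer {
 public:
  std::string Name() const override { return "windows"; }
};

class X11Renderer : public Renderer {
 public:
  std::string Name() const override { return "x11"; }
};

// Draws nothing itself; frames would be handed to a user callback instead.
class ExternalRenderer : public Renderer {
 public:
  std::string Name() const override { return "external"; }
};

// Resolve kDefault to the backend that matches the build platform.
inline RenderType ResolveDefault(RenderType type) {
  if (type != RenderType::kDefault) return type;
#if defined(_WIN32)
  return RenderType::kWindows;
#elif defined(__linux__)
  return RenderType::kX11;
#else
  return RenderType::kExternal;
#endif
}

// One factory call; callers never name a platform class directly.
inline std::unique_ptr<Renderer> CreateRenderer(RenderType type) {
  switch (ResolveDefault(type)) {
    case RenderType::kWindows:
      return std::make_unique<WindowsRenderer>();
    case RenderType::kX11:
      return std::make_unique<X11Renderer>();
    default:
      return std::make_unique<ExternalRenderer>();
  }
}
```

Because the backend is chosen once behind one factory, the test program below can pass `kRenderWindows` or `kRenderX11` without changing any other call.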

The class declared in the video_render module's header is:

```cpp
// Excerpt from webrtc/modules/video_render/include/video_render.h
// (declared inside namespace webrtc).

// Class definitions
class VideoRender: public Module
{
public:
    /*
     *   Create a video render module object
     *
     *   id              - unique identifier of this video render module object
     *   window          - pointer to the window to render to
     *   fullscreen      - true if this is a fullscreen renderer
     *   videoRenderType - type of renderer to create
     */
    static VideoRender* CreateVideoRender(
        const int32_t id,
        void* window,
        const bool fullscreen,
        const VideoRenderType videoRenderType = kRenderDefault);

    /*
     *   Destroy a video render module object
     *
     *   module  - object to destroy
     */
    static void DestroyVideoRender(VideoRender* module);

    /*
     *   Change the unique identifier of this object
     *
     *   id      - new unique identifier of this video render module object
     */
    virtual int32_t ChangeUniqueId(const int32_t id) = 0;

    virtual int32_t TimeUntilNextProcess() = 0;
    virtual int32_t Process() = 0;

    /**************************************************************************
     *
     *   Window functions
     *
     ***************************************************************************/

    /*
     *   Get window for this renderer
     */
    virtual void* Window() = 0;

    /*
     *   Change render window
     *
     *   window  - the new render window, assuming same type as originally created.
     */
    virtual int32_t ChangeWindow(void* window) = 0;

    /**************************************************************************
     *
     *   Incoming Streams
     *
     ***************************************************************************/

    /*
     *   Add incoming render stream
     *
     *   streamID    - id of the stream to add
     *   zOrder      - relative render order for the streams, 0 = on top
     *   left        - position of the stream in the window, [0.0f, 1.0f]
     *   top         - position of the stream in the window, [0.0f, 1.0f]
     *   right       - position of the stream in the window, [0.0f, 1.0f]
     *   bottom      - position of the stream in the window, [0.0f, 1.0f]
     *
     *   Return      - callback class to use for delivering new frames to render.
     */
    virtual VideoRenderCallback* AddIncomingRenderStream(
        const uint32_t streamId,
        const uint32_t zOrder,
        const float left, const float top,
        const float right, const float bottom) = 0;

    /*
     *   Delete incoming render stream
     *
     *   streamID    - id of the stream to delete
     */
    virtual int32_t DeleteIncomingRenderStream(const uint32_t streamId) = 0;

    /*
     *   Add incoming render callback, used for external rendering
     *
     *   streamID     - id of the stream the callback is used for
     *   renderObject - the VideoRenderCallback to use for this stream, NULL to remove
     *
     *   Return       - 0 on success.
     */
    virtual int32_t AddExternalRenderCallback(
        const uint32_t streamId,
        VideoRenderCallback* renderObject) = 0;

    /*
     *   Get the properties for an incoming render stream
     *
     *   streamID    - [in] id of the stream to get properties for
     *   zOrder      - [out] relative render order for the streams, 0 = on top
     *   left        - [out] position of the stream in the window, [0.0f, 1.0f]
     *   top         - [out] position of the stream in the window, [0.0f, 1.0f]
     *   right       - [out] position of the stream in the window, [0.0f, 1.0f]
     *   bottom      - [out] position of the stream in the window, [0.0f, 1.0f]
     */
    virtual int32_t GetIncomingRenderStreamProperties(
        const uint32_t streamId,
        uint32_t& zOrder,
        float& left, float& top,
        float& right, float& bottom) const = 0;

    /*
     *   The incoming frame rate to the module, not the rate rendered in the window.
     */
    virtual uint32_t GetIncomingFrameRate(const uint32_t streamId) = 0;

    /*
     *   Returns the number of incoming streams added to this render module
     */
    virtual uint32_t GetNumIncomingRenderStreams() const = 0;

    /*
     *   Returns true if this render module has the streamId added, false otherwise.
     */
    virtual bool HasIncomingRenderStream(const uint32_t streamId) const = 0;

    /*
     *   Registers a callback to get raw images at the same time as they are
     *   sent to the renderer. To be used for external rendering.
     */
    virtual int32_t RegisterRawFrameCallback(
        const uint32_t streamId,
        VideoRenderCallback* callbackObj) = 0;

    /*
     *   This method is useful to get the last rendered frame for the specified stream
     */
    virtual int32_t GetLastRenderedFrame(const uint32_t streamId,
                                         I420VideoFrame &frame) const = 0;

    /**************************************************************************
     *
     *   Start/Stop
     *
     ***************************************************************************/

    /*
     *   Starts rendering the specified stream
     */
    virtual int32_t StartRender(const uint32_t streamId) = 0;

    /*
     *   Stops the renderer
     */
    virtual int32_t StopRender(const uint32_t streamId) = 0;

    /*
     *   Resets the renderer
     *   No streams are removed. The state should be as after AddStream was called.
     */
    virtual int32_t ResetRender() = 0;

    /**************************************************************************
     *
     *   Properties
     *
     ***************************************************************************/

    /*
     *   Returns the preferred render video type
     */
    virtual RawVideoType PreferredVideoType() const = 0;

    /*
     *   Returns true if the renderer is in fullscreen mode, otherwise false.
     */
    virtual bool IsFullScreen() = 0;

    /*
     *   Gets screen resolution in pixels
     */
    virtual int32_t GetScreenResolution(uint32_t& screenWidth,
                                        uint32_t& screenHeight) const = 0;

    /*
     *   Get the actual render rate for this stream, i.e. the rendered frame
     *   rate, not the rate of frames delivered to the renderer.
     */
    virtual uint32_t RenderFrameRate(const uint32_t streamId) = 0;

    /*
     *   Set cropping of incoming stream
     */
    virtual int32_t SetStreamCropping(const uint32_t streamId,
                                      const float left,
                                      const float top,
                                      const float right,
                                      const float bottom) = 0;

    // Set the expected time needed by the graphics card or external renderer,
    // i.e. frames will be released for rendering |delay_ms| before set render
    // time in the video frame.
    virtual int32_t SetExpectedRenderDelay(uint32_t stream_id,
                                           int32_t delay_ms) = 0;

    /*
     *   re-configure renderer
     */
    virtual int32_t ConfigureRenderer(const uint32_t streamId,
                                      const unsigned int zOrder,
                                      const float left,
                                      const float top,
                                      const float right,
                                      const float bottom) = 0;

    virtual int32_t SetTransparentBackground(const bool enable) = 0;

    virtual int32_t FullScreenRender(void* window, const bool enable) = 0;

    virtual int32_t SetBitmap(const void* bitMap,
                              const uint8_t pictureId,
                              const void* colorKey,
                              const float left, const float top,
                              const float right, const float bottom) = 0;

    virtual int32_t SetText(const uint8_t textId,
                            const uint8_t* text,
                            const int32_t textLength,
                            const uint32_t textColorRef,
                            const uint32_t backgroundColorRef,
                            const float left, const float top,
                            const float right, const float bottom) = 0;

    /*
     *   Set a start image. The image is rendered before the first image has been delivered
     */
    virtual int32_t SetStartImage(const uint32_t streamId,
                                  const I420VideoFrame& videoFrame) = 0;

    /*
     *   Set a timeout image. The image is rendered if no video frame has been delivered
     */
    virtual int32_t SetTimeoutImage(const uint32_t streamId,
                                    const I420VideoFrame& videoFrame,
                                    const uint32_t timeout) = 0;

    virtual int32_t MirrorRenderStream(const int renderId,
                                       const bool enable,
                                       const bool mirrorXAxis,
                                       const bool mirrorYAxis) = 0;
};
```
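`AddIncomingRenderStream()` and `ConfigureRenderer()` place a stream with normalized `left`/`top`/`right`/`bottom` values in [0.0f, 1.0f]. To show what those numbers mean in pixels, here is a minimal sketch that assumes a simple linear mapping onto the window. `PixelRect` and `ToPixelRect` are hypothetical names and are not part of the module.

```cpp
#include <cmath>

// Pixel rectangle inside the render window (hypothetical type).
struct PixelRect {
  long x;       // left edge, in pixels
  long y;       // top edge, in pixels
  long width;
  long height;
};

// Maps normalized [0.0f, 1.0f] edges onto a winWidth x winHeight window,
// assuming a linear mapping. Returns false for out-of-range or empty input.
inline bool ToPixelRect(float left, float top, float right, float bottom,
                        int winWidth, int winHeight, PixelRect* out) {
  auto inRange = [](float v) { return v >= 0.0f && v <= 1.0f; };
  if (!inRange(left) || !inRange(top) || !inRange(right) || !inRange(bottom) ||
      left >= right || top >= bottom || winWidth <= 0 || winHeight <= 0) {
    return false;
  }
  // Round each edge first and then derive the size, so that two regions
  // sharing an edge value can never overlap or leave a one-pixel gap.
  const long x0 = std::lround(left * winWidth);
  const long y0 = std::lround(top * winHeight);
  const long x1 = std::lround(right * winWidth);
  const long y1 = std::lround(bottom * winHeight);
  *out = PixelRect{x0, y0, x1 - x0, y1 - y0};
  return true;
}
```

For example, the region (0.55f, 0.0f, 1.0f, 0.45f), which the test program uses for stream 1, maps in a 640x480 window to a 288x216 rectangle that starts at x = 352, y = 0.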

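1. Before anything can be displayed, the module must be given a pointer to the window it will draw into. That pointer is passed through the following function. In the test program below, it is an `HWND` on Windows and an X11 `Window` on Linux, cast to `void*`:

```cpp
static VideoRender* CreateVideoRender(
    const int32_t id,
    void* window,
    const bool fullscreen,
    const VideoRenderType videoRenderType = kRenderDefault);
```

2. The following two functions start and stop rendering of a given stream:

```cpp
/*
 *   Starts rendering the specified stream
 */
virtual int32_t StartRender(const uint32_t streamId) = 0;

/*
 *   Stops the renderer
 */
virtual int32_t StopRender(const uint32_t streamId) = 0;
```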
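The test program below always drives a stream through the same sequence. It calls `AddIncomingRenderStream`, then `StartRender`, then delivers frames through the returned callback, and finally calls `StopRender` and `DeleteIncomingRenderStream`, checking for a return value of 0 at each step. The following self-contained model captures that ordering. The `StreamRegistry` class is hypothetical. Two behaviours are choices made for this sketch and are not documented video_render behaviour: returning -1 on misuse, and refusing to delete a stream that is still running.

```cpp
#include <cstdint>
#include <map>

// Hypothetical model of the per-stream call order used by the test program.
// Returns 0 on success (as the test expects) and -1 on misuse (assumption).
class StreamRegistry {
 public:
  int32_t Add(uint32_t streamId) {
    // emplace() fails if the id is already registered.
    return streams_.emplace(streamId, false).second ? 0 : -1;
  }
  int32_t Start(uint32_t streamId) { return SetRunning(streamId, true); }
  int32_t Stop(uint32_t streamId) { return SetRunning(streamId, false); }
  int32_t Delete(uint32_t streamId) {
    auto it = streams_.find(streamId);
    if (it == streams_.end() || it->second) return -1;  // must stop first
    streams_.erase(it);
    return 0;
  }
  // In this model, frames are only accepted while the stream is started.
  bool AcceptsFrames(uint32_t streamId) const {
    auto it = streams_.find(streamId);
    return it != streams_.end() && it->second;
  }
  uint32_t NumStreams() const { return static_cast<uint32_t>(streams_.size()); }

 private:
  int32_t SetRunning(uint32_t streamId, bool running) {
    auto it = streams_.find(streamId);
    if (it == streams_.end() || it->second == running) return -1;
    it->second = running;
    return 0;
  }
  std::map<uint32_t, bool> streams_;  // streamId -> started?
};
```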

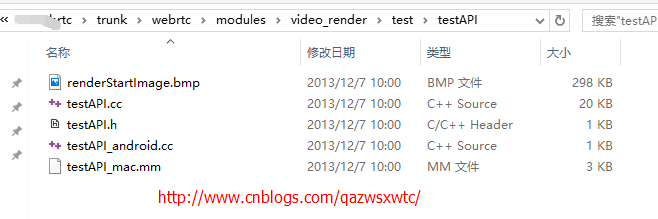

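WebRTC also ships its own sample program that calls video_render (see the figure above).

The sample covers direct C++ usage (mainly for Windows, with an X11 path for Linux), an Android version (which actually contains no real source code, although you can write one by following the Windows usage), and iOS usage. Only the direct C++ program is listed below.

The header file: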

```cpp
/*
 *  Copyright (c) 2011 The WebRTC project authors. All Rights Reserved.
 *
 *  Use of this source code is governed by a BSD-style license
 *  that can be found in the LICENSE file in the root of the source
 *  tree. An additional intellectual property rights grant can be found
 *  in the file PATENTS.  All contributing project authors may
 *  be found in the AUTHORS file in the root of the source tree.
 */

#ifndef WEBRTC_MODULES_VIDEO_RENDER_MAIN_TEST_TESTAPI_TESTAPI_H
#define WEBRTC_MODULES_VIDEO_RENDER_MAIN_TEST_TESTAPI_TESTAPI_H

#include "webrtc/modules/video_render/include/video_render_defines.h"

void RunVideoRenderTests(void* window, webrtc::VideoRenderType windowType);

#endif  // WEBRTC_MODULES_VIDEO_RENDER_MAIN_TEST_TESTAPI_TESTAPI_H
```

The source file:

```cpp
/*
 *  Copyright (c) 2012 The WebRTC project authors. All Rights Reserved.
 *
 *  Use of this source code is governed by a BSD-style license
 *  that can be found in the LICENSE file in the root of the source
 *  tree. An additional intellectual property rights grant can be found
 *  in the file PATENTS.  All contributing project authors may
 *  be found in the AUTHORS file in the root of the source tree.
 */

#include "webrtc/modules/video_render/test/testAPI/testAPI.h"

#include <assert.h>  // assert() is used on every platform
#include <stdio.h>
#include <string.h>  // memset

#if defined(_WIN32)
#include <tchar.h>
#include <windows.h>
#include <fstream>
#include <iostream>
#include <string>
#include <ddraw.h>
#elif defined(WEBRTC_LINUX) && !defined(WEBRTC_ANDROID)
#include <X11/Xlib.h>
#include <X11/Xutil.h>
#include <iostream>
#include <sys/time.h>
#endif

#include "webrtc/common_types.h"
#include "webrtc/modules/interface/module_common_types.h"
#include "webrtc/modules/utility/interface/process_thread.h"
#include "webrtc/modules/video_render/include/video_render.h"
#include "webrtc/modules/video_render/include/video_render_defines.h"
#include "webrtc/system_wrappers/interface/sleep.h"
#include "webrtc/system_wrappers/interface/tick_util.h"
#include "webrtc/system_wrappers/interface/trace.h"

using namespace webrtc;

void GetTestVideoFrame(I420VideoFrame* frame,
                       uint8_t startColor);
int TestSingleStream(VideoRender* renderModule);
int TestFullscreenStream(VideoRender* &renderModule,
                         void* window,
                         const VideoRenderType videoRenderType);
int TestBitmapText(VideoRender* renderModule);
int TestMultipleStreams(VideoRender* renderModule);
int TestExternalRender(VideoRender* renderModule);

#define TEST_FRAME_RATE 30
#define TEST_TIME_SECOND 5
#define TEST_FRAME_NUM (TEST_FRAME_RATE*TEST_TIME_SECOND)
#define TEST_STREAM0_START_COLOR 0
#define TEST_STREAM1_START_COLOR 64
#define TEST_STREAM2_START_COLOR 128
#define TEST_STREAM3_START_COLOR 192

#if defined(WEBRTC_LINUX)

#define GET_TIME_IN_MS timeGetTime()

unsigned long timeGetTime()
{
    struct timeval tv;
    struct timezone tz;
    unsigned long val;

    gettimeofday(&tv, &tz);
    val = tv.tv_sec * 1000 + tv.tv_usec / 1000;  // milliseconds
    return(val);
}

#elif defined(WEBRTC_MAC)

#include <unistd.h>

#define GET_TIME_IN_MS timeGetTime()

unsigned long timeGetTime()
{
    return 0;
}

#else

#define GET_TIME_IN_MS ::timeGetTime()

#endif

using namespace std;

#if defined(_WIN32)
LRESULT CALLBACK WebRtcWinProc( HWND hWnd,UINT uMsg,WPARAM wParam,LPARAM lParam)
{
    switch(uMsg)
    {
        case WM_DESTROY:
        break;
        case WM_COMMAND:
        break;
    }
    return DefWindowProc(hWnd,uMsg,wParam,lParam);
}

int WebRtcCreateWindow(HWND &hwndMain,int winNum, int width, int height)
{
    HINSTANCE hinst = GetModuleHandle(NULL);
    WNDCLASSEX wcx;
    wcx.hInstance = hinst;
    wcx.lpszClassName = TEXT("VideoRenderTest");
    wcx.lpfnWndProc = (WNDPROC)WebRtcWinProc;
    wcx.style = CS_DBLCLKS;
    wcx.hIcon = LoadIcon (NULL, IDI_APPLICATION);
    wcx.hIconSm = LoadIcon (NULL, IDI_APPLICATION);
    wcx.hCursor = LoadCursor (NULL, IDC_ARROW);
    wcx.lpszMenuName = NULL;
    wcx.cbSize = sizeof (WNDCLASSEX);
    wcx.cbClsExtra = 0;
    wcx.cbWndExtra = 0;
    wcx.hbrBackground = GetSysColorBrush(COLOR_3DFACE);

    // Register our window class with the operating system.
    // If there is an error, exit program.
    if ( !RegisterClassEx (&wcx) )
    {
        MessageBox( NULL, TEXT("Failed to register window class!"),TEXT("Error!"), MB_OK|MB_ICONERROR );
        return -1;
    }

    // Create the main window.
    hwndMain = CreateWindowEx(
            0,                              // no extended styles
            TEXT("VideoRenderTest"),        // class name
            TEXT("VideoRenderTest Window"), // window name
            WS_OVERLAPPED |WS_THICKFRAME,   // overlapped window
            ,                               // horizontal position
            ,                               // vertical position
            width,                          // width
            height,                         // height
            (HWND) NULL,                    // no parent or owner window
            (HMENU) NULL,                   // class menu used
            hinst,                          // instance handle
            NULL);                          // no window creation data

    if (!hwndMain)
        return -1;

    // Show the window using the flag specified by the program
    // that started the application, and send the application
    // a WM_PAINT message.
    ShowWindow(hwndMain, SW_SHOWDEFAULT);
    UpdateWindow(hwndMain);
    return 0;
}

#elif defined(WEBRTC_LINUX) && !defined(WEBRTC_ANDROID)

int WebRtcCreateWindow(Window *outWindow, Display **outDisplay, int winNum, int width, int height) // unsigned char* title, int titleLength)
{
    int screen, xpos = , ypos = ;
    XEvent evnt;
    XSetWindowAttributes xswa; // window attribute struct
    XVisualInfo vinfo;         // screen visual info struct
    unsigned long mask;        // attribute mask

    // get connection handle to xserver
    Display* _display = XOpenDisplay( NULL );

    // get screen number
    screen = DefaultScreen(_display);

    // put desired visual info for the screen in vinfo
    if( XMatchVisualInfo(_display, screen, , TrueColor, &vinfo) != 0 )
    {
        //printf( "Screen visual info match!\n" );
    }

    // set window attributes
    xswa.colormap = XCreateColormap(_display, DefaultRootWindow(_display), vinfo.visual, AllocNone);
    xswa.event_mask = StructureNotifyMask | ExposureMask;
    xswa.background_pixel = ;
    xswa.border_pixel = ;

    // value mask for attributes
    mask = CWBackPixel | CWBorderPixel | CWColormap | CWEventMask;

    switch( winNum )
    {
        case :
        xpos = ;
        ypos = ;
        break;
        case :
        xpos = ;
        ypos = ;
        break;
        default:
        break;
    }

    // create a subwindow for parent (defroot)
    Window _window = XCreateWindow(_display, DefaultRootWindow(_display),
            xpos, ypos,
            width,
            height,
            , vinfo.depth,
            InputOutput,
            vinfo.visual,
            mask, &xswa);

    // Set window name
    if( winNum == )
    {
        XStoreName(_display, _window, "VE MM Local Window");
        XSetIconName(_display, _window, "VE MM Local Window");
    }
    else if( winNum == )
    {
        XStoreName(_display, _window, "VE MM Remote Window");
        XSetIconName(_display, _window, "VE MM Remote Window");
    }

    // make x report events for mask
    XSelectInput(_display, _window, StructureNotifyMask);

    // map the window to the display
    XMapWindow(_display, _window);

    // wait for map event
    do
    {
        XNextEvent(_display, &evnt);
    }
    while (evnt.type != MapNotify || evnt.xmap.event != _window);

    *outWindow = _window;
    *outDisplay = _display;

    return 0;
}
#endif  // LINUX

// Note: Mac code is in testApi_mac.mm.

class MyRenderCallback: public VideoRenderCallback
{
public:
    MyRenderCallback() :
        _cnt(0)
    {
    }

    ~MyRenderCallback()
    {
    }

    virtual int32_t RenderFrame(const uint32_t streamId,
                                I420VideoFrame& videoFrame)
    {
        _cnt++;
        if (_cnt %  == 0)
        {
            printf("Render callback %d \n",_cnt);
        }
        return 0;
    }
    int32_t _cnt;
};

void GetTestVideoFrame(I420VideoFrame* frame,
                       uint8_t startColor) {
    // changing color
    // Note: 'color' is static, so startColor only takes effect on the very
    // first call; every later call, for any stream, continues the same counter.
    static uint8_t color = startColor;

    memset(frame->buffer(kYPlane), color, frame->allocated_size(kYPlane));
    memset(frame->buffer(kUPlane), color, frame->allocated_size(kUPlane));
    memset(frame->buffer(kVPlane), color, frame->allocated_size(kVPlane));

    ++color;
}

int TestSingleStream(VideoRender* renderModule) {
    int error = 0;
    // Add settings for a stream to render
    printf("Add stream 0 to entire window\n");
    const int streamId0 = 0;
    VideoRenderCallback* renderCallback0 = renderModule->AddIncomingRenderStream(streamId0, , 0.0f, 0.0f, 1.0f, 1.0f);
    assert(renderCallback0 != NULL);

#ifndef WEBRTC_INCLUDE_INTERNAL_VIDEO_RENDER
    MyRenderCallback externalRender;
    renderModule->AddExternalRenderCallback(streamId0, &externalRender);
#endif

    printf("Start render\n");
    error = renderModule->StartRender(streamId0);
    if (error != 0) {
      // TODO(phoglund): This test will not work if compiled in release mode.
      // This rather silly construct here is to avoid compilation errors when
      // compiling in release. Release => no asserts => unused 'error' variable.
      assert(false);
    }

    // Generate solid-color I420 frames and render each one
    const int width = ;
    const int half_width = (width + 1) / 2;
    const int height = ;

    I420VideoFrame videoFrame0;
    videoFrame0.CreateEmptyFrame(width, height, width, half_width, half_width);

    const uint32_t renderDelayMs = ;

    for (int i=0; i<TEST_FRAME_NUM; i++) {
        GetTestVideoFrame(&videoFrame0, TEST_STREAM0_START_COLOR);
        // Render this frame with the specified delay
        videoFrame0.set_render_time_ms(TickTime::MillisecondTimestamp()
                                       + renderDelayMs);
        renderCallback0->RenderFrame(streamId0, videoFrame0);
        SleepMs(1000/TEST_FRAME_RATE);
    }

    // Shut down
    printf("Closing...\n");
    error = renderModule->StopRender(streamId0);
    assert(error == 0);

    error = renderModule->DeleteIncomingRenderStream(streamId0);
    assert(error == 0);

    return 0;
}

int TestFullscreenStream(VideoRender* &renderModule,
                         void* window,
                         const VideoRenderType videoRenderType) {
    VideoRender::DestroyVideoRender(renderModule);
    renderModule = VideoRender::CreateVideoRender( , window, true, videoRenderType);

    TestSingleStream(renderModule);

    VideoRender::DestroyVideoRender(renderModule);
    renderModule = VideoRender::CreateVideoRender( , window, false, videoRenderType);

    return 0;
}

int TestBitmapText(VideoRender* renderModule) {
#if defined(WIN32)

    int error = 0;
    // Add settings for a stream to render
    printf("Add stream 0 to entire window\n");
    const int streamId0 = 0;
    VideoRenderCallback* renderCallback0 = renderModule->AddIncomingRenderStream(streamId0, , 0.0f, 0.0f, 1.0f, 1.0f);
    assert(renderCallback0 != NULL);

    printf("Adding Bitmap\n");
    DDCOLORKEY ColorKey; // black
    ColorKey.dwColorSpaceHighValue = RGB(0, 0, 0);
    ColorKey.dwColorSpaceLowValue = RGB(0, 0, 0);
    HBITMAP hbm = (HBITMAP)LoadImage(NULL,
                                     (LPCTSTR)_T("renderStartImage.bmp"),
                                     IMAGE_BITMAP, , , LR_LOADFROMFILE);
    renderModule->SetBitmap(hbm, , &ColorKey, 0.0f, 0.0f, 0.3f,
                             0.3f);

    printf("Adding Text\n");
    renderModule->SetText(, (uint8_t*) "WebRtc Render Demo App", ,
                           RGB(, , ), RGB(, , ), 0.25f, 0.1f, 1.0f,
                           1.0f);

    printf("Start render\n");
    error = renderModule->StartRender(streamId0);
    assert(error == 0);

    // Generate solid-color I420 frames and render each one
    const int width = ;
    const int half_width = (width + 1) / 2;
    const int height = ;

    I420VideoFrame videoFrame0;
    videoFrame0.CreateEmptyFrame(width, height, width, half_width, half_width);

    const uint32_t renderDelayMs = ;

    for (int i=0; i<TEST_FRAME_NUM; i++) {
        GetTestVideoFrame(&videoFrame0, TEST_STREAM0_START_COLOR);
        // Render this frame with the specified delay
        videoFrame0.set_render_time_ms(TickTime::MillisecondTimestamp() +
                                       renderDelayMs);
        renderCallback0->RenderFrame(streamId0, videoFrame0);
        SleepMs(1000/TEST_FRAME_RATE);
    }
    // Sleep and let all frames be rendered before closing
    SleepMs(renderDelayMs*);

    // Shut down
    printf("Closing...\n");
    ColorKey.dwColorSpaceHighValue = RGB(,,);
    ColorKey.dwColorSpaceLowValue = RGB(,,);
    renderModule->SetBitmap(NULL, , &ColorKey, 0.0f, 0.0f, 0.0f, 0.0f);
    renderModule->SetText(, NULL, , RGB(,,),
                          RGB(,,), 0.0f, 0.0f, 0.0f, 0.0f);

    error = renderModule->StopRender(streamId0);
    assert(error == 0);

    error = renderModule->DeleteIncomingRenderStream(streamId0);
    assert(error == 0);
#endif

    return 0;
}

int TestMultipleStreams(VideoRender* renderModule) {
    // Add settings for a stream to render
    printf("Add stream 0\n");
    const int streamId0 = 0;
    VideoRenderCallback* renderCallback0 =
        renderModule->AddIncomingRenderStream(streamId0, , 0.0f, 0.0f, 0.45f, 0.45f);
    assert(renderCallback0 != NULL);
    printf("Add stream 1\n");
    const int streamId1 = 1;
    VideoRenderCallback* renderCallback1 =
        renderModule->AddIncomingRenderStream(streamId1, , 0.55f, 0.0f, 1.0f, 0.45f);
    assert(renderCallback1 != NULL);
    printf("Add stream 2\n");
    const int streamId2 = 2;
    VideoRenderCallback* renderCallback2 =
        renderModule->AddIncomingRenderStream(streamId2, , 0.0f, 0.55f, 0.45f, 1.0f);
    assert(renderCallback2 != NULL);
    printf("Add stream 3\n");
    const int streamId3 = 3;
    VideoRenderCallback* renderCallback3 =
        renderModule->AddIncomingRenderStream(streamId3, , 0.55f, 0.55f, 1.0f, 1.0f);
    assert(renderCallback3 != NULL);

    // Note: each call below sits inside assert(), so it is compiled out when
    // NDEBUG is defined (release builds); see the TODO in TestSingleStream.
    assert(renderModule->StartRender(streamId0) == 0);
    assert(renderModule->StartRender(streamId1) == 0);
    assert(renderModule->StartRender(streamId2) == 0);
    assert(renderModule->StartRender(streamId3) == 0);

    // Generate solid-color I420 frames and render each one
    const int width = ;
    const int half_width = (width + 1) / 2;
    const int height = ;

    I420VideoFrame videoFrame0;
    videoFrame0.CreateEmptyFrame(width, height, width, half_width, half_width);
    I420VideoFrame videoFrame1;
    videoFrame1.CreateEmptyFrame(width, height, width, half_width, half_width);
    I420VideoFrame videoFrame2;
    videoFrame2.CreateEmptyFrame(width, height, width, half_width, half_width);
    I420VideoFrame videoFrame3;
    videoFrame3.CreateEmptyFrame(width, height, width, half_width, half_width);

    const uint32_t renderDelayMs = ;

    // Render frames with the specified delay.
    for (int i=0; i<TEST_FRAME_NUM; i++) {
      GetTestVideoFrame(&videoFrame0, TEST_STREAM0_START_COLOR);
      videoFrame0.set_render_time_ms(TickTime::MillisecondTimestamp() +
                                     renderDelayMs);
      renderCallback0->RenderFrame(streamId0, videoFrame0);

      GetTestVideoFrame(&videoFrame1, TEST_STREAM1_START_COLOR);
      videoFrame1.set_render_time_ms(TickTime::MillisecondTimestamp() +
                                     renderDelayMs);
      renderCallback1->RenderFrame(streamId1, videoFrame1);

      GetTestVideoFrame(&videoFrame2, TEST_STREAM2_START_COLOR);
      videoFrame2.set_render_time_ms(TickTime::MillisecondTimestamp() +
                                     renderDelayMs);
      renderCallback2->RenderFrame(streamId2, videoFrame2);

      GetTestVideoFrame(&videoFrame3, TEST_STREAM3_START_COLOR);
      videoFrame3.set_render_time_ms(TickTime::MillisecondTimestamp() +
                                     renderDelayMs);
      renderCallback3->RenderFrame(streamId3, videoFrame3);

      SleepMs(1000/TEST_FRAME_RATE);
    }

    // Shut down
    printf("Closing...\n");
    assert(renderModule->StopRender(streamId0) == 0);
    assert(renderModule->DeleteIncomingRenderStream(streamId0) == 0);
    assert(renderModule->StopRender(streamId1) == 0);
    assert(renderModule->DeleteIncomingRenderStream(streamId1) == 0);
    assert(renderModule->StopRender(streamId2) == 0);
    assert(renderModule->DeleteIncomingRenderStream(streamId2) == 0);
    assert(renderModule->StopRender(streamId3) == 0);
    assert(renderModule->DeleteIncomingRenderStream(streamId3) == 0);

    return 0;
}

int TestExternalRender(VideoRender* renderModule) {
    MyRenderCallback *externalRender = new MyRenderCallback();

    const int streamId0 = 0;
    VideoRenderCallback* renderCallback0 =
        renderModule->AddIncomingRenderStream(streamId0, , 0.0f, 0.0f,
                                              1.0f, 1.0f);
    assert(renderCallback0 != NULL);
    // As above, these asserted calls disappear in release (NDEBUG) builds.
    assert(renderModule->AddExternalRenderCallback(streamId0,
                                                   externalRender) == 0);

    assert(renderModule->StartRender(streamId0) == 0);

    const int width = ;
    const int half_width = (width + 1) / 2;
    const int height = ;
    I420VideoFrame videoFrame0;
    videoFrame0.CreateEmptyFrame(width, height, width, half_width, half_width);

    const uint32_t renderDelayMs = ;
    int frameCount = TEST_FRAME_NUM;
    for (int i=0; i<frameCount; i++) {
        videoFrame0.set_render_time_ms(TickTime::MillisecondTimestamp() +
                                       renderDelayMs);
        renderCallback0->RenderFrame(streamId0, videoFrame0);
        SleepMs();
    }
    // Sleep and let all frames be rendered before closing
    SleepMs(*renderDelayMs);

    assert(renderModule->StopRender(streamId0) == 0);
    assert(renderModule->DeleteIncomingRenderStream(streamId0) == 0);
    assert(frameCount == externalRender->_cnt);

    delete externalRender;
    externalRender = NULL;

    return 0;
}

void RunVideoRenderTests(void* window, VideoRenderType windowType) {
#ifndef WEBRTC_INCLUDE_INTERNAL_VIDEO_RENDER
    windowType = kRenderExternal;
#endif

    int myId = ;

    // Create the render module
    printf("Create render module\n");
    VideoRender* renderModule = NULL;
    renderModule = VideoRender::CreateVideoRender(myId,
                                                  window,
                                                  false,
                                                  windowType);
    assert(renderModule != NULL);

    // ##### Test single stream rendering ####
    printf("#### TestSingleStream ####\n");
    if (TestSingleStream(renderModule) != 0) {
        printf ("TestSingleStream failed\n");
    }

    // ##### Test fullscreen rendering ####
    printf("#### TestFullscreenStream ####\n");
    if (TestFullscreenStream(renderModule, window, windowType) != 0) {
        printf ("TestFullscreenStream failed\n");
    }

    // ##### Test bitmap and text ####
    printf("#### TestBitmapText ####\n");
    if (TestBitmapText(renderModule) != 0) {
        printf ("TestBitmapText failed\n");
    }

    // ##### Test multiple streams ####
    printf("#### TestMultipleStreams ####\n");
    if (TestMultipleStreams(renderModule) != 0) {
        printf ("TestMultipleStreams failed\n");
    }

    // ##### Test external rendering ####
    printf("#### TestExternalRender ####\n");
    if (TestExternalRender(renderModule) != 0) {
        printf ("TestExternalRender failed\n");
    }

    delete renderModule;
    renderModule = NULL;

    printf("VideoRender unit tests passed.\n");
}

// Note: The Mac main is implemented in testApi_mac.mm.
#if defined(_WIN32)
int _tmain(int argc, _TCHAR* argv[])
#elif defined(WEBRTC_LINUX) && !defined(WEBRTC_ANDROID)
int main(int argc, char* argv[])
#endif
#if !defined(WEBRTC_MAC) && !defined(WEBRTC_ANDROID)
{
    // Create a window for testing.
    void* window = NULL;
#if defined (_WIN32)
    HWND testHwnd;
    WebRtcCreateWindow(testHwnd, , , );
    window = (void*)testHwnd;
    VideoRenderType windowType = kRenderWindows;
#elif defined(WEBRTC_LINUX)
    Window testWindow;
    Display* display;
    WebRtcCreateWindow(&testWindow, &display, , , );
    VideoRenderType windowType = kRenderX11;
    window = (void*)testWindow;
#endif  // WEBRTC_LINUX

    RunVideoRenderTests(window, windowType);
    return 0;
}
#endif  // !WEBRTC_MAC
```
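Each test stamps its frames with `TickTime::MillisecondTimestamp() + renderDelayMs`. The header comment on `SetExpectedRenderDelay()` says a frame is released for rendering `delay_ms` before that render time. This post does not show how video_render schedules frames internally. The code below is a minimal sketch of one way such a release queue could work; the `TimedFrame` type and the `RenderQueue` class are hypothetical and not part of WebRTC.

```cpp
#include <cstdint>
#include <queue>
#include <vector>

// A frame reduced to what scheduling needs (hypothetical type).
struct TimedFrame {
  int64_t renderTimeMs;  // analogous to the frame's render_time_ms()
  int id;
};

// Hypothetical release queue: a frame is handed to the renderer
// expectedDelayMs before its render time. Frames leave in render-time order.
class RenderQueue {
 public:
  explicit RenderQueue(int64_t expectedDelayMs) : delayMs_(expectedDelayMs) {}

  void Push(const TimedFrame& frame) { frames_.push(frame); }

  // Returns every frame whose release time (renderTimeMs - delay) has passed.
  std::vector<TimedFrame> PopReady(int64_t nowMs) {
    std::vector<TimedFrame> ready;
    while (!frames_.empty() && frames_.top().renderTimeMs - delayMs_ <= nowMs) {
      ready.push_back(frames_.top());
      frames_.pop();
    }
    return ready;
  }

 private:
  // Min-heap on render time: the earliest frame sits at the top.
  struct Later {
    bool operator()(const TimedFrame& a, const TimedFrame& b) const {
      return a.renderTimeMs > b.renderTimeMs;
    }
  };
  int64_t delayMs_;
  std::priority_queue<TimedFrame, std::vector<TimedFrame>, Later> frames_;
};
```

In this model, the delivering thread calls `Push()` as each frame arrives, while a render thread polls `PopReady()` with the current time. A larger expected delay simply moves every release earlier by the same amount.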
