Building a Highly Available, Fully Distributed Hadoop 3.1.2 + ZooKeeper 3.5.5 Cluster on Ubuntu 18.04.2

Cluster plan:

| hostname | NameNode | DataNode | JournalNode | ResourceManager | Zookeeper |
|---|---|---|---|---|---|
| node01 | √ | √ | | √ | |
| node02 | √ | √ | | | |
| node03 | | √ | √ | √ | √ |
| node04 | | √ | √ | | √ |
| node05 | | √ | √ | | √ |

准备工作:

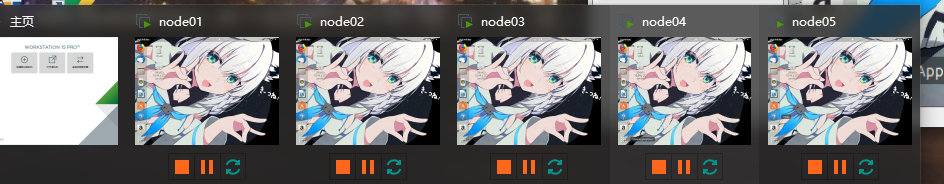

首先克隆5台ubuntu虚拟机

vim /etc/netplan/01-network-manager-all.yaml修改网络配置

我的5台网络配置如下: (ps: 由于这次是家里台式, 所以网关和之前笔记本搭的那次不一样)

# Let NetworkManager manage all devices on this system
# node01
network:
  version: 2
  renderer: NetworkManager
  ethernets:
    ens33:
      dhcp4: no
      dhcp6: no
      addresses: [192.168.180.130/24]
      gateway4: 192.168.180.2
      nameservers:
        addresses: [114.114.114.114, 8.8.8.8]

# Let NetworkManager manage all devices on this system
# node02
network:
  version: 2
  renderer: NetworkManager
  ethernets:
    ens33:
      dhcp4: no
      dhcp6: no
      addresses: [192.168.180.131/24]
      gateway4: 192.168.180.2
      nameservers:
        addresses: [114.114.114.114, 8.8.8.8]

# Let NetworkManager manage all devices on this system
# node03
network:
  version: 2
  renderer: NetworkManager
  ethernets:
    ens33:
      dhcp4: no
      dhcp6: no
      addresses: [192.168.180.132/24]
      gateway4: 192.168.180.2
      nameservers:
        addresses: [114.114.114.114, 8.8.8.8]

# Let NetworkManager manage all devices on this system
# node04
network:
  version: 2
  renderer: NetworkManager
  ethernets:
    ens33:
      dhcp4: no
      dhcp6: no
      addresses: [192.168.180.133/24]
      gateway4: 192.168.180.2
      nameservers:
        addresses: [114.114.114.114, 8.8.8.8]

# Let NetworkManager manage all devices on this system
# node05
network:
  version: 2
  renderer: NetworkManager
  ethernets:
    ens33:
      dhcp4: no
      dhcp6: no
      addresses: [192.168.180.134/24]
      gateway4: 192.168.180.2
      nameservers:
        addresses: [114.114.114.114, 8.8.8.8]
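The five netplan files differ only in the host address, so they could be generated from one template instead of being edited by hand. The following is a minimal sketch, assuming the interface name, gateway, and DNS servers used in this guide; the function name render_netplan is hypothetical and not part of netplan. The output would still have to be written to /etc/netplan/01-network-manager-all.yaml on the matching node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the netplan YAML for one node.
# $1 = last octet of the node's address (130..134 in this guide).
render_netplan() {
  local octet="$1"
  cat <<EOF
network:
  version: 2
  renderer: NetworkManager
  ethernets:
    ens33:
      dhcp4: no
      dhcp6: no
      addresses: [192.168.180.${octet}/24]
      gateway4: 192.168.180.2
      nameservers:
        addresses: [114.114.114.114, 8.8.8.8]
EOF
}

# Example: print the configuration for node03 (192.168.180.132).
render_netplan 132
```

Keeping the template in one place makes it harder for the five files to drift apart when the gateway or DNS servers change.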

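After editing, run netplan apply to apply the configuration. If a ping to Baidu gets replies, the network is configured correctly.

Change the hostname

Run vim /etc/hostname on each machine and set the hostname to node01, node02, node03, node04, and node05 respectively.

Edit the hosts file

Run vim /etc/hosts and set the hosts file on every machine to the following: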

127.0.0.1 localhost

192.168.180.130 node01

192.168.180.131 node02

192.168.180.132 node03

192.168.180.133 node04

192.168.180.134 node05

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters
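The five cluster entries follow a simple pattern (node0N maps to 192.168.180.(129+N)), so they can be printed by a small loop and pasted into /etc/hosts. This is only a sketch under that assumption; the function name cluster_hosts is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the five cluster entries for /etc/hosts.
cluster_hosts() {
  local i
  for i in 1 2 3 4 5; do
    printf '192.168.180.%d node0%d\n' "$((129 + i))" "$i"
  done
}

# Print the entries; review them before adding them to /etc/hosts.
cluster_hosts
```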

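JDK configuration

If the JDK is not configured yet, see https://www.cnblogs.com/ronnieyuan/p/11461377.html

If another JDK version was installed earlier, extract the JDK tarball into /usr/lib/jvm. For example, my jvm directory looks like this: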

drwxr-xr-x 4 root root 4096 9月 13 08:57 ./

drwxr-xr-x 133 root root 4096 9月 13 08:57 ../

lrwxrwxrwx 1 root root 25 4月 8 2018 default-java -> java-1.11.0-openjdk-amd64/

lrwxrwxrwx 1 root root 21 3月 27 04:57 java-1.11.0-openjdk-amd64 -> java-11-openjdk-amd64/

-rw-r--r-- 1 root root 1994 3月 27 04:57 .java-1.11.0-openjdk-amd64.jinfo

drwxr-xr-x 9 root root 4096 4月 25 20:43 java-11-openjdk-amd64/

drwxr-xr-x 7 uucp 143 4096 12月 16 2018 jdk1.8/

Then edit the configuration file and select the JDK (the commands are given in the reference above).

JDK version information:

root@node01:~# java -version

java version "1.8.0_202"

Java(TM) SE Runtime Environment (build 1.8.0_202-b08)

Java HotSpot(TM) 64-Bit Server VM (build 25.202-b08, mixed mode)

Passwordless SSH login

Run ssh-keygen -t rsa -P "" on every machine (press Enter to accept the default key path).

Example:

root@node01:~# ssh-keygen -t rsa -P ""

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:el2s+e9UXxWjfGY1LS6RYD1CcHLmlXY+zJCopqRnuf0 root@node01

The key's randomart image is:

+---[RSA 2048]----+

| ooB+.+ +o|

| Bo.@ + *|

| ..o % =.|

| . o .. X .|

| o +S o. .o|

| . =. . + .o|

| o.o. + . .|

| ... . . |

| .E .oo |

+----[SHA256]-----+

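In the home directory (~), run vim .ssh/authorized_keys:

Put the public keys of all five virtual machines into this file, so that authorized_keys is identical on every machine.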

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDBw4yPomSFt009LQ3gvxv9vnAF4tSXrJvVBMkpoi78mLMspgxYW6q3vLCWFEHT6HOLrLAQ/+UjclXjuVEEUGVOyn+dgvX7fK+XCOuTVdTyJZ3nIGbHUZ5zB+KHcJN3tiGjFQ3vGEuUeVkQ4jkN5RXI33nSx1eUM/sOuXtQ7DdhJjAuBko7RNw/jjTXW8znv8l8n5hb4fu4B+2CLkIkO+1+mTu8hljE2B+pu4o6cIiY/RTb0hNRLSs6w7K7BJFa+3ZkeMtiLtI8MUaIQzo4/nv4FKa8/GSvxLyyBZGoaunAYsUn7qmlNxNjEXY7wojAnVkRMiyCsEXQU6cEsR//Zocz root@node01

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDg2AsPvf9TjjIVUlZutDqxFH579THtl6e7/SxYxHJ/def/T4dY5glzwW3AJ30Gcsw+k9E8PKiZIAiaQ7kU4/EmFK9LFhAuQx+glZS5GS88lXv7qSYOLmZtJPp0l4tgrIgk9u+PtZToCdlWpGLO2Xi3Dfggt//Lsl4Dqhl3dtrpZSjMGY7zkAd4fu696ri4rjv3kDciUdFNlKBFBkGA4RNFKylkPTlxLZfpqNU2pkZtBySHsGbEHMvnMQ1KOXRoW7pVvZ4QveR/eiQVXqq+v53oZ5KUmC5jpp6Abe3PVa7tG6s2ZOSP9ikOuFKrwXWArjp5H4oaYZIF/UenhhIdjxh3 root@node02

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDwtqNeAwYLWqY5otArMcKd4iMBCpZ5cd+RyECunVnmeefuN1U53fR+h2UcS6/Jr84ZKlDmJ5+r9jgcBPIftbkGi9RE4aHEqo14sC3P4t6DODxNCI+enytx5/kw3gpKmxOdanrtojSWLdL+5v/h4qPt5e8AFfxqJ9HfZ5darXgRLWbkYcBADH51XvisY9Gf+DJKPjcD+3E8gMbHHdeYWt0crOkxbRVgnjmZVuWsYBRFH5x6ueR5SOHUC3WPzfeEdBvIeRddl4y1DvtvZZuVOxs1rQF59KdDSKSKt4s1lScZS1Kc57yXY2s+L6HrFqxfOO0u1pisfiDwDKvZDwKeMd3n root@node03

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC+QfivNznStFt8xCZ1Qav6jKdErir0VbNRN0nqJaXUe+KL8YmYygofKEZRGQHCpYY2/rM7Cla6Pl9HLoatbvi89OYVy7V3hnu7SJwrqbkAGOqxCzW+OGdV9GRvhi3LTwJMAKxSrXB73tKK9ZqJd7WrP7o7ibyYMAbUiJTc0qa4gSXxXTunUuF2hOG7D88/93bxXXqSI9AydWrXBVxzmrP7CipXFOBqVC/mA/8SEdbVxSK0oGwa9KAAm690onoVevOVtTXWcvKSE/57WM94KJMbIKM/ypxKtUqKrgKuMfBsgs31Zu1j3SDkFC3Vm8uGj4yKnpxsaVJOwuMoRYiW90tT root@node04

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDF/mqbRAwPusxpz5FA9FtIa97QJSuXjaRP+/37S7JvtCAh2FvgPBLIQeAdp7hvc/RFJ8WqDlQWj2UVpBsu2sn3Kg2VZ30qEghMLkMcCTtKknNX+U7SvBWCRoGojxl9lmi/Y1kkVNQUTRPQ8QeNGN2SvUi5A4Q+X1H6MEy16sLuamMlXqiIeqttY33odXj6oXI6OFqoE98FrNbTBrPwJFCk4Uhgnplbb0YE+4dbs9mVdR/iHpGm84WfvITe6Rn9Ry4K+Wo4C+Bms4dGfcO8eh8lrwSCff2IUIc877Zzc6ImYrdvZu7rvrCPyfNdoCJzA5wtExPoAfUbuN5T77ieLgWH root@node05
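As an alternative to pasting the keys by hand, the standard ssh-copy-id tool can append the local public key to authorized_keys on each node. This is not the method used in this guide, only a sketch of a substitute, assuming root password login is still allowed on the targets and the key sits at the default path. The DRY_RUN switch and the copy_keys function are hypothetical; by default the script only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Print (dry run) or execute the ssh-copy-id calls for every node.
# DRY_RUN defaults to 1 so that nothing is executed by accident.
DRY_RUN="${DRY_RUN:-1}"
NODES="node01 node02 node03 node04 node05"

copy_keys() {
  local host
  for host in $NODES; do
    if [ "$DRY_RUN" = "1" ]; then
      echo "ssh-copy-id -i $HOME/.ssh/id_rsa.pub root@${host}"
    else
      ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "root@${host}"
    fi
  done
}

copy_keys
```

The script would have to be run once on each of the five machines (with DRY_RUN=0) to end up with the same all-to-all trust as the manual approach.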

Test passwordless login to each node in turn.

A successful example:

root@node01:~# ssh root@192.168.180.131

The authenticity of host '192.168.180.131 (192.168.180.131)' can't be established.

ECDSA key fingerprint is SHA256:++PMZ5boD2CgToi43EdaCSLtNGdVFt0xxCBoAIkggqk.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.180.131' (ECDSA) to the list of known hosts.

Welcome to Ubuntu 18.04.2 LTS (GNU/Linux 4.18.0-17-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

* Canonical Livepatch is available for installation.

- Reduce system reboots and improve kernel security. Activate at:

https://ubuntu.com/livepatch

254 packages can be updated.

253 updates are security updates.

Your Hardware Enablement Stack (HWE) is supported until April 2023.

Last login: Sat Sep 14 08:44:54 2019 from 192.168.180.1

root@node02:~#

Installing Zookeeper 3.5.5

Note: Zookeeper is installed only on node03, node04, and node05.

Upload the Zookeeper 3.5.5 tarball to /home/ronnie/soft:

root@node03:/home/ronnie/soft# ll

total 524532

drwxr-xr-x 2 root root 4096 9月 14 09:51 ./

drwxr-xr-x 32 ronnie ronnie 4096 9月 14 08:39 ../

-rw-r--r-- 1 root root 10622522 9月 13 11:35 apache-zookeeper-3.5.5-bin.tar.gz

-rw-r--r-- 1 root root 332433589 9月 13 09:18 hadoop-3.1.2.tar.gz

-rw-r--r-- 1 root root 194042837 1月 18 2019 jdk-8u202-linux-x64.tar.gz

Extract it into /opt/ronnie:

tar -zxvf apache-zookeeper-3.5.5-bin.tar.gz -C /opt/ronnie/

Rename the Zookeeper directory:

cd /opt/ronnie

mv apache-zookeeper-3.5.5-bin/ zookeeper

Create and edit the Zookeeper configuration file

First enter the configuration directory:

root@node03:/opt/ronnie# cd zookeeper/conf/

root@node03:/opt/ronnie/zookeeper/conf# ll

total 20

drwxr-xr-x 2 2002 2002 4096 4月 2 21:05 ./

drwxr-xr-x 6 root root 4096 9月 14 09:54 ../

-rw-r--r-- 1 2002 2002 535 2月 15 2019 configuration.xsl

-rw-r--r-- 1 2002 2002 2712 4月 2 21:05 log4j.properties

-rw-r--r-- 1 2002 2002 922 2月 15 2019 zoo_sample.cfg

Copy zoo_sample.cfg to zoo.cfg:

cp zoo_sample.cfg zoo.cfg

vim zoo.cfg

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/var/ronnie/zookeeper

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server.1=node03:2888:3888

server.2=node04:2888:3888

server.3=node05:2888:3888

Copy the configured zookeeper directory to the other two machines:

scp -r /opt/ronnie/zookeeper/ root@192.168.180.133:/opt/ronnie/

scp -r /opt/ronnie/zookeeper/ root@192.168.180.134:/opt/ronnie/

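Create a myid file under dataDir on each node, containing 1, 2, and 3 respectively, matching the server.N entries in zoo.cfg.

The commands on node03 are shown below; node04 and node05 are analogous, writing 2 and 3. If the directory does not exist, create it first with mkdir -p /var/ronnie/zookeeper/.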

cd /var/ronnie/zookeeper/

touch myid

echo 1 > myid
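Because the number in myid must agree with the server.N line for the same host in zoo.cfg, it can be derived from the file rather than typed on each node. The sketch below assumes the server.N=host:port:port layout shown above; the function name myid_for_host is hypothetical, and the demonstration uses a temporary copy of the three server lines instead of the real configuration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the id N from the "server.N=host:port:port"
# line in a zoo.cfg file that matches the given hostname.
# $1 = path to zoo.cfg, $2 = hostname
myid_for_host() {
  sed -n "s/^server\.\([0-9][0-9]*\)=$2:.*/\1/p" "$1"
}

# Demonstration against a temporary file holding the three server lines.
cfg="$(mktemp)"
printf 'server.1=node03:2888:3888\nserver.2=node04:2888:3888\nserver.3=node05:2888:3888\n' > "$cfg"
myid_for_host "$cfg" node04   # prints 2
rm -f "$cfg"
```

On a real node the result could be redirected into the data directory, for example myid_for_host /opt/ronnie/zookeeper/conf/zoo.cfg "$(hostname)" > /var/ronnie/zookeeper/myid.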

Start Zookeeper

/opt/ronnie/zookeeper/bin/zkServer.sh start

# On a successful start:

ZooKeeper JMX enabled by default

Using config: /opt/ronnie/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

# Check the status

/opt/ronnie/zookeeper/bin/zkServer.sh status

# This node is a follower

ZooKeeper JMX enabled by default

Using config: /opt/ronnie/zookeeper/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost.

Mode: follower

# This node is the leader

ZooKeeper JMX enabled by default

Using config: /opt/ronnie/zookeeper/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost.

Mode: leader

Stop Zookeeper:

/opt/ronnie/zookeeper/bin/zkServer.sh stop

That completes the Zookeeper installation.

Hadoop configuration

Add the Hadoop paths with vim ~/.bashrc:

#HADOOP VARIABLES

export HADOOP_HOME=/opt/ronnie/hadoop-3.1.2

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

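Run source ~/.bashrc to apply the new settings (remember to do this on every machine).

Run hadoop version to check the version. Output like the following means the Hadoop path is configured correctly: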

Hadoop 3.1.2

Source code repository https://github.com/apache/hadoop.git -r 1019dde65bcf12e05ef48ac71e84550d589e5d9a

Compiled by sunilg on 2019-01-29T01:39Z

Compiled with protoc 2.5.0

From source with checksum 64b8bdd4ca6e77cce75a93eb09ab2a9

This command was run using /opt/ronnie/hadoop-3.1.2/share/hadoop/common/hadoop-common-3.1.2.jar

Set JAVA_HOME in hadoop-env.sh, mapred-env.sh, and yarn-env.sh; if the line is missing, append it at the end of the file.

vim /opt/ronnie/hadoop-3.1.2/etc/hadoop/hadoop-env.sh

# variable is REQUIRED on ALL platforms except OS X!
export JAVA_HOME=/usr/lib/jvm/jdk1.8

vim /opt/ronnie/hadoop-3.1.2/etc/hadoop/mapred-env.sh

# JDK
export JAVA_HOME=/usr/lib/jvm/jdk1.8

vim /opt/ronnie/hadoop-3.1.2/etc/hadoop/yarn-env.sh

# JDK
export JAVA_HOME=/usr/lib/jvm/jdk1.8

Configure core-site.xml with vim /opt/ronnie/hadoop-3.1.2/etc/hadoop/core-site.xml:

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <!-- Name of the HDFS nameservice -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://ns</value>
  </property>
  <!-- Temporary directory -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/var/ronnie/hadoop/tmp</value>
  </property>
  <!-- Zookeeper quorum -->
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>node03:2181,node04:2181,node05:2181</value>
  </property>
  <!-- Maximum number of retries for IPC connection requests from the NameNode to the JournalNodes -->
  <property>
    <name>ipc.client.connect.max.retries</name>
    <value>100</value>
    <description>Indicates the number of retries a client will make to establish a server connection.
    </description>
  </property>
  <!-- Retry interval for IPC connection requests from the NameNode to the JournalNodes -->
  <property>
    <name>ipc.client.connect.retry.interval</name>
    <value>10000</value>
    <description>Indicates the number of milliseconds a client will wait for before retrying to establish.
    </description>
  </property>
  <!-- Enable the trash feature and set the deletion interval (minutes) -->
  <property>
    <name>fs.trash.interval</name>
    <value>360</value>
    <description>
      Trash deletion interval in minutes. If zero, the trash feature is disabled.
    </description>
  </property>
  <!-- Trash checkpoint interval (minutes); if unset, it defaults to fs.trash.interval -->
  <property>
    <name>fs.trash.checkpoint.interval</name>
    <value>60</value>
    <description>
      Trash checkpoint interval in minutes. If zero, the deletion interval is used.
    </description>
  </property>
  <!-- The following parameters are used when configuring Oozie -->
  <property>
    <name>hadoop.proxyuser.deplab.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.deplab.hosts</name>
    <value>*</value>
  </property>
</configuration>

Edit hdfs-site.xml with vim /opt/ronnie/hadoop-3.1.2/etc/hadoop/hdfs-site.xml:

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <!-- Set the HDFS nameservice to ns; must match core-site.xml -->
  <property>
    <name>dfs.nameservices</name>
    <value>ns</value>
  </property>
  <!-- ns has two NameNodes: nn1 and nn2 -->
  <property>
    <name>dfs.ha.namenodes.ns</name>
    <value>nn1,nn2</value>
  </property>
  <!-- RPC address of nn1 -->
  <property>
    <name>dfs.namenode.rpc-address.ns.nn1</name>
    <value>node01:9000</value>
  </property>
  <!-- HTTP address of nn1 -->
  <property>
    <name>dfs.namenode.http-address.ns.nn1</name>
    <value>node01:50070</value>
  </property>
  <!-- RPC address of nn2 -->
  <property>
    <name>dfs.namenode.rpc-address.ns.nn2</name>
    <value>node02:9000</value>
  </property>
  <!-- HTTP address of nn2 -->
  <property>
    <name>dfs.namenode.http-address.ns.nn2</name>
    <value>node02:50070</value>
  </property>
  <!-- Where the NameNode edits metadata is stored on the JournalNodes -->
  <property>
    <name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://node03:8485;node04:8485;node05:8485/ns</value>
  </property>
  <!-- Local disk location where each JournalNode stores its data -->
  <property>
    <name>dfs.journalnode.edits.dir</name>
    <value>/var/ronnie/hadoop/jdata</value>
  </property>
  <!-- Enable automatic failover of the NameNode -->
  <property>
    <name>dfs.ha.automatic-failover.enabled</name>
    <value>true</value>
  </property>
  <!-- Implementation class clients use to find the active NameNode after failover -->
  <property>
    <name>dfs.client.failover.proxy.provider.ns</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <!-- Fencing methods; separate multiple methods with newlines, one per line -->
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>
      sshfence
      shell(/bin/true)
    </value>
  </property>
  <!-- The sshfence method requires passwordless SSH login -->
  <property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>/root/.ssh/id_rsa</value>
  </property>
  <!-- Connection timeout for the sshfence method -->
  <property>
    <name>dfs.ha.fencing.ssh.connect-timeout</name>
    <value>30000</value>
  </property>
</configuration>

Edit mapred-site.xml with vim /opt/ronnie/hadoop-3.1.2/etc/hadoop/mapred-site.xml:

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <!-- Run MapReduce on YARN -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

Edit yarn-site.xml with vim /opt/ronnie/hadoop-3.1.2/etc/hadoop/yarn-site.xml:

<?xml version="1.0"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<configuration>
  <!-- Enable ResourceManager high availability -->
  <property>
    <name>yarn.resourcemanager.ha.enabled</name>
    <value>true</value>
  </property>
  <!-- Cluster id of the ResourceManagers -->
  <property>
    <name>yarn.resourcemanager.cluster-id</name>
    <value>yrc</value>
  </property>
  <!-- Logical ids of the ResourceManagers -->
  <property>
    <name>yarn.resourcemanager.ha.rm-ids</name>
    <value>rm1,rm2</value>
  </property>
  <!-- Hostnames of rm1 and rm2 -->
  <property>
    <name>yarn.resourcemanager.hostname.rm1</name>
    <value>node01</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname.rm2</name>
    <value>node02</value>
  </property>
  <!-- Address of the Zookeeper cluster -->
  <property>
    <name>yarn.resourcemanager.zk-address</name>
    <value>node03:2181,node04:2181,node05:2181</value>
  </property>
  <!-- Enable the MapReduce shuffle auxiliary service -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
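A typo in any of these four XML files usually surfaces only when a daemon starts, so it can help to read a few values back before going on. The sketch below is a plain awk lookup, not a Hadoop tool; the function name get_prop is hypothetical, and it assumes that each name and value sits on a single line, which means a multi-line value such as dfs.ha.fencing.methods is not handled. The demonstration uses a temporary file rather than the real configuration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the <value> of one property from a Hadoop
# *-site.xml file. Assumes <name> and <value> each sit on a single line;
# multi-line values are not handled.
# $1 = path to the XML file, $2 = property name
get_prop() {
  awk -v key="$2" '
    /<name>/  { line = $0; gsub(/.*<name>|<\/name>.*/, "", line); name = line }
    /<value>/ { line = $0; gsub(/.*<value>|<\/value>.*/, "", line)
                if (name == key) print line }
  ' "$1"
}

# Demonstration against a temporary file with one property.
xml="$(mktemp)"
cat > "$xml" <<'EOF'
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://ns</value>
  </property>
</configuration>
EOF
get_prop "$xml" fs.defaultFS   # prints hdfs://ns
rm -f "$xml"
```

Once the Hadoop path is set up, hdfs getconf -confKey fs.defaultFS reads the same value through Hadoop's own configuration loader, which is the more reliable check.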

Edit the workers file with vim /opt/ronnie/hadoop-3.1.2/etc/hadoop/workers:

node01

node02

node03

node04

node05

vim /opt/ronnie/hadoop-3.1.2/sbin/start-dfs.sh

vim /opt/ronnie/hadoop-3.1.2/sbin/stop-dfs.sh

At the top of each file, right after the shebang line (shown first below), add:

#!/usr/bin/env bash

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

HDFS_JOURNALNODE_USER=root

HDFS_ZKFC_USER=root

vim /opt/ronnie/hadoop-3.1.2/sbin/start-yarn.sh

vim /opt/ronnie/hadoop-3.1.2/sbin/stop-yarn.sh

At the top of each file, right after the shebang line (shown first below), add:

#!/usr/bin/env bash

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

Copy the configured hadoop directory to the other nodes:

scp -r /opt/ronnie/hadoop-3.1.2/ root@192.168.180.131:/opt/ronnie/

scp -r /opt/ronnie/hadoop-3.1.2/ root@192.168.180.132:/opt/ronnie/

scp -r /opt/ronnie/hadoop-3.1.2/ root@192.168.180.133:/opt/ronnie/

scp -r /opt/ronnie/hadoop-3.1.2/ root@192.168.180.134:/opt/ronnie/

Starting the cluster

Start the Zookeeper cluster first:

root@node03:/var/ronnie/zookeeper# /opt/ronnie/zookeeper/bin/zkServer.sh start

root@node04:/var/ronnie/zookeeper# /opt/ronnie/zookeeper/bin/zkServer.sh start

root@node05:/var/ronnie/zookeeper# /opt/ronnie/zookeeper/bin/zkServer.sh start

Start a JournalNode on node03, node04, and node05:

root@node03:~# /opt/ronnie/hadoop-3.1.2/sbin/hadoop-daemon.sh start journalnode

root@node04:~# /opt/ronnie/hadoop-3.1.2/sbin/hadoop-daemon.sh start journalnode

root@node05:~# /opt/ronnie/hadoop-3.1.2/sbin/hadoop-daemon.sh start journalnode

Check the processes with jps:

root@node03:~# jps

6770 Jps

6724 JournalNode

6616 QuorumPeerMain

Format one of the two NameNodes (node01 here):

root@node01:~# hdfs namenode -format

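If the command fails, the error message indicates which configuration file is wrong; fix it and retry.

Start the NameNode:

hdfs --daemon start namenode

Check with jps that it is running: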

root@node01:~# jps

5622 Jps

5549 NameNode

On the other NameNode, synchronize the formatted metadata:

root@node02:~# hdfs namenode -bootstrapStandby

Format the ZKFC state in Zookeeper from node01 (this only needs to be done once):

root@node01:~# hdfs zkfc -formatZK

Start the HDFS cluster:

root@node01:~# start-dfs.sh

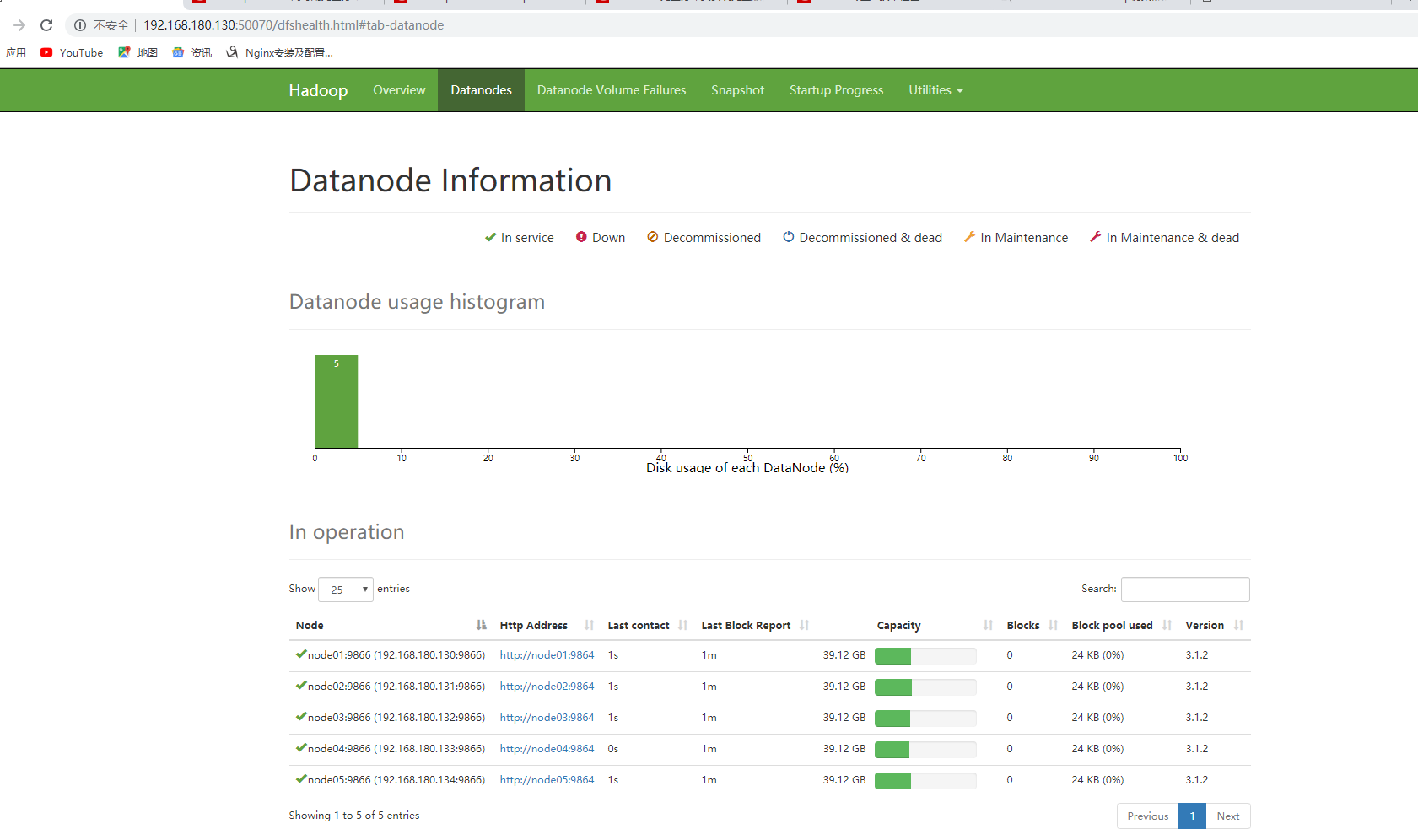

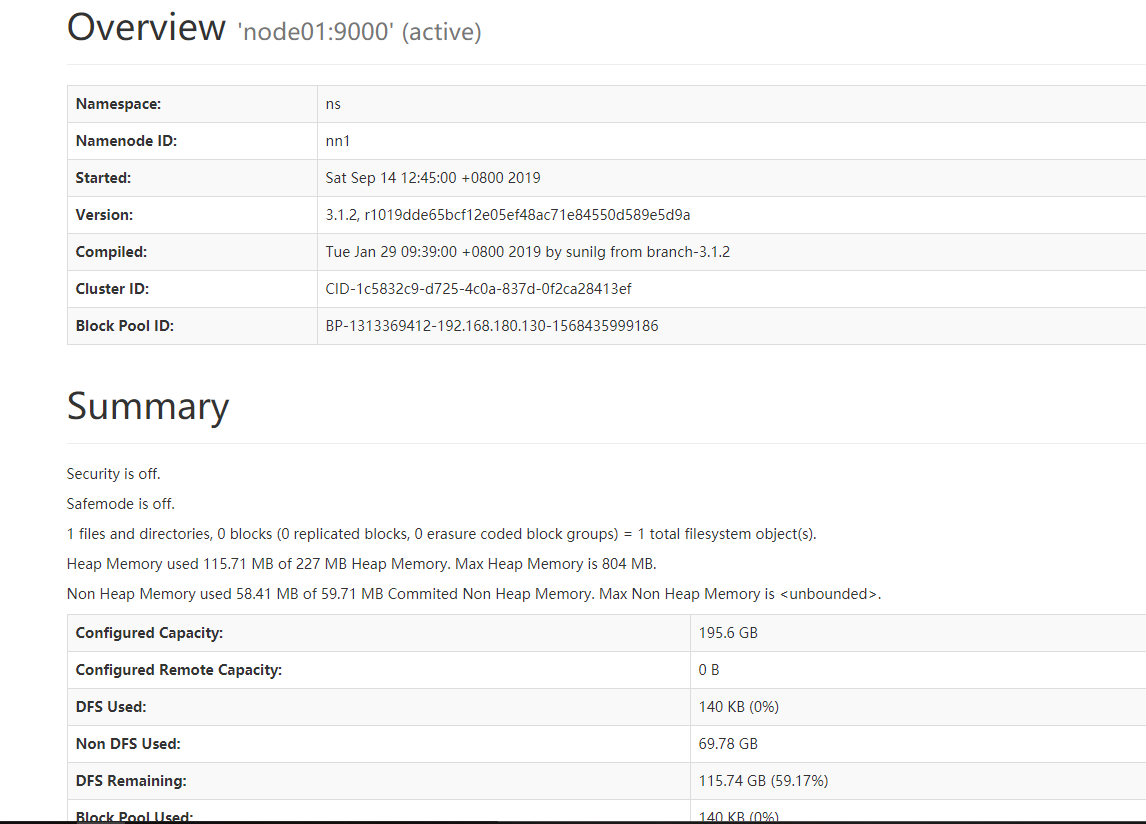

Open port 50070 on node01 (http://node01:50070) in a browser.

Start the YARN cluster:

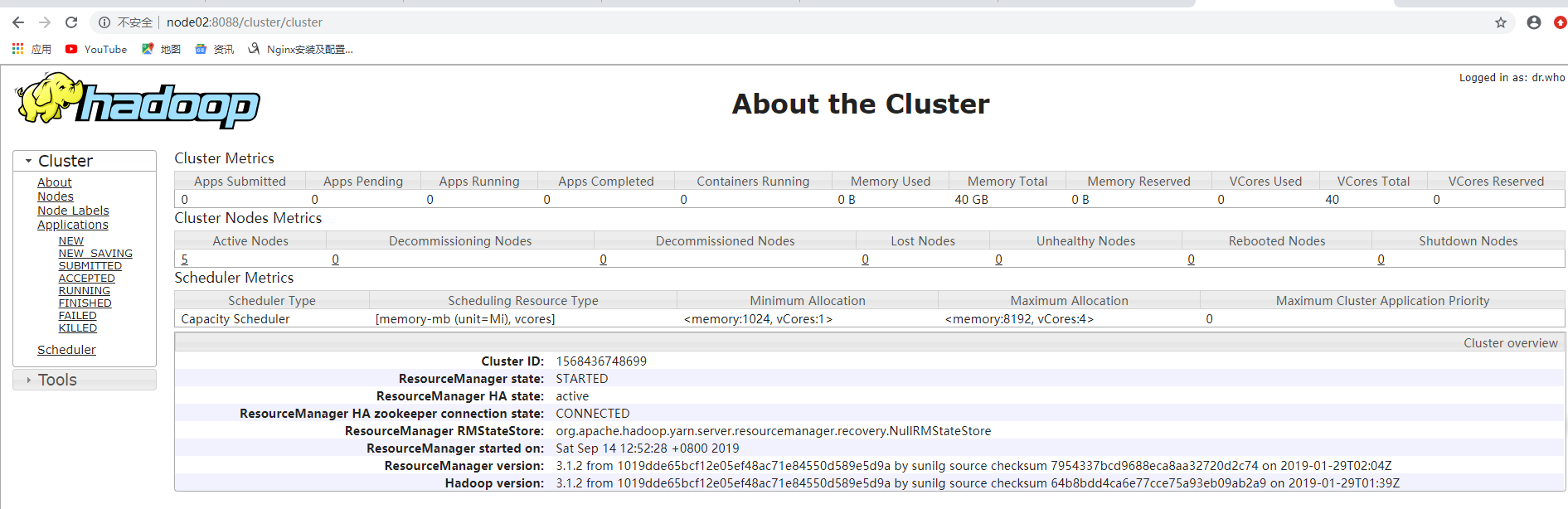

root@node01:~# start-yarn.sh

Open port 8088 on node01 (http://node01:8088) in a browser.

The configuration is now complete.
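Besides the web pages, the HA state can be checked from the command line with hdfs haadmin -getServiceState and yarn rmadmin -getServiceState. The sketch below wraps both in one function, using the ids nn1, nn2, rm1, and rm2 from hdfs-site.xml and yarn-site.xml above; the function name ha_status is hypothetical, and it assumes the Hadoop commands are on the PATH of the node where it runs.

```shell
#!/usr/bin/env bash
# Print the HA state (active/standby) of both NameNodes and both
# ResourceManagers, using the ids from hdfs-site.xml and yarn-site.xml.
ha_status() {
  local id
  for id in nn1 nn2; do
    echo "NameNode ${id}: $(hdfs haadmin -getServiceState "$id" 2>&1)"
  done
  for id in rm1 rm2; do
    echo "ResourceManager ${id}: $(yarn rmadmin -getServiceState "$id" 2>&1)"
  done
}
```

Calling ha_status on a healthy cluster should report one active and one standby instance for each pair; stopping the active NameNode and calling it again is a simple way to watch automatic failover happen.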

If uploading a file fails with permission denied, add the following property at the end of hdfs-site.xml, before the closing </configuration> tag:

<property>
  <name>dfs.permissions</name>
  <value>false</value>
</property>
