Paper notes: "C-RNN-GAN: Continuous recurrent neural networks with adversarial training"

Source: arXiv (Artificial Intelligence), 2016 (a year on and still not accepted anywhere?)

Motivation

Use a GAN combined with RNNs to model continuous sequential data, and train it to generate classical music.

Introduction

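"In this work, we investigate the feasibility of using adversarial training for a sequential model with continuous data, and evaluate it using classical music in freely available midi files." In other words, the paper uses a GAN built from RNNs to model the continuous data in MIDI files. RNNs are mostly used for time-dependent natural language, and they have also been brought into music generation [1,2,3], "but to our knowledge they always use a symbolic representation. In contrast, our work demonstrates how one can train a highly flexible and expressive model with fully continuous sequence data for tone lengths, frequencies, intensities, and timing." The authors also make a point of mentioning LapGAN, which generates images coarse-to-fine. (Personal thought: this is suggestive for music generation. So is the two-stage GAN approach to generating images from captions, where one stage produces a low-resolution image with rough shapes and colors and the other fills in detail. These ideas should carry over to music generation.)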

Model

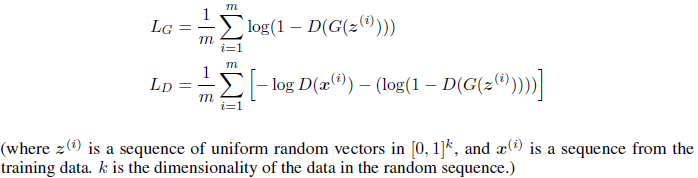

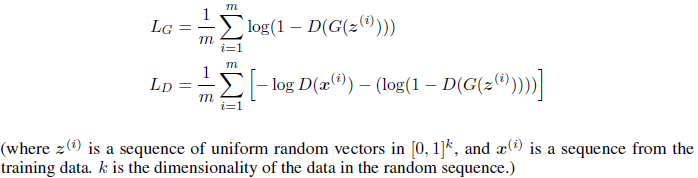

Both G and D in the adversarial network are RNN models, and the loss functions are defined as:
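A reconstruction of the losses, assuming the standard minibatch GAN formulation that, as far as I can tell, the paper follows. The symbols m (minibatch size), x^(i) (training sequences) and z^(i) (noise sequences) are my notation, so treat this as a sketch and check it against the paper:

```latex
\begin{align}
L_G &= \frac{1}{m} \sum_{i=1}^{m} \log\bigl(1 - D(G(z^{(i)}))\bigr) \\
L_D &= \frac{1}{m} \sum_{i=1}^{m} \Bigl[ -\log D(x^{(i)}) - \log\bigl(1 - D(G(z^{(i)}))\bigr) \Bigr]
\end{align}
```

G minimizes L_G by pushing D(G(z)) toward 1, while D minimizes L_D by pushing D(x) toward 1 and D(G(z)) toward 0.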

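"The input to each cell in G is a random vector, concatenated with the output of previous cell." D is a bidirectional recurrent network (LSTM). On the data side, each tone is represented as a four-element tuple of tone length, frequency, intensity, and time, which lets the data represent polyphonous chords.

Both G and D use 2 LSTM layers. The baseline is a single recurrent generator with the adversarial part removed. The training dataset is a set of classical music files in standard MIDI format downloaded from the web; every "note on" event is read together with the note's other attributes (timing, tone, intensity, and so on). Code: https://github.com/olofmogren/c-rnn-gan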

Training relies on a number of small tricks:

- L2 regularization is applied to the weights of both G and D

- "The model was pretrained for 6 epochs with a squared error loss for predicting the next event in the training sequence"

- "the input to each LSTM cell is a random vector v, concatenated with the output at previous time step. v is uniformly distributed in [0, 1]^k, and k was chosen to be the number of features in each tone, 4."

- During pretraining the length of the sampled sequences is scheduled: training starts with short sequences and lengthens them step by step until long sequences are used

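- The freezing trick from [4]: when D or G becomes so strong that the other side's gradient vanishes and training can no longer proceed, the overly strong side is frozen. The rule used here: when A's training loss is less than 70% of the training loss of B, A is frozen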

- The feature matching trick from [4]: G's objective is replaced by minimizing the difference between the features of real and generated samples:

where R is the output of D's last layer (before the logistic activation).
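The last two tricks can be sketched in a few lines. This is a minimal illustration in plain Python, not the repository's TensorFlow code: the function names and the list-based inputs are mine, and the freezing rule assumes both losses are non-negative. Feature matching is taken here as the squared difference between R(x) and R(G(z)) averaged over the minibatch, which is how I read the objective.

```python
def feature_matching_loss(real_features, fake_features):
    """Squared difference between R(x) and R(G(z)), averaged over the minibatch.

    Both arguments are lists of per-sample feature vectors (lists of floats).
    """
    total = 0.0
    for real, fake in zip(real_features, fake_features):
        total += sum((r - f) ** 2 for r, f in zip(real, fake))
    return total / len(real_features)


def choose_updates(loss_d, loss_g, ratio=0.7):
    """Return (train_d, train_g).

    A side is frozen (not trained) when its loss is below `ratio` times the
    other side's loss, i.e. when it has become too strong.
    """
    train_d = loss_d >= ratio * loss_g
    train_g = loss_g >= ratio * loss_d
    return train_d, train_g


# D is far ahead of G here, so only G gets updated in this step.
print(choose_updates(loss_d=0.1, loss_g=1.0))
```

With positive losses the two conditions cannot both fail, so at least one of the two networks is always being trained.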

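Evaluation metrics

Polyphony: how often two or more tones start at the same point in time

Scale consistency: "computed by counting the fraction of tones that were part of a standard scale, and reporting the number for the best matching such scale." (What on earth counts as a "standard scale"?)

Repetitions: the number of repeated measures

Tone span: the interval between the highest and the lowest tone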
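To make three of these metrics concrete, here is a rough sketch in plain Python. It is not the evaluation code from the repository. I assume that tones are given as MIDI pitch numbers, that "standard scale" means the 12 major scales (natural minor scales share the same pitch sets), and that polyphony is measured on note start ticks; all function names are hypothetical.

```python
MAJOR_STEPS = (0, 2, 4, 5, 7, 9, 11)  # semitone offsets of a major scale from its root


def scale_consistency(pitches):
    """Best fraction of pitches that fall inside any one of the 12 major scales."""
    best = 0.0
    for root in range(12):
        scale = {(root + step) % 12 for step in MAJOR_STEPS}
        in_scale = sum(1 for p in pitches if p % 12 in scale)
        best = max(best, in_scale / len(pitches))
    return best


def tone_span(pitches):
    """Number of semitones between the lowest and the highest pitch."""
    return max(pitches) - min(pitches)


def simultaneous_start_fraction(start_ticks):
    """Fraction of notes that share their start tick with at least one other note."""
    counts = {}
    for tick in start_ticks:
        counts[tick] = counts.get(tick, 0) + 1
    shared = sum(c for c in counts.values() if c > 1)
    return shared / len(start_ticks)


c_major = [60, 62, 64, 65, 67, 69, 71]
print(scale_consistency(c_major), tone_span(c_major))
```

A chromatic run such as [60, 61, 62, 63] scores lower, because no major scale contains four consecutive semitones.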

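The code for the evaluation tools is also on GitHub.

Conclusion

This is the first paper to generate music through GAN adversarial training. Judged by ear, the music C-RNN-GAN generates is nowhere near the real samples, probably because it relies on adversarial training alone, works with single-track tones, and builds in no prior music-theory knowledge.

Sample audio: http://mogren.one/publications/2016/c-rnn-gan/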

[1] Douglas Eck and Juergen Schmidhuber. Finding temporal structure in music: Blues improvisation with LSTM recurrent networks. In Neural Networks for Signal Processing, 2002. Proceedings of the 2002 12th IEEE Workshop on, pages 747–756. IEEE, 2002.

[2] Nicolas Boulanger-Lewandowski, Yoshua Bengio, and Pascal Vincent. Modeling temporal dependencies in high-dimensional sequences: Application to polyphonic music generation and transcription. In Proceedings of the 29th International Conference on Machine Learning (ICML), pages 1159–1166, 2012.

[3] Lantao Yu, Weinan Zhang, Jun Wang, and Yong Yu. SeqGAN: Sequence generative adversarial nets with policy gradient. arXiv preprint arXiv:1609.05473, 2016.

[4] Tim Salimans, Ian Goodfellow, Wojciech Zaremba, Vicki Cheung, Alec Radford, and Xi Chen. Improved techniques for training GANs. In Advances in Neural Information Processing Systems, pages 2226–2234, 2016.

Code analysis

Parameters saved for restore:

'num_layers_g': number of layers in G's RNN cell

'num_layers_d': number of layers in D's RNN cell

'meta_layer_size':

'hidden_size_g':

'hidden_size_d':

'biscale_slow_layer_ticks':

'multiscale':

'disable_feed_previous':

'pace_events':

'minibatch_d':

'unidirectional_d':

'feature_matching':

'composer': which composer's style from the training set to train on, e.g. Bach, Beethoven, ...

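If do-not-redownload.txt exists, no new MIDI files are downloaded.

The read_data function returns entries in the format [genre, composer, song_data].

A sources table is organized here, keyed by genre and then by artist.

After midi_pattern is read with python-midi, the code walks through every event of every track and uses NoteOnEvent and NoteOffEvent to record four values for each note:

Finally, all notes of a song are collected into a song_data list. Each [genre, composer, song_data] entry holds the feature data of one song, and these entries are appended to loader.songs['validation'], loader.songs['test'], and loader.songs['train'].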
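The note extraction can be pictured roughly as follows. This is a sketch under assumptions, not the repository's loader: instead of python-midi event objects it takes hypothetical (kind, delta_ticks, pitch, velocity) tuples, and the order of the four values per note (length, frequency, velocity, ticks since the previous note start) is my guess at the layout. The pitch-to-frequency formula is the usual equal-temperament one with A4 = MIDI 69 = 440 Hz.

```python
def midi_pitch_to_freq(pitch):
    """Equal-temperament frequency in Hz for a MIDI pitch number."""
    return 440.0 * 2.0 ** ((pitch - 69) / 12.0)


def extract_notes(events):
    """Turn note on/off events of one track into a song_data-like list.

    events: list of (kind, delta_ticks, pitch, velocity), kind is 'on' or 'off'.
    Returns a list of [length_ticks, frequency, velocity, ticks_from_prev_start].
    """
    notes = []
    starts = []        # absolute start tick of each note in `notes`
    open_notes = {}    # pitch -> index of the note that is currently sounding
    abs_ticks = 0
    last_start = 0
    for kind, delta, pitch, velocity in events:
        abs_ticks += delta
        if kind == 'on' and velocity > 0:
            notes.append([0, midi_pitch_to_freq(pitch), velocity,
                          abs_ticks - last_start])
            starts.append(abs_ticks)
            open_notes[pitch] = len(notes) - 1
            last_start = abs_ticks
        elif pitch in open_notes:
            # A note off, or a note on with velocity 0, closes the open note.
            index = open_notes.pop(pitch)
            notes[index][0] = abs_ticks - starts[index]
    return notes


song_data = extract_notes([
    ('on', 0, 69, 80), ('off', 480, 69, 0),
    ('on', 0, 60, 70), ('off', 240, 60, 0),
])
print(song_data)
```

Storing the time since the previous note start, rather than an absolute position, is what allows chords: notes of a chord simply have a value of 0 in that slot.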

The placeholders for the training data are:

The model is built with an L2 regularizer to avoid overfitting: scope.set_regularizer(tf.contrib.layers.l2_regularizer(scale=FLAGS.reg_scale))

Building G's LSTM network:

The input noise random_rnninputs has shape [batch_size, songlength, int(FLAGS.random_input_scale*num_song_features)] and is then converted into a list (unstack?)

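G's RNN is then run step by step, one loop iteration per time step. The input at each step is the noise random_rnninput together with the previous step's output generated_point. The two are concatenated into a [batch_size, 2*num_song_features] tensor (which holds when random_input_scale is 1), and the "previous output" for the first step is initialized by sampling from a uniform distribution.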
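The loop can be sketched like this in plain Python. The names unroll_generator and toy_cell are hypothetical, and toy_cell is only a stand-in for the LSTM layers plus output projection; random_input_scale is assumed to be 1 so that the noise has num_song_features columns.

```python
import random


def unroll_generator(cell, noise, num_song_features, rng):
    """Run the generator one time step at a time.

    noise: list over time steps, each item is [batch_size][noise_dim].
    cell:  callable mapping [batch_size][noise_dim + num_song_features]
           to [batch_size][num_song_features].
    """
    batch_size = len(noise[0])
    # The "previous output" for the first step is sampled uniformly from [0, 1).
    generated_point = [[rng.random() for _ in range(num_song_features)]
                       for _ in range(batch_size)]
    outputs = []
    for step_noise in noise:
        # Concatenate noise and previous output along the feature axis.
        cell_input = [n + g for n, g in zip(step_noise, generated_point)]
        generated_point = cell(cell_input)
        outputs.append(generated_point)
    return outputs


def toy_cell(cell_input):
    """Stand-in for the real LSTM: average the noise half and the feedback half."""
    half = len(cell_input[0]) // 2
    return [[(row[i] + row[i + half]) / 2 for i in range(half)]
            for row in cell_input]


rng = random.Random(0)
batch_size, song_length, num_song_features = 2, 3, 4
noise = [[[rng.random() for _ in range(num_song_features)]
          for _ in range(batch_size)]
         for _ in range(song_length)]
outputs = unroll_generator(toy_cell, noise, num_song_features, rng)
print(len(outputs), len(outputs[0]), len(outputs[0][0]))
```

The result is a time-major list with one [batch_size, num_song_features] entry per step, which is why a stack and transpose is needed before comparing it with batch-major song data.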

G also has a pretraining pass, whose input is the noise random_rnninputs and the real sample songdata_input[i].

The pretraining loss for G is the L2 distance. Note the stack of the list and the [1,0,2] transpose here:
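What the stack and the [1,0,2] transpose accomplish, shown on nested Python lists instead of tensors. This is an illustrative sketch: the function names are mine, and I take the L2 loss to be the mean squared error over all elements, which may differ from the reduction the repository uses.

```python
def time_major_to_batch_major(steps):
    """[song_length][batch][features] -> [batch][song_length][features].

    Equivalent to stacking the per-step outputs and transposing axes [1, 0, 2].
    """
    return [list(per_song) for per_song in zip(*steps)]


def pretraining_loss(generated_steps, real_songs):
    """Mean squared error between generated and real notes.

    generated_steps is time-major (one entry per step), real_songs is batch-major.
    """
    generated = time_major_to_batch_major(generated_steps)
    total, count = 0.0, 0
    for gen_song, real_song in zip(generated, real_songs):
        for gen_note, real_note in zip(gen_song, real_song):
            for g, r in zip(gen_note, real_note):
                total += (g - r) ** 2
                count += 1
    return total / count


steps = [[[1.0], [2.0]], [[3.0], [4.0]]]   # 2 steps, batch of 2, 1 feature
print(time_major_to_batch_major(steps))
```

Without the transpose, step t of song b would be compared against step b of song t, which silently produces a meaningless loss whenever batch_size equals song_length.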

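Points to note when building D's bidirectional LSTM: (1) each call to bidirectional_dynamic_rnn automatically increments the index in the name scope, so the scope is pinned with the layer name (this cost me a whole day; is it me, or is tf just a minefield?)

(2) the _inputs for each layer must be built by concatenating the (fw, bw) tuple in output. Each of the two tensors has shape [batch_size, song_length, output_dim], where output_dim equals the number of LSTM hidden units (the state size), so the concatenated shape is [batch_size, song_length, 2×output_dim]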

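D then passes the bidirectional LSTM output through a fully connected layer (output num = 1) and a sigmoid to get the real/fake probability, and also returns output as features, which take part in the feature loss.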
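The discriminator head can be sketched on nested lists as below. The function names are hypothetical, and averaging the per-step sigmoid outputs into one probability per song is my assumption about how the per-step decisions are combined; the notes above only state the fully connected layer and the sigmoid.

```python
import math


def concat_directions(fw, bw):
    """Join forward and backward outputs on the last axis.

    [batch][time][dim] and [batch][time][dim] -> [batch][time][2 * dim]
    """
    return [[f_step + b_step for f_step, b_step in zip(f_song, b_song)]
            for f_song, b_song in zip(fw, bw)]


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def discriminator_head(features, weights, bias):
    """One-output linear layer shared across steps, sigmoid, then mean over time.

    Returns (probability per song, features) so the features can also feed
    a feature matching loss.
    """
    probs = []
    for song in features:
        step_probs = [sigmoid(sum(w * x for w, x in zip(weights, step)) + bias)
                      for step in song]
        probs.append(sum(step_probs) / len(step_probs))
    return probs, features


fw = [[[1.0], [2.0]]]   # batch of 1, 2 steps, output_dim 1
bw = [[[3.0], [4.0]]]
merged = concat_directions(fw, bw)
print(merged)
```

The same concatenation is what turns the (fw, bw) tuple into the _inputs of the next bidirectional layer.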

Loss computation:
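A minimal sketch of how the two adversarial losses could be evaluated from D's output probabilities, assuming the standard GAN losses averaged over the minibatch. The function names and the epsilon clipping are mine, added so that the logarithm never sees 0; this is not the repository's code.

```python
import math


def clip(p, eps=1e-7):
    """Keep a probability away from 0 and 1 so that log() stays finite."""
    return min(max(p, eps), 1.0 - eps)


def d_loss(real_probs, fake_probs):
    """Mean of -log D(x) - log(1 - D(G(z))) over the minibatch."""
    terms = [-math.log(clip(r)) - math.log(1.0 - clip(f))
             for r, f in zip(real_probs, fake_probs)]
    return sum(terms) / len(terms)


def g_loss(fake_probs):
    """Mean of log(1 - D(G(z))) over the minibatch."""
    return sum(math.log(1.0 - clip(f)) for f in fake_probs) / len(fake_probs)


# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
print(d_loss([0.5, 0.5], [0.5, 0.5]), g_loss([0.5, 0.5]))
```

When feature matching is switched on, G would be trained on the feature loss instead of g_loss, while D keeps d_loss.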
