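Setting up an apache-storm-0.9.6.tar.gz cluster (3 nodes) (illustrated walkthrough)

No preamble, straight to the practical part!

Choosing a Storm version

I use apache-storm-0.9.6.tar.gz here.

Installing Storm in local mode

Local mode simulates all the functionality of a Storm cluster inside a single process, which is very convenient for development and testing. Running a topology in local mode is similar to running it on a cluster.

To create an in-process "cluster", just use a LocalCluster object: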

import backtype.storm.LocalCluster;

LocalCluster cluster = new LocalCluster();

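You can then submit topologies through the LocalCluster object's submitTopology method, which behaves the same as the corresponding method on StormSubmitter. submitTopology takes three arguments: the topology name, the topology configuration, and the topology object itself. To stop a topology, call killTopology, which takes the topology name as its argument.

To shut down a local cluster, simply call:

cluster.shutdown();

and that's it.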

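Installing Storm in distributed mode (the subject of this post)

Official installation guide

http://storm.apache.org/releases/current/Setting-up-a-Storm-cluster.html

Machines: download the Storm package into /home/hadoop/app on each of master, slave1, and slave2.

1. Download apache-storm-0.9.6.tar.gz

http://archive.apache.org/dist/storm/apache-storm-0.9.6/

Alternatively, download it online directly into the installation directory:

wget http://apache.fayea.com/storm/apache-storm-0.9.6/apache-storm-0.9.6.tar.gz

Here I chose to download the archive first and then upload it to the servers.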
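The download step can be sketched as a small script. This is only an illustration: the variable names and the DOWNLOAD switch are my own, and it assumes the archive.apache.org URL above is still reachable.

```shell
#!/bin/sh
# Build the archive name and URL from the version so they stay in sync.
STORM_VERSION="0.9.6"
ARCHIVE="apache-storm-${STORM_VERSION}.tar.gz"
URL="http://archive.apache.org/dist/storm/apache-storm-${STORM_VERSION}/${ARCHIVE}"

echo "archive: ${ARCHIVE}"
echo "url:     ${URL}"

# Only touch the network when explicitly asked to
# (DOWNLOAD=1 is a made-up switch for this sketch).
if [ "${DOWNLOAD:-0}" = "1" ]; then
    wget -c "${URL}"   # -c resumes a partially downloaded file
fi
```

Run it with DOWNLOAD=1 inside /home/hadoop/app to actually fetch the file.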

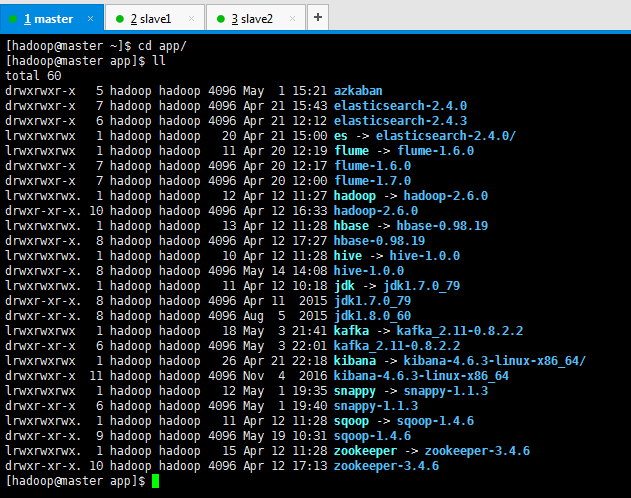

2. Upload the archive

[hadoop@master ~]$ cd app/

[hadoop@master app]$ ll

Before the upload, the directory contains only the existing installations and their symlinks: azkaban, elasticsearch (es), flume, hadoop, hbase, hive, jdk, kafka, kibana, snappy, sqoop, and zookeeper.

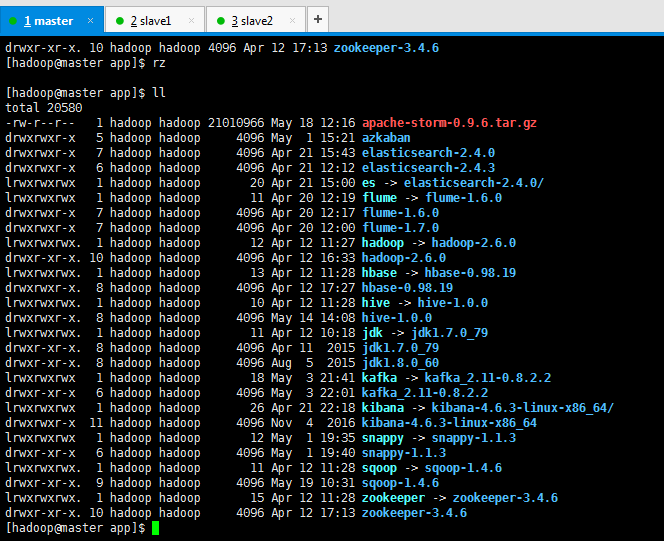

[hadoop@master app]$ rz

[hadoop@master app]$ ll

After the upload, the listing additionally shows the new archive, apache-storm-0.9.6.tar.gz (owned by hadoop:hadoop, mode -rw-r--r--).

[hadoop@master app]$

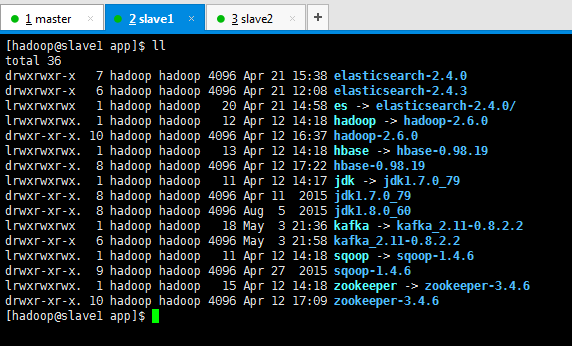

Do the same on slave1 and slave2.

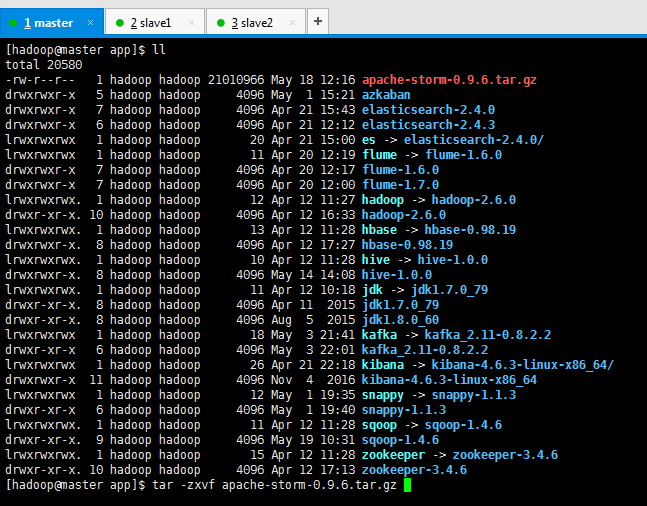

3. Extract the archive and set its owner and group

[hadoop@master app]$ tar -zxvf apache-storm-0.9.6.tar.gz

Because the archive is extracted as the hadoop user, the resulting apache-storm-0.9.6 directory already belongs to hadoop:hadoop, so no separate chown is needed here.

Do the same on slave1 and slave2.

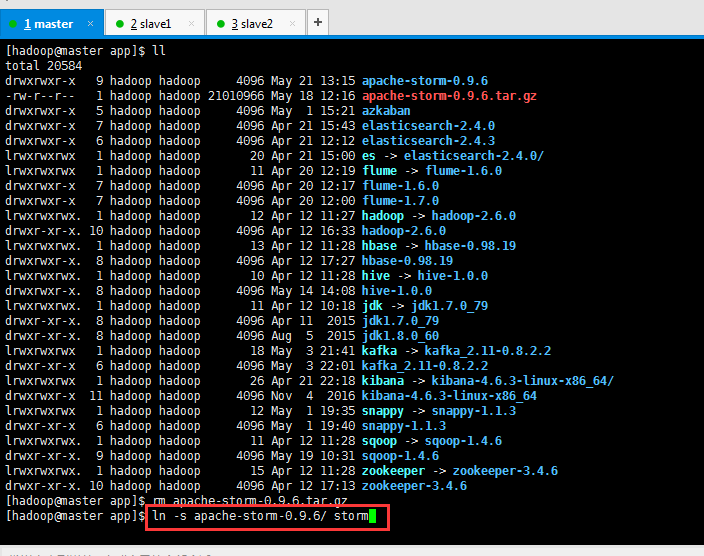

4. Delete the archive and create a symlink, which makes it easier to keep multiple versions side by side

See also: "Creating and removing symlinks when setting up big-data sub-projects" (recommended by the author)

[hadoop@master app]$ ll

The listing now shows the extracted apache-storm-0.9.6 directory next to the archive, along with the existing installations.

[hadoop@master app]$ rm apache-storm-0.9.6.tar.gz

[hadoop@master app]$ ln -s apache-storm-0.9.6/ storm
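To show why the symlink helps with multiple versions, here is a minimal sketch that runs in a throwaway directory. The second release name (apache-storm-1.0.2) and the empty stand-in directories are assumptions for illustration only; nothing here touches /home/hadoop/app.

```shell
#!/bin/sh
# Demonstrate the "versioned directory + stable symlink" layout in a scratch dir.
APP_DIR="$(mktemp -d)"
cd "${APP_DIR}" || exit 1

# Stand-ins for two extracted releases (empty directories, for illustration only).
mkdir apache-storm-0.9.6 apache-storm-1.0.2

# Point the stable name at the release in use.
ln -s apache-storm-0.9.6 storm
echo "storm -> $(readlink storm)"

# Switching versions later only means repointing the link;
# -n replaces the link itself instead of descending into the old target.
ln -sfn apache-storm-1.0.2 storm
echo "storm -> $(readlink storm)"
```

Since STORM_HOME and PATH refer to the stable name storm, repointing the link is enough to switch releases without editing any profile.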

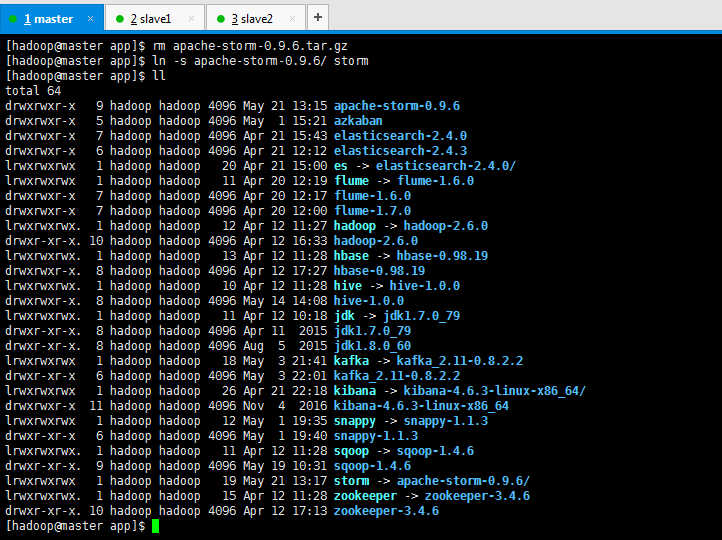

[hadoop@master app]$ ll

total 64

drwxrwxr-x 9 hadoop hadoop 4096 May 21 13:15 apache-storm-0.9.6

drwxrwxr-x 5 hadoop hadoop 4096 May 1 15:21 azkaban

drwxrwxr-x 7 hadoop hadoop 4096 Apr 21 15:43 elasticsearch-2.4.0

drwxrwxr-x 6 hadoop hadoop 4096 Apr 21 12:12 elasticsearch-2.4.3

lrwxrwxrwx 1 hadoop hadoop 20 Apr 21 15:00 es -> elasticsearch-2.4.0/

lrwxrwxrwx 1 hadoop hadoop 11 Apr 20 12:19 flume -> flume-1.6.0

drwxrwxr-x 7 hadoop hadoop 4096 Apr 20 12:17 flume-1.6.0

drwxrwxr-x 7 hadoop hadoop 4096 Apr 20 12:00 flume-1.7.0

lrwxrwxrwx. 1 hadoop hadoop 12 Apr 12 11:27 hadoop -> hadoop-2.6.0

drwxr-xr-x. 10 hadoop hadoop 4096 Apr 12 16:33 hadoop-2.6.0

lrwxrwxrwx. 1 hadoop hadoop 13 Apr 12 11:28 hbase -> hbase-0.98.19

drwxrwxr-x. 8 hadoop hadoop 4096 Apr 12 17:27 hbase-0.98.19

lrwxrwxrwx. 1 hadoop hadoop 10 Apr 12 11:28 hive -> hive-1.0.0

drwxrwxr-x. 8 hadoop hadoop 4096 May 14 14:08 hive-1.0.0

lrwxrwxrwx. 1 hadoop hadoop 11 Apr 12 10:18 jdk -> jdk1.7.0_79

drwxr-xr-x. 8 hadoop hadoop 4096 Apr 11 2015 jdk1.7.0_79

drwxr-xr-x. 8 hadoop hadoop 4096 Aug 5 2015 jdk1.8.0_60

lrwxrwxrwx 1 hadoop hadoop 18 May 3 21:41 kafka -> kafka_2.11-0.8.2.2

drwxr-xr-x 6 hadoop hadoop 4096 May 3 22:01 kafka_2.11-0.8.2.2

lrwxrwxrwx 1 hadoop hadoop 26 Apr 21 22:18 kibana -> kibana-4.6.3-linux-x86_64/

drwxrwxr-x 11 hadoop hadoop 4096 Nov 4 2016 kibana-4.6.3-linux-x86_64

lrwxrwxrwx 1 hadoop hadoop 12 May 1 19:35 snappy -> snappy-1.1.3

drwxr-xr-x 6 hadoop hadoop 4096 May 1 19:40 snappy-1.1.3

lrwxrwxrwx. 1 hadoop hadoop 11 Apr 12 11:28 sqoop -> sqoop-1.4.6

drwxr-xr-x. 9 hadoop hadoop 4096 May 19 10:31 sqoop-1.4.6

lrwxrwxrwx 1 hadoop hadoop 19 May 21 13:17 storm -> apache-storm-0.9.6/

lrwxrwxrwx. 1 hadoop hadoop 15 Apr 12 11:28 zookeeper -> zookeeper-3.4.6

drwxr-xr-x. 10 hadoop hadoop 4096 Apr 12 17:13 zookeeper-3.4.6

[hadoop@master app]$

Do the same on slave1 and slave2.

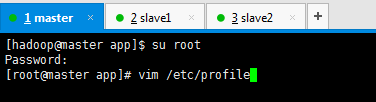

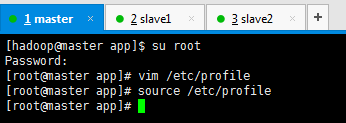

5、修改配置环境

[hadoop@master app]$ su root

Password:

[root@master app]# vim /etc/profile

slave1和slave2机器同样。不多赘述

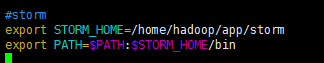

#storm

export STORM_HOME=/home/hadoop/app/storm

export PATH=$PATH:$STORM_HOME/bin

slave1和slave2机器同样。不多赘述

[hadoop@master app]$ su root

Password:

[root@master app]# vim /etc/profile

[root@master app]# source /etc/profile

[root@master app]#

slave1和slave2机器同样。不多赘述
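If you prefer to script this step instead of editing the file in vim, something like the following would work. It is a sketch under my own assumptions: add_line is a hypothetical helper, and PROFILE defaults to a scratch file so the example is safe to run as-is.

```shell
#!/bin/sh
# PROFILE defaults to a scratch file so the sketch is safe to run;
# point it at /etc/profile (as root) to apply it for real.
PROFILE="${PROFILE:-$(mktemp)}"

add_line() {
    # Append the line only if an identical line is not already present.
    grep -qxF -- "$1" "${PROFILE}" || printf '%s\n' "$1" >> "${PROFILE}"
}

add_line '#storm'
add_line 'export STORM_HOME=/home/hadoop/app/storm'
add_line 'export PATH=$PATH:$STORM_HOME/bin'

# Running the same call again must not duplicate anything.
add_line 'export STORM_HOME=/home/hadoop/app/storm'

cat "${PROFILE}"
```

The idempotent append matters because this step is repeated on three machines and is easy to run twice by accident.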

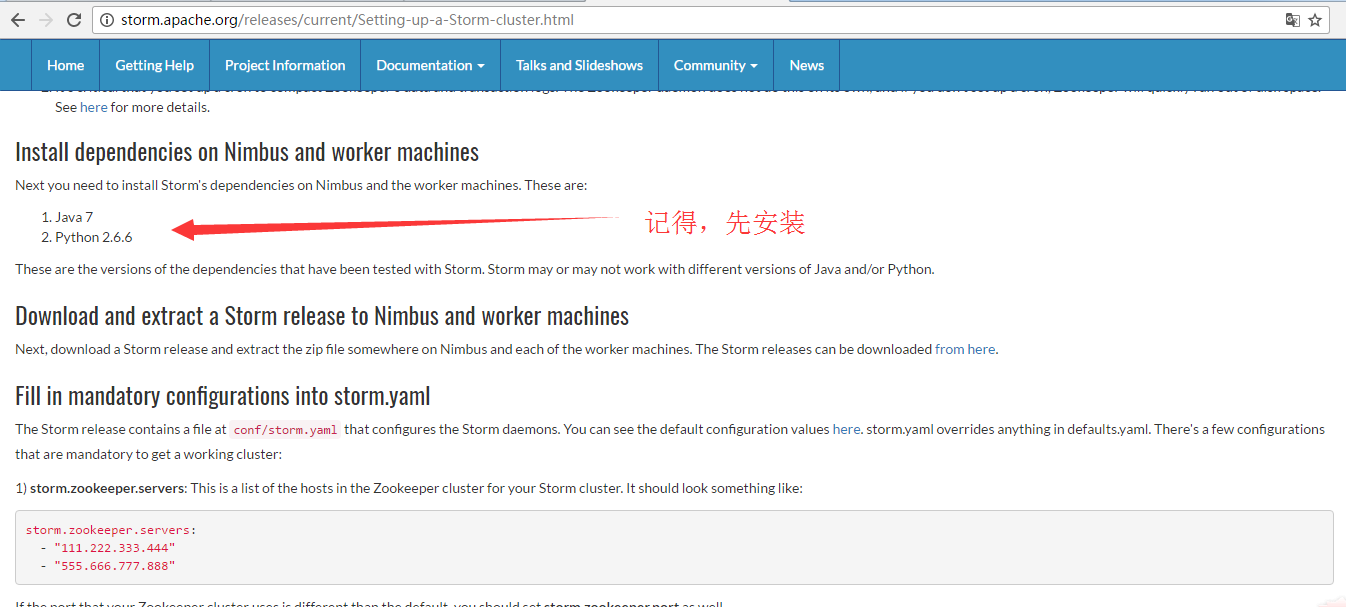

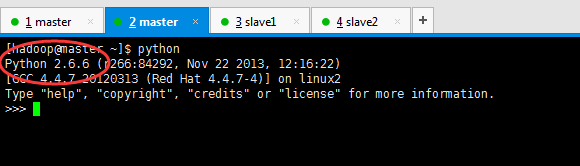

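6. Install the other dependencies the Storm cluster needs

Storm's launcher script (bin/storm) is written in Python, so Python must be present on every node. My machines run CentOS 6.5, which already ships with it:

[hadoop@master ~]$ python

The interpreter starts and reports the system Python 2.6 (built with GCC 4.4 on Red Hat), followed by the usual banner and prompt:

Type "help", "copyright", "credits" or "license" for more information.

>>>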

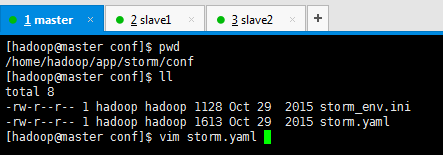

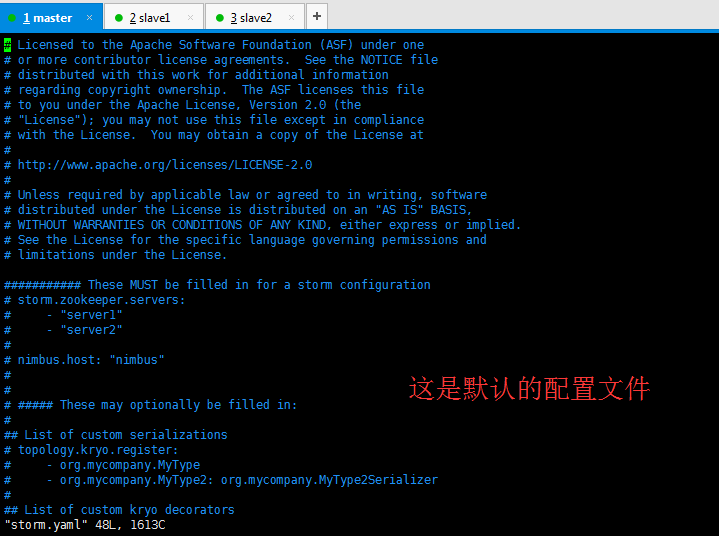

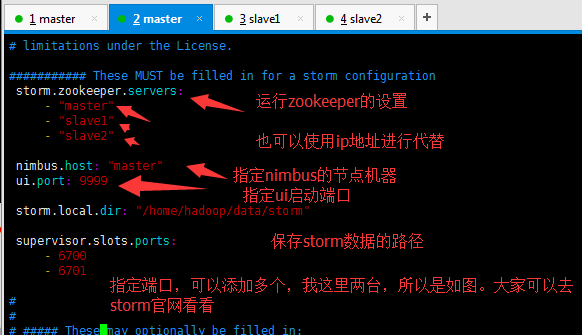

7. Edit Storm's configuration file

[hadoop@master storm]$ pwd

/home/hadoop/app/storm

[hadoop@master storm]$ ll

The installation directory contains the subdirectories bin, conf, examples, external, lib, logback, and public, plus the files CHANGELOG.md, DISCLAIMER, LICENSE, NOTICE, README.markdown, RELEASE, and SECURITY.md.

[hadoop@master storm]$

Do the same on slave1 and slave2.

Go into Storm's conf directory and edit storm.yaml:

[hadoop@master conf]$ pwd

/home/hadoop/app/storm/conf

[hadoop@master conf]$ ll

The directory holds two files, storm_env.ini and storm.yaml.

[hadoop@master conf]$ vim storm.yaml

Do the same on slave1 and slave2.

Here is a very handy trick:

See: "Configuration-file tips for setting up big-data sub-projects (for CentOS and Ubuntu)" (recommended by the author)

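Note: each key starts with one space in the first column. YAML is indentation-sensitive, so all top-level keys must share the same indentation.

 storm.zookeeper.servers:

     - "master"

     - "slave1"

     - "slave2"

 nimbus.host: "master"

 ui.port: 9999

 storm.local.dir: "/home/hadoop/data/storm"

 supervisor.slots.ports:

     -

     -

Note: I set ui.port to 9999, a custom value, to avoid a conflict between Storm and Spark, both of which default to port 8080.

I list two ports under supervisor.slots.ports because I only have two supervisor nodes, slave1 and slave2. Each listed port provides one worker slot on every supervisor machine; Storm's built-in default list is 6700, 6701, 6702, and 6703.

Do the same on slave1 and slave2.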
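For reference, here is one way to generate such a file from a script. The two slot ports are assumed values (6700 and 6701, the first two of Storm's defaults), and CONF is a hypothetical scratch path rather than the real conf/storm.yaml; everything else is taken from the settings above.

```shell
#!/bin/sh
# Write a candidate storm.yaml to a scratch location (CONF is a hypothetical path).
CONF="${CONF:-$(mktemp)}"

cat > "${CONF}" <<'EOF'
 storm.zookeeper.servers:
     - "master"
     - "slave1"
     - "slave2"
 nimbus.host: "master"
 ui.port: 9999
 storm.local.dir: "/home/hadoop/data/storm"
 # Assumed values: 6700 and 6701 are the first two of Storm's default slot ports.
 supervisor.slots.ports:
     - 6700
     - 6701
EOF

# Count the worker slots each supervisor will offer (one per numeric list entry).
SLOTS="$(grep -cE '^ +- [0-9]+$' "${CONF}")"
echo "worker slots per supervisor: ${SLOTS}"
```

After reviewing the result, copy it to /home/hadoop/app/storm/conf/storm.yaml on all three machines.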

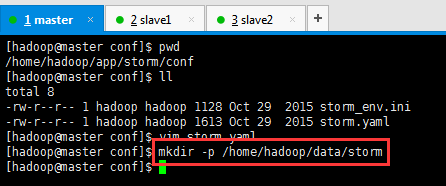

8. Create the directory for Storm's local data

[hadoop@master conf]$ mkdir -p /home/hadoop/data/storm

Do the same on slave1 and slave2.
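Since the same command has to run on all three machines, a small loop can save some typing. This sketch assumes passwordless SSH as the hadoop user between the nodes, which the post does not set up; run and DRY_RUN are made-up names, and by default the script only prints what it would do.

```shell
#!/bin/sh
# Hosts and path come from the post; passwordless SSH as hadoop is an assumption.
HOSTS="master slave1 slave2"
DATA_DIR="/home/hadoop/data/storm"

# run CMD...: print the command in dry-run mode (the default), execute it otherwise.
# DRY_RUN is a made-up switch for this sketch.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

for host in ${HOSTS}; do
    run ssh "hadoop@${host}" mkdir -p "${DATA_DIR}"
done
```

Set DRY_RUN=0 to execute the commands instead of printing them.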

9. Start the Storm cluster

1. First, start Nimbus on master:

storm nimbus &

jps should then show nimbus.

2. Next, start the UI, also on master:

storm ui &

jps should then show core.

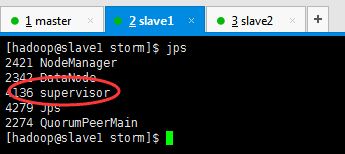

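3. Finally, start the supervisor on slave1 and slave2:

storm supervisor &

jps should then show supervisor.

Alternatively, run the daemons in the background with their output discarded (recommended):

- Start nimbus in the background: bin/storm nimbus > /dev/null 2>&1 &

- Start supervisor in the background: bin/storm supervisor > /dev/null 2>&1 &

- Start ui in the background: bin/storm ui > /dev/null 2>&1 &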
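With the output discarded, jps is the quickest way to confirm that the daemons came up. The sketch below checks jps-style output for the process names mentioned above (nimbus, core, supervisor). has_daemon is a hypothetical helper, and the sample text with made-up PIDs stands in for a real jps call so the example runs anywhere.

```shell
#!/bin/sh
# has_daemon NAME: succeed if jps-style output on stdin ("<pid> <name>" per line)
# contains a process whose name is exactly NAME.
has_daemon() {
    awk -v want="$1" '$2 == want { found = 1 } END { exit (found ? 0 : 1) }'
}

# Sample output with made-up PIDs, standing in for a real `jps` call on master.
SAMPLE="2101 QuorumPeerMain
3350 nimbus
3412 core
3590 Jps"

for d in nimbus core supervisor; do
    if printf '%s\n' "${SAMPLE}" | has_daemon "$d"; then
        echo "$d: running"
    else
        echo "$d: not found"
    fi
done
```

On a real node, pipe the live output instead, for example jps | has_daemon nimbus on master or jps | has_daemon supervisor on the slaves.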

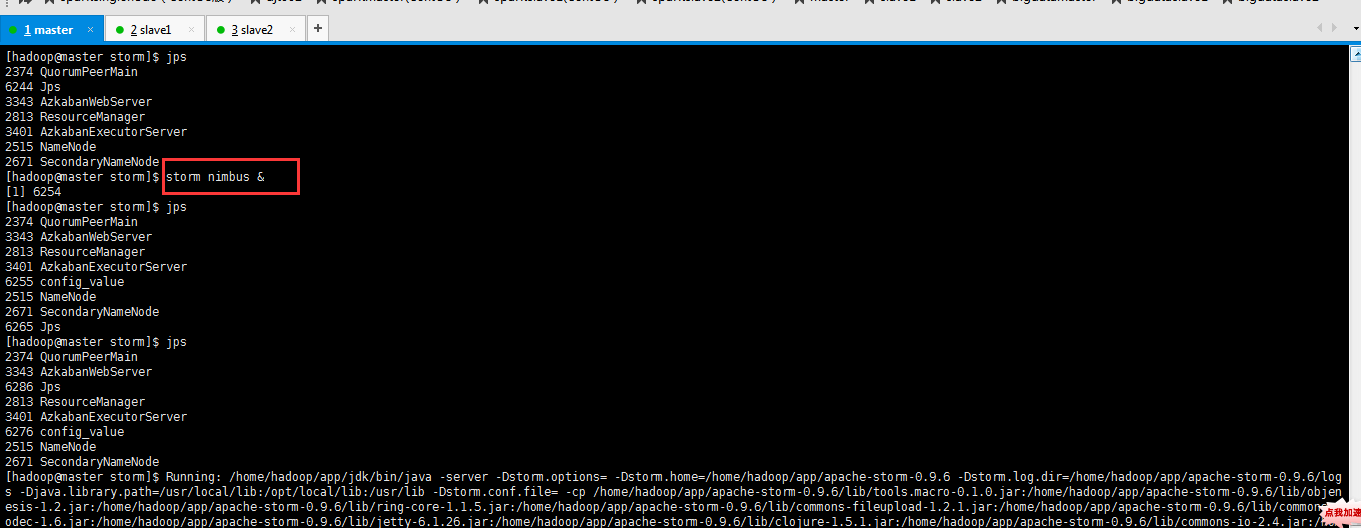

a) Start the storm nimbus process on the Nimbus machine (master in my case)

[hadoop@master storm]$ jps

QuorumPeerMain

Jps

AzkabanWebServer

ResourceManager

AzkabanExecutorServer

NameNode

SecondaryNameNode

[hadoop@master storm]$ storm nimbus &

[hadoop@master storm]$ jps

QuorumPeerMain

AzkabanWebServer

ResourceManager

AzkabanExecutorServer

config_value

NameNode

SecondaryNameNode

Jps

Immediately after launch, jps still shows config_value, the short-lived helper that the storm script runs to read configuration values; nimbus appears once the daemon itself is up. The script then prints the java command it executes:

[hadoop@master storm]$ Running: /home/hadoop/app/jdk/bin/java -server -Dstorm.options= -Dstorm.home=/home/hadoop/app/apache-storm-0.9.6 -Dstorm.log.dir=/home/hadoop/app/apache-storm-0.9.6/logs -Djava.library.path=/usr/local/lib:/opt/local/lib:/usr/lib -Dstorm.conf.file= -cp /home/hadoop/app/apache-storm-0.9.6/lib/...:/home/hadoop/app/apache-storm-0.9.6/conf -Xmx1024m -Dlogfile.name=nimbus.log -Dlogback.configurationFile=/home/hadoop/app/apache-storm-0.9.6/logback/cluster.xml backtype.storm.daemon.nimbus

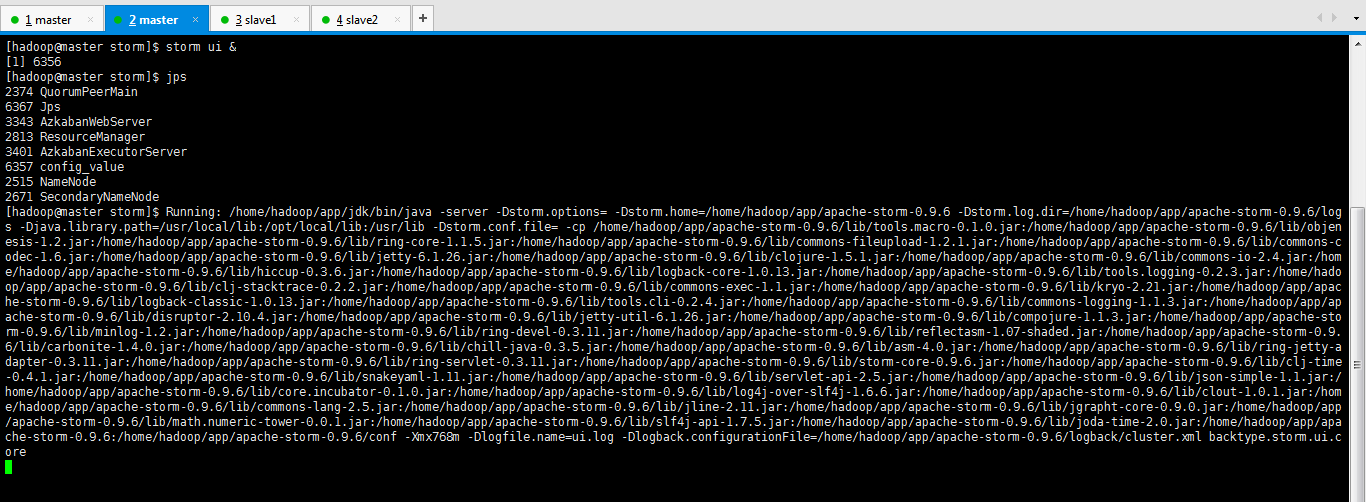

b) Start the storm ui process on the Nimbus machine (master in my case)

[hadoop@master storm]$ storm ui &

[hadoop@master storm]$ jps

QuorumPeerMain

Jps

AzkabanWebServer

ResourceManager

AzkabanExecutorServer

config_value

NameNode

SecondaryNameNode

[hadoop@master storm]$ Running: /home/hadoop/app/jdk/bin/java -server -Dstorm.options= -Dstorm.home=/home/hadoop/app/apache-storm-0.9.6 -Dstorm.log.dir=/home/hadoop/app/apache-storm-0.9.6/logs -Djava.library.path=/usr/local/lib:/opt/local/lib:/usr/lib -Dstorm.conf.file= -cp /home/hadoop/app/apache-storm-0.9.6/lib/...:/home/hadoop/app/apache-storm-0.9.6:/home/hadoop/app/apache-storm-0.9.6/conf -Xmx768m -Dlogfile.name=ui.log -Dlogback.configurationFile=/home/hadoop/app/apache-storm-0.9.6/logback/cluster.xml backtype.storm.ui.core

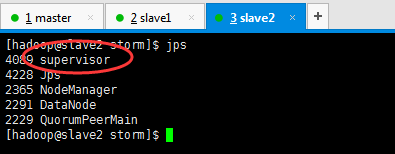

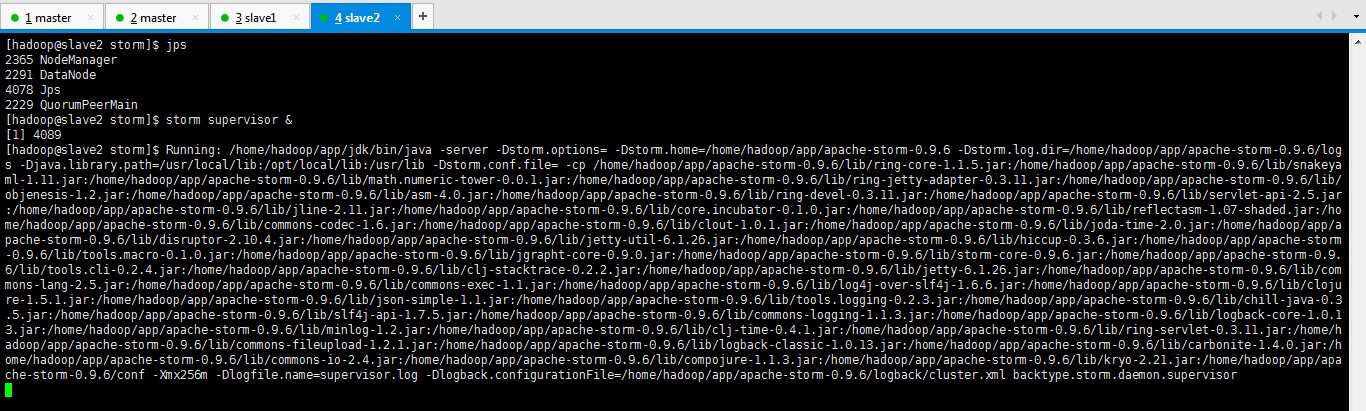

c) Start the storm supervisor process on slave1 and on slave2

storm supervisor &

[hadoop@slave1 storm]$ jps

NodeManager

DataNode

QuorumPeerMain

Jps

[hadoop@slave1 storm]$ storm supervisor &

[hadoop@slave1 storm]$ Running: /home/hadoop/app/jdk/bin/java -server -Dstorm.options= -Dstorm.home=/home/hadoop/app/apache-storm-0.9.6 -Dstorm.log.dir=/home/hadoop/app/apache-storm-0.9.6/logs -Djava.library.path=/usr/local/lib:/opt/local/lib:/usr/lib -Dstorm.conf.file= -cp /home/hadoop/app/apache-storm-0.9.6/lib/...:/home/hadoop/app/apache-storm-0.9.6/conf -Xmx256m -Dlogfile.name=supervisor.log -Dlogback.configurationFile=/home/hadoop/app/apache-storm-0.9.6/logback/cluster.xml backtype.storm.daemon.supervisor

[hadoop@slave2 storm]$ jps

NodeManager

DataNode

Jps

QuorumPeerMain

[hadoop@slave2 storm]$ storm supervisor &

[hadoop@slave2 storm]$ Running: /home/hadoop/app/jdk/bin/java -server -Dstorm.options= -Dstorm.home=/home/hadoop/app/apache-storm-0.9.6 -Dstorm.log.dir=/home/hadoop/app/apache-storm-0.9.6/logs -Djava.library.path=/usr/local/lib:/opt/local/lib:/usr/lib -Dstorm.conf.file= -cp /home/hadoop/app/apache-storm-0.9.6/lib/...:/home/hadoop/app/apache-storm-0.9.6/conf -Xmx256m -Dlogfile.name=supervisor.log -Dlogback.configurationFile=/home/hadoop/app/apache-storm-0.9.6/logback/cluster.xml backtype.storm.daemon.supervisor

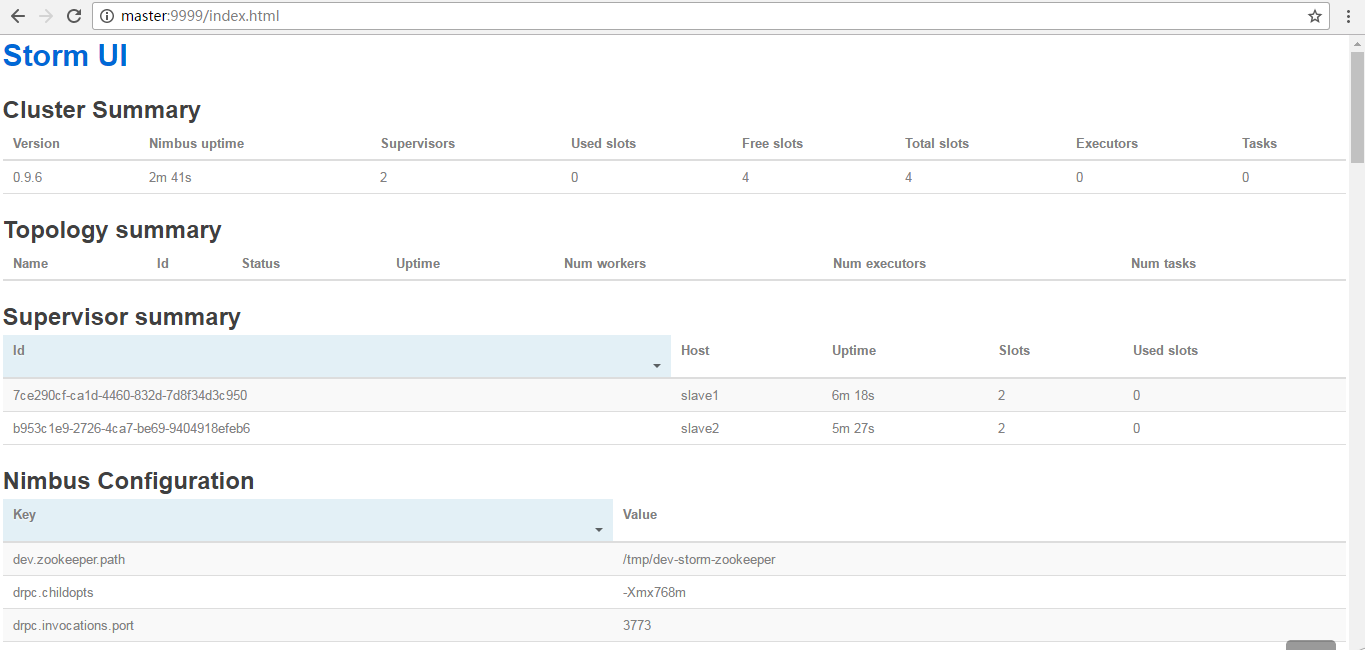

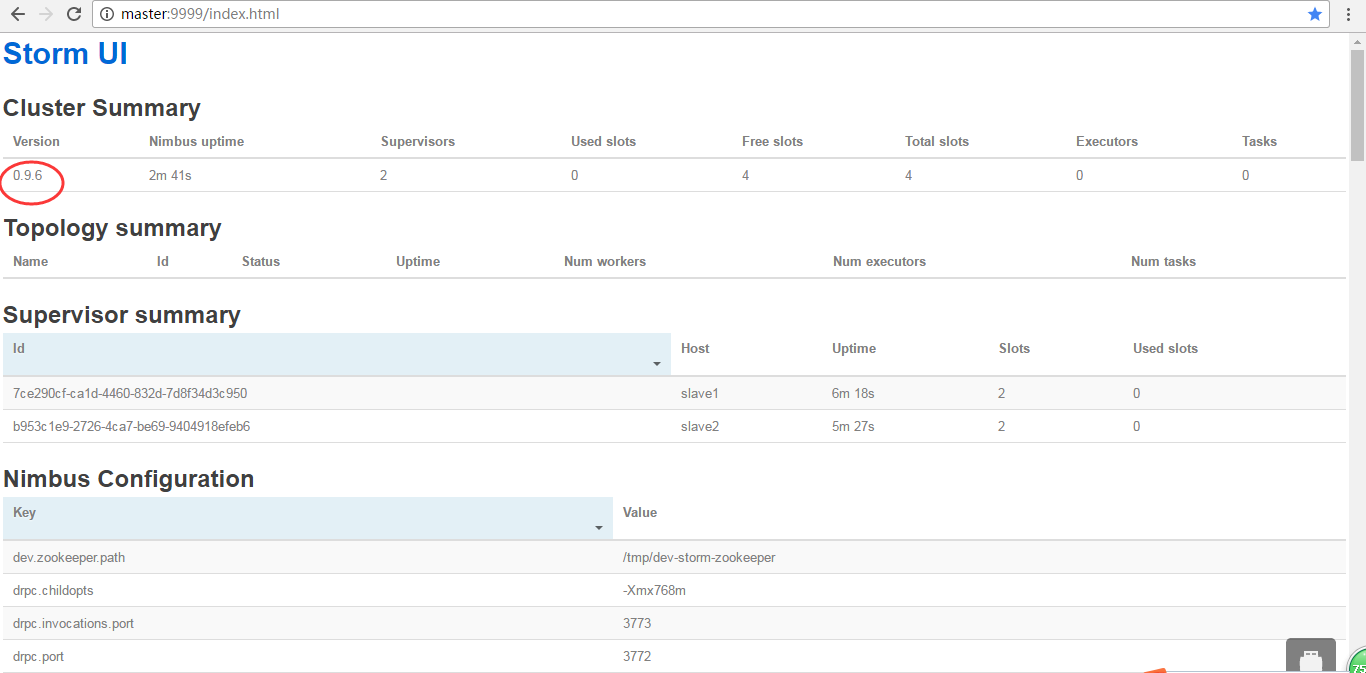

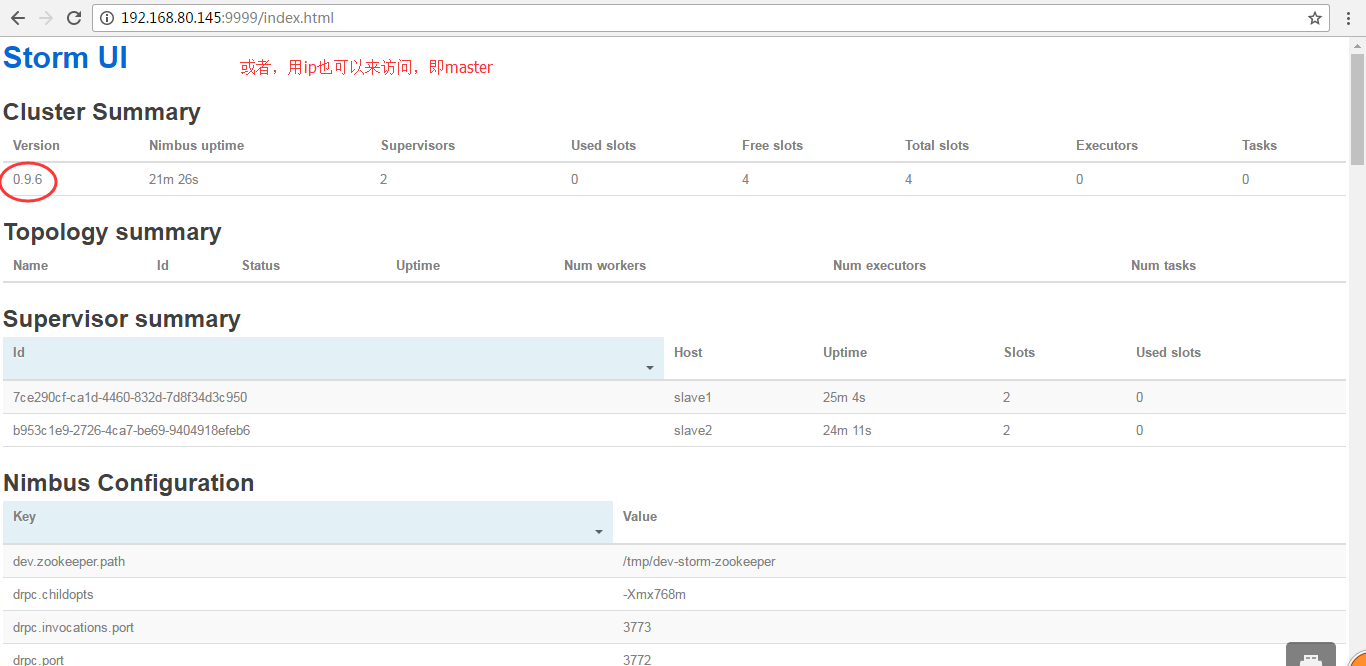

10. Check the cluster in the Storm UI

http://192.168.80.145:9999/index.html

Success! I'll leave the rest for you to explore on your own.
