Robotics in the Era of Foundation Models

翻译:

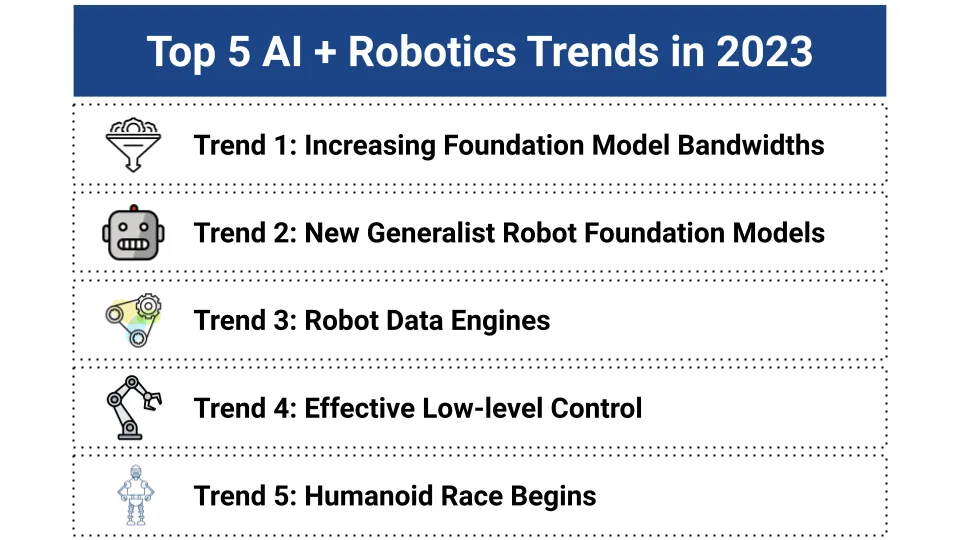

2023年是智能机器人规模化的重要一年!对于机器人领域之外的人来说,要传达事物变化的速度和程度是有些棘手的。与仅仅12个月前的情况相比,如今人工智能+机器人领域的大部分景观似乎完全不可识别。从学术界到初创公司再到工业研究实验室,2023年带来了一波研究进展,迫使许多人更新了他们的心理模型,调整了研究议程,并加快了时间表。这篇文章旨在突出5个主要趋势和一些基础研究进展。最后,我会列出我对每个趋势的首选个人选择。

2023年进步浪潮背后的一个重要推动力是基础模型的大量涌现,不仅作为一种技术,还作为一种研究理念。作为一种技术,机器人的突破包括特定模型(GPT-3、PaLI、PaLM)、学习算法/架构组件(自注意力、扩散)以及基础数据集和基础设施(VQA、CV)。更重要的是,机器人研究也越来越多地将基础建模视为一种哲学:一种对规模化、大量多样化数据源、泛化的重要性以及积极转移和新兴能力的坚定信念。即使过去这些曾是无争议的信条,但2023年似乎是第一年,人们不仅可以理论上地将这些思维模式应用于机器人,而且实际上可以去做。那么,我们是如何达到这个激动人心的时刻的呢?

为了铺设舞台,最近推动具体智能的行动的第一幕始于2022年,人类发现/创造了历史上最接近神奇外星人制品的东西,即大型语言模型。这些制品在许多不同的语言推理领域似乎“只是工作”,但我们仍然不确定如何将它们应用于机器人技术。到了2022年中期,我们似乎可以将大型语言模型制品置于严格控制的盒子中,为规划和语义推理提供帮助,但其输出仍然是非常高级的抽象。在第一幕结束时,我们决定低级控制——机器人技术中的难点——仍然需要内部建造:或许受到启发,但仍然远离大型语言模型制品的奥秘运作。机器人技术与大型语言模型的第一次接触令人着迷和兴奋,但尚未带来变革性的改变。

第二幕始于2023年,许多研究人员希望从这些神奇的大型语言模型制品中获得不仅仅是严格控制的抽象语言输出。调查取得了进展,增加了基础模型与机器人技术之间的带宽,研究了各种方法来为机器人技术提炼现有基础模型中包含的互联网规模的语义知识。似乎基础模型不仅理解高级语义推理,还内化了物理学、低级控制和代理决策的一些概念。在这个时候,新的专门针对机器人技术进行训练或调整的机器人基础模型也开始涌现,显示出更多关注于成为通用性而不是专家的特点。为了为这些基础模型提供动力,还在进行重大研究工作,探索为机器人技术的数据引擎扩展规模的科学和工程,这是推动任何能力进步的关键燃料。随着越来越多的高级机器人问题通过基础建模技术得到“解决”,对有效的低级控制的重视再次增强。第二幕的巨大进展不仅仅发生在学术界或工业研究实验室中。对人形机器人初创公司的早期积极参与者看到了从零到令人印象深刻的硬件原型的迅速增长,为具体智能的第一代准备了试验场。

让我们更深入地探讨这些核心领域!

强制性声明:2023年有太多令人惊奇的作品,无法在一个简要摘要中概括,所以我突出了与我自己的研究兴趣相邻的作品。它们倾向于操纵、端到端学习方法和规模化。这些是我个人的观点,不一定代表我的雇主的观点。

原文:

2023 was a momentous year in scaling intelligent robots! To those outside the robotics bubble, it’s tricky to convey the sheer magnitude of how fast and how much things have changed. Compared to what the field looked like just 12 months ago, large parts of today’s AI + Robotics landscape seem completely unrecognizable. From academia to startups to industry research labs, 2023 brought a wave of research advances that forced many to update their mental models, adjust their research agendas, and accelerate their timelines. This post highlights 5 major trends and some of the underlying research advances. At the end, I add my top personal picks from each trend.

A large driving force behind the tsunami of progress in 2023 has been the proliferation of Foundation Models, both as a technology and as a research philosophy. As a technology, robotics breakthroughs incorporated specific models (GPT-3, PaLI, PaLM), learning algorithms and architectural components (self-attention, diffusion), and the underlying datasets and infrastructure (VQA, CV). Perhaps even more importantly, robotics research increasingly embraced foundation modeling as a philosophy: a fervent belief in the power of scaling up, in large and diverse data sources, in the importance of generalization, and in positive transfer and emergent capabilities. Even if these tenets were uncontroversial to rally behind in the past, 2023 felt like the first year where one could not just theorize about applying these mindsets to robotics, but actually go ahead and do it. So, how did we get to this exciting point in time?

Thanks for reading Action-driven Intelligence! Subscribe for free to receive new posts and support my work.

To set the stage, Act I of this recent push towards Embodied Intelligence begins in 2022, when humans discovered/created the closest thing in history to a magical alien artifact: Large Language Models. These artifacts sort of “just work” across many different language reasoning domains, but we’re still not sure exactly how to bring them to bear on robotics. In mid-2022, it seems that we can keep the LLM artifact in a tightly controlled box, where it helps with planning and semantic reasoning, but its outputs are still very high-level abstractions. At the end of Act I, we decide that low-level control, the hard part of robotics, still needs to be built in-house: perhaps inspired by, but still kept away from, the arcane workings of the LLM artifact. First contact between robotics and LLMs is intriguing and exciting, but not yet transformative.

Act II picks up in 2023, with many researchers hoping to get more than tightly controlled, abstract language outputs out of these magical LLM artifacts. Investigations make progress on increasing the bandwidth between foundation models and robotics, studying various approaches to distill the internet-scale semantic knowledge in existing foundation models for use in robotics. It seems that foundation models not only understand high-level semantic reasoning, but also internalize some notion of physics, low-level control, and agentic decision-making. Around this time, new robot foundation models trained or adapted specifically for robotics also start landing, with a much stronger focus on being generalists rather than specialists. To power these foundation models, major research efforts are also underway to explore the science and engineering of scaling data engines for robotics, the critical fuel behind any capability advance. As more and more high-level robotics problems get “solved” by foundation modeling techniques, there is a renewed emphasis on effective low-level control. The tremendous progress in Act II does not just occur in academic or industry research labs. Keen early players at humanoid startups go rapidly from zero to impressive hardware prototypes, preparing the proving grounds for the first generation of embodied intelligence.

Let’s dive deeper into these core areas!

Mandatory disclaimer: there are way too many awesome works in 2023 to consolidate into one brief summary, so I highlight works adjacent to my own research interests. They lean towards manipulation, end-to-end learning methods, and scaling. These are my own opinions and don’t necessarily reflect those of my employer.

Trend 1: Increasing Foundation Model Bandwidths

The bottleneck between foundation models and robot policies has previously been very narrow. Approaches over the past decade often leveraged “non-robotic” domains like perception or language solely as an input preprocessing step, where learned representations (such as ImageNet-pretrained features) were passed off to robot-specific models for end-to-end training. In contrast, 2023 saw an explosion of more ambitious research that aimed to get more out of the internet-scale multimodal knowledge contained in foundation models like PaLM, GPT-3, CLIP, or LLaMA.

Foundation Models for Data Enrichment

These efforts attempt to import internet-scale knowledge into robot policies via synthetic data in the form of augmentations or labels.

GenAug, ROSIE, CACTI are concurrent works which study using generative text-to-image models for semantic image augmentation on offline datasets: salient parts of robot scenes (backgrounds, table texture, objects) can be visually augmented or randomized by leveraging modern image generation methods. Policies trained on these augmented datasets are then more robust to these types of distribution shifts, without any additional real data required.

DIAL introduces hindsight language relabeling and semantic language augmentation via VLMs, which allows policies to extract more semantic knowledge out of existing offline datasets.

SuccessVQA and RoboFuME show how VLMs can treat success detection as a VQA task, and propagate the VLM predictions back to robot policies in the form of reward signals.

VIPER uses the log-likelihoods of a video prediction model, trained to model successful behaviors, as a dense reward signal for policy learning.
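
To make likelihood-as-reward concrete, here is a minimal sketch. It assumes a toy video model that predicts the next frame as an isotropic Gaussian around a predicted mean. VIPER itself uses a learned autoregressive video model. `predict_next`, `sigma`, and the function names are hypothetical.

```python
import numpy as np

def gaussian_log_likelihood(x, mean, sigma):
    """Log-density of x under an isotropic Gaussian N(mean, sigma^2 I)."""
    d = x.size
    return (-0.5 * np.sum((x - mean) ** 2) / sigma**2
            - d * np.log(sigma) - 0.5 * d * np.log(2 * np.pi))

def likelihood_rewards(frames, predict_next, sigma=0.1):
    """Dense reward for step t: log p(frame[t+1] | frames[:t+1]) under the video model.

    frames: array of shape (T, ...) holding the observed trajectory
    predict_next: hypothetical video model returning the predicted mean of the next frame
    """
    return np.array([
        gaussian_log_likelihood(frames[t + 1], predict_next(frames[: t + 1]), sigma)
        for t in range(len(frames) - 1)
    ])
```

Every transition receives a score, so the signal is dense. Trajectories that look like the behaviors the video model was trained on score higher at each step. For example, with a toy model `lambda h: h[-1] + 1.0`, frames that increase by 1 per step outscore random ones.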

Foundation Model Powered Modalities and Representations

These works explored how to leverage foundation models for data modalities or representations beyond language. They seek to express internet-scale foundation model knowledge directly via input features or maps.

Visual-language Representations:

Voltron fuses ideas from R3M and MVP (which both saw massive adoption in 2023; most graduate students in robot learning default to one of these as a building block for new projects). Voltron leverages both language-to-image (reconstruction) and image-to-language (captioning) losses to produce a robust visual-language pretrained representation.

Object-centric Modalities:

MOO and KITE leverage VLM representations from object detectors or segmenters as additional features for downstream policy learning.

OVMM and CLIP-Fields use object-centric representations to build spatial maps for indoor navigation.

Motion-centric Modalities:

RT-Trajectory utilizes hindsight robot end-effector trajectories as 2D RGB task representations. During inference, these trajectories can be generated manually by humans or automatically by LLMs/VLMs, enabling motion generalization.
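
As a rough illustration of turning an end-effector trajectory into a 2D RGB task representation, the sketch below projects 3D points with a pinhole camera model. It then rasterizes them into an image whose color encodes time. It assumes known camera intrinsics `K` and points already expressed in the camera frame. The helper names and the red-to-green time coloring are hypothetical choices, not RT-Trajectory's exact rendering.

```python
import numpy as np

def project_points(points_cam, K):
    """Project Nx3 camera-frame points to Nx2 pixel coordinates (pinhole model)."""
    uvw = points_cam @ K.T            # rows are K @ p in homogeneous pixel coordinates
    return uvw[:, :2] / uvw[:, 2:3]   # divide by depth

def render_trajectory(points_cam, K, height, width):
    """Rasterize a trajectory into an RGB image; color fades red -> green over time."""
    image = np.zeros((height, width, 3), dtype=np.uint8)
    pixels = np.round(project_points(points_cam, K)).astype(int)
    n = len(pixels)
    for i, (u, v) in enumerate(pixels):
        if 0 <= u < width and 0 <= v < height:   # skip points outside the image
            t = i / max(n - 1, 1)                # 0 at the start, 1 at the end
            image[v, u] = (int(255 * (1 - t)), int(255 * t), 0)
    return image
```

A real renderer would also draw line segments between waypoints and mark gripper events. Those are omitted here to keep the projection step visible.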

Goal-centric Modalities:

DALL-E-Bot uses a pipelined system that maps text instructions to diffusion-generated imaginary goal images, and then maps those goal images back to robot controls.

SuSIE brings in a similar idea with imagined goal images, but closes the loop with a learning-based policy which considers imagined goals during training.

RT-Sketch trains on hindsight goal images with style augmentations such that at test time the policy can interpret novel goal sketches directly provided by humans.

3D-centric Modalities:

VoxPoser creates a 3D voxel map based on semantics and affordances represented in LLMs and VLMs, and then applies classical motion planning.

F3RM uses 3D visual-language feature fields distilled from VLMs applied to 2D scene images, which enable sample-efficient few-shot learning.

LERF infuses language embeddings into NeRFs directly, so unlike VoxPoser and F3RM, LERF’s 3D map is learned end-to-end as a neural field.

Raw LLMs Keep Surprising Us

Raw LLMs (which aren’t finetuned for robotics) still manage to keep impressing us with how much they know about physics, actions, and interaction.

Code as Policies directly uses LLM code outputs as robot policies. LLMs are pretty good at generating code, and have also been trained on robotics software APIs and control theory textbooks.
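
The core loop of Code as Policies can be sketched as executing model-written code against a small set of robot primitives. In this minimal sketch, `get_object_position`, `move_to`, `grasp`, and `release` are hypothetical stubs standing in for real perception and control APIs. `LLM_CODE` is a hard-coded stand-in for what a prompted LLM might return.

```python
# Hypothetical perception/control primitives that would be described in the LLM prompt.
WORLD = {"red block": (0.3, 0.1), "blue bowl": (0.5, -0.2)}
LOG = []

def get_object_position(name):
    return WORLD[name]

def move_to(xy):
    LOG.append(("move_to", xy))

def grasp():
    LOG.append(("grasp",))

def release():
    LOG.append(("release",))

# Stand-in for code an LLM might write for "put the red block in the blue bowl".
LLM_CODE = """
block = get_object_position("red block")
bowl = get_object_position("blue bowl")
move_to(block)
grasp()
move_to(bowl)
release()
"""

def run_policy_code(code):
    """Execute generated code with only the whitelisted primitives in scope."""
    allowed = {"get_object_position": get_object_position, "move_to": move_to,
               "grasp": grasp, "release": release}
    # Empty __builtins__ hides open(), import, etc. This is NOT a real security sandbox.
    exec(code, {"__builtins__": {}, **allowed})
```

Stripping builtins limits what the generated code can call, but it is only a convenience. A deployed system would need proper isolation.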

Language to Rewards shows that LLMs can generate cost functions for motion planning, which enables motions that may be too complex for LLMs to generate directly.
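
A toy version of this split, where an LLM writes the reward and a separate optimizer produces the behavior, might look like the following. `LLM_REWARD_CODE` is a hard-coded stand-in for model output, and the 2D "hand position" state is hypothetical. The cross-entropy method (CEM) is a deliberately small substitute for the real-time motion optimizer an actual system would pair with the LLM.

```python
import numpy as np

# Stand-in for reward code an LLM might write for
# "raise the hand to 0.5 m while staying near x = 0.2".
LLM_REWARD_CODE = """
def reward(state):
    height_term = -abs(state[1] - 0.5)
    drift_term = -0.5 * abs(state[0] - 0.2)
    return height_term + drift_term
"""

def load_reward(code):
    """Execute the generated code and return the reward function it defines."""
    namespace = {}
    exec(code, namespace)
    return namespace["reward"]

def cem_optimize(reward_fn, dim=2, iters=30, pop=200, n_elite=20, seed=0):
    """Cross-entropy method: repeatedly refit a Gaussian to the highest-reward samples."""
    rng = np.random.default_rng(seed)
    mean, std = np.zeros(dim), np.ones(dim)
    for _ in range(iters):
        samples = rng.normal(mean, std, size=(pop, dim))
        scores = np.array([reward_fn(s) for s in samples])
        elites = samples[np.argsort(scores)[-n_elite:]]   # highest-scoring samples
        mean, std = elites.mean(axis=0), elites.std(axis=0) + 1e-3
    return mean
```

Calling `cem_optimize(load_reward(LLM_REWARD_CODE))` should land close to `(0.2, 0.5)`. The LLM only has to describe *what* is good, and the optimizer works out *how* to get there.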

Eureka extends Language to Rewards with behavior feedback from LLMs and VLMs in order to update cost function predictions.

Prompt2Walk: with prompting alone, LLMs are able to directly output joint angles for locomotion. Those doubtful of how much physical understanding is contained in Foundation Models had considered this impossible.

Minecraft as a Proving Ground

Minecraft is a rich simulated domain where many exciting ideas in decision making and embodiment have been prototyped. These ideas easily transfer to robotics.

Voyager uses LLMs as the entire RL agent: an LLM handles hierarchical code-based skills, the exploration curriculum, reward verification, and behavior improvement.

JARVIS-1 showcases planning, memory, and exploration with multimodal LLMs.

STEVE-1 combines MineCLIP and VPT to translate text goals into image goals, and then into low-level mouse+keyboard control.

Trend 2: New Generalist Robot Foundation Models

One of the most exciting trends this year has been the shift towards generalist agents that can perform many tasks and handle various distribution shifts. This change mirrors attitudes in language modeling over the past few years, where scaling and generalization have been accepted as non-negotiable properties that are critical to any research project or model-building effort.

RT-1 introduces a language-conditioned multitask imitation learning policy trained on over 500 manipulation tasks. It was the first effort at Google DeepMind to make several drastic changes, such as betting on action tokenization and a Transformer architecture, and switching from RL to BC. It is the culmination of 1.5 years of demonstration data collection across many robots.
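
Action tokenization can be illustrated in a few lines of NumPy. RT-1 discretizes each action dimension into 256 bins. This sketch assumes uniform bins over known per-dimension bounds `low` and `high` (hypothetical values in the usage below) and decodes each token to its bin center. The function names are illustrative.

```python
import numpy as np

NUM_BINS = 256  # bins per action dimension, as in RT-1

def tokenize(action, low, high, num_bins=NUM_BINS):
    """Map continuous actions to integer bins uniformly spaced over [low, high]."""
    action = np.clip(action, low, high)
    scaled = (action - low) / (high - low)                       # now in [0, 1]
    return np.minimum((scaled * num_bins).astype(int), num_bins - 1)

def detokenize(tokens, low, high, num_bins=NUM_BINS):
    """Map bin indices back to the continuous value at each bin's center."""
    return low + (tokens + 0.5) * (high - low) / num_bins
```

Decoding to bin centers bounds the quantization error by half a bin width, which is `(high - low) / 512` per dimension here. Turning regression into per-dimension classification over tokens is what lets a Transformer treat actions like any other output sequence.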

VIMA leverages multimodal prompts that interleave text and object-centric tokens, and is one of the first works to showcase a heavy focus on generalization as a test-time criterion.

RVT shows how multi-view + depth point clouds can be re-rendered into virtual camera images that provide richer features for 3D action prediction. A nice follow-up to other RGB-D works at NVIDIA like PerAct.

RT-2 introduces Vision Language Action (VLA) models: the base VLM directly predicts actions as strings. Co-fine-tuning robot action data alongside internet data enables generalization that would previously need to come from robot data or representations.
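
Because the VLM emits actions as text, decoding them requires parsing and validating its output. The sketch below assumes, in the spirit of RT-2, that each action is written as whitespace-separated integer bin indices with 256 bins per dimension. The exact mapping from integers to vocabulary tokens depends on the backbone model, and the function names here are hypothetical.

```python
import numpy as np

NUM_BINS = 256  # assumed bins per action dimension

def action_to_string(tokens):
    """Serialize bin indices as the text target a VLM would be trained to emit."""
    return " ".join(str(int(t)) for t in tokens)

def string_to_action(text, low, high, num_dims, num_bins=NUM_BINS):
    """Parse model text back into a continuous action; return None if malformed."""
    parts = text.strip().split()
    if len(parts) != num_dims or not all(p.isdecimal() for p in parts):
        return None                                  # wrong length or non-integer tokens
    tokens = np.array([int(p) for p in parts])
    if np.any(tokens >= num_bins):
        return None                                  # out-of-range bin index
    return low + (tokens + 0.5) * (high - low) / num_bins   # bin centers
```

Returning `None` for malformed strings lets the controller fall back to a safe action instead of executing whatever the language model happened to produce.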

RoboCat is a goal-conditioned decision transformer with highly sample-efficient generalization and finetuning to new tasks. A ton of rigorous ablations and justified design decisions, as dissected in this nice writeup.

RT-X brings RT-1 and RT-2 into the age of large-scale cross-embodiment manipulation datasets. It is a massive open-source dataset and infrastructure effort combining data from a ton of academic institutions, robot morphologies, and robot skills, and it exhibits positive transfer of skills and concepts between datasets of different robot types.

GNM / ViNT are general navigation foundation models for visual navigation across multiple embodiments, including zero-shot generalization to new embodiments. They are the culmination of multi-year investigations into general-purpose outdoor and indoor navigation policies.

RoboAgent is a combination of a sim+real framework (RoboHive), a real world multitask dataset (RoboSet), semantic data augmentation, and action chunking. Impressive 2 year effort bringing together many prior projects.

LEO introduces a multi-modal LLM which consumes 2D and 3D encoded tokens and uses a significant amount of 3D reasoning datasets during training. A nice study exploring the thesis that to exist/act in a 3D world, you should focus heavily on 3D inputs, representations, and reasoning.

Octo is a cross-embodiment generalist trained on the Open X-Embodiment dataset. It supports flexible input observations, task representations, and action outputs via individual diffusion heads, with fully open-sourced checkpoints and training infrastructure.

Trend 3: Robot Data Engines

It's hard to imagine the AI revolution in VLMs and LLMs if we didn't happen to have the Internet with all its data “for free”. Clearly, a grand challenge for robotics is to generate an internet-scale equivalent of robot action data. In 2023, various projects tackled this challenge with advances in hardware, methodology, simulation, data science, and cross-embodiment learning.

Real World Data Collection

ALOHA introduces a low-cost puppeteering setup for bimanual manipulation that is able to perform an absurd number of fine-grained, dexterous manipulation tasks. This work single-handedly reinvigorated puppeteering as a scalable data collection strategy (previously, there were doubts about how dexterous and precise such systems could be). ALOHA results and systems have been reproduced around the world.

GELLO focuses on the teleoperation framework aspect of ALOHA. The two main contributions are scaling down the “leader” robot arm (lower-cost, easier to create) and extending the system to numerous “follower” robot arm morphologies.

MimicPlay combines two very scalable data collection approaches: human demonstration data and long-horizon robot play data. Humans provide long-horizon semantic plans, and the play data provides low-level control information.

Dobb-E uses a specialized low-cost hardware tool called “the Stick”, a reacher grabber tool that uses an actual robot’s end-effector grippers along with a mount for a wrist camera. This cheap 3D-printed tool with literally zero embodiment distribution shift to the real robot’s hand enables cheap data collection, and is showcased in Dobb-E with diverse datasets that power a sample-efficient manipulation policy. Such low-cost hardware tool interfaces have been explored before, but Dobb-E is the most impressive and scaled up iteration I’ve seen.

Simulation + Foundation Models

Scaling Up Distilling Down / GenSim scale up simulation datasets by combining diverse scenes/assets from generative models with rapid behavior/skill acquisition via RL or motion planning from ground truth state. Afterwards, the datasets can be distilled down into a vision-based policy.

Language to Rewards / Eureka can very rapidly generate diverse behaviors in simulation from language instructions by combining reward-generating LLMs with motion planners or RL.

MimicGen is able to generate large synthetic datasets starting from a few initial seed human demonstrations.
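
The key geometric step can be written as one transform. MimicGen splits source demonstrations into object-centric segments. It then replays each segment so that the end effector keeps the same pose relative to the object in a new scene. The sketch below shows only that transform, with no segmentation, interpolation, or collision checking. It uses hypothetical helper names and yaw-only object poses for brevity.

```python
import numpy as np

def yaw_pose(yaw, xyz):
    """4x4 homogeneous transform from a rotation about z and a translation."""
    c, s = np.cos(yaw), np.sin(yaw)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = xyz
    return T

def retarget_segment(eef_poses_src, obj_pose_src, obj_pose_new):
    """Replay a source end-effector segment relative to an object at a new pose.

    Each pose keeps its transform relative to the object frame:
        T_eef_new = T_obj_new @ inv(T_obj_src) @ T_eef_src
    """
    delta = obj_pose_new @ np.linalg.inv(obj_pose_src)
    return [delta @ T for T in eef_poses_src]
```

Applying this to a handful of seed demonstrations across many randomized object placements is what turns a few human demos into a large synthetic dataset. In this kind of pipeline, generated episodes are typically kept only if they succeed when executed in simulation.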

Cross-Embodiment

Open X-Embodiment is a massive collaboration consisting of more than 60 cross-embodiment datasets across 22 robot morphologies. Real robot data may be sparse and expensive, but it can be less sparse if we all pool and share our data.

World Models

GAIA-1 is an action- and text-conditioned generative video model for autonomous driving.

UniSim learns interactive, text-conditioned simulators from video data for robotics and human video domains.

DreamerV3 leverages a learned latent world model for imagined abstract rollouts during policy learning, resulting in very sample-efficient exploration.
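
Dreamer-style agents train their actor and critic on λ-returns computed along imagined latent rollouts. The function below is a generic NumPy version of that recursion. It leaves out the world model itself, and the default `gamma` and `lam` values are assumptions rather than DreamerV3's exact settings.

```python
import numpy as np

def lambda_returns(rewards, values, continues, gamma=0.99, lam=0.95):
    """TD(lambda) returns over an imagined rollout of length H.

    R_t = r_t + gamma * c_t * ((1 - lam) * v_{t+1} + lam * R_{t+1}),  with R_H = v_H
    rewards, continues: shape (H,); values: shape (H + 1,) with a bootstrap value last.
    """
    H = len(rewards)
    returns = np.zeros(H)
    next_return = values[H]                      # bootstrap from the final state value
    for t in reversed(range(H)):
        next_return = rewards[t] + gamma * continues[t] * (
            (1 - lam) * values[t + 1] + lam * next_return)
        returns[t] = next_return
    return returns
```

Setting `lam=0` recovers one-step TD targets, and `lam=1` recovers discounted returns bootstrapped only from the final value. The `continues` flags stop bootstrapping past predicted episode ends.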

Understanding Robot Data

Data Quality in Imitation Learning formalizes data quality for downstream imitation learning, and focuses on two salient aspects: action consistency and transition diversity.

ACT shows how action chunking can help ameliorate action diversity challenges in long-horizon high-frequency imitation learning datasets.
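
ACT predicts a chunk of future actions at every step. At execution time it can average all chunks that cover the current timestep (temporal ensembling) with exponential weights w_i = exp(-m * i), where w_0 belongs to the oldest prediction. A minimal sketch of that bookkeeping follows. The class name and the default `m` are assumptions.

```python
import numpy as np

class TemporalEnsembler:
    """Average overlapping action chunks with exponential weights, ACT-style."""

    def __init__(self, chunk_size, m=0.01):
        self.chunk_size = chunk_size
        self.m = m
        self.predictions = {}  # timestep -> actions predicted for it, oldest first

    def step(self, t, chunk):
        """Record the chunk predicted at time t and return the ensembled action for time t."""
        chunk = np.asarray(chunk, dtype=float)        # shape (chunk_size, action_dim)
        for k in range(self.chunk_size):
            self.predictions.setdefault(t + k, []).append(chunk[k])
        candidates = np.stack(self.predictions.pop(t))   # every prediction made for step t
        weights = np.exp(-self.m * np.arange(len(candidates)))
        return (weights[:, None] * candidates).sum(axis=0) / weights.sum()
```

With `m = 0`, all overlapping predictions count equally. Larger `m` puts relatively more weight on older predictions. Either way, averaging across chunks smooths the switch from one chunk to the next.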

GenGap studies various visual distribution shifts and the challenges they bring for imitation learning methods.

Learning to Discern explores using an explicit, learned preference critic for filtering and weighting offline trajectories.

Trend 4: Effective Low-level Control

Completely separate from the advances in foundation models for robotics, there has been a continued push toward performant low-level control policies. Diffusion Policies have taken real-world manipulation by storm, and locomotion has been virtually solved with simulation + RL + sim2real.

Diffusion Policies

Diffusion Policies apply iterative denoising diffusion to action prediction, excelling at multimodal and high-dimensional action spaces. Diffusion Policies have proliferated in many robot learning settings, especially in low-data or multimodal-data regimes.
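
The iterative denoising at the heart of a Diffusion Policy can be sketched as a standard DDPM reverse loop over an action sequence. In practice, `noise_pred_fn` is a trained network conditioned on observations. The linear beta schedule and step count here are assumptions. The usage check plugs in an analytic noise predictor for a single target action, only to show that the loop recovers it.

```python
import numpy as np

def make_schedule(num_steps=50, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule and the derived alpha / cumulative alpha-bar terms."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    return betas, alphas, np.cumprod(alphas)

def sample_actions(noise_pred_fn, obs, horizon, action_dim, num_steps=50, seed=0):
    """DDPM reverse process: start from Gaussian noise and denoise an action sequence."""
    rng = np.random.default_rng(seed)
    betas, alphas, alpha_bars = make_schedule(num_steps)
    x = rng.standard_normal((horizon, action_dim))
    for t in reversed(range(num_steps)):
        eps = noise_pred_fn(x, t, obs)            # predicted noise, conditioned on obs
        x = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:                                 # no noise is added on the final step
            x = x + np.sqrt(betas[t]) * rng.standard_normal(x.shape)
    return x
```

Each call starts from fresh noise, so with different seeds a trained model can return different, equally valid action sequences for the same observation. That is how multimodal behavior gets represented.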

TRI uses Diffusion Policies to train specialist policies on modest amounts of real-world demonstrations.

BESO is a goal-conditioned score-based diffusion policy trained on play data, which is a natural fit for diffusion policies due to high state-action entropy and multimodality.

Simulation + RL + Distillation + Sim2Real

Barkour / Robot Parkour train large-scale simulation RL specialists on agile quadrupedal locomotion tasks, distill them into a single generalist policy, and then deploy it zero-shot in the real world.

Bipedal Locomotion trains a causal transformer (performing implicit system identification) in simulation and then transfers it zero-shot to the real world.

CMS showcases visual locomotion starting from a “blind” sim2real policy, augmented with a real-world visual module that improves with more real data.

RL at Scale is a large-scale effort of mixing simulation RL training with real-world policy deployment over many months on mobile manipulation trash sorting. This is one of the largest RL flywheels deployed at scale in the real world.

DeXtreme presents a system that transfers dexterous manipulation learned in simulation to the real world via robust multi-fingered hand pose estimation.

Offline RL

Cal-QL learns a conservative but calibrated value function initialization during offline RL pre-training, so that it transfers well when switching to online finetuning.
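
The change Cal-QL makes to a CQL-style regularizer is small enough to show directly. Q-values of policy actions are only pushed down where they exceed a reference value V^mu(s) of the behavior policy, for example Monte Carlo returns from the dataset. The sketch uses a simplified CQL term (a mean over sampled actions rather than a log-sum-exp). The function names and array shapes are assumptions.

```python
import numpy as np

def cql_regularizer(q_policy, q_data, alpha=1.0):
    """Simplified CQL term: push down Q on policy actions, push up Q on dataset actions.

    q_policy: Q(s, a) for actions sampled from the current policy, shape (B, N)
    q_data:   Q(s, a) for dataset actions, shape (B,)
    """
    return alpha * (q_policy.mean(axis=1) - q_data).mean()

def calql_regularizer(q_policy, q_data, v_ref, alpha=1.0):
    """Same term with Cal-QL's calibration against a reference value v_ref, shape (B,)."""
    # Policy Q-values already below v_ref are replaced by v_ref, so they get no push-down.
    q_policy_calibrated = np.maximum(q_policy, v_ref[:, None])
    return alpha * (q_policy_calibrated.mean(axis=1) - q_data).mean()
```

Where the policy's Q-values already sit below V^mu, the clipped term is constant and contributes no gradient. The learned values therefore stop drifting arbitrarily low, which keeps the offline initialization calibrated for online finetuning.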

ICVF introduces Intent-Conditioned Value Functions, which can leverage passive video data to learn value functions over intents rather than raw actions. This enables better use of prior data that may not have action labels.

Trend 5: Humanoid Race Begins

For perhaps the first time ever, a path towards truly embodied intelligence seems to exist, and ambitious early believers have moved rapidly this year to build out the first chapters of humanoid robotics startups. Robotics startups are historically very, very hard, so to justify such a massive scaling effort with such urgency in 2023, the current cohort of humanoid players believes in three core theses:

When “robot brains” are solved, “robot bodies” become the critical bottleneck.

“Robot brains” will be solved within the next 10 years.

“Robot bodies” are easier to build than before, but will still take at least 3-5 years.

If you buy these three theses, then the first chapter of the humanoid robot race makes sense. Humanoid startups are speedrunning the zero-to-untethered-locomotion demos, and preparing for the next chapter of R&D in 2024, which will truly start focusing on data-driven scaling.

Tesla Optimus impressed robotics experts with how quickly it went from marketing pitch to live hardware demo in less than a year. We’re already seeing signs of early payoff from synergies with in-house mechatronics expertise and supply chains. If rumors are to be believed, weekly unit production numbers are already quite impressive.

1X EVE is well-positioned with a large head-start in building out an already impressive hardware platform and the buy-in of OpenAI. I think they’re one of the leaders in building out a scalable data flywheel, both on the technical vision as well as operations.

Figure 01 is one of the best-funded players, led by a superstar founder with a proven track record of shipping hard tech products. Expect it to scale up a ton in 2024.

Agility Digit is one of the only humanoids actually deployed and generating value, thanks to a productive warehouse logistics partnership with Amazon.

Sanctuary Phoenix has made rapid progress as one of the few manipulation-first humanoid companies.

Boston Dynamics Atlas is of course the OG, but it’s unclear whether Atlas will ever be truly scaled up and productionized to consumer markets, especially relative to Spot and Stretch.

Unitree H1: Unitree did for quadrupeds what DJI did for drones. Absolutely believable that the “Unitree for humanoids” will just be… Unitree?

AgiBot RAISE-A1 comes from an upstart Chinese company with an ambitious roadmap (I highly recommend the founder’s amazing YouTube channel). From inception to an on-stage demo of self-powered bipedal locomotion without support, it took less than 6 months.

Clone is taking a unique approach with bio-mimetic musculoskeletal humanoids. Big bet to swing for the fences, and could be a major differentiator in the future.

Top Picks and Conclusion

What a year! What was meant to be a brief summary exercise for just myself has turned into a massive blog post, but there have just been so many exciting developments this year. Just for fun, here are my top picks for the results that influenced my mental model the most in 2023! I’ll list one Google result and one non-Google result for each trend:

Trend 1: Increasing Foundation Model Bandwidths

Top Non-Google Result: Eureka really impressed me with how much robotics and action understanding is already contained in SOTA foundation models! It also convinced me to study code as a reasoning modality much more seriously.

Top Google Result: RT-Trajectory changed my mind about whether motion generalization is actually possible for imitation learning methods. I’m increasingly convinced that the answer is yes, if you figure out the right interface and if you figure out how foundation models can help you achieve this!

Trend 2: New Generalist Robot Foundation Models

Top Non-Google Result: GNM shows what a mature robot foundation model looks like in robot navigation: robust performance out of the box, zero-shot generalization to new embodiments, and easy extension or finetuning. While navigation is in many ways a simpler problem than manipulation, GNM raises the bar on what a fully shipped robot foundation model should look like.

Top Google Result: RT-2 made me think about what the robot learning world might look like in the future if everything is just one model. No more data augmentation, no more representations, no more delineations between what counts as reasoning vs. what counts as control – everything is just one model, one problem. I think the jury is still out, but it’s clear that we can now actually go ahead and try this and test it out! Even if it’s not the final answer to robotics, it’s going to be a very strong baseline to beat.

Trend 3: Robot Data Engines

Top Non-Google Result: ALOHA has had an outsized impact, with everything from the data collection system to the mind-blowing, “you need to see it to believe it” results of sample-efficient, long-horizon dexterous tasks that sort of just work.

Top Google Result: From the start, Open X-Embodiment seemed like a clear win (who doesn’t want more data?), but the sheer scale and magnitude of contributions from the entire community impressed me. I’m more optimistic than ever about increasing robot data by a few orders of magnitude together as a community.

Trend 4: Effective Low-level Control

Top Non-Google Result: Diffusion Policies have been rapidly adopted across industry and academia, and they seem to work really well. The expressivity and stability of Diffusion Policies have increasingly convinced me that they are not just a fad, but likely a new learning approach that is here to stay.

Top Google Result: Barkour showed that it’s possible to “just distill” specialist policies across multiple distribution shifts under the right conditions. I was already pretty optimistic about generalist data sponge policies consuming diverse data sources, but the impressive real world results (which push on many dimensions like speed, agility, and robustness) have convinced me even more.

Trend 5: Humanoid Race Begins

Top Non-Google Result: The 1X teleoperation demo in person is absolutely phenomenal. The reactivity, the embodiment, the dexterity: it really felt like I was in a VR video game rather than controlling a real robot.

Top Google Result: N/A