Using threadIdx, blockIdx, blockDim, and gridDim in CUDA

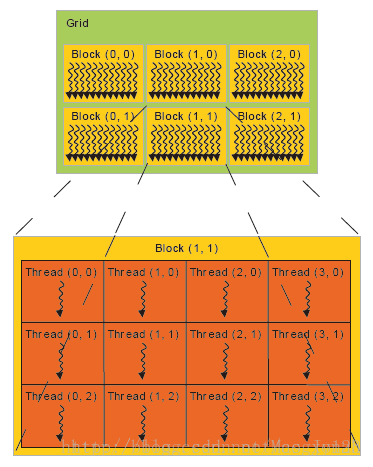

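threadIdx is of type uint3 and holds the index of a thread within its block.

blockIdx is of type uint3 and holds the index of a thread block within the grid. A thread block usually contains multiple threads.

blockDim is of type dim3 and holds the size of a thread block.

gridDim is of type dim3 and holds the size of the grid. A grid usually contains multiple thread blocks.

The figure above shows fairly clearly how these concepts relate to one another.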

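CUDA uses the <<< >>> launch syntax to specify how threads are laid out, and the layout determines how each thread computes its index. I know of 15 indexing schemes in total.

The following program demonstrates all 15 schemes: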

    #include "cuda_runtime.h"
    #include "device_launch_parameters.h"
    #include <stdio.h>
    #include <stdlib.h>
    #include <iostream>
    using namespace std;

    //thread 1D
    __global__ void testThread1(int *c, const int *a, const int *b)
    {
        int i = threadIdx.x;
        c[i] = b[i] - a[i];
    }

    //thread 2D
    __global__ void testThread2(int *c, const int *a, const int *b)
    {
        int i = threadIdx.x + threadIdx.y*blockDim.x;
        c[i] = b[i] - a[i];
    }

    //thread 3D
    __global__ void testThread3(int *c, const int *a, const int *b)
    {
        int i = threadIdx.x + threadIdx.y*blockDim.x + threadIdx.z*blockDim.x*blockDim.y;
        c[i] = b[i] - a[i];
    }

    //block 1D
    __global__ void testBlock1(int *c, const int *a, const int *b)
    {
        int i = blockIdx.x;
        c[i] = b[i] - a[i];
    }

    //block 2D
    __global__ void testBlock2(int *c, const int *a, const int *b)
    {
        int i = blockIdx.x + blockIdx.y*gridDim.x;
        c[i] = b[i] - a[i];
    }

    //block 3D
    __global__ void testBlock3(int *c, const int *a, const int *b)
    {
        int i = blockIdx.x + blockIdx.y*gridDim.x + blockIdx.z*gridDim.x*gridDim.y;
        c[i] = b[i] - a[i];
    }

    //block-thread 1D-1D
    __global__ void testBlockThread1(int *c, const int *a, const int *b)
    {
        int i = threadIdx.x + blockDim.x*blockIdx.x;
        c[i] = b[i] - a[i];
    }

    //block-thread 1D-2D
    __global__ void testBlockThread2(int *c, const int *a, const int *b)
    {
        int threadId_2D = threadIdx.x + threadIdx.y*blockDim.x;
        int i = threadId_2D + (blockDim.x*blockDim.y)*blockIdx.x;
        c[i] = b[i] - a[i];
    }

    //block-thread 1D-3D
    __global__ void testBlockThread3(int *c, const int *a, const int *b)
    {
        int threadId_3D = threadIdx.x + threadIdx.y*blockDim.x + threadIdx.z*blockDim.x*blockDim.y;
        int i = threadId_3D + (blockDim.x*blockDim.y*blockDim.z)*blockIdx.x;
        c[i] = b[i] - a[i];
    }

    //block-thread 2D-1D
    __global__ void testBlockThread4(int *c, const int *a, const int *b)
    {
        int blockId_2D = blockIdx.x + blockIdx.y*gridDim.x;
        int i = threadIdx.x + blockDim.x*blockId_2D;
        c[i] = b[i] - a[i];
    }

    //block-thread 3D-1D
    __global__ void testBlockThread5(int *c, const int *a, const int *b)
    {
        int blockId_3D = blockIdx.x + blockIdx.y*gridDim.x + blockIdx.z*gridDim.x*gridDim.y;
        int i = threadIdx.x + blockDim.x*blockId_3D;
        c[i] = b[i] - a[i];
    }

    //block-thread 2D-2D
    __global__ void testBlockThread6(int *c, const int *a, const int *b)
    {
        int threadId_2D = threadIdx.x + threadIdx.y*blockDim.x;
        int blockId_2D = blockIdx.x + blockIdx.y*gridDim.x;
        int i = threadId_2D + (blockDim.x*blockDim.y)*blockId_2D;
        c[i] = b[i] - a[i];
    }

    //block-thread 2D-3D
    __global__ void testBlockThread7(int *c, const int *a, const int *b)
    {
        int threadId_3D = threadIdx.x + threadIdx.y*blockDim.x + threadIdx.z*blockDim.x*blockDim.y;
        int blockId_2D = blockIdx.x + blockIdx.y*gridDim.x;
        int i = threadId_3D + (blockDim.x*blockDim.y*blockDim.z)*blockId_2D;
        c[i] = b[i] - a[i];
    }

    //block-thread 3D-2D
    __global__ void testBlockThread8(int *c, const int *a, const int *b)
    {
        int threadId_2D = threadIdx.x + threadIdx.y*blockDim.x;
        int blockId_3D = blockIdx.x + blockIdx.y*gridDim.x + blockIdx.z*gridDim.x*gridDim.y;
        int i = threadId_2D + (blockDim.x*blockDim.y)*blockId_3D;
        c[i] = b[i] - a[i];
    }

    //block-thread 3D-3D
    __global__ void testBlockThread9(int *c, const int *a, const int *b)
    {
        int threadId_3D = threadIdx.x + threadIdx.y*blockDim.x + threadIdx.z*blockDim.x*blockDim.y;
        int blockId_3D = blockIdx.x + blockIdx.y*gridDim.x + blockIdx.z*gridDim.x*gridDim.y;
        int i = threadId_3D + (blockDim.x*blockDim.y*blockDim.z)*blockId_3D;
        c[i] = b[i] - a[i];
    }

    void addWithCuda(int *c, const int *a, const int *b, unsigned int size)
    {
        int *dev_a = 0;
        int *dev_b = 0;
        int *dev_c = 0;

        cudaSetDevice(0);

        cudaMalloc((void**)&dev_c, size * sizeof(int));
        cudaMalloc((void**)&dev_a, size * sizeof(int));
        cudaMalloc((void**)&dev_b, size * sizeof(int));

        cudaMemcpy(dev_a, a, size * sizeof(int), cudaMemcpyHostToDevice);
        cudaMemcpy(dev_b, b, size * sizeof(int), cudaMemcpyHostToDevice);

        // None of the kernels checks bounds, so every launch must create
        // exactly `size` threads in total.

        //testThread1<<<1, size>>>(dev_c, dev_a, dev_b);

        //uint3 s; s.x = size / 5; s.y = 5; s.z = 1;
        //testThread2<<<1, s>>>(dev_c, dev_a, dev_b);

        //uint3 s; s.x = size / 10; s.y = 5; s.z = 2;
        //testThread3<<<1, s>>>(dev_c, dev_a, dev_b);

        //testBlock1<<<size, 1>>>(dev_c, dev_a, dev_b);

        //uint3 s; s.x = size / 5; s.y = 5; s.z = 1;
        //testBlock2<<<s, 1>>>(dev_c, dev_a, dev_b);

        //uint3 s; s.x = size / 10; s.y = 5; s.z = 2;
        //testBlock3<<<s, 1>>>(dev_c, dev_a, dev_b);

        //testBlockThread1<<<size / 10, 10>>>(dev_c, dev_a, dev_b);

        //uint3 s1; s1.x = size / 100; s1.y = 1; s1.z = 1;
        //uint3 s2; s2.x = 10; s2.y = 10; s2.z = 1;
        //testBlockThread2<<<s1, s2>>>(dev_c, dev_a, dev_b);

        //uint3 s1; s1.x = size / 100; s1.y = 1; s1.z = 1;
        //uint3 s2; s2.x = 10; s2.y = 5; s2.z = 2;
        //testBlockThread3<<<s1, s2>>>(dev_c, dev_a, dev_b);

        //uint3 s1; s1.x = 10; s1.y = 10; s1.z = 1;
        //uint3 s2; s2.x = size / 100; s2.y = 1; s2.z = 1;
        //testBlockThread4<<<s1, s2>>>(dev_c, dev_a, dev_b);

        //uint3 s1; s1.x = 10; s1.y = 5; s1.z = 2;
        //uint3 s2; s2.x = size / 100; s2.y = 1; s2.z = 1;
        //testBlockThread5<<<s1, s2>>>(dev_c, dev_a, dev_b);

        //uint3 s1; s1.x = size / 100; s1.y = 10; s1.z = 1;
        //uint3 s2; s2.x = 5; s2.y = 2; s2.z = 1;
        //testBlockThread6<<<s1, s2>>>(dev_c, dev_a, dev_b);

        //uint3 s1; s1.x = size / 100; s1.y = 5; s1.z = 1;
        //uint3 s2; s2.x = 5; s2.y = 2; s2.z = 2;
        //testBlockThread7<<<s1, s2>>>(dev_c, dev_a, dev_b);

        //uint3 s1; s1.x = 5; s1.y = 2; s1.z = 2;
        //uint3 s2; s2.x = size / 100; s2.y = 5; s2.z = 1;
        //testBlockThread8<<<s1, s2>>>(dev_c, dev_a, dev_b);

        // The numeric values of this launch configuration are missing in the source.
        uint3 s1; s1.x = ; s1.y = ; s1.z = ;
        uint3 s2; s2.x = size / ; s2.y = ; s2.z = ;
        testBlockThread9<<<s1, s2>>>(dev_c, dev_a, dev_b);

        cudaMemcpy(c, dev_c, size * sizeof(int), cudaMemcpyDeviceToHost);

        cudaFree(dev_a);
        cudaFree(dev_b);
        cudaFree(dev_c);

        cudaGetLastError();
    }

    int main()
    {
        const int n = ;  // value missing in the source

        int *a = new int[n];
        int *b = new int[n];
        int *c = new int[n];   // reference result computed on the CPU
        int *cc = new int[n];  // result computed on the GPU

        for (int i = 0; i < n; i++)
        {
            a[i] = rand() % ;  // modulus missing in the source
            b[i] = rand() % ;  // modulus missing in the source
            c[i] = b[i] - a[i];
        }

        addWithCuda(cc, a, b, n);

        // Write both results side by side for inspection.
        FILE *fp = fopen("out.txt", "w");
        for (int i = 0; i < n; i++)
            fprintf(fp, "%d %d\n", c[i], cc[i]);
        fclose(fp);

        // Compare the CPU and GPU results element by element.
        bool flag = true;
        for (int i = 0; i < n; i++)
        {
            if (c[i] != cc[i])
            {
                flag = false;
                break;
            }
        }

        if (flag == false)
            printf("no pass");
        else
            printf("pass");

        cudaDeviceReset();

        delete[] a;
        delete[] b;
        delete[] c;
        delete[] cc;

        getchar();
        return 0;
    }

Only the 3D-3D launch is left active here; the other 14 are commented out. All 15 indexing schemes passed the test.

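A pattern is easy to spot: every formula first flattens the thread index within its block, then flattens the block index within the grid, and finally adds the thread index to the block index multiplied by the number of threads per block :)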
