Octavia 创建 Listener、Pool、Member、L7policy、L7 rule 与 Health Manager 的实现与分析

目录

文章目录

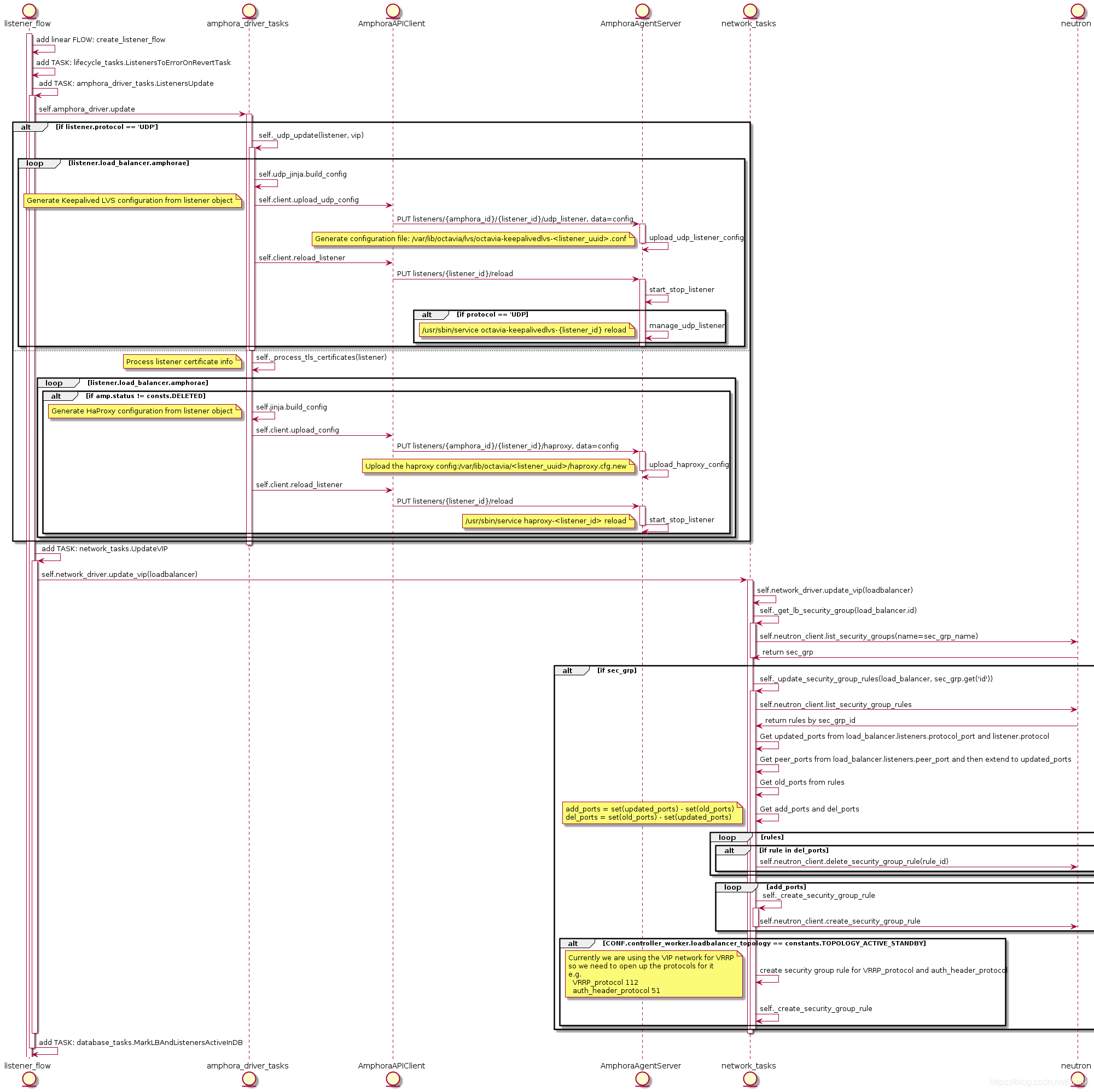

创建 Listener

我们知道只有为 loadbalancer 创建 listener 时才会启动 haproxy 服务进程。

从 UML 可知,执行指令 openstack loadbalancer listener create --protocol HTTP --protocol-port 8080 lb-1 创建 Listener 时会执行到 Task:ListenersUpdate,由 ListenersUpdate 完成了 haproxy 配置文件的 Upload 和 haproxy 服务进程的 Reload。

配置文件 /var/lib/octavia/1385d3c4-615e-4a92-aea1-c4fa51a75557/haproxy.cfg,其中 1385d3c4-615e-4a92-aea1-c4fa51a75557 为 Listener UUID:

# Configuration for loadbalancer 01197be7-98d5-440d-a846-cd70f52dc503

global

daemon

user nobody

log /dev/log local0

log /dev/log local1 notice

stats socket /var/lib/octavia/1385d3c4-615e-4a92-aea1-c4fa51a75557.sock mode 0666 level user

maxconn 1000000

defaults

log global

retries 3

option redispatch

peers 1385d3c4615e4a92aea1c4fa51a75557_peers

peer l_Ustq0qE-h-_Q1dlXLXBAiWR8U 172.16.1.7:1025

peer O08zAgUhIv9TEXhyYZf2iHdxOkA 172.16.1.3:1025

frontend 1385d3c4-615e-4a92-aea1-c4fa51a75557

option httplog

maxconn 1000000

bind 172.16.1.10:8080

mode http

timeout client 50000

因为此时的 Listener 只指定了监听的协议和端口,所以 frontend section 也设置了相应的 bind 172.16.1.10:8080 和 mode http。

服务进程:systemctl status haproxy-1385d3c4-615e-4a92-aea1-c4fa51a75557.service 其中 1385d3c4-615e-4a92-aea1-c4fa51a75557 为 Listener UUID:

# file: /usr/lib/systemd/system/haproxy-1385d3c4-615e-4a92-aea1-c4fa51a75557.service

[Unit]

Description=HAProxy Load Balancer

After=network.target syslog.service amphora-netns.service

Before=octavia-keepalived.service

Wants=syslog.service

Requires=amphora-netns.service

[Service]

# Force context as we start haproxy under "ip netns exec"

SELinuxContext=system_u:system_r:haproxy_t:s0

Environment="CONFIG=/var/lib/octavia/1385d3c4-615e-4a92-aea1-c4fa51a75557/haproxy.cfg" "USERCONFIG=/var/lib/octavia/haproxy-default-user-group.conf" "PIDFILE=/var/lib/octavia/1385d3c4-615e-4a92-aea1-c4fa51a75557/1385d3c4-615e-4a92-aea1-c4fa51a75557.pid"

ExecStartPre=/usr/sbin/haproxy -f $CONFIG -f $USERCONFIG -c -q -L O08zAgUhIv9TEXhyYZf2iHdxOkA

ExecReload=/usr/sbin/haproxy -c -f $CONFIG -f $USERCONFIG -L O08zAgUhIv9TEXhyYZf2iHdxOkA

ExecReload=/bin/kill -USR2 $MAINPID

ExecStart=/sbin/ip netns exec amphora-haproxy /usr/sbin/haproxy-systemd-wrapper -f $CONFIG -f $USERCONFIG -p $PIDFILE -L O08zAgUhIv9TEXhyYZf2iHdxOkA

KillMode=mixed

Restart=always

LimitNOFILE=2097152

[Install]

WantedBy=multi-user.target

从服务进程配置可以看出实际启动的服务为 /usr/sbin/haproxy-systemd-wrapper,它是运行在 namespace amphora-haproxy 中的,该脚本做的事情可以从日志了解到:

Nov 15 10:12:01 amphora-cd444019-ce8f-4f89-be6b-0edf76f41b77 ip[13206]: haproxy-systemd-wrapper: executing /usr/sbin/haproxy -f /var/lib/octavia/1385d3c4-615e-4a92-aea1-c4fa51a75557/haproxy.cfg -f /var/lib/octavia/haproxy-default-user-group.conf -p /var/lib/octavia/1385d3c4-615e-4a92-aea1-c4fa51a75557/1385d3c4-615e-4a92-aea1-c4fa51a75557.pid -L O08zAgUhIv9TEXhyYZf2iHdxOkA -Ds

就是调用了 /usr/sbin/haproxy 指令而已。

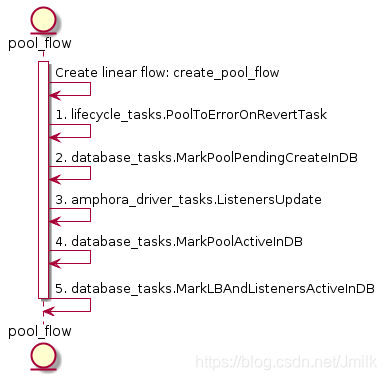

创建 Pool

我们还是主要关注 octavia-worker 的 create pool flow。

create pool flow 中最重要的还是在于 Task:ListenersUpdate,更新 haproxy 的配置文件。比如:我们执行指令 openstack loadbalancer pool create --protocol HTTP --lb-algorithm ROUND_ROBIN --listener 1385d3c4-615e-4a92-aea1-c4fa51a75557 为 listener 创建一个 default pool。

[root@control01 ~]# openstack loadbalancer listener list

+--------------------------------------+--------------------------------------+------+----------------------------------+----------+---------------+----------------+

| id | default_pool_id | name | project_id | protocol | protocol_port | admin_state_up |

+--------------------------------------+--------------------------------------+------+----------------------------------+----------+---------------+----------------+

| 1385d3c4-615e-4a92-aea1-c4fa51a75557 | 8196f752-a367-4fb4-9194-37c7eab95714 | | 9e4fe13a6d7645269dc69579c027fde4 | HTTP | 8080 | True |

+--------------------------------------+--------------------------------------+------+----------------------------------+----------+---------------+----------------+

haproxy 的配置文件会添加一个 backend section,并且根据上述 CLI 传入的参数设定了 backend mode http 和 balance roundrobin。

# Configuration for loadbalancer 01197be7-98d5-440d-a846-cd70f52dc503

global

daemon

user nobody

log /dev/log local0

log /dev/log local1 notice

stats socket /var/lib/octavia/1385d3c4-615e-4a92-aea1-c4fa51a75557.sock mode 0666 level user

maxconn 1000000

defaults

log global

retries 3

option redispatch

peers 1385d3c4615e4a92aea1c4fa51a75557_peers

peer l_Ustq0qE-h-_Q1dlXLXBAiWR8U 172.16.1.7:1025

peer O08zAgUhIv9TEXhyYZf2iHdxOkA 172.16.1.3:1025

frontend 1385d3c4-615e-4a92-aea1-c4fa51a75557

option httplog

maxconn 1000000

bind 172.16.1.10:8080

mode http

default_backend 8196f752-a367-4fb4-9194-37c7eab95714 # UUID of pool

timeout client 50000

backend 8196f752-a367-4fb4-9194-37c7eab95714

mode http

balance roundrobin

fullconn 1000000

option allbackups

timeout connect 5000

timeout server 50000

需要注意的是,创建一个 pool 时可以指定一个 listener uuid 或者 loadbalancer uuid。当指定了前者时,意味着为 listener 指定了一个 default pool,listener 只能有一个 default pool。后续再为该 listener 指定 default pool 则会触发异常;当指定了 loadbalancer uuid 时,则创建了一个 shared pool。shared pool 能被同属一个 loadbalancer 下的所有 listener 共享,常被用于辅助实现 l7policy 的功能。当 Listener 的 l7policy 动作被设定为为「转发至另一个 pool」时,此时就可以选定一个 shared pool。shared pool 可以接受同属 loadbalancer 下所有 listener 的转发请求。

执行指令创建一个 shared pool:

[root@control01 ~]# openstack loadbalancer pool create --protocol HTTP --lb-algorithm ROUND_ROBIN --loadbalancer 01197be7-98d5-440d-a846-cd70f52dc503

+---------------------+--------------------------------------+

| Field | Value |

+---------------------+--------------------------------------+

| admin_state_up | True |

| created_at | 2018-11-20T03:35:08 |

| description | |

| healthmonitor_id | |

| id | 822f78c3-ea2c-4770-bef0-e97f1ac2eba8 |

| lb_algorithm | ROUND_ROBIN |

| listeners | |

| loadbalancers | 01197be7-98d5-440d-a846-cd70f52dc503 |

| members | |

| name | |

| operating_status | OFFLINE |

| project_id | 9e4fe13a6d7645269dc69579c027fde4 |

| protocol | HTTP |

| provisioning_status | PENDING_CREATE |

| session_persistence | None |

| updated_at | None |

+---------------------+--------------------------------------+

注意,单纯的创建 shared pool 不将其绑定到 listener 的话,listener 对应的 haproxy 配置文件的内容是不会被更改的。

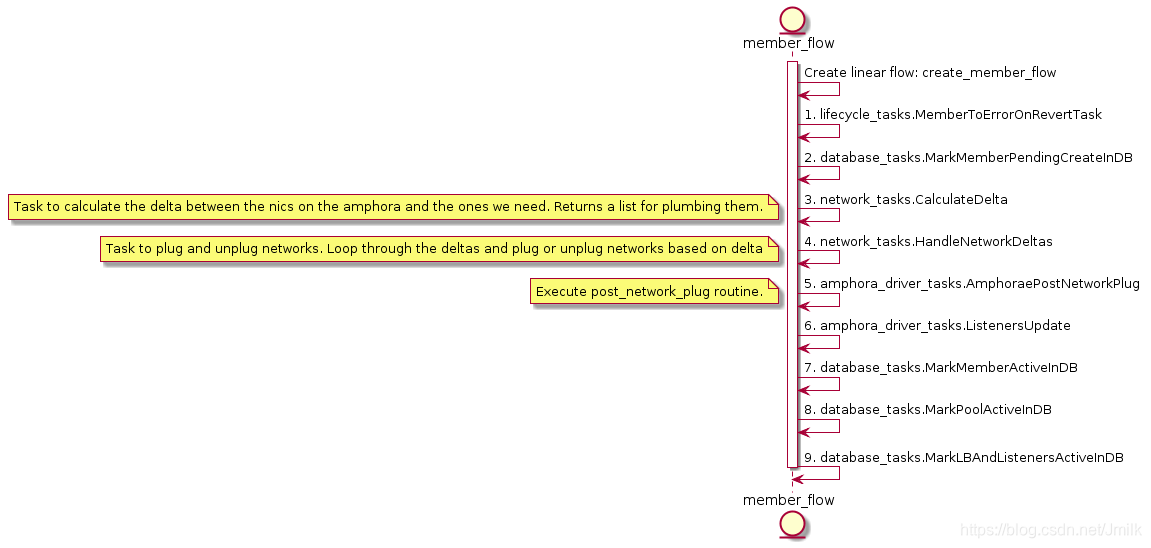

创建 Member

使用下述指令创建一个 member 到 default pool,选项指定了云主机所在的 subnet、ipaddress 以及接收数据转发的 protocol-port。

[root@control01 ~]# openstack loadbalancer member create --subnet-id 2137f3fb-00ee-41a9-b66e-06705c724a36 --address 192.168.1.14 --protocol-port 80 8196f752-a367-4fb4-9194-37c7eab95714

+---------------------+--------------------------------------+

| Field | Value |

+---------------------+--------------------------------------+

| address | 192.168.1.14 |

| admin_state_up | True |

| created_at | 2018-11-20T06:09:58 |

| id | b6e464fd-dd1e-4775-90f2-4231444a0bbe |

| name | |

| operating_status | NO_MONITOR |

| project_id | 9e4fe13a6d7645269dc69579c027fde4 |

| protocol_port | 80 |

| provisioning_status | PENDING_CREATE |

| subnet_id | 2137f3fb-00ee-41a9-b66e-06705c724a36 |

| updated_at | None |

| weight | 1 |

| monitor_port | None |

| monitor_address | None |

| backup | False |

+---------------------+--------------------------------------+

在 octavia-api 层面首先会通过配置 CONF.networking.reserved_ips 验证该 menber 的 ipaddress 是否可用,验证 member 所在的 subnet 是否存在,然后再进入 octavia-worker 的流程。

CalculateDelta

CalculateDelta 轮询 loadbalancer 下属的 amphorae 执行 Task:CalculateAmphoraDelta,用于计算 Amphora 上的 NICs 数量与需要的 NICs 之间的 “差值”。

# file: /opt/rocky/octavia/octavia/controller/worker/tasks/network_tasks.py

class CalculateAmphoraDelta(BaseNetworkTask):

default_provides = constants.DELTA

def execute(self, loadbalancer, amphora):

LOG.debug("Calculating network delta for amphora id: %s", amphora.id)

# Figure out what networks we want

# seed with lb network(s)

vrrp_port = self.network_driver.get_port(amphora.vrrp_port_id)

desired_network_ids = {vrrp_port.network_id}.union(

CONF.controller_worker.amp_boot_network_list)

for pool in loadbalancer.pools:

member_networks = [

self.network_driver.get_subnet(member.subnet_id).network_id

for member in pool.members

if member.subnet_id

]

desired_network_ids.update(member_networks)

nics = self.network_driver.get_plugged_networks(amphora.compute_id)

# assume we don't have two nics in the same network

actual_network_nics = dict((nic.network_id, nic) for nic in nics)

del_ids = set(actual_network_nics) - desired_network_ids

delete_nics = list(

actual_network_nics[net_id] for net_id in del_ids)

add_ids = desired_network_ids - set(actual_network_nics)

add_nics = list(n_data_models.Interface(

network_id=net_id) for net_id in add_ids)

delta = n_data_models.Delta(

amphora_id=amphora.id, compute_id=amphora.compute_id,

add_nics=add_nics, delete_nics=delete_nics)

return delta

简单来说,首先获取所需要的 networks:desired_network_ids 和已经存在的 networks:actual_network_nics。然后计算出待删除的 networks:delete_nics 和待添加的 networks:add_nics,并最终 returns 一个 Delta data models 到 Task:HandleNetworkDeltas 执行实际的 Amphora NICs 添加与删除。

HandleNetworkDeltas

Task:HandleNetworkDelta —— Plug or unplug networks based on delta。

# file: /opt/rocky/octavia/octavia/controller/worker/tasks/network_tasks.py

class HandleNetworkDelta(BaseNetworkTask):

"""Task to plug and unplug networks

Plug or unplug networks based on delta

"""

def execute(self, amphora, delta):

"""Handle network plugging based off deltas."""

added_ports = {}

added_ports[amphora.id] = []

for nic in delta.add_nics:

interface = self.network_driver.plug_network(delta.compute_id,

nic.network_id)

port = self.network_driver.get_port(interface.port_id)

port.network = self.network_driver.get_network(port.network_id)

for fixed_ip in port.fixed_ips:

fixed_ip.subnet = self.network_driver.get_subnet(

fixed_ip.subnet_id)

added_ports[amphora.id].append(port)

for nic in delta.delete_nics:

try:

self.network_driver.unplug_network(delta.compute_id,

nic.network_id)

except base.NetworkNotFound:

LOG.debug("Network %d not found ", nic.network_id)

except Exception:

LOG.exception("Unable to unplug network")

return added_ports

根据 Task:CalculateAmphoraDelta returns 的 Delta data models 来进行 amphorae interfaces 的挂载和卸载。

# file: /opt/rocky/octavia/octavia/network/drivers/neutron/allowed_address_pairs.py

def plug_network(self, compute_id, network_id, ip_address=None):

try:

interface = self.nova_client.servers.interface_attach(

server=compute_id, net_id=network_id, fixed_ip=ip_address,

port_id=None)

except nova_client_exceptions.NotFound as e:

if 'Instance' in str(e):

raise base.AmphoraNotFound(str(e))

elif 'Network' in str(e):

raise base.NetworkNotFound(str(e))

else:

raise base.PlugNetworkException(str(e))

except Exception:

message = _('Error plugging amphora (compute_id: {compute_id}) '

'into network {network_id}.').format(

compute_id=compute_id,

network_id=network_id)

LOG.exception(message)

raise base.PlugNetworkException(message)

return self._nova_interface_to_octavia_interface(compute_id, interface)

def unplug_network(self, compute_id, network_id, ip_address=None):

interfaces = self.get_plugged_networks(compute_id)

if not interfaces:

msg = ('Amphora with compute id {compute_id} does not have any '

'plugged networks').format(compute_id=compute_id)

raise base.NetworkNotFound(msg)

unpluggers = self._get_interfaces_to_unplug(interfaces, network_id,

ip_address=ip_address)

for index, unplugger in enumerate(unpluggers):

try:

self.nova_client.servers.interface_detach(

server=compute_id, port_id=unplugger.port_id)

except Exception:

LOG.warning('Error unplugging port {port_id} from amphora '

'with compute ID {compute_id}. '

'Skipping.'.format(port_id=unplugger.port_id,

compute_id=compute_id))

最后会将 added_port return 到后续的 TASK:AmphoraePostNetworkPlug 使用。

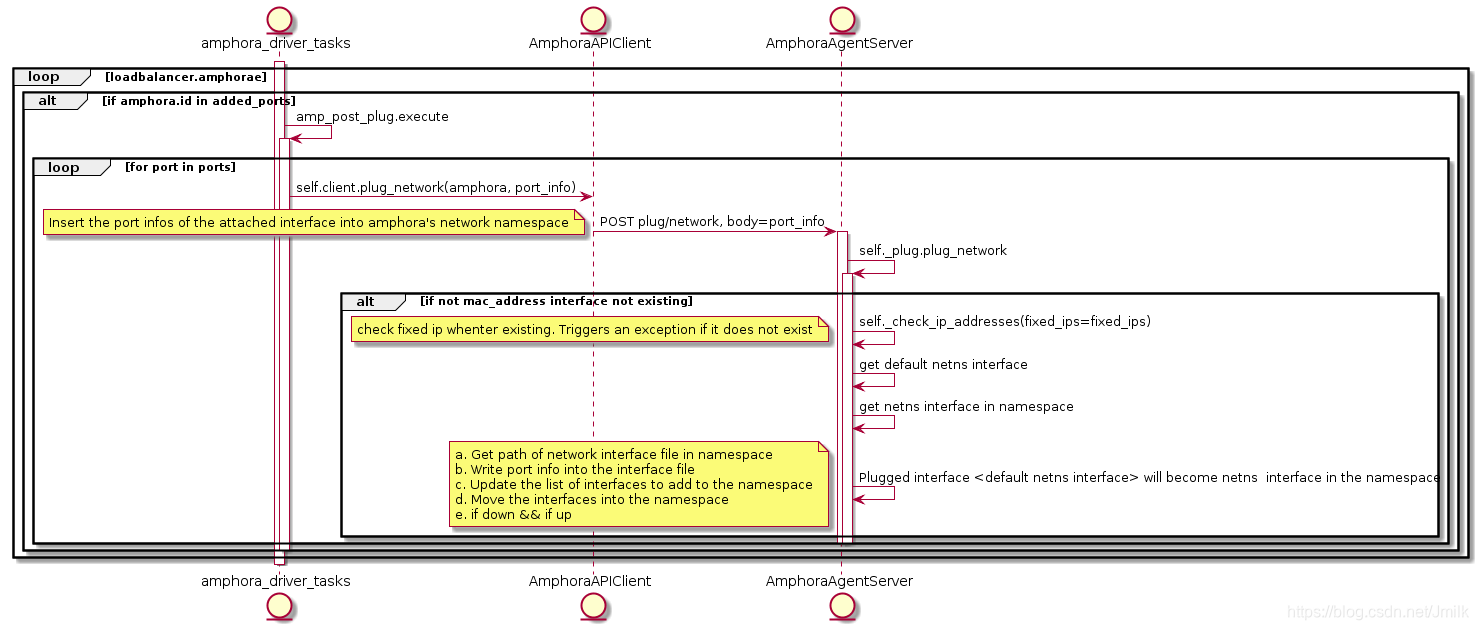

AmphoraePostNetworkPlug

Task:AmphoraePostNetworkPlug 的主要工作是将通过 novaclient 挂载到 amphora 上的 interface 注入到 namespace 中。

回顾一下 Amphora 的网络拓扑:

- Amphora 初始状态下只有一个用于与 lb-mgmt-net 通信的端口:

root@amphora-cd444019-ce8f-4f89-be6b-0edf76f41b77:~# ifconfig

ens3 Link encap:Ethernet HWaddr fa:16:3e:b6:8f:a5

inet addr:192.168.0.9 Bcast:192.168.0.255 Mask:255.255.255.0

inet6 addr: fe80::f816:3eff:feb6:8fa5/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1450 Metric:1

RX packets:19462 errors:14099 dropped:0 overruns:0 frame:14099

TX packets:70317 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:1350041 (1.3 MB) TX bytes:15533572 (15.5 MB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

如上,inet addr:192.168.0.9 是有 lb-mgmt-net DHCP 分配的。

- 在 Amphora 被分配到 loadbalancer 之后会添加一个 vrrp_port 类型的端口,vrrp_port 充当着 Keepalived 虚拟路由的一张网卡,被注入到 namespace 中,一般是 eth1。

root@amphora-cd444019-ce8f-4f89-be6b-0edf76f41b77:~# ip netns exec amphora-haproxy ifconfig

eth1 Link encap:Ethernet HWaddr fa:16:3e:f4:69:4b

inet addr:172.16.1.3 Bcast:172.16.1.255 Mask:255.255.255.0

inet6 addr: fe80::f816:3eff:fef4:694b/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1450 Metric:1

RX packets:12705 errors:0 dropped:0 overruns:0 frame:0

TX packets:613211 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:762300 (762.3 KB) TX bytes:36792968 (36.7 MB)

eth1:0 Link encap:Ethernet HWaddr fa:16:3e:f4:69:4b

inet addr:172.16.1.10 Bcast:172.16.1.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1450 Metric:1

VRRP IP: 172.16.1.3 和 VIP: 172.16.1.10 均由 lb-vip-network 的 DHCP 分配,分别对应 lb-vip-network 上的 ports octavia-lb-vrrp-<amphora_uuid> 与 octavia-lb-<loadbalancer_uuid>。interface eth1 的配置为:

root@amphora-cd444019-ce8f-4f89-be6b-0edf76f41b77:~# ip netns exec amphora-haproxy cat /etc/network/interfaces.d/eth1

auto eth1

iface eth1 inet dhcp

root@amphora-cd444019-ce8f-4f89-be6b-0edf76f41b77:~# ip netns exec amphora-haproxy cat /etc/network/interfaces.d/eth1.cfg

# Generated by Octavia agent

auto eth1 eth1:0

iface eth1 inet static

address 172.16.1.3

broadcast 172.16.1.255

netmask 255.255.255.0

gateway 172.16.1.1

mtu 1450

iface eth1:0 inet static

address 172.16.1.10

broadcast 172.16.1.255

netmask 255.255.255.0

# Add a source routing table to allow members to access the VIP

post-up /sbin/ip route add 172.16.1.0/24 dev eth1 src 172.16.1.10 scope link table 1

post-up /sbin/ip route add default via 172.16.1.1 dev eth1 onlink table 1

post-down /sbin/ip route del default via 172.16.1.1 dev eth1 onlink table 1

post-down /sbin/ip route del 172.16.1.0/24 dev eth1 src 172.16.1.10 scope link table 1

post-up /sbin/ip rule add from 172.16.1.10/32 table 1 priority 100

post-down /sbin/ip rule del from 172.16.1.10/32 table 1 priority 100

post-up /sbin/iptables -t nat -A POSTROUTING -p udp -o eth1 -j MASQUERADE

post-down /sbin/iptables -t nat -D POSTROUTING -p udp -o eth1 -j MASQUERADE

Task:AmphoraePostNetworkPlug 主要就是为了生成这些配置文件的内容。

- 在为 loadbalancer 创建了 member 之后,会根据 menber 所在的 subnet 再为 amphora 添加新的 interfaces。当然了,如果说 member 与 vrrp_port 来自同一个 subnet,则不再为 amphora 添加新的 inferface 了。

root@amphora-cd444019-ce8f-4f89-be6b-0edf76f41b77:~# ip netns exec amphora-haproxy ifconfig

eth1 Link encap:Ethernet HWaddr fa:16:3e:f4:69:4b

inet addr:172.16.1.3 Bcast:172.16.1.255 Mask:255.255.255.0

inet6 addr: fe80::f816:3eff:fef4:694b/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1450 Metric:1

RX packets:12705 errors:0 dropped:0 overruns:0 frame:0

TX packets:613211 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:762300 (762.3 KB) TX bytes:36792968 (36.7 MB)

eth1:0 Link encap:Ethernet HWaddr fa:16:3e:f4:69:4b

inet addr:172.16.1.10 Bcast:172.16.1.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1450 Metric:1

eth2 Link encap:Ethernet HWaddr fa:16:3e:18:23:7a

inet addr:192.168.1.3 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::f816:3eff:fe18:237a/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1450 Metric:1

RX packets:8 errors:2 dropped:0 overruns:0 frame:2

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:2156 (2.1 KB) TX bytes:808 (808.0 B)

inet addr:192.168.1.3 由 member 所在的 subnet DHCP 分配,配置文件如下:

# Generated by Octavia agent

auto eth2

iface eth2 inet static

address 192.168.1.3

broadcast 192.168.1.255

netmask 255.255.255.0

mtu 1450

post-up /sbin/iptables -t nat -A POSTROUTING -p udp -o eth2 -j MASQUERADE

post-down /sbin/iptables -t nat -D POSTROUTING -p udp -o eth2 -j MASQUERADE

ListenersUpdate

显然,最后 haproxy 配置的更改还是由 Task:ListenersUpdate 完成。

# Configuration for loadbalancer 01197be7-98d5-440d-a846-cd70f52dc503

global

daemon

user nobody

log /dev/log local0

log /dev/log local1 notice

stats socket /var/lib/octavia/1385d3c4-615e-4a92-aea1-c4fa51a75557.sock mode 0666 level user

maxconn 1000000

defaults

log global

retries 3

option redispatch

peers 1385d3c4615e4a92aea1c4fa51a75557_peers

peer l_Ustq0qE-h-_Q1dlXLXBAiWR8U 172.16.1.7:1025

peer O08zAgUhIv9TEXhyYZf2iHdxOkA 172.16.1.3:1025

frontend 1385d3c4-615e-4a92-aea1-c4fa51a75557

option httplog

maxconn 1000000

bind 172.16.1.10:8080

mode http

default_backend 8196f752-a367-4fb4-9194-37c7eab95714

timeout client 50000

backend 8196f752-a367-4fb4-9194-37c7eab95714

mode http

balance roundrobin

fullconn 1000000

option allbackups

timeout connect 5000

timeout server 50000

server b6e464fd-dd1e-4775-90f2-4231444a0bbe 192.168.1.14:80 weight 1

实际上,添加 member 就是在 backend(default pool)中加入了 server <member_id> 192.168.1.14:80 weight 1 项目,表示该云主机作为了 default pool 的一部分。

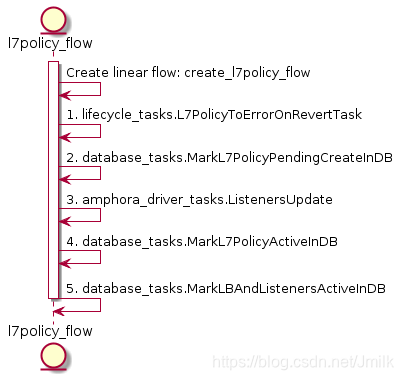

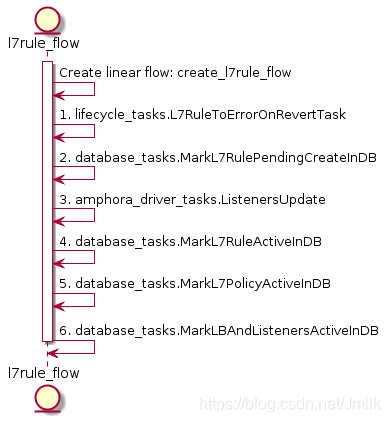

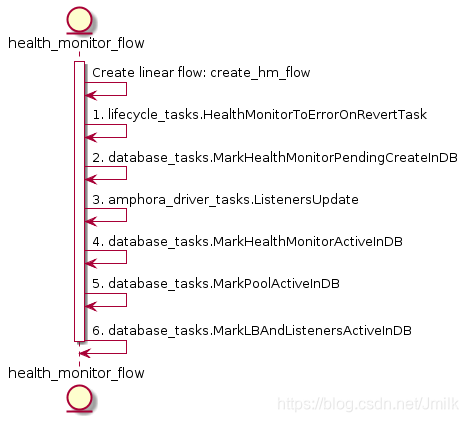

创建 L7policy & L7rule & Health Monitor

L7policy 对象在 Octavia 中的语义是用于描述转发的动作类型(e.g. 转发至 pool?转发至 URL?拒绝转发?)以及 L7rule 的容器,下属于 Listener。

L7Rule 对象在 Octavia 中的语义是数据转发的匹配域,描述了转发的路由关系,下属于 L7policy。

Health Monitor 对象用于对 Pool 中 Member 的健康状态进行监控,本质就是一条数据库记录,描述了健康检查的的规则,下属于 Pool。

从上述三个 UML 图可以感受到,Create L7policy、L7rule、Health-Monitor 和 Pool 实际上非常类似,关键都是在于 TASK:ListenersUpdate 对 haproxy 配置文件内容的更新。所以,我们主要通过一些例子来观察 haproxy 配置文件的更改规律即可。

- EXAMPLE 1

# 创建 pool 8196f752-a367-4fb4-9194-37c7eab95714 的 healthmonitor

[root@control01 ~]# openstack loadbalancer healthmonitor create --name healthmonitor1 --type PING --delay 5 --timeout 10 --max-retries 3 8196f752-a367-4fb4-9194-37c7eab95714

# pool 8196f752-a367-4fb4-9194-37c7eab95714 下属有一个 member

[root@control01 ~]# openstack loadbalancer member list 8196f752-a367-4fb4-9194-37c7eab95714

+--------------------------------------+------+----------------------------------+---------------------+--------------+---------------+------------------+--------+

| id | name | project_id | provisioning_status | address | protocol_port | operating_status | weight |

+--------------------------------------+------+----------------------------------+---------------------+--------------+---------------+------------------+--------+

| b6e464fd-dd1e-4775-90f2-4231444a0bbe | | 9e4fe13a6d7645269dc69579c027fde4 | ACTIVE | 192.168.1.14 | 80 | ONLINE | 1 |

+--------------------------------------+------+----------------------------------+---------------------+--------------+---------------+------------------+--------+

# listener 的 1385d3c4-615e-4a92-aea1-c4fa51a75557 l7policy 就是转发至 default pool

[root@control01 ~]# openstack loadbalancer l7policy create --name l7p1 --action REDIRECT_TO_POOL --redirect-pool 8196f752-a367-4fb4-9194-37c7eab95714 1385d3c4-615e-4a92-aea1-c4fa51a75557

# 转发的匹配与就是 hostname 以 server 开头的 instances

[root@control01 ~]# openstack loadbalancer l7rule create --type HOST_NAME --compare-type STARTS_WITH --value "server" 87593985-e02f-4880-b80f-22a4095c05a7

haproxy 配置文件:

# Configuration for loadbalancer 01197be7-98d5-440d-a846-cd70f52dc503

global

daemon

user nobody

log /dev/log local0

log /dev/log local1 notice

stats socket /var/lib/octavia/1385d3c4-615e-4a92-aea1-c4fa51a75557.sock mode 0666 level user

maxconn 1000000

external-check

defaults

log global

retries 3

option redispatch

peers 1385d3c4615e4a92aea1c4fa51a75557_peers

peer l_Ustq0qE-h-_Q1dlXLXBAiWR8U 172.16.1.7:1025

peer O08zAgUhIv9TEXhyYZf2iHdxOkA 172.16.1.3:1025

frontend 1385d3c4-615e-4a92-aea1-c4fa51a75557

option httplog

maxconn 1000000

# 前端监听 http://172.16.1.10:8080

bind 172.16.1.10:8080

mode http

# ACL 转发规则

acl 8d9b8b1e-83d7-44ca-a5b4-0103d5f90cb9 req.hdr(host) -i -m beg server

# if ACL 8d9b8b1e-83d7-44ca-a5b4-0103d5f90cb9 满足,则转发至 backend 8196f752-a367-4fb4-9194-37c7eab95714

use_backend 8196f752-a367-4fb4-9194-37c7eab95714 if 8d9b8b1e-83d7-44ca-a5b4-0103d5f90cb9

# 如果没有匹配到任何 ACL 规则,则转发至默认 backend 8196f752-a367-4fb4-9194-37c7eab95714

default_backend 8196f752-a367-4fb4-9194-37c7eab95714

timeout client 50000

backend 8196f752-a367-4fb4-9194-37c7eab95714

# 后端监听协议为 http

mode http

# 负载均衡算法为 RR 轮询

balance roundrobin

timeout check 10s

option external-check

# 使用脚本 ping-wrapper.sh 对 server 进行健康检查

external-check command /var/lib/octavia/ping-wrapper.sh

fullconn 1000000

option allbackups

timeout connect 5000

timeout server 50000

# 后端真实服务器(real server),服务端口为 80,监控检查规则为 inter 5s fall 3 rise 3

server b6e464fd-dd1e-4775-90f2-4231444a0bbe 192.168.1.14:80 weight 1 check inter 5s fall 3 rise 3

健康检查脚本:

#!/bin/bash

if [[ $HAPROXY_SERVER_ADDR =~ ":" ]]; then

/bin/ping6 -q -n -w 1 -c 1 $HAPROXY_SERVER_ADDR > /dev/null 2>&1

else

/bin/ping -q -n -w 1 -c 1 $HAPROXY_SERVER_ADDR > /dev/null 2>&1

fi

脚本的内容,正如我们设定的监控方式为 PING。

- EXAMPLE 2

[root@control01 ~]# openstack loadbalancer healthmonitor create --name healthmonitor1 --type PING --delay 5 --timeout 10 --max-retries 3 822f78c3-ea2c-4770-bef0-e97f1ac2eba8

[root@control01 ~]# openstack loadbalancer l7policy create --name l7p1 --action REDIRECT_TO_POOL --redirect-pool 822f78c3-ea2c-4770-bef0-e97f1ac2eba8 1385d3c4-615e-4a92-aea1-c4fa51a75557

[root@control01 ~]# openstack loadbalancer l7rule create --type HOST_NAME --compare-type STARTS_WITH --value "server" fb90a3b5-c97c-4d99-973e-118840a7a236

haproxy 的配置文件内容变更为:

# Configuration for loadbalancer 01197be7-98d5-440d-a846-cd70f52dc503

global

daemon

user nobody

log /dev/log local0

log /dev/log local1 notice

stats socket /var/lib/octavia/1385d3c4-615e-4a92-aea1-c4fa51a75557.sock mode 0666 level user

maxconn 1000000

external-check

defaults

log global

retries 3

option redispatch

peers 1385d3c4615e4a92aea1c4fa51a75557_peers

peer l_Ustq0qE-h-_Q1dlXLXBAiWR8U 172.16.1.7:1025

peer O08zAgUhIv9TEXhyYZf2iHdxOkA 172.16.1.3:1025

frontend 1385d3c4-615e-4a92-aea1-c4fa51a75557

option httplog

maxconn 1000000

bind 172.16.1.10:8080

mode http

acl 8d9b8b1e-83d7-44ca-a5b4-0103d5f90cb9 req.hdr(host) -i -m beg server

use_backend 8196f752-a367-4fb4-9194-37c7eab95714 if 8d9b8b1e-83d7-44ca-a5b4-0103d5f90cb9

acl c76f36bc-92c0-4f48-8d57-a13e3b1f09e1 req.hdr(host) -i -m beg server

use_backend 822f78c3-ea2c-4770-bef0-e97f1ac2eba8 if c76f36bc-92c0-4f48-8d57-a13e3b1f09e1

default_backend 8196f752-a367-4fb4-9194-37c7eab95714

timeout client 50000

backend 8196f752-a367-4fb4-9194-37c7eab95714

mode http

balance roundrobin

timeout check 10s

option external-check

external-check command /var/lib/octavia/ping-wrapper.sh

fullconn 1000000

option allbackups

timeout connect 5000

timeout server 50000

server b6e464fd-dd1e-4775-90f2-4231444a0bbe 192.168.1.14:80 weight 1 check inter 5s fall 3 rise 3

backend 822f78c3-ea2c-4770-bef0-e97f1ac2eba8

mode http

balance roundrobin

timeout check 10s

option external-check

external-check command /var/lib/octavia/ping-wrapper.sh

fullconn 1000000

option allbackups

timeout connect 5000

timeout server 50000

server 7da6f176-36c6-479a-9d86-c892ecca6ae5 192.168.1.6:80 weight 1 check inter 5s fall 3 rise 3

可见,在为 listener 添加了 shared pool 之后,会在增加一个 backend section 对应 shared pool 822f78c3-ea2c-4770-bef0-e97f1ac2eba8。

Octavia 创建 Listener、Pool、Member、L7policy、L7 rule 与 Health Manager 的实现与分析的更多相关文章

- Octavia 创建 loadbalancer 的实现与分析

目录 文章目录 目录 从 Octavia API 看起 Octavia Controller Worker database_tasks.MapLoadbalancerToAmphora comput ...

- 添加 Pool Member - 每天5分钟玩转 OpenStack(123)

我们已经有了 Load Balance Pool "web servers"和 VIP,接下来需要往 Pool 里添加 member 并学习如何使用 cloud image. 先准 ...

- MYSQ创建联合索引,字段的先后顺序,对查询的影响分析

MYSQ创建联合索引,字段的先后顺序,对查询的影响分析 前言 最左匹配原则 为什么会有最左前缀呢? 联合索引的存储结构 联合索引字段的先后顺序 b+树可以存储的数据条数 总结 参考 MYSQ创建联合索 ...

- Android 创建Listener监听器形式选择:匿名内部类?外部类?

说到监听器,第一感觉就是直接写作匿名内部类来用,可是依据单一职责原则,好像又不应该作为匿名内部类来写(由于监听中有时要写较多的逻辑代码),所曾经段时间把有共性的listener单独创建放在glut.l ...

- 线程池内的异步线程创建UI控件,造成UI线程卡死无响应的问题分析

winform应用在使用一段时间后,切换到其他系统或者打开word.excel文档,再切换回winform应用时,系统有时出现不响应的现象.有时在锁屏后恢复桌面及应用时也发生此问题. 经微软支持确认, ...

- 性能学习随笔(1)--负载均衡之f5负载均衡

负载均衡设计涉及软件负载和硬件负载,下文转自CSDN中一篇文章涉及f5硬负载知识 ----转载:https://blog.csdn.net/tvk872/article/details/8063489 ...

- 关于F5负载均衡你认识多少?

关于F5负载均衡你认识多少? 2018年06月09日 18:01:09 tvk872 阅读数:14008 网络负载均衡(load balance),就是将负载(工作任务)进行平衡.分摊到多个操作单 ...

- F5负载均衡综合实例详解(转)

转载自:https://blog.csdn.net/weixin_43089453/article/details/87937994 女程序员就不脱发了吗来源于:<网络运维与管理>201 ...

- JMeter性能测试的基础知识和个人理解

JMeter性能测试的基础知识和个人理解 1. JMeter的简介 JMeter是Apache组织开发的开源项目,设计之初是用于做性能测试的,同时它在实现对各种接口的调用方面做的比较成熟,因此,常 ...

随机推荐

- 为Xcode配置Git和Github

Xcode.Git和Github是三个伟大的编程工具.本文记录一下如何在Xcode中使用Git作为源代码控制工具,以及如何将本地的Git仓库和远程Github上的仓库集成起来. 1. 如何为新建的Xc ...

- 企业级开发账号In House ipa发布流程

这两天需要发布一个ipa放到网上供其他人安装,需要用到企业级开发者账号.在网上查了一下资料,感觉没有一个比较完善的流程,于是决定把整个流程写下来,供大家参考. 首先详细说明一下我们的目标,我们需要发布 ...

- 重置Brocade光纤交换机的管理IP地址

1.使用串口登录光纤交换机 使用RS/232 (9针)串口连接线将笔记本连至交换机的串口. 输入以下参数: Bits per second (每秒位数): 9600 Data Bits (数据位): ...

- kubernets全套笔记

Master/node Master核心组件: API server,Scheduler,Controller-Manager etcd(存储组件) Node核心组件: kubelet(核心组件) ...

- Python面向对象的三大特性之异常处理

一.错误与异常 程序中难免会出现错误,而错误分为两种 1.语法错误:(这种错误,根本过不了python解释器的语法检测,必须在程序执行前就改正) 2.逻辑错误:(逻辑错误),比如用户输入的不合适等一系 ...

- Excel去重操作

工作中经常遇到要对 Excel 中的某一列进行去重操作,得到不重复的结果,总结如下: 选中要操作的列(鼠标点击指定列的字母,如T列) 点击"数据"中"排序和筛选" ...

- 怎样将shp文件的坐标点导出来?

方法一: 1.将线矢量转化为点矢量 具体操作步骤如下: (1)arctoolbox\Data Management tools\Features\双击Feature Vertices to point ...

- windows 环境如何启动 redis ?

1.cd 到 redis 的安装目录 C:\Users\dell>cd C:\redis 2.执行 redis 启动命令 C:\redis>redis-server.exe redis.w ...

- JSP如何实现文件断点上传和断点下载?

核心原理: 该项目核心就是文件分块上传.前后端要高度配合,需要双方约定好一些数据,才能完成大文件分块,我们在项目中要重点解决的以下问题. * 如何分片: * 如何合成一个文件: * 中断了从哪个分片开 ...

- #381 Div2 Problem C Alyona and mex (思维 && 构造)

题意 : 题目的要求是构造出一个长度为 n 的数列, 构造条件是在接下来给出的 m 个子区间中, 要求每一个子区间的mex值最大, 然后在这 m 个子区间产生的mex值中取最小的输出, 并且输出构造出 ...