Spark Translation (Part 3)

Structured Streaming Programming Guide

Overview

Quick Example

from pyspark.sql import SparkSession

from pyspark.sql.functions import explode

from pyspark.sql.functions import split

spark = SparkSession \

.builder \

.appName("StructuredNetworkWordCount") \

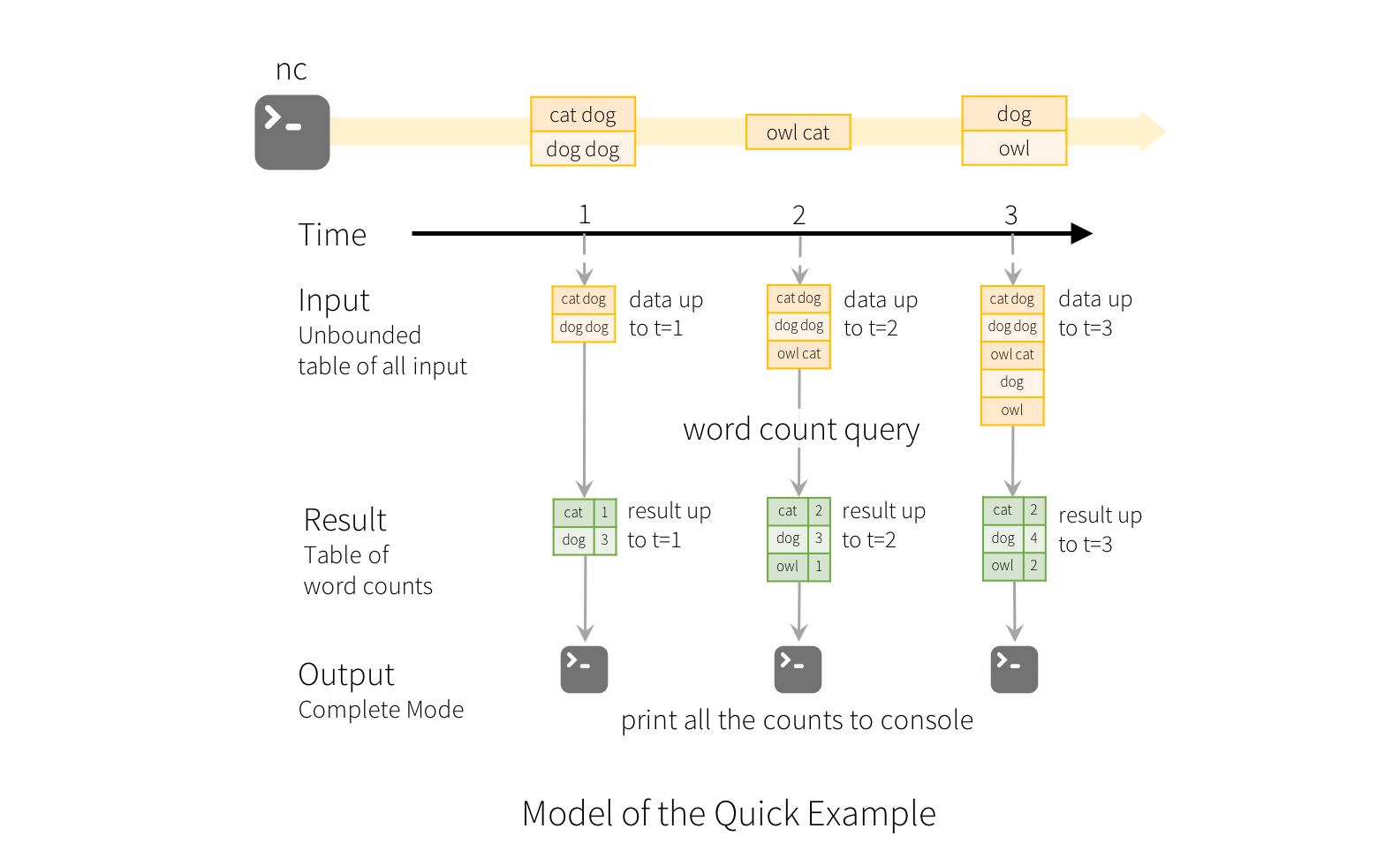

.getOrCreate()接下来,让我们创建一个流式DataFrame,它表示从侦听localhost:9999的服务器接收的文本数据,并转换DataFrame以计算字数。

# Create DataFrame representing the stream of input lines from connection to localhost:9999

lines = spark \

.readStream \

.format("socket") \

.option("host", "localhost") \

.option("port", 9999) \

.load()

# Split the lines into words

words = lines.select(

explode(

split(lines.value, " ")

).alias("word")

)

# Generate running word count

wordCounts = words.groupBy("word").count()

# Start running the query that prints the running counts to the console

query = wordCounts \

.writeStream \

.outputMode("complete") \

.format("console") \

.start()

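query.awaitTermination()

To run the example, first start Netcat as a data server:

$ nc -lk 9999

Then, in a different terminal, you can start the example with: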

$ ./bin/spark-submit examples/src/main/python/sql/streaming/structured_network_wordcount.py localhost 9999


Programming Model(编程模型)

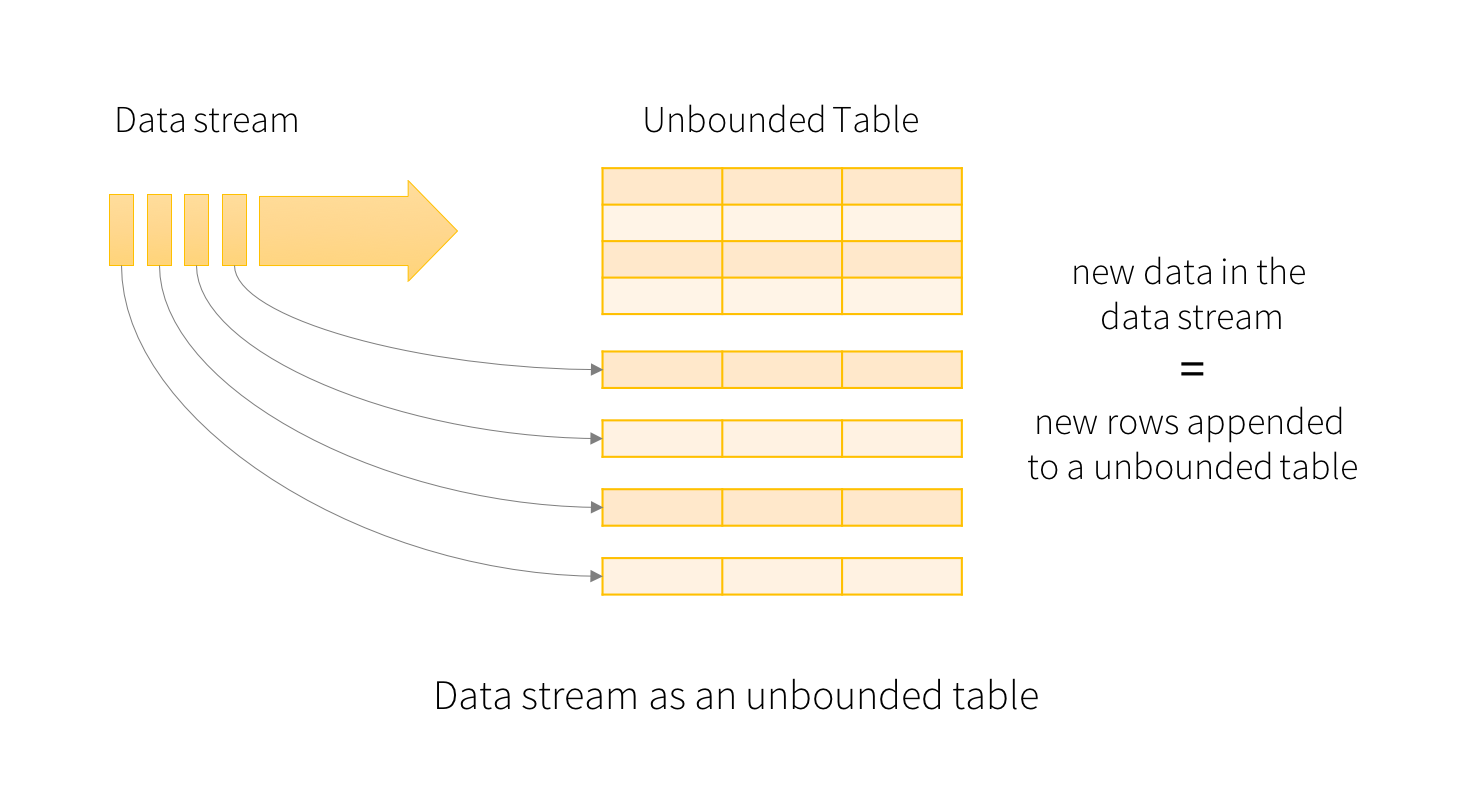

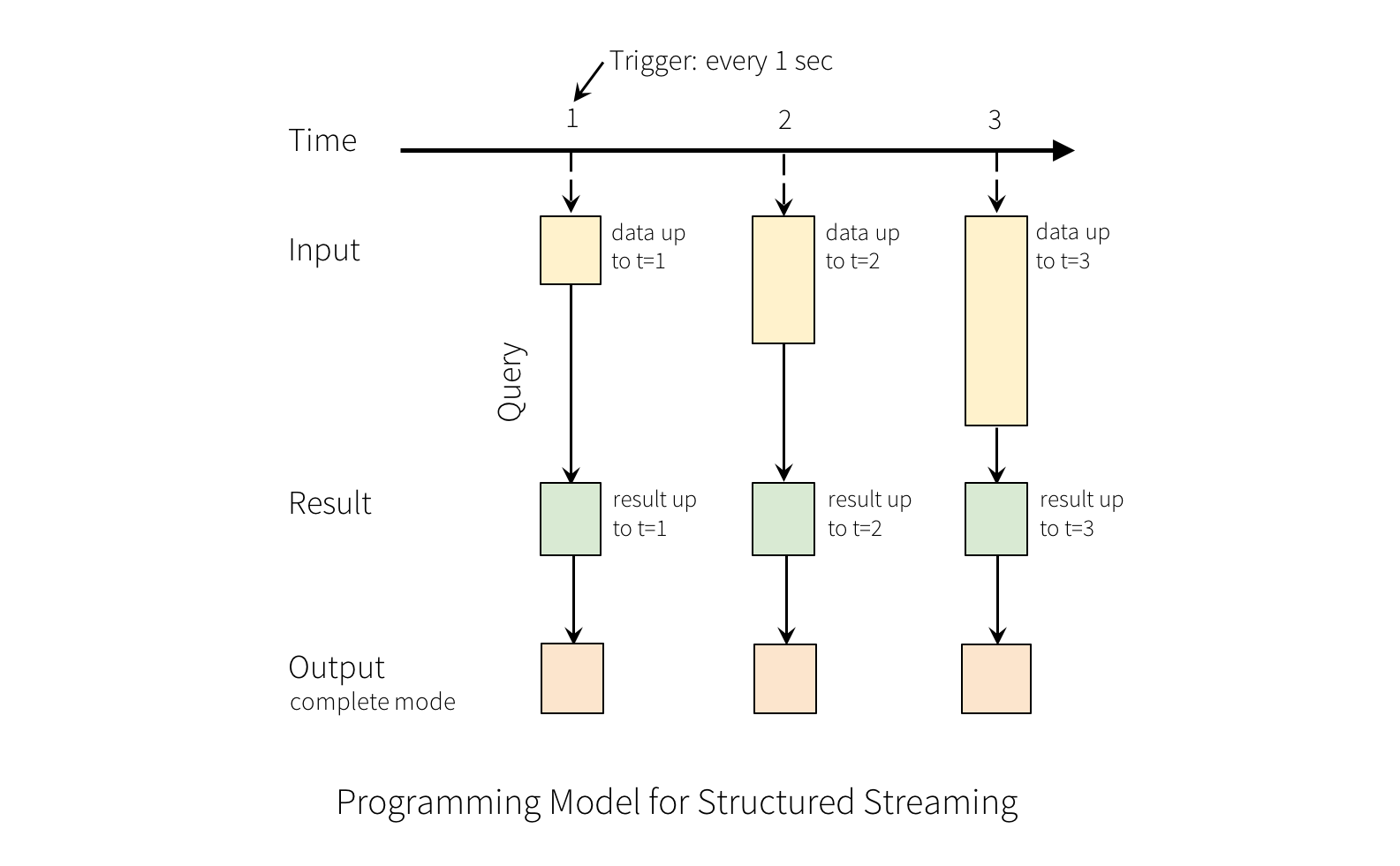

Basic Concepts(基本概念)

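Note that Structured Streaming does not materialize the entire table. It reads the latest available data from the streaming data source, processes it incrementally to update the result, and then discards the source data. It only keeps around the minimal intermediate state data required to update the result (e.g., the intermediate counts in the earlier example).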
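The incremental model described above can be imitated in a few lines of plain Python. The sketch below is a simplified model of the idea and not Spark's engine. `run_word_count` is a hypothetical name, and the two input batches are made-up sample data in the spirit of the netcat example. Only the running counts survive from one batch to the next.

```python
from collections import Counter


def run_word_count(batches):
    """Yield the full running word count after each batch of input lines.

    Only `state` (the running counts) is carried from one batch to the
    next; the generator does not keep the lines after counting them.
    """
    state = Counter()  # the minimal intermediate state
    for lines in batches:
        for line in lines:
            state.update(line.split(" "))  # same split as split(lines.value, " ")
        yield dict(state)  # "complete" mode: emit the whole result table


results = list(run_word_count([["apache spark"], ["apache hadoop"]]))
print(results[-1])  # {'apache': 2, 'spark': 1, 'hadoop': 1}
```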

Handling Event-time and Late Data

Fault Tolerance Semantics

API using Datasets and DataFrames

Creating streaming DataFrames and streaming Datasets

Input Sources

| Source | Options | Fault-tolerant | Notes |
|---|---|---|---|
| File source | path: path to the input directory, common to all file formats. maxFilesPerTrigger: maximum number of new files to be considered in every trigger (default: no max). latestFirst: whether to process the latest new files first, useful when there is a large backlog of files (default: false). fileNameOnly: whether to check new files based on only the filename instead of on the full path (default: false). With this set to `true`, the following files would be considered as the same file, because their filenames, "dataset.txt", are the same: "file:///dataset.txt", "s3://a/dataset.txt", "s3n://a/b/dataset.txt", "s3a://a/b/c/dataset.txt". For file-format-specific options, see the related methods in … In addition, there are session configurations that affect certain file formats; see the SQL Programming Guide for more details (e.g., for "parquet", see the Parquet configuration section). | Yes | Supports glob paths, but does not support multiple comma-separated paths/globs. |
| Socket Source | host: host to connect to, must be specified. port: port to connect to, must be specified. | No | |
| Rate Source | rowsPerSecond (e.g. 100, default: 1): how many rows should be generated per second. The source will try its best to reach … | Yes | |
| Kafka Source | See the Kafka Integration Guide. | Yes | |
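The file source options above interact in ways that are easier to see in code. The following plain-Python sketch is our own simplified model and not Spark's implementation. `select_new_files` is a hypothetical helper, modification times are plain integers, and "latest" is assumed to mean "most recently modified". Spark's real bookkeeping of already-processed files (its metadata log and age-based expiry) is not modeled. The sketch shows how `fileNameOnly` collapses the four example paths into one file, and how `maxFilesPerTrigger` and `latestFirst` limit and order the files read in each trigger.

```python
import posixpath


def select_new_files(candidates, seen, max_files_per_trigger=None,
                     latest_first=False, file_name_only=False):
    """Pick the files one trigger would read, under a simplified model.

    candidates: (path, modification_time) pairs currently in the directory.
    seen: keys of files already processed; updated in place.
    """
    def key(path):
        # fileNameOnly: identify a file by its last path component only
        return posixpath.basename(path) if file_name_only else path

    fresh, keys = [], set()
    for path, mtime in candidates:
        k = key(path)
        if k not in seen and k not in keys:
            keys.add(k)
            fresh.append((path, mtime))
    # latestFirst: newest files first; otherwise oldest first
    fresh.sort(key=lambda item: item[1], reverse=latest_first)
    if max_files_per_trigger is not None:
        fresh = fresh[:max_files_per_trigger]  # maxFilesPerTrigger cap
    seen.update(key(path) for path, _ in fresh)
    return [path for path, _ in fresh]


paths = ["file:///dataset.txt", "s3://a/dataset.txt",
         "s3n://a/b/dataset.txt", "s3a://a/b/c/dataset.txt"]
print(select_new_files([(p, i) for i, p in enumerate(paths)], set(),
                       file_name_only=True))  # ['file:///dataset.txt']
```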

Here are some examples.

spark = SparkSession. ...

# Read text from socket

socketDF = spark \

.readStream \

.format("socket") \

.option("host", "localhost") \

.option("port", 9999) \

.load()

socketDF.isStreaming # Returns True for DataFrames that have streaming sources

socketDF.printSchema()

# Read all the csv files written atomically in a directory

from pyspark.sql.types import StructType

userSchema = StructType().add("name", "string").add("age", "integer")

csvDF = spark \

.readStream \

.option("sep", ";") \

.schema(userSchema) \

.csv("/path/to/directory") # Equivalent to format("csv").load("/path/to/directory")

Schema inference and partition of streaming DataFrames/Datasets

Operations on streaming DataFrames/Datasets

Basic Operations - Selection, Projection, Aggregation

df = ... # streaming DataFrame with IOT device data with schema { device: string, deviceType: string, signal: double, time: DateType }

# Select the devices which have signal more than 10

df.select("device").where("signal > 10")

# Running count of the number of updates for each device type

df.groupBy("deviceType").count()您还可以将流式DataFrame / Dataset注册为临时视图,然后在其上应用SQL命令。

df.createOrReplaceTempView("updates")

spark.sql("select count(*) from updates") # returns another streaming DF注意,您可以使用df.isStreaming来识别DataFrame / Dataset是否具有流数据。

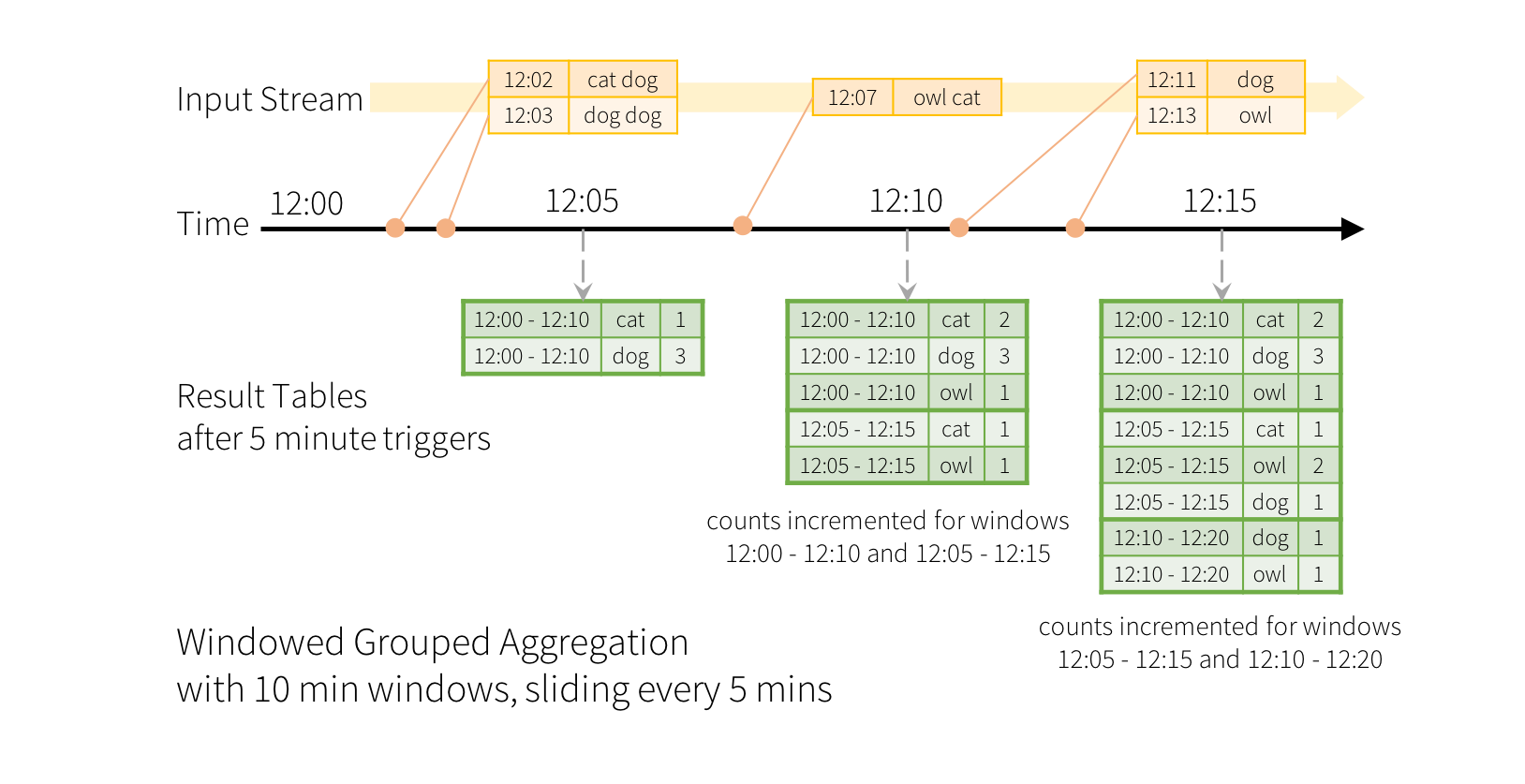

df.isStreaming()Window Operations on Event Time(事件时间的窗口操作)

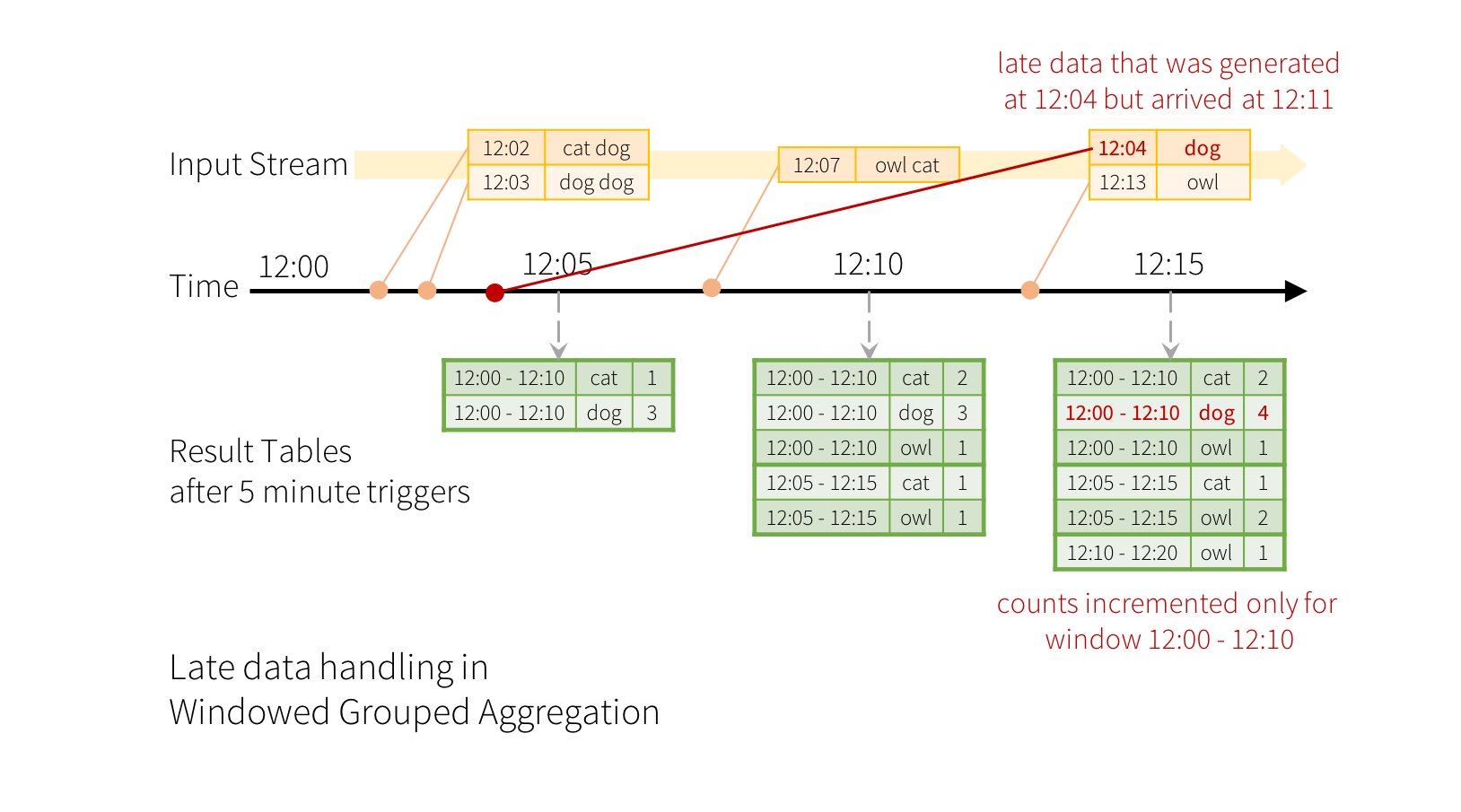

The result table looks like the following.
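To make the result table concrete, here is a plain-Python model of sliding-window counting. It is a sketch and not Spark's implementation. It assumes that window starts are aligned to multiples of the slide duration since the Unix epoch. `windows_for`, `windowed_counts` and `at` are hypothetical helpers. With a 10-minute window sliding every 5 minutes, an event at 12:07 falls into both the 12:00-12:10 window and the 12:05-12:15 window, so it is counted twice.

```python
from collections import Counter
from datetime import datetime, timedelta

EPOCH = datetime(1970, 1, 1)


def windows_for(ts, size, slide):
    """Start times of all windows [start, start + size) that contain ts.

    Window starts are assumed to be aligned to multiples of `slide`
    counted from the Unix epoch.
    """
    start = ts - (ts - EPOCH) % slide  # latest aligned start <= ts
    starts = []
    while start > ts - size:  # this window still covers ts
        starts.append(start)
        start -= slide
    return sorted(starts)


def windowed_counts(events, size, slide):
    """Count (window, word) pairs; each event counts once per window."""
    counts = Counter()
    for ts, word in events:
        for start in windows_for(ts, size, slide):
            counts[(start, start + size, word)] += 1
    return counts


def at(hour, minute):
    """Hypothetical helper: a timestamp on an arbitrary fixed day."""
    return datetime(2024, 1, 1, hour, minute)


size, slide = timedelta(minutes=10), timedelta(minutes=5)
table = windowed_counts([(at(12, 7), "dog"), (at(12, 8), "owl"),
                         (at(12, 14), "dog")], size, slide)
for (start, end, word), n in sorted(table.items()):
    print(f"{start:%H:%M}-{end:%H:%M} {word}: {n}")
```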

words = ... # streaming DataFrame of schema { timestamp: Timestamp, word: String }

# Group the data by window and word and compute the count of each group

windowedCounts = words.groupBy(

window(words.timestamp, "10 minutes", "5 minutes"),

words.word

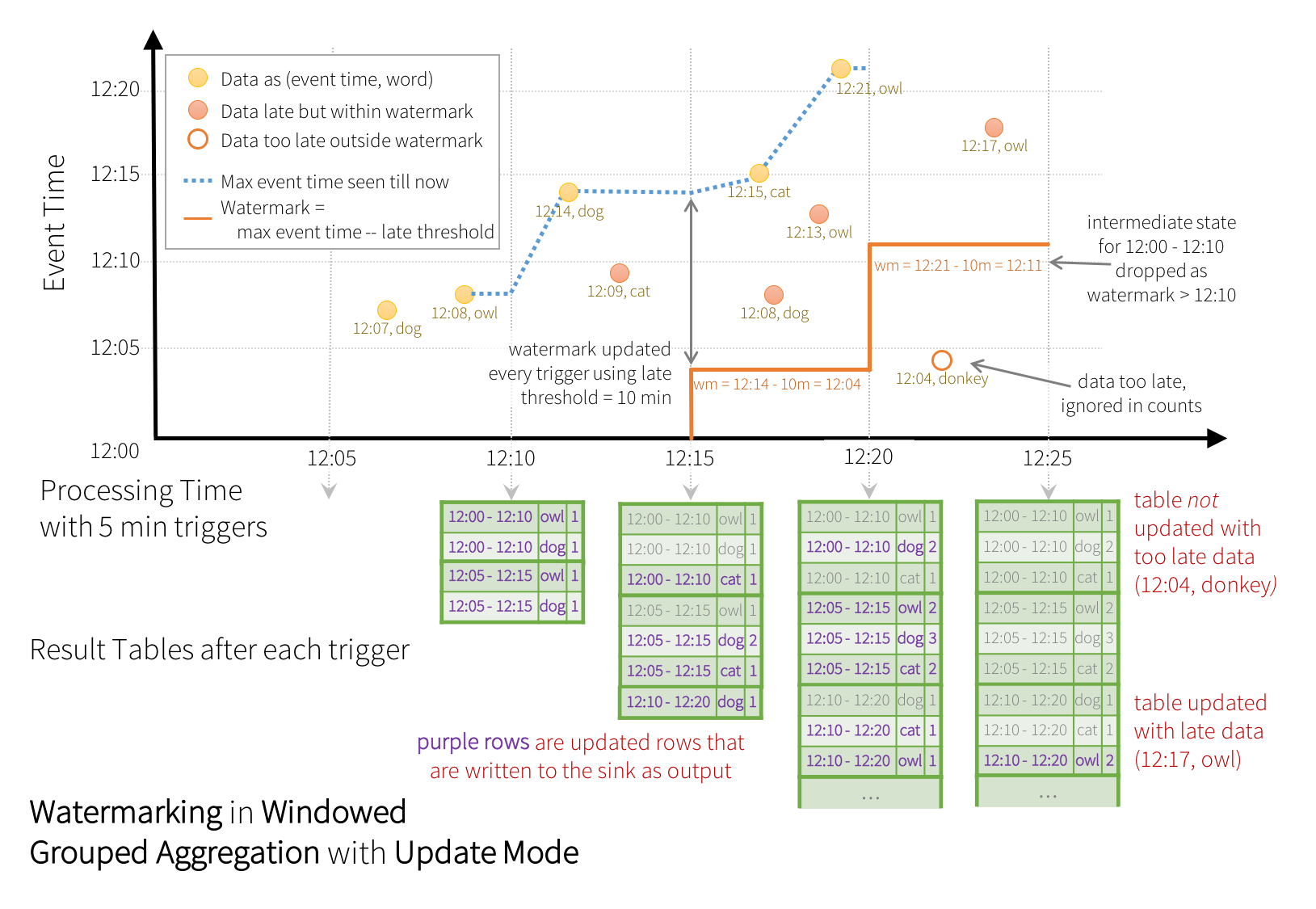

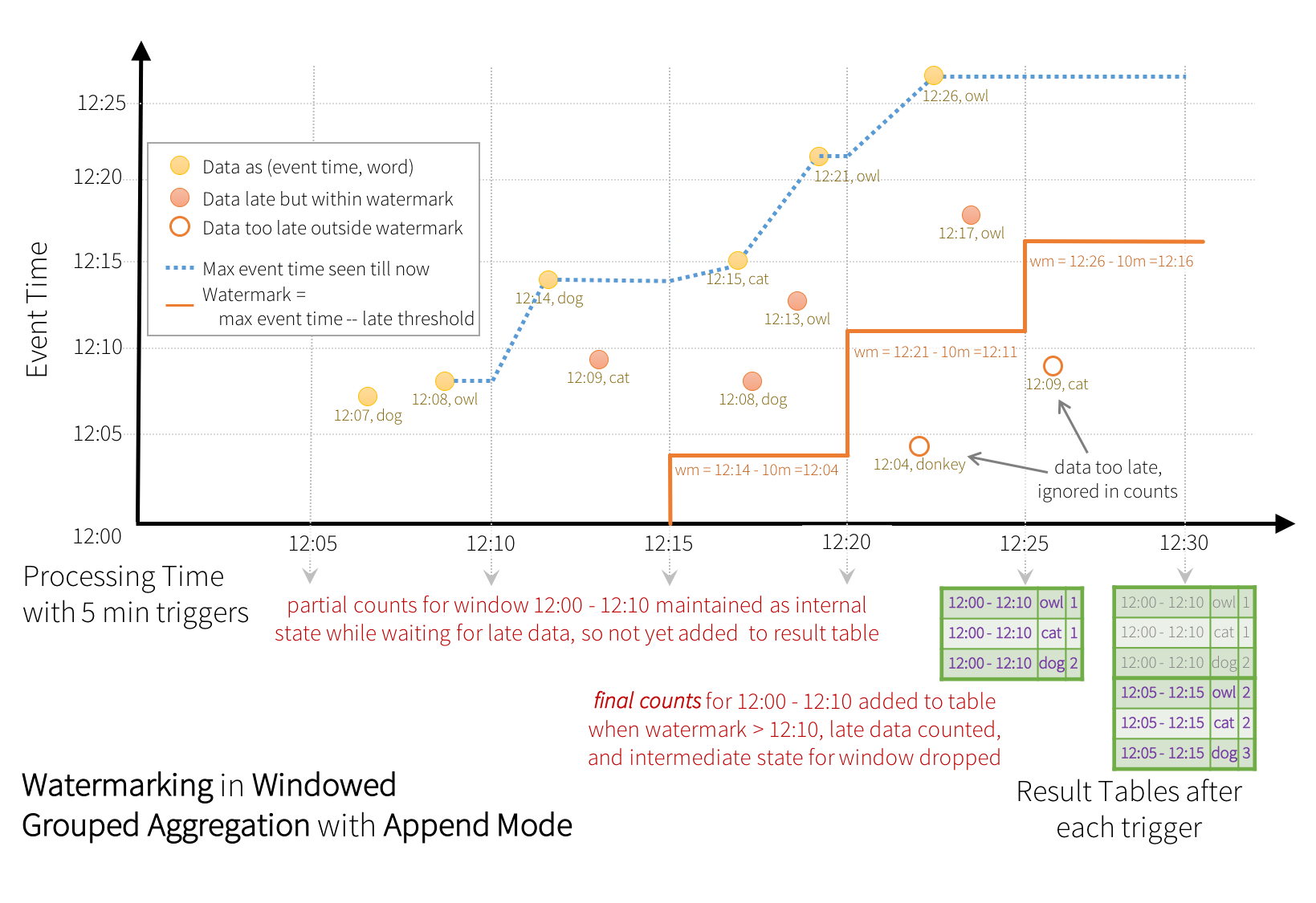

).count()Handling Late Data and Watermarking(处理延迟数据和水印)

words = ... # streaming DataFrame of schema { timestamp: Timestamp, word: String }

# Group the data by window and word and compute the count of each group

windowedCounts = words \

.withWatermark("timestamp", "10 minutes") \

.groupBy(

window(words.timestamp, "10 minutes", "5 minutes"),

words.word) \

.count()
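The effect of `withWatermark` can be modeled in plain Python. The sketch below simplifies under stated assumptions:

- It uses tumbling 10-minute windows instead of the sliding windows above, to keep it short.
- The watermark equals the maximum event time seen minus the delay. It is recomputed at the end of each trigger and never moves backwards.
- Rows behind the watermark are always dropped. Spark only guarantees that data within the threshold is kept; it does not guarantee that older data is always dropped.

`WatermarkedCounts` and `at` are hypothetical names.

```python
from collections import Counter
from datetime import datetime, timedelta

EPOCH = datetime(1970, 1, 1)


class WatermarkedCounts:
    """Simplified model of a windowed count with a watermark."""

    def __init__(self, size, delay):
        self.size, self.delay = size, delay
        self.state = Counter()  # (window_start, word) -> running count
        self.max_event_time = None
        self.watermark = None

    def trigger(self, rows):
        """Process one micro-batch; return the windows it finalized."""
        for ts, word in rows:
            if self.watermark is not None and ts < self.watermark:
                continue  # too late: dropped instead of updating old state
            start = ts - (ts - EPOCH) % self.size  # tumbling window start
            self.state[(start, word)] += 1
            if self.max_event_time is None or ts > self.max_event_time:
                self.max_event_time = ts
        if self.max_event_time is not None:
            candidate = self.max_event_time - self.delay
            if self.watermark is None or candidate > self.watermark:
                self.watermark = candidate  # never moves backwards
        if self.watermark is None:
            return {}
        done = {key: n for key, n in self.state.items()
                if key[0] + self.size <= self.watermark}
        for key in done:
            del self.state[key]  # finalized windows leave the state
        return done  # what append mode would write for this trigger


def at(hour, minute):
    return datetime(2024, 1, 1, hour, minute)


agg = WatermarkedCounts(size=timedelta(minutes=10), delay=timedelta(minutes=10))
print(agg.trigger([(at(12, 7), "dog"), (at(12, 14), "owl")]))  # {}
print(agg.trigger([(at(12, 9), "cat"), (at(12, 26), "dog")]))
print(agg.trigger([(at(12, 3), "owl")]))  # {}: 12:03 is behind the 12:16 watermark
```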

Conditions for watermarking to clean aggregation state

Semantic Guarantees of Aggregation with Watermarking

Join Operations

Stream-static Joins

staticDf = spark.read. ...

streamingDf = spark.readStream. ...

streamingDf.join(staticDf, "type") # inner equi-join with a static DF

streamingDf.join(staticDf, "type", "left_outer") # left outer join with a static DF

Stream-stream Joins

In Spark 2.3, we have added support for stream-stream joins, that is, you can join two streaming Datasets/DataFrames. The challenge of generating join results between two data streams is that, at any point of time, the view of the dataset is incomplete for both sides of the join making it much harder to find matches between inputs. Any row received from one input stream can match with any future, yet-to-be-received row from the other input stream. Hence, for both the input streams, we buffer past input as streaming state, so that we can match every future input with past input and accordingly generate joined results. Furthermore, similar to streaming aggregations, we automatically handle late, out-of-order data and can limit the state using watermarks. Let’s discuss the different types of supported stream-stream joins and how to use them.
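The buffering idea in the paragraph above can be shown with a small plain-Python model of an inner equi-join. This is a sketch, not Spark's state store. Each arriving row is stored on its own side and matched against everything buffered on the other side, so matches are found regardless of which side arrives first. `StreamStreamInnerJoin` and `evict_before` are hypothetical names. `evict_before` stands in for the watermark-driven state cleanup discussed in the following sections, and timestamps are plain integers for brevity.

```python
class StreamStreamInnerJoin:
    """Plain-Python model of a stream-stream inner equi-join.

    Both sides are buffered as state so a row can match rows that arrive
    later on the other side. Rows are dicts with an integer "time" field.
    """

    def __init__(self):
        self.left_state = {}   # join key -> buffered left rows
        self.right_state = {}  # join key -> buffered right rows

    def on_left(self, key, row):
        self.left_state.setdefault(key, []).append(row)
        return [(row, other) for other in self.right_state.get(key, [])]

    def on_right(self, key, row):
        self.right_state.setdefault(key, []).append(row)
        return [(other, row) for other in self.left_state.get(key, [])]

    def evict_before(self, cutoff):
        """Drop buffered rows older than `cutoff` (stand-in for the
        state cleanup that watermarks make possible)."""
        for state in (self.left_state, self.right_state):
            for key in list(state):
                state[key] = [r for r in state[key] if r["time"] >= cutoff]
                if not state[key]:
                    del state[key]


join = StreamStreamInnerJoin()
impression = {"adId": 1, "time": 10}
click = {"adId": 1, "time": 12}
print(join.on_left(1, impression))  # []  (no click yet)
print(join.on_right(1, click))      # [({'adId': 1, 'time': 10}, {'adId': 1, 'time': 12})]
join.evict_before(11)               # the impression leaves the state
print(join.on_right(1, {"adId": 1, "time": 13}))  # []
```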

Inner Joins with Optional Watermarking

from pyspark.sql.functions import expr

impressions = spark.readStream. ...

clicks = spark.readStream. ...

# Apply watermarks on event-time columns

impressionsWithWatermark = impressions.withWatermark("impressionTime", "2 hours")

clicksWithWatermark = clicks.withWatermark("clickTime", "3 hours")

# Join with event-time constraints

impressionsWithWatermark.join(

clicksWithWatermark,

expr("""

clickAdId = impressionAdId AND

clickTime >= impressionTime AND

clickTime <= impressionTime + interval 1 hour

""")

)

Semantic Guarantees of Stream-stream Inner Joins with Watermarking

Outer Joins with Watermarking

impressionsWithWatermark.join(

clicksWithWatermark,

expr("""

clickAdId = impressionAdId AND

clickTime >= impressionTime AND

clickTime <= impressionTime + interval 1 hour

"""),

"leftOuter" # can be "inner", "leftOuter", "rightOuter"

)

Semantic Guarantees of Stream-stream Outer Joins with Watermarking

Outer joins have the same guarantees as inner joins regarding watermark delays and whether data will be dropped or not.

Caveats

Support matrix for joins in streaming queries

| Left Input | Right Input | Join Type | Support |
|---|---|---|---|
| Static | Static | All types | Supported, since it's not on streaming data even though it can be present in a streaming query |
| Stream | Static | Inner | Supported, not stateful |
| Stream | Static | Left Outer | Supported, not stateful |
| Stream | Static | Right Outer | Not supported |
| Stream | Static | Full Outer | Not supported |
| Static | Stream | Inner | Supported, not stateful |
| Static | Stream | Left Outer | Not supported |
| Static | Stream | Right Outer | Supported, not stateful |
| Static | Stream | Full Outer | Not supported |
| Stream | Stream | Inner | Supported, optionally specify watermark on both sides + time constraints for state cleanup |
| Stream | Stream | Left Outer | Conditionally supported, must specify watermark on right + time constraints for correct results, optionally specify watermark on left for all state cleanup |
| Stream | Stream | Right Outer | Conditionally supported, must specify watermark on left + time constraints for correct results, optionally specify watermark on right for all state cleanup |
| Stream | Stream | Full Outer | Not supported |

Streaming Deduplication
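The example below relies on keeping already-seen keys as state. The plain-Python model that follows is a sketch and not Spark's implementation. It uses integer event times and a hypothetical `dedup_batches` name, and it contrasts the two variants:

- Keyed on `guid` alone, the state never shrinks.
- Keyed on `guid` and event time with a watermark, entries behind the watermark are purged and late rows are dropped.

```python
def dedup_batches(batches, watermark_delay=None):
    """Model of streaming dropDuplicates over micro-batches.

    Rows are (guid, event_time) pairs. Without a delay the key is guid
    alone, like dropDuplicates("guid"), and state grows forever. With a
    delay the key is (guid, event_time), like
    withWatermark(...).dropDuplicates("guid", "eventTime"), and state
    behind the watermark is purged after each batch.
    Yields (rows kept in this batch, number of keys held in state).
    """
    seen = {}  # dedup key -> event time: the operator's state
    max_time = watermark = None
    for batch in batches:
        kept = []
        for guid, ts in batch:
            if watermark is not None and ts < watermark:
                continue  # behind the watermark: dropped
            key = guid if watermark_delay is None else (guid, ts)
            if key in seen:
                continue  # duplicate of a row already emitted
            seen[key] = ts
            kept.append((guid, ts))
            max_time = ts if max_time is None else max(max_time, ts)
        if watermark_delay is not None and max_time is not None:
            watermark = max_time - watermark_delay
            seen = {k: t for k, t in seen.items() if t >= watermark}
        yield kept, len(seen)


batches = [[("a", 1), ("b", 2), ("a", 1)], [("a", 30)], [("b", 5)]]
print(list(dedup_batches(batches)))
print(list(dedup_batches(batches, watermark_delay=10)))
```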

streamingDf = spark.readStream. ...

# Without watermark using guid column

streamingDf.dropDuplicates("guid")

# With watermark using guid and eventTime columns

streamingDf \

.withWatermark("eventTime", "10 seconds") \

.dropDuplicates("guid", "eventTime")

Policy for handling multiple watermarks

Arbitrary Stateful Operations

Unsupported Operations

Starting Streaming Queries

Output Modes

| Query Type | Subtype | Supported Output Modes | Notes |
|---|---|---|---|
| Queries with aggregation | Aggregation on event-time with watermark | Append, Update, Complete | Append mode uses watermark to drop old aggregation state. But the output of a windowed aggregation is delayed by the late threshold specified in `withWatermark()`, because by the mode's semantics, rows can be added to the Result Table only once after they are finalized (i.e. after the watermark is crossed). See the Late Data section for more details. Update mode uses watermark to drop old aggregation state. Complete mode does not drop old aggregation state since by definition this mode preserves all data in the Result Table. |
| Queries with aggregation | Other aggregations | Complete, Update | Since no watermark is defined (it is only defined in the other category), old aggregation state is not dropped. Append mode is not supported as aggregates can update, thus violating the semantics of this mode. |
| Queries with mapGroupsWithState | | Update | |
| Queries with flatMapGroupsWithState | Append operation mode | Append | Aggregations are allowed after flatMapGroupsWithState. |
| Queries with flatMapGroupsWithState | Update operation mode | Update | Aggregations not allowed after flatMapGroupsWithState. |
| Queries with joins | | Append | Update and Complete mode not supported yet. See the support matrix in the Join Operations section for more details on what types of joins are supported. |
| Other queries | | Append, Update | Complete mode not supported as it is infeasible to keep all unaggregated data in the Result Table. |
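The three output modes differ only in which rows of the result table reach the sink after a trigger. A minimal plain-Python sketch of that difference follows. `emit` is a hypothetical helper and the result table is modeled as a dict. For append mode, the caller must pass the keys finalized in this trigger, for example windows that fell behind the watermark.

```python
def emit(mode, previous, current, finalized_keys=()):
    """Return the rows a sink receives after one trigger.

    previous/current: the result table (key -> value) before and after the
    trigger. finalized_keys: keys finalized during this trigger, i.e. rows
    that can no longer change; only append mode uses them.
    """
    if mode == "complete":
        return dict(current)  # the entire result table, every trigger
    if mode == "update":
        # only rows that are new or whose value changed in this trigger
        return {k: v for k, v in current.items() if previous.get(k) != v}
    if mode == "append":
        # only rows that became final in this trigger
        return {k: current[k] for k in finalized_keys if k in current}
    raise ValueError(f"unknown output mode: {mode}")


before = {"cat": 1, "dog": 2}
after = {"cat": 1, "dog": 3, "owl": 1}
print(emit("update", before, after))  # {'dog': 3, 'owl': 1}
```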

Output Sinks

There are a few types of built-in output sinks.

- File sink - Stores the output to a directory.

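# format can be "orc", "json", "csv", etc.

writeStream \

.format("parquet") \

.option("path", "path/to/destination/dir") \

.start()

- Kafka sink - Stores the output to one or more topics in Kafka.

writeStream \

.format("kafka") \

.option("kafka.bootstrap.servers", "host1:port1,host2:port2") \

.option("topic", "updates") \

.start()

- Foreach sink - Runs arbitrary computation on the records in the output. See later in this section for more details.

writeStream \

.foreach(...) \

.start()

- Console sink (for debugging) - Prints the output to the console/stdout every time there is a trigger. Both Append and Complete output modes are supported. This should be used for debugging purposes on low data volumes, as the entire output is collected and stored in the driver's memory after every trigger.

writeStream \

.format("console") \

.start()

- Memory sink (for debugging) - The output is stored in memory as an in-memory table. Both Append and Complete output modes are supported. This should be used for debugging purposes on low data volumes, as the entire output is collected and stored in the driver's memory. Hence, use it with caution.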

writeStream \

.format("memory") \

.queryName("tableName") \

.start()

| Sink | Supported Output Modes | Options | Fault-tolerant | Notes |
|---|---|---|---|---|
| File Sink | Append | path: path to the output directory, must be specified. For file-format-specific options, see the related methods in DataFrameWriter (Scala/Java/Python/R). E.g. for "parquet" format options see … | Yes (exactly-once) | Supports writes to partitioned tables. Partitioning by time may be useful. |
| Kafka Sink | Append, Update, Complete | See the Kafka Integration Guide | Yes (at-least-once) | More details in the Kafka Integration Guide |
| Foreach Sink | Append, Update, Complete | None | Depends on ForeachWriter implementation | More details in the next section |
| ForeachBatch Sink | Append, Update, Complete | None | Depends on the implementation | More details in the next section |
| Console Sink | Append, Update, Complete | numRows: number of rows to print every trigger (default: 20). truncate: whether to truncate the output if too long (default: true) | No | |
| Memory Sink | Append, Complete | None | No. But in Complete Mode, restarted query will recreate the full table. | Table name is the query name. |

# ========== DF with no aggregations ==========

noAggDF = deviceDataDf.select("device").where("signal > 10")

# Print new data to console

noAggDF \

.writeStream \

.format("console") \

.start()

# Write new data to Parquet files

noAggDF \

.writeStream \

.format("parquet") \

.option("checkpointLocation", "path/to/checkpoint/dir") \

.option("path", "path/to/destination/dir") \

.start()

# ========== DF with aggregation ==========

aggDF = df.groupBy("device").count()

# Print updated aggregations to console

aggDF \

.writeStream \

.outputMode("complete") \

.format("console") \

.start()

# Have all the aggregates in an in-memory table. The query name will be the table name

aggDF \

.writeStream \

.queryName("aggregates") \

.outputMode("complete") \

.format("memory") \

.start()

spark.sql("select * from aggregates").show() # interactively query in-memory table

Using Foreach and ForeachBatch

ForeachBatch

def foreach_batch_function(df, epoch_id):

    # Transform and write batchDF

    pass

streamingDF.writeStream.foreachBatch(foreach_batch_function).start()

With foreachBatch, you can do the following.

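- Reuse existing batch data sources - For many storage systems, there may not be a streaming sink available yet, but there may already exist a data writer for batch queries. Using foreachBatch, you can use the batch data writers on the output of each micro-batch.

- Write to multiple locations - If you want to write the output of a streaming query to multiple locations, then you can simply write the output DataFrame/Dataset multiple times. However, each attempt to write can cause the output data to be recomputed (including possible re-reading of the input data). To avoid recomputations, you should cache the output DataFrame/Dataset, write it to multiple locations, and then uncache it. Here is an outline (in Scala).

streamingDF.writeStream.foreachBatch { (batchDF: DataFrame, batchId: Long) =>

  batchDF.persist()

  batchDF.write.format(…).save(…) // location 1

  batchDF.write.format(…).save(…) // location 2

  batchDF.unpersist()

}

- Apply additional DataFrame operations - Many DataFrame and Dataset operations are not supported in streaming DataFrames because Spark does not support generating incremental plans in those cases. Using foreachBatch, you can apply some of these operations on each micro-batch output. However, you will have to reason about the end-to-end semantics of doing that operation yourself.

Note:

- By default, foreachBatch provides only at-least-once write guarantees. However, you can use the batchId provided to the function as a way to deduplicate the output and get an exactly-once guarantee.

- foreachBatch does not work with the continuous processing mode, as it fundamentally relies on the micro-batch execution of a streaming query. If you write data in the continuous mode, use foreach instead.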
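The note about `batchId` suggests a common pattern: record which batch ids have been committed, and skip a batch that is replayed after a failure. The sketch below models that pattern in plain Python with an in-memory, hypothetical `IdempotentSink`. A real store would need to persist the rows and the batch id atomically, which this model does not attempt.

```python
class IdempotentSink:
    """In-memory stand-in for a store that records which batch ids it has
    committed, so that a replayed micro-batch is not written twice."""

    def __init__(self):
        self.committed_batch_ids = set()
        self.rows = []

    def write_batch(self, rows, batch_id):
        if batch_id in self.committed_batch_ids:
            return False  # replay of an already committed batch: skip it
        # A real store would write the rows and the batch id atomically.
        self.rows.extend(rows)
        self.committed_batch_ids.add(batch_id)
        return True


sink = IdempotentSink()
sink.write_batch(["a", "b"], batch_id=0)
sink.write_batch(["a", "b"], batch_id=0)  # retried after a failure
sink.write_batch(["c"], batch_id=1)
print(sink.rows)  # ['a', 'b', 'c']
```

With a real streaming DataFrame, the function passed to foreachBatch would hand each batch's rows and its `batch_id` to such a sink. Because foreachBatch functions run on the driver, collecting each batch for this purpose is only reasonable for tiny test data.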

Foreach

- The function takes a row as input.

def process_row(row):

    # Write row to storage

    pass

query = streamingDF.writeStream.foreach(process_row).start()

- The object has a process method and optional open and close methods:

class ForeachWriter:

    def open(self, partition_id, epoch_id):

        # Open connection. This method is optional in Python.

        # Return True so that process() is called for this partition's rows.

        return True

    def process(self, row):

        # Write row to connection. This method is NOT optional in Python.

        pass

    def close(self, error):

        # Close the connection. This method is optional in Python.

        pass

query = streamingDF.writeStream.foreach(ForeachWriter()).start()

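Execution semantics: When the streaming query is started, Spark calls the function or the object's methods in the following way:

- A single copy of this object is responsible for all the data generated by a single task in a query. In other words, one instance is responsible for processing one partition of the data generated in a distributed manner.

- This object must be serializable, because each task will get a fresh serialized-deserialized copy of the provided object. Hence, it is strongly recommended that any initialization for writing data (for example, opening a connection or starting a transaction) is done after the open() method has been called, which signifies that the task is ready to generate data.

The lifecycle of the methods is as follows:

- For each partition with partition_id:

  - For each batch/epoch of streaming data with epoch_id:

    - Method open(partitionId, epochId) is called.

    - If open(...) returns true, for each row in the partition and batch/epoch, method process(row) is called.

    - Method close(error) is called with the error (if any) seen while processing rows.

- The close() method (if it exists) is called if an open() method exists and returns successfully (irrespective of the return value), except if the JVM or Python process crashes in the middle.

Note: The partitionId and epochId in the open() method can be used to deduplicate generated data when failures cause reprocessing of some input data. This depends on the execution mode of the query. If the streaming query is being executed in the micro-batch mode, then every partition represented by a unique tuple (partition_id, epoch_id) is guaranteed to have the same data. Hence, (partition_id, epoch_id) can be used to deduplicate and/or transactionally commit data and achieve exactly-once guarantees. However, if the streaming query is being executed in the continuous mode, then this guarantee does not hold and therefore should not be used for deduplication.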
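The lifecycle and the deduplication note above can be checked with a plain-Python simulation. `run_partition` below mimics the documented call order for one partition and epoch; it is a model, not Spark's code. The hypothetical `DedupWriter` uses the `(partition_id, epoch_id)` pair to commit each partition's data at most once. As noted above, this is only sound in micro-batch mode. The `store` dict stands in for an external transactional store.

```python
def run_partition(writer, partition_id, epoch_id, rows):
    """Call a ForeachWriter-style object for one partition and epoch in the
    documented order: open(), process() per row if open() returned true,
    then close(error)."""
    should_process = True
    if hasattr(writer, "open"):
        should_process = writer.open(partition_id, epoch_id)
    error = None
    try:
        if should_process:
            for row in rows:
                writer.process(row)
    except Exception as exc:  # remember the error so close() can see it
        error = exc
    finally:
        if hasattr(writer, "close"):
            writer.close(error)
    if error is not None:
        raise error


class DedupWriter:
    """Hypothetical writer that commits each (partition_id, epoch_id) at
    most once; valid only in micro-batch mode."""

    def __init__(self, store):
        self.store = store  # dict standing in for an external store
        self.key = None
        self.buffer = []

    def open(self, partition_id, epoch_id):
        self.key = (partition_id, epoch_id)
        self.buffer = []
        return self.key not in self.store  # False: already committed, skip

    def process(self, row):
        self.buffer.append(row)

    def close(self, error):
        if error is None and self.key not in self.store:
            self.store[self.key] = list(self.buffer)


store = {}
run_partition(DedupWriter(store), 0, 7, ["a", "b"])
run_partition(DedupWriter(store), 0, 7, ["a", "b"])  # reprocessed after a failure
run_partition(DedupWriter(store), 1, 7, ["c"])
print(store)  # {(0, 7): ['a', 'b'], (1, 7): ['c']}
```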

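Triggers

| Trigger Type | Description |
|---|---|
| unspecified (default) | If no trigger setting is explicitly specified, then by default the query will be executed in micro-batch mode, where micro-batches will be generated as soon as the previous micro-batch has completed processing. |
| Fixed interval micro-batches | The query will be executed in micro-batch mode, where micro-batches will be kicked off at the user-specified intervals. |
| One-time micro-batch | The query will execute *only one* micro-batch to process all the available data and then stop on its own. This is useful in scenarios where you want to periodically spin up a cluster, process everything that is available since the last period, and then shut down the cluster. In some cases, this may lead to significant cost savings. |
| Continuous with fixed checkpoint interval (experimental) | The query will be executed in the new low-latency, continuous processing mode. Read more about this in the Continuous Processing section below. |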
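The fixed-interval row above is terse. The full Spark guide spells out three rules:

- If a micro-batch finishes within the interval, the engine waits for the interval to end before starting the next one.
- If a micro-batch overruns the interval, the next one starts as soon as the previous one completes.
- If no new data is available, no micro-batch is started.

The plain-Python sketch below models only the first two rules. Aligning boundaries to multiples of the interval is our assumption about the implementation, and `micro_batch_starts` is a hypothetical name.

```python
def micro_batch_starts(durations, interval):
    """Start times of successive micro-batches with a fixed-interval trigger.

    durations[i] is how long micro-batch i takes; times share one unit.
    Interval boundaries are assumed to be multiples of `interval` from 0.
    """
    starts, t = [], 0
    for duration in durations:
        starts.append(t)
        next_boundary = (t // interval + 1) * interval
        # finished early: wait for the boundary; overran: start right away
        t = max(next_boundary, t + duration)
    return starts


print(micro_batch_starts([1, 3, 1, 1], interval=2))  # [0, 2, 5, 6]
```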

Here are a few code examples:

# Default trigger (runs micro-batch as soon as it can)

df.writeStream \

.format("console") \

.start()

# ProcessingTime trigger with two-seconds micro-batch interval

df.writeStream \

.format("console") \

.trigger(processingTime='2 seconds') \

.start()

# One-time trigger

df.writeStream \

.format("console") \

.trigger(once=True) \

.start()

# Continuous trigger with one-second checkpointing interval

df.writeStream \

.format("console") \

.trigger(continuous='1 second') \

.start()

Managing Streaming Queries

The StreamingQuery object created when a query is started can be used to monitor and manage the query.

query = df.writeStream.format("console").start() # get the query object

query.id # get the unique identifier of the running query that persists across restarts from checkpoint data

query.runId # get the unique id of this run of the query, which will be generated at every start/restart

query.name # get the auto-generated or user-specified name of the query

query.explain() # print detailed explanations of the query

query.stop() # stop the query

query.awaitTermination() # block until query is terminated, with stop() or with error

query.exception() # the exception if the query has been terminated with error

query.recentProgress # a list of the most recent progress updates for this query

query.lastProgress # the most recent progress update of this streaming query

spark = ... # spark session

spark.streams.active # get the list of currently active streaming queries

spark.streams.get(id) # get a query object by its unique id

spark.streams.awaitAnyTermination() # block until any one of them terminates

Monitoring Streaming Queries

Reading Metrics Interactively

query = ... # a StreamingQuery

print(query.lastProgress)

'''

Will print something like the following.

{u'stateOperators': [], u'eventTime': {u'watermark': u'2016-12-14T18:45:24.873Z'}, u'name': u'MyQuery', u'timestamp': u'2016-12-14T18:45:24.873Z', u'processedRowsPerSecond': 200.0, u'inputRowsPerSecond': 120.0, u'numInputRows': 10, u'sources': [{u'description': u'KafkaSource[Subscribe[topic-0]]', u'endOffset': {u'topic-0': {u'1': 134, u'0': 534, u'3': 21, u'2': 0, u'4': 115}}, u'processedRowsPerSecond': 200.0, u'inputRowsPerSecond': 120.0, u'numInputRows': 10, u'startOffset': {u'topic-0': {u'1': 1, u'0': 1, u'3': 1, u'2': 0, u'4': 1}}}], u'durationMs': {u'getOffset': 2, u'triggerExecution': 3}, u'runId': u'88e2ff94-ede0-45a8-b687-6316fbef529a', u'id': u'ce011fdc-8762-4dcb-84eb-a77333e28109', u'sink': {u'description': u'MemorySink'}}

'''

print(query.status)

'''

Will print something like the following.

{u'message': u'Waiting for data to arrive', u'isTriggerActive': False, u'isDataAvailable': False}

'''

Reporting Metrics programmatically using Asynchronous APIs

Not available in Python.

Reporting Metrics using Dropwizard

spark.conf.set("spark.sql.streaming.metricsEnabled", "true")

# or

spark.sql("SET spark.sql.streaming.metricsEnabled=true")

All queries started in the SparkSession after this configuration has been enabled will report metrics through Dropwizard to whatever sinks have been configured (e.g. Ganglia, Graphite, JMX, etc.).

Recovering from Failures with Checkpointing

aggDF \

.writeStream \

.outputMode("complete") \

.option("checkpointLocation", "path/to/HDFS/dir") \

.format("memory") \

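.start()

Recovery Semantics after Changes in a Streaming Query

- The term *allowed* means you can make the specified change, but whether the semantics of its effect are well-defined depends on the query and the change.

- The term *not allowed* means you should not make the specified change, as the restarted query is likely to fail with unpredictable errors. sdf represents a streaming DataFrame/Dataset generated with sparkSession.readStream.

Types of changes

- Changes in the number or type (i.e. different source) of input sources: This is not allowed.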

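- Changes in the parameters of input sources: Whether this is allowed and whether the semantics of the change are well-defined depends on the source and the query. Here are a few examples.

  - Addition/deletion/modification of rate limits is allowed: `spark.readStream.format("kafka").option("subscribe", "topic")` to `spark.readStream.format("kafka").option("subscribe", "topic").option("maxOffsetsPerTrigger", ...)`

  - Changes to subscribed topics/files are not allowed, as the results are unpredictable: `spark.readStream.format("kafka").option("subscribe", "topic")` to `spark.readStream.format("kafka").option("subscribe", "newTopic")`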

- Changes in the type of output sink: Changes between a few specific combinations of sinks are allowed. This needs to be verified on a case-by-case basis. Here are a few examples.

  - File sink to Kafka sink is allowed. Kafka will see only the new data.

  - Kafka sink to file sink is not allowed.

  - Kafka sink changed to foreach, or vice versa, is allowed.

- Changes in the parameters of output sink: Whether this is allowed and whether the semantics of the change are well-defined depends on the sink and the query. Here are a few examples.

  - Changes to the output directory of a file sink are not allowed: `sdf.writeStream.format("parquet").option("path", "/somePath")` to `sdf.writeStream.format("parquet").option("path", "/anotherPath")`

  - Changes to the output topic are allowed: `sdf.writeStream.format("kafka").option("topic", "someTopic")` to `sdf.writeStream.format("kafka").option("topic", "anotherTopic")`

  - Changes to the user-defined foreach sink (that is, the `ForeachWriter` code) are allowed, but the semantics of the change depend on the code.

- Changes in projection / filter / map-like operations: Some cases are allowed. For example:

  - Addition/deletion of filters is allowed: `sdf.selectExpr("a")` to `sdf.where(...).selectExpr("a").filter(...)`.

  - Changes in projections with the same output schema are allowed: `sdf.selectExpr("stringColumn AS json").writeStream` to `sdf.selectExpr("anotherStringColumn AS json").writeStream`

  - Changes in projections with a different output schema are conditionally allowed: `sdf.selectExpr("a").writeStream` to `sdf.selectExpr("b").writeStream` is allowed only if the output sink allows the schema change from `"a"` to `"b"`.

- Changes in stateful operations: Some operations in streaming queries need to maintain state data in order to continuously update the result. Structured Streaming automatically checkpoints the state data to fault-tolerant storage (for example, HDFS, AWS S3, Azure Blob storage) and restores it after restart. However, this assumes that the schema of the state data remains the same across restarts. This means that any changes (that is, additions, deletions, or schema modifications) to the stateful operations of a streaming query are not allowed between restarts. Here is the list of stateful operations whose schema should not be changed between restarts in order to ensure state recovery:

  - Streaming aggregation: For example, `sdf.groupBy("a").agg(...)`. Any change in number or type of grouping keys or aggregates is not allowed.

  - Streaming deduplication: For example, `sdf.dropDuplicates("a")`. Any change in number or type of grouping keys or aggregates is not allowed.

  - Stream-stream join: For example, `sdf1.join(sdf2, ...)` (i.e. both inputs are generated with `sparkSession.readStream`). Changes in the schema or equi-joining columns are not allowed. Changes in join type (outer or inner) are not allowed. Other changes in the join condition are ill-defined.

  - Arbitrary stateful operation: For example, `sdf.groupByKey(...).mapGroupsWithState(...)` or `sdf.groupByKey(...).flatMapGroupsWithState(...)`. Any change to the schema of the user-defined state and the type of timeout is not allowed. Any change within the user-defined state-mapping function is allowed, but the semantic effect of the change depends on the user-defined logic. If you really want to support state schema changes, then you can explicitly encode/decode your complex state data structures into bytes using an encoding/decoding scheme that supports schema migration. For example, if you save your state as Avro-encoded bytes, then you are free to change the Avro state schema between query restarts, as the binary state will always be restored successfully.
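The Avro suggestion above boils down to two steps: store state as bytes tagged with a schema version, and migrate old layouts on read. The sketch below shows that idea with JSON from the Python standard library in place of Avro. The field names, the version numbers and the v1-to-v2 migration are all hypothetical.

```python
import json

CURRENT_VERSION = 2


def encode_state(state):
    """Serialize user state to bytes, tagged with the current schema version."""
    return json.dumps({"v": CURRENT_VERSION, "data": state}).encode("utf-8")


def decode_state(blob):
    """Deserialize bytes written by any known version, upgrading old layouts."""
    payload = json.loads(blob.decode("utf-8"))
    data = payload["data"]
    if payload["v"] == 1:
        # hypothetical v1 layout: just a count; v2 adds a last_seen field
        data = {"count": data, "last_seen": None}
    elif payload["v"] != CURRENT_VERSION:
        raise ValueError(f"unknown state version {payload['v']}")
    return data


old_blob = json.dumps({"v": 1, "data": 7}).encode("utf-8")  # written before the change
print(decode_state(old_blob))  # {'count': 7, 'last_seen': None}
```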

Continuous Processing

[Experimental]

# Compared with a micro-batch query, the only change is the .trigger(continuous=...) call

spark \

.readStream \

.format("kafka") \

.option("kafka.bootstrap.servers", "host1:port1,host2:port2") \

.option("subscribe", "topic1") \

.load() \

.selectExpr("CAST(key AS STRING)", "CAST(value AS STRING)") \

.writeStream \

.format("kafka") \

.option("kafka.bootstrap.servers", "host1:port1,host2:port2") \

.option("topic", "topic1") \

.trigger(continuous="1 second") \

.start()

A checkpoint interval of 1 second means that the continuous processing engine will record the progress of the query every second. The resulting checkpoints are in a format compatible with the micro-batch engine, hence any query can be restarted with any trigger. For example, a supported query started with the micro-batch mode can be restarted in continuous mode, and vice versa. Note that any time you switch to continuous mode, you will get at-least-once fault-tolerance guarantees.

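Supported Queries

As of Spark 2.3, only the following types of queries are supported in the continuous processing mode.

- Operations: Only map-like Dataset/DataFrame operations are supported in continuous mode, that is, only projections (select, map, flatMap, mapPartitions, etc.) and selections (where, filter, etc.).

  - All SQL functions are supported except aggregation functions (since aggregations are not yet supported), current_timestamp() and current_date() (deterministic computations using time are challenging).

- Sources:

  - Kafka source: All options are supported.

  - Rate source: Good for testing. The only options supported in the continuous mode are numPartitions and rowsPerSecond.

- Sinks:

  - Kafka sink: All options are supported.

  - Memory sink: Good for debugging.

  - Console sink: Good for debugging. All options are supported. Note that the console will print every checkpoint interval that you have specified in the continuous trigger.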

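Caveats

- The continuous processing engine launches multiple long-running tasks that continuously read data from sources, process it and continuously write to sinks. The number of tasks required by the query depends on how many partitions the query can read from the sources in parallel. Therefore, before starting a continuous processing query, you must ensure there are enough cores in the cluster to run all the tasks in parallel. For example, if you are reading from a Kafka topic that has 10 partitions, then the cluster must have at least 10 cores for the query to make progress.

- Stopping a continuous processing stream may produce spurious task termination warnings. These can be safely ignored.

- There are currently no automatic retries of failed tasks. Any failure will lead to the query being stopped, and it needs to be manually restarted from the checkpoint.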

Additional Information

Further Reading

- See and run the Scala/Java/Python/R examples.

- Instructions on how to run Spark examples

- Read about integrating with Kafka in the Structured Streaming Kafka Integration Guide

- Read more details about using DataFrames/Datasets in the Spark SQL Programming Guide

- Third-party Blog Posts

Talks

- Spark Summit Europe 2017

- Easy, Scalable, Fault-tolerant Stream Processing with Structured Streaming in Apache Spark - Part 1 slides/video, Part 2 slides/video

- Deep Dive into Stateful Stream Processing in Structured Streaming - slides/video

- Spark Summit 2016

- A Deep Dive into Structured Streaming - slides/video
