Demos on the Jetson TX2 (original post)

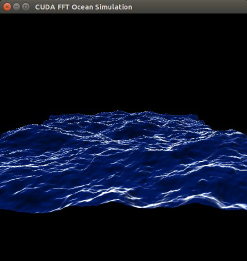

1. Fast Fourier transform: the oceanFFT sample

The CUDA samples directory is copied to the home directory on the device by JetPack. The built binaries are in the following directory:

/home/ubuntu/NVIDIA_CUDA-<version>_Samples/bin/armv7l/linux/release/gnueabihf/

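Replace <version> with the CUDA version you installed (the deviceQuery output later in this post reports 8.0). The path above is quoted from the JetPack documentation and shows the 32-bit layout (armv7l, gnueabihf). The TX2 runs a 64-bit system and the shell prompts in this post use the nvidia user, so on the TX2 expect the samples under /home/nvidia and the binaries in an aarch64 directory instead.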
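Because the version and architecture parts of that path differ between installs, a glob can locate a built sample without hard-coding either one. The following is a minimal sketch in Python, assuming only the NVIDIA_CUDA-<version>_Samples/bin/<arch>/linux/release layout quoted above. The `find_sample` helper is a hypothetical name introduced for illustration; it is not part of JetPack.

```python
import glob
import os


def find_sample(name, home="~"):
    """Return sorted paths of built sample binaries called `name`.

    Assumes the layout NVIDIA_CUDA-<version>_Samples/bin/<arch>/linux/release/...
    The depth below release/ is left open on purpose, because some
    architectures add one more directory level (for example gnueabihf).
    """
    root = os.path.expanduser(home)
    pattern = os.path.join(
        root, "NVIDIA_CUDA-*_Samples", "bin", "*", "linux", "release", "**", name
    )
    # "**" with recursive=True matches zero or more intermediate directories.
    return sorted(p for p in glob.glob(pattern, recursive=True) if os.path.isfile(p))


if __name__ == "__main__":
    for path in find_sample("oceanFFT"):
        print(path)
```

If the script prints nothing, the samples have probably not been built yet or live under a different home directory.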

Run the samples at the command line or by double-clicking on them in the file browser. For example, when you run the oceanFFT sample, the following screen is displayed.

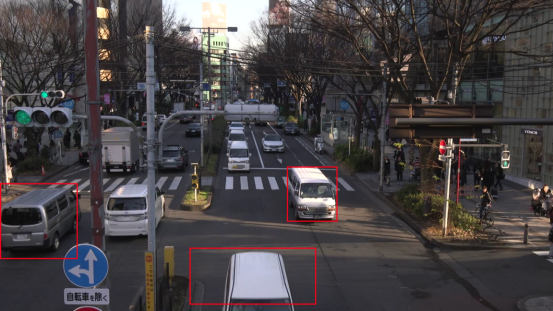

2. Vehicle detection with bounding boxes sample

nvidia@tegra-ubuntu:~/tegra_multimedia_api/samples/backend$ ./backend 1 ../../data/Video/sample_outdoor_car_1080p_10fps.h264 H264 \
    --trt-deployfile ../../data/Model/GoogleNet_one_class/GoogleNet_modified_oneClass_halfHD.prototxt \
    --trt-modelfile ../../data/Model/GoogleNet_one_class/GoogleNet_modified_oneClass_halfHD.caffemodel \
    --trt-forcefp32 0 --trt-proc-interval 1 -fps 10
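The command above is one long invocation, and it is easy to drop a flag when retyping it. As a rough sketch, the argument list can be assembled in Python and printed as a single copy-pasteable line. Every flag and path is copied from the command above. The `backend_command` function and its parameter names are hypothetical, and treating the first positional argument as a channel count is an assumption, not something this post confirms.

```python
import shlex

# Paths are relative to tegra_multimedia_api/samples/backend, as in the post.
DATA = "../../data"
MODEL = DATA + "/Model/GoogleNet_one_class/GoogleNet_modified_oneClass_halfHD"


def backend_command(channels=1, fps=10, force_fp32=0, proc_interval=1):
    """Assemble the ./backend argument list (flags copied from the command above).

    `channels` is an assumed meaning for the first positional argument.
    """
    return [
        "./backend", str(channels),
        DATA + "/Video/sample_outdoor_car_1080p_10fps.h264", "H264",
        "--trt-deployfile", MODEL + ".prototxt",
        "--trt-modelfile", MODEL + ".caffemodel",
        "--trt-forcefp32", str(force_fp32),
        "--trt-proc-interval", str(proc_interval),
        "-fps", str(fps),
    ]


if __name__ == "__main__":
    # Print one shell-safe line; on the device the list could instead be
    # handed to subprocess.run with cwd set to the backend sample directory.
    print(shlex.join(backend_command()))
```

Keeping the arguments in a list also avoids shell quoting problems if the video or model paths ever contain spaces.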

3. GEMM (general matrix multiplication) test

nvidia@tegra-ubuntu:/usr/local/cuda/samples/7_CUDALibraries/batchCUBLAS$ ./batchCUBLAS -m1024 -n1024 -k1024

batchCUBLAS Starting...

GPU Device 0: "NVIDIA Tegra X2" with compute capability 6.2

==== Running single kernels ====

Testing sgemm

#### args: ta=0 tb=0 m=1024 n=1024 k=1024 alpha = (0xbf800000, -1) beta= (0x40000000, 2)

#### args: lda=1024 ldb=1024 ldc=1024

^^^^ elapsed = 0.00372291 sec GFLOPS=576.83

@@@@ sgemm test OK

Testing dgemm

#### args: ta=0 tb=0 m=1024 n=1024 k=1024 alpha = (0x0000000000000000, 0) beta= (0x0000000000000000, 0)

#### args: lda=1024 ldb=1024 ldc=1024

^^^^ elapsed = 0.10940003 sec GFLOPS=19.6296

@@@@ dgemm test OK

==== Running N=10 without streams ====

Testing sgemm

#### args: ta=0 tb=0 m=1024 n=1024 k=1024 alpha = (0xbf800000, -1) beta= (0x00000000, 0)

#### args: lda=1024 ldb=1024 ldc=1024

^^^^ elapsed = 0.03462315 sec GFLOPS=620.245

@@@@ sgemm test OK

Testing dgemm

#### args: ta=0 tb=0 m=1024 n=1024 k=1024 alpha = (0xbff0000000000000, -1) beta= (0x0000000000000000, 0)

#### args: lda=1024 ldb=1024 ldc=1024

^^^^ elapsed = 1.09212208 sec GFLOPS=19.6634

@@@@ dgemm test OK

==== Running N=10 with streams ====

Testing sgemm

#### args: ta=0 tb=0 m=1024 n=1024 k=1024 alpha = (0x40000000, 2) beta= (0x40000000, 2)

#### args: lda=1024 ldb=1024 ldc=1024

^^^^ elapsed = 0.03504515 sec GFLOPS=612.776

@@@@ sgemm test OK

Testing dgemm

#### args: ta=0 tb=0 m=1024 n=1024 k=1024 alpha = (0xbff0000000000000, -1) beta= (0x0000000000000000, 0)

#### args: lda=1024 ldb=1024 ldc=1024

^^^^ elapsed = 1.09177494 sec GFLOPS=19.6697

@@@@ dgemm test OK

==== Running N=10 batched ====

Testing sgemm

#### args: ta=0 tb=0 m=1024 n=1024 k=1024 alpha = (0x3f800000, 1) beta= (0xbf800000, -1)

#### args: lda=1024 ldb=1024 ldc=1024

^^^^ elapsed = 0.03766394 sec GFLOPS=570.17

@@@@ sgemm test OK

Testing dgemm

#### args: ta=0 tb=0 m=1024 n=1024 k=1024 alpha = (0xbff0000000000000, -1) beta= (0x4000000000000000, 2)

#### args: lda=1024 ldb=1024 ldc=1024

^^^^ elapsed = 1.09389901 sec GFLOPS=19.6315

@@@@ dgemm test OK

Test Summary

0 error(s)
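The GFLOPS figures in this log can be checked by hand from the elapsed times. The sketch below assumes the usual convention of counting 2·m·n·k floating-point operations per GEMM (one multiply and one add per inner-product term), multiplied by the number of matrices in the N=10 runs. That convention is an assumption, though it matches the printed numbers to within rounding. The `gemm_gflops` name is introduced here for illustration only.

```python
def gemm_gflops(m, n, k, elapsed_s, batch=1):
    """GFLOPS for `batch` GEMMs of size m x n x k, counting 2*m*n*k operations each."""
    return 2.0 * m * n * k * batch / elapsed_s / 1e9


if __name__ == "__main__":
    # Elapsed times are copied from the log above.
    print(round(gemm_gflops(1024, 1024, 1024, 0.00372291), 2))            # single sgemm
    print(round(gemm_gflops(1024, 1024, 1024, 0.03462315, batch=10), 2))  # N=10 sgemm, no streams
    print(round(gemm_gflops(1024, 1024, 1024, 0.10940003), 2))            # single dgemm
```

The roughly 30x gap between sgemm and dgemm in the log reflects how much slower double precision is on this GPU than single precision.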

4. Memory bandwidth test

nvidia@tegra-ubuntu:/usr/local/cuda/samples/1_Utilities/bandwidthTest$ ./bandwidthTest

[CUDA Bandwidth Test] - Starting...

Running on...

Device 0: NVIDIA Tegra X2

Quick Mode

Host to Device Bandwidth, 1 Device(s)

PINNED Memory Transfers

Transfer Size (Bytes) Bandwidth(MB/s)

33554432 20215.8

Device to Host Bandwidth, 1 Device(s)

PINNED Memory Transfers

Transfer Size (Bytes) Bandwidth(MB/s)

33554432 20182.2

Device to Device Bandwidth, 1 Device(s)

PINNED Memory Transfers

Transfer Size (Bytes) Bandwidth(MB/s)

33554432 35742.8

Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.
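To compare runs, it can help to pull the three bandwidth figures out of this text output. The following is a small sketch in Python that parses output in the format shown above. `SAMPLE` is the relevant part of the log from this post, and the `parse_bandwidth` helper and its regular expressions are assumptions about this one output format, not an interface that bandwidthTest provides.

```python
import re

# Relevant lines copied from the bandwidthTest output above.
SAMPLE = """\
Host to Device Bandwidth, 1 Device(s)
PINNED Memory Transfers
Transfer Size (Bytes) Bandwidth(MB/s)
33554432 20215.8
Device to Host Bandwidth, 1 Device(s)
PINNED Memory Transfers
Transfer Size (Bytes) Bandwidth(MB/s)
33554432 20182.2
Device to Device Bandwidth, 1 Device(s)
PINNED Memory Transfers
Transfer Size (Bytes) Bandwidth(MB/s)
33554432 35742.8
"""


def parse_bandwidth(text):
    """Map each transfer direction to (transfer size in bytes, bandwidth in MB/s)."""
    results = {}
    direction = None
    for line in text.splitlines():
        line = line.strip()
        header = re.match(r"(.+) Bandwidth, \d+ Device\(s\)$", line)
        if header:
            direction = header.group(1)
            continue
        row = re.match(r"(\d+)\s+([\d.]+)$", line)
        if row and direction:
            results[direction] = (int(row.group(1)), float(row.group(2)))
    return results


if __name__ == "__main__":
    for direction, (size, mbps) in parse_bandwidth(SAMPLE).items():
        print(f"{direction}: {size} bytes at {mbps} MB/s")
```

Host-to-device and device-to-host come out nearly identical here, which fits the deviceQuery report in the next section that the integrated GPU shares host memory.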

5. Device query

nvidia@tegra-ubuntu:~/work/TensorRT/tmp/usr/src/tensorrt$ cd /usr/local/cuda/samples/1_Utilities/deviceQuery

nvidia@tegra-ubuntu:/usr/local/cuda/samples/1_Utilities/deviceQuery$ ls

deviceQuery deviceQuery.cpp deviceQuery.o Makefile NsightEclipse.xml readme.txt

nvidia@tegra-ubuntu:/usr/local/cuda/samples/1_Utilities/deviceQuery$ ./deviceQuery

./deviceQuery Starting...

CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 1 CUDA Capable device(s)

Device 0: "NVIDIA Tegra X2"

CUDA Driver Version / Runtime Version 8.0 / 8.0

CUDA Capability Major/Minor version number: 6.2

Total amount of global memory: 7851 MBytes (8232062976 bytes)

( 2) Multiprocessors, (128) CUDA Cores/MP: 256 CUDA Cores

GPU Max Clock rate: 1301 MHz (1.30 GHz)

Memory Clock rate: 1600 Mhz

Memory Bus Width: 128-bit

L2 Cache Size: 524288 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total number of registers available per block: 32768

Warp size: 32

Maximum number of threads per multiprocessor: 2048

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 1 copy engine(s)

Run time limit on kernels: No

Integrated GPU sharing Host Memory: Yes

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 0 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 8.0, CUDA Runtime Version = 8.0, NumDevs = 1, Device0 = NVIDIA Tegra X2

Result = PASS
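The deviceQuery figures give a rough ceiling against which to read the GEMM and bandwidth results earlier in this post. The sketch below is a back-of-the-envelope estimate, not a vendor specification. It assumes one fused multiply-add (2 FLOPs) per CUDA core per cycle, and it assumes the reported memory clock is a double-data-rate clock (`transfers_per_clock=2`). Both function names are hypothetical.

```python
def peak_fp32_gflops(cuda_cores, clock_mhz):
    """Peak single-precision rate, assuming one FMA (2 FLOPs) per core per cycle."""
    return cuda_cores * 2 * clock_mhz / 1e3


def peak_memory_gbs(mem_clock_mhz, bus_width_bits, transfers_per_clock=2):
    """Peak bandwidth in GB/s; transfers_per_clock=2 assumes double-data-rate memory."""
    bytes_per_transfer = bus_width_bits / 8
    return mem_clock_mhz * 1e6 * transfers_per_clock * bytes_per_transfer / 1e9


if __name__ == "__main__":
    # 256 CUDA cores at 1301 MHz, 1600 MHz memory clock, 128-bit bus (from deviceQuery).
    peak = peak_fp32_gflops(256, 1301)
    print(f"estimated peak FP32: {peak:.1f} GFLOPS")
    # 576.83 GFLOPS is the single-kernel sgemm result from the GEMM section.
    print(f"sgemm fraction of peak: {576.83 / peak:.0%}")
    print(f"estimated peak memory bandwidth: {peak_memory_gbs(1600, 128):.1f} GB/s")
```

Under these assumptions, the sgemm results sit fairly close to the compute ceiling, while the measured copy bandwidths are well below the estimated memory ceiling.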

6. Testing larger projects

For details, see https://developer.nvidia.com/embedded/jetpack

That page lists several more projects to try.
