Hive metastore: an overall code walkthrough and analysis

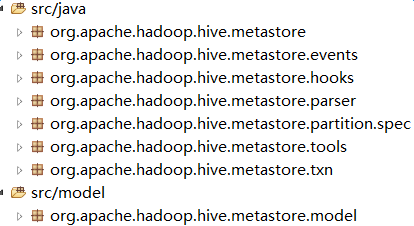

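The previous post briefly analyzed the Hive metastore table schema. Building on the entity objects in that data design, this post summarizes the overall code structure. Let's start by opening the metadata directory and looking at its layout.

As you can see, the Hive metastore directory contains metastore (the client and server call logic), events (listener implementations for checks, authorization, and so on across a table's life cycle), hooks (here only the interfaces related to the JDO connection), parser (parsing of expression trees), spec (proxy classes for partitions), tools (methods related to JDO execute), plus txn and model. Next, we will go through the metadata code piece by piece, with comments.

The packages aren't even expanded yet and there are already this many classes? Scared, ready to give up? Me too, but let's keep going. At first it all looks like a tangled, frustrating mess. Calm down and start from the big Hive class, because it is the entry point for metastore metadata calls. The life cycle we will analyze runs in this order: creating and loading the HiveMetaStoreClient, creating and loading the HiveMetaStore server, createTable, dropTable, AlterTable, createPartition, dropPartition, and alterPartition. Of course, this is only a small part of the complete metadata code.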

1. Creating and loading the HiveMetaStoreClient

Let's read through the Hive class bit by bit:

private HiveConf conf = null;

private IMetaStoreClient metaStoreClient;

private UserGroupInformation owner;

// metastore calls timing information

private final Map<String, Long> metaCallTimeMap = new HashMap<String, Long>();

private static ThreadLocal<Hive> hiveDB = new ThreadLocal<Hive>() {

@Override

protected synchronized Hive initialValue() {

return null;

}

@Override

public synchronized void remove() {

if (this.get() != null) {

this.get().close();

}

super.remove();

}

};

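These fields are the HiveConf object, the metaStoreClient, the owning user's UserGroupInformation, and metaCallTimeMap, a map that records how long each metastore call takes. The class also keeps a ThreadLocal named hiveDB. If the current thread has no Hive instance yet (or a refresh is requested, or the current user is not the owner), a new Hive object is created: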

public static Hive get(HiveConf c, boolean needsRefresh) throws HiveException {

Hive db = hiveDB.get();

if (db == null || needsRefresh || !db.isCurrentUserOwner()) {

if (db != null) {

LOG.debug("Creating new db. db = " + db + ", needsRefresh = " + needsRefresh +

", db.isCurrentUserOwner = " + db.isCurrentUserOwner());

}

closeCurrent();

c.set("fs.scheme.class", "dfs");

Hive newdb = new Hive(c);

hiveDB.set(newdb);

return newdb;

}

db.conf = c;

return db;

}
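The per-thread caching in get() can be illustrated without any Hive dependency. The following is only a minimal sketch of the same idea: Session is a hypothetical stand-in for the Hive object, and the owner check is reduced to a string comparison, which is an assumption and not how isCurrentUserOwner() works.

```java
final class ThreadLocalCacheSketch {
    /** Hypothetical stand-in for the Hive object. */
    public static final class Session {
        public final String owner;
        public boolean closed;

        Session(String owner) {
            this.owner = owner;
        }

        void close() {
            closed = true;
        }
    }

    // One cached Session per thread, like the hiveDB ThreadLocal.
    private static final ThreadLocal<Session> CURRENT = new ThreadLocal<>();

    /** Returns this thread's Session, rebuilding it when absent, refreshed, or owned by another user. */
    static Session get(String user, boolean needsRefresh) {
        Session s = CURRENT.get();
        if (s == null || needsRefresh || !s.owner.equals(user)) {
            if (s != null) {
                s.close(); // release the old instance before replacing it
            }
            s = new Session(user);
            CURRENT.set(s);
        }
        return s;
    }

    public static void main(String[] args) {
        Session first = get("alice", false);
        Session again = get("alice", false);
        Session refreshed = get("alice", true);
        System.out.println("reused: " + (first == again));
        System.out.println("replaced on refresh: " + (first != refreshed));
    }
}
```

Because the cache is a ThreadLocal, two threads never share an instance, so no locking is needed around the cached object itself.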

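Next, notice that functions are registered in a static initializer, that is, as soon as the Hive class is loaded. What is a function here? From the earlier schema analysis, you can think of it as the stored metadata for UDFs and their jars. The code: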

// register all permanent functions. need improvement

static {

try {

reloadFunctions();

} catch (Exception e) {

LOG.warn("Failed to access metastore. This class should not accessed in runtime.",e);

}

}

public static void reloadFunctions() throws HiveException {

// get the Hive object used by the calls below

Hive db = Hive.get();

// iterate over every database name

for (String dbName : db.getAllDatabases()) {

// look up all functions registered under this database

for (String functionName : db.getFunctions(dbName, "*")) {

Function function = db.getFunction(dbName, functionName);

try {

// register the function in a Map<String, FunctionInfo> held by the Registry class, so the execution engine does not have to query the database again when it calls the function

FunctionRegistry.registerPermanentFunction(

FunctionUtils.qualifyFunctionName(functionName, dbName), function.getClassName(),

false, FunctionTask.toFunctionResource(function.getResourceUris()));

} catch (Exception e) {

LOG.warn("Failed to register persistent function " +

functionName + ":" + function.getClassName() + ". Ignore and continue.");

}

}

}

}

getMSC() creates the metastore client by calling createMetaStoreClient(). The code:

private IMetaStoreClient createMetaStoreClient() throws MetaException {

// anonymous implementation of the HiveMetaHookLoader interface

HiveMetaHookLoader hookLoader = new HiveMetaHookLoader() {

@Override

public HiveMetaHook getHook(

org.apache.hadoop.hive.metastore.api.Table tbl)

throws MetaException {

try {

if (tbl == null) {

return null;

}

// load the storage handler named in the table's key-value parameters (for example HBase, Redis, or another extension store), used for tables backed by external storage

HiveStorageHandler storageHandler =

HiveUtils.getStorageHandler(conf,

tbl.getParameters().get(META_TABLE_STORAGE));

if (storageHandler == null) {

return null;

}

return storageHandler.getMetaHook();

} catch (HiveException ex) {

LOG.error(StringUtils.stringifyException(ex));

throw new MetaException(

"Failed to load storage handler: " + ex.getMessage());

}

}

};

return RetryingMetaStoreClient.getProxy(conf, hookLoader, metaCallTimeMap,

SessionHiveMetaStoreClient.class.getName());

}

2. Creating and loading the HiveMetaStore server

When HiveMetaStoreClient is initialized, it sets up its connection to the HiveMetaStore server. The code:

public HiveMetaStoreClient(HiveConf conf, HiveMetaHookLoader hookLoader)

throws MetaException {

this.hookLoader = hookLoader;

if (conf == null) {

conf = new HiveConf(HiveMetaStoreClient.class);

}

this.conf = conf;

filterHook = loadFilterHooks();

// hive.metastore.uris in hive-site.xml decides the mode: if it is set, the connection is remote, otherwise the metastore is local (embedded)

String msUri = conf.getVar(HiveConf.ConfVars.METASTOREURIS);

localMetaStore = HiveConfUtil.isEmbeddedMetaStore(msUri);

if (localMetaStore) {

// local mode: talk to HiveMetaStore directly

client = HiveMetaStore.newRetryingHMSHandler("hive client", conf, true);

isConnected = true;

snapshotActiveConf();

return;

}

// read the retry count and the retry delay from the configuration

retries = HiveConf.getIntVar(conf, HiveConf.ConfVars.METASTORETHRIFTCONNECTIONRETRIES);

retryDelaySeconds = conf.getTimeVar(

ConfVars.METASTORE_CLIENT_CONNECT_RETRY_DELAY, TimeUnit.SECONDS);

// parse the comma-separated metastore URIs

if (conf.getVar(HiveConf.ConfVars.METASTOREURIS) != null) {

String metastoreUrisString[] = conf.getVar(

HiveConf.ConfVars.METASTOREURIS).split(",");

metastoreUris = new URI[metastoreUrisString.length];

try {

int i = 0;

for (String s : metastoreUrisString) {

URI tmpUri = new URI(s);

if (tmpUri.getScheme() == null) {

throw new IllegalArgumentException("URI: " + s

+ " does not have a scheme");

}

metastoreUris[i++] = tmpUri;

}

} catch (IllegalArgumentException e) {

throw (e);

} catch (Exception e) {

MetaStoreUtils.logAndThrowMetaException(e);

}

} else {

LOG.error("NOT getting uris from conf");

throw new MetaException("MetaStoreURIs not found in conf file");

}

// call open() to create the connection

open();

}
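The URI handling in the constructor is easy to try in isolation. Here is a small sketch, assuming only the JDK: MetastoreUriSketch and its parse method are made-up names, and unlike the original it trims whitespace around each entry and wraps URISyntaxException in an unchecked exception.

```java
import java.net.URI;
import java.net.URISyntaxException;

final class MetastoreUriSketch {
    /** Splits a comma-separated URI list and rejects entries that have no scheme. */
    static URI[] parse(String uris) {
        if (uris == null || uris.trim().isEmpty()) {
            throw new IllegalArgumentException("MetaStoreURIs not found in conf");
        }
        String[] parts = uris.split(",");
        URI[] result = new URI[parts.length];
        for (int i = 0; i < parts.length; i++) {
            String s = parts[i].trim(); // trimming is an addition of this sketch
            try {
                URI u = new URI(s);
                if (u.getScheme() == null) {
                    throw new IllegalArgumentException("URI: " + s + " does not have a scheme");
                }
                result[i] = u;
            } catch (URISyntaxException e) {
                throw new IllegalArgumentException("Malformed URI: " + s, e);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        for (URI u : parse("thrift://ms1:9083, thrift://ms2:9083")) {
            System.out.println(u.getHost() + " -> port " + u.getPort());
        }
    }
}
```

Note that a bare host name such as ms1 parses as a relative URI with no scheme, which is exactly the case the scheme check rejects.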

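As the code shows, a remote connection requires hive.metastore.uris to be set in hive-site.xml. Sound familiar? If your client and server are not on the same machine, you need this setting to connect remotely. Let's keep reading with open(), the method that creates the connection: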

private void open() throws MetaException {

isConnected = false;

TTransportException tte = null;

// whether to use SASL

boolean useSasl = conf.getBoolVar(ConfVars.METASTORE_USE_THRIFT_SASL);

//If true, the metastore Thrift interface will use TFramedTransport. When false (default) a standard TTransport is used.

boolean useFramedTransport = conf.getBoolVar(ConfVars.METASTORE_USE_THRIFT_FRAMED_TRANSPORT);

//If true, the metastore Thrift interface will use TCompactProtocol. When false (default) TBinaryProtocol will be used. The difference between the two is left for a later discussion.

boolean useCompactProtocol = conf.getBoolVar(ConfVars.METASTORE_USE_THRIFT_COMPACT_PROTOCOL);

// read the socket timeout

int clientSocketTimeout = (int) conf.getTimeVar(

ConfVars.METASTORE_CLIENT_SOCKET_TIMEOUT, TimeUnit.MILLISECONDS);

for (int attempt = 0; !isConnected && attempt < retries; ++attempt) {

for (URI store : metastoreUris) {

LOG.info("Trying to connect to metastore with URI " + store);

try {

transport = new TSocket(store.getHost(), store.getPort(), clientSocketTimeout);

if (useSasl) {

// Wrap thrift connection with SASL for secure connection.

try {

// create the HadoopThriftAuthBridge client

HadoopThriftAuthBridge.Client authBridge =

ShimLoader.getHadoopThriftAuthBridge().createClient();

// authentication

// check if we should use delegation tokens to authenticate

// the call below gets hold of the tokens if they are set up by hadoop

// this should happen on the map/reduce tasks if the client added the

// tokens into hadoop's credential store in the front end during job

// submission.

String tokenSig = conf.get("hive.metastore.token.signature");

// tokenSig could be null

tokenStrForm = Utils.getTokenStrForm(tokenSig);

if(tokenStrForm != null) {

// authenticate using delegation tokens via the "DIGEST" mechanism

transport = authBridge.createClientTransport(null, store.getHost(),

"DIGEST", tokenStrForm, transport,

MetaStoreUtils.getMetaStoreSaslProperties(conf));

} else {

String principalConfig =

conf.getVar(HiveConf.ConfVars.METASTORE_KERBEROS_PRINCIPAL);

transport = authBridge.createClientTransport(

principalConfig, store.getHost(), "KERBEROS", null,

transport, MetaStoreUtils.getMetaStoreSaslProperties(conf));

}

} catch (IOException ioe) {

LOG.error("Couldn't create client transport", ioe);

throw new MetaException(ioe.toString());

}

} else if (useFramedTransport) {

transport = new TFramedTransport(transport);

}

final TProtocol protocol;

// the difference between the two protocols will be covered later (I haven't read up on it yet, haha)

if (useCompactProtocol) {

protocol = new TCompactProtocol(transport);

} else {

protocol = new TBinaryProtocol(transport);

}

// create the ThriftHiveMetastore client

client = new ThriftHiveMetastore.Client(protocol);

try {

transport.open();

isConnected = true;

} catch (TTransportException e) {

tte = e;

if (LOG.isDebugEnabled()) {

LOG.warn("Failed to connect to the MetaStore Server...", e);

} else {

// Don't print full exception trace if DEBUG is not on.

LOG.warn("Failed to connect to the MetaStore Server...");

}

}

// send the user and group information

if (isConnected && !useSasl && conf.getBoolVar(ConfVars.METASTORE_EXECUTE_SET_UGI)){

// Call set_ugi, only in unsecure mode.

try {

UserGroupInformation ugi = Utils.getUGI();

client.set_ugi(ugi.getUserName(), Arrays.asList(ugi.getGroupNames()));

} catch (LoginException e) {

LOG.warn("Failed to do login. set_ugi() is not successful, " +

"Continuing without it.", e);

} catch (IOException e) {

LOG.warn("Failed to find ugi of client set_ugi() is not successful, " +

"Continuing without it.", e);

} catch (TException e) {

LOG.warn("set_ugi() not successful, Likely cause: new client talking to old server. "

+ "Continuing without it.", e);

}

}

} catch (MetaException e) {

LOG.error("Unable to connect to metastore with URI " + store

+ " in attempt " + attempt, e);

}

if (isConnected) {

break;

}

}

// Wait before launching the next round of connection retries.

if (!isConnected && retryDelaySeconds > 0) {

try {

LOG.info("Waiting " + retryDelaySeconds + " seconds before next connection attempt.");

Thread.sleep(retryDelaySeconds * 1000);

} catch (InterruptedException ignore) {}

}

}

if (!isConnected) {

throw new MetaException("Could not connect to meta store using any of the URIs provided." +

" Most recent failure: " + StringUtils.stringifyException(tte));

}

snapshotActiveConf();

LOG.info("Connected to metastore.");

}
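Stripped of the Thrift and SASL details, open() is a nested loop: every round tries each URI in order, and a failed round is followed by a pause. The sketch below shows just that control flow. RetryConnectSketch and the tryOpen predicate are hypothetical names standing in for the real transport.open() call.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;

final class RetryConnectSketch {
    /**
     * Tries every endpoint once per round, for at most `retries` rounds.
     * Returns the first endpoint that connects, or null if none did.
     */
    static String connect(List<String> endpoints, int retries, long delayMillis,
                          Predicate<String> tryOpen) {
        for (int attempt = 0; attempt < retries; attempt++) {
            for (String endpoint : endpoints) {
                if (tryOpen.test(endpoint)) {
                    return endpoint;
                }
            }
            // Wait before launching the next round of connection attempts.
            if (delayMillis > 0) {
                try {
                    Thread.sleep(delayMillis);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return null;
                }
            }
        }
        return null;
    }

    public static void main(String[] args) {
        List<String> uris = Arrays.asList("thrift://ms1:9083", "thrift://ms2:9083");
        // Pretend that only the second metastore is reachable.
        String connected = connect(uris, 3, 0L, uri -> uri.contains("ms2"));
        System.out.println("connected to " + connected);
    }
}
```

One design consequence worth noting: the URIs are always tried in the configured order, so the first entry in hive.metastore.uris takes the connections as long as it is reachable.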

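Let's set the details of the protocols aside for now. The code shows that the client reaches the HiveMetaStore server through ThriftHiveMetastore. It is a class, but it nests the interfaces Iface and AsyncIface, which makes it easy to inherit from and implement. Next, let's look at how HMSHandler is initialized. In local mode, newRetryingHMSHandler is called directly, which initializes the HMSHandler right away. The code: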

public HMSHandler(String name, HiveConf conf, boolean init) throws MetaException {

super(name);

hiveConf = conf;

if (init) {

init();

}

}

Now let's look at its init method:

public void init() throws MetaException {

// class name of the implementation that talks to the database: ObjectStore, the RawStore implementation that handles the interaction between JDO and the database

rawStoreClassName = hiveConf.getVar(HiveConf.ConfVars.METASTORE_RAW_STORE_IMPL);

// load the init listeners from hive.metastore.init.hooks; you can implement and plug in your own

initListeners = MetaStoreUtils.getMetaStoreListeners(

MetaStoreInitListener.class, hiveConf,

hiveConf.getVar(HiveConf.ConfVars.METASTORE_INIT_HOOKS));

for (MetaStoreInitListener singleInitListener: initListeners) {

MetaStoreInitContext context = new MetaStoreInitContext();

singleInitListener.onInit(context);

}

// initialize the alter handler implementation

String alterHandlerName = hiveConf.get("hive.metastore.alter.impl",

HiveAlterHandler.class.getName());

alterHandler = (AlterHandler) ReflectionUtils.newInstance(MetaStoreUtils.getClass(

alterHandlerName), hiveConf);

// initialize the warehouse

wh = new Warehouse(hiveConf);

// create the default database, the default roles, and the admin users, and record currentUrl

synchronized (HMSHandler.class) {

if (currentUrl == null || !currentUrl.equals(MetaStoreInit.getConnectionURL(hiveConf))) {

createDefaultDB();

createDefaultRoles();

addAdminUsers();

currentUrl = MetaStoreInit.getConnectionURL(hiveConf);

}

}

// initialize metrics

if (hiveConf.getBoolean("hive.metastore.metrics.enabled", false)) {

try {

Metrics.init();

} catch (Exception e) {

// log exception, but ignore inability to start

LOG.error("error in Metrics init: " + e.getClass().getName() + " "

+ e.getMessage(), e);

}

}

// initialize the pre-event listeners, the event listeners, and the end-function listeners

preListeners = MetaStoreUtils.getMetaStoreListeners(MetaStorePreEventListener.class,

hiveConf,

hiveConf.getVar(HiveConf.ConfVars.METASTORE_PRE_EVENT_LISTENERS));

listeners = MetaStoreUtils.getMetaStoreListeners(MetaStoreEventListener.class, hiveConf,

hiveConf.getVar(HiveConf.ConfVars.METASTORE_EVENT_LISTENERS));

listeners.add(new SessionPropertiesListener(hiveConf));

endFunctionListeners = MetaStoreUtils.getMetaStoreListeners(

MetaStoreEndFunctionListener.class, hiveConf,

hiveConf.getVar(HiveConf.ConfVars.METASTORE_END_FUNCTION_LISTENERS));

// regex used to validate partition names; configurable through hive.metastore.partition.name.whitelist.pattern

String partitionValidationRegex =

hiveConf.getVar(HiveConf.ConfVars.METASTORE_PARTITION_NAME_WHITELIST_PATTERN);

if (partitionValidationRegex != null && !partitionValidationRegex.isEmpty()) {

partitionValidationPattern = Pattern.compile(partitionValidationRegex);

} else {

partitionValidationPattern = null;

}

long cleanFreq = hiveConf.getTimeVar(ConfVars.METASTORE_EVENT_CLEAN_FREQ, TimeUnit.MILLISECONDS);

if (cleanFreq > 0) {

// In default config, there is no timer.

Timer cleaner = new Timer("Metastore Events Cleaner Thread", true);

cleaner.schedule(new EventCleanerTask(this), cleanFreq, cleanFreq);

}

}

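So init sets up the RawStore implementation class that talks to the database, the Warehouse that performs the physical file operations, and the events and listeners. After that, the metadata life-cycle methods are called through the interface to operate on tables.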
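The listener loading in init follows a common plugin pattern: read a comma-separated list of class names from the configuration and instantiate each one through reflection. A rough sketch of that pattern is below. All names are hypothetical, and it assumes listeners with a no-argument constructor, which is a simplification of whatever MetaStoreUtils.getMetaStoreListeners actually requires.

```java
import java.util.ArrayList;
import java.util.List;

final class ListenerLoaderSketch {
    /** Hypothetical, minimal counterpart of an init listener. */
    public interface InitListener {
        void onInit(List<String> context);
    }

    /** Example listener that records its own invocation in the context. */
    public static final class LoggingListener implements InitListener {
        public LoggingListener() {
        }

        @Override
        public void onInit(List<String> context) {
            context.add("LoggingListener");
        }
    }

    /** Instantiates every class named in a comma-separated list. */
    static List<InitListener> load(String classNames) {
        List<InitListener> listeners = new ArrayList<>();
        if (classNames == null || classNames.trim().isEmpty()) {
            return listeners; // nothing configured
        }
        for (String name : classNames.split(",")) {
            try {
                Class<?> cls = Class.forName(name.trim());
                listeners.add((InitListener) cls.getDeclaredConstructor().newInstance());
            } catch (ReflectiveOperationException e) {
                throw new IllegalStateException("Failed to instantiate listener " + name, e);
            }
        }
        return listeners;
    }

    public static void main(String[] args) {
        List<String> context = new ArrayList<>();
        for (InitListener listener : load(LoggingListener.class.getName())) {
            listener.onInit(context);
        }
        System.out.println("listeners fired: " + context);
    }
}
```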

3. createTable

Let's start with the createTable method. The code:

public void createTable(String tableName, List<String> columns, List<String> partCols,

Class<? extends InputFormat> fileInputFormat,

Class<?> fileOutputFormat, int bucketCount, List<String> bucketCols,

Map<String, String> parameters) throws HiveException {

if (columns == null) {

throw new HiveException("columns not specified for table " + tableName);

}

Table tbl = newTable(tableName);

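// storage descriptor (SD) attributes: set the table's input and output format class names; at query time the execution engine fetches these names and loads the classes through reflection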

tbl.setInputFormatClass(fileInputFormat.getName());

tbl.setOutputFormatClass(fileOutputFormat.getName());

// build a FieldSchema (column name and type) for each column and add it to the SD's column list

for (String col : columns) {

FieldSchema field = new FieldSchema(col, STRING_TYPE_NAME, "default");

tbl.getCols().add(field);

}

// if partition columns were given at creation time (say, dt), record them as the table's partition keys, which are eventually persisted in the metastore

if (partCols != null) {

for (String partCol : partCols) {

FieldSchema part = new FieldSchema();

part.setName(partCol);

part.setType(STRING_TYPE_NAME); // default partition key

tbl.getPartCols().add(part);

}

}

// set the serialization library (SerDe)

tbl.setSerializationLib(LazySimpleSerDe.class.getName());

// set the bucketing information

tbl.setNumBuckets(bucketCount);

tbl.setBucketCols(bucketCols);

// set any extra key-value parameters on the table

if (parameters != null) {

tbl.setParamters(parameters);

}

createTable(tbl);

}

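As the code shows, Hive builds a Table object. You can treat it as a model that carries nearly all the attributes of the tables that hang off the main Tbls table through the table_id foreign key (see the Hive metastore table schema post for details). Once it is populated, createTable is called again, and the next call looks like this: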

public void createTable(Table tbl, boolean ifNotExists) throws HiveException {

try {

// if no database name is set, fall back to the current database in SessionState (to be safe)

if (tbl.getDbName() == null || "".equals(tbl.getDbName().trim())) {

tbl.setDbName(SessionState.get().getCurrentDatabase());

}

// if no columns were supplied, derive the fields from the table's deserializer

if (tbl.getCols().size() == 0 || tbl.getSd().getColsSize() == 0) {

tbl.setFields(MetaStoreUtils.getFieldsFromDeserializer(tbl.getTableName(),

tbl.getDeserializer()));

}

// validate the table's input format, output format, and column attributes

tbl.checkValidity();

if (tbl.getParameters() != null) {

tbl.getParameters().remove(hive_metastoreConstants.DDL_TIME);

}

org.apache.hadoop.hive.metastore.api.Table tTbl = tbl.getTTable();

// privileges are set up here, based on the hive.security.authorization.createtable.user.grants,

// hive.security.authorization.createtable.group.grants, and hive.security.authorization.createtable.role.grants settings, which come from Hive's own notions of user, group, and role

PrincipalPrivilegeSet principalPrivs = new PrincipalPrivilegeSet();

SessionState ss = SessionState.get();

if (ss != null) {

CreateTableAutomaticGrant grants = ss.getCreateTableGrants();

if (grants != null) {

principalPrivs.setUserPrivileges(grants.getUserGrants());

principalPrivs.setGroupPrivileges(grants.getGroupGrants());

principalPrivs.setRolePrivileges(grants.getRoleGrants());

tTbl.setPrivileges(principalPrivs);

}

}

// create the table on the server through the client connection

getMSC().createTable(tTbl);

} catch (AlreadyExistsException e) {

if (!ifNotExists) {

throw new HiveException(e);

}

} catch (Exception e) {

throw new HiveException(e);

}

}

Next, let's look at the createTable method in HiveMetaStoreClient that receives this call:

public void createTable(Table tbl, EnvironmentContext envContext) throws AlreadyExistsException,

InvalidObjectException, MetaException, NoSuchObjectException, TException {

// get the HiveMetaHook for the table so that its storage engine can prepare and validate before creation

HiveMetaHook hook = getHook(tbl);

if (hook != null) {

hook.preCreateTable(tbl);

}

boolean success = false;

try {

// then call HiveMetaStore so the server creates the table in the database

create_table_with_environment_context(tbl, envContext);

if (hook != null) {

hook.commitCreateTable(tbl);

}

success = true;

} finally {

// roll back if creation failed

if (!success && (hook != null)) {

hook.rollbackCreateTable(tbl);

}

}

}

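A quick word on what the hook does. HiveMetaHook is an interface whose methods include preCreateTable, rollbackCreateTable, preDropTable, and so on. Its implementations handle storage-specific preparation and validation before creation, as well as actions such as rollback on failure. The code: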

public interface HiveMetaHook {

/**

* Called before a new table definition is added to the metastore

* during CREATE TABLE.

*

* @param table new table definition

*/

public void preCreateTable(Table table)

throws MetaException;

/**

* Called after failure adding a new table definition to the metastore

* during CREATE TABLE.

*

* @param table new table definition

*/

public void rollbackCreateTable(Table table)

throws MetaException;

public void preDropTable(Table table)

throws MetaException;

...............................
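The way the client drives the hook (pre, then commit on success, rollback on failure) can be reproduced in a few lines. This is a sketch with invented names: TableHook is a cut-down, hypothetical counterpart of HiveMetaHook, and serverCall stands in for the call to the metastore server.

```java
import java.util.ArrayList;
import java.util.List;

final class HookLifecycleSketch {
    /** Hypothetical, trimmed-down counterpart of HiveMetaHook for CREATE TABLE only. */
    public interface TableHook {
        void preCreate(String table);
        void commitCreate(String table);
        void rollbackCreate(String table);
    }

    /** Records the order of callbacks so the flow can be inspected. */
    public static final class RecordingHook implements TableHook {
        public final List<String> calls = new ArrayList<>();

        @Override public void preCreate(String table) { calls.add("pre"); }
        @Override public void commitCreate(String table) { calls.add("commit"); }
        @Override public void rollbackCreate(String table) { calls.add("rollback"); }
    }

    /** Runs serverCall between the hook callbacks; rollback fires only if the call fails. */
    static void createWithHook(String table, TableHook hook, Runnable serverCall) {
        if (hook != null) {
            hook.preCreate(table);
        }
        boolean success = false;
        try {
            serverCall.run();
            if (hook != null) {
                hook.commitCreate(table);
            }
            success = true;
        } finally {
            if (!success && hook != null) {
                hook.rollbackCreate(table);
            }
        }
    }

    public static void main(String[] args) {
        RecordingHook ok = new RecordingHook();
        createWithHook("t1", ok, () -> { });
        System.out.println("success path: " + ok.calls);

        RecordingHook failed = new RecordingHook();
        try {
            createWithHook("t1", failed, () -> { throw new IllegalStateException("server error"); });
        } catch (IllegalStateException expected) {
            // the failure still propagates to the caller after rollback
        }
        System.out.println("failure path: " + failed.calls);
    }
}
```

Using a success flag with finally, instead of catching the exception, lets the original exception propagate unchanged while still guaranteeing the rollback callback.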

Next, let's look at the createTable path on the HiveMetaStore server:

private void create_table_core(final RawStore ms, final Table tbl,

final EnvironmentContext envContext)

throws AlreadyExistsException, MetaException,

InvalidObjectException, NoSuchObjectException {

// validate the table name against a regex to reject illegal characters

if (!MetaStoreUtils.validateName(tbl.getTableName())) {

throw new InvalidObjectException(tbl.getTableName()

+ " is not a valid object name");

}

// validation: check the column names and column types, then the partition key names

String validate = MetaStoreUtils.validateTblColumns(tbl.getSd().getCols());

if (validate != null) {

throw new InvalidObjectException("Invalid column " + validate);

}

if (tbl.getPartitionKeys() != null) {

validate = MetaStoreUtils.validateTblColumns(tbl.getPartitionKeys());

if (validate != null) {

throw new InvalidObjectException("Invalid partition column " + validate);

}

}

SkewedInfo skew = tbl.getSd().getSkewedInfo();

if (skew != null) {

validate = MetaStoreUtils.validateSkewedColNames(skew.getSkewedColNames());

if (validate != null) {

throw new InvalidObjectException("Invalid skew column " + validate);

}

validate = MetaStoreUtils.validateSkewedColNamesSubsetCol(

skew.getSkewedColNames(), tbl.getSd().getCols());

if (validate != null) {

throw new InvalidObjectException("Invalid skew column " + validate);

}

}

Path tblPath = null;

boolean success = false, madeDir = false;

try {

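// fire the pre-create event; listeners already implemented in the metastore include DummyPreListener, AuthorizationPreEventListener, AlternateFailurePreListener, and MetaDataExportListener

// what these listeners are for will be explained in detail when we analyze the metastore's design patterns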

firePreEvent(new PreCreateTableEvent(tbl, this));

// open the transaction

ms.openTransaction();

// throw if the database does not exist

Database db = ms.getDatabase(tbl.getDbName());

if (db == null) {

throw new NoSuchObjectException("The database " + tbl.getDbName() + " does not exist");

}

// check whether the table already exists in this database

if (is_table_exists(ms, tbl.getDbName(), tbl.getTableName())) {

throw new AlreadyExistsException("Table " + tbl.getTableName()

+ " already exists");

}

// if the table is not a view, build the full table path -> fs.getUri().getScheme() + fs.getUri().getAuthority() + path.toUri().getPath()

if (!TableType.VIRTUAL_VIEW.toString().equals(tbl.getTableType())) {

if (tbl.getSd().getLocation() == null

|| tbl.getSd().getLocation().isEmpty()) {

tblPath = wh.getTablePath(

ms.getDatabase(tbl.getDbName()), tbl.getTableName());

} else {

// a location was specified for a table that is neither external nor backed by a storage handler: only log a warning

if (!isExternal(tbl) && !MetaStoreUtils.isNonNativeTable(tbl)) {

LOG.warn("Location: " + tbl.getSd().getLocation()

+ " specified for non-external table:" + tbl.getTableName());

}

tblPath = wh.getDnsPath(new Path(tbl.getSd().getLocation()));

}

// store the resolved tblPath as the SD location

tbl.getSd().setLocation(tblPath.toString());

}

// create the table directory

if (tblPath != null) {

if (!wh.isDir(tblPath)) {

if (!wh.mkdirs(tblPath, true)) {

throw new MetaException(tblPath

+ " is not a directory or unable to create one");

}

madeDir = true;

}

}

// gated by the hive.stats.autogather setting

if (HiveConf.getBoolVar(hiveConf, HiveConf.ConfVars.HIVESTATSAUTOGATHER) &&

!MetaStoreUtils.isView(tbl)) {

if (tbl.getPartitionKeysSize() == 0) { // Unpartitioned table

MetaStoreUtils.updateUnpartitionedTableStatsFast(db, tbl, wh, madeDir);

} else { // Partitioned table with no partitions.

MetaStoreUtils.updateUnpartitionedTableStatsFast(db, tbl, wh, true);

}

}

// set create time

long time = System.currentTimeMillis() / 1000;

tbl.setCreateTime((int) time);

if (tbl.getParameters() == null ||

tbl.getParameters().get(hive_metastoreConstants.DDL_TIME) == null) {

tbl.putToParameters(hive_metastoreConstants.DDL_TIME, Long.toString(time));

}

// perform the createTable database operation

ms.createTable(tbl);

success = ms.commitTransaction();

} finally {

if (!success) {

ms.rollbackTransaction();

// if the table was not created for some reason, delete the table directory that was made

if (madeDir) {

wh.deleteDir(tblPath, true);

}

}

// notify the listeners that the create has finished, for example to send a notification

for (MetaStoreEventListener listener : listeners) {

CreateTableEvent createTableEvent =

new CreateTableEvent(tbl, success, this);

createTableEvent.setEnvironmentContext(envContext);

listener.onCreateTable(createTableEvent);

}

}

}

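The listeners will be covered in detail later. Let's keep drilling straight down into ms.createTable. ms is a RawStore interface object, and this interface declares the unified method calls for the whole life cycle. Part of the code: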

public abstract Database getDatabase(String name)

throws NoSuchObjectException;

public abstract boolean dropDatabase(String dbname) throws NoSuchObjectException, MetaException;

public abstract boolean alterDatabase(String dbname, Database db) throws NoSuchObjectException, MetaException;

public abstract List<String> getDatabases(String pattern) throws MetaException;

public abstract List<String> getAllDatabases() throws MetaException;

public abstract boolean createType(Type type);

public abstract Type getType(String typeName);

public abstract boolean dropType(String typeName);

public abstract void createTable(Table tbl) throws InvalidObjectException,

MetaException;

public abstract boolean dropTable(String dbName, String tableName)

throws MetaException, NoSuchObjectException, InvalidObjectException, InvalidInputException;

public abstract Table getTable(String dbName, String tableName)

throws MetaException;

..................

Now let's see how this is implemented. First, the Hive metastore calls getMS() to get the RawStore implementation held in a ThreadLocal:

public RawStore getMS() throws MetaException {

// get the RawStore already bound to this thread

RawStore ms = threadLocalMS.get();

// if there is none, create the implementation and bind it to the thread

if (ms == null) {

ms = newRawStore();

ms.verifySchema();

threadLocalMS.set(ms);

ms = threadLocalMS.get();

}

return ms;

}

At this point, you probably want to know what newRawStore does. It ends up in RawStoreProxy.getProxy, so let's continue:

public static RawStore getProxy(HiveConf hiveConf, Configuration conf, String rawStoreClassName,

int id) throws MetaException {

// load baseClass through reflection, then create a proxy for the implementation

Class<? extends RawStore> baseClass = (Class<? extends RawStore>) MetaStoreUtils.getClass(

rawStoreClassName);

RawStoreProxy handler = new RawStoreProxy(hiveConf, conf, baseClass, id);

// Look for interfaces on both the class and all base classes.

return (RawStore) Proxy.newProxyInstance(RawStoreProxy.class.getClassLoader(),

getAllInterfaces(baseClass), handler);

}
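getProxy relies on the JDK's dynamic proxy mechanism: an InvocationHandler sits in front of the real object and sees every interface call. The sketch below shows the mechanism on a toy interface. Store, InMemoryStore, and wrap are hypothetical names, and the handler here only logs method names, which is not a claim about what RawStoreProxy does inside invoke.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

final class StoreProxySketch {
    /** Hypothetical, tiny counterpart of the RawStore interface. */
    public interface Store {
        String getTable(String db, String name);
    }

    /** Hypothetical implementation playing the role of ObjectStore. */
    public static final class InMemoryStore implements Store {
        @Override
        public String getTable(String db, String name) {
            return db + "." + name;
        }
    }

    /** Wraps target in a dynamic proxy that records each method name before delegating. */
    static Store wrap(Store target, List<String> log) {
        InvocationHandler handler = (proxy, method, args) -> {
            log.add(method.getName());
            try {
                return method.invoke(target, args);
            } catch (InvocationTargetException e) {
                throw e.getCause(); // surface the real exception, not the reflection wrapper
            }
        };
        return (Store) Proxy.newProxyInstance(
                Store.class.getClassLoader(), new Class<?>[] {Store.class}, handler);
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        Store store = wrap(new InMemoryStore(), log);
        System.out.println(store.getTable("default", "t1"));
        System.out.println("intercepted: " + log);
    }
}
```

The benefit of this design is that cross-cutting behavior can be added around every store method in one place, without touching the implementation class.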

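So where does rawStoreClassName come from? It is loaded when HiveMetaStore is initialized, from the METASTORE_RAW_STORE_IMPL setting in HiveConf, which names the RawStore implementation class, ObjectStore. Now that the RawStore implementation has been created, let's dig into ObjectStore: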

@Override

public void createTable(Table tbl) throws InvalidObjectException, MetaException {

boolean commited = false;

try {

// open the transaction

openTransaction();

// the database and table are validated once more here (code omitted); why a second check is needed deserves more thought

MTable mtbl = convertToMTable(tbl);

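// pm is the JDO PersistenceManager initialized when ObjectStore was created; this is where the table object is persisted. How JDO model objects interact with the database is worth studying on its own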

pm.makePersistent(mtbl);

// build the user, group, and role privilege objects and persist them

PrincipalPrivilegeSet principalPrivs = tbl.getPrivileges();

List<Object> toPersistPrivObjs = new ArrayList<Object>();

if (principalPrivs != null) {

int now = (int)(System.currentTimeMillis()/1000);

Map<String, List<PrivilegeGrantInfo>> userPrivs = principalPrivs.getUserPrivileges();

putPersistentPrivObjects(mtbl, toPersistPrivObjs, now, userPrivs, PrincipalType.USER);

Map<String, List<PrivilegeGrantInfo>> groupPrivs = principalPrivs.getGroupPrivileges();

putPersistentPrivObjects(mtbl, toPersistPrivObjs, now, groupPrivs, PrincipalType.GROUP);

Map<String, List<PrivilegeGrantInfo>> rolePrivs = principalPrivs.getRolePrivileges();

putPersistentPrivObjects(mtbl, toPersistPrivObjs, now, rolePrivs, PrincipalType.ROLE);

}

pm.makePersistentAll(toPersistPrivObjs);

commited = commitTransaction();

} finally {

// roll back on failure

if (!commited) {

rollbackTransaction();

}

}

}

4. dropTable

Without further ado, here is the code from the Hive class:

public void dropTable(String tableName, boolean ifPurge) throws HiveException {

// Hive resolves the name into an array holding the database name and the table name

String[] names = Utilities.getDbTableName(tableName);

dropTable(names[0], names[1], true, true, ifPurge);

}

Why is this needed? Because the SQL statement may be either drop table dbName.tableName or drop table tableName. This step resolves the database and table names, and for drop table tableName it takes the database name from the current session:

public static String[] getDbTableName(String dbtable) throws SemanticException {

// get the database name from the current session

return getDbTableName(SessionState.get().getCurrentDatabase(), dbtable);

}

public static String[] getDbTableName(String defaultDb, String dbtable) throws SemanticException {

if (dbtable == null) {

return new String[2];

}

String[] names = dbtable.split("\\.");

switch (names.length) {

case 2:

return names;

// only a table name was given: pair it with the default database

case 1:

return new String [] {defaultDb, dbtable};

default:

throw new SemanticException(ErrorMsg.INVALID_TABLE_NAME, dbtable);

}

}
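The name resolution above is small enough to run on its own. Here is a standalone sketch of the same logic. DbTableNameSketch is a made-up class name, and it throws IllegalArgumentException in place of Hive's SemanticException so that it has no Hive dependency.

```java
import java.util.Arrays;

final class DbTableNameSketch {
    /** Resolves "db.table" or "table" into a {db, table} pair. */
    static String[] split(String defaultDb, String dbtable) {
        if (dbtable == null) {
            return new String[2];
        }
        String[] names = dbtable.split("\\.");
        switch (names.length) {
            case 2:
                return names; // already qualified
            case 1:
                return new String[] {defaultDb, dbtable}; // fall back to the session database
            default:
                throw new IllegalArgumentException("Invalid table name " + dbtable);
        }
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(split("default", "sales.orders")));
        System.out.println(Arrays.toString(split("default", "orders")));
    }
}
```

A name with more than one dot, such as a.b.c, falls into the default branch and is rejected.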

Then dropTable in HiveMetaStoreClient is called through getMSC():

public void dropTable(String dbname, String name, boolean deleteData,

boolean ignoreUnknownTab, EnvironmentContext envContext) throws MetaException, TException,

NoSuchObjectException, UnsupportedOperationException {

Table tbl;

try {

// fetch the whole Table object by database name and table name, that is, all the metadata stored for this table

tbl = getTable(dbname, name);

} catch (NoSuchObjectException e) {

if (!ignoreUnknownTab) {

throw e;

}

return;

}

// check the table type: an index table cannot be dropped this way

if (isIndexTable(tbl)) {

throw new UnsupportedOperationException("Cannot drop index tables");

}

// same getHook as in create: get the hook for this table's storage

HiveMetaHook hook = getHook(tbl);

if (hook != null) {

hook.preDropTable(tbl);

}

boolean success = false;

try {

// call dropTable on the HiveMetaStore server

drop_table_with_environment_context(dbname, name, deleteData, envContext);

if (hook != null) {

hook.commitDropTable(tbl, deleteData);

}

success=true;

} catch (NoSuchObjectException e) {

if (!ignoreUnknownTab) {

throw e;

}

} finally {

if (!success && (hook != null)) {

hook.rollbackDropTable(tbl);

}

}

}

Now let's focus on what the HiveMetaStore server does:

private boolean drop_table_core(final RawStore ms, final String dbname, final String name,

final boolean deleteData, final EnvironmentContext envContext,

final String indexName) throws NoSuchObjectException,

MetaException, IOException, InvalidObjectException, InvalidInputException {

boolean success = false;

boolean isExternal = false;

Path tblPath = null;

List<Path> partPaths = null;

Table tbl = null;

boolean ifPurge = false;

try {

ms.openTransaction();

// fetch the whole Table object

tbl = get_table_core(dbname, name);

if (tbl == null) {

throw new NoSuchObjectException(name + " doesn't exist");

}

// a missing SD means the table metadata is corrupted

if (tbl.getSd() == null) {

throw new MetaException("Table metadata is corrupted");

}

ifPurge = isMustPurge(envContext, tbl);

firePreEvent(new PreDropTableEvent(tbl, deleteData, this));

// an index table cannot be dropped through drop table; it has to go through drop index

boolean isIndexTable = isIndexTable(tbl);

if (indexName == null && isIndexTable) {

throw new RuntimeException(

"The table " + name + " is an index table. Please do drop index instead.");

}

// for a regular table, drop the metadata of all its indexes first

if (!isIndexTable) {

try {

List<Index> indexes = ms.getIndexes(dbname, name, Short.MAX_VALUE);

while (indexes != null && indexes.size() > 0) {

for (Index idx : indexes) {

this.drop_index_by_name(dbname, name, idx.getIndexName(), true);

}

indexes = ms.getIndexes(dbname, name, Short.MAX_VALUE);

}

} catch (TException e) {

throw new MetaException(e.getMessage());

}

}

// check whether this is an external table

isExternal = isExternal(tbl);

if (tbl.getSd().getLocation() != null) {

tblPath = new Path(tbl.getSd().getLocation());

if (!wh.isWritable(tblPath.getParent())) {

String target = indexName == null ? "Table" : "Index table";

throw new MetaException(target + " metadata not deleted since " +

tblPath.getParent() + " is not writable by " +

hiveConf.getUser());

}

}

checkTrashPurgeCombination(tblPath, dbname + "." + name, ifPurge);

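// drop the partitions and collect their location paths. One odd thing: instead of taking the Table object directly, the method fetches the table again, and it also checks read/write permission on the parent directories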

partPaths = dropPartitionsAndGetLocations(ms, dbname, name, tblPath,

tbl.getPartitionKeys(), deleteData && !isExternal);

// call ObjectStore to delete the metadata

if (!ms.dropTable(dbname, name)) {

String tableName = dbname + "." + name;

throw new MetaException(indexName == null ? "Unable to drop table " + tableName:

"Unable to drop index table " + tableName + " for index " + indexName);

}

success = ms.commitTransaction();

} finally {

if (!success) {

ms.rollbackTransaction();

} else if (deleteData && !isExternal) {

// delete the partition data on disk

deletePartitionData(partPaths, ifPurge);

// delete the table directory

deleteTableData(tblPath, ifPurge);

// ok even if the data is not deleted

}

// notify the listeners

for (MetaStoreEventListener listener : listeners) {

DropTableEvent dropTableEvent = new DropTableEvent(tbl, success, deleteData, this);

dropTableEvent.setEnvironmentContext(envContext);

listener.onDropTable(dropTableEvent);

}

}

return success;

}

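Digging into dropTable in ObjectStore, we find that the whole Table object is fetched yet again by database name and table name before things are deleted one by one. Maybe the code was written by different people, or maybe it is a safety consideration? Many Table objects that could have been passed in through the interface are fetched again. Doesn't that add load on the database? The ObjectStore code: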

public boolean dropTable(String dbName, String tableName) throws MetaException,

NoSuchObjectException, InvalidObjectException, InvalidInputException {

boolean success = false;

try {

openTransaction();

// fetch the table object again

MTable tbl = getMTable(dbName, tableName);

pm.retrieve(tbl);

if (tbl != null) {

// the code below looks up and deletes all privileges

List<MTablePrivilege> tabGrants = listAllTableGrants(dbName, tableName);

if (tabGrants != null && tabGrants.size() > 0) {

pm.deletePersistentAll(tabGrants);

}

List<MTableColumnPrivilege> tblColGrants = listTableAllColumnGrants(dbName,

tableName);

if (tblColGrants != null && tblColGrants.size() > 0) {

pm.deletePersistentAll(tblColGrants);

}

List<MPartitionPrivilege> partGrants = this.listTableAllPartitionGrants(dbName, tableName);

if (partGrants != null && partGrants.size() > 0) {

pm.deletePersistentAll(partGrants);

}

List<MPartitionColumnPrivilege> partColGrants = listTableAllPartitionColumnGrants(dbName,

tableName);

if (partColGrants != null && partColGrants.size() > 0) {

pm.deletePersistentAll(partColGrants);

}

// delete column statistics if present

try {

// delete the column statistics rows

deleteTableColumnStatistics(dbName, tableName, null);

} catch (NoSuchObjectException e) {

LOG.info("Found no table level column statistics associated with db " + dbName +

" table " + tableName + " record to delete");

}

// clean up the column descriptor (MColumnDescriptor) data

preDropStorageDescriptor(tbl.getSd());

// delete the data in all tables related to this Table object

pm.deletePersistentAll(tbl);

}

success = commitTransaction();

} finally {

if (!success) {

rollbackTransaction();

}

}

return success;

}

5. AlterTable

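Next up is AlterTable. It involves more logic because it can touch things like path changes in physical storage, so let's go through it step by step. Again we start from the Hive class: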

public void alterTable(String tblName, Table newTbl, boolean cascade)

throws InvalidOperationException, HiveException {

String[] names = Utilities.getDbTableName(tblName);

try {

// Remove DDL_TIME from the table parameters; the table is being altered, so this timestamp will change

if (newTbl.getParameters() != null) {

newTbl.getParameters().remove(hive_metastoreConstants.DDL_TIME);

}

// Validate dbName, tableName, columns, input and output format classes, etc.; a HiveException is thrown if validation fails

newTbl.checkValidity();

// Call alter_table on the metastore client

getMSC().alter_table(names[0], names[1], newTbl.getTTable(), cascade);

} catch (MetaException e) {

throw new HiveException("Unable to alter table. " + e.getMessage(), e);

} catch (TException e) {

throw new HiveException("Unable to alter table. " + e.getMessage(), e);

}

}
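The first line of alterTable splits the table name into a database part and a table part before anything else happens. A minimal sketch of that kind of split follows. It is an illustration, not the body of `Utilities.getDbTableName`: the class name is hypothetical, and the current database is passed in explicitly here, whereas the call in the article presumably resolves it from the session.

```java
/** Hypothetical helper illustrating a "db.table" split; not Hive's Utilities class. */
final class QualifiedNameSketch {

    /** Returns {db, table}; a bare table name falls back to currentDb. */
    static String[] split(String currentDb, String name) {
        if (name == null || name.isEmpty()) {
            throw new IllegalArgumentException("empty table name");
        }
        String[] parts = name.split("\\.");
        switch (parts.length) {
            case 1:
                return new String[] {currentDb, parts[0]};
            case 2:
                return parts;
            default:
                // More than one dot is not a valid db.table reference in this sketch.
                throw new IllegalArgumentException("invalid table name: " + name);
        }
    }
}
```

The two elements correspond to `names[0]` and `names[1]` in the call to alter_table above.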

HiveMetaStoreClient does no special processing here, so let's go straight to what the HiveMetaStore server does:

private void alter_table_core(final String dbname, final String name, final Table newTable,

final EnvironmentContext envContext, final boolean cascade)

throws InvalidOperationException, MetaException {

startFunction("alter_table", ": db=" + dbname + " tbl=" + name

+ " newtbl=" + newTable.getTableName());

// Set DDL_TIME when the incoming table does not carry one

if (newTable.getParameters() == null ||

newTable.getParameters().get(hive_metastoreConstants.DDL_TIME) == null) {

newTable.putToParameters(hive_metastoreConstants.DDL_TIME, Long.toString(System

.currentTimeMillis() / 1000));

}

boolean success = false;

Exception ex = null;

try {

// Fetch the existing Table object

Table oldt = get_table_core(dbname, name);

// Fire the pre-event

firePreEvent(new PreAlterTableEvent(oldt, newTable, this));

// Perform the actual alterTable; explained in detail below

alterHandler.alterTable(getMS(), wh, dbname, name, newTable, cascade);

success = true;

// Notify the listeners

for (MetaStoreEventListener listener : listeners) {

AlterTableEvent alterTableEvent =

new AlterTableEvent(oldt, newTable, success, this);

alterTableEvent.setEnvironmentContext(envContext);

listener.onAlterTable(alterTableEvent);

}

} catch (NoSuchObjectException e) {

// thrown when the table to be altered does not exist

ex = e;

throw new InvalidOperationException(e.getMessage());

} catch (Exception e) {

ex = e;

if (e instanceof MetaException) {

throw (MetaException) e;

} else if (e instanceof InvalidOperationException) {

throw (InvalidOperationException) e;

} else {

throw newMetaException(e);

}

} finally {

endFunction("alter_table", success, ex, name);

}

}
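The client and the server cooperate on DDL_TIME: the client removes the old value, and the server stamps a new one only when none is present. A minimal sketch of that handshake follows, using plain maps. The class and method names are hypothetical, and the key string is an assumption standing in for `hive_metastoreConstants.DDL_TIME`.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical illustration of the DDL_TIME handshake; not Hive code. */
final class DdlTimeSketch {
    // Assumption: stands in for hive_metastoreConstants.DDL_TIME.
    static final String DDL_TIME = "transient_lastDdlTime";

    /** Client side: drop the stale timestamp so the server issues a fresh one. */
    static void clearOnClient(Map<String, String> params) {
        if (params != null) {
            params.remove(DDL_TIME);
        }
    }

    /** Server side: stamp seconds since the epoch only when no value is present. */
    static Map<String, String> stampOnServer(Map<String, String> params, long nowMillis) {
        Map<String, String> out = (params == null) ? new HashMap<>() : params;
        if (out.get(DDL_TIME) == null) {
            out.put(DDL_TIME, Long.toString(nowMillis / 1000));
        }
        return out;
    }
}
```

If a caller skips the client-side removal, the server keeps whatever value was supplied, which is why the Hive class clears it before calling alter_table.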

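Now let's focus on what alterHandler actually does. First, a brief note on its initialization: during HiveMetaStore init, the class name is read from the hive.metastore.alter.impl parameter, namely the name of HiveAlterHandler. Let's look at its alterTable implementation. Brace yourself :)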

public void alterTable(RawStore msdb, Warehouse wh, String dbname,

String name, Table newt, boolean cascade) throws InvalidOperationException, MetaException {

if (newt == null) {

throw new InvalidOperationException("New table is invalid: " + newt);

}

// Validate the new table name

if (!MetaStoreUtils.validateName(newt.getTableName())) {

throw new InvalidOperationException(newt.getTableName()

+ " is not a valid object name");

}

// Validate the names and types of the new columns

String validate = MetaStoreUtils.validateTblColumns(newt.getSd().getCols());

if (validate != null) {

throw new InvalidOperationException("Invalid column " + validate);

}

Path srcPath = null;

FileSystem srcFs = null;

Path destPath = null;

FileSystem destFs = null;

boolean success = false;

boolean moveData = false;

boolean rename = false;

Table oldt = null;

List<ObjectPair<Partition, String>> altps = new ArrayList<ObjectPair<Partition, String>>();

try {

msdb.openTransaction();

// Names are lower-cased directly here, another hint that this code was not written by one person

name = name.toLowerCase();

dbname = dbname.toLowerCase();

// If the table or database name changed, check that no table with the new name already exists

if (!newt.getTableName().equalsIgnoreCase(name)

|| !newt.getDbName().equalsIgnoreCase(dbname)) {

if (msdb.getTable(newt.getDbName(), newt.getTableName()) != null) {

throw new InvalidOperationException("new table " + newt.getDbName()

+ "." + newt.getTableName() + " already exists");

}

rename = true;

}

// Fetch the old table object

oldt = msdb.getTable(dbname, name);

if (oldt == null) {

throw new InvalidOperationException("table " + newt.getDbName() + "."

+ newt.getTableName() + " doesn't exist");

}

// On alter table, read METASTORE_DISALLOW_INCOMPATIBLE_COL_TYPE_CHANGES; when it is true, incompatible column type changes are rejected with an exception. The fallback passed here is false

if (HiveConf.getBoolVar(hiveConf,

HiveConf.ConfVars.METASTORE_DISALLOW_INCOMPATIBLE_COL_TYPE_CHANGES,

false)) {

// Throws InvalidOperationException if the new column types are not

// compatible with the current column types.

MetaStoreUtils.throwExceptionIfIncompatibleColTypeChange(

oldt.getSd().getCols(), newt.getSd().getCols());

}

// cascade is passed down from the Hive alterTable call, i.e. it is set by the calling engine; it decides whether partition metadata must be altered as well

if (cascade) {

// Check whether the new columns match the old ones; a mismatch means columns were added or removed

if(MetaStoreUtils.isCascadeNeededInAlterTable(oldt, newt)) {

// Fetch all partitions of the table by dbName and tableName

List<Partition> parts = msdb.getPartitions(dbname, name, -1);

for (Partition part : parts) {

List<FieldSchema> oldCols = part.getSd().getCols();

part.getSd().setCols(newt.getSd().getCols());

String oldPartName = Warehouse.makePartName(oldt.getPartitionKeys(), part.getValues());

// If the columns differ, drop the existing column statistics

updatePartColumnStatsForAlterColumns(msdb, part, oldPartName, part.getValues(), oldCols, part);

// Update the partition's metadata

msdb.alterPartition(dbname, name, part.getValues(), part);

}

} else {

LOG.warn("Alter table does not cascade changes to its partitions.");

}

}

// Check whether the partition keys changed, i.e. partition column names such as dt or hour

boolean partKeysPartiallyEqual = checkPartialPartKeysEqual(oldt.getPartitionKeys(),

newt.getPartitionKeys());

// If the existing table is not a view and the old and new partition keys differ, raise an error

if(!oldt.getTableType().equals(TableType.VIRTUAL_VIEW.toString())){

if (oldt.getPartitionKeys().size() != newt.getPartitionKeys().size()

|| !partKeysPartiallyEqual) {

throw new InvalidOperationException(

"partition keys can not be changed.");

}

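}

// This is a rename, the table is not a view, the location is unchanged or the new location is empty, and the existing table is not external: the user wants the data moved to the location matching the new name, i.e. an alter table rename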

if (rename

&& !oldt.getTableType().equals(TableType.VIRTUAL_VIEW.toString())

&& (oldt.getSd().getLocation().compareTo(newt.getSd().getLocation()) == 0

|| StringUtils.isEmpty(newt.getSd().getLocation()))

&& !MetaStoreUtils.isExternalTable(oldt)) {

// Source path: the old table's location

srcPath = new Path(oldt.getSd().getLocation());

srcFs = wh.getFs(srcPath);

// that means user is asking metastore to move data to new location

// corresponding to the new name

// get new location

Database db = msdb.getDatabase(newt.getDbName());

Path databasePath = constructRenamedPath(wh.getDatabasePath(db), srcPath);

destPath = new Path(databasePath, newt.getTableName());

destFs = wh.getFs(destPath);

// Set the new table location for the subsequent metadata update

newt.getSd().setLocation(destPath.toString());

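moveData = true;

// Source and destination must be on the same file system; further down, the destination path must not exist yet, because the rename would otherwise overwrite existing data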

if (!FileUtils.equalsFileSystem(srcFs, destFs)) {

throw new InvalidOperationException("table new location " + destPath

+ " is on a different file system than the old location "

+ srcPath + ". This operation is not supported");

}

try {

srcFs.exists(srcPath); // check that src exists and also checks

// permissions necessary

if (destFs.exists(destPath)) {

throw new InvalidOperationException("New location for this table "

+ newt.getDbName() + "." + newt.getTableName()

+ " already exists : " + destPath);

}

} catch (IOException e) {

throw new InvalidOperationException("Unable to access new location "

+ destPath + " for table " + newt.getDbName() + "."

+ newt.getTableName());

}

String oldTblLocPath = srcPath.toUri().getPath();

String newTblLocPath = destPath.toUri().getPath();

// Fetch all partitions of the old table

List<Partition> parts = msdb.getPartitions(dbname, name, -1);

for (Partition part : parts) {

String oldPartLoc = part.getSd().getLocation();

// Rewrite each partition location from the old table path to the new one and update the partition metadata

if (oldPartLoc.contains(oldTblLocPath)) {

URI oldUri = new Path(oldPartLoc).toUri();

String newPath = oldUri.getPath().replace(oldTblLocPath, newTblLocPath);

Path newPartLocPath = new Path(oldUri.getScheme(), oldUri.getAuthority(), newPath);

altps.add(ObjectPair.create(part, part.getSd().getLocation()));

part.getSd().setLocation(newPartLocPath.toString());

String oldPartName = Warehouse.makePartName(oldt.getPartitionKeys(), part.getValues());

try {

//existing partition column stats is no longer valid, remove them

msdb.deletePartitionColumnStatistics(dbname, name, oldPartName, part.getValues(), null);

} catch (InvalidInputException iie) {

throw new InvalidOperationException("Unable to update partition stats in table rename." + iie);

}

msdb.alterPartition(dbname, name, part.getValues(), part);

}

}

// Otherwise, update the stats

} else if (MetaStoreUtils.requireCalStats(hiveConf, null, null, newt) &&

(newt.getPartitionKeysSize() == 0)) {

Database db = msdb.getDatabase(newt.getDbName());

// Update table stats. For partitioned table, we update stats in

// alterPartition()

MetaStoreUtils.updateUnpartitionedTableStatsFast(db, newt, wh, false, true);

}

updateTableColumnStatsForAlterTable(msdb, oldt, newt);

// now finally call alter table

msdb.alterTable(dbname, name, newt);

// commit the changes

success = msdb.commitTransaction();

} catch (InvalidObjectException e) {

LOG.debug(e);

throw new InvalidOperationException(

"Unable to change partition or table."

+ " Check metastore logs for detailed stack." + e.getMessage());

} catch (NoSuchObjectException e) {

LOG.debug(e);

throw new InvalidOperationException(

"Unable to change partition or table. Database " + dbname + " does not exist"

+ " Check metastore logs for detailed stack." + e.getMessage());

} finally {

if (!success) {

msdb.rollbackTransaction();

}

if (success && moveData) {

// Move the data on HDFS: rename the old path to the new path via FileSystem.rename

try {

if (srcFs.exists(srcPath) && !srcFs.rename(srcPath, destPath)) {

throw new IOException("Renaming " + srcPath + " to " + destPath + " failed");

}

} catch (IOException e) {

LOG.error("Alter Table operation for " + dbname + "." + name + " failed.", e);

boolean revertMetaDataTransaction = false;

try {

msdb.openTransaction();

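// alterTable is called on the metadata once more here: the transaction above has already committed, so a failed file move is compensated by writing the old table definition back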

msdb.alterTable(newt.getDbName(), newt.getTableName(), oldt);

for (ObjectPair<Partition, String> pair : altps) {

Partition part = pair.getFirst();

part.getSd().setLocation(pair.getSecond());

msdb.alterPartition(newt.getDbName(), name, part.getValues(), part);

}

revertMetaDataTransaction = msdb.commitTransaction();

} catch (Exception e1) {

// we should log this for manual rollback by administrator

LOG.error("Reverting metadata by HDFS operation failure failed During HDFS operation failed", e1);

LOG.error("Table " + Warehouse.getQualifiedName(newt) +

" should be renamed to " + Warehouse.getQualifiedName(oldt));

LOG.error("Table " + Warehouse.getQualifiedName(newt) +

" should have path " + srcPath);

for (ObjectPair<Partition, String> pair : altps) {

LOG.error("Partition " + Warehouse.getQualifiedName(pair.getFirst()) +

" should have path " + pair.getSecond());

}

if (!revertMetaDataTransaction) {

msdb.rollbackTransaction();

}

}

throw new InvalidOperationException("Alter Table operation for " + dbname + "." + name +

" failed to move data due to: '" + getSimpleMessage(e) + "' See hive log file for details.");

}

}

}

if (!success) {

throw new MetaException("Committing the alter table transaction was not successful.");

}

}
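The partition loop in the rename branch boils down to a string substitution on the path component of each partition URI, keeping scheme and authority. Below is a minimal sketch of that rewrite using `java.net.URI` instead of Hadoop's `Path`; the class name and the example host are hypothetical.

```java
import java.net.URI;
import java.net.URISyntaxException;

/** Hypothetical illustration of the partition location rewrite; uses java.net.URI, not Hadoop Path. */
final class PartitionLocationRewrite {

    /** Returns the partition location moved under the new table path, or unchanged if it lies elsewhere. */
    static String rewrite(String oldPartLoc, String oldTblLoc, String newTblLoc) {
        String oldTblPath = URI.create(oldTblLoc).getPath();
        String newTblPath = URI.create(newTblLoc).getPath();
        if (!oldPartLoc.contains(oldTblPath)) {
            // Partition stored outside the table directory: the metastore leaves it alone.
            return oldPartLoc;
        }
        URI oldUri = URI.create(oldPartLoc);
        String newPath = oldUri.getPath().replace(oldTblPath, newTblPath);
        try {
            // Keep scheme and authority, swap only the path.
            return new URI(oldUri.getScheme(), oldUri.getAuthority(), newPath, null, null).toString();
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException(e);
        }
    }
}
```

Note that both `contains` and `replace` work on plain substrings, so a partition whose path merely shares a prefix with the old table path would also match; the sketch keeps that behaviour rather than tightening it.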

6、createPartition

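Before partition data is written, the partition's metadata is registered and its physical path is created (for managed tables). The code in the Hive class is as follows: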

public Partition createPartition(Table tbl, Map<String, String> partSpec) throws HiveException {

try {

// Build the metastore Partition, register it via add_partition, and wrap the result in a new Partition initialized with the Table object

return new Partition(tbl, getMSC().add_partition(

Partition.createMetaPartitionObject(tbl, partSpec, null)));

} catch (Exception e) {

LOG.error(StringUtils.stringifyException(e));

throw new HiveException(e);

}

}

Here createMetaPartitionObject validates the incoming partition spec and wraps it into a metastore Partition object. The code is as follows:

public static org.apache.hadoop.hive.metastore.api.Partition createMetaPartitionObject(

Table tbl, Map<String, String> partSpec, Path location) throws HiveException {

List<String> pvals = new ArrayList<String>();

// Iterate over the partition columns and check that partSpec holds a non-empty value for each one

for (FieldSchema field : tbl.getPartCols()) {

String val = partSpec.get(field.getName());

if (val == null || val.isEmpty()) {

throw new HiveException("partition spec is invalid; field "

+ field.getName() + " does not exist or is empty");

}

pvals.add(val);

}

// Set the attributes: DbName, TableName, partition values, and the sd

org.apache.hadoop.hive.metastore.api.Partition tpart =

new org.apache.hadoop.hive.metastore.api.Partition();

tpart.setDbName(tbl.getDbName());

tpart.setTableName(tbl.getTableName());

tpart.setValues(pvals);

if (!tbl.isView()) {

tpart.setSd(cloneSd(tbl));

tpart.getSd().setLocation((location != null) ? location.toString() : null);

}

return tpart;

}
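The validation loop turns an unordered partSpec map into a value list ordered by the table's partition columns, failing fast on a missing or empty value. Here is a minimal sketch of the same loop with plain strings in place of `FieldSchema` and `HiveException`; the class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Hypothetical illustration of the partSpec check; column names replace FieldSchema objects. */
final class PartSpecSketch {

    /** Returns the partition values in partition-column order, or throws if one is missing or empty. */
    static List<String> orderedValues(List<String> partCols, Map<String, String> partSpec) {
        List<String> pvals = new ArrayList<>();
        for (String col : partCols) {
            String val = partSpec.get(col);
            if (val == null || val.isEmpty()) {
                throw new IllegalArgumentException(
                    "partition spec is invalid; field " + col + " does not exist or is empty");
            }
            pvals.add(val);
        }
        return pvals;
    }
}
```

The order comes from the table definition, not from the map, which is what lets the metastore later pair keys and values positionally.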

HiveMetaStoreClient then deep-copies this object and calls add_partition on the metastore service; we won't go into detail here. Let's look directly at what the server does:

private Partition add_partition_core(final RawStore ms,

final Partition part, final EnvironmentContext envContext)

throws InvalidObjectException, AlreadyExistsException, MetaException, TException {

boolean success = false;

Table tbl = null;

try {

ms.openTransaction();

// Fetch the Table object by DbName and TableName

tbl = ms.getTable(part.getDbName(), part.getTableName());

if (tbl == null) {

throw new InvalidObjectException(

"Unable to add partition because table or database do not exist");

}

// Fire the pre-event

firePreEvent(new PreAddPartitionEvent(tbl, part, this));

// Before creating the partition, check whether it already exists in the metadata

boolean shouldAdd = startAddPartition(ms, part, false);

assert shouldAdd; // start would throw if it already existed here

// Create the partition directory

boolean madeDir = createLocationForAddedPartition(tbl, part);

try {

// Initialize some key-value parameters

initializeAddedPartition(tbl, part, madeDir);

// Write the metadata

success = ms.addPartition(part);

} finally {

if (!success && madeDir) {

// On failure, delete the directory that was just created

wh.deleteDir(new Path(part.getSd().getLocation()), true);

}

}

// we proceed only if we'd actually succeeded anyway, otherwise,

// we'd have thrown an exception

success = success && ms.commitTransaction();

} finally {

if (!success) {

ms.rollbackTransaction();

}

fireMetaStoreAddPartitionEvent(tbl, Arrays.asList(part), envContext, success);

}

return part;

}
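The inner try/finally in add_partition_core removes the partition directory only when the metastore itself created it and the metadata write then failed. A minimal sketch of that cleanup rule on the local file system follows; the class, the in-memory metadata set, and the `failMetadata` switch are all hypothetical.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.HashSet;
import java.util.Set;

/** Hypothetical illustration of "create directory, register metadata, clean up on failure". */
final class AddPartitionSketch {
    private final Set<String> metadata = new HashSet<>();

    boolean isRegistered(String partName) { return metadata.contains(partName); }

    boolean addPartition(Path partDir, String partName, boolean failMetadata) {
        boolean success = false;
        boolean madeDir = false;
        try {
            if (!Files.isDirectory(partDir)) {
                Files.createDirectories(partDir);
                madeDir = true; // remember that this call created the directory
            }
            if (failMetadata) {
                throw new IllegalStateException("simulated metadata failure");
            }
            success = metadata.add(partName);
            return success;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            if (!success && madeDir) {
                // Only remove what this call created; a pre-existing directory is left alone.
                try {
                    Files.deleteIfExists(partDir);
                } catch (IOException ignored) {
                    // best effort, mirroring a cleanup that must not mask the original error
                }
            }
        }
    }

    /** Helper for trying the sketch out: a fresh temporary root directory. */
    static Path newTempRoot() {
        try {
            return Files.createTempDirectory("add-partition-sketch");
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Tracking `madeDir` separately from `success` is the key design choice: it prevents a failed registration from deleting data that was already sitting in a user-supplied location.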

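One design point is worth mentioning here: in the table schema covered earlier, PartName is not stored directly; keys and values are stored separately in kv tables. With that in mind, let's look at createLocationForAddedPartition: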

private boolean createLocationForAddedPartition(

final Table tbl, final Partition part) throws MetaException {

Path partLocation = null;

String partLocationStr = null;

// If sd is not null, take the location it carries as partLocationStr

if (part.getSd() != null) {

partLocationStr = part.getSd().getLocation();

}

// If no location was given, build the partition location

if (partLocationStr == null || partLocationStr.isEmpty()) {

// set default location if not specified and this is

// a physical table partition (not a view)

if (tbl.getSd().getLocation() != null) {

// If the table location is not null, join it with the partition name to form the full partition location

partLocation = new Path(tbl.getSd().getLocation(), Warehouse

.makePartName(tbl.getPartitionKeys(), part.getValues()));

}

} else {

if (tbl.getSd().getLocation() == null) {

throw new MetaException("Cannot specify location for a view partition");

}

partLocation = wh.getDnsPath(new Path(partLocationStr));

}

boolean result = false;

// Store the location in the partition's sd

if (partLocation != null) {

part.getSd().setLocation(partLocation.toString());

// Check to see if the directory already exists before calling

// mkdirs() because if the file system is read-only, mkdirs will

// throw an exception even if the directory already exists.

if (!wh.isDir(partLocation)) {

if (!wh.mkdirs(partLocation, true)) {

throw new MetaException(partLocation

+ " is not a directory or unable to create one");

}

result = true;

}

}

return result;

}
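When no location is supplied, the default partition directory is the table location joined with a name built from the partition keys and values. A simplified sketch of that `key=value/key=value` construction follows. It is an assumption-laden stand-in for `Warehouse.makePartName`: the real method also escapes special characters, which this sketch deliberately skips, and the class name is hypothetical.

```java
import java.util.List;

/** Hypothetical, simplified stand-in for the partition name construction; no character escaping. */
final class PartNameSketch {

    /** Joins keys and values positionally as key=value segments separated by '/'. */
    static String makePartName(List<String> keys, List<String> values) {
        if (keys.isEmpty() || keys.size() != values.size()) {
            throw new IllegalArgumentException("partition keys and values must match in size");
        }
        StringBuilder name = new StringBuilder();
        for (int i = 0; i < keys.size(); i++) {
            if (i > 0) {
                name.append('/');
            }
            name.append(keys.get(i)).append('=').append(values.get(i));
        }
        return name.toString();
    }

    /** Default partition location: table location plus the partition name. */
    static String partitionLocation(String tableLocation, List<String> keys, List<String> values) {
        String base = tableLocation.endsWith("/")
            ? tableLocation.substring(0, tableLocation.length() - 1)
            : tableLocation;
        return base + "/" + makePartName(keys, values);
    }
}
```

Since the name can always be rebuilt from the key list and the value list, the metastore only needs the values on the incoming Partition object to derive the directory.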

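Summary: createPartition validates the partition spec on the client; the server then checks that the partition does not already exist, creates the partition directory, and writes the metadata. If the metadata write fails, the directory it just created is removed.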

7、dropPartition

For dropping a partition we won't start from the Hive class again; let's look directly at what the HiveMetaStore server does:

private boolean drop_partition_common(RawStore ms, String db_name, String tbl_name,

List<String> part_vals, final boolean deleteData, final EnvironmentContext envContext)

throws MetaException, NoSuchObjectException, IOException, InvalidObjectException,

InvalidInputException {

boolean success = false;

Path partPath = null;

Table tbl = null;

Partition part = null;

boolean isArchived = false;

Path archiveParentDir = null;

boolean mustPurge = false;

try {

ms.openTransaction();

// Fetch the partition by dbName, tableName, and part_vals

part = ms.getPartition(db_name, tbl_name, part_vals);

// Fetch the Table object

tbl = get_table_core(db_name, tbl_name);

firePreEvent(new PreDropPartitionEvent(tbl, part, deleteData, this));

mustPurge = isMustPurge(envContext, tbl);

if (part == null) {

throw new NoSuchObjectException("Partition doesn't exist. "

+ part_vals);

}

// I have not looked into archived partitions in depth yet

isArchived = MetaStoreUtils.isArchived(part);

if (isArchived) {

archiveParentDir = MetaStoreUtils.getOriginalLocation(part);

verifyIsWritablePath(archiveParentDir);

checkTrashPurgeCombination(archiveParentDir, db_name + "." + tbl_name + "." + part_vals, mustPurge);

}

if (!ms.dropPartition(db_name, tbl_name, part_vals)) {

throw new MetaException("Unable to drop partition");

}

success = ms.commitTransaction();

if ((part.getSd() != null) && (part.getSd().getLocation() != null)) {

partPath = new Path(part.getSd().getLocation());

verifyIsWritablePath(partPath);

checkTrashPurgeCombination(partPath, db_name + "." + tbl_name + "." + part_vals, mustPurge);

}

} finally {

if (!success) {

ms.rollbackTransaction();

} else if (deleteData && ((partPath != null) || (archiveParentDir != null))) {

if (tbl != null && !isExternal(tbl)) {

if (mustPurge) {

LOG.info("dropPartition() will purge " + partPath + " directly, skipping trash.");

}

else {

LOG.info("dropPartition() will move " + partPath + " to trash-directory.");

}

// Delete the partition data

// Archived partitions have har:/to_har_file as their location.

// The original directory was saved in params

if (isArchived) {

assert (archiveParentDir != null);

wh.deleteDir(archiveParentDir, true, mustPurge);

} else {

assert (partPath != null);

wh.deleteDir(partPath, true, mustPurge);

deleteParentRecursive(partPath.getParent(), part_vals.size() - 1, mustPurge);

}

// ok even if the data is not deleted

}

}

for (MetaStoreEventListener listener : listeners) {

DropPartitionEvent dropPartitionEvent =

new DropPartitionEvent(tbl, part, success, deleteData, this);

dropPartitionEvent.setEnvironmentContext(envContext);

listener.onDropPartition(dropPartitionEvent);

}

}

return true;

}
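After deleting the partition directory, the code calls deleteParentRecursive with `part_vals.size() - 1`, which suggests it walks up through the intermediate partition-key directories and stops before the table directory. The sketch below shows that idea on the local file system, assuming only empty directories are removed; it is not Hive's implementation, and the class and helper names are hypothetical.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.stream.Stream;

/** Hypothetical illustration of cleaning up empty parent directories after a partition drop. */
final class EmptyParentCleanup {

    /** Deletes up to depth empty ancestors, starting at parent; returns how many were removed. */
    static int deleteEmptyParents(Path parent, int depth) {
        int removed = 0;
        try {
            while (depth > 0 && parent != null && Files.isDirectory(parent) && isEmpty(parent)) {
                Files.delete(parent);
                removed++;
                parent = parent.getParent();
                depth--;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return removed;
    }

    private static boolean isEmpty(Path dir) throws IOException {
        try (Stream<Path> entries = Files.list(dir)) {
            return !entries.findAny().isPresent();
        }
    }

    /** Helper for trying the sketch out: creates a temp root with nested directories, returns the deepest one. */
    static Path createTempTree(String first, String... more) {
        try {
            Path leaf = Files.createTempDirectory("parts").resolve(first);
            for (String segment : more) {
                leaf = leaf.resolve(segment);
            }
            return Files.createDirectories(leaf);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

With two partition keys such as dt and hour, the depth is 1, so at most the dt directory is removed and the table directory always survives.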

8、alterPartition

alterPartition involves validation and changes to file directories. Let's start from rename_partition in HiveMetaStore:

private void rename_partition(final String db_name, final String tbl_name,

final List<String> part_vals, final Partition new_part,

final EnvironmentContext envContext)

throws InvalidOperationException, MetaException,

TException {

// Logging

startTableFunction("alter_partition", db_name, tbl_name);

if (LOG.isInfoEnabled()) {

LOG.info("New partition values:" + new_part.getValues());

if (part_vals != null && part_vals.size() > 0) {

LOG.info("Old Partition values:" + part_vals);

}

}

Partition oldPart = null;

Exception ex = null;

try {

firePreEvent(new PreAlterPartitionEvent(db_name, tbl_name, part_vals, new_part, this));

// Validate the characters of the new partition values

if (part_vals != null && !part_vals.isEmpty()) {

MetaStoreUtils.validatePartitionNameCharacters(new_part.getValues(),

partitionValidationPattern);

}

// Call alterHandler.alterPartition to rename the partition physically and update the metadata

oldPart = alterHandler.alterPartition(getMS(), wh, db_name, tbl_name, part_vals, new_part);

// Only fetch the table if we actually have a listener

Table table = null;

for (MetaStoreEventListener listener : listeners) {

if (table == null) {

table = getMS().getTable(db_name, tbl_name);

}

AlterPartitionEvent alterPartitionEvent =

new AlterPartitionEvent(oldPart, new_part, table, true, this);

alterPartitionEvent.setEnvironmentContext(envContext);

listener.onAlterPartition(alterPartitionEvent);

}

} catch (InvalidObjectException e) {

ex = e;

throw new InvalidOperationException(e.getMessage());

} catch (AlreadyExistsException e) {

ex = e;

throw new InvalidOperationException(e.getMessage());

} catch (Exception e) {

ex = e;

if (e instanceof MetaException) {

throw (MetaException) e;

} else if (e instanceof InvalidOperationException) {

throw (InvalidOperationException) e;

} else if (e instanceof TException) {

throw (TException) e;

} else {

throw newMetaException(e);

}

} finally {

endFunction("alter_partition", oldPart != null, ex, tbl_name);

}

return;

}

Let's take a close look at the alterHandler.alterPartition method. Brace yourself:

public Partition alterPartition(final RawStore msdb, Warehouse wh, final String dbname,

final String name, final List<String> part_vals, final Partition new_part)

throws InvalidOperationException, InvalidObjectException, AlreadyExistsException,

MetaException {

boolean success = false;

Path srcPath = null;

Path destPath = null;

FileSystem srcFs = null;

FileSystem destFs = null;

Partition oldPart = null;

String oldPartLoc = null;

String newPartLoc = null;

// Set the DDL time of the new partition if it is missing or zero

if (new_part.getParameters() == null ||

new_part.getParameters().get(hive_metastoreConstants.DDL_TIME) == null ||

Integer.parseInt(new_part.getParameters().get(hive_metastoreConstants.DDL_TIME)) == 0) {

new_part.putToParameters(hive_metastoreConstants.DDL_TIME, Long.toString(System

.currentTimeMillis() / 1000));

}

// Fetch the Table object by dbName and tableName

Table tbl = msdb.getTable(dbname, name);

// If part_vals is null or empty, only other partition metadata is being changed, not the partition key values, so the metadata is updated directly through msdb.alterPartition

if (part_vals == null || part_vals.size() == 0) {

try {

oldPart = msdb.getPartition(dbname, name, new_part.getValues());

if (MetaStoreUtils.requireCalStats(hiveConf, oldPart, new_part, tbl)) {

MetaStoreUtils.updatePartitionStatsFast(new_part, wh, false, true);

}

updatePartColumnStats(msdb, dbname, name, new_part.getValues(), new_part);

msdb.alterPartition(dbname, name, new_part.getValues(), new_part);

} catch (InvalidObjectException e) {

throw new InvalidOperationException("alter is not possible");

} catch (NoSuchObjectException e){

//old partition does not exist

throw new InvalidOperationException("alter is not possible");

}

return oldPart;

}

//rename partition

try {

msdb.openTransaction();

try {

// Fetch the old partition

oldPart = msdb.getPartition(dbname, name, part_vals);

} catch (NoSuchObjectException e) {

// this means there is no existing partition

throw new InvalidObjectException(

"Unable to rename partition because old partition does not exist");

}

Partition check_part = null;

try {

// Look up a partition with the new values to see whether it already exists

check_part = msdb.getPartition(dbname, name, new_part.getValues());

} catch(NoSuchObjectException e) {

// this means there is no existing partition

check_part = null;

}

// If check_part was found, the target partition already exists; throw AlreadyExistsException

if (check_part != null) {

throw new AlreadyExistsException("Partition already exists:" + dbname + "." + name + "." +

new_part.getValues());

}

// Validate the table

if (tbl == null) {

throw new InvalidObjectException(

"Unable to rename partition because table or database do not exist");

}

// For a partition of an external table there is no need to touch the file system; just update the metadata

if (tbl.getTableType().equals(TableType.EXTERNAL_TABLE.toString())) {

new_part.getSd().setLocation(oldPart.getSd().getLocation());

String oldPartName = Warehouse.makePartName(tbl.getPartitionKeys(), oldPart.getValues());

try {

//existing partition column stats is no longer valid, remove

msdb.deletePartitionColumnStatistics(dbname, name, oldPartName, oldPart.getValues(), null);

} catch (NoSuchObjectException nsoe) {

//ignore

} catch (InvalidInputException iie) {

throw new InvalidOperationException("Unable to update partition stats in table rename." + iie);

}

msdb.alterPartition(dbname, name, part_vals, new_part);

} else {

try {

// Build the default path of the new partition under the table path

destPath = new Path(wh.getTablePath(msdb.getDatabase(dbname), name),

Warehouse.makePartName(tbl.getPartitionKeys(), new_part.getValues()));

// Assemble the new partition path

destPath = constructRenamedPath(destPath, new Path(new_part.getSd().getLocation()));

} catch (NoSuchObjectException e) {

LOG.debug(e);

throw new InvalidOperationException(

"Unable to change partition or table. Database " + dbname + " does not exist"

+ " Check metastore logs for detailed stack." + e.getMessage());

}

// If destPath is not null, the file path has changed

if (destPath != null) {

newPartLoc = destPath.toString();

oldPartLoc = oldPart.getSd().getLocation();

// Derive the old partition path from the existing sd location

srcPath = new Path(oldPartLoc);

LOG.info("srcPath:" + oldPartLoc);

LOG.info("descPath:" + newPartLoc);

srcFs = wh.getFs(srcPath);

destFs = wh.getFs(destPath);

// Check that srcFs and destFs are the same file system

if (!FileUtils.equalsFileSystem(srcFs, destFs)) {

throw new InvalidOperationException("table new location " + destPath

+ " is on a different file system than the old location "

+ srcPath + ". This operation is not supported");

}

try {

// Check whether the old and new partition paths differ and, if so, whether the new path already exists

srcFs.exists(srcPath); // check that src exists and also checks

if (newPartLoc.compareTo(oldPartLoc) != 0 && destFs.exists(destPath)) {

throw new InvalidOperationException("New location for this table "

+ tbl.getDbName() + "." + tbl.getTableName()

+ " already exists : " + destPath);

}

} catch (IOException e) {

throw new InvalidOperationException("Unable to access new location "

+ destPath + " for partition " + tbl.getDbName() + "."

+ tbl.getTableName() + " " + new_part.getValues());

}

new_part.getSd().setLocation(newPartLoc);

if (MetaStoreUtils.requireCalStats(hiveConf, oldPart, new_part, tbl)) {

MetaStoreUtils.updatePartitionStatsFast(new_part, wh, false, true);

}

// Build oldPartName, delete the old partition's column statistics, and write the new partition metadata

String oldPartName = Warehouse.makePartName(tbl.getPartitionKeys(), oldPart.getValues());

try {

//existing partition column stats is no longer valid, remove

msdb.deletePartitionColumnStatistics(dbname, name, oldPartName, oldPart.getValues(), null);

} catch (NoSuchObjectException nsoe) {

//ignore

} catch (InvalidInputException iie) {

throw new InvalidOperationException("Unable to update partition stats in table rename." + iie);

}

msdb.alterPartition(dbname, name, part_vals, new_part);

}

}

success = msdb.commitTransaction();

} finally {

if (!success) {

msdb.rollbackTransaction();

}

if (success && newPartLoc != null && newPartLoc.compareTo(oldPartLoc) != 0) {

//rename the data directory

try{

if (srcFs.exists(srcPath)) {

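// The parent directory may not exist yet and must be created first; for example, if the engine called alterTable and then alterPartition, the partition's parent path may not have been created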

Path destParentPath = destPath.getParent();

if (!wh.mkdirs(destParentPath, true)) {

throw new IOException("Unable to create path " + destParentPath);

}

// Rename the source path to the destination path

wh.renameDir(srcPath, destPath, true);

LOG.info("rename done!");

}

} catch (IOException e) {

boolean revertMetaDataTransaction = false;

try {

msdb.openTransaction();

msdb.alterPartition(dbname, name, new_part.getValues(), oldPart);

revertMetaDataTransaction = msdb.commitTransaction();

} catch (Exception e1) {

LOG.error("Reverting metadata opeation failed During HDFS operation failed", e1);

if (!revertMetaDataTransaction) {

msdb.rollbackTransaction();

}

}

throw new InvalidOperationException("Unable to access old location "

+ srcPath + " for partition " + tbl.getDbName() + "."

+ tbl.getTableName() + " " + part_vals);

}

}

}

return oldPart;

}
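Both alterTable and alterPartition commit the metadata first, move the data second, and write the old metadata back if the move fails, because the file system rename cannot take part in the database transaction. A minimal sketch of that compensation pattern follows; the class, the `Mover` interface, and the in-memory location map are hypothetical stand-ins for RawStore and the Hadoop FileSystem.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical illustration of "commit metadata, move data, revert metadata on failure". */
final class RenameWithCompensation {

    /** Hypothetical stand-in for a file system rename; returns false when the move fails. */
    interface Mover {
        boolean move(String src, String dest);
    }

    private final Map<String, String> locations = new HashMap<>();

    void register(String part, String location) { locations.put(part, location); }

    String locationOf(String part) { return locations.get(part); }

    void relocate(String part, String newLocation, Mover mover) {
        String oldLocation = locations.get(part);
        if (oldLocation == null) {
            throw new IllegalArgumentException("unknown partition " + part);
        }
        // Step 1: the metadata change is committed before any data is touched.
        locations.put(part, newLocation);
        // Step 2: move the data; this step is outside the metadata transaction.
        if (!mover.move(oldLocation, newLocation)) {
            // Step 3: compensate by writing the old location back in a second update.
            locations.put(part, oldLocation);
            throw new IllegalStateException(
                "failed to move " + oldLocation + " to " + newLocation + "; metadata reverted");
        }
    }
}
```

The weak spot is step 3: if the compensating update itself fails, metadata and data disagree, which is why the Hive code logs the expected names and paths for a manual fix by an administrator.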

That's it for now. We'll dig into the rest later.
