Spark Tutorial (5): Getting Started with PySpark

Launch PySpark:

[root@node1 ~]# pyspark
Python 2.7.5 (default, Nov 6 2016, 00:28:07)
[GCC 4.8.5 20150623 (Red Hat 4.8.5-11)] on linux2
Type "help", "copyright", "credits" or "license" for more information.
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel).
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 1.6.0
      /_/

Using Python version 2.7.5 (default, Nov 6 2016 00:28:07)
SparkContext available as sc, HiveContext available as sqlContext.

The shell has already created both contexts, so sc (a SparkContext) and sqlContext (here a HiveContext) can be used directly.

Run the following statements in the shell:

>>> from __future__ import print_function
>>> import os
>>> import sys
>>> from pyspark import SparkContext
>>> from pyspark.sql import SQLContext
>>> from pyspark.sql.types import Row, StructField, StructType, StringType, IntegerType
# RDD is created from a list of rows
>>> some_rdd = sc.parallelize([Row(name="John", age=19), Row(name="Smith", age=23), Row(name="Sarah", age=18)])
# Infer schema from the first row, create a DataFrame and print the schema
>>> some_df = sqlContext.createDataFrame(some_rdd)
>>> some_df.printSchema()
root
 |-- age: long (nullable = true)
 |-- name: string (nullable = true)

# Another RDD is created from a list of tuples
>>> another_rdd = sc.parallelize([("John", 19), ("Smith", 23), ("Sarah", 18)])
# Schema with two fields - person_name and person_age
>>> schema = StructType([StructField("person_name", StringType(), False), StructField("person_age", IntegerType(), False)])
# Create a DataFrame by applying the schema to the RDD and print the schema
>>> another_df = sqlContext.createDataFrame(another_rdd, schema)
>>> another_df.printSchema()
root
 |-- person_name: string (nullable = false)
 |-- person_age: integer (nullable = false)

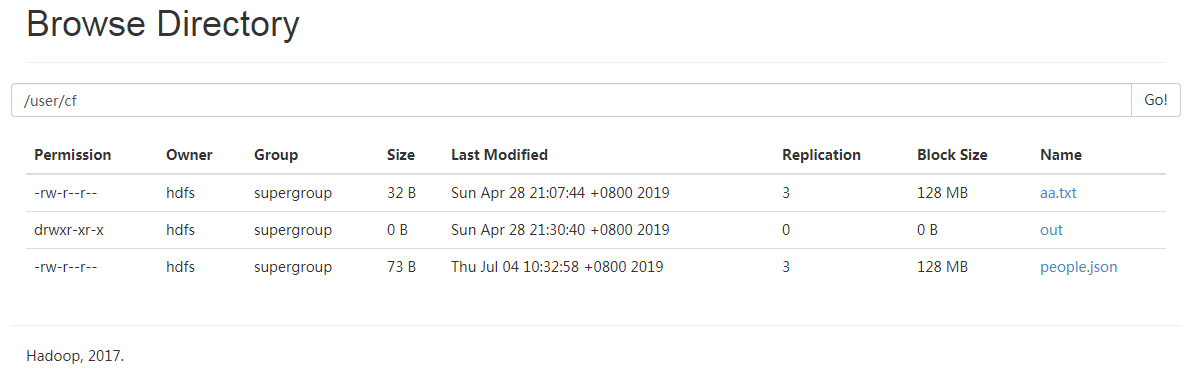
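Two details in the output above are easy to miss: `some_df` lists `age` before `name` even though each `Row` was written name first, and `age` comes out as `long` rather than `integer`. In PySpark before 3.0, a `Row` built from keyword arguments sorts its fields by name, and a Python `int` is inferred as `LongType` with the field marked nullable; `another_df` instead takes names, types, and nullability verbatim from the explicit `StructType`. The pure-Python sketch below models only those rules so they can be checked without a cluster. `infer_fields`, `apply_schema`, and `format_schema` are hypothetical helpers, not PySpark API, and the type table covers just the two types used here.

```python
# Pure-Python model of the two schema rules seen in the transcript.
# Hypothetical helpers for illustration; this is NOT PySpark's implementation.

# Assumed mapping, limited to the two Python types used in this example.
PY_TO_SQL = {int: "long", str: "string"}


def infer_fields(record):
    """Model inference from a keyword-argument Row: fields sorted by name
    (PySpark < 3.0 behavior), every inferred field nullable."""
    return [(name, PY_TO_SQL[type(record[name])], True) for name in sorted(record)]


def apply_schema(rows, schema):
    """Model applying an explicit schema to tuples: field names come from the
    schema, and None in a non-nullable field is rejected."""
    names = [name for name, _, _ in schema]
    result = []
    for row in rows:
        if len(row) != len(schema):
            raise ValueError("row length does not match schema: %r" % (row,))
        for value, (name, _sql_type, nullable) in zip(row, schema):
            if value is None and not nullable:
                raise ValueError("field %s is not nullable" % name)
        result.append(dict(zip(names, row)))
    return result


def format_schema(fields):
    """Render (name, type, nullable) triples in printSchema()'s layout."""
    lines = ["root"]
    for name, sql_type, nullable in fields:
        lines.append(" |-- %s: %s (nullable = %s)" % (name, sql_type, str(nullable).lower()))
    return "\n".join(lines)


inferred = infer_fields({"name": "John", "age": 19})
print(format_schema(inferred))

explicit = [("person_name", "string", False), ("person_age", "integer", False)]
people = apply_schema([("John", 19), ("Smith", 23), ("Sarah", 18)], explicit)
print(format_schema(explicit))
```

The sorting rule is why code that relies on column position should use the explicit-schema style of `another_df`.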

Download the people.json file from GitHub and upload it to HDFS (the session below reads it from /user/cf/people.json):
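The JSON reader expects JSON Lines: one complete JSON object per line, not a single pretty-printed array. Spark's sample `people.json` (under `examples/src/main/resources/`, the default path in the reference program) holds three such records, and only one of them has an age between 13 and 19, which is why the query later prints only `Justin`. The sketch below writes an equivalent file locally with the standard library; the local filename is an assumption, and `write_json_lines`/`read_json_lines` are hypothetical helpers. The upload would then be done with the HDFS shell, for example `hdfs dfs -put people.json /user/cf/people.json`.

```python
import json

# The three records of Spark's sample people.json; Michael has no age.
RECORDS = [
    {"name": "Michael"},
    {"name": "Andy", "age": 30},
    {"name": "Justin", "age": 19},
]


def write_json_lines(path, records):
    """Write each record as one compact JSON object on its own line."""
    with open(path, "w") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")


def read_json_lines(path):
    """Parse the file back, one object per non-empty line."""
    with open(path) as f:
        return [json.loads(line) for line in f if line.strip()]


write_json_lines("people.json", RECORDS)  # local path is an assumption
```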

Continue in the same shell:

# A JSON dataset is pointed to by path.
# The path can be either a single text file or a directory storing text files.
>>> if len(sys.argv) < 2:
...     path = "/user/cf/people.json"
... else:
...     path = sys.argv[1]
...
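The comment above says the path may name either a single file or a directory of files. A rough local model of how such a path expands into input files is sketched below, assuming Hadoop's default input filter that skips names starting with `_` or `.` (such as `_SUCCESS` markers and `.crc` checksum files). `choose_path` and `input_files` are hypothetical helpers, and the default is the HDFS location used in this transcript; real Spark resolves the path through the Hadoop FileSystem API, not `os`.

```python
import os
import sys

DEFAULT_PATH = "/user/cf/people.json"  # HDFS path used in the transcript


def choose_path(argv, default=DEFAULT_PATH):
    """Mirror the script's logic: use argv[1] when given, else the default."""
    return argv[1] if len(argv) >= 2 else default


def input_files(path):
    """Expand a local path the way a file-or-directory input is read:
    a file stands for itself; a directory contributes its visible files."""
    if os.path.isfile(path):
        return [path]
    names = sorted(
        n for n in os.listdir(path)
        if not n.startswith(("_", "."))  # assumed Hadoop hidden-file filter
    )
    return [os.path.join(path, n) for n in names
            if os.path.isfile(os.path.join(path, n))]


print(choose_path(sys.argv))
```

Pointing the script at a directory of `part-*` files written by an earlier Spark job therefore works without listing the files individually.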

# Create a DataFrame from the file(s) pointed to by path
>>> people = sqlContext.jsonFile(path)
[Stage 5:> (0 + 1) / 2]
19/07/04 10:34:33 WARN spark.ExecutorAllocationManager: No stages are running, but numRunningTasks != 0
# The inferred schema can be visualized using the printSchema() method.
>>> people.printSchema()
root
 |-- age: long (nullable = true)
 |-- name: string (nullable = true)
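Unlike the `Row` case, the JSON reader scans the records and merges every field it sees: `Michael` has no `age`, so that column is simply null for him, integral JSON numbers come out as `long`, and the merged fields are listed in sorted order (`age` before `name`, although each record writes `name` first). A minimal model of that merge is sketched below; `merge_json_schema` and `to_rows` are hypothetical helpers limited to the two value types in `people.json`. Real Spark also reconciles conflicting types across records, which this sketch does not attempt.

```python
import json

# Assumed mapping, limited to the JSON value types that occur in people.json.
JSON_TO_SQL = {int: "long", str: "string"}


def merge_json_schema(lines):
    """Union the keys of every JSON object; fields are sorted by name and
    nullable, since any record may omit them. Keys whose only values are
    null are skipped here (a simplification)."""
    seen = {}
    for line in lines:
        for key, value in json.loads(line).items():
            if value is not None:
                seen.setdefault(key, JSON_TO_SQL[type(value)])
    return [(name, seen[name], True) for name in sorted(seen)]


def to_rows(lines, fields):
    """Project each record onto the merged fields; a missing key becomes None."""
    names = [name for name, _, _ in fields]
    return [tuple(json.loads(line).get(n) for n in names) for line in lines]


PEOPLE = [
    '{"name":"Michael"}',
    '{"name":"Andy", "age":30}',
    '{"name":"Justin", "age":19}',
]
fields = merge_json_schema(PEOPLE)
print(fields)
print(to_rows(PEOPLE, fields))
```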

# Register this DataFrame as a table.
>>> people.registerAsTable("people")
/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/spark/python/pyspark/sql/dataframe.py:142: UserWarning: Use registerTempTable instead of registerAsTable.
  warnings.warn("Use registerTempTable instead of registerAsTable.")
# SQL statements can be run by using the sql methods provided by sqlContext
>>> teenagers = sqlContext.sql("SELECT name FROM people WHERE age >= 13 AND age <= 19")
>>> for each in teenagers.collect():
...     print(each[0])
...
Justin
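Only `Justin` comes back because SQL comparisons involving NULL are not true: `Michael`'s missing `age` makes `age >= 13 AND age <= 19` evaluate to NULL, `WHERE` keeps only rows whose predicate is true, and `Andy` (30) fails the upper bound. The sketch below models the temporary table as a plain dict and evaluates the predicate with three-valued logic, using Python's `None` for NULL. `TABLES`, `register_temp_table`, and `select_names_where_age_between` are hypothetical names, not Spark's catalog or SQL API.

```python
# Hypothetical in-memory stand-in for sqlContext's temporary-table registry.
TABLES = {}


def register_temp_table(name, rows):
    """Associate a table name with a list of row dicts (replacing any old one)."""
    TABLES[name] = rows


def sql_ge(a, b):
    """SQL-style >=: a comparison involving NULL (None) yields NULL."""
    return None if a is None or b is None else a >= b


def sql_le(a, b):
    """SQL-style <=: a comparison involving NULL (None) yields NULL."""
    return None if a is None or b is None else a <= b


def sql_and(a, b):
    """Three-valued AND: False dominates, then NULL, otherwise True."""
    if a is False or b is False:
        return False
    if a is None or b is None:
        return None
    return True


def select_names_where_age_between(table, low, high):
    """Model of: SELECT name FROM table WHERE age >= low AND age <= high."""
    return [
        row["name"]
        for row in TABLES[table]
        # WHERE keeps a row only when the predicate is exactly True, not NULL.
        if sql_and(sql_ge(row.get("age"), low), sql_le(row.get("age"), high)) is True
    ]


register_temp_table("people", [
    {"name": "Michael"},
    {"name": "Andy", "age": 30},
    {"name": "Justin", "age": 19},
])
for name in select_names_where_age_between("people", 13, 19):
    print(name)
```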

When finished, stop the SparkContext:

>>> sc.stop()

Reference program:

#
# Licensed to the Apache Software Foundation (ASF) under one or more
# contributor license agreements. See the NOTICE file distributed with
# this work for additional information regarding copyright ownership.
# The ASF licenses this file to You under the Apache License, Version 2.0
# (the "License"); you may not use this file except in compliance with
# the License. You may obtain a copy of the License at
#
#    http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
#

from __future__ import print_function

import os
import sys

from pyspark import SparkContext
from pyspark.sql import SQLContext
from pyspark.sql.types import Row, StructField, StructType, StringType, IntegerType


if __name__ == "__main__":
    # A standalone script must create its own contexts (the shell pre-creates them)
    sc = SparkContext(appName="PythonSQL")
    sqlContext = SQLContext(sc)

    # RDD is created from a list of rows
    some_rdd = sc.parallelize([Row(name="John", age=19),
                              Row(name="Smith", age=23),
                              Row(name="Sarah", age=18)])
    # Infer schema from the first row, create a DataFrame and print the schema
    some_df = sqlContext.createDataFrame(some_rdd)
    some_df.printSchema()
    # root
    #  |-- age: long (nullable = true)
    #  |-- name: string (nullable = true)

    # Another RDD is created from a list of tuples
    another_rdd = sc.parallelize([("John", 19), ("Smith", 23), ("Sarah", 18)])
    # Schema with two fields - person_name and person_age
    schema = StructType([StructField("person_name", StringType(), False),
                         StructField("person_age", IntegerType(), False)])
    # Create a DataFrame by applying the schema to the RDD and print the schema
    another_df = sqlContext.createDataFrame(another_rdd, schema)
    another_df.printSchema()
    # root
    #  |-- person_name: string (nullable = false)
    #  |-- person_age: integer (nullable = false)

    # A JSON dataset is pointed to by path.
    # The path can be either a single text file or a directory storing text files.
    if len(sys.argv) < 2:
        path = "file://" + \
            os.path.join(os.environ['SPARK_HOME'], "examples/src/main/resources/people.json")
    else:
        path = sys.argv[1]
    # Create a DataFrame from the file(s) pointed to by path
    # (sqlContext.jsonFile(path) still works in Spark 1.6 but is deprecated since 1.4)
    people = sqlContext.read.json(path)

    # The inferred schema can be visualized using the printSchema() method.
    people.printSchema()
    # root
    #  |-- age: long (nullable = true)
    #  |-- name: string (nullable = true)

    # Register this DataFrame as a temporary table
    # (registerAsTable is deprecated in favor of registerTempTable)
    people.registerTempTable("people")

    # SQL statements can be run by using the sql methods provided by sqlContext
    teenagers = sqlContext.sql("SELECT name FROM people WHERE age >= 13 AND age <= 19")
    for each in teenagers.collect():
        print(each[0])

    sc.stop()


Spring Data : 介绍: Spring 的一个子项目.用于简化数据库访问,支持NoSQL 和 关系数据存储.其主要目标是使数据库的访问变得方便快捷. SpringData 项目所支持 NoS ...