Nginx(四):Keepalived+Nginx 高可用集群

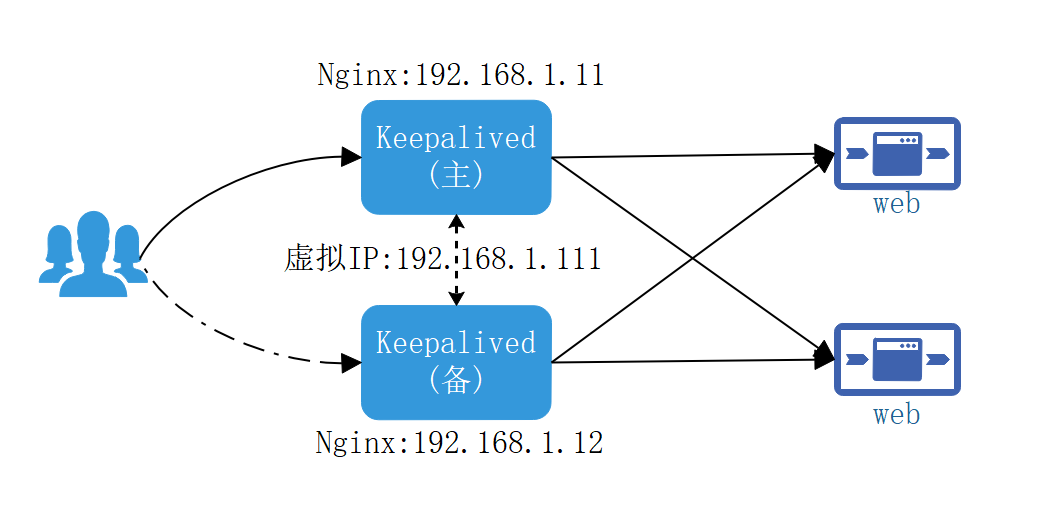

Keepalived+Nginx 高可用集群 (主从模式)

集群架构图

安装keepalived

[root@localhost ~]# yum install -y keepalived

查看状态

[root@localhost ~]# rpm -qa|grep keepalived

keepalived-1.3.5-16.el7.x86_64

查看配置

[root@localhost ~]# cd /etc/keepalived/

[root@localhost keepalived]# ls

keepalived.conf

# 备份配置文件

[root@localhost keepalived]# cp keepalived.conf keepalived.conf.bak

[root@localhost keepalived]# ls

keepalived.conf keepalived.conf.bak

修改配置文件

vrrp_script chk_http_port {

# 检测nginx状态脚本路径

script "/etc/nginx/script/nginx_check.sh"

interval 2 # 检测脚本执行的间隔

weight 2

}

vrrp_instance VI_1 {

state BACKUP # 主机 MASTER,备机BACKUP

interface ens33 # 网卡名称

virtual_router_id 51 # 主,备机的virtual_router_id必须相同

priority 90 # 主,备机取不同的优先级,主机值较大,备份机值较小

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.111 # VRRP H虚拟地址

}

}

修改本地hosts配置文件

192.168.1.111 www.123.com

新增检测nginx状态脚本

#!/bin/bash

A=`ps -C nginx –no-header |wc -l`

if [ $A -eq 0 ];then

/usr/local/nginx/sbin/nginx

sleep 2

if [ `ps -C nginx --no-header |wc -l` -eq 0 ];then

killall keepalived

fi

fi

注意:将此脚本放入keepalived配置的路径下,主备Nginx各一份。

修改备机Nginx配置

http {

upstream myserver {

server 192.168.1.11:8080 weight=1;

server 192.168.1.11:8081 weight=10;

}

server {

listen 80;

# listen [::]:80 default_server;

server_name www.123.com;

root /usr/share/nginx/html;

# Load configuration files for the default server block.

include /etc/nginx/default.d/*.conf;

location / {

proxy_pass http://myserver;

}

}

}

启动

启动主机Nginx

[root@localhost ~]# systemctl start nginx

[root@localhost ~]# systemctl status nginx

● nginx.service - The nginx HTTP and reverse proxy server

Loaded: loaded (/usr/lib/systemd/system/nginx.service; disabled; vendor preset: disabled)

Active: active (running) since Sun 2020-04-05 14:32:15 CST; 5s ago

Process: 92510 ExecStart=/usr/sbin/nginx (code=exited, status=0/SUCCESS)

Process: 92506 ExecStartPre=/usr/sbin/nginx -t (code=exited, status=0/SUCCESS)

Process: 92504 ExecStartPre=/usr/bin/rm -f /run/nginx.pid (code=exited, status=0/SUCCESS)

Main PID: 92512 (nginx)

CGroup: /system.slice/nginx.service

├─92512 nginx: master process /usr/sbin/nginx

├─92513 nginx: worker process

├─92514 nginx: worker process

├─92515 nginx: worker process

└─92516 nginx: worker process

Apr 05 14:32:15 localhost systemd[1]: Starting The nginx HTTP and reverse proxy server...

Apr 05 14:32:15 localhost nginx[92506]: nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

Apr 05 14:32:15 localhost nginx[92506]: nginx: configuration file /etc/nginx/nginx.conf test is successful

Apr 05 14:32:15 localhost systemd[1]: Started The nginx HTTP and reverse proxy server.

启动主机keepalived

[root@localhost ~]# systemctl start keepalived

[root@localhost ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: active (running) since Sun 2020-04-05 14:33:13 CST; 5s ago

Process: 92572 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 92573 (keepalived)

CGroup: /system.slice/keepalived.service

├─92573 /usr/sbin/keepalived -D

├─92574 /usr/sbin/keepalived -D

└─92575 /usr/sbin/keepalived -D

Apr 05 14:33:14 localhost Keepalived_vrrp[92575]: VRRP_Instance(VI_1) Transition to MASTER STATE

Apr 05 14:33:15 localhost Keepalived_vrrp[92575]: VRRP_Instance(VI_1) Entering MASTER STATE

Apr 05 14:33:15 localhost Keepalived_vrrp[92575]: VRRP_Instance(VI_1) setting protocol iptable drop rule

Apr 05 14:33:15 localhost Keepalived_vrrp[92575]: VRRP_Instance(VI_1) setting protocol VIPs.

Apr 05 14:33:15 localhost Keepalived_vrrp[92575]: Sending gratuitous ARP on ens33 for 192.168.1.111

Apr 05 14:33:15 localhost Keepalived_vrrp[92575]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on ens33 for 192.168.1.111

Apr 05 14:33:15 localhost Keepalived_vrrp[92575]: Sending gratuitous ARP on ens33 for 192.168.1.111

Apr 05 14:33:15 localhost Keepalived_vrrp[92575]: Sending gratuitous ARP on ens33 for 192.168.1.111

Apr 05 14:33:15 localhost Keepalived_vrrp[92575]: Sending gratuitous ARP on ens33 for 192.168.1.111

Apr 05 14:33:15 localhost Keepalived_vrrp[92575]: Sending gratuitous ARP on ens33 for 192.168.1.111

启动备机Nginx

[root@localhost nginx]# systemctl start nginx

[root@localhost nginx]# systemctl status nginx

● nginx.service - The nginx HTTP and reverse proxy server

Loaded: loaded (/usr/lib/systemd/system/nginx.service; disabled; vendor preset: disabled)

Active: active (running) since Sun 2020-04-05 22:04:26 CST; 7s ago

Process: 19901 ExecStart=/usr/sbin/nginx (code=exited, status=0/SUCCESS)

Process: 19898 ExecStartPre=/usr/sbin/nginx -t (code=exited, status=0/SUCCESS)

Process: 19896 ExecStartPre=/usr/bin/rm -f /run/nginx.pid (code=exited, status=0/SUCCESS)

Main PID: 19903 (nginx)

CGroup: /system.slice/nginx.service

├─19903 nginx: master process /usr/sbin/nginx

├─19904 nginx: worker process

├─19905 nginx: worker process

├─19906 nginx: worker process

└─19907 nginx: worker process

Apr 05 22:04:26 localhost.localdomain systemd[1]: Starting The nginx HTTP and reverse proxy server...

Apr 05 22:04:26 localhost.localdomain nginx[19898]: nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

Apr 05 22:04:26 localhost.localdomain nginx[19898]: nginx: configuration file /etc/nginx/nginx.conf test is successful

Apr 05 22:04:26 localhost.localdomain systemd[1]: Started The nginx HTTP and reverse proxy server.

启动备机keepalived

[root@localhost nginx]# systemctl start keepalived

[root@localhost nginx]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: active (running) since Sun 2020-04-05 22:05:16 CST; 8s ago

Process: 19915 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 19916 (keepalived)

CGroup: /system.slice/keepalived.service

├─19916 /usr/sbin/keepalived -D

├─19917 /usr/sbin/keepalived -D

└─19918 /usr/sbin/keepalived -D

Apr 05 22:05:16 localhost.localdomain Keepalived_healthcheckers[19917]: Activating healthchecker for service [192.168.200.100]:443

Apr 05 22:05:16 localhost.localdomain Keepalived_healthcheckers[19917]: Activating healthchecker for service [10.10.10.2]:1358

Apr 05 22:05:16 localhost.localdomain Keepalived_healthcheckers[19917]: Activating healthchecker for service [10.10.10.2]:1358

Apr 05 22:05:16 localhost.localdomain Keepalived_healthcheckers[19917]: Activating healthchecker for service [10.10.10.3]:1358

Apr 05 22:05:16 localhost.localdomain Keepalived_healthcheckers[19917]: Activating healthchecker for service [10.10.10.3]:1358

Apr 05 22:05:16 localhost.localdomain Keepalived_vrrp[19918]: VRRP_Instance(VI_1) Entering BACKUP STATE

Apr 05 22:05:16 localhost.localdomain Keepalived_vrrp[19918]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

Apr 05 22:05:22 localhost.localdomain Keepalived_healthcheckers[19917]: Timeout connecting server [192.168.200.2]:1358.

Apr 05 22:05:22 localhost.localdomain Keepalived_healthcheckers[19917]: Timeout connecting server [192.168.200.4]:1358.

Apr 05 22:05:23 localhost.localdomain Keepalived_healthcheckers[19917]: Timeout connecting server [192.168.200.5]:1358.

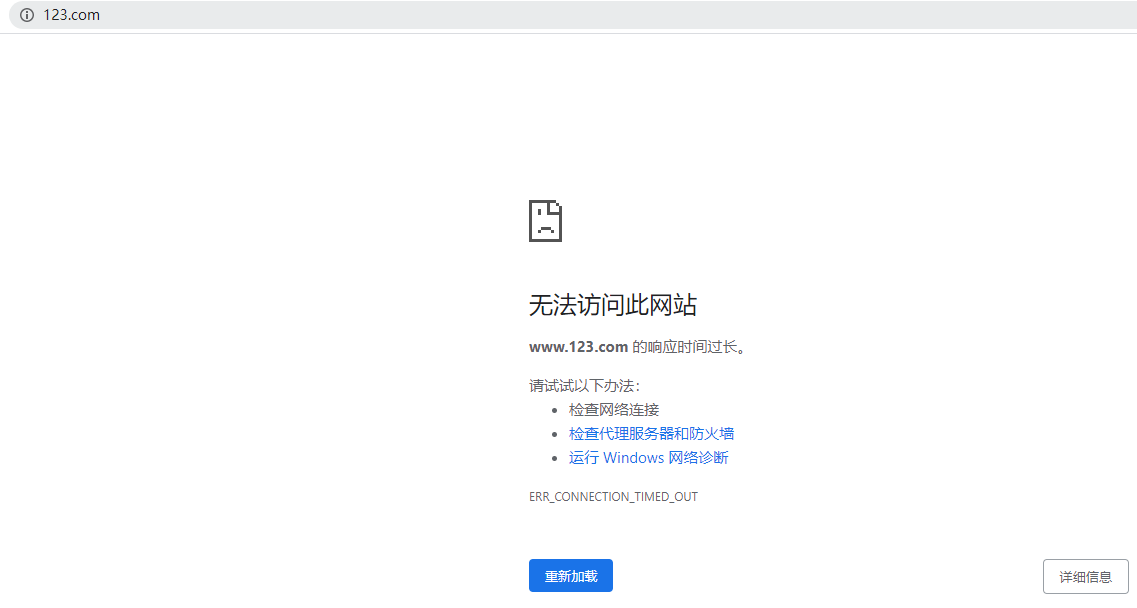

检测

排查

- 是否关联虚拟ip

[root@localhost ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:d6:85:50 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.11/24 brd 192.168.1.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.1.111/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::3e71:f6ff:5b69:2539/64 scope link noprefixroute

valid_lft forever preferred_lft forever

- 是否可以ping通虚拟ip

[root@localhost ~]# ping 192.168.1.111

PING 192.168.1.111 (192.168.1.111) 56(84) bytes of data.

ping不通解决方案:原因是keepalived.conf配置中默认vrrp_strict打开了,需要把它注释掉。重启keepalived即可ping通。

优化keepalived配置

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

# vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

注意:备机中的配置文件也要一起修改

重启keepalived

[root@localhost ~]# systemctl restart keepalived

[root@localhost ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: active (running) since Sun 2020-04-05 14:46:31 CST; 15s ago

Process: 93230 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 93231 (keepalived)

CGroup: /system.slice/keepalived.service

├─93231 /usr/sbin/keepalived -D

├─93232 /usr/sbin/keepalived -D

└─93233 /usr/sbin/keepalived -D

Apr 05 14:46:38 localhost Keepalived_vrrp[93233]: Sending gratuitous ARP on ens33 for 192.168.1.111

Apr 05 14:46:38 localhost Keepalived_vrrp[93233]: Sending gratuitous ARP on ens33 for 192.168.1.111

Apr 05 14:46:38 localhost Keepalived_healthcheckers[93232]: Timeout connecting server [192.168.200.5]:1358.

Apr 05 14:46:40 localhost Keepalived_healthcheckers[93232]: Timeout connecting server [192.168.200.3]:1358.

Apr 05 14:46:40 localhost Keepalived_healthcheckers[93232]: Timeout connecting server [192.168.201.100]:443.

Apr 05 14:46:43 localhost Keepalived_healthcheckers[93232]: Timeout connecting server [192.168.200.2]:1358.

Apr 05 14:46:44 localhost Keepalived_healthcheckers[93232]: Timeout connecting server [192.168.200.4]:1358.

Apr 05 14:46:44 localhost Keepalived_healthcheckers[93232]: Timeout connecting server [192.168.200.5]:1358.

Apr 05 14:46:46 localhost Keepalived_healthcheckers[93232]: Timeout connecting server [192.168.200.3]:1358.

Apr 05 14:46:46 localhost Keepalived_healthcheckers[93232]: Timeout connecting server [192.168.201.100]:443.

备机同样操作。

校验

关闭主机keepalived

[root@localhost ~]# systemctl stop keepalived

[root@localhost ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: inactive (dead)

Apr 05 14:47:28 localhost Keepalived_healthcheckers[93232]: Timeout reading data to remote SMTP server [192.168.200.1]:25.

Apr 05 14:47:28 localhost Keepalived_healthcheckers[93232]: Timeout reading data to remote SMTP server [192.168.200.1]:25.

Apr 05 14:50:50 localhost systemd[1]: Stopping LVS and VRRP High Availability Monitor...

Apr 05 14:50:50 localhost Keepalived[93231]: Stopping

Apr 05 14:50:50 localhost Keepalived_healthcheckers[93232]: Stopped

Apr 05 14:50:50 localhost Keepalived_vrrp[93233]: VRRP_Instance(VI_1) sent 0 priority

Apr 05 14:50:50 localhost Keepalived_vrrp[93233]: VRRP_Instance(VI_1) removing protocol VIPs.

Apr 05 14:50:51 localhost Keepalived_vrrp[93233]: Stopped

Apr 05 14:50:51 localhost Keepalived[93231]: Stopped Keepalived v1.3.5 (03/19,2017), git commit v1.3.5-6-g6fa32f2

Apr 05 14:50:51 localhost systemd[1]: Stopped LVS and VRRP High Availability Monitor.

检测

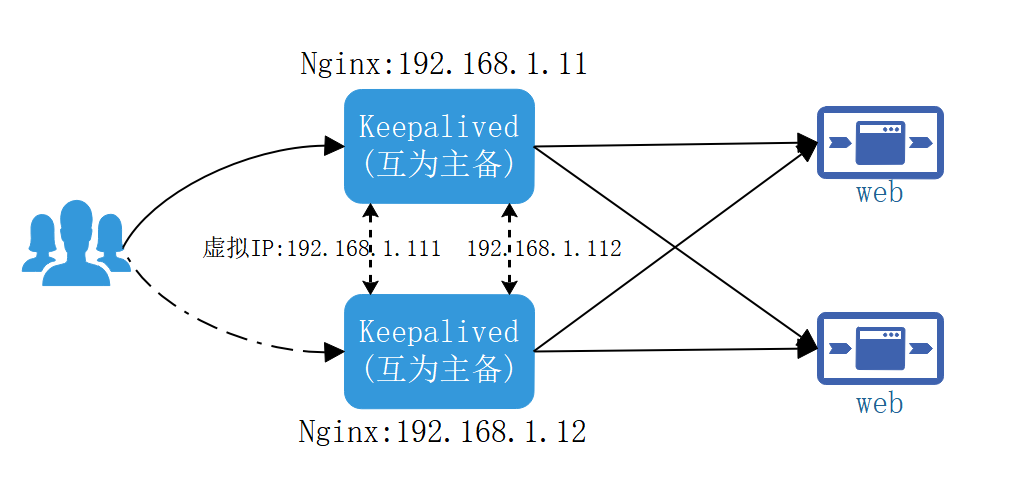

Keepalived+Nginx 高可用集群 (双主模式)

集群架构图

修改配置

[root@localhost ~]# cd /etc/keepalived/

[root@localhost keepalived]# ls

keepalived.conf keepalived.conf.bak

# 建议将主从模式配置备份

[root@localhost keepalived]# cp keepalived.conf keepalived.conf.ms_bk

[root@localhost keepalived]# ls

keepalived.conf keepalived.conf.bak keepalived.conf.ms_bk

修改192.168.1.12配置

vrrp_instance VI_1 {

state BACKUP # 主机 MASTER,备机 BACKUP

interface ens33 # 网卡名称

virtual_router_id 51 # 主,备机的virtual_router_id必须相同

priority 100 # 主,备机取不同的优先级,主机值较大,备份机值较小

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.111/24 dev ens33 label ens33:1 # VRRP H虚拟地址

}

}

vrrp_instance VI_2 {

state MASTER # 主机 MASTER,备机BACKUP

interface ens33 # 网卡名称

virtual_router_id 52 # 主,备机的virtual_router_id必须相同

priority 150 # 主,备机取不同的优先级,主机值较大,备份机值较小

advert_int 1

authentication {

auth_type PASS

auth_pass 2222

}

virtual_ipaddress {

192.168.1.112/24 dev ens33 label ens33:2 # VRRP H虚拟地址

}

}

修改192.168.1.11配置

vrrp_instance VI_1 {

state MASTER # 主机 MASTER,备机BACKUP

interface ens33 # 网卡名称

virtual_router_id 51 # 主,备机的virtual_router_id必须相同

priority 150 # 主,备机取不同的优先级,主机值较大,备份机值较小

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.111/24 dev ens33 label ens33:1 # VRRP H虚拟地址

}

}

vrrp_instance VI_2 {

state BACKUP # 主机 MASTER,备机BACKUP

interface ens33 # 网卡名称

virtual_router_id 52 # 主,备机的virtual_router_id必须相同

priority 100 # 主,备机取不同的优先级,主机值较大,备份机值较小

advert_int 1

authentication {

auth_type PASS

auth_pass 2222

}

virtual_ipaddress {

192.168.1.112/24 dev ens33 label ens33:2 # VRRP H虚拟地址

}

}

启动keepalived

[root@localhost ~]# systemctl start keepalived

检测

# 192.168.1.11

[root@localhost ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:d6:85:50 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.11/24 brd 192.168.1.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.1.111/24 scope global secondary ens33:1

valid_lft forever preferred_lft forever

inet6 fe80::3e71:f6ff:5b69:2539/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@localhost ~]# ping 192.168.1.111

PING 192.168.1.111 (192.168.1.111) 56(84) bytes of data.

64 bytes from 192.168.1.111: icmp_seq=1 ttl=64 time=0.027 ms

64 bytes from 192.168.1.111: icmp_seq=2 ttl=64 time=0.068 ms

64 bytes from 192.168.1.111: icmp_seq=3 ttl=64 time=0.070 ms

^C

--- 192.168.1.111 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2005ms

rtt min/avg/max/mdev = 0.027/0.055/0.070/0.019 ms

[root@localhost ~]# ping 192.168.1.112

PING 192.168.1.112 (192.168.1.112) 56(84) bytes of data.

64 bytes from 192.168.1.112: icmp_seq=1 ttl=64 time=0.477 ms

64 bytes from 192.168.1.112: icmp_seq=2 ttl=64 time=0.510 ms

64 bytes from 192.168.1.112: icmp_seq=3 ttl=64 time=0.529 ms

^C

--- 192.168.1.112 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2004ms

rtt min/avg/max/mdev = 0.477/0.505/0.529/0.028 ms

# 192.168.1.12

[root@localhost ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:93:75:6a brd ff:ff:ff:ff:ff:ff

inet 192.168.1.12/24 brd 192.168.1.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.1.112/24 scope global secondary ens33:2

valid_lft forever preferred_lft forever

inet6 fe80::3353:a636:630b:4a4f/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@localhost ~]# ping 192.168.1.111

PING 192.168.1.111 (192.168.1.111) 56(84) bytes of data.

64 bytes from 192.168.1.111: icmp_seq=1 ttl=64 time=0.766 ms

64 bytes from 192.168.1.111: icmp_seq=2 ttl=64 time=0.857 ms

64 bytes from 192.168.1.111: icmp_seq=3 ttl=64 time=0.554 ms

^C

--- 192.168.1.111 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2012ms

rtt min/avg/max/mdev = 0.554/0.725/0.857/0.130 ms

[root@localhost ~]# ping 192.168.1.112

PING 192.168.1.112 (192.168.1.112) 56(84) bytes of data.

64 bytes from 192.168.1.112: icmp_seq=1 ttl=64 time=0.050 ms

64 bytes from 192.168.1.112: icmp_seq=2 ttl=64 time=0.072 ms

64 bytes from 192.168.1.112: icmp_seq=3 ttl=64 time=0.071 ms

^C

--- 192.168.1.112 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2006ms

rtt min/avg/max/mdev = 0.050/0.064/0.072/0.012 ms

关闭一台keepalived

[root@localhost ~]# systemctl stop keepalived

[root@localhost ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: inactive (dead)

Apr 06 08:51:30 localhost Keepalived_healthcheckers[9477]: Timeout reading data to remote SMTP server [192.168.200.1]:25.

Apr 06 08:51:30 localhost Keepalived_healthcheckers[9477]: Timeout reading data to remote SMTP server [192.168.200.1]:25.

Apr 06 09:22:20 localhost Keepalived[9476]: Stopping

Apr 06 09:22:20 localhost systemd[1]: Stopping LVS and VRRP High Availability Monitor...

Apr 06 09:22:20 localhost Keepalived_vrrp[9478]: VRRP_Instance(VI_1) sent 0 priority

Apr 06 09:22:20 localhost Keepalived_vrrp[9478]: VRRP_Instance(VI_1) removing protocol VIPs.

Apr 06 09:22:20 localhost Keepalived_healthcheckers[9477]: Stopped

Apr 06 09:22:21 localhost Keepalived_vrrp[9478]: Stopped

Apr 06 09:22:21 localhost Keepalived[9476]: Stopped Keepalived v1.3.5 (03/19,2017), git commit v1.3.5-6-g6fa32f2

Apr 06 09:22:21 localhost systemd[1]: Stopped LVS and VRRP High Availability Monitor.

Nginx(四):Keepalived+Nginx 高可用集群的更多相关文章

- 集群相关、用keepalived配置高可用集群

1.集群相关 2.keepalived相关 3.用keepalived配置高可用集群 安装:yum install keepalived -y 高可用,主要是针对于服务器硬件或服务器上的应用服务而 ...

- Linux centosVMware 集群介绍、keepalived介绍、用keepalived配置高可用集群

一.集群介绍 根据功能划分为两大类:高可用和负载均衡 高可用集群通常为两台服务器,一台工作,另外一台作为冗余,当提供服务的机器宕机,冗余将接替继续提供服务 实现高可用的开源软件有:heartbeat. ...

- LVS+Keepalived实现高可用集群

LVS+Keepalived实现高可用集群来源: ChinaUnix博客 日期: 2009.07.21 14:49 (共有条评论) 我要评论 操作系统平台:CentOS5.2软件:LVS+keepal ...

- 实战| Nginx+keepalived 实现高可用集群

一个执着于技术的公众号 前言 今天通过两个实战案例,带大家理解Nginx+keepalived 如何实现高可用集群,在学习新知识之前您可以选择性复习之前的知识点: 给小白的 Nginx 10分钟入门指 ...

- 集群介绍 keepalived介绍 用keepalived配置高可用集群

集群介绍 • 根据功能划分为两大类:高可用和负载均衡 • 高可用集群通常为两台服务器,一台工作,另外一台作为冗余,当提供服务的机器宕机,冗余将接替继续提供服务 • 实现高可用的开源软件有:heartb ...

- rabbitmq+ keepalived+haproxy高可用集群详细命令

公司要用rabbitmq研究了两周,特把 rabbitmq 高可用的研究成果备下 后续会更新封装的类库 安装erlang wget http://www.gelou.me/yum/erlang-18. ...

- rabbitmq+haproxy+keepalived实现高可用集群搭建

项目需要搭建rabbitmq的高可用集群,最近在学习搭建过程,在这里记录下可以跟大家一起互相交流(这里只是记录了学习之后自己的搭建过程,许多原理的东西没有细说). 搭建环境 CentOS7 64位 R ...

- keepalived+MySQL高可用集群

基于keepalived搭建MySQL的高可用集群 MySQL的高可用方案一般有如下几种: keepalived+双主,MHA,MMM,Heartbeat+DRBD,PXC,Galera Clus ...

- CentOS7 haproxy+keepalived实现高可用集群搭建

一.搭建环境 CentOS7 64位 Keepalived 1.3.5 Haproxy 1.5.18 后端负载主机:192.168.166.21 192.168.166.22 两台节点上安装rabbi ...

- Keepalived 配置高可用集群

一.Keepalived 简介 (1) Keepalived 能实现高可用也能实现负载均衡,Keepalived 是通过 VRRP 协议 ( Virtual Router Redundancy Pro ...

随机推荐

- javascript实现文件上传之前的预览功能

1.首先要给上传文件表单控件和图片控件设置name属性 <div class="form-group"> <label fo ...

- 深度分析:Java多线程,线程安全,并发包

1:synchronized(保证原子性和可见性) 1.同步锁.多线程同时访问时,同一时刻只能有一个线程能够访问使synchronized修饰的代码块或方法.它修饰的对象有以下几种: 修饰一个代码块, ...

- jQuery 第五章 实例方法 详解内置队列queue() dequeue() 方法

.queue() .dequeue() .clearQueue() ------------------------------------------------------------------ ...

- 【电子取证:抓包篇】Fiddler 抓包配置与数据分析(简)

Fiddler 抓包配置与分析(简) 简单介绍了Fiddler抓包常用到的基础知识,看完可以大概明白怎么分析抓包数据 ---[suy999] Fiddler 抓包工具,可以将网络传输发送与接受的数 ...

- [Android systrace系列] systrace入门第一式

转载请注明出处:https://www.cnblogs.com/zzcperf/p/13978915.html Android systrace是分析性能问题最称手的工具之一,可以提供丰富的手机运行信 ...

- jvm参数与生产配置

堆内存分配:JVM初始分配的内存由-Xms指定,默认是物理内存的1/64JVM最大分配的内存由-Xmx指定,默认是物理内存的1/4默认空余堆内存小于40%时,JVM就会增大堆直到-Xmx的最大限制:空 ...

- [题解] 洛谷 P3393 逃离僵尸岛

题目TP门 很明显是一个最短路,但是如何建图才是关键. 对于每一个不可遍历到的点,可以向外扩散,找到危险城市. 若是对于每一个这样的城市进行搜索,时间复杂度就为\(O(n^2)\),显然过不了.不妨把 ...

- Beta冲刺随笔——Day_Eight

这个作业属于哪个课程 软件工程 (福州大学至诚学院 - 计算机工程系) 这个作业要求在哪里 Beta 冲刺 这个作业的目标 团队进行Beta冲刺 作业正文 正文 其他参考文献 无 今日事今日毕 林涛: ...

- spring boot 访问外部http请求

以前 访问外部请求都要经过 要用 httpClient 需要专门写一个方法 来发送http请求 这个这里就不说了 网上一搜全都是现成的方法 springboot 实现外部http请求 是通过F ...

- 第10.4节 Python模块的弱封装机制

一. 引言 Python模块可以为调用者提供模块内成员的访问和调用,但某些情况下, 因为某些成员可能有特殊访问规则等原因,并不适合将模块内所有成员都提供给调用者访问,此时模块可以类似类的封装机制类似的 ...