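Deep learning (1): BP neural network principles and practice

For the underlying theory, see the following lecture notes:

1. Neural Networks

2. Backpropagation Algorithm

After reading materials 1 and 2 you should have a clear picture of how a BP (backpropagation) neural network works. Below, we practice the algorithm with a program written in C++ (in a largely C style).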
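For reference, these are the quantities that `forward_pass()` and `backward_pass()` in the program below compute for one pattern. The symbols are introduced here only as shorthand: $x_i$ = `input[pattern][i]`, $h_j$ = `hidden[j]`, $o_k$ = `output[pattern][k]`, $t_k$ = `target[pattern][k]`, $w_{ij}$ = `weight_i_h[i][j]`, $v_{jk}$ = `weight_h_o[j][k]`, $b_j$ = `bias[j]`, $c_k$ = `bias[hidden_array_size + k]` and $\eta$ = `learning_rate`.

$$
\begin{aligned}
\sigma(z) &= \frac{1}{1+e^{-z}} \\
h_j &= \sigma\Big(\sum_i x_i\,w_{ij} + b_j\Big), \qquad
o_k = \sigma\Big(\sum_j h_j\,v_{jk} + c_k\Big) \\
\delta_k &= t_k - o_k, \qquad
\delta_j = h_j\,(1 - h_j)\sum_k \delta_k\,v_{jk} \\
L_h &= \max\Big(\sum_j h_j^2,\; 0.1\Big), \qquad
L_x = \max\Big(\sum_i x_i^2,\; 0.1\Big) \\
v_{jk} &\leftarrow v_{jk} + \eta\,\delta_k\,h_j / L_h, \qquad
c_k \leftarrow c_k + \eta\,\delta_k / L_h \\
w_{ij} &\leftarrow w_{ij} + \eta\,\delta_j\,x_i / L_x, \qquad
b_j \leftarrow b_j + \eta\,\delta_j / L_x
\end{aligned}
$$

Two details differ from a squared-error derivation. First, the output error signal $\delta_k$ has no $\sigma'(z)$ factor, because $t_k - o_k$ is the negative gradient of the cross-entropy loss with respect to the output unit's net input. Second, every step is divided by $L_h$ or $L_x$, the squared length of that layer's input floored at 0.1. $\delta_j$ is computed with the weights $v_{jk}$ from before the update.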

```cpp
// Backpropagation, 25x25x8 units, binary sigmoid function network
// Written by Thomas Riga, University of Genoa, Italy
// thomas@magister.magi.unige.it
//
// Windows console program: needs <conio.h> (_getch, _kbhit) and
// _control87 from <float.h>, as provided by MSVC and MinGW.

#include <iostream>
#include <fstream>
#include <iomanip>   // setw: bounds the char-array reads from cin
#include <new>       // bad_alloc
#include <vector>
#include <conio.h>
#include <stdlib.h>
#include <math.h>
#include <ctype.h>
#include <stdio.h>
#include <float.h>

using namespace std;

double **input,              // input patterns         [pattern][input unit]
       *hidden,              // hidden activations of the current pattern
       **output,             // network outputs        [pattern][output unit]
       **target,             // target outputs         [pattern][output unit]
       *bias,                // hidden-unit biases first, then output-unit biases
       **weight_i_h,         // input -> hidden weights  [input][hidden]
       **weight_h_o,         // hidden -> output weights [hidden][output]
       *errorsignal_hidden,
       *errorsignal_output;

int input_array_size,
    hidden_array_size,
    output_array_size,
    max_patterns,
    bias_array_size,
    number_of_input_patterns,
    pattern,
    file_loaded = 0,
    ytemp = 0,               // position of the last output that missed its target,
    ztemp = 0;               // checked first by compare_output_to_target()

// Seed for bedlam(); a negative value (re)initialises the generator.
// Its address is passed to bedlam(), so it must be a long (value assumed).
long gaset = -2500;

double learning_rate,
       max_error_tollerance = 0.1;   // largest allowed |target - output| per unit

char filename[128];                  // buffer size assumed

#define IA 16807
#define IM 2147483647
#define AM (1.0 / IM)
#define IQ 127773
#define IR 2836
#define NTAB 32
#define NDIV (1+(IM-1) / NTAB)
#define EPS 1.2e-7
#define RNMX (1.0 - EPS)

int compare_output_to_target();
void load_data(const char *arg);
void save_data(const char *argres);
void forward_pass(int pattern);
void backward_pass(int pattern);
void custom();
void compute_output_pattern();
void get_file_name();
float bedlam(long *idum);
void learn();
void make();
void test();
void print_data();
void print_data_to_screen();
void print_data_to_file();
void output_to_screen();
int getnumber();
void change_learning_rate();
void initialize_net();
void clear_memory();

int main()
{
    cout << "backpropagation network by Thomas Riga, University of Genoa, Italy" << endl;
    for (;;) {
        char choice;
        cout << endl << "1. load data" << endl;
        cout << "2. learn from data" << endl;
        cout << "3. compute output pattern" << endl;
        cout << "4. make new data file" << endl;
        cout << "5. save data" << endl;
        cout << "6. print data" << endl;
        cout << "7. change learning rate" << endl;
        cout << "8. exit" << endl << endl;
        cout << "Enter your choice (1-8)";
        do { choice = _getch(); } while (choice < '1' || choice > '8');
        switch (choice) {
        case '1':
            if (file_loaded == 1) clear_memory();
            get_file_name();
            file_loaded = 1;
            load_data(filename);      // resets file_loaded to 0 on failure
            break;
        case '2': learn(); break;
        case '3': compute_output_pattern(); break;
        case '4': make(); break;
        case '5':
            if (file_loaded == 0) {
                cout << endl << "there is no data loaded into memory" << endl;
                break;
            }
            cout << endl << "enter a filename to save data to: ";
            cin >> setw(sizeof filename) >> filename;
            save_data(filename);
            break;
        case '6': print_data(); break;
        case '7': change_learning_rate(); break;
        case '8': return 0;
        }
    }
}

// Allocates a rows x cols matrix. Plain `new` throws bad_alloc instead of
// returning NULL, so allocation failures are caught in initialize_net().
double **new_matrix(int rows, int cols)
{
    double **m = new double *[rows];
    for (int r = 0; r < rows; r++) m[r] = new double[cols];
    return m;
}

void delete_matrix(double **m, int rows)
{
    for (int r = 0; r < rows; r++) delete[] m[r];
    delete[] m;
}

void initialize_net()
{
    try {
        input      = new_matrix(number_of_input_patterns, input_array_size);
        hidden     = new double[hidden_array_size];
        output     = new_matrix(number_of_input_patterns, output_array_size);
        target     = new_matrix(number_of_input_patterns, output_array_size);
        bias       = new double[bias_array_size];
        weight_i_h = new_matrix(input_array_size, hidden_array_size);
        weight_h_o = new_matrix(hidden_array_size, output_array_size);
        errorsignal_hidden = new double[hidden_array_size];
        errorsignal_output = new double[output_array_size];
    } catch (const bad_alloc &) {
        cout << endl << "memory problem!";
        exit(1);
    }
}

void learn()
{
    if (file_loaded == 0) {
        cout << endl << "there is no data loaded into memory" << endl;
        return;
    }
    cout << endl << "learning..." << endl << "press a key to return to menu" << endl;
    // One epoch = one forward and one backward pass per pattern (online learning).
    while (!_kbhit()) {
        for (int y = 0; y < number_of_input_patterns; y++) {
            forward_pass(y);
            backward_pass(y);
        }
        if (compare_output_to_target()) {
            cout << endl << "learning successful" << endl;
            return;
        }
    }
    _getch();   // consume the key that stopped learning so the menu does not see it
    cout << endl << "learning not successful yet" << endl;
}

void load_data(const char *arg)
{
    ifstream in(arg);
    if (!in) { cout << endl << "failed to load data file" << endl; file_loaded = 0; return; }
    in >> input_array_size >> hidden_array_size >> output_array_size;
    in >> learning_rate >> number_of_input_patterns;
    bias_array_size = hidden_array_size + output_array_size;
    initialize_net();
    ytemp = ztemp = 0;   // old indices may be out of range for the new file
    for (int x = 0; x < bias_array_size; x++) in >> bias[x];
    for (int x = 0; x < input_array_size; x++)
        for (int y = 0; y < hidden_array_size; y++) in >> weight_i_h[x][y];
    for (int x = 0; x < hidden_array_size; x++)
        for (int y = 0; y < output_array_size; y++) in >> weight_h_o[x][y];
    for (int x = 0; x < number_of_input_patterns; x++)
        for (int y = 0; y < input_array_size; y++) in >> input[x][y];
    for (int x = 0; x < number_of_input_patterns; x++)
        for (int y = 0; y < output_array_size; y++) in >> target[x][y];
    in.close();
    cout << endl << "data loaded" << endl;
}

void forward_pass(int pattern)
{
    _control87(MCW_EM, MCW_EM);   // mask floating-point exceptions (e.g. exp overflow)
    double temp = 0.0;
    // INPUT -> HIDDEN
    for (int y = 0; y < hidden_array_size; y++) {
        for (int x = 0; x < input_array_size; x++)
            temp += input[pattern][x] * weight_i_h[x][y];
        hidden[y] = 1.0 / (1.0 + exp(-(temp + bias[y])));   // logistic sigmoid
        temp = 0.0;
    }
    // HIDDEN -> OUTPUT
    for (int y = 0; y < output_array_size; y++) {
        for (int x = 0; x < hidden_array_size; x++)
            temp += hidden[x] * weight_h_o[x][y];
        output[pattern][y] = 1.0 / (1.0 + exp(-(temp + bias[y + hidden_array_size])));
        temp = 0.0;
    }
}

void backward_pass(int pattern)
{
    double temp = 0.0;
    // COMPUTE ERRORSIGNAL FOR OUTPUT UNITS: target - output (no sigmoid
    // derivative, i.e. the delta of a sigmoid output under cross-entropy loss)
    for (int x = 0; x < output_array_size; x++)
        errorsignal_output[x] = target[pattern][x] - output[pattern][x];
    // COMPUTE ERRORSIGNAL FOR HIDDEN UNITS: h * (1 - h) * sum_k delta_k * w_hk
    for (int x = 0; x < hidden_array_size; x++) {
        for (int y = 0; y < output_array_size; y++)
            temp += errorsignal_output[y] * weight_h_o[x][y];
        errorsignal_hidden[x] = hidden[x] * (1.0 - hidden[x]) * temp;
        temp = 0.0;
    }
    // ADJUST WEIGHTS OF CONNECTIONS FROM HIDDEN TO OUTPUT UNITS
    // Steps are divided by the squared length of the layer input (floored
    // at 0.1), a normalised-LMS style step size.
    double length = 0.0;
    for (int x = 0; x < hidden_array_size; x++) length += hidden[x] * hidden[x];
    if (length <= 0.1) length = 0.1;
    for (int x = 0; x < hidden_array_size; x++)
        for (int y = 0; y < output_array_size; y++)
            weight_h_o[x][y] += learning_rate * errorsignal_output[y] * hidden[x] / length;
    // ADJUST BIASES OF OUTPUT UNITS (stored after the hidden biases)
    for (int x = hidden_array_size; x < bias_array_size; x++)
        bias[x] += learning_rate * errorsignal_output[x - hidden_array_size] / length;
    // ADJUST WEIGHTS OF CONNECTIONS FROM INPUT TO HIDDEN UNITS
    length = 0.0;
    for (int x = 0; x < input_array_size; x++) length += input[pattern][x] * input[pattern][x];
    if (length <= 0.1) length = 0.1;
    for (int x = 0; x < input_array_size; x++)
        for (int y = 0; y < hidden_array_size; y++)
            weight_i_h[x][y] += learning_rate * errorsignal_hidden[y] * input[pattern][x] / length;
    // ADJUST BIASES OF HIDDEN UNITS
    for (int x = 0; x < hidden_array_size; x++)
        bias[x] += learning_rate * errorsignal_hidden[x] / length;
}

// Returns 1 if every output of every pattern is within max_error_tollerance
// of its target, otherwise 0. The last failing position (ytemp, ztemp) is
// checked first because it is the most likely to fail again.
int compare_output_to_target()
{
    if (fabs(target[ytemp][ztemp] - output[ytemp][ztemp]) > max_error_tollerance) return 0;
    for (int y = 0; y < number_of_input_patterns; y++) {
        for (int z = 0; z < output_array_size; z++) {
            if (fabs(target[y][z] - output[y][z]) > max_error_tollerance) {
                ytemp = y;
                ztemp = z;
                return 0;
            }
        }
    }
    return 1;
}

void save_data(const char *argres)
{
    ofstream out(argres);
    if (!out) { cout << endl << "failed to save file" << endl; return; }
    out << input_array_size << endl;
    out << hidden_array_size << endl;
    out << output_array_size << endl;
    out << learning_rate << endl;
    out << number_of_input_patterns << endl << endl;
    for (int x = 0; x < bias_array_size; x++) out << bias[x] << ' ';
    out << endl << endl;
    for (int x = 0; x < input_array_size; x++)
        for (int y = 0; y < hidden_array_size; y++) out << weight_i_h[x][y] << ' ';
    out << endl << endl;
    for (int x = 0; x < hidden_array_size; x++)
        for (int y = 0; y < output_array_size; y++) out << weight_h_o[x][y] << ' ';
    out << endl << endl;
    for (int x = 0; x < number_of_input_patterns; x++) {
        for (int y = 0; y < input_array_size; y++) out << input[x][y] << ' ';
        out << endl;
    }
    out << endl;
    for (int x = 0; x < number_of_input_patterns; x++) {
        for (int y = 0; y < output_array_size; y++) out << target[x][y] << ' ';
        out << endl;
    }
    out.close();
    cout << endl << "data saved" << endl;
}

void make()
{
    int x, y, z;
    int n_input, n_hidden, n_output, n_bias;   // sizes of the new file only
    double inpx;
    char makefilename[128];
    cout << endl << "enter name of new data file: ";
    cin >> setw(sizeof makefilename) >> makefilename;
    ofstream out(makefilename);
    if (!out) { cout << endl << "failed to open file" << endl; return; }
    cout << "how many input units? ";
    cin >> n_input;
    out << n_input << endl;
    cout << "how many hidden units? ";
    cin >> n_hidden;
    out << n_hidden << endl;
    cout << "how many output units? ";
    cin >> n_output;
    out << n_output << endl;
    n_bias = n_hidden + n_output;
    cout << endl << "Learning rate: ";
    cin >> inpx;
    out << inpx << endl;
    cout << endl << "Number of input patterns: ";
    cin >> z;
    out << z << endl << endl;
    // Random initial biases and weights: 1 - 2u with u uniform in (0, 1).
    for (x = 0; x < n_bias; x++) out << (1.0 - 2.0 * bedlam(&gaset)) << ' ';
    out << endl << endl;
    for (x = 0; x < n_input; x++)
        for (y = 0; y < n_hidden; y++) out << (1.0 - 2.0 * bedlam(&gaset)) << ' ';
    out << endl << endl;
    for (x = 0; x < n_hidden; x++)
        for (y = 0; y < n_output; y++) out << (1.0 - 2.0 * bedlam(&gaset)) << ' ';
    out << endl << endl;
    for (x = 0; x < z; x++) {
        cout << endl << "input pattern " << (x + 1) << endl;
        for (y = 0; y < n_input; y++) {
            cout << (y + 1) << ": ";
            cin >> inpx;
            out << inpx << ' ';
        }
        out << endl;
    }
    out << endl;
    for (x = 0; x < z; x++) {
        cout << endl << "target output pattern " << (x + 1) << endl;
        for (y = 0; y < n_output; y++) {
            cout << (y + 1) << ": ";
            cin >> inpx;
            out << inpx << ' ';
        }
        out << endl;
    }
    out.close();
    cout << endl << "data saved, to work with this new data file you first have to load it" << endl;
}

// Uniform deviate in (0, 1): "minimal standard" generator with a
// Bays-Durham shuffle (the ran1 routine of Numerical Recipes in C).
// Pass a negative *idum to (re)initialise.
float bedlam(long *idum)
{
    int xj;
    long xk;
    static long iy = 0;
    static long iv[NTAB];
    float temp;

    if (*idum <= 0 || !iy) {
        if (-(*idum) < 1) *idum = 1 + *idum;   // keep the seed non-zero
        else *idum = -(*idum);
        for (xj = NTAB + 7; xj >= 0; xj--) {   // 8 warm-ups, then fill the table
            xk = (*idum) / IQ;
            *idum = IA * (*idum - xk * IQ) - IR * xk;   // Schrage: IA*idum mod IM
            if (*idum < 0) *idum += IM;
            if (xj < NTAB) iv[xj] = *idum;
        }
        iy = iv[0];
    }
    xk = (*idum) / IQ;
    *idum = IA * (*idum - xk * IQ) - IR * xk;
    if (*idum < 0) *idum += IM;
    xj = iy / NDIV;            // pick a table slot from the previous output
    iy = iv[xj];
    iv[xj] = *idum;
    if ((temp = AM * iy) > RNMX) return RNMX;
    return temp;
}

void test()
{
    pattern = 0;
    while (pattern == 0) {
        cout << endl << endl << "There are " << number_of_input_patterns
             << " input patterns in the file," << endl << "enter a number within this range: ";
        pattern = getnumber();
    }
    pattern--;   // the menu counts from 1, the arrays from 0
    forward_pass(pattern);
    output_to_screen();
}

void output_to_screen()
{
    cout << endl << "Output pattern:" << endl;
    for (int x = 0; x < output_array_size; x++) {
        cout << endl << (x + 1) << ": " << output[pattern][x] << " binary: ";
        if (output[pattern][x] >= 0.9) cout << "1";
        else if (output[pattern][x] <= 0.1) cout << "0";
        else cout << "intermediate value";
    }
    cout << endl;
}

// Reads up to 4 digits from the console; Enter finishes, Esc returns 0.
// Returns 0 if the number is outside 1..number_of_input_patterns.
int getnumber()
{
    int a, b = 0;
    char c, d[5];
    while (b < 4) {
        do { c = _getch(); } while (!isdigit((unsigned char)c) && c != 13 && c != 27);
        if (c == 13) break;       // Enter
        if (c == 27) return 0;    // Esc
        d[b] = c;
        cout << c;
        b++;
    }
    d[b] = '\0';
    a = atoi(d);
    if (a < 1 || a > number_of_input_patterns) a = 0;
    return a;
}

void get_file_name()
{
    cout << endl << "enter name of file to load: ";
    cin >> setw(sizeof filename) >> filename;
}

void print_data()
{
    char choice;
    if (file_loaded == 0) {
        cout << endl << "there is no data loaded into memory" << endl;
        return;
    }
    cout << endl << "1. print data to screen" << endl;
    cout << "2. print data to file" << endl;
    cout << "3. return to main menu" << endl << endl;
    cout << "Enter your choice (1-3)" << endl;
    do { choice = _getch(); } while (choice < '1' || choice > '3');
    switch (choice) {
    case '1': print_data_to_screen(); break;
    case '2': print_data_to_file(); break;
    case '3': return;
    }
}

// Shared by print_data_to_screen() and print_data_to_file().
void print_data_to(ostream &os)
{
    os << endl << endl << "DATA FILE: " << filename << endl;
    os << "learning rate: " << learning_rate << endl;
    os << "input units: " << input_array_size << endl;
    os << "hidden units: " << hidden_array_size << endl;
    os << "output units: " << output_array_size << endl;
    os << "number of input and target output patterns: " << number_of_input_patterns << endl << endl;
    os << "INPUT AND TARGET OUTPUT PATTERNS:";
    for (int x = 0; x < number_of_input_patterns; x++) {
        os << endl << "input pattern: " << (x + 1) << endl;
        for (int y = 0; y < input_array_size; y++) os << input[x][y] << " ";
        os << endl << "target output pattern: " << (x + 1) << endl;
        for (int y = 0; y < output_array_size; y++) os << target[x][y] << " ";
    }
    os << endl << endl << "BIASES:" << endl;
    // Loop over the larger layer so that no bias is skipped when there are
    // more output units than hidden units.
    int rows = hidden_array_size > output_array_size ? hidden_array_size : output_array_size;
    for (int x = 0; x < rows; x++) {
        if (x < hidden_array_size) os << "bias of hidden unit " << (x + 1) << ": " << bias[x];
        if (x < output_array_size) os << " bias of output unit " << (x + 1) << ": " << bias[x + hidden_array_size];
        os << endl;
    }
    os << endl << "WEIGHTS:" << endl;
    for (int x = 0; x < input_array_size; x++)
        for (int y = 0; y < hidden_array_size; y++)
            os << "i_h[" << x << "][" << y << "]: " << weight_i_h[x][y] << endl;
    for (int x = 0; x < hidden_array_size; x++)
        for (int y = 0; y < output_array_size; y++)
            os << "h_o[" << x << "][" << y << "]: " << weight_h_o[x][y] << endl;
}

void print_data_to_screen()
{
    print_data_to(cout);
}

void print_data_to_file()
{
    char printfile[128];
    cout << endl << "enter name of file to print data to: ";
    cin >> setw(sizeof printfile) >> printfile;
    ofstream out(printfile);
    if (!out) { cout << endl << "failed to open file"; return; }
    print_data_to(out);
    out.close();
    cout << endl << "data has been printed to " << printfile << endl;
}

void change_learning_rate()
{
    if (file_loaded == 0) {
        cout << endl << "there is no data loaded into memory" << endl;
        return;
    }
    cout << endl << "actual learning rate: " << learning_rate << " new value: ";
    cin >> learning_rate;
}

void compute_output_pattern()
{
    if (file_loaded == 0) {
        cout << endl << "there is no data loaded into memory" << endl;
        return;
    }
    char choice;
    cout << endl << endl << "1. load trained input pattern into network" << endl;
    cout << "2. load custom input pattern into network" << endl;
    cout << "3. go back to main menu" << endl << endl;
    cout << "Enter your choice (1-3)" << endl;
    do { choice = _getch(); } while (choice < '1' || choice > '3');
    switch (choice) {
    case '1': test(); break;
    case '2': custom(); break;
    case '3': return;
    }
}

// Runs one forward pass on an input pattern read from a separate file
// (input_array_size whitespace-separated numbers).
void custom()
{
    _control87(MCW_EM, MCW_EM);
    char custom_file[128];
    vector<double> custom_input(input_array_size), custom_output(output_array_size);
    cout << endl << endl << "enter file that contains test input pattern: ";
    cin >> setw(sizeof custom_file) >> custom_file;
    ifstream in(custom_file);
    if (!in) { cout << endl << "failed to load data file" << endl; return; }
    for (int x = 0; x < input_array_size; x++) in >> custom_input[x];
    double temp = 0.0;
    for (int y = 0; y < hidden_array_size; y++) {
        for (int x = 0; x < input_array_size; x++) temp += custom_input[x] * weight_i_h[x][y];
        hidden[y] = 1.0 / (1.0 + exp(-(temp + bias[y])));
        temp = 0.0;
    }
    for (int y = 0; y < output_array_size; y++) {
        for (int x = 0; x < hidden_array_size; x++) temp += hidden[x] * weight_h_o[x][y];
        custom_output[y] = 1.0 / (1.0 + exp(-(temp + bias[y + hidden_array_size])));
        temp = 0.0;
    }
    cout << endl << "Input pattern:" << endl;
    for (int x = 0; x < input_array_size; x++)
        cout << "[" << (x + 1) << ": " << custom_input[x] << "] ";
    cout << endl << endl << "Output pattern:";
    for (int x = 0; x < output_array_size; x++) {
        cout << endl << (x + 1) << ": " << custom_output[x] << " binary: ";
        if (custom_output[x] >= 0.9) cout << "1";
        else if (custom_output[x] <= 0.1) cout << "0";
        else cout << "intermediate value";
    }
    cout << endl;
}

void clear_memory()
{
    delete_matrix(input, number_of_input_patterns);
    delete[] hidden;
    delete_matrix(output, number_of_input_patterns);
    delete_matrix(target, number_of_input_patterns);
    delete[] bias;
    delete_matrix(weight_i_h, input_array_size);
    delete_matrix(weight_h_o, hidden_array_size);
    delete[] errorsignal_hidden;
    delete[] errorsignal_output;
    file_loaded = 0;
}
```
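To see the core of the algorithm without the menu, console and file handling, here is a minimal sketch that restates `forward_pass()` and `backward_pass()` for a single pattern using `std::vector`. It is not part of the original program. `TinyNet`, `make_zero_net`, `forward` and `backward` are hypothetical names, and the weights are assumed to be stored row-major in flat vectors. The update rules are taken from the listing above: the error signal is t - o, and each step is divided by the squared length of the layer input, floored at 0.1.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical 1-hidden-layer sigmoid network; flat row-major weights:
// w_ih[i * n_hid + j] (input i -> hidden j), w_ho[j * n_out + k] (hidden j -> output k).
struct TinyNet {
    std::size_t n_in, n_hid, n_out;
    std::vector<double> w_ih, w_ho, b_hid, b_out;
    std::vector<double> hid, out;   // activations from the last forward()
};

static double sigmoid(double z) { return 1.0 / (1.0 + std::exp(-z)); }

TinyNet make_zero_net(std::size_t n_in, std::size_t n_hid, std::size_t n_out) {
    TinyNet net;
    net.n_in = n_in; net.n_hid = n_hid; net.n_out = n_out;
    net.w_ih.assign(n_in * n_hid, 0.0);
    net.w_ho.assign(n_hid * n_out, 0.0);
    net.b_hid.assign(n_hid, 0.0);
    net.b_out.assign(n_out, 0.0);
    net.hid.assign(n_hid, 0.0);
    net.out.assign(n_out, 0.0);
    return net;
}

void forward(TinyNet &net, const std::vector<double> &x) {
    for (std::size_t j = 0; j < net.n_hid; j++) {
        double z = net.b_hid[j];
        for (std::size_t i = 0; i < net.n_in; i++) z += x[i] * net.w_ih[i * net.n_hid + j];
        net.hid[j] = sigmoid(z);
    }
    for (std::size_t k = 0; k < net.n_out; k++) {
        double z = net.b_out[k];
        for (std::size_t j = 0; j < net.n_hid; j++) z += net.hid[j] * net.w_ho[j * net.n_out + k];
        net.out[k] = sigmoid(z);
    }
}

// One online update for the pattern last passed to forward().
void backward(TinyNet &net, const std::vector<double> &x, const std::vector<double> &t, double eta) {
    assert(x.size() == net.n_in && t.size() == net.n_out);
    std::vector<double> d_out(net.n_out), d_hid(net.n_hid);
    for (std::size_t k = 0; k < net.n_out; k++) d_out[k] = t[k] - net.out[k];
    for (std::size_t j = 0; j < net.n_hid; j++) {
        double s = 0.0;   // uses w_ho before it is updated
        for (std::size_t k = 0; k < net.n_out; k++) s += d_out[k] * net.w_ho[j * net.n_out + k];
        d_hid[j] = net.hid[j] * (1.0 - net.hid[j]) * s;
    }
    double len_h = 0.0, len_x = 0.0;
    for (double h : net.hid) len_h += h * h;
    for (double xi : x) len_x += xi * xi;
    len_h = std::fmax(len_h, 0.1);   // same floor as "if (length <= 0.1) length = 0.1"
    len_x = std::fmax(len_x, 0.1);
    for (std::size_t j = 0; j < net.n_hid; j++)
        for (std::size_t k = 0; k < net.n_out; k++)
            net.w_ho[j * net.n_out + k] += eta * d_out[k] * net.hid[j] / len_h;
    for (std::size_t k = 0; k < net.n_out; k++) net.b_out[k] += eta * d_out[k] / len_h;
    for (std::size_t i = 0; i < net.n_in; i++)
        for (std::size_t j = 0; j < net.n_hid; j++)
            net.w_ih[i * net.n_hid + j] += eta * d_hid[j] * x[i] / len_x;
    for (std::size_t j = 0; j < net.n_hid; j++) net.b_hid[j] += eta * d_hid[j] / len_x;
}
```

As a quick check, with all weights and biases at zero every output is exactly σ(0) = 0.5. One step with η = 0.5 on input [0, 1] and target 0 1 1 0 pushes outputs 2 and 3 above 0.5 and outputs 1 and 4 below it. All-zero weights are used here only because the result is easy to verify. Identical hidden units receive identical updates and never become different, which is why `make()` writes random initial values.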

Data file of the initialized network:

```
2
3
4
0.5
4

5.747781 -6.045236 1.206744 -41.245163 -0.249886 -0.35452 0.0718

-8.446443 9.25553 -6.50087 7.357942 7.777944 1.238442

15.957281 0.452741 -8.19198 9.140881 29.124746 9.806898 5.859479 -5.09182 -3.475694 -4.896269 6.320669 0.213897

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
!!!!explanation of datafile. Can be deleted. Not necessary for network to work!!!!
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
2 (number of input units)
3 (number of hidden units)
4 (number of output units)
0.5 (learning rate)
4 (number of input and target output patterns) (has to correspond to the amount of patterns at the end of the datafile)
5.747781 -6.045236 1.206744 -41.245163 -0.249886 -0.35452 0.0718 (biases of hidden and output units, first three are biases of the hidden units, last four are biases of the output units)
-8.446443 9.25553 -6.50087 7.357942 7.777944 1.238442 (values of weights from input to hidden units)
15.957281 0.452741 -8.19198 9.140881 29.124746 9.806898 5.859479 -5.09182 -3.475694 -4.896269 6.320669 0.213897 (values of weights from hidden to output units)
(input pattern #1)
(input pattern #2)
(input pattern #3)
(input pattern #4)
(target output pattern #1)
(target output pattern #2)
(target output pattern #3)
(target output pattern #4)
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
!!!! end of explanation of datafile. !!!!
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
```
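Because `load_data()` reads the file with `>>` in a fixed order and then closes it, the five header values alone determine how many numbers must follow. Anything after them, such as the explanation block, is never read. The hypothetical helper below is a sketch and not part of the program; it computes those counts. For the header 2 / 3 / 4 / 0.5 / 4, it gives 7 biases, 6 and 12 weights, 8 input values and 16 target values, which matches the file above.

```cpp
#include <cassert>
#include <istream>
#include <sstream>   // std::istringstream, handy for trying the helper on a string

// Hypothetical description of a data file in the format read by load_data().
struct DataLayout {
    int inputs = 0, hidden = 0, outputs = 0, patterns = 0;
    double learning_rate = 0.0;
    int biases() const { return hidden + outputs; }              // hidden first, then output
    int weights_in_hidden() const { return inputs * hidden; }
    int weights_hidden_out() const { return hidden * outputs; }
    int input_values() const { return patterns * inputs; }
    int target_values() const { return patterns * outputs; }
    int total_numbers() const {                                   // header (5) + all sections
        return 5 + biases() + weights_in_hidden() + weights_hidden_out()
                 + input_values() + target_values();
    }
};

// Reads the header in the same order as load_data(); false if it is malformed.
bool read_layout(std::istream &in, DataLayout &d) {
    if (!(in >> d.inputs >> d.hidden >> d.outputs >> d.learning_rate >> d.patterns))
        return false;
    return d.inputs > 0 && d.hidden > 0 && d.outputs > 0 && d.patterns > 0;
}
```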

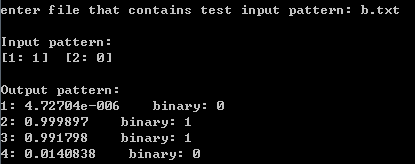

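Following the input-data format described above, you can save the input pattern [0, 1] in a file b.txt and load it through menu option 3 (compute output pattern) and then 2 (load custom input pattern). The corresponding output is as follows: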

As you can see, the input [0,1] produces 0110, which matches the result obtained during training.
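The 0110 comes from the "binary:" column that `output_to_screen()` and `custom()` print next to each output. A value of at least 0.9 counts as 1, a value of at most 0.1 counts as 0, and anything in between is reported as an intermediate value. Here is a small sketch of that rule. `to_binary` is a hypothetical name, and the `'?'` character is an assumption standing in for "intermediate value".

```cpp
#include <cassert>
#include <string>
#include <vector>

// Thresholds as in output_to_screen(): >= 0.9 -> '1', <= 0.1 -> '0',
// anything in between -> '?' (the program prints "intermediate value").
std::string to_binary(const std::vector<double> &outputs) {
    std::string bits;
    for (double o : outputs) {
        if (o >= 0.9) bits += '1';
        else if (o <= 0.1) bits += '0';
        else bits += '?';
    }
    return bits;
}
```

For instance, made-up outputs {0.02, 0.97, 0.95, 0.05} map to "0110".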

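Finally, this code has not been tested thoroughly and has only one hidden layer, so I recommend using it only as an aid for working through how the algorithm works.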
