Multivariate Linear Regression

Multiple Features

Linear regression with multiple variables is also known as "multivariate linear regression".

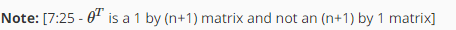

We now introduce notation for equations where we can have any number of input variables.

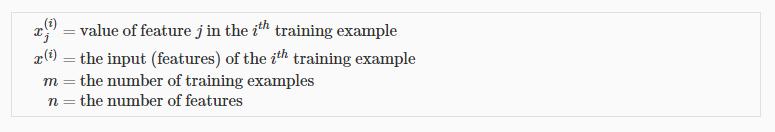

The multivariable form of the hypothesis function accommodating these multiple features is as follows:

In order to develop intuition about this function, we can think about θ0 as the basic price of a house, θ1 as the price per square meter, θ2 as the price per floor, etc. x1 will be the number of square meters in the house, x2 the number of floors, etc.

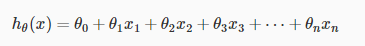

Using the definition of matrix multiplication, our multivariable hypothesis function can be concisely represented as:

This is a vectorization of our hypothesis function for one training example; see the lessons on vectorization to learn more.

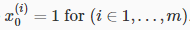

Remark: Note that for convenience reasons in this course we assume  .This allows us to do matrix operations with theta and x. Hence making the two vectors 'θ' and

.This allows us to do matrix operations with theta and x. Hence making the two vectors 'θ' and  match each other element-wise (that is, have the same number of elements: n+1).]

match each other element-wise (that is, have the same number of elements: n+1).]

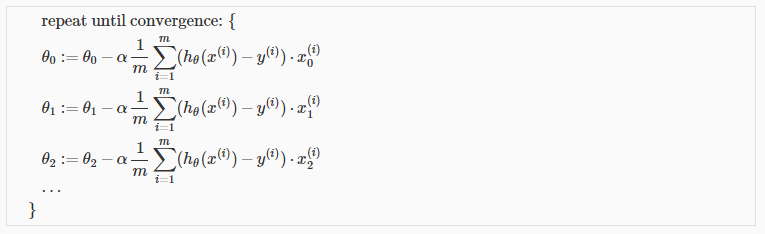

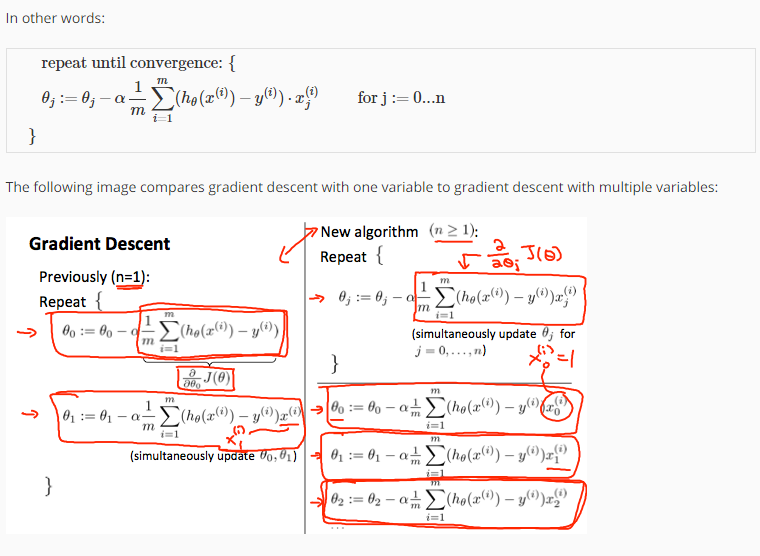

Gradient Descent For Multiple Variables

The gradient descent equation itself is generally the same form; we just have to repeat it for our 'n' features:

Gradient Descent in Practice I - Feature Scaling

Note: [6:20 - The average size of a house is 1000 but 100 is accidentally written instead]

We can speed up gradient descent by having each of our input values in roughly the same range. This is because θ will descend quickly on small ranges and slowly on large ranges, and so will oscillate inefficiently down to the optimum when the variables are very uneven.

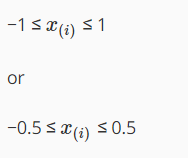

The way to prevent this is to modify the ranges of our input variables so that they are all roughly the same. Ideally:

These aren't exact requirements; we are only trying to speed things up. The goal is to get all input variables into roughly one of these ranges, give or take a few.

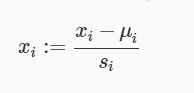

Two techniques to help with this are feature scaling and mean normalization. Feature scaling involves dividing the input values by the range (i.e. the maximum value minus the minimum value) of the input variable, resulting in a new range of just 1. Mean normalization involves subtracting the average value for an input variable from the values for that input variable resulting in a new average value for the input variable of just zero. To implement both of these techniques, adjust your input values as shown in this formula:

Where  is the average of all the values for feature (i) and s_i is the range of values (max - min), or s_i is the standard deviation.

is the average of all the values for feature (i) and s_i is the range of values (max - min), or s_i is the standard deviation.

Note that dividing by the range, or dividing by the standard deviation, give different results. The quizzes in this course use range - the programming exercises use standard deviation.

For example, if x_i represents housing prices with a range of 100 to 2000 and a mean value of 1000, then, x_i := \dfrac{price-1000}{1900}.

Gradient Descent in Practice II - Learning Rate

Note: [5:20 - the x -axis label in the right graph should be θ rather than No. of iterations ]

Debugging gradient descent. Make a plot with number of iterations on the x-axis. Now plot the cost function, J(θ) over the number of iterations of gradient descent. If J(θ) ever increases, then you probably need to decrease α.

Automatic convergence test. Declare convergence if J(θ) decreases by less than E in one iteration, where E is some small value such as 10−3. However in practice it's difficult to choose this threshold value.

It has been proven that if learning rate α is sufficiently small, then J(θ) will decrease on every iteration.

To summarize:

If α is too small: slow convergence.

If α is too large: may not decrease on every iteration and thus may not converge.

Features and Polynomial Regression

We can improve our features and the form of our hypothesis function in a couple different ways.

We can combine multiple features into one. For example, we can combine x1 and x2 into a new feature x3 by taking x1⋅x2.

Polynomial Regression

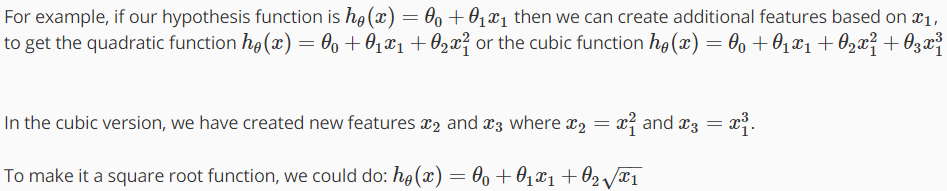

Our hypothesis function need not be linear (a straight line) if that does not fit the data well.

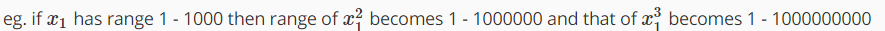

We can change the behavior or curve of our hypothesis function by making it a quadratic, cubic or square root function (or any other form).

One important thing to keep in mind is, if you choose your features this way then feature scaling becomes very important.

Multivariate Linear Regression的更多相关文章

- Machine Learning - week 2 - Multivariate Linear Regression

Multiple Features 上一章中,hθ(x) = θ0 + θ1x,表示只有一个 feature.现在,有多个 features,所以 hθ(x) = θ0 + θ1x1 + θ2x2 + ...

- 多元线性回归(Multivariate Linear Regression)简单应用

警告:本文为小白入门学习笔记 数据集: http://openclassroom.stanford.edu/MainFolder/DocumentPage.php?course=DeepLearnin ...

- Machine Learning – 第2周(Linear Regression with Multiple Variables、Octave/Matlab Tutorial)

Machine Learning – Coursera Octave for Microsoft Windows GNU Octave官网 GNU Octave帮助文档 (有900页的pdf版本) O ...

- 【转】Derivation of the Normal Equation for linear regression

I was going through the Coursera "Machine Learning" course, and in the section on multivar ...

- 机器学习---线性回归(Machine Learning Linear Regression)

线性回归是机器学习中最基础的模型,掌握了线性回归模型,有利于以后更容易地理解其它复杂的模型. 线性回归看似简单,但是其中包含了线性代数,微积分,概率等诸多方面的知识.让我们先从最简单的形式开始. 一元 ...

- Andrew Ng Machine Learning 专题【Linear Regression】

此文是斯坦福大学,机器学习界 superstar - Andrew Ng 所开设的 Coursera 课程:Machine Learning 的课程笔记. 力求简洁,仅代表本人观点,不足之处希望大家探 ...

- CheeseZH: Stanford University: Machine Learning Ex1:Linear Regression

(1) How to comput the Cost function in Univirate/Multivariate Linear Regression; (2) How to comput t ...

- Coursera machine learning 第二周 quiz 答案 Linear Regression with Multiple Variables

https://www.coursera.org/learn/machine-learning/exam/7pytE/linear-regression-with-multiple-variables ...

- Multivariate Adaptive Regression Splines (MARSplines)

Introductory Overview Regression Problems Multivariate Adaptive Regression Splines Model Selection a ...

随机推荐

- js22--链式调用

<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01//EN" "http://www.w3.org/TR/html4/stri ...

- android图像处理(3)底片效果

这篇将讲到图片特效处理的底片效果.跟前面一样是对像素点进行处理,算法是通用的. 算法原理:将当前像素点的RGB值分别与255之差后的值作为当前点的RGB值. 例: ABC 求B点的底片效果: B.r ...

- IK分词器插件elasticsearch-analysis-ik 6.1.1

http://88250.b3log.org/full-text-search-elasticsearch#b3_solo_h3_0 IK分词器插件 (1)源码 https://github.com/ ...

- ReactJs 入门DEMO(转自别人)

附件是分享的一些他人的ReactJs入门DEMO,以前版本使用的是JSXTransformer.js,新版的用browser.min.js替代了. DEMO 下载地址:http://files.cnb ...

- HDU4630-No Pain No Game(离线,线段树)

Problem Description Life is a game,and you lose it,so you suicide. But you can not kill yourself bef ...

- MariaDB 安装 (YUM)

在CentOS 7.0安装MariaDB的数据库,在这里记录下安装过程,以便以后查看. 1. 安装MariaDB 安装命令 yum -y install mariadb mariadb-server ...

- Python操作MySQL数据库完成简易的增删改查功能

说明:该篇博客是博主一字一码编写的,实属不易,请尊重原创,谢谢大家! 目录 一丶项目介绍 二丶效果展示 三丶数据准备 四丶代码实现 五丶完整代码 一丶项目介绍 1.叙述 博主闲暇之余花了10个小时写的 ...

- Direct2D开发:MFC下从资源文件中加载位图

转载请注明出处:http://www.cnblogs.com/ye-ming 0X01 概述: 相对于GDI处理界面,Direct2D有得天独厚的优势,下图就是Direct2D与GDI的效果对比,wi ...

- Shiro学习总结(3)——Apache Shiro身份认证

身份验证,即在应用中谁能证明他就是他本人.一般提供如他们的身份ID一些标识信息来表明他就是他本人,如提供身份证,用户名/密码来证明. 在shiro中,用户需要提供principals (身份)和cre ...

- Shiro学习总结(10)——Spring集成Shiro

1.引入Shiro的Maven依赖 [html] view plain copy <!-- Spring 整合Shiro需要的依赖 --> <dependency> <g ...